Implemented Dataset Conversions

1. COCO

1.1 CoCo2VOC

from pycocotools.coco import COCO

import os

from lxml import etree, objectify

import shutil

from tqdm import tqdm

import sys

import argparse

# Build a lookup from category id to category name

def catid2name(coco):

classes = dict()

for cat in coco.dataset['categories']:

classes[cat['id']] = cat['name']

return classes

# Write the annotation info to an XML file

def save_anno_to_xml(filename, size, objs, save_path):

E = objectify.ElementMaker(annotate=False)

anno_tree = E.annotation(

E.folder("DATA"),

E.filename(filename),

E.source(

E.database("The VOC Database"),

E.annotation("PASCAL VOC"),

E.image("flickr")

),

E.size(

E.width(size['width']),

E.height(size['height']),

E.depth(size['depth'])

),

E.segmented(0)

)

for obj in objs:

E2 = objectify.ElementMaker(annotate=False)

anno_tree2 = E2.object(

E.name(obj[0]),

E.pose("Unspecified"),

E.truncated(0),

E.difficult(0),

E.bndbox(

E.xmin(obj[1]),

E.ymin(obj[2]),

E.xmax(obj[3]),

E.ymax(obj[4])

)

)

anno_tree.append(anno_tree2)

anno_path = os.path.join(save_path, os.path.splitext(filename)[0] + ".xml")

etree.ElementTree(anno_tree).write(anno_path, pretty_print=True)

# Load the annotations from the JSON file with the COCO API

def load_coco(anno_file, xml_save_path):

if os.path.exists(xml_save_path):

shutil.rmtree(xml_save_path)

os.makedirs(xml_save_path)

coco = COCO(anno_file)

classes = catid2name(coco)

imgIds = coco.getImgIds()

classesIds = coco.getCatIds()

for imgId in tqdm(imgIds):

size = {

}

img = coco.loadImgs(imgId)[0]

filename = img['file_name']

width = img['width']

height = img['height']

size['width'] = width

size['height'] = height

size['depth'] = 3

annIds = coco.getAnnIds(imgIds=img['id'], iscrowd=None)

anns = coco.loadAnns(annIds)

objs = []

for ann in anns:

object_name = classes[ann['category_id']]

# bbox:[x,y,w,h]

bbox = list(map(int, ann['bbox']))

xmin = bbox[0]

ymin = bbox[1]

xmax = bbox[0] + bbox[2]

ymax = bbox[1] + bbox[3]

obj = [object_name, xmin, ymin, xmax, ymax]

objs.append(obj)

save_anno_to_xml(filename, size, objs, xml_save_path)

def parseJsonFile(data_dir, xmls_save_path):
    assert os.path.exists(data_dir), "data dir: {} does not exist".format(data_dir)
    if os.path.isdir(data_dir):
        # Directory input: instances_train2017.json / instances_val2017.json
        # are assumed to sit directly inside data_dir
        data_types = ['train2017', 'val2017']
        for data_type in data_types:
            ann_file = os.path.join(data_dir, 'instances_{}.json'.format(data_type))
            # Separate variable, so the output path does not accumulate across iterations
            save_path = os.path.join(xmls_save_path, data_type)
            load_coco(ann_file, save_path)
    elif os.path.isfile(data_dir):
        anno_file = data_dir
        load_coco(anno_file, xmls_save_path)

if __name__ == '__main__':

"""

Script description:

Converts a COCO-format JSON annotation file into VOC-format XML files.

Arguments:

data_dir: path to the JSON file

xml_save_path: output directory for the XML files

"""

parser = argparse.ArgumentParser()

parser.add_argument('-d', '--data-dir', type=str, default='./data/labels/coco/train.json', help='json path')

parser.add_argument('-s', '--save-path', type=str, default='./data/convert/voc', help='xml save path')

opt = parser.parse_args()

print(opt)

if len(sys.argv) > 1:

parseJsonFile(opt.data_dir, opt.save_path)

else:

data_dir = './data/labels/coco/train.json'

xml_save_path = './data/convert/voc'

parseJsonFile(data_dir=data_dir, xmls_save_path=xml_save_path)
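The heart of the script above is the box conversion: COCO stores a box as `[x, y, w, h]` (top-left corner plus size), while VOC stores `[xmin, ymin, xmax, ymax]`. A minimal sketch of that step in isolation; the helper name `coco_bbox_to_voc` is hypothetical and is not part of the script:

```python
def coco_bbox_to_voc(bbox):
    """Convert a COCO [x, y, w, h] box to VOC [xmin, ymin, xmax, ymax].

    Values are truncated to int first, mirroring list(map(int, ann['bbox'])).
    """
    x, y, w, h = (int(v) for v in bbox)
    return [x, y, x + w, y + h]


if __name__ == "__main__":
    print(coco_bbox_to_voc([10.0, 20.0, 30.0, 40.0]))  # [10, 20, 40, 60]
```

Because the values are truncated before the addition, a box with fractional coordinates can shrink by up to one pixel per side.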

1.2 CoCo2YOLO

from pycocotools.coco import COCO

import os

import shutil

from tqdm import tqdm

import sys

import argparse

images_nums = 0

category_nums = 0

bbox_nums = 0

# Build a lookup from category id to category name

def catid2name(coco):

classes = dict()

for cat in coco.dataset['categories']:

classes[cat['id']] = cat['name']

return classes

# Normalize a pixel-space [cat_id, x_center, y_center, w, h] entry by the image size to get the YOLO format

def xyxy2xywhn(object, width, height):

cat_id = object[0]

xn = object[1] / width

yn = object[2] / height

wn = object[3] / width

hn = object[4] / height

out = "{} {:.5f} {:.5f} {:.5f} {:.5f}".format(cat_id, xn, yn, wn, hn)

return out

def save_anno_to_txt(images_info, save_path):

filename = images_info['filename']

txt_name = os.path.splitext(filename)[0] + ".txt"

with open(os.path.join(save_path, txt_name), "w") as f:

for obj in images_info['objects']:

line = xyxy2xywhn(obj, images_info['width'], images_info['height'])

f.write("{}\n".format(line))

# Load the annotations from the JSON file with the COCO API

def load_coco(anno_file, xml_save_path):

if os.path.exists(xml_save_path):

shutil.rmtree(xml_save_path)

os.makedirs(xml_save_path)

coco = COCO(anno_file)

classes = catid2name(coco)

imgIds = coco.getImgIds()

classesIds = coco.getCatIds()

with open(os.path.join(xml_save_path, "classes.txt"), 'w') as f:

for id in classesIds:

f.write("{}\n".format(classes[id]))

for imgId in tqdm(imgIds):

info = {

}

img = coco.loadImgs(imgId)[0]

filename = img['file_name']

width = img['width']

height = img['height']

info['filename'] = filename

info['width'] = width

info['height'] = height

annIds = coco.getAnnIds(imgIds=img['id'], iscrowd=None)

anns = coco.loadAnns(annIds)

objs = []

for ann in anns:

object_name = classes[ann['category_id']]

# bbox:[x,y,w,h]

bbox = list(map(float, ann['bbox']))

xc = bbox[0] + bbox[2] / 2.

yc = bbox[1] + bbox[3] / 2.

w = bbox[2]

h = bbox[3]

# YOLO class ids are 0-based indices into classes.txt, not raw COCO category ids

obj = [classesIds.index(ann['category_id']), xc, yc, w, h]

objs.append(obj)

info['objects'] = objs

save_anno_to_txt(info, xml_save_path)

def parseJsonFile(json_path, txt_save_path):

assert os.path.exists(json_path), "json path:{} does not exist".format(json_path)

if os.path.exists(txt_save_path):

shutil.rmtree(txt_save_path)

os.makedirs(txt_save_path)

assert json_path.endswith('json'), "{} is not a json file!".format(json_path)

load_coco(json_path, txt_save_path)

if __name__ == '__main__':

"""

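Script description:

Converts a COCO-format JSON annotation file into YOLO-format TXT files.

Arguments:

json_path: path to the JSON file

txt_save_path: directory where the TXT files are saved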

"""

parser = argparse.ArgumentParser()

parser.add_argument('-jp', '--json-path', type=str, default='./data/labels/coco/train.json', help='json path')

parser.add_argument('-s', '--save-path', type=str, default='./data/convert/yolo', help='txt save path')

opt = parser.parse_args()

if len(sys.argv) > 1:

print(opt)

parseJsonFile(opt.json_path, opt.save_path)

# print("image nums: {}".format(images_nums))

# print("category nums: {}".format(category_nums))

# print("bbox nums: {}".format(bbox_nums))

else:

json_path = './data/labels/coco/train.json' # r'D:\practice\compete\goodsDec\data\train\train.json'

txt_save_path = './data/convert/yolo'

parseJsonFile(json_path, txt_save_path)

# print("image nums: {}".format(images_nums))

# print("category nums: {}".format(category_nums))

# print("bbox nums: {}".format(bbox_nums))
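A YOLO label line is `class x_center y_center w h`, with all four box values divided by the image size and the class given as a 0-based index into `classes.txt`. The sketch below shows the conversion for one annotation, assuming `cat_ids` is the ordered id list that `coco.getCatIds()` returns; the helper name `coco_to_yolo_line` is hypothetical:

```python
def coco_to_yolo_line(bbox, category_id, cat_ids, img_w, img_h):
    """Format one COCO annotation as a YOLO label line.

    bbox is COCO [x, y, w, h] in pixels; cat_ids is the ordered list of
    category ids, so its index matches the line number in classes.txt.
    """
    x, y, w, h = map(float, bbox)
    xc = (x + w / 2.0) / img_w   # normalized box center
    yc = (y + h / 2.0) / img_h
    class_index = cat_ids.index(category_id)
    return "{} {:.5f} {:.5f} {:.5f} {:.5f}".format(
        class_index, xc, yc, w / img_w, h / img_h)


if __name__ == "__main__":
    # Category ids with a gap (1, 2, 4): id 4 maps to class index 2.
    print(coco_to_yolo_line([100, 50, 200, 100], 4, [1, 2, 4], 400, 200))
    # 2 0.50000 0.50000 0.50000 0.50000
```

Remapping the id matters for datasets such as COCO 2017, whose category ids are not contiguous.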

2. VOC

2.1 VOC2CoCo

import xml.etree.ElementTree as ET

import os

import json

from datetime import datetime

import sys

import argparse

coco = dict()

coco['images'] = []

coco['type'] = 'instances'

coco['annotations'] = []

coco['categories'] = []

category_set = dict()

image_set = set()

category_item_id = -1

image_id = 000000

annotation_id = 0

def addCatItem(name):

global category_item_id

category_item = dict()

category_item['supercategory'] = 'none'

category_item_id += 1

category_item['id'] = category_item_id

category_item['name'] = name

coco['categories'].append(category_item)

category_set[name] = category_item_id

return category_item_id

def addImgItem(file_name, size):

global image_id

if file_name is None:

raise Exception('Could not find filename tag in xml file.')

if size['width'] is None:

raise Exception('Could not find width tag in xml file.')

if size['height'] is None:

raise Exception('Could not find height tag in xml file.')

image_id += 1

image_item = dict()

image_item['id'] = image_id

image_item['file_name'] = file_name

image_item['width'] = size['width']

image_item['height'] = size['height']

image_item['license'] = None

image_item['flickr_url'] = None

image_item['coco_url'] = None

image_item['date_captured'] = str(datetime.today())

coco['images'].append(image_item)

image_set.add(file_name)

return image_id

def addAnnoItem(object_name, image_id, category_id, bbox):

global annotation_id

annotation_item = dict()

annotation_item['segmentation'] = []

seg = []

# bbox[] is x,y,w,h

# left_top

seg.append(bbox[0])

seg.append(bbox[1])

# left_bottom

seg.append(bbox[0])

seg.append(bbox[1] + bbox[3])

# right_bottom

seg.append(bbox[0] + bbox[2])

seg.append(bbox[1] + bbox[3])

# right_top

seg.append(bbox[0] + bbox[2])

seg.append(bbox[1])

annotation_item['segmentation'].append(seg)

annotation_item['area'] = bbox[2] * bbox[3]

annotation_item['iscrowd'] = 0

annotation_item['ignore'] = 0

annotation_item['image_id'] = image_id

annotation_item['bbox'] = bbox

annotation_item['category_id'] = category_id

annotation_id += 1

annotation_item['id'] = annotation_id

coco['annotations'].append(annotation_item)

def read_image_ids(image_sets_file):

ids = []

with open(image_sets_file, 'r') as f:

for line in f.readlines():

ids.append(line.strip())

return ids

def parseXmlFilse(data_dir, json_save_path, split='train'):

assert os.path.exists(data_dir), "data path:{} does not exist".format(data_dir)

labelfile = split + ".txt"

image_sets_file = os.path.join(data_dir, "ImageSets", "Main", labelfile)

xml_files_list = []

if os.path.isfile(image_sets_file):

ids = read_image_ids(image_sets_file)

xml_files_list = [os.path.join(data_dir, "Annotations", f"{i}.xml") for i in ids]

elif os.path.isdir(data_dir):

# Change the XML directory here if needed

# xml_dir = os.path.join(data_dir,"labels/voc")

xml_dir = data_dir

xml_list = os.listdir(xml_dir)

xml_files_list = [os.path.join(xml_dir, i) for i in xml_list]

for xml_file in xml_files_list:

if not xml_file.endswith('.xml'):

continue

tree = ET.parse(xml_file)

root = tree.getroot()

# Initialize

size = dict()

size['width'] = None

size['height'] = None

if root.tag != 'annotation':

raise Exception('pascal voc xml root element should be annotation, rather than {}'.format(root.tag))

# Extract the image file name

file_name = root.findtext('filename')

assert file_name is not None, "filename is not in the file"

# Extract the image size {width, height, depth}

size_info = root.findall('size')

# findall returns a list (never None), so check that it is non-empty

assert len(size_info) > 0, "size is not in the file"

for subelem in size_info[0]:

size[subelem.tag] = int(subelem.text)

if file_name is not None and size['width'] is not None and file_name not in image_set:

# Append to coco['images'] and get the current image id

current_image_id = addImgItem(file_name, size)

print('add image with name: {}\tand\tsize: {}'.format(file_name, size))

elif file_name in image_set:

raise Exception('file_name duplicated')

else:

raise Exception("file name:{}\t size:{}".format(file_name, size))

# Extract the annotations of all objects in the image

object_info = root.findall('object')

if len(object_info) == 0:

continue

# Iterate over the annotation of each object

for object in object_info:

# Extract the object name

object_name = object.findtext('name')

if object_name not in category_set:

# Create a new category index

current_category_id = addCatItem(object_name)

else:

current_category_id = category_set[object_name]

# Initialize the box fields

bndbox = dict()

bndbox['xmin'] = None

bndbox['xmax'] = None

bndbox['ymin'] = None

bndbox['ymax'] = None

# Extract the box: [xmin, ymin, xmax, ymax]

bndbox_info = object.findall('bndbox')

for box in bndbox_info[0]:

# int(float(...)) also accepts coordinates written as "12.0"

bndbox[box.tag] = int(float(box.text))

if bndbox['xmin'] is not None:

if object_name is None:

raise Exception('xml structure broken at bndbox tag')

if current_image_id is None:

raise Exception('xml structure broken at bndbox tag')

if current_category_id is None:

raise Exception('xml structure broken at bndbox tag')

bbox = []

# x

bbox.append(bndbox['xmin'])

# y

bbox.append(bndbox['ymin'])

# w

bbox.append(bndbox['xmax'] - bndbox['xmin'])

# h

bbox.append(bndbox['ymax'] - bndbox['ymin'])

print('add annotation with object_name:{}\timage_id:{}\tcat_id:{}\tbbox:{}'.format(object_name,

current_image_id,

current_category_id,

bbox))

addAnnoItem(object_name, current_image_id, current_category_id, bbox)

json_parent_dir = os.path.dirname(json_save_path)

if not os.path.exists(json_parent_dir):

os.makedirs(json_parent_dir)

with open(json_save_path, 'w') as f:

json.dump(coco, f)

print("class nums:{}".format(len(coco['categories'])))

print("image nums:{}".format(len(coco['images'])))

print("bbox nums:{}".format(len(coco['annotations'])))

if __name__ == '__main__':

"""

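Script description:

Converts VOC-format .xml annotation files into a COCO-format .json annotation file.

Arguments:

voc_data_dir: one of two layouts

1. Path to the VOC2012 folder; VOC2012/ImageSets/Main/xx.txt is located automatically

2. A folder that directly contains the XML annotation files

json_save_path: path of the output JSON file

split: only used with layout 1 to pick xx.txt, e.g. train.txt; ignored with layout 2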

"""

voc_data_dir = 'D:/jinxData/voctest/Annotations'

json_save_path = 'D:/jinxData/voc/voc2coco/train.json'

split = 'train'

parseXmlFilse(data_dir=voc_data_dir, json_save_path=json_save_path, split=split)

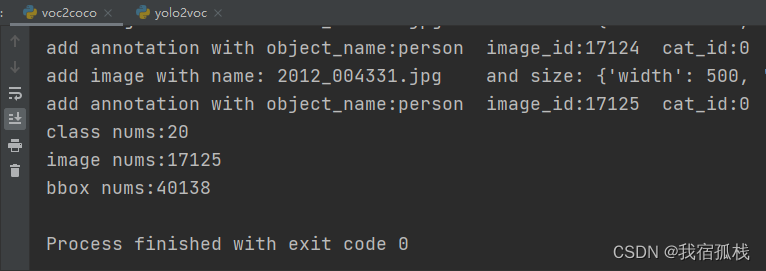

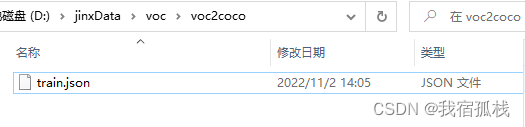

All XML annotation files under the Annotations directory have been written into a single JSON file in COCO format.
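For a single box, the script derives three COCO fields from the VOC corners: `bbox`, `area`, and a rectangular `segmentation` polygon listed as left-top, left-bottom, right-bottom, right-top. A minimal sketch of that mapping; the helper name `voc_box_to_coco_fields` is hypothetical:

```python
def voc_box_to_coco_fields(xmin, ymin, xmax, ymax):
    """Derive COCO bbox, area and a rectangle polygon from VOC corners."""
    w = xmax - xmin
    h = ymax - ymin
    polygon = [
        xmin, ymin,          # left-top
        xmin, ymin + h,      # left-bottom
        xmin + w, ymin + h,  # right-bottom
        xmin + w, ymin,      # right-top
    ]
    return {"bbox": [xmin, ymin, w, h], "area": w * h, "segmentation": [polygon]}


if __name__ == "__main__":
    print(voc_box_to_coco_fields(10, 20, 40, 60))
```

For the box `(10, 20, 40, 60)` this gives `bbox` `[10, 20, 30, 40]` and `area` `1200`.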

2.2 VOC2YOLO

import os

import json

import shutil

from lxml import etree

from tqdm import tqdm

category_set = set()

image_set = set()

bbox_nums = 0

class VOC2YOLO:

def __init__(self):

self.original_datasets = 'voc'

self.to_datasets = 'yolo'

def parse_xml_to_dict(self, xml):

"""

Parse an XML tree into a dict; adapted from TensorFlow's recursive_parse_xml_to_dict

Args:

xml: xml tree obtained by parsing XML file contents using lxml.etree

Returns:

Python dictionary holding XML contents.

"""

if len(xml) == 0: # leaf element: return the text of this tag directly

return {xml.tag: xml.text}

result = {}

for child in xml:

child_result = self.parse_xml_to_dict(child) # recurse into the child elements

if child.tag != 'object':

result[child.tag] = child_result[child.tag]

else:

if child.tag not in result: # there may be several objects, so collect them in a list

result[child.tag] = []

result[child.tag].append(child_result[child.tag])

return {xml.tag: result}

def write_classIndices(self, category_set):

class_indices = dict((k, v) for v, k in enumerate(category_set))

json_str = json.dumps(dict((val, key) for key, val in class_indices.items()), indent=4)

with open('class_indices.json', 'w') as json_file:

json_file.write(json_str)

def xyxy2xywhn(self, bbox, size):

bbox = list(map(float, bbox))

size = list(map(float, size))

xc = (bbox[0] + (bbox[2] - bbox[0]) / 2.) / size[0]

yc = (bbox[1] + (bbox[3] - bbox[1]) / 2.) / size[1]

wn = (bbox[2] - bbox[0]) / size[0]

hn = (bbox[3] - bbox[1]) / size[1]

return (xc, yc, wn, hn)

def parser_info(self, info: dict, only_cat=True, class_indices=None):

filename = info['annotation']['filename']

image_set.add(filename)

objects = []

width = int(info['annotation']['size']['width'])

height = int(info['annotation']['size']['height'])

for obj in info['annotation'].get('object', []): # an XML without objects has no 'object' key

obj_name = obj['name']

category_set.add(obj_name)

if only_cat:

continue

xmin = round(float(obj['bndbox']['xmin']))

ymin = round(float(obj['bndbox']['ymin']))

xmax = round(float(obj['bndbox']['xmax']))

ymax = round(float(obj['bndbox']['ymax']))

bbox = self.xyxy2xywhn((xmin, ymin, xmax, ymax), (width, height))

if class_indices is not None:

obj_category = class_indices[obj_name]

object = [obj_category, bbox]

objects.append(object)

return filename, objects

def parseXmlFilse(self, voc_dir, save_dir):

assert os.path.exists(voc_dir), "ERROR {} does not exists".format(voc_dir)

if os.path.exists(save_dir):

shutil.rmtree(save_dir)

os.makedirs(save_dir)

xml_files = [os.path.join(voc_dir, i) for i in os.listdir(voc_dir) if os.path.splitext(i)[-1] == '.xml']

for xml_file in xml_files:

with open(xml_file, 'rb') as fid: # bytes: lxml rejects str input that carries an encoding declaration

xml_str = fid.read()

xml = etree.fromstring(xml_str)

info_dict = self.parse_xml_to_dict(xml)

self.parser_info(info_dict, only_cat=True)

with open(save_dir + "/classes.txt", 'w') as classes_file:

for cat in sorted(category_set):

classes_file.write("{}\n".format(cat))

class_indices = dict((v, k) for k, v in enumerate(sorted(category_set)))

xml_files = tqdm(xml_files)

for xml_file in xml_files:

with open(xml_file, 'rb') as fid: # bytes: lxml rejects str input that carries an encoding declaration

xml_str = fid.read()

xml = etree.fromstring(xml_str)

info_dict = self.parse_xml_to_dict(xml)

filename, objects = self.parser_info(info_dict, only_cat=False, class_indices=class_indices)

if len(objects) != 0:

global bbox_nums

bbox_nums += len(objects)

with open(os.path.join(save_dir, os.path.splitext(filename)[0] + ".txt"), 'w') as f:

for obj in objects:

f.write(

"{} {:.5f} {:.5f} {:.5f} {:.5f}\n".format(obj[0], obj[1][0], obj[1][1], obj[1][2],

obj[1][3]))

if __name__ == '__main__':

voc2yolo = VOC2YOLO()

voc_dir = 'D:/jinxData/voctest/Annotations'

save_dir = 'D:/jinxData/voctest/convert'

voc2yolo.parseXmlFilse(voc_dir, save_dir)

print("image nums: {}".format(len(image_set)))

print("category nums: {}".format(len(category_set)))

print("bbox nums: {}".format(bbox_nums))

This converts all the labels at once. The following code then splits them into train, val, and test sets by ratio:

import os

import random

def voc_proportion_divide(xmlfilepath, txtsavepath, trainval_percent, train_percent):

'''

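Split a VOC dataset into train/val/test by custom ratios.

Args:

xmlfilepath: directory of the XML files, usually Annotations, e.g. 'D:/jinx/Annotations'

txtsavepath: the ImageSets/Main directory of your own dataset, e.g. 'D:/jinx/ImageSets/Main'

trainval_percent: fraction of all samples used for train + val

train_percent: fraction of train + val used for train (e.g. trainval_percent=0.8, train_percent=0.875 gives 0.7 train, 0.1 val, 0.2 test; train_percent=0.7 would give 0.56 train, 0.24 val, 0.2 test)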

'''

total_xml = os.listdir(xmlfilepath)

if not os.path.exists(txtsavepath):

os.makedirs(txtsavepath)

num = len(total_xml)

list_index = range(num)

tv = int(num * trainval_percent)

tr = int(tv * train_percent)

trainval = random.sample(list_index, tv)

train = random.sample(trainval, tr)

file_trainval = open(txtsavepath + '/trainval.txt', 'w')

file_test = open(txtsavepath + '/test.txt', 'w')

file_train = open(txtsavepath + '/train.txt', 'w')

file_val = open(txtsavepath + '/val.txt', 'w')

for i in list_index:

name = total_xml[i][:-4] + '\n'

if i in trainval:

file_trainval.write(name)

if i in train:

file_train.write(name)

else:

file_val.write(name)

else:

file_test.write(name)

file_trainval.close()

file_train.close()

file_val.close()

file_test.close()
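Because `train_percent` is applied to the train + val subset rather than to the whole dataset, the resulting split sizes are easy to misjudge. The sketch below repeats only the size arithmetic of `voc_proportion_divide`; the helper name `split_sizes` is hypothetical:

```python
def split_sizes(num, trainval_percent, train_percent):
    """Return the number of samples that end up in train, val and test."""
    tv = int(num * trainval_percent)   # size of train + val
    tr = int(tv * train_percent)       # size of train, taken from train + val
    return {"train": tr, "val": tv - tr, "test": num - tv}


if __name__ == "__main__":
    print(split_sizes(1000, 0.8, 0.875))  # {'train': 700, 'val': 100, 'test': 200}
    print(split_sizes(1000, 0.8, 0.7))    # {'train': 560, 'val': 240, 'test': 200}
```

If a reproducible split is needed, calling `random.seed(...)` with a fixed value before the sampling is one option.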

3. YOLO

3.1 YOLO2CoCo

import argparse

import json

import os

import sys

import shutil

from datetime import datetime

import cv2

coco = dict()

coco['images'] = []

coco['type'] = 'instances'

coco['annotations'] = []

coco['categories'] = []

category_set = dict()

image_set = set()

image_id = 000000

annotation_id = 0

def addCatItem(category_dict):

for k, v in category_dict.items():

category_item = dict()

category_item['supercategory'] = 'none'

category_item['id'] = int(k)

category_item['name'] = v

coco['categories'].append(category_item)

def addImgItem(file_name, size):

global image_id

image_id += 1

image_item = dict()

image_item['id'] = image_id

image_item['file_name'] = file_name

image_item['width'] = size[1]

image_item['height'] = size[0]

image_item['license'] = None

image_item['flickr_url'] = None

image_item['coco_url'] = None

image_item['date_captured'] = str(datetime.today())

coco['images'].append(image_item)

image_set.add(file_name)

return image_id

def addAnnoItem(object_name, image_id, category_id, bbox):

global annotation_id

annotation_item = dict()

annotation_item['segmentation'] = []

seg = []

# bbox[] is x,y,w,h

# left_top

seg.append(bbox[0])

seg.append(bbox[1])

# left_bottom

seg.append(bbox[0])

seg.append(bbox[1] + bbox[3])

# right_bottom

seg.append(bbox[0] + bbox[2])

seg.append(bbox[1] + bbox[3])

# right_top

seg.append(bbox[0] + bbox[2])

seg.append(bbox[1])

annotation_item['segmentation'].append(seg)

annotation_item['area'] = bbox[2] * bbox[3]

annotation_item['iscrowd'] = 0

annotation_item['ignore'] = 0

annotation_item['image_id'] = image_id

annotation_item['bbox'] = bbox

annotation_item['category_id'] = category_id

annotation_id += 1

annotation_item['id'] = annotation_id

coco['annotations'].append(annotation_item)

def xywhn2xywh(bbox, size):

bbox = list(map(float, bbox))

size = list(map(float, size))

xmin = (bbox[0] - bbox[2] / 2.) * size[1]

ymin = (bbox[1] - bbox[3] / 2.) * size[0]

w = bbox[2] * size[1]

h = bbox[3] * size[0]

box = (xmin, ymin, w, h)

return list(map(int, box))

def parseXmlFilse(image_path, anno_path, save_path, json_name='train.json'):

assert os.path.exists(image_path), "ERROR {} does not exist".format(image_path)

assert os.path.exists(anno_path), "ERROR {} does not exist".format(anno_path)

if os.path.exists(save_path):

shutil.rmtree(save_path)

os.makedirs(save_path)

json_path = os.path.join(save_path, json_name)

category_set = []

with open(anno_path + '/classes.txt', 'r') as f:

for i in f.readlines():

category_set.append(i.strip())

category_id = dict((k, v) for k, v in enumerate(category_set))

addCatItem(category_id)

images = [os.path.join(image_path, i) for i in os.listdir(image_path)]

files = [os.path.join(anno_path, i) for i in os.listdir(anno_path)]

images_index = dict((v.split(os.sep)[-1][:-4], k) for k, v in enumerate(images))

for file in files:

if os.path.splitext(file)[-1] != '.txt' or 'classes' in file.split(os.sep)[-1]:

continue

if file.split(os.sep)[-1][:-4] in images_index:

index = images_index[file.split(os.sep)[-1][:-4]]

img = cv2.imread(images[index])

shape = img.shape

filename = images[index].split(os.sep)[-1]

current_image_id = addImgItem(filename, shape)

else:

continue

with open(file, 'r') as fid:

for i in fid.readlines():

i = i.strip().split()

category = int(i[0])

category_name = category_id[category]

bbox = xywhn2xywh((i[1], i[2], i[3], i[4]), shape)

addAnnoItem(category_name, current_image_id, category, bbox)

with open(json_path, 'w') as f:

json.dump(coco, f)

print("class nums:{}".format(len(coco['categories'])))

print("image nums:{}".format(len(coco['images'])))

print("bbox nums:{}".format(len(coco['annotations'])))

if __name__ == '__main__':

"""

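Script description:

Converts YOLO-format .txt annotation files into a COCO-format .json annotation file.

Arguments:

anno_path: directory of the .txt annotation files (must also contain classes.txt)

save_path: output directory for the JSON file

image_path: directory of the images

json_name: name of the JSON file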

"""

anno_path = 'D:/jinxData/TT100K45/labels/test'

save_path = 'D:/jinxData/YOLO/yolo2coco/test'

image_path = 'D:/jinxData/TT100K45/images/test'

json_name = 'train.json'

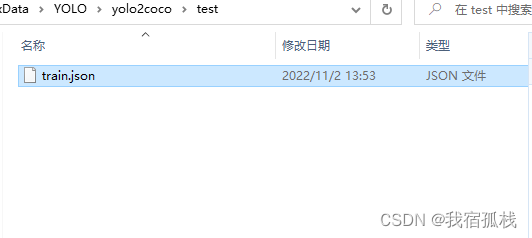

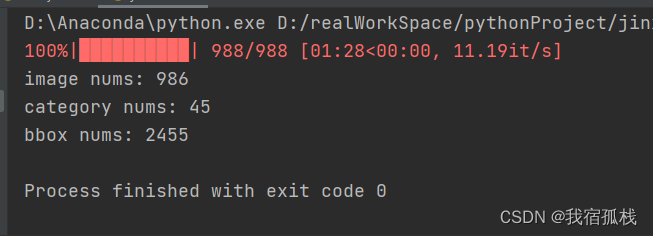

parseXmlFilse(image_path, anno_path, save_path, json_name)

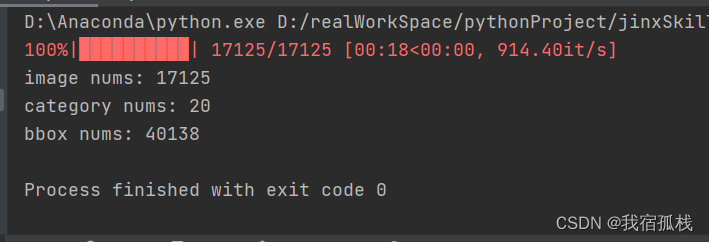

The train and val splits are converted the same way.
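The conversion needs the image size because YOLO boxes are normalized. Note that `cv2.imread(...).shape` is `(height, width, channels)`, which is why the script multiplies x values by `size[1]` and y values by `size[0]`. A minimal sketch with explicit width and height arguments; the helper name `yolo_to_coco_bbox` is hypothetical:

```python
def yolo_to_coco_bbox(xc, yc, w, h, img_w, img_h):
    """Convert a normalized YOLO box to a COCO [x, y, w, h] box in pixels."""
    x = (xc - w / 2.0) * img_w   # left edge
    y = (yc - h / 2.0) * img_h   # top edge
    # Truncate to int, as the script does with list(map(int, box))
    return [int(x), int(y), int(w * img_w), int(h * img_h)]


if __name__ == "__main__":
    print(yolo_to_coco_bbox(0.5, 0.5, 0.5, 0.5, 400, 200))  # [100, 50, 200, 100]
```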

3.2 YOLO2VOC

import argparse

import os

import sys

import shutil

import cv2

from lxml import etree, objectify

from tqdm import tqdm

images_nums = 0

category_nums = 0

bbox_nums = 0

# Write the annotation info to an XML file

def save_anno_to_xml(filename, size, objs, save_path):

E = objectify.ElementMaker(annotate=False)

anno_tree = E.annotation(

E.folder("DATA"),

E.filename(filename),

E.source(

E.database("The VOC Database"),

E.annotation("PASCAL VOC"),

E.image("flickr")

),

E.size(

E.width(size[1]),

E.height(size[0]),

E.depth(size[2])

),

E.segmented(0)

)

for obj in objs:

E2 = objectify.ElementMaker(annotate=False)

anno_tree2 = E2.object(

E.name(obj[0]),

E.pose("Unspecified"),

E.truncated(0),

E.difficult(0),

E.bndbox(

E.xmin(obj[1][0]),

E.ymin(obj[1][1]),

E.xmax(obj[1][2]),

E.ymax(obj[1][3])

)

)

anno_tree.append(anno_tree2)

anno_path = os.path.join(save_path, os.path.splitext(filename)[0] + ".xml")

etree.ElementTree(anno_tree).write(anno_path, pretty_print=True)

def xywhn2xyxy(bbox, size):

bbox = list(map(float, bbox))

size = list(map(float, size))

xmin = (bbox[0] - bbox[2] / 2.) * size[1]

ymin = (bbox[1] - bbox[3] / 2.) * size[0]

xmax = (bbox[0] + bbox[2] / 2.) * size[1]

ymax = (bbox[1] + bbox[3] / 2.) * size[0]

box = [xmin, ymin, xmax, ymax]

return list(map(int, box))

def parseXmlFilse(image_path, anno_path, save_path):

global images_nums, category_nums, bbox_nums

assert os.path.exists(image_path), "ERROR {} does not exist".format(image_path)

assert os.path.exists(anno_path), "ERROR {} does not exist".format(anno_path)

if os.path.exists(save_path):

shutil.rmtree(save_path)

os.makedirs(save_path)

category_set = []

with open(anno_path + '/classes.txt', 'r') as f:

for i in f.readlines():

category_set.append(i.strip())

category_nums = len(category_set)

category_id = dict((k, v) for k, v in enumerate(category_set))

images = [os.path.join(image_path, i) for i in os.listdir(image_path)]

files = [os.path.join(anno_path, i) for i in os.listdir(anno_path)]

images_index = dict((v.split(os.sep)[-1][:-4], k) for k, v in enumerate(images))

images_nums = len(images)

for file in tqdm(files):

if os.path.splitext(file)[-1] != '.txt' or 'classes' in file.split(os.sep)[-1]:

continue

if file.split(os.sep)[-1][:-4] in images_index:

index = images_index[file.split(os.sep)[-1][:-4]]

img = cv2.imread(images[index])

shape = img.shape

filename = images[index].split(os.sep)[-1]

else:

continue

objects = []

with open(file, 'r') as fid:

for i in fid.readlines():

i = i.strip().split()

category = int(i[0])

category_name = category_id[category]

bbox = xywhn2xyxy((i[1], i[2], i[3], i[4]), shape)

obj = [category_name, bbox]

objects.append(obj)

bbox_nums += len(objects)

save_anno_to_xml(filename, shape, objects, save_path)

if __name__ == '__main__':

"""

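Script description:

Converts YOLO-format .txt annotation files into VOC-format .xml annotation files.

Arguments:

anno_path: directory of the .txt annotation files (must also contain classes.txt)

save_path: output directory for the XML files

image_path: directory of the images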

"""

anno_path = 'D:/jinxData/TT100K45/labels/test'

save_path = 'D:/jinxData/YOLO/yolo2voc/test'

image_path = 'D:/jinxData/TT100K45/images/test'

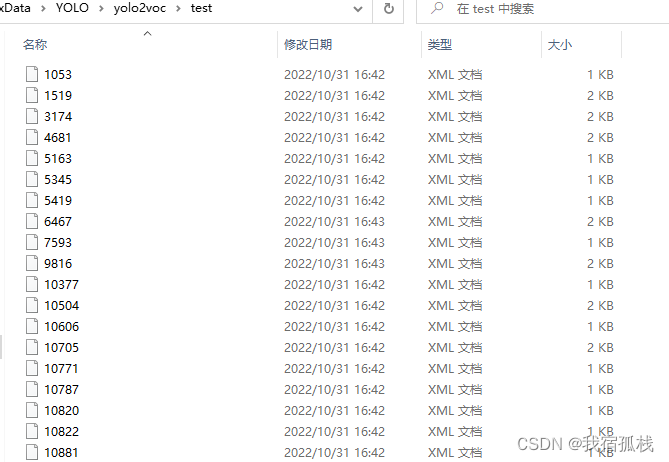

parseXmlFilse(image_path, anno_path, save_path)

print("image nums: {}".format(images_nums))

print("category nums: {}".format(category_nums))

print("bbox nums: {}".format(bbox_nums))

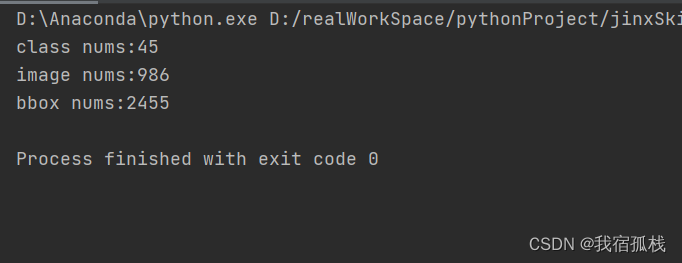

Run it once each for train, val, and test.

The code above is adapted from the blog post "数据转换" (Data Conversion).
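The YOLO-to-VOC box conversion can be sketched on its own as follows. The clamping to the image bounds is an addition that the script above does not perform; it is included here as an assumption, since rounding in YOLO labels can push a corner slightly outside the image. The helper name `yolo_to_voc_box` is hypothetical:

```python
def yolo_to_voc_box(xc, yc, w, h, img_w, img_h):
    """Convert a normalized YOLO box to VOC [xmin, ymin, xmax, ymax] in pixels."""
    xmin = int((xc - w / 2.0) * img_w)
    ymin = int((yc - h / 2.0) * img_h)
    xmax = int((xc + w / 2.0) * img_w)
    ymax = int((yc + h / 2.0) * img_h)
    # Assumption (not in the original script): clamp corners to the image
    xmin, ymin = max(0, xmin), max(0, ymin)
    xmax, ymax = min(img_w, xmax), min(img_h, ymax)
    return [xmin, ymin, xmax, ymax]


if __name__ == "__main__":
    print(yolo_to_voc_box(0.5, 0.5, 0.5, 0.5, 400, 200))  # [100, 50, 300, 150]
```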

4. TT100K

4.1 TT100K2YOLO

import os

import json

from random import random

import cv2

import shutil


import xml.dom.minidom

from tqdm import tqdm

import argparse

class TT100K2COCO:

def __init__(self):

self.original_datasets = 'tt100k'

self.to_datasets = 'coco'

def class_statistics(self):

# os.makedirs('annotations', exist_ok=True)

# Parent directory of the data

parent_path = 'D:/jinxData/TT100K/data'

# Read the original TT100K annotation file

with open(os.path.join(parent_path, 'annotations.json')) as origin_json:

origin_dict = json.load(origin_json)

classes = origin_dict['types']

# Map each category to the list of images that contain it

sta = {}

for i in classes:

sta[i] = []

images_dic = origin_dict['imgs']

# All images that are kept

saved_images = []

# Iterate over the TT100K imgs

for image_id in images_dic:

image_element = images_dic[image_id]

image_path = image_element['path']

# Keep only the image file name

image_path = image_path.split('/')[-1]

obj_list = image_element['objects']

# Iterate over the annotations of each image

for anno_dic in obj_list:

label_key = anno_dic['category']

# Avoid adding the same image to a category more than once

if image_path not in sta[label_key]:

sta[label_key].append(image_path)

# Keep only the categories that appear in at least 100 images

result = {k: v for k, v in sta.items() if len(v) >= 100}

for i in result:

print("the type of {} includes {} images".format(i, len(result[i])))

saved_images.extend(result[i])

saved_images = list(set(saved_images))

print("total types is {}".format(len(result)))

type_list = list(result.keys())

result = {"type": type_list, "details": result, "images": saved_images}

print(type_list)

# Save the result

json_name = os.path.join(parent_path, 'statistics.json')

with open(json_name, 'w', encoding="utf-8") as f:

json.dump(result, f, ensure_ascii=False, indent=1)

def original_datasets2object_datasets_re(self):

'''

Re-split the dataset into train/val/test.

:return:

'''

# os.makedirs('annotations2', exist_ok=True)

# Parent directory of the data

parent_path = 'D:/jinxData/TT100K/data'

# Read the original TT100K annotation file

with open(os.path.join(parent_path, 'annotations.json')) as origin_json:

origin_dict = json.load(origin_json)

with open(os.path.join(parent_path, 'statistics.json')) as select_json:

select_dict = json.load(select_json)

classes = select_dict['type']

train_dataset = {'info': {}, 'licenses': [], 'categories': [], 'images': [], 'annotations': []}

val_dataset = {'info': {}, 'licenses': [], 'categories': [], 'images': [], 'annotations': []}

test_dataset = {'info': {}, 'licenses': [], 'categories': [], 'images': [], 'annotations': []}

label = {} # id of each sign category

count = {} # number of images counted so far for each category

owntype_sum = {} # total number of images whose dominant category is this one

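info = {

"year": 2021, # year

"version": '1.0', # version

"description": "TT100k_to_coco", # dataset description

"contributor": "Tencent&Tsinghua", # contributor

"url": 'https://cg.cs.tsinghua.edu.cn/traffic-sign/', # download URL

"date_created": "2021-01-15" # a string; the unquoted 2021 - 1 - 15 evaluates to 2005

}

# COCO expects licenses to be a list of dicts

licenses = [{

"id": 1,

"name": "null",

"url": "null",

}]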

train_dataset['info'] = info

val_dataset['info'] = info

test_dataset['info'] = info

train_dataset['licenses'] = licenses

val_dataset['licenses'] = licenses

test_dataset['licenses'] = licenses

# Build the category-to-id mapping

for i, cls in enumerate(classes):

train_dataset['categories'].append({'id': i, 'name': cls, 'supercategory': 'traffic_sign'})

val_dataset['categories'].append({'id': i, 'name': cls, 'supercategory': 'traffic_sign'})

test_dataset['categories'].append({'id': i, 'name': cls, 'supercategory': 'traffic_sign'})

label[cls] = i

count[cls] = 0

owntype_sum[cls] = 0

images_dic = origin_dict['imgs']

obj_id = 1

# Count how many images each category "owns"

for image_id in images_dic:

image_element = images_dic[image_id]

image_path = image_element['path']

image_name = image_path.split('/')[-1]

# Skip images that are not among the selected ones

if image_name not in select_dict['images']:

continue

# Process the TT100K annotations

obj_list = image_element['objects']

# Count instances per category to find the dominant type of this image

includes_type = {}

for anno_dic in obj_list:

if anno_dic["category"] not in select_dict["type"]:

continue

# print(anno_dic["category"])

if anno_dic["category"] in includes_type:

includes_type[anno_dic["category"]] += 1

else:

includes_type[anno_dic["category"]] = 1

# print(includes_type)

own_type = max(includes_type, key=includes_type.get)

owntype_sum[own_type] += 1

# Convert the TT100K annotations to COCO annotations

for image_id in images_dic:

image_element = images_dic[image_id]

image_path = image_element['path']

image_name = image_path.split('/')[-1]

# Skip images that are not among the selected ones

if image_name not in select_dict['images']:

continue

print("dealing with {} image".format(image_path))

# shutil.copy(os.path.join(parent_path,image_path),os.path.join(parent_path,"dataset/JPEGImages"))

# Process the TT100K annotations

obj_list = image_element['objects']

# Count instances per category to find the dominant type of this image

includes_type = {}

for anno_dic in obj_list:

if anno_dic["category"] not in select_dict["type"]:

continue

# print(anno_dic["category"])

if anno_dic["category"] in includes_type:

includes_type[anno_dic["category"]] += 1

else:

includes_type[anno_dic["category"]] = 1

# print(includes_type)

own_type = max(includes_type, key=includes_type.get)

count[own_type] += 1

num_rate = count[own_type] / owntype_sum[own_type]

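# Switch which dataset object `dataset` refers to, so that the images of each category are split roughly 7:2:1 into train_set, val_set and test_set.

# Each image is assigned to the selected category that has the most instances in it.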

if num_rate < 0.7:

dataset = train_dataset

elif num_rate < 0.9:

dataset = val_dataset

else:

print("dataset=test_dataset")

dataset = test_dataset

for anno_dic in obj_list:

if anno_dic["category"] not in select_dict["type"]:

continue

x = anno_dic['bbox']['xmin']

y = anno_dic['bbox']['ymin']

width = anno_dic['bbox']['xmax'] - anno_dic['bbox']['xmin']

height = anno_dic['bbox']['ymax'] - anno_dic['bbox']['ymin']

label_key = anno_dic['category']

dataset['annotations'].append({

'area': width * height,

'bbox': [x, y, width, height],

'category_id': label[label_key],

'id': obj_id,

'image_id': image_id,

'iscrowd': 0,

# mask: the four rectangle vertices, clockwise from the top-left corner

'segmentation': [[x, y, x + width, y, x + width, y + height, x, y + height]]

})

# Each annotated object gets a unique id

obj_id += 1

# Read the image with OpenCV to get its width and height

im = cv2.imread(os.path.join(parent_path, image_path))

# print(image_path)

H, W, _ = im.shape

# Add the image info to the dataset

dataset['images'].append({

'file_name': image_name,

'id': image_id,

'width': W,

'height': H})

# Save the results

for phase in ['train', 'val', 'test']:

json_name = os.path.join(parent_path, 'dataset/annotations/{}.json'.format(phase))

json_name = json_name.replace('\\', '/')

with open(json_name, 'w', encoding="utf-8") as f:

if phase == 'train':

json.dump(train_dataset, f, ensure_ascii=False, indent=1)

if phase == 'val':

json.dump(val_dataset, f, ensure_ascii=False, indent=1)

if phase == 'test':

json.dump(test_dataset, f, ensure_ascii=False, indent=1)

def divide_TrainValTest(self, source, target):

'''

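Copy the images of a split into the matching folder under target.

:param source: directory of the source label files

:param target: destination root directory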

'''

# for i in ['train', 'val', 'test']:

# path = target + '/' + i

# if not os.path.exists(path):

# os.makedirs(path)

# Walk the file names in the directory and copy the matching images to the target directory

for root, dirs, files in os.walk(source):

for file in files:

file_name = os.path.splitext(file)[0]

if file_name == 'train' or file_name == 'val' or file_name =='test' or file_name =='classes':

continue

image_path = file_name + '.jpg'

# print(image_path)

if 'train' in source:

shutil.copyfile('D:/jinxData/TT100K/data/image_reparation/'

+ image_path, target + '/train/' + image_path)

elif 'val' in source:

shutil.copyfile('D:/jinxData/TT100K/data/image_reparation/'

+ image_path, target + '/val/' + image_path)

elif 'test' in source:

shutil.copyfile('D:/jinxData/TT100K/data/image_reparation/'

+ image_path, target + '/test/' + image_path)

if __name__ == '__main__':

tt100k = TT100K2COCO()

# tt100k.class_statistics()

# tt100k.original_datasets2object_datasets_re()

# tt100k.coco_json2yolo_txt('train')

# tt100k.coco_json2yolo_txt('val')

# tt100k.coco_json2yolo_txt('test')

tt100k.divide_TrainValTest('D:/jinxData/TT100K/data/dataset/annotations/train', 'D:/jinxData/TT100K/data')

tt100k.divide_TrainValTest('D:/jinxData/TT100K/data/dataset/annotations/val', 'D:/jinxData/TT100K/data')

tt100k.divide_TrainValTest('D:/jinxData/TT100K/data/dataset/annotations/test', 'D:/jinxData/TT100K/data')
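The split logic in `original_datasets2object_datasets_re` has two parts: each image is assigned to the selected category with the most instances in it, and the image then goes to train, val, or test depending on how far the running count for that category has progressed. A minimal sketch of both parts; the helper names `dominant_category` and `assign_split` are hypothetical:

```python
from collections import Counter


def dominant_category(categories, selected):
    """Return the selected category with the most instances in one image.

    Raises ValueError if the image contains no selected category.
    """
    counts = Counter(c for c in categories if c in selected)
    return max(counts, key=counts.get)


def assign_split(seen, total):
    """Pick a split from the running ratio seen / total of one category."""
    rate = seen / total
    if rate < 0.7:
        return "train"
    elif rate < 0.9:
        return "val"
    return "test"


if __name__ == "__main__":
    print(dominant_category(["pl40", "pl40", "p5", "w1"], {"pl40", "p5"}))
    # A category that owns 10 images, visited in order:
    print(Counter(assign_split(i, 10) for i in range(1, 11)))
```

Because the comparisons are strict, a category that owns 10 images ends up with 6 train, 2 val, and 2 test images, so the 7:2:1 ratio is only approximate for small categories.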