
This article walks through a demo that uses Celery to run multiple workers, each consuming tasks from its own queue.

1. Background

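Our business spans multiple cloud platforms. Resource synchronization, data backups, crawlers and similar jobs involve large numbers of long-running tasks, and a multi-process worker on a single machine can no longer keep up. At that point we need to scale out horizontally to meet the demand.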

2. Celery distributed task architecture

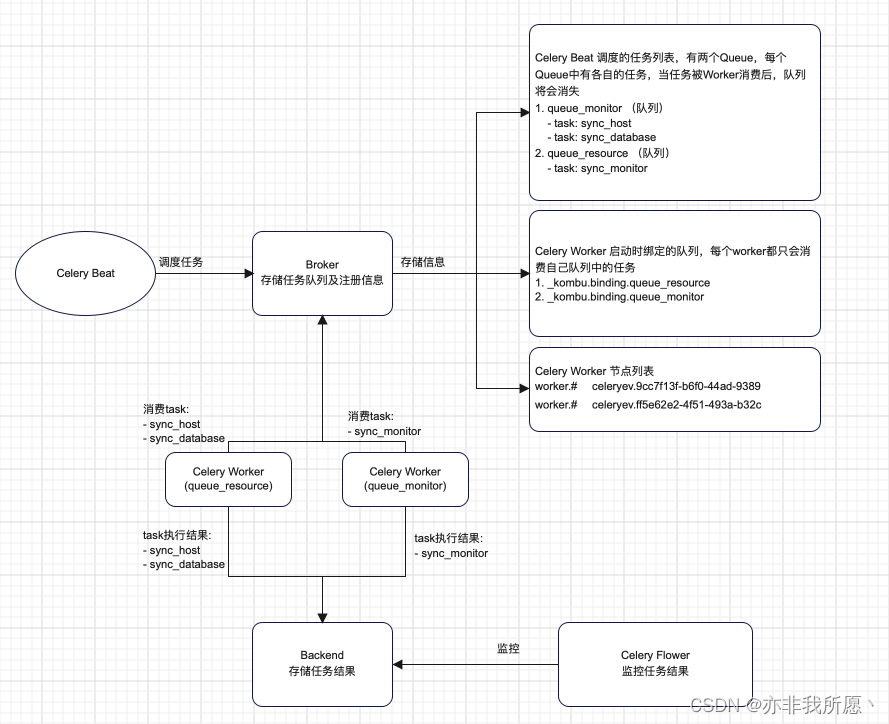

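2.1 Roles

celery beat: dispatches scheduled tasks to the task queues

celery worker: consumes tasks from the task queues. Two workers run in this example: one consumes tasks from queue_resource, the other from queue_monitor

celery flower: monitors task status and results

broker: Redis in this example, accessed through kombu; stores worker node information, the registered task queues, and tasks that have not been consumed yet

backend: Redis in this example; stores task results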
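The division of labour between beat, the queues and the workers can be pictured with a small in-process analogy. This is only a toy model built on the standard library (threads and `queue.Queue`), not Celery itself; the queue and task names are taken from this article, while the `worker` function and the sentinel-based shutdown are assumptions made for the sketch.

```python
import queue
import threading


def worker(name, q, results):
    """Consume task names from one queue until a None sentinel arrives."""
    while True:
        task = q.get()
        if task is None:
            break
        results.append((name, task))


# One queue per worker, mirroring queue_resource and queue_monitor
queues = {"queue_resource": queue.Queue(), "queue_monitor": queue.Queue()}
results = []
threads = [
    threading.Thread(target=worker, args=(f"worker-{name}", q, results))
    for name, q in queues.items()
]
for t in threads:
    t.start()

# Playing the role of beat: put each task on the queue it is routed to
dispatch = [
    ("sync_host", "queue_resource"),
    ("sync_database", "queue_resource"),
    ("sync_monitor", "queue_monitor"),
]
for task, queue_name in dispatch:
    queues[queue_name].put(task)

# Tell both workers to stop, then wait for them
for q in queues.values():
    q.put(None)
for t in threads:
    t.join()

print(sorted(results))
```

Each worker only ever sees the tasks placed on its own queue, which is the property the real deployment below relies on.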

2.2 Task flow diagram

3. Deploying the Celery tasks

3.1 Install dependencies

Python 3.9

pip install celery==5.2.7

pip install redis

pip install flower

3.2 Start beat

Once started, Celery beat begins dispatching tasks, as the Scheduler: Sending due task … lines in the output show:

./venv/bin/celery -A scheduler.celery beat -l INFO

celery beat v5.2.7 (dawn-chorus) is starting.

__ - ... __ - _

LocalTime -> 2023-04-08 17:00:40

Configuration ->

. broker -> redis://localhost:6379/10

. loader -> celery.loaders.app.AppLoader

. scheduler -> celery.beat.PersistentScheduler

. db -> celerybeat-schedule

. logfile -> [stderr]@%INFO

. maxinterval -> 5.00 minutes (300s)

[2023-04-08 17:00:40,273: INFO/MainProcess] beat: Starting...

[2023-04-08 17:00:40,314: INFO/MainProcess] Scheduler: Sending due task sync-monitor-001 (sync_monitor)

[2023-04-08 17:00:40,340: INFO/MainProcess] Scheduler: Sending due task sync-host-001 (sync_host)

[2023-04-08 17:00:40,343: INFO/MainProcess] Scheduler: Sending due task sync-database-001 (sync_database)

[2023-04-08 17:01:00,001: INFO/MainProcess] Scheduler: Sending due task sync-database-001 (sync_database)

[2023-04-08 17:01:00,011: INFO/MainProcess] Scheduler: Sending due task sync-host-001 (sync_host)

[2023-04-08 17:01:00,018: INFO/MainProcess] Scheduler: Sending due task sync-monitor-001 (sync_monitor)

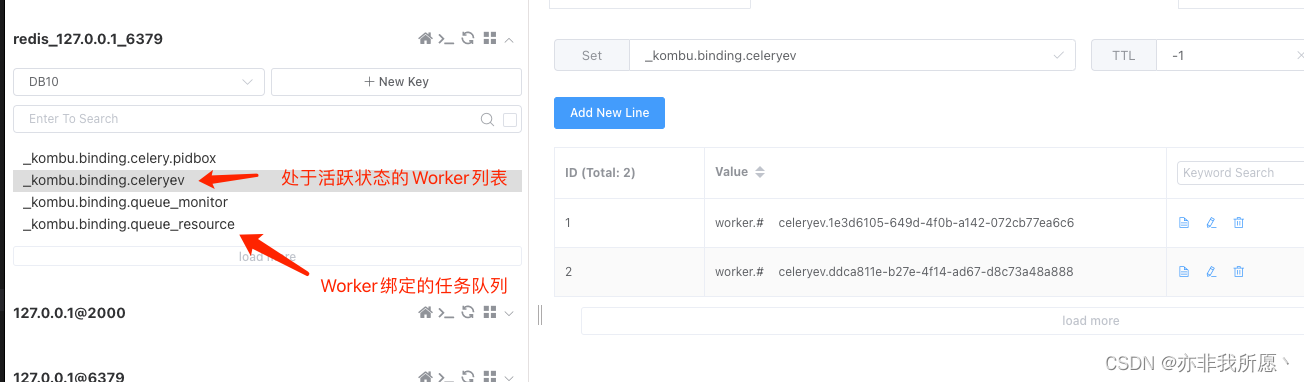

Inspect the task queues

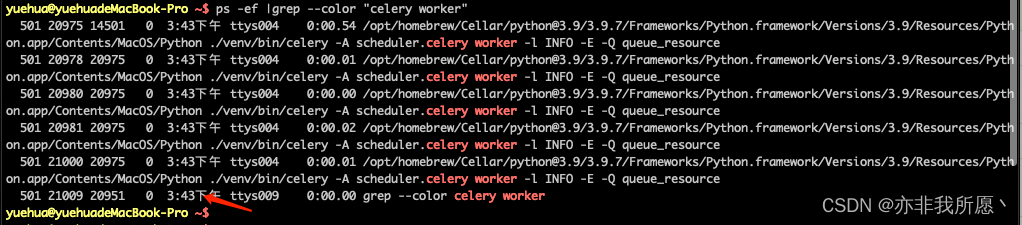

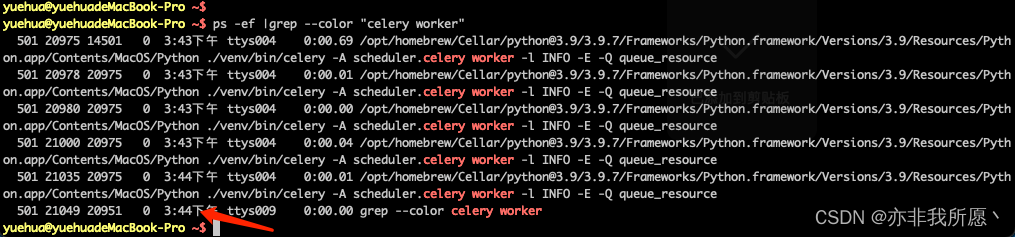

3.3 Start the workers

Two workers are started below. Each one receives only the tasks from its own queue, runs them to completion, and logs them as succeeded.

./venv/bin/celery -A scheduler.celery worker -l INFO -E -c 4 -Q queue_monitor

-------------- celery@yuehuadeMacBook-Pro.local v5.2.7 (dawn-chorus)

--- ***** -----

-- ******* ---- macOS-11.5.2-arm64-arm-64bit 2023-04-08 17:03:38

- *** --- * ---

- ** ---------- [config]

- ** ---------- .> app: celery_app:0x104308670

- ** ---------- .> transport: redis://localhost:6379/10

- ** ---------- .> results: redis://localhost:6379/11

- *** --- * --- .> concurrency: 4 (prefork)

-- ******* ---- .> task events: ON

--- ***** -----

-------------- [queues]

.> queue_monitor exchange=queue_monitor(direct) key=queue_monitor

[tasks]

. sync_database

. sync_host

. sync_monitor

[2023-04-08 17:03:38,768: INFO/MainProcess] Connected to redis://localhost:6379/10

[2023-04-08 17:03:38,780: INFO/MainProcess] mingle: searching for neighbors

[2023-04-08 17:03:39,809: INFO/MainProcess] mingle: all alone

[2023-04-08 17:03:39,852: INFO/MainProcess] celery@yuehuadeMacBook-Pro.local ready.

[2023-04-08 17:03:39,885: INFO/MainProcess] Task sync_monitor[fc4faff3-16ab-40ae-9c6f-fd8e25c5d933] received

[2023-04-08 17:03:39,889: INFO/MainProcess] Task sync_monitor[3348aac6-ffaa-4821-82e2-6cec6ab0a9e5] received

[2023-04-08 17:03:39,890: WARNING/ForkPoolWorker-2] this is a scheduled monitor task

[2023-04-08 17:03:39,890: WARNING/ForkPoolWorker-4] this is a scheduled monitor task

[2023-04-08 17:03:39,896: INFO/MainProcess] Task sync_monitor[467bada6-61aa-472e-bff2-6f27d81b6172] received

[2023-04-08 17:03:39,910: INFO/MainProcess] Task sync_monitor[2a267e9f-5d6e-439d-9698-83bcd0665c49] received

[2023-04-08 17:03:39,911: WARNING/ForkPoolWorker-1] this is a scheduled monitor task

[2023-04-08 17:03:39,911: WARNING/ForkPoolWorker-3] this is a scheduled monitor task

[2023-04-08 17:03:39,944: WARNING/ForkPoolWorker-2]

[2023-04-08 17:03:39,944: WARNING/ForkPoolWorker-4]

[2023-04-08 17:03:39,945: WARNING/ForkPoolWorker-2] 阿里云

[2023-04-08 17:03:39,945: WARNING/ForkPoolWorker-4] 阿里云

[2023-04-08 17:03:39,946: WARNING/ForkPoolWorker-3]

[2023-04-08 17:03:39,947: WARNING/ForkPoolWorker-3] 阿里云

[2023-04-08 17:03:39,947: WARNING/ForkPoolWorker-1]

[2023-04-08 17:03:39,947: WARNING/ForkPoolWorker-1] 阿里云

[2023-04-08 17:03:39,955: INFO/ForkPoolWorker-4] Task sync_monitor[3348aac6-ffaa-4821-82e2-6cec6ab0a9e5] succeeded in 0.06502870800000005s: []

[2023-04-08 17:03:39,955: INFO/ForkPoolWorker-2] Task sync_monitor[fc4faff3-16ab-40ae-9c6f-fd8e25c5d933] succeeded in 0.0649843750000001s: []

[2023-04-08 17:03:39,957: INFO/ForkPoolWorker-3] Task sync_monitor[2a267e9f-5d6e-439d-9698-83bcd0665c49] succeeded in 0.04604374999999994s: []

[2023-04-08 17:03:39,959: INFO/ForkPoolWorker-1] Task sync_monitor[467bada6-61aa-472e-bff2-6f27d81b6172] succeeded in 0.048174624999999915s: []

./venv/bin/celery -A scheduler.celery worker -l INFO -E -c 4 -Q queue_resource

-------------- celery@yuehuadeMacBook-Pro.local v5.2.7 (dawn-chorus)

--- ***** -----

-- ******* ---- macOS-11.5.2-arm64-arm-64bit 2023-04-08 17:05:26

- *** --- * ---

- ** ---------- [config]

- ** ---------- .> app: celery_app:0x102bd4670

- ** ---------- .> transport: redis://localhost:6379/10

- ** ---------- .> results: redis://localhost:6379/11

- *** --- * --- .> concurrency: 4 (prefork)

-- ******* ---- .> task events: ON

--- ***** -----

-------------- [queues]

.> queue_resource exchange=queue_resource(direct) key=queue_resource

[tasks]

. sync_database

. sync_host

. sync_monitor

[2023-04-08 17:05:26,987: INFO/MainProcess] Connected to redis://localhost:6379/10

[2023-04-08 17:05:26,993: INFO/MainProcess] mingle: searching for neighbors

[2023-04-08 17:05:28,021: INFO/MainProcess] mingle: all alone

[2023-04-08 17:05:28,059: INFO/MainProcess] celery@yuehuadeMacBook-Pro.local ready.

[2023-04-08 17:05:28,079: INFO/MainProcess] Task sync_host[ceb41611-cd7a-4d39-bf5e-aad1d4a31853] received

[2023-04-08 17:05:28,083: INFO/MainProcess] Task sync_database[f44e60a4-20bc-4bdc-a011-383f0dbd5593] received

[2023-04-08 17:05:28,084: WARNING/ForkPoolWorker-2] this is a scheduled host task

[2023-04-08 17:05:28,084: WARNING/ForkPoolWorker-4] this is a scheduled database task

[2023-04-08 17:05:28,088: INFO/MainProcess] Task sync_database[4cc7f4f2-6921-4417-91fb-10c80a3db2a6] received

[2023-04-08 17:05:28,095: INFO/MainProcess] Task sync_host[4eee9ac3-9f66-4239-802d-d59f59fc895b] received

[2023-04-08 17:05:28,095: WARNING/ForkPoolWorker-1] this is a scheduled database task

[2023-04-08 17:05:28,095: WARNING/ForkPoolWorker-3] this is a scheduled host task

[2023-04-08 17:05:28,101: INFO/MainProcess] Task sync_host[92dc3658-b3a0-4fb7-baea-a4c9d266e3ab] received

[2023-04-08 17:05:28,113: INFO/MainProcess] Task sync_database[d81e651f-31c0-4fb2-b5b2-11a02465c3f9] received

[2023-04-08 17:05:28,116: INFO/MainProcess] Task sync_host[894e4e4e-60b7-48f4-8594-78009e13185d] received

[2023-04-08 17:05:28,123: INFO/MainProcess] Task sync_database[c7c17430-cfc3-4e46-87dd-cf436612a04c] received

[2023-04-08 17:05:28,124: WARNING/ForkPoolWorker-2]

[2023-04-08 17:05:28,124: WARNING/ForkPoolWorker-2] cn-zhangjiakou

[2023-04-08 17:05:28,125: WARNING/ForkPoolWorker-4]

[2023-04-08 17:05:28,125: WARNING/ForkPoolWorker-4] cn-zhangjiakou

[2023-04-08 17:05:28,128: INFO/MainProcess] Task sync_database[8b47f800-bb6e-44cf-8d46-9e59798f37a1] received

[2023-04-08 17:05:28,131: INFO/MainProcess] Task sync_host[f9842aab-9a5c-4cf6-9f76-d38f9c86bcaf] received

[2023-04-08 17:05:28,131: INFO/ForkPoolWorker-2] Task sync_host[ceb41611-cd7a-4d39-bf5e-aad1d4a31853] succeeded in 0.047811792000000075s: []

[2023-04-08 17:05:28,132: INFO/ForkPoolWorker-4] Task sync_database[f44e60a4-20bc-4bdc-a011-383f0dbd5593] succeeded in 0.048283166000000044s: []

[2023-04-08 17:05:28,134: INFO/MainProcess] Task sync_database[7251dcf9-c304-44c8-9d33-727aac6c3958] received

[2023-04-08 17:05:28,136: WARNING/ForkPoolWorker-3]

[2023-04-08 17:05:28,136: WARNING/ForkPoolWorker-3] cn-zhangjiakou

[2023-04-08 17:05:28,136: WARNING/ForkPoolWorker-1]

[2023-04-08 17:05:28,136: WARNING/ForkPoolWorker-1] cn-zhangjiakou

[2023-04-08 17:05:28,138: INFO/MainProcess] Task sync_host[3150cd69-aca3-4836-b4cc-35c54dff44dc] received

[2023-04-08 17:05:28,139: WARNING/ForkPoolWorker-4] this is a scheduled database task

[2023-04-08 17:05:28,139: WARNING/ForkPoolWorker-2] this is a scheduled host task

[2023-04-08 17:05:28,144: INFO/ForkPoolWorker-1] Task sync_database[4cc7f4f2-6921-4417-91fb-10c80a3db2a6] succeeded in 0.048955582999999914s: []

[2023-04-08 17:05:28,144: INFO/ForkPoolWorker-3] Task sync_host[4eee9ac3-9f66-4239-802d-d59f59fc895b] succeeded in 0.04881654199999996s: []

Looking at the broker again, the queued tasks have all been consumed, and each of the two workers is bound to its own queue.

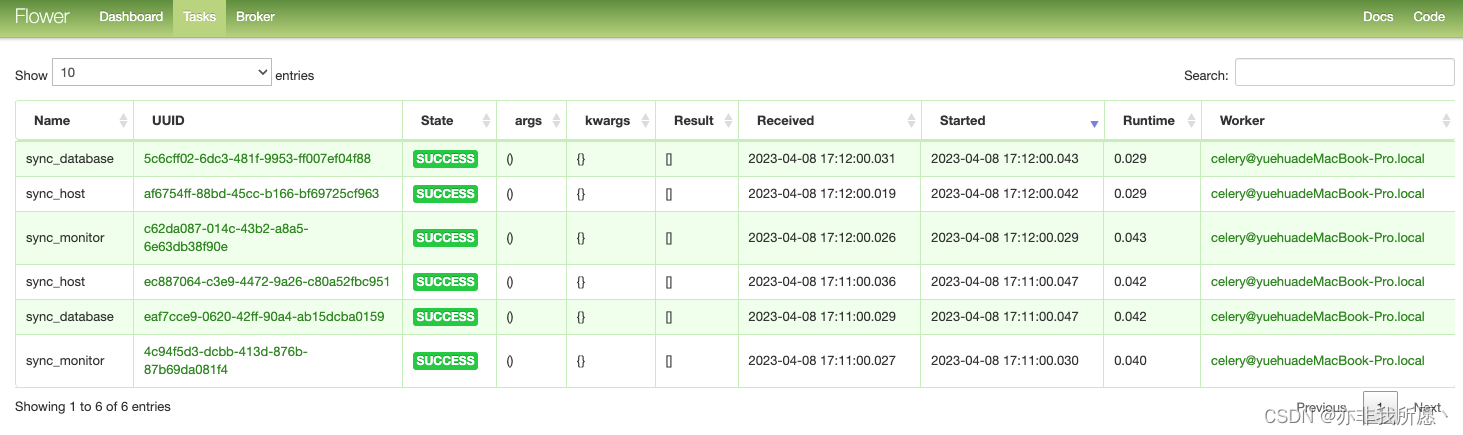

3.4 Start flower

flower should be started first; otherwise it cannot show the results of tasks that were consumed before it came up.

./venv/bin/celery -A scheduler.celery flower --port=5555

View the task history. Because both workers here run on the same node, they share the same worker name.

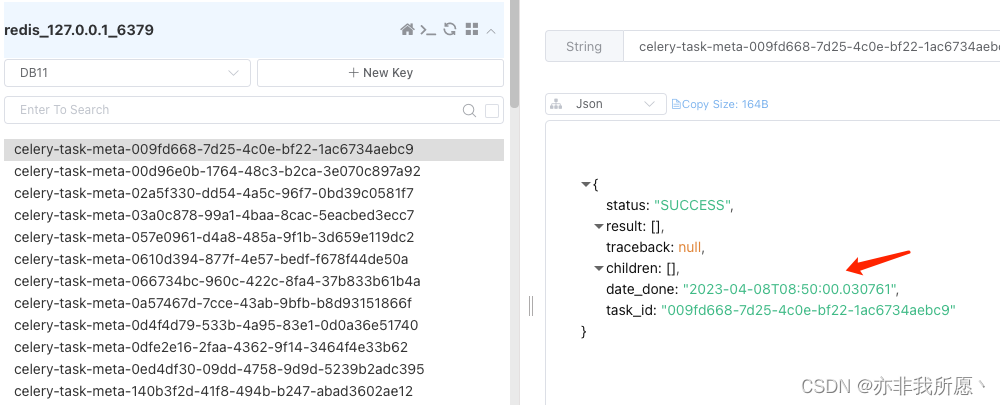

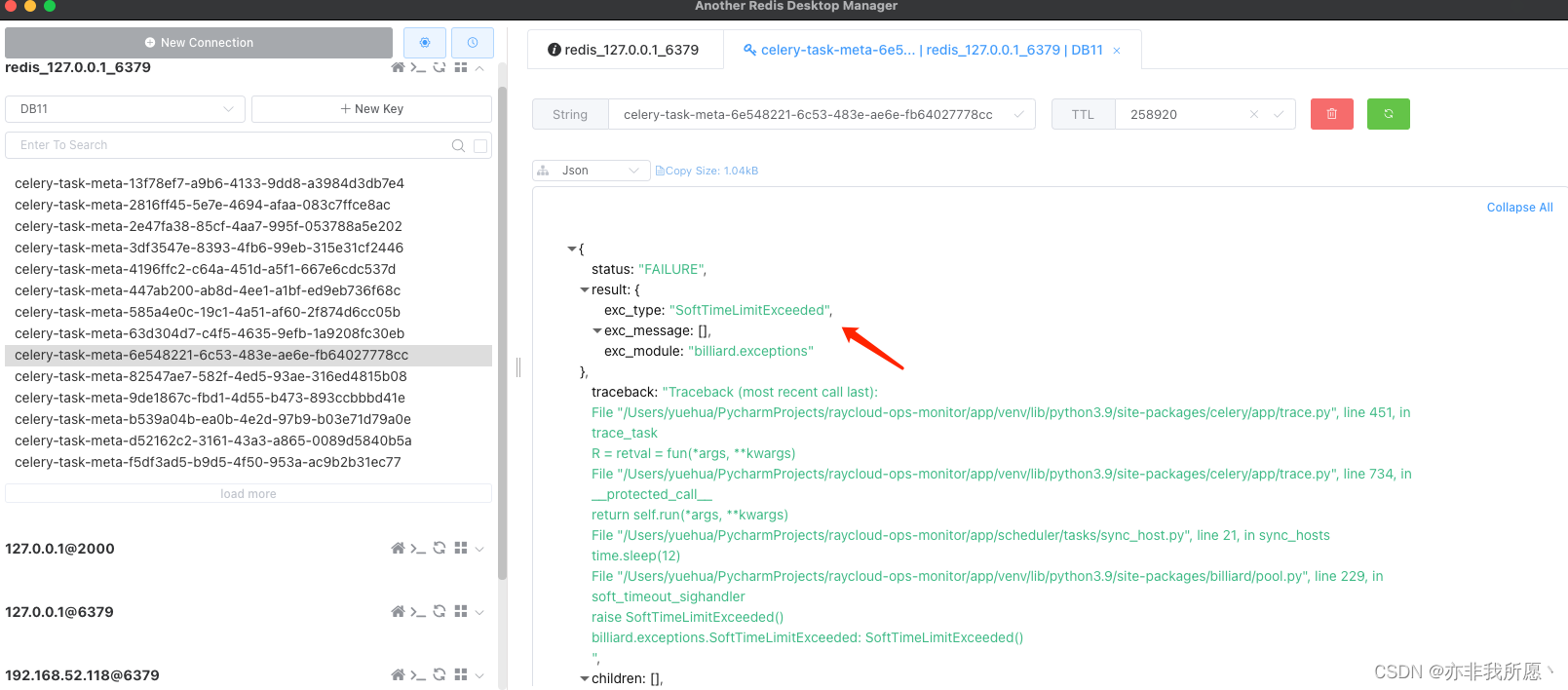
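If the identical worker names are a problem, one option is to give each worker its own node name with Celery's `-n` option. The sketch below only builds the two command lines; the `worker_command` helper and the choice of naming each node after its queue are assumptions for illustration, while the remaining options are the ones used earlier in this article.

```python
import shlex

# Options shared by both workers, as used in section 3.3
BASE = ["./venv/bin/celery", "-A", "scheduler.celery", "worker",
        "-l", "INFO", "-E", "-c", "4"]


def worker_command(queue_name):
    """Return a worker command whose node name is derived from its queue."""
    # Celery expands %h to the hostname, so each worker gets a distinct name
    return BASE + ["-Q", queue_name, "-n", f"{queue_name}@%h"]


for queue_name in ("queue_monitor", "queue_resource"):
    print(shlex.join(worker_command(queue_name)))
```

With distinct node names, flower should list the two workers separately even though they run on the same host.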

3.5 broker & backend

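redis db 10: the broker

redis db 11: the backend

broker

backend

The tasks have finished executing. The timezone discrepancy visible here comes from my Docker Redis container, not from Celery; in Celery the timezone can be set with timezone.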

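4. Parameter configuration

4.1 Global parameters

broker_url

Address of the broker host. The broker stores the task queues that beat dispatches to, along with registration information

task_serializer

Serialization format for task messages; defaults to json

accept_content

Content formats that are accepted; defaults to json

result_backend

Address of the backend that stores task results

result_expires

How long task results are kept, in seconds; defaults to 60 * 60 * 24 (one day)

result_serializer

Serialization format for task results; defaults to json

timezone

Timezone setting; UTC is used if it is not configured

enable_utc

Enables UTC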
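As a small aside on result_expires: Celery accepts either a number of seconds or a `timedelta`, which can be easier to read. The three-day value below is the one used in the complete code at the end of this article; writing it as a `timedelta` is just an alternative spelling.

```python
from datetime import timedelta

# Same retention as 60 * 60 * 24 * 3 seconds, written as a timedelta
result_expires = timedelta(days=3)

print(int(result_expires.total_seconds()))  # 259200
```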

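4.2 celery worker configuration

worker_concurrency

Number of child processes that execute tasks concurrently; defaults to the number of vCPU cores. Corresponds to the worker's -c option

worker_prefetch_multiplier

Number of messages prefetched per child process at a time; defaults to 4, meaning each child process reserves 4 task messages. The right value depends on how long the tasks run: if some tasks take a long time, 1 is the better choice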
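The effect of the multiplier is easiest to see as arithmetic: a worker reserves up to concurrency × multiplier messages at once. The helper below is a hypothetical illustration, not a Celery API, and it ignores the special value 0, which disables the limit.

```python
def max_reserved_messages(concurrency, prefetch_multiplier):
    """Upper bound on messages one worker reserves from the broker at once."""
    return concurrency * prefetch_multiplier


# Settings used in this article: -c 4 with the default multiplier of 4
print(max_reserved_messages(4, 4))  # 16

# With long-running tasks, a multiplier of 1 keeps only one message per process
print(max_reserved_messages(4, 1))  # 4
```

This is consistent with the worker logs above, where more tasks are reported as received than the four child processes can run at the same moment.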

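worker_max_tasks_per_child

Maximum number of tasks a child process executes before it is replaced by a new process; unlimited by default. Used to release memory

worker_max_memory_per_child

Maximum resident memory (in KB) a child process may consume before it is replaced by a new one. If a single task pushes the process over this limit, the process is replaced after that task completes; unlimited by default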

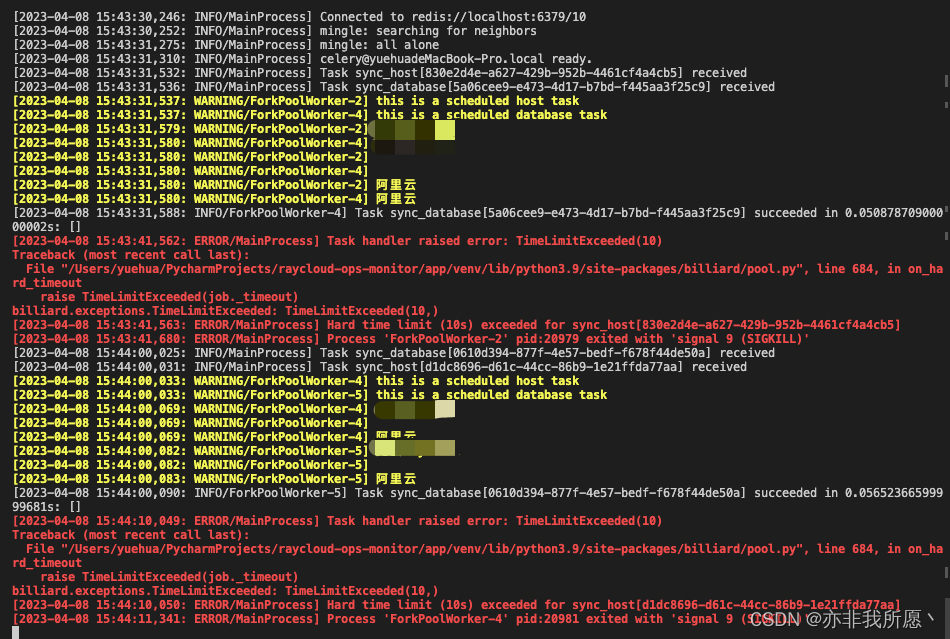

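task_time_limit

Hard limit on how long a task may run, in seconds. When it is exceeded, the child process handling the task is killed and replaced by a new one; unlimited by default

Start time of the child processes when the worker was first started

Start time of the replacement child process after a task exceeded the hard time limit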

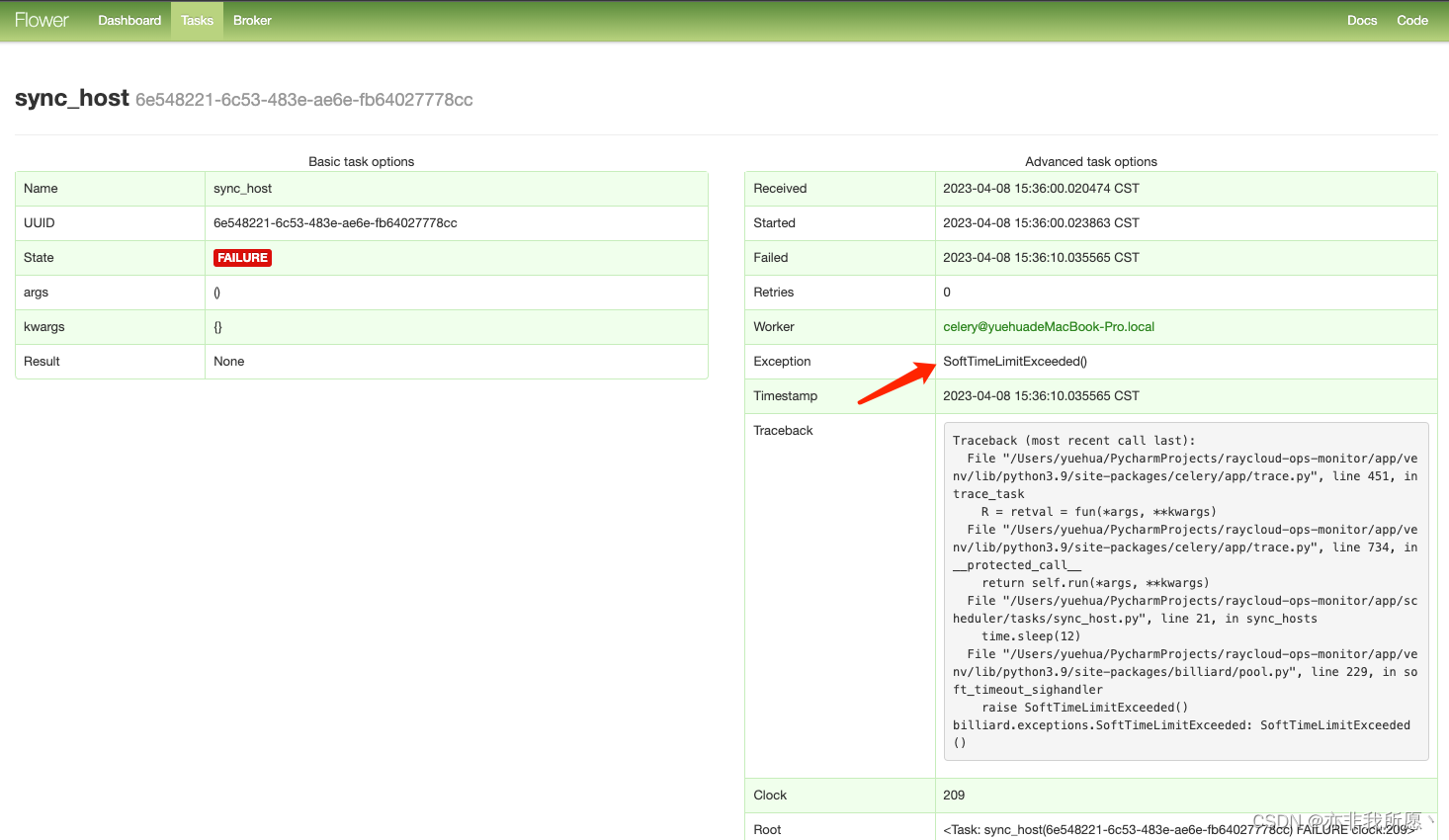

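task_soft_time_limit

Soft limit on how long a task may run, in seconds. When it is exceeded, SoftTimeLimitExceeded is raised inside the task, which lets the task clean up before the hard limit arrives; unlimited by default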
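A task can react to the soft limit by catching the exception, cleaning up, and letting it propagate. The sketch below shows that pattern in isolation; `run_with_cleanup` is a hypothetical helper, and the fallback exception class exists only so the snippet also runs where Celery is not installed.

```python
try:
    from celery.exceptions import SoftTimeLimitExceeded
except ImportError:
    # Stand-in so the sketch also runs where Celery is not installed
    class SoftTimeLimitExceeded(Exception):
        pass


def run_with_cleanup(work, cleanup):
    """Run work(); if the soft time limit fires, clean up and re-raise."""
    try:
        return work()
    except SoftTimeLimitExceeded:
        cleanup()  # e.g. release locks or remove temporary files
        raise
```

Inside a task body this might be called as `run_with_cleanup(do_sync, release_resources)`, where both callables are placeholders for the task's own logic. The soft limit needs to be lower than the hard limit for the cleanup to get a chance to run.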

imports

Task modules to import when the worker starts; they are imported in the configured order

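4.3 celery queues/routes/annotations configuration

task_queues

Queue definitions for tasks

task_annotations

Rate-limit configuration for tasks

task_routes

Routing configuration for tasks. This example does not define task_queues statically, so routing is dynamic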
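One detail worth keeping in mind is that route keys are matched against the registered task name, not against the module path of the function. The sketch below approximates that matching with `fnmatch` from the standard library; it is not Celery's router, and `resolve_queue` is a hypothetical helper. The fallback queue name `celery` is Celery's default queue.

```python
from fnmatch import fnmatchcase

# Glob-style routes keyed on task-name patterns
ROUTES = {
    "scheduler.tasks.*": "queue_resource",
    "scheduler.monitor.*": "queue_monitor",
}


def resolve_queue(task_name, routes=ROUTES, default="celery"):
    """Return the queue of the first pattern matching task_name."""
    for pattern, queue_name in routes.items():
        if fnmatchcase(task_name, pattern):
            return queue_name
    return default


# A task registered under its module path matches the glob
print(resolve_queue("scheduler.tasks.sync_host.sync_hosts"))  # queue_resource

# A task registered with an explicit short name does not
print(resolve_queue("sync_host"))  # celery
```

So a task declared with `name="sync_host"` is only routed by a key that matches `sync_host`, or by an explicit `queue` option at dispatch time.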

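4.4 celery events configuration

worker_send_task_events

Sends task state events for monitoring (used by the flower integration); corresponds to the -E option at startup; off by default

4.5 celery beat configuration

beat_schedule

Periodic task configuration; beat dispatches tasks according to each entry's schedule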
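Since each schedule entry names its queue as a plain string, a typo silently sends tasks to a queue that no worker consumes. A quick sanity check along the following lines can catch that. `undeclared_queues` is a hypothetical helper, the 60.0-second interval is an assumed stand-in for the crontab used in the complete code, and the misspelled queue is deliberate.

```python
def undeclared_queues(beat_schedule, consumed_queues):
    """Return {entry name: queue} for entries whose queue no worker consumes."""
    problems = {}
    for name, entry in beat_schedule.items():
        # Entries without an explicit queue fall back to the default "celery"
        queue_name = entry.get("options", {}).get("queue", "celery")
        if queue_name not in consumed_queues:
            problems[name] = queue_name
    return problems


schedule = {
    "sync-host-001": {
        "task": "sync_host",
        "schedule": 60.0,  # seconds
        "options": {"queue": "queue_resource"},
    },
    "sync-monitor-001": {
        "task": "sync_monitor",
        "schedule": 60.0,
        "options": {"queue": "quque_monitor"},  # deliberate typo
    },
}

print(undeclared_queues(schedule, {"queue_resource", "queue_monitor"}))
# {'sync-monitor-001': 'quque_monitor'}
```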

5. Complete code

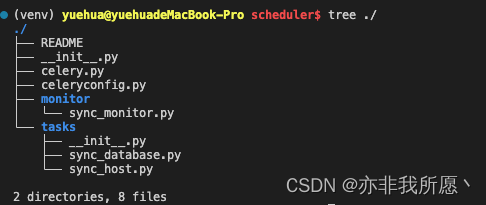

5.1 Directory structure

celery.py: Celery application entry point

celeryconfig.py: Celery configuration file

monitor: tasks executed from queue_monitor

tasks: tasks executed from queue_resource

5.2 Complete code

./celery.py

from celery import Celery

# Celery application object
celery_app = Celery('celery_app')

# Load the configuration from scheduler/celeryconfig.py
celery_app.config_from_object('scheduler.celeryconfig')

./celeryconfig.py

from celery.schedules import crontab

from settings import config

# -------------------- global parameters -------------------- #

# Broker address
broker_url = config.CELERY_BROKER

# Backend that stores task results
result_backend = config.CELERY_BACKEND

# Serialization format for task messages
task_serializer = 'json'

# Serialization format for task results
result_serializer = 'json'

# How long results are kept in the Redis backend, in seconds (default: 1 day); 3 days here
result_expires = 60 * 60 * 24 * 3

# Accepted content formats
accept_content = ['json']

# Enable UTC
# enable_utc = True

# Timezone; UTC is used if this is not set
timezone = 'Asia/Shanghai'

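# -------------------- celery worker configuration -------------------- #

# Number of child processes executing tasks concurrently; defaults to the
# number of vCPU cores; corresponds to the worker's -c option
worker_concurrency = 4

# Messages prefetched per child process at a time (default 4); use 1 when
# some tasks run for a long time
worker_prefetch_multiplier = 4

# Replace a child process after it has executed this many tasks
# (unlimited by default); used to release memory
worker_max_tasks_per_child = 500

# Maximum resident memory (KB) of a child process before it is replaced;
# if one task exceeds it, the process is replaced after that task completes
# (unlimited by default)
worker_max_memory_per_child = 50000

# Hard time limit in seconds: the child process is killed and replaced when a
# task runs longer than this (unlimited by default)
task_time_limit = 3600

# Soft time limit in seconds: SoftTimeLimitExceeded is raised in the task so it
# can clean up before the hard limit arrives (unlimited by default)
task_soft_time_limit = 1800

# Task modules to import when the worker starts, in the order given
imports = (
    'scheduler.tasks.sync_host',
    'scheduler.tasks.sync_database',
    'scheduler.monitor.sync_monitor'
)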

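# -------------------- celery queues/routes/annotations configuration -------------------- #

# task_queues: split tasks across queues
# task_annotations: rate limiting

# Route keys are matched against the registered task name. The tasks in this
# project are registered with explicit names (name="sync_host" and so on), so
# the routes are keyed on those names rather than on module-path globs such as
# 'scheduler.tasks.*', which would not match them.
task_routes = {
    'sync_host': {
        'queue': 'queue_resource',
    },
    'sync_database': {
        'queue': 'queue_resource',
    },
    'sync_monitor': {
        'queue': 'queue_monitor',
    }
}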

# -------------------- celery events configuration -------------------- #

# Send task state events for monitoring (flower integration); same as the -E
# option at startup; off by default
worker_send_task_events = True

# -------------------- celery beat 配置 -------------------- #

beat_schedule = {
    'sync-host-001': {
        'task': 'sync_host',
        'schedule': crontab(minute="*/1"),
        'options': {
            'queue': 'queue_resource'}
    },
    'sync-database-001': {
        'task': 'sync_database',
        'schedule': crontab(minute="*/1"),
        'options': {
            'queue': 'queue_resource'}
    },
    'sync-monitor-001': {
        'task': 'sync_monitor',
        'schedule': crontab(minute='*/1'),
        'options': {
            'queue': 'queue_monitor'}
    }
}

./monitor/sync_monitor.py

from sqlalchemy import create_engine
from sqlalchemy.orm import sessionmaker

from models.database import CloudDatabase
from scheduler.celery import celery_app
from settings import config

# Module-level engine and session factory
engine = create_engine(config.DATABASE_URL, echo=False)
SessionLocal = sessionmaker(autocommit=False, autoflush=False, bind=engine)


@celery_app.task(name="sync_monitor", bind=True)
def sync_monitors(self):
    print("this is a scheduled monitor task")
    db = SessionLocal()
    try:
        # First record for the 阿里云 (Alibaba Cloud) platform
        objs = db.query(CloudDatabase).filter_by(platform="阿里云").first()
        if objs is not None:  # first() returns None when nothing matches
            print(objs.account_name, objs.platform)
    finally:
        db.close()  # always release the session
    return []

./tasks/sync_host.py

from sqlalchemy import create_engine
from sqlalchemy.orm import sessionmaker

from models.database import CloudDatabase
from scheduler.celery import celery_app
from settings import config

# Module-level engine and session factory
engine = create_engine(config.DATABASE_URL, echo=False)
SessionLocal = sessionmaker(autocommit=False, autoflush=False, bind=engine)


@celery_app.task(name="sync_host", bind=True)
def sync_hosts(self):
    print("this is a scheduled host task")
    db = SessionLocal()
    try:
        # First record for the 阿里云 (Alibaba Cloud) platform
        objs = db.query(CloudDatabase).filter_by(platform="阿里云").first()
        if objs is not None:  # first() returns None when nothing matches
            print(objs.account_name, objs.region)
    finally:
        db.close()  # always release the session
    return []

./tasks/sync_database.py

from sqlalchemy import create_engine
from sqlalchemy.orm import sessionmaker

from models.database import CloudDatabase
from scheduler.celery import celery_app
from settings import config

# Module-level engine and session factory
engine = create_engine(config.DATABASE_URL, echo=False)
SessionLocal = sessionmaker(autocommit=False, autoflush=False, bind=engine)


@celery_app.task(name="sync_database", bind=True)
def sync_databases(self):
    print("this is a scheduled database task")
    db = SessionLocal()
    try:
        # First record for the 阿里云 (Alibaba Cloud) platform
        objs = db.query(CloudDatabase).filter_by(platform="阿里云").first()
        if objs is not None:  # first() returns None when nothing matches
            print(objs.account_name, objs.region)
    finally:
        db.close()  # always release the session
    return []
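The three task modules repeat the same session handling. One way to keep it in a single place is a small context manager that closes the session whatever happens in the task body. `session_scope` is a hypothetical helper, not part of the original project; it works with any factory whose sessions have a `close()` method, such as the `SessionLocal` factories above.

```python
from contextlib import contextmanager


@contextmanager
def session_scope(session_factory):
    """Yield a session from session_factory and always close it afterwards."""
    session = session_factory()
    try:
        yield session
    finally:
        session.close()
```

A task body could then be written as `with session_scope(SessionLocal) as db:` followed by the query, without an explicit `close()` call.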