目录

IntelRealSense系列SDK开发

RealSense系列深度传感器由Intel公司开发的消费级深度相机,需使用SDK对其进行开发,主要是了获得其传感器数据。

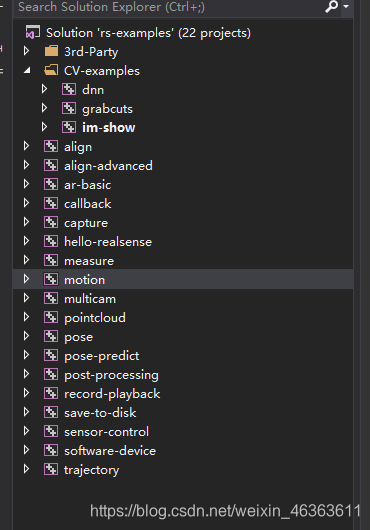

Samples

其中,

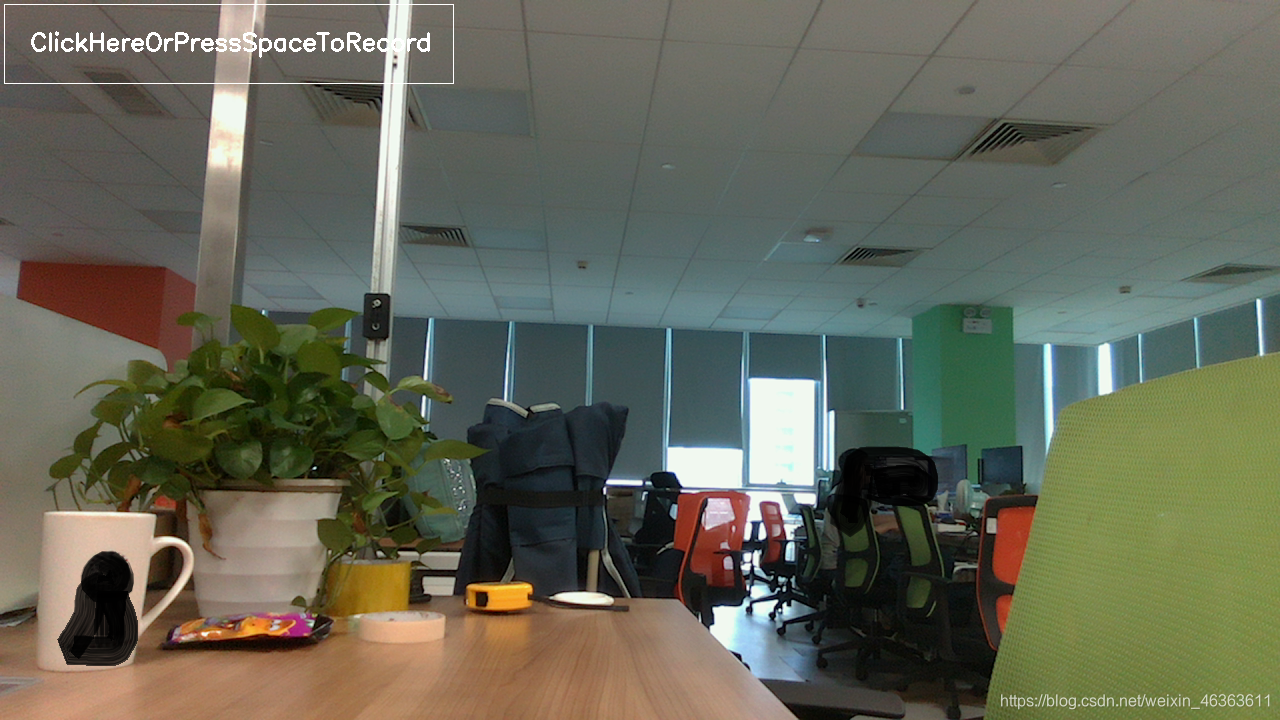

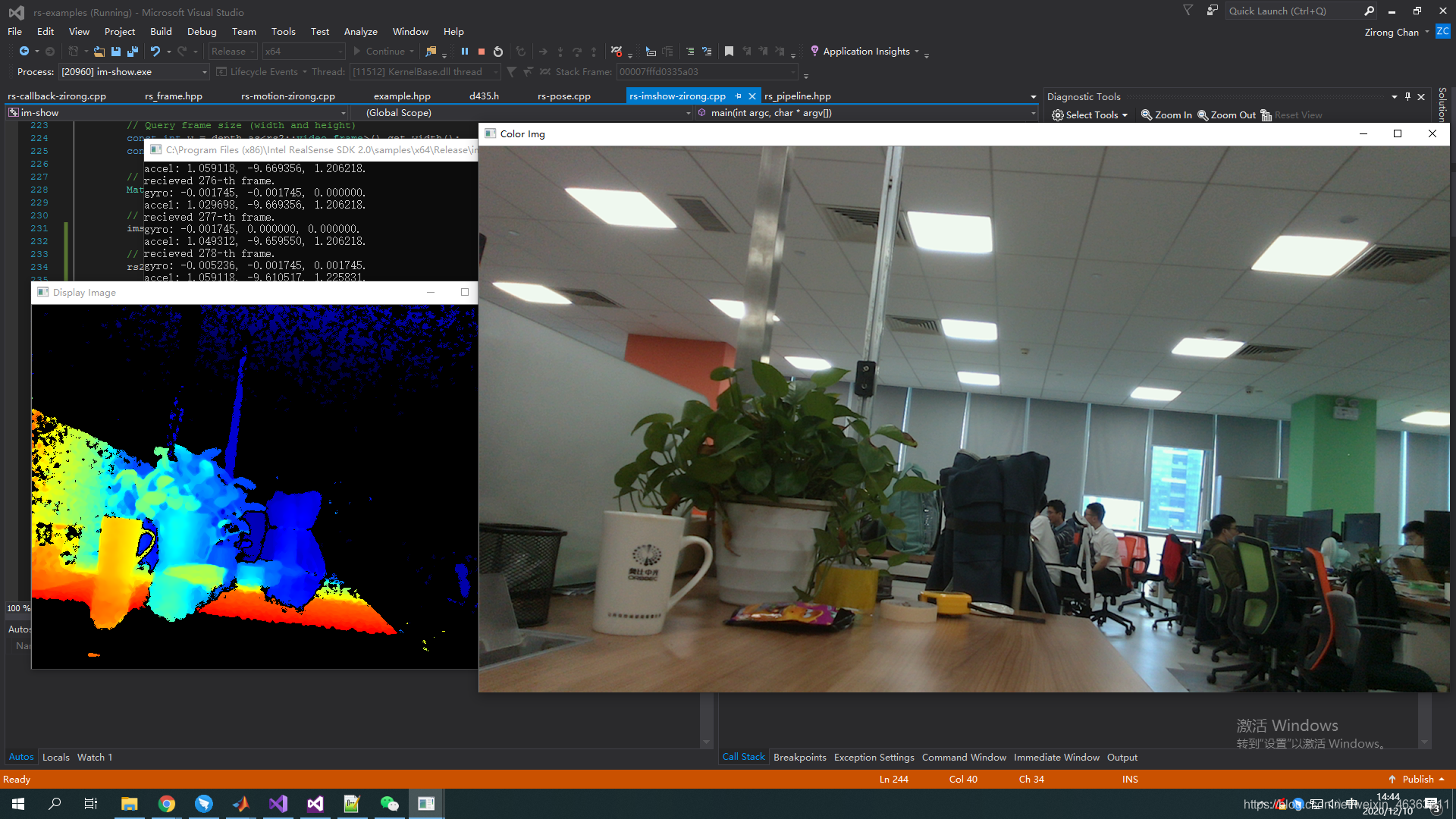

- im-show 提供支持OpenCV的数据接口与显示程序

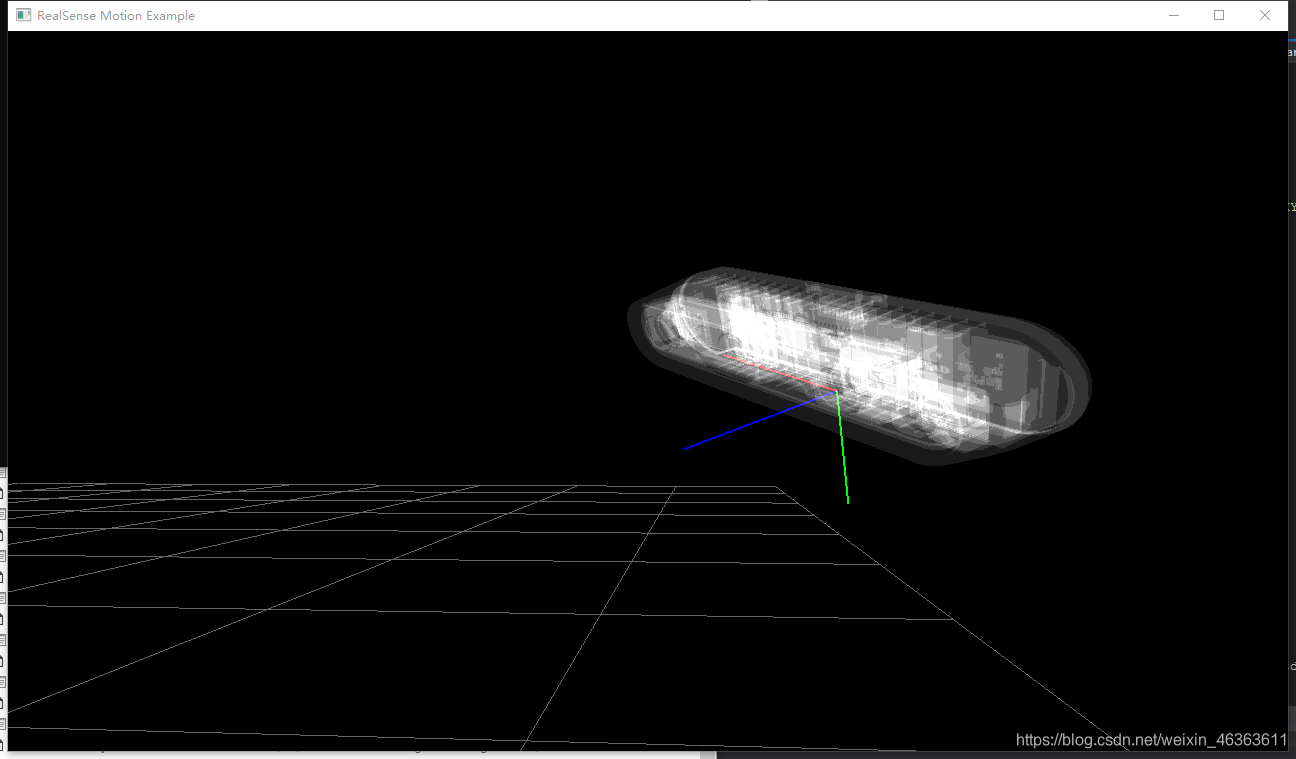

- motion 展示了如何从获取数据中解析陀螺仪与加速计的读数,即IMU数据,并以此展示相机位姿。

- callback 展示了如何通过回调函数,同时获取同步过的图像数据流,与不同步的IMU数据流。

获取数据基本流程

获取同步数据:深度/彩色

创建管线(pipeline)

rs2::pipeline pipe_sync;

配置(config)通过enable/disable函数控制数据流

// only enable the video frames

rs2::config cfg_sync;

//cfg.enable_stream(RS2_STREAM_COLOR, 1920, 1080, RS2_FORMAT_ANY, 30);

cfg_sync.enable_stream(RS2_STREAM_COLOR, 1280, 720, RS2_FORMAT_BGR8, 30);

cfg_sync.enable_stream(RS2_STREAM_DEPTH, 640, 480, RS2_FORMAT_Z16, 30);

cfg_sync.disable_stream(RS2_STREAM_ACCEL);

cfg_sync.disable_stream(RS2_STREAM_GYRO);

启动管线

pipe_sync.start(cfg_sync);

获取同步数据

while (true)

{

rs2::frameset data = pipe_sync.wait_for_frames(); // Wait for next set of frames from the camera

// color

rs2::frame color = data.get_color_frame();

// convert to OpenCV

const int w_clr = color.as<rs2::video_frame>().get_width();

const int h_clr = color.as<rs2::video_frame>().get_height();

Mat color_img(Size(w_clr, h_clr), CV_8UC3, (void*)color.get_data(), Mat::AUTO_STEP);

// depth is related to depth_scale

rs2::depth_frame depth = data.get_depth_frame();

const int w = depth.get_width();

const int h = depth.get_height();

Mat depth_image(Size(w, h), CV_16UC1, (void*)depth.get_data(), Mat::AUTO_STEP);

// depth scale

float depth_scale = depth.get_uints();

// other operation goes here ...

}

停止管线

pipe_sync.stop();

获取运动数据:陀螺仪,加速计

// Declare RealSense pipeline, encapsulating the actual device and sensors

rs2::pipeline pipe;

// Create a configuration for configuring the pipeline with a non default profile

rs2::config cfg;

// Add streams of gyro and accelerometer to configuration

cfg.enable_stream(RS2_STREAM_ACCEL, RS2_FORMAT_MOTION_XYZ32F);

cfg.enable_stream(RS2_STREAM_GYRO, RS2_FORMAT_MOTION_XYZ32F);

// Start streaming with the given configuration;

// Note that since we only allow IMU streams, only single frames are produced

auto profile = pipe.start(cfg, [&](rs2::frame frame)

{

// Cast the frame that arrived to motion frame

auto motion = frame.as<rs2::motion_frame>();

// If casting succeeded and the arrived frame is from gyro stream

if (motion && motion.get_profile().stream_type() == RS2_STREAM_GYRO && motion.get_profile().format() == RS2_FORMAT_MOTION_XYZ32F)

{

// Get the timestamp of the current frame

double ts = motion.get_timestamp();

// Get gyro measures

rs2_vector gyro_data = motion.get_motion_data();

}

// If casting succeeded and the arrived frame is from accelerometer stream

if (motion && motion.get_profile().stream_type() == RS2_STREAM_ACCEL && motion.get_profile().format() == RS2_FORMAT_MOTION_XYZ32F)

{

// Get accelerometer measures

rs2_vector accel_data = motion.get_motion_data();

}

});

使用回调函数获取数据

#include <librealsense2/rs.hpp> // Include RealSense Cross Platform API

#include <iostream>

#include <mutex>

{

std::mutex mutex;

// Define frame callback

// The callback is executed on a sensor thread and can be called simultaneously from multiple sensors

// Therefore any modification to common memory should be done under lock

auto callback = [&](const rs2::frame& frame)

{

std::lock_guard<std::mutex> lock(mutex);

if (rs2::frameset fs = frame.as<rs2::frameset>())

{

// With callbacks, all synchronized stream will arrive in a single frameset

for (const rs2::frame& f : fs)

// operation ...

}

else

{

// Stream that bypass synchronization (such as IMU) will produce single frames

// not a frameset class

// operations ...

}

};

// Declare RealSense pipeline, encapsulating the actual device and sensors.

rs2::pipeline pipe;

// Start streaming through the callback with default recommended configuration

// The default video configuration contains Depth and Color streams

// If a device is capable to stream IMU data, both Gyro and Accelerometer are enabled by default

//

rs2::pipeline_profile profiles = pipe.start(callback);

while (true)

{

// operations ...

}

}

进阶操作

使用不同的管线获取同一设备不同流

// Declare RealSense pipeline, encapsulating the actual device and sensors.

rs2::pipeline pipe_sync;

// only enable the video frames

rs2::config cfg_sync;

//cfg.enable_stream(RS2_STREAM_COLOR, 1920, 1080, RS2_FORMAT_ANY, 30);

cfg_sync.enable_stream(RS2_STREAM_COLOR, 1280, 720, RS2_FORMAT_BGR8, 30);

cfg_sync.enable_stream(RS2_STREAM_DEPTH, 640, 480, RS2_FORMAT_Z16, 30);

cfg_sync.disable_stream(RS2_STREAM_ACCEL);

cfg_sync.disable_stream(RS2_STREAM_GYRO);

// Pipeline for IMU data

rs2::pipeline pipe_imu;

rs2::config cfg_imu;

cfg_imu.disable_stream(RS2_STREAM_COLOR);

cfg_imu.disable_stream(RS2_STREAM_DEPTH);

cfg_imu.enable_stream(RS2_STREAM_ACCEL, RS2_FORMAT_MOTION_XYZ32F);

cfg_imu.enable_stream(RS2_STREAM_GYRO, RS2_FORMAT_MOTION_XYZ32F);

pipe_sync.start();

pipe_imu.start();

// Operation goes here

pipe_sync.stop();

pipe_imu.stop();

同时开启不同设备

rs2::context ctx; // Create librealsense context for managing devices

std::map<std::string, rs2::colorizer> colorizers; // Declare map from device serial number to colorizer (utility class to convert depth data RGB colorspace)

std::vector<rs2::pipeline> pipelines;

// Capture serial numbers before opening streaming

std::vector<std::string> serials;

for (auto&& dev : ctx.query_devices())

serials.push_back(dev.get_info(RS2_CAMERA_INFO_SERIAL_NUMBER));

// Start a streaming pipe per each connected device

for (auto&& serial : serials)

{

rs2::pipeline pipe(ctx);

rs2::config cfg;

cfg.enable_device(serial);

pipe.start(cfg);

pipelines.emplace_back(pipe);

// Map from each device's serial number to a different colorizer

colorizers[serial] = rs2::colorizer();

}

// other operation goes here ...

获取相机参数

rs2::frameset data = pipe_sync.wait_for_frames(); // Wait for next set of frames from the camera

rs2::frame color = data.get_color_frame();

rs2_intrinsics intr = color.get_profile().as<rs2::video_stream_profile>().get_intrinsics(); // Calibration data

获取真实深度

rs2::frameset data = pipe_sync.wait_for_frames(); // Wait for next set of frames from the camera

// depth data

rs2::depth_frame depth = data.get_depth_frame();

// Query frame size (width and height)

const int w = depth.get_width();

const int h = depth.get_height();

cv::Mat depth_image = cv::Mat(Size(w, h), CV_16UC1);

for (int coo_x = 0; coo_x < w; coo_x++)

{

for (int coo_y = 0; coo_y < h; coo_y++)

{

// in metrics

float dist = depth.get_distance(coo_x, coo_y);

// convert to millimeter

depth_image.at<ushort>(coo_y, coo_x) = std::floor(dist * 1e3);

}

}

开发采集工具

- 使用两个管线分别采集图像/IMU数据;

- 将获取IMU数据的管线作为一个独立线程,利用其低存储尺寸的特点,到采集结束再写入硬盘;

- 另起两个独立线程解决串行图像数据存储问题(彩色、深度)。