Why I'm posting this

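Many classmates who run Linux in a virtual machine can't share the clipboard between Windows and Linux, and setting that up can be a hassle, so I'm posting the test code from the lab here. What you need to change: make the package name match the one in your own project, and if your class name differs, change that too (Eclipse will offer a quick fix for both). If the connection fails: first make sure your Hadoop HDFS is actually running; second, you may need to change the port. The right port depends on your Hadoop version and setup, and it has to match the fs.defaultFS value in your core-site.xml.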
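To narrow down a connection failure before touching the Hadoop code, it can help to check whether anything is listening on the address at all. Below is a minimal sketch that uses only the Java standard library; `HdfsPortCheck` and `isReachable` are hypothetical names made up for this post, not part of the Hadoop API, and `hdfs://localhost:9000` is just the address used in the examples here.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.URI;

// Hypothetical helper for troubleshooting; not part of the Hadoop API.
class HdfsPortCheck {

    // Returns true if a plain TCP connection to the host and port in the URI succeeds.
    static boolean isReachable(String defaultFs, int timeoutMillis) {
        URI uri = URI.create(defaultFs);
        if (uri.getHost() == null || uri.getPort() == -1) {
            return false; // the URI has no explicit host or port to test
        }
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(uri.getHost(), uri.getPort()), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // refused, timed out, or host not resolvable
        }
    }

    public static void main(String[] args) {
        // Assumed default; pass your own fs.defaultFS value as the first argument.
        String defaultFs = args.length > 0 ? args[0] : "hdfs://localhost:9000";
        String status = isReachable(defaultFs, 2000) ? "is reachable" : "is NOT reachable";
        System.out.println(defaultFs + " " + status);
    }
}
```

A successful connection only shows that some process is listening on that port, not that it is the NameNode. If the check fails, HDFS is probably not started or the port in your code does not match your configuration.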

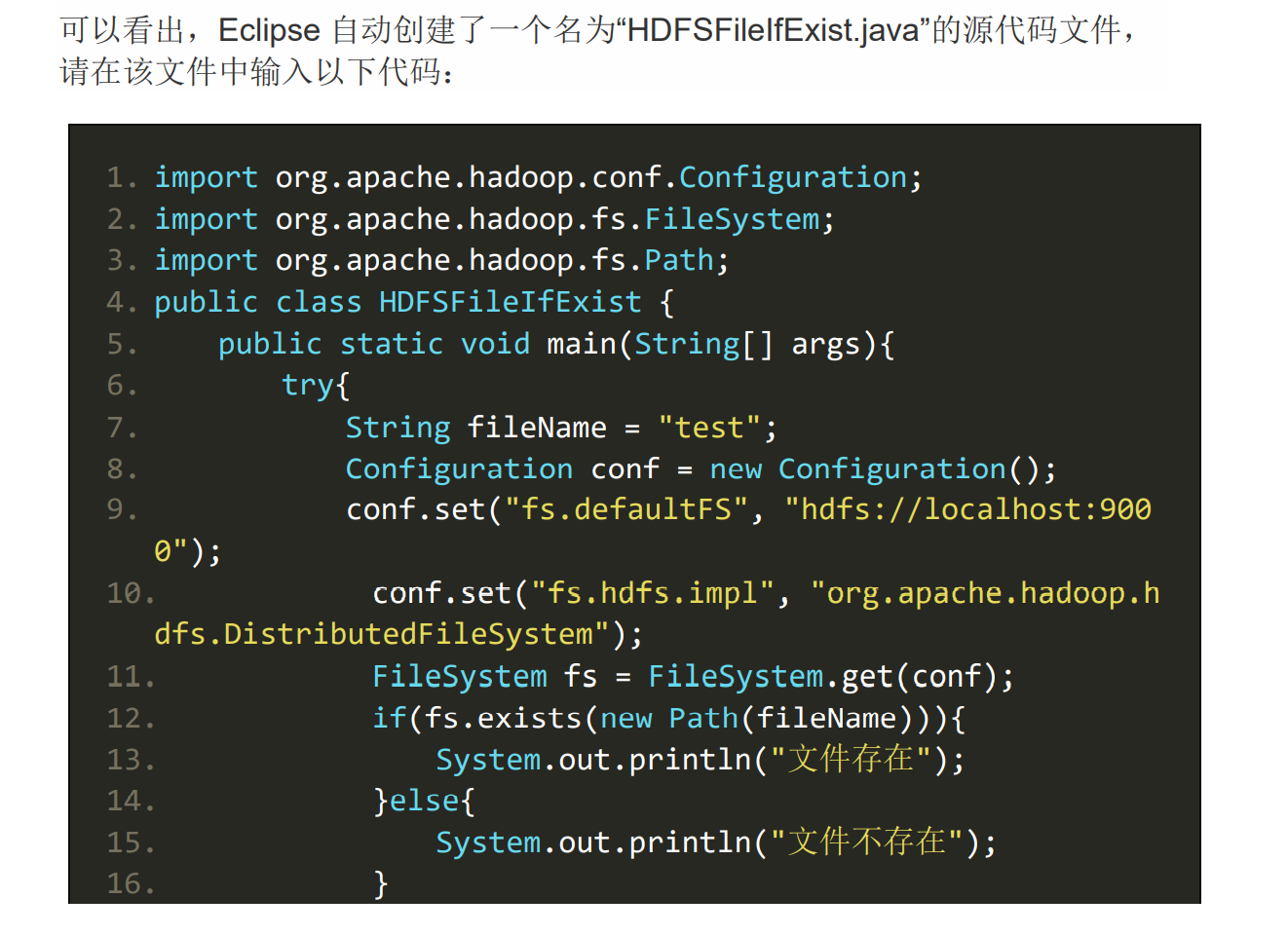

Lab 2

Code:

package hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class HDFSFileIfExist {
    public static void main(String[] args) {
        // Relative path: resolved against the HDFS working directory (by default /user/<your user>)
        String fileName = "test";
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000"); // you may need to change the port
        conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
        // try-with-resources closes the FileSystem even if an exception is thrown
        try (FileSystem fs = FileSystem.get(conf)) {
            if (fs.exists(new Path(fileName))) {
                System.out.println("File exists");
            } else {
                System.out.println("File does not exist");
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}

1.1 Assignment 1: Write a file

package hdfs;

import java.nio.charset.StandardCharsets;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class Chapter3 {
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000"); // you may need to change the port
        conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
        // Encode explicitly as UTF-8 instead of relying on the platform default charset
        byte[] buff = "Hello world".getBytes(StandardCharsets.UTF_8); // content to write
        String filename = "test"; // name of the file to write
        // try-with-resources closes the output stream first, then the FileSystem
        try (FileSystem fs = FileSystem.get(conf);
             FSDataOutputStream os = fs.create(new Path(filename))) {
            os.write(buff, 0, buff.length);
            System.out.println("Create:" + filename);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}

1.2 Assignment 2: Check whether a file exists

package hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class Judge_file_exist {
    public static void main(String[] args) {
        String filename = "test"; // name of the file to look for
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000"); // you may need to change the port
        conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
        // try-with-resources closes the FileSystem even if an exception is thrown
        try (FileSystem fs = FileSystem.get(conf)) {
            if (fs.exists(new Path(filename))) {
                System.out.println("File exists");
            } else {
                System.out.println("File does not exist");
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}

1.3 Assignment 3: Read a file

package hdfs;

import java.io.BufferedReader;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class Read_file {
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000"); // you may need to change the port
        conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
        Path file = new Path("test");
        // try-with-resources closes the reader, the input stream and the FileSystem, in that order
        try (FileSystem fs = FileSystem.get(conf);
             FSDataInputStream getIt = fs.open(file);
             BufferedReader d = new BufferedReader(
                     new InputStreamReader(getIt, StandardCharsets.UTF_8))) {
            String content = d.readLine(); // read the first line of the file
            System.out.println(content);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
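The read example prints only the first line of the file. If you need the whole file, one option is to loop until `readLine()` returns `null`. Here is a rough sketch using only the standard library: `ReadAllLines` and `readLines` are hypothetical names for illustration, and the in-memory stream in `main` is a stand-in for the stream you would get from `fs.open(file)` (an `FSDataInputStream` is an ordinary `java.io.InputStream`, so it can be passed in the same way). It also assumes the file is UTF-8 text.

```java
import java.io.BufferedReader;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration; not part of the Hadoop API.
class ReadAllLines {

    // Reads every line from the stream as UTF-8 text and closes the stream when done.
    static List<String> readLines(InputStream in) throws IOException {
        List<String> lines = new ArrayList<>();
        try (BufferedReader reader =
                     new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) { // null signals end of stream
                lines.add(line);
            }
        }
        return lines;
    }

    public static void main(String[] args) throws IOException {
        // Stand-in data; with Hadoop you would pass fs.open(file) here instead.
        byte[] data = "Hello world\nsecond line\n".getBytes(StandardCharsets.UTF_8);
        for (String line : readLines(new ByteArrayInputStream(data))) {
            System.out.println(line);
        }
    }
}
```

Collecting lines into a list is fine for small lab files; for large HDFS files it is probably better to process each line inside the loop than to hold them all in memory.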