Hive reports the following error:

Caused by: org.apache.hadoop.hive.ql.metadata.HiveFatalException: [Error 20004]: Fatal error occurred when node tried to create too many dynamic partitions. The maximum number of dynamic partitions is controlled by hive.exec.max.dynamic.partitions and hive.exec.max.dynamic.partitions.pernode. Maximum was set to: 100

at org.apache.hadoop.hive.ql.exec.FileSinkOperator.getDynOutPaths(FileSinkOperator.java:922)

at org.apache.hadoop.hive.ql.exec.FileSinkOperator.process(FileSinkOperator.java:699)

at org.apache.hadoop.hive.ql.exec.Operator.forward(Operator.java:837)

at org.apache.hadoop.hive.ql.exec.SelectOperator.process(SelectOperator.java:97)

at org.apache.hadoop.hive.ql.exec.mr.ExecReducer.reduce(ExecReducer.java:236)

... 7 more

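The cause is that the table is loaded with dynamic partitioning. As the number of partitions grew, so did the number of partition directories created on Hadoop, until it exceeded Hive's default limit on dynamic partitions and the exception was thrown. In this trace a single node tried to create more than 100 partitions, which is the default value of hive.exec.max.dynamic.partitions.pernode. Raising Hive's dynamic partition limits resolves the problem.

Solution: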

SET hive.exec.dynamic.partition=true;

SET hive.exec.max.dynamic.partitions=2048;

SET hive.exec.max.dynamic.partitions.pernode=256;

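(Adjust the numbers to suit your own workload.)

Parameter reference:

hive.exec.dynamic.partition: whether dynamic partitioning is enabled (true) or disabled (false). The default is false before Hive 0.9.0 and true from Hive 0.9.0 onward.

hive.exec.dynamic.partition.mode: the dynamic partitioning mode once it is enabled, either strict or nonstrict. strict requires at least one static partition column, which guards against a statement accidentally overwriting every partition; nonstrict has no such requirement, so all partition columns may be dynamic.

hive.exec.max.dynamic.partitions: the maximum total number of dynamic partitions that may be created. Raise it manually if a job legitimately needs more. Default: 1000.

hive.exec.max.dynamic.partitions.pernode: the maximum number of dynamic partitions that each mapper or reducer node may create (not the total for the whole MapReduce job). Default: 100. This is the limit reported in the error above ("Maximum was set to: 100").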
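The difference between the total limit and the per-node limit is easy to miss, so here is a toy model of the two checks in Python. It is not Hive code: the function name `check_dynamic_partition_limits`, the `(node_id, partition)` input shape, and the sample data are all assumptions made for illustration. It only shows that the same 150 partitions can pass or fail the per-node limit depending on how rows are spread across nodes.

```python
from collections import defaultdict


def check_dynamic_partition_limits(assignments, max_total=1000, max_per_node=100):
    """Toy model (hypothetical helper, not a Hive API).

    assignments: iterable of (node_id, partition_value), one entry per row.
    Returns a list of violation messages; an empty list means both limits hold.
    """
    # Collect the distinct partitions each node would have to create.
    per_node = defaultdict(set)
    for node_id, partition in assignments:
        per_node[node_id].add(partition)

    violations = []
    # Per-node check, modeled on hive.exec.max.dynamic.partitions.pernode.
    for node_id in sorted(per_node):
        count = len(per_node[node_id])
        if count > max_per_node:
            violations.append(
                f"node {node_id}: {count} partitions > per-node limit {max_per_node}"
            )

    # Total check, modeled on hive.exec.max.dynamic.partitions.
    total = len(set().union(*per_node.values()))
    if total > max_total:
        violations.append(f"job: {total} partitions > total limit {max_total}")
    return violations


# 150 hypothetical daily partitions processed by two nodes.
partitions = [f"dt={day:03d}" for day in range(150)]
# Rows for every partition land on both nodes: each node sees all 150.
scattered = [(node, p) for p in partitions for node in (0, 1)]
# Rows are grouped by partition first: each node sees only 75.
clustered = [(i % 2, p) for i, p in enumerate(partitions)]

print(check_dynamic_partition_limits(scattered))
print(check_dynamic_partition_limits(clustered))
```

In this model the scattered layout violates the per-node limit on both nodes even though the total of 150 is far below 1000, while the clustered layout passes. In HiveQL, grouping rows by partition before the write is commonly achieved with DISTRIBUTE BY on the partition column, which can be an alternative to raising the per-node limit.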

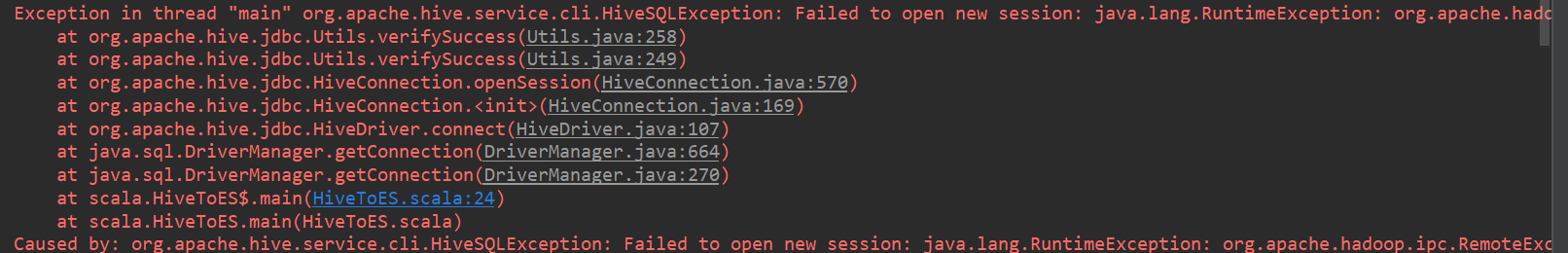

Hive reports the following error:

The error message after the exception reads:

User: root is not allowed to impersonate anonymous

In full:

org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate anonymous

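Cause: when a Java client connects to Hive over JDBC, the Hive service (running as root here) tries to act on behalf of the connecting user (anonymous, typically because no username was supplied). Hadoop has not been configured to let root impersonate other users, so the operation is rejected.

Solution: in Hadoop's core-site.xml, grant proxy-user (impersonation) permission to the user named in the error. If that user is root, for example, configure it like this: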

core-site.xml

<property>
  <name>hadoop.proxyuser.root.groups</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.root.hosts</name>
  <value>*</value>
</property>
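The property names embed the user from the error message ("User: root is not allowed to impersonate ..."), so a different service user needs different property names. As a minimal sketch, the hypothetical Python helper below (`proxyuser_properties` is an illustrative name, not part of Hadoop) generates the two entries for any user, and lets you pass narrower `hosts` and `groups` values than `*`.

```python
import xml.etree.ElementTree as ET


def proxyuser_properties(user, hosts="*", groups="*"):
    """Hypothetical helper: build the two core-site.xml <property> entries
    that allow `user` to impersonate other users.

    hosts / groups: "*" for no restriction, or a comma-separated list.
    Returns a list of two XML strings (groups first, then hosts).
    """
    entries = []
    for suffix, value in (("groups", groups), ("hosts", hosts)):
        prop = ET.Element("property")
        ET.SubElement(prop, "name").text = f"hadoop.proxyuser.{user}.{suffix}"
        ET.SubElement(prop, "value").text = value
        entries.append(ET.tostring(prop, encoding="unicode"))
    return entries


# Same configuration as shown above, for root with no restrictions.
for entry in proxyuser_properties("root"):
    print(entry)

# A narrower variant; the user name "hive" and the host address are made up.
for entry in proxyuser_properties("hive", hosts="10.0.0.5"):
    print(entry)
```

The generated entries go inside the `<configuration>` element of core-site.xml. The Hadoop services need to pick up the change afterwards, usually by a restart.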