This article uses the Microsoft Speech Service SDK to convert text to speech.

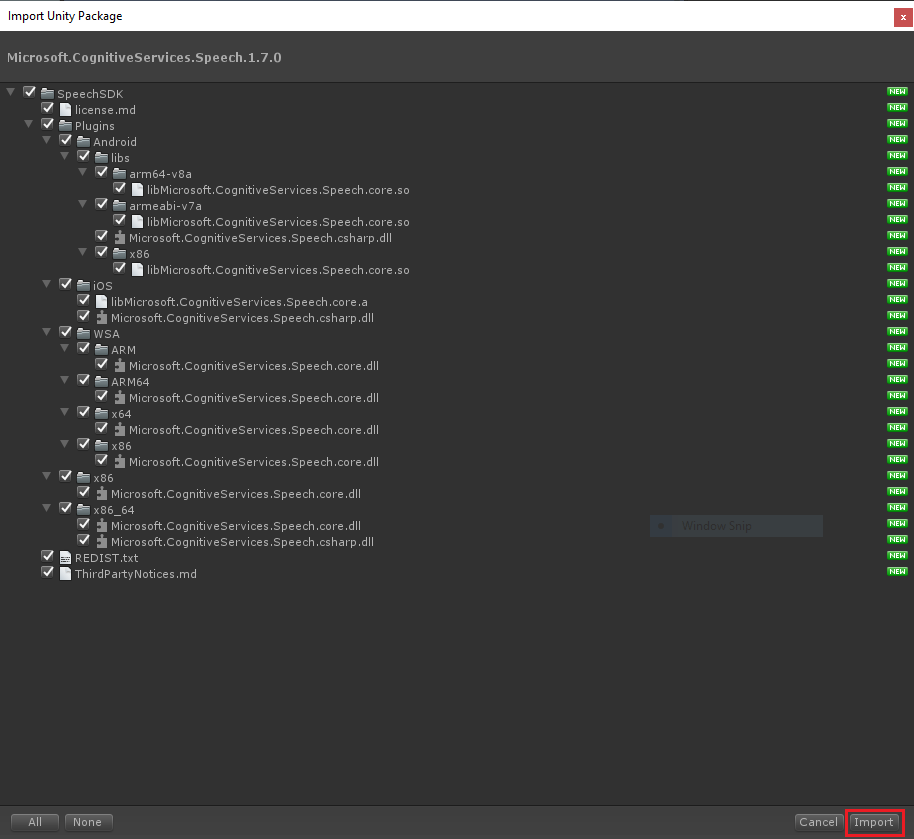

1. First, download the SDK package from Microsoft's official website, then import it into a newly created project.

2. In the new project, add an InputField text box and a Button. Then create a script named TTSDemo and attach it to any object in the scene, as shown in the figure.

3. The TTSDemo code is as follows:

    using Microsoft.CognitiveServices.Speech;
    using Microsoft.CognitiveServices.Speech.Audio;
    using UnityEngine;
    using UnityEngine.UI;

    public class TTSDemo : MonoBehaviour
    {
        // UI elements and audio output, assigned in the Inspector.
        public InputField inputField;
        public Button speakButton;
        public AudioSource audioSource;

        private string message;

        // Not used in the code below.
        public string path;
        public string fileName;
        public string audioType;

        void Start()
        {
            if (inputField == null)
            {
                message = "inputField property is null! Assign a UI InputField element to it.";
                Debug.LogError(message);
            }
            else if (speakButton == null)
            {
                message = "speakButton property is null! Assign a UI Button to it.";
                Debug.LogError(message);
            }
            else
            {
                speakButton.onClick.AddListener(ButtonClick);
            }
        }

        public async void ButtonClick()
        {
            //string xmlPath = Application.dataPath + "/SpeechConfig/ZHCN.xml";

            // "xxxxx" is your own key; "eastus" is the service region.
            var config = SpeechConfig.FromSubscription("xxxxx", "eastus");
            //config.SpeechSynthesisLanguage = "zh-CN";
            //config.SpeechSynthesisVoiceName = "zh-CN-XiaoyouNeural";

            // The synthesized speech is written to this WAV file, so this must be
            // FromWavFileOutput (FromWavFileInput is for reading audio).
            var audioConfig = AudioConfig.FromWavFileOutput("E:/FirstAudio.wav");

            using (var synthsizer = new SpeechSynthesizer(config, audioConfig))
            {
                // Await the call instead of blocking on .Result, so the main thread is not frozen.
                var result = await synthsizer.SpeakTextAsync(inputField.text);
                //var xmlConfig = File.ReadAllText(Application.dataPath + "/SpeechConfig/ZHCN.xml");
                //var result = await synthsizer.SpeakSsmlAsync(xmlConfig);

                string newMessage = string.Empty;

                if (result.Reason == ResultReason.SynthesizingAudioCompleted)
                {
                    // Two bytes per 16-bit sample.
                    var sampleCount = result.AudioData.Length / 2;
                    var audioData = new float[sampleCount];
                    for (var i = 0; i < sampleCount; ++i)
                    {
                        // Combine low and high byte into a signed sample, then scale to [-1, 1).
                        audioData[i] = (short)(result.AudioData[i * 2 + 1] << 8 | result.AudioData[i * 2]) / 32768.0F;
                    }

                    // The default output audio format is 16K 16bit mono
                    var audioClip = AudioClip.Create("SynthesizedAudio", sampleCount, 1, 16000, false);
                    audioClip.SetData(audioData, 0);
                    audioSource.clip = audioClip;
                    audioSource.Play();

                    newMessage = "Speech synthesis succeeded!";
                    Debug.Log(newMessage);
                }
                else if (result.Reason == ResultReason.Canceled)
                {
                    var cancellation = SpeechSynthesisCancellationDetails.FromResult(result);
                    //newMessage = $"CANCELED:\nReason=[{cancellation.Reason}]\nErrorDetails=[{cancellation.ErrorDetails}]\nDid you update the subscription info?";
                    Debug.LogError(cancellation.ErrorDetails);
                }
            }
        }
    }

4. Code walkthrough

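var config = SpeechConfig.FromSubscription("xxxxx", "eastus"); Here "xxxxx" is your own subscription key, and "eastus" is the service region of your Speech resource (East US, West US, and so on); this value means East US.

The region does not decide the language. With only the key and region set, the SDK falls back to its default voice, which is English, so the InputField text must be English and the result is an English clip; other text produces an error. So how do you generate Chinese speech instead?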

//config.SpeechSynthesisLanguage = "zh-CN";

//config.SpeechSynthesisVoiceName = "zh-CN-XiaoyouNeural";

Uncommenting these two lines in ButtonClick is all it takes.

config.SpeechSynthesisLanguage is the synthesis language (a locale, not a region); "zh-CN" means Chinese as spoken in mainland China.

config.SpeechSynthesisVoiceName is the voice; this article uses the female voice Xiaoyou.

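For the supported languages, voices, speaking styles (emotion), and other parameters, see the official Microsoft documentation.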
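The commented-out SpeakSsmlAsync call in ButtonClick reads an SSML document from ZHCN.xml, whose contents are not shown in this article. As a rough sketch of what a minimal SSML document carrying the same language and voice might look like, the helper below builds one as a string. The names SsmlBuilder and Build are hypothetical, made up for this illustration, and the markup is only the basic speak/voice structure, without any style or emotion elements.

```csharp
using System.Security;

// Hypothetical helper (not part of the Speech SDK): builds a minimal SSML document.
public static class SsmlBuilder
{
    public static string Build(string text, string language, string voiceName)
    {
        // Escape &, <, >, " and ' so user input cannot break the XML.
        string escaped = SecurityElement.Escape(text ?? string.Empty);

        return
            "<speak version=\"1.0\" xmlns=\"http://www.w3.org/2001/10/synthesis\" " +
            "xml:lang=\"" + language + "\">" +
            "<voice name=\"" + voiceName + "\">" + escaped + "</voice>" +
            "</speak>";
    }
}
```

The returned string could then be passed to SpeakSsmlAsync in place of the file contents, for example with SsmlBuilder.Build(inputField.text, "zh-CN", "zh-CN-XiaoyouNeural").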

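using (var synthsizer = new SpeechSynthesizer(config, audioConfig)) The first argument is the set of synthesis parameters explained above; the second specifies where the generated audio is saved: its location, file name, and type.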
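One part of ButtonClick that the walkthrough does not cover is the loop that turns result.AudioData into the float array Unity expects. The sketch below isolates that step as a plain C# method so it can be read and tested on its own. It assumes, as the code comment in the demo states, that the audio is 16-bit mono PCM with the low byte first; PcmConverter and ToFloatSamples are hypothetical names used only for this illustration.

```csharp
using System;

// Hypothetical helper: the same conversion as the loop in ButtonClick, in isolation.
public static class PcmConverter
{
    // Converts 16-bit little-endian PCM bytes to floats in the range [-1, 1).
    public static float[] ToFloatSamples(byte[] pcm)
    {
        if (pcm == null) throw new ArgumentNullException(nameof(pcm));

        int sampleCount = pcm.Length / 2; // a trailing odd byte is ignored
        var samples = new float[sampleCount];

        for (int i = 0; i < sampleCount; ++i)
        {
            // High byte shifted left by 8, OR-ed with the low byte;
            // the cast to short restores the sign of the sample.
            short value = (short)((pcm[i * 2 + 1] << 8) | pcm[i * 2]);
            samples[i] = value / 32768.0f;
        }

        return samples;
    }
}
```

For instance, the byte pair 0x00 0x80 is the most negative sample and maps to -1.0, while 0xFF 0x7F is the most positive one and maps to just under 1.0.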

5. Common errors

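This kind of error means the audio synthesized from your text is empty. Remember that with the default configuration (only the key and "eastus" set) the voice is English, so the input must be English; otherwise the result comes back empty.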

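This 401 error means your key failed authentication, so the SDK never connected to the service. Check that the key is correct and that it belongs to the region you passed in.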
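For the empty-audio case, one option is to check the returned bytes before building the AudioClip. This is only a sketch: it assumes the error appears when a clip is created from zero samples, which cannot be confirmed here because the original screenshot is not available, and AudioGuard and HasPlayableAudio are hypothetical names.

```csharp
// Hypothetical helper: checks the synthesized bytes before an AudioClip is created.
public static class AudioGuard
{
    // Returns true when the buffer holds at least one complete 16-bit sample.
    public static bool HasPlayableAudio(byte[] audioData)
    {
        return audioData != null && audioData.Length >= 2;
    }
}
```

In ButtonClick, calling AudioGuard.HasPlayableAudio(result.AudioData) before AudioClip.Create would let the script log a hint about the language and voice settings instead of failing on an empty clip.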