1.

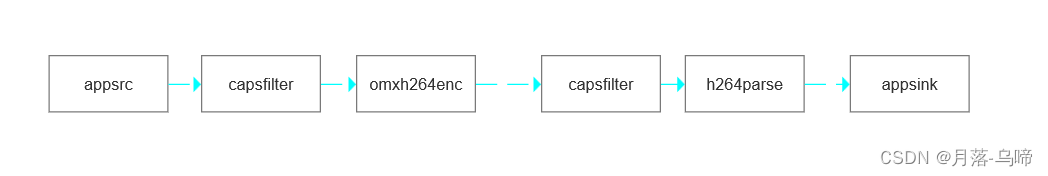

Encode cv::Mat frames into H.264 packets, send them over WebSocket, and on the HTML side parse the H.264 stream and render it with WebGL.
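The encoder output in this post is requested as stream-format byte-stream, so the data handed to the WebSocket should be an Annex B stream in which NAL units are separated by 00 00 01 or 00 00 00 01 start codes. As a rough illustration of the parsing step a receiver has to perform, the sketch below splits such a buffer into NAL units and reads each unit's type. It is written in C++ to match the rest of the post and is not the browser-side code; NalUnit and splitAnnexB are hypothetical names introduced only for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One NAL unit located inside an Annex B byte stream (start code excluded).
struct NalUnit {
    std::size_t offset;  // index of the NAL header byte
    std::size_t size;    // length in bytes, header byte included
    std::uint8_t type;   // nal_unit_type: low 5 bits of the header byte
};

// Split a buffer on 00 00 01 start codes. The 4-byte form 00 00 00 01 ends
// with the same three bytes, so its extra leading zero is trimmed from the
// end of the preceding unit.
std::vector<NalUnit> splitAnnexB(const std::uint8_t *data, std::size_t len)
{
    assert(data != nullptr || len == 0);
    std::vector<NalUnit> units;
    std::size_t i = 0;
    while (i + 3 <= len) {
        const bool startCode = data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1;
        if (!startCode) {
            ++i;
            continue;
        }
        if (!units.empty()) {
            std::size_t end = i;
            if (end > units.back().offset && data[end - 1] == 0) {
                --end;  // leading zero of a 4-byte start code
            }
            units.back().size = end - units.back().offset;
        }
        i += 3;
        if (i < len) {
            // Until the next start code is found, the unit runs to the end of the buffer.
            units.push_back({i, len - i, static_cast<std::uint8_t>(data[i] & 0x1F)});
        }
    }
    return units;
}
```

In an H.264 stream, type 7 is an SPS, type 8 a PPS and type 5 an IDR slice. A decoder normally needs the SPS and PPS before it can decode the first IDR frame, so a receiver that joins mid-stream may have to wait for them.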

2.

3.

#pragma once

#include <opencv2/core/core.hpp>

#include <memory>

#include <string>

#include <utility>

#include <gst/gst.h>

#include "websocket_server.h"

namespace Transmit

{

class VideoEncode : public std::enable_shared_from_this<VideoEncode>

{

public:

using Ptr = std::shared_ptr<VideoEncode>;

~VideoEncode();

/**

* @brief Initialize the encoder and start the pipeline; returns 0 on success, -1 on failure

*/

int init(int width, int height, std::pair<int, int> framerate, WebsocketServer::Ptr websocket, int index);

/**

* @brief Write one video frame; the frame data must be I420 and match the width and height passed to init()

*/

int write(const cv::Mat &frame);

/**

* @brief Get the WebSocket server

*/

WebsocketServer::Ptr GetWebSocket()

{

return websocket_;

}

/**

* @brief Get the current camera index

*/

int GetIndex()

{

return index_;

}

private:

int pushData2Pipeline(const cv::Mat &frame);

private:

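// Initialized to nullptr so the destructor is safe even if init() was never called

GstElement *pipeline_ = nullptr;

GstElement *appSrc_ = nullptr;

GstElement *appSink_ = nullptr;

GstElement *encoder_ = nullptr;

GstElement *capsFilter1_ = nullptr;

GstElement *capsFilter2_ = nullptr;

GstElement *parser_ = nullptr;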

int width_ = 0;

int height_ = 0;

int bitrate_ = 1;

std::pair<int, int> framerate_{30, 1};

GstClockTime timestamp{0};

// Used to send the encoded data to the WebSocket server

WebsocketServer::Ptr websocket_;

int index_ = 0;

};

}
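write() copies frame.total() * frame.elemSize() bytes into a buffer whose caps declare I420, so the frame handed to it needs to hold exactly one I420 image. As a small sanity check, the sketch below computes the plane sizes and offsets of a tightly packed I420 frame. I420Layout and computeI420Layout are hypothetical helpers, not part of the class above, and the layout assumes no row padding. As far as I know GStreamer pads I420 row strides to a multiple of 4 bytes by default, so the tight layout only matches when the width is a multiple of 8.

```cpp
#include <cassert>
#include <cstddef>

// Plane sizes and byte offsets of a tightly packed I420 (YUV 4:2:0 planar) frame.
struct I420Layout {
    std::size_t ySize;       // luma plane: width * height bytes
    std::size_t chromaSize;  // each chroma plane: (width / 2) * (height / 2) bytes
    std::size_t uOffset;     // U plane starts right after the Y plane
    std::size_t vOffset;     // V plane starts right after the U plane
    std::size_t total;       // whole frame: width * height * 3 / 2 bytes
};

I420Layout computeI420Layout(int width, int height)
{
    // 4:2:0 subsampling halves both chroma dimensions, so even sizes are assumed here.
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);
    const std::size_t w = static_cast<std::size_t>(width);
    const std::size_t h = static_cast<std::size_t>(height);

    I420Layout layout;
    layout.ySize = w * h;
    layout.chromaSize = (w / 2) * (h / 2);
    layout.uOffset = layout.ySize;
    layout.vOffset = layout.ySize + layout.chromaSize;
    layout.total = layout.ySize + 2 * layout.chromaSize;
    return layout;
}
```

With OpenCV, a BGR frame can be converted using cv::cvtColor(bgr, yuv, cv::COLOR_BGR2YUV_I420). The result is a single-channel 8-bit Mat with height * 3 / 2 rows and width columns, so its byte size should equal the total computed here.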

#include <transmit/video_encode.h>

#include <iostream>

#include <cstdio>

#include <cstring>

#include <unistd.h>

#include <gst/gst.h>

#include <gst/gstelement.h>

#include <gst/app/gstappsink.h>

using namespace std;

namespace Transmit

{

GstFlowReturn CaptureGstBuffer(GstAppSink *sink, gpointer user_data)

{

VideoEncode *videoEncode = (VideoEncode*)user_data;

if(!videoEncode)

return GST_FLOW_ERROR;

GstSample *sample = gst_app_sink_pull_sample(sink);

if (sample == NULL)

{

return GST_FLOW_ERROR;

}

GstBuffer *buffer = gst_sample_get_buffer(sample);

GstMapInfo map_info;

// If mapping fails there is nothing to unmap; only the sample has to be released

if (buffer == NULL || !gst_buffer_map(buffer, &map_info, GST_MAP_READ))

{

gst_sample_unref(sample);

return GST_FLOW_ERROR;

}

// Forward the encoded H.264 bytes to the WebSocket server together with the camera index

videoEncode->GetWebSocket()->sendH264Data(map_info.data,(int)map_info.size,videoEncode->GetIndex());

gst_buffer_unmap((buffer), &map_info);

gst_sample_unref(sample);

return GST_FLOW_OK;

}

VideoEncode::~VideoEncode()

{

if (appSrc_)

{

GstFlowReturn retflow = GST_FLOW_OK;

g_signal_emit_by_name(appSrc_, "end-of-stream", &retflow);

std::cout << "EOS sent. Writing the last few frames..." << std::endl;

g_usleep(1000000); // Wait 1 s so the remaining data can be written

std::cout << "Writing done!" << std::endl;

if (retflow != GST_FLOW_OK)

{

std::cerr << "An error occurred while sending EOS!" << std::endl;

}

}

if (pipeline_)

{

gst_element_set_state(pipeline_, GST_STATE_NULL);

gst_object_unref(pipeline_);

pipeline_ = nullptr;

}

}

int VideoEncode::init(int width,int height,std::pair<int, int> framerate,WebsocketServer::Ptr websocket,int index)

{

width_ = width;

height_ = height;

framerate_ = framerate;

websocket_ = websocket;

index_ = index;

pipeline_ = gst_pipeline_new("pipeline");

appSrc_ = gst_element_factory_make("appsrc", "AppSrc");

capsFilter1_ = gst_element_factory_make("capsfilter", "Capsfilter");

capsFilter2_ = gst_element_factory_make("capsfilter", "Capsfilter2");

encoder_ = gst_element_factory_make("omxh264enc", "Omxh264enc");

parser_ = gst_element_factory_make("h264parse", "H264parse");

appSink_ = gst_element_factory_make("appsink", "Appsink");

if (!pipeline_ || !appSrc_ || !encoder_ || !parser_ || !capsFilter2_ || !capsFilter1_ || !appSink_)

{

std::cerr << "Not all elements could be created" << std::endl;

return -1;

}

// Set the source pixel format

std::string srcFmt = "I420";

// Set up appsrc

g_object_set(appSrc_, "format", GST_FORMAT_TIME, NULL);

g_object_set(appSrc_, "stream-type", 0, NULL);

g_object_set(appSrc_, "is-live", TRUE, NULL);

// g_object_set does not take ownership of the caps, so release our reference afterwards

GstCaps *rawCaps = gst_caps_new_simple("video/x-raw", "format", G_TYPE_STRING, srcFmt.c_str(), "width", G_TYPE_INT, width_, "height", G_TYPE_INT, height_, "framerate", GST_TYPE_FRACTION, framerate_.first, framerate_.second, nullptr);

g_object_set(G_OBJECT(capsFilter1_), "caps", rawCaps, NULL);

gst_caps_unref(rawCaps);

GstCaps *h264Caps = gst_caps_new_simple("video/x-h264", "stream-format", G_TYPE_STRING, "byte-stream", nullptr);

g_object_set(G_OBJECT(capsFilter2_), "caps", h264Caps, NULL);

gst_caps_unref(h264Caps);

// Set up omxh264enc

g_object_set(G_OBJECT(encoder_), "bitrate", bitrate_ * 1024 * 1024 * 8, NULL);

g_object_set(G_OBJECT(encoder_), "temporal-tradeoff", 4, NULL);

// Set up appsink

g_object_set(G_OBJECT(appSink_),"emit-signals",TRUE,NULL);

g_object_set(G_OBJECT(appSink_),"sync", FALSE,NULL);

g_object_set(G_OBJECT(appSink_),"drop", TRUE,NULL);

g_signal_connect (appSink_, "new-sample",G_CALLBACK (CaptureGstBuffer), reinterpret_cast<void*> (this));

// Add elements to the pipeline

gst_bin_add_many(GST_BIN(pipeline_), appSrc_, capsFilter1_, encoder_, capsFilter2_, parser_, appSink_, nullptr);

// Link elements

if (gst_element_link_many(appSrc_, capsFilter1_, encoder_, capsFilter2_, parser_, appSink_, nullptr) != TRUE)

{

std::cerr << "appSrc, capsFilter1, encoder, capsFilter2, parser, appSink could not be linked" << std::endl;

return -1;

}

// Start playing

auto ret = gst_element_set_state(pipeline_, GST_STATE_PLAYING);

if (ret == GST_STATE_CHANGE_FAILURE)

{

std::cerr << "Unable to set the pipeline to the playing state" << std::endl;

return -1;

}

return 0;

}

int VideoEncode::pushData2Pipeline(const cv::Mat &frame)

{

GstBuffer *buffer;

GstFlowReturn ret;

GstMapInfo map;

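// memcpy below needs a non-empty frame stored in one contiguous block

if (frame.empty() || !frame.isContinuous())

{

return -1;

}

// Create a buffer sized for the whole frame and copy the pixel data into it

const gsize size = frame.total() * frame.elemSize();

buffer = gst_buffer_new_and_alloc(size);

if (!gst_buffer_map(buffer, &map, GST_MAP_WRITE))

{

gst_buffer_unref(buffer);

return -1;

}

memcpy(map.data, frame.data, size);

gst_buffer_unmap(buffer, &map);

// The timestamp and the duration of every frame must be set

GST_BUFFER_PTS(buffer) = timestamp;

GST_BUFFER_DTS(buffer) = timestamp;

// One frame lasts framerate_.second / framerate_.first seconds; scale directly so fractional rates such as 30000/1001 stay exact

GST_BUFFER_DURATION(buffer) = gst_util_uint64_scale_int(GST_SECOND, framerate_.second, framerate_.first);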

timestamp += GST_BUFFER_DURATION(buffer);

// std::cout << "GST_BUFFER_DURATION(buffer):" << GST_BUFFER_DURATION(buffer) << std::endl;

// std::cout << "timestamp:" << static_cast<uint64>(_timestamp * GST_SECOND) << std::endl;

// Push the buffer into the appsrc

g_signal_emit_by_name(appSrc_, "push-buffer", buffer, &ret);

// Free the buffer now that we are done with it

gst_buffer_unref(buffer);

if (ret != GST_FLOW_OK)

{

// We got some error, stop sending data

std::cout << "We got some error, stop sending data" << std::endl;

return -1;

}

return 0;

}

int VideoEncode::write(const cv::Mat &frame)

{

return pushData2Pipeline(frame);

}

}
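The timestamps written in pushData2Pipeline are built by adding one frame duration per frame. For fractional rates such as 30000/1001, reducing the rate to an integer first (30000 / 1001 == 29) loses precision, and accumulating a rounded duration can drift slowly over a long stream. One possible alternative, shown here only as a sketch, is to derive each timestamp from the frame index. framePtsNs and frameDurationNs are hypothetical helpers and do not appear in the class above.

```cpp
#include <cassert>
#include <cstdint>

constexpr std::uint64_t kNsPerSecond = 1000000000ULL;

// Presentation time in nanoseconds of frame `index` at a rate of fpsNum / fpsDen.
// fpsNum frames last exactly fpsDen seconds, so the index is split into whole
// groups of fpsNum frames plus a remainder. This keeps the intermediate products
// small and the result exact (rounded down).
std::uint64_t framePtsNs(std::uint64_t index, int fpsNum, int fpsDen)
{
    assert(fpsNum > 0 && fpsDen > 0);
    const std::uint64_t num = static_cast<std::uint64_t>(fpsNum);
    const std::uint64_t nsPerGroup = kNsPerSecond * static_cast<std::uint64_t>(fpsDen);
    return (index / num) * nsPerGroup + (index % num) * nsPerGroup / num;
}

// Duration of frame `index`, defined as the gap to the next frame's timestamp,
// so that durations always add up to the timestamps without drift.
std::uint64_t frameDurationNs(std::uint64_t index, int fpsNum, int fpsDen)
{
    return framePtsNs(index + 1, fpsNum, fpsDen) - framePtsNs(index, fpsNum, fpsDen);
}
```

With this approach the class would keep a frame counter instead of a running timestamp and set the buffer PTS and duration from these two values. The trade-off is that individual durations may differ by one nanosecond from frame to frame.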