Background:

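Today I'd like to introduce a new tool. Anyone who has used UE5 or Blender should already be familiar with blueprints, i.e. node graphs. Blueprints are arguably the killer feature behind UE5's explosive popularity in games, virtual production, and virtual cinematography. They turn game making into drag-and-drop work, so artists, terrain designers, lighting artists, rendering staff, and low-level engineers can all work together. They turned game development, an industry that can look like toy-making, into collaborative, pipeline-driven work.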

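Enough hype; on to the main topic. Today I'm introducing a blueprint-style tool for generating images with Stable Diffusion. The difference from the Stable Diffusion WebUI is this: the WebUI suits one person working alone, and its flatter set of tools favors people who can code. For small teams that treat image generation as a real pipeline, with several stages handled by different people, it is not particularly well optimized.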

ComfyUI is a product built to turn AI image generation into a pipeline. It is still being polished, but you can already see how powerful it is.

Tool overview

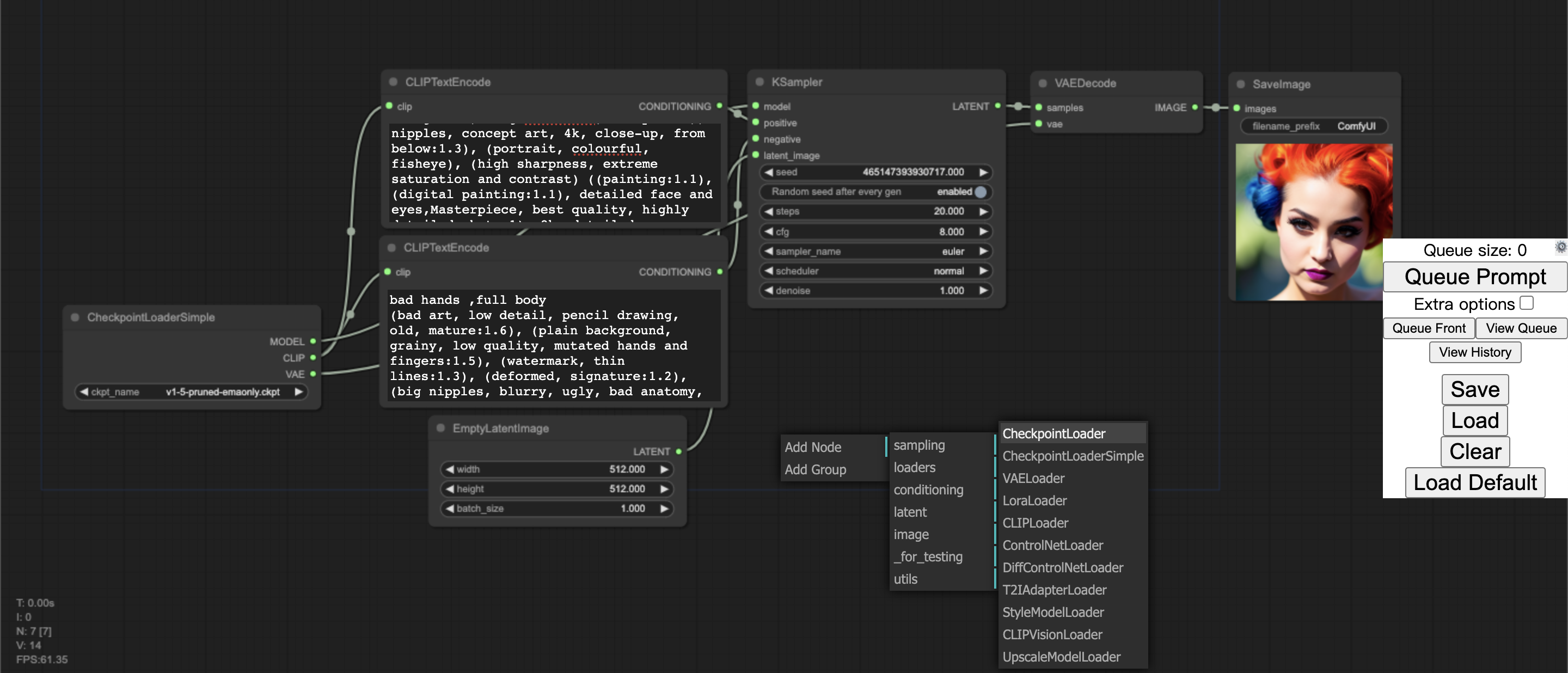

What it looks like

You build the processing flow by dragging nodes onto the canvas and wiring them together, which looks pretty cool.
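Under the hood, a node graph like this is just data: each node has a type and a set of inputs, and an input is either a literal value or a link to another node's output. As a minimal sketch, here is a basic text-to-image graph modeled on ComfyUI's JSON "API format" (node id → `class_type` + `inputs`, links written as `[source_node_id, output_index]`). The node and field names follow ComfyUI's default workflow as far as I know, but treat them as assumptions; the prompt, seed, and the helpers `dependencies` and `execution_order` are hypothetical. A topological sort shows the order the nodes must run in; ComfyUI's own executor is not reproduced here.

```python
# Hypothetical minimal text-to-image graph, modeled on ComfyUI's "API format":
# node id -> {"class_type": ..., "inputs": {...}}.
# A link to another node's output is written as [source_node_id, output_index].
from graphlib import TopologicalSorter  # Python 3.9+

WORKFLOW = {
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "v1-5-pruned-emaonly.ckpt"}},
    "2": {"class_type": "CLIPTextEncode",  # positive prompt
          "inputs": {"text": "a castle on a hill, sunset", "clip": ["1", 1]}},
    "3": {"class_type": "CLIPTextEncode",  # negative prompt
          "inputs": {"text": "blurry, low quality", "clip": ["1", 1]}},
    "4": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 512, "height": 512, "batch_size": 1}},
    "5": {"class_type": "KSampler",
          "inputs": {"seed": 42, "steps": 20, "cfg": 7.0,
                     "sampler_name": "euler", "scheduler": "normal",
                     "denoise": 1.0, "model": ["1", 0],
                     "positive": ["2", 0], "negative": ["3", 0],
                     "latent_image": ["4", 0]}},
    "6": {"class_type": "VAEDecode",
          "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
    "7": {"class_type": "SaveImage",
          "inputs": {"filename_prefix": "ComfyUI", "images": ["6", 0]}},
}


def dependencies(workflow):
    """Map each node id to the set of node ids its inputs are linked to."""
    deps = {}
    for node_id, node in workflow.items():
        deps[node_id] = {
            value[0]
            for value in node["inputs"].values()
            # Links are two-element lists; literals are str/int/float.
            if isinstance(value, list) and len(value) == 2
        }
    return deps


def execution_order(workflow):
    """Return node ids so that every node comes after the nodes it depends on."""
    return list(TopologicalSorter(dependencies(workflow)).static_order())


if __name__ == "__main__":
    for node_id in execution_order(WORKFLOW):
        print(node_id, WORKFLOW[node_id]["class_type"])
```

Running it prints every node after the nodes it depends on, always ending with `SaveImage`. Nodes with no link between them (the two `CLIPTextEncode` nodes and `EmptyLatentImage`) may appear in any relative order, which is exactly what lets different people work on different branches of the graph.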

How to set it up

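Initialize the environment

The commands below are notebook cells written for Google Colab: a leading `!` runs a shell command, and `%cd` changes the notebook's working directory.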
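The cells in this guide shell out to `git`, `pip`, `wget`, and `npm` (the last one for localtunnel). If you run them somewhere other than Colab, a quick pre-flight check can save a confusing failure halfway through. This is a minimal sketch using only the standard library; the tool list is my reading of the commands below, and `missing_tools` is a hypothetical helper name.

```python
# Sketch: check that the command-line tools used by the setup cells are on PATH.
import shutil

# Tools invoked by the cells in this guide (assumed list: git, pip, wget, npm).
REQUIRED_TOOLS = ("git", "pip", "wget", "npm")


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the tools that shutil.which cannot locate."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing tools:", ", ".join(missing))
    else:
        print("All required tools found.")
```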

```
!git clone https://github.com/comfyanonymous/ComfyUI
%cd ComfyUI
!pip install xformers -r requirements.txt
```

Download the models
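Each download line below saves a file into the subfolder of `./models/` that ComfyUI scans for that model type (`checkpoints`, `vae`, `loras`, and so on). Once the cell has run, a small inventory script shows what actually landed where. This is a sketch: the folder names are copied from the `-P`/`-O` targets below, while the suffix list and the `inventory` helper are my own assumptions.

```python
# Sketch: list the model files found in each models/ subfolder.
# Folder names mirror the -P / -O targets used in the download cell.
from pathlib import Path

MODEL_DIRS = ["checkpoints", "vae", "loras", "t2i_adapter",
              "style_models", "clip_vision", "controlnet", "upscale_models"]
# Weight-file extensions that appear in the download cell (assumed list).
MODEL_SUFFIXES = {".ckpt", ".safetensors", ".pt", ".pth", ".bin"}


def inventory(models_root="models"):
    """Return {subfolder: sorted file names with a known weight suffix}."""
    root = Path(models_root)
    found = {}
    for name in MODEL_DIRS:
        folder = root / name
        files = []
        if folder.is_dir():
            files = sorted(p.name for p in folder.iterdir()
                           if p.is_file() and p.suffix in MODEL_SUFFIXES)
        found[name] = files
    return found


if __name__ == "__main__":
    for folder, files in inventory("./models").items():
        print(f"{folder:15s} {len(files)} file(s) {files}")
```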

```
# Lines starting with "#!" are optional: delete the leading "#" to download that model.

# Checkpoints
# SD1.5
!wget -c https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned-emaonly.ckpt -P ./models/checkpoints/

# SD2
#!wget -c https://huggingface.co/stabilityai/stable-diffusion-2-1-base/resolve/main/v2-1_512-ema-pruned.safetensors -P ./models/checkpoints/
#!wget -c https://huggingface.co/stabilityai/stable-diffusion-2-1/resolve/main/v2-1_768-ema-pruned.safetensors -P ./models/checkpoints/

# Some SD1.5 anime style
#!wget -c https://huggingface.co/WarriorMama777/OrangeMixs/resolve/main/Models/AbyssOrangeMix2/AbyssOrangeMix2_hard.safetensors -P ./models/checkpoints/
#!wget -c https://huggingface.co/WarriorMama777/OrangeMixs/resolve/main/Models/AbyssOrangeMix3/AOM3A1_orangemixs.safetensors -P ./models/checkpoints/
#!wget -c https://huggingface.co/WarriorMama777/OrangeMixs/resolve/main/Models/AbyssOrangeMix3/AOM3A3_orangemixs.safetensors -P ./models/checkpoints/
#!wget -c https://huggingface.co/Linaqruf/anything-v3.0/resolve/main/anything-v3-fp16-pruned.safetensors -P ./models/checkpoints/

# Waifu Diffusion 1.5 (anime style SD2.x 768-v)
#!wget -c https://huggingface.co/waifu-diffusion/wd-1-5-beta2/resolve/main/checkpoints/wd-1-5-beta2-fp16.safetensors -P ./models/checkpoints/

# VAE
!wget -c https://huggingface.co/stabilityai/sd-vae-ft-mse-original/resolve/main/vae-ft-mse-840000-ema-pruned.safetensors -P ./models/vae/
#!wget -c https://huggingface.co/WarriorMama777/OrangeMixs/resolve/main/VAEs/orangemix.vae.pt -P ./models/vae/
#!wget -c https://huggingface.co/hakurei/waifu-diffusion-v1-4/resolve/main/vae/kl-f8-anime2.ckpt -P ./models/vae/

# Loras
#!wget -c https://civitai.com/api/download/models/10350 -O ./models/loras/theovercomer8sContrastFix_sd21768.safetensors #theovercomer8sContrastFix SD2.x 768-v
#!wget -c https://civitai.com/api/download/models/10638 -O ./models/loras/theovercomer8sContrastFix_sd15.safetensors #theovercomer8sContrastFix SD1.x

# T2I-Adapter
#!wget -c https://huggingface.co/TencentARC/T2I-Adapter/resolve/main/models/t2iadapter_depth_sd14v1.pth -P ./models/t2i_adapter/
#!wget -c https://huggingface.co/TencentARC/T2I-Adapter/resolve/main/models/t2iadapter_seg_sd14v1.pth -P ./models/t2i_adapter/
#!wget -c https://huggingface.co/TencentARC/T2I-Adapter/resolve/main/models/t2iadapter_sketch_sd14v1.pth -P ./models/t2i_adapter/
#!wget -c https://huggingface.co/TencentARC/T2I-Adapter/resolve/main/models/t2iadapter_keypose_sd14v1.pth -P ./models/t2i_adapter/
#!wget -c https://huggingface.co/TencentARC/T2I-Adapter/resolve/main/models/t2iadapter_openpose_sd14v1.pth -P ./models/t2i_adapter/
#!wget -c https://huggingface.co/TencentARC/T2I-Adapter/resolve/main/models/t2iadapter_color_sd14v1.pth -P ./models/t2i_adapter/
#!wget -c https://huggingface.co/TencentARC/T2I-Adapter/resolve/main/models/t2iadapter_canny_sd14v1.pth -P ./models/t2i_adapter/

# T2I Styles Model
#!wget -c https://huggingface.co/TencentARC/T2I-Adapter/resolve/main/models/t2iadapter_style_sd14v1.pth -P ./models/style_models/

# CLIPVision model (needed for styles model)
#!wget -c https://huggingface.co/openai/clip-vit-large-patch14/resolve/main/pytorch_model.bin -O ./models/clip_vision/clip_vit14.bin

# ControlNet
#!wget -c https://huggingface.co/webui/ControlNet-modules-safetensors/resolve/main/control_depth-fp16.safetensors -P ./models/controlnet/
#!wget -c https://huggingface.co/webui/ControlNet-modules-safetensors/resolve/main/control_scribble-fp16.safetensors -P ./models/controlnet/
#!wget -c https://huggingface.co/webui/ControlNet-modules-safetensors/resolve/main/control_openpose-fp16.safetensors -P ./models/controlnet/

# Controlnet Preprocessor nodes by Fannovel16
#!cd custom_nodes && git clone https://github.com/Fannovel16/comfy_controlnet_preprocessors; cd comfy_controlnet_preprocessors && python install.py

# ESRGAN upscale model
#!wget -c https://huggingface.co/sberbank-ai/Real-ESRGAN/resolve/main/RealESRGAN_x2.pth -P ./models/upscale_models/
#!wget -c https://huggingface.co/sberbank-ai/Real-ESRGAN/resolve/main/RealESRGAN_x4.pth -P ./models/upscale_models/
```

Run the visual interface
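The launch cell below first starts a background thread that polls port 8188 every half second until ComfyUI accepts connections, and only then opens the tunnel. That loop has no timeout, so if ComfyUI crashes on startup the thread waits forever. A minimal standalone sketch of the same readiness check with a deadline, using only the standard library, could look like this (`wait_for_port` and its default values are hypothetical, not part of ComfyUI):

```python
# Sketch: the port-polling step from the launch cell, with a timeout added.
import socket
import time


def wait_for_port(port, host="127.0.0.1", timeout=60.0, interval=0.5):
    """Return True once host:port accepts a TCP connection,
    or False if nothing does within `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # Raises OSError (e.g. ConnectionRefusedError) while nothing listens yet.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            time.sleep(interval)
    return False


if __name__ == "__main__":
    # 8188 is the port ComfyUI listens on in the cells below.
    print("ComfyUI is up:", wait_for_port(8188, timeout=5))
```

Using `socket.create_connection` inside a `with` block also guarantees the probe socket is closed on every attempt.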

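```
!npm install -g localtunnel

import subprocess
import threading
import time
import socket

def iframe_thread(port):
    # Poll every 0.5 s until ComfyUI accepts connections on the port.
    while True:
        time.sleep(0.5)
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        result = sock.connect_ex(('127.0.0.1', port))
        sock.close()  # close the probe socket on every attempt
        if result == 0:
            break
    print("\nComfyUI finished loading, trying to launch localtunnel (if it gets stuck here localtunnel is having issues)")
    # Start localtunnel and echo its output, which includes the public URL.
    p = subprocess.Popen(["lt", "--port", "{}".format(port)], stdout=subprocess.PIPE)
    for line in p.stdout:
        print(line.decode(), end='')

# Run the watcher in the background; 8188 is ComfyUI's default port.
threading.Thread(target=iframe_thread, daemon=True, args=(8188,)).start()

# Start ComfyUI in the foreground; the cell keeps running while the server is up.
!python main.py --dont-print-server
```

Alternative: open it in an iframe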

```
import threading
import time
import socket

def iframe_thread(port):
    # Same polling loop: wait until ComfyUI accepts connections on the port.
    while True:
        time.sleep(0.5)
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        result = sock.connect_ex(('127.0.0.1', port))
        sock.close()  # close the probe socket on every attempt
        if result == 0:
            break
    # Colab only: proxy the kernel's port into the notebook output.
    from google.colab import output
    output.serve_kernel_port_as_iframe(port, height=1024)
    print("to open it in a window you can open this link here:")
    output.serve_kernel_port_as_window(port)

threading.Thread(target=iframe_thread, daemon=True, args=(8188,)).start()

!python main.py --dont-print-server
```

Summary:

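1. AI image generation has become an established trend, and ComfyUI offers an image-generation pipeline that ordinary users can work with.

2. Only stable, reproducible AI image generation creates industrial value; otherwise it remains a toy.

3. Blueprints are an excellent tool for collaboration across different roles: artists, engineers, and others.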