My first web crawler, haha. Purely procedural.

Goals:

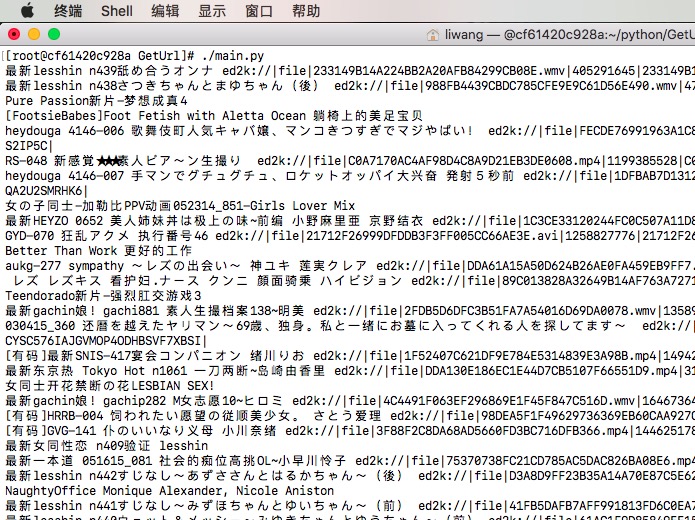

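1. Read the URL from the local conf file, then scrape each video's title and its download URL.
2. Write the scraped URLs to a separate local file so they can be copied and pasted into a downloader.

Enough talk. The code and its output are as follows: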

```python
#!/usr/local/python/bin/python3
import requests
import re
import chardet
import random
import signal
import time
import os
import sys


def DealwithURL(url):
    # Return the meta-refresh target if the page redirects, otherwise True.
    r = requests.get(url)
    pattern = re.compile('<meta http-equiv="refresh" content="0.1;url=(.*)"')
    findurl = re.findall(pattern, r.text)
    if findurl:
        return findurl[0]
    else:
        return True


def GetNewURL(url):
    # The transfer page announces the new address inside an alert(...) call.
    r = requests.get(url)
    r.encoding = 'utf-8'
    pattern = re.compile('alert(.*)">')
    findurl = re.findall(pattern, r.text)
    findurl_str = " ".join(findurl)
    # Keep the first space-separated token and drop its first two characters.
    return findurl_str.split(' ', 1)[0][2:]


def gettrueurl(url):
    # Fetch the page once; the original called DealwithURL twice.
    transferurl = DealwithURL(url)
    if transferurl is True:
        return url
    return GetNewURL(transferurl)
```
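As an aside, the redirect check boils down to pulling the target out of a `<meta http-equiv="refresh">` tag. Here is a minimal sketch of that step as a pure function, assuming the tag looks like the one the script matches. The name `extract_refresh_target` and the sample HTML are hypothetical and not part of the original script. A non-greedy group stops at the first closing quote, and returning `None` avoids mixing `True` with strings.

```python
import re

# Hypothetical helper, not from the original script.
# Matches the same kind of tag the script looks for, with any numeric delay.
REFRESH_RE = re.compile(
    r'<meta http-equiv="refresh" content="[\d.]+;url=(.*?)"',
    re.IGNORECASE,
)


def extract_refresh_target(html):
    """Return the meta-refresh target URL, or None if the page has none."""
    match = REFRESH_RE.search(html)
    return match.group(1) if match else None


# Made-up sample pages for illustration only.
sample = ('<html><head><meta http-equiv="refresh" '
          'content="0.1;url=http://example.com/go"></head></html>')
print(extract_refresh_target(sample))
print(extract_refresh_target('<html><body>no redirect</body></html>'))
```

Keeping the parsing separate from the HTTP request also makes it possible to check the regex against saved pages without touching the network.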

```python
def SaveLocalUrl(untreatedurl, treatedurl):
    # Rewrite main.conf only when the site has moved to a new URL.
    if untreatedurl == treatedurl:
        return
    try:
        with open('main.conf', 'r') as fileconf:
            rewritestr = fileconf.read()
        # Literal replacement: the URL contains regex metacharacters such as '.'
        rewritestr = rewritestr.replace(untreatedurl, treatedurl)
        with open('main.conf', 'w') as fileconf:
            fileconf.write(rewritestr)
    except OSError:
        print("Got a new url but failed to rewrite main.conf")
```
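One thing to watch when swapping the URL inside `main.conf`: if the old URL is used as a regular-expression pattern, every `.` in it matches any character, so unrelated text can be rewritten. A plain string replacement avoids that. The sketch below uses a hypothetical `replace_url` helper and made-up conf text to show the difference:

```python
def replace_url(conf_text, old_url, new_url):
    """Replace old_url literally; '.' and '?' are not treated as wildcards."""
    return conf_text.replace(old_url, new_url)


# Made-up conf text: the second line only *looks* like the first URL to a regex.
conf_text = "url=http://a.example\nmirror=http://aXexample\n"
updated = replace_url(conf_text, "http://a.example", "http://b.example")
print(updated)
```

With `re.sub("http://a.example", ...)` the `mirror` line would have been rewritten as well, because `.` matches the `X`.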

```python
def handler(signum, frame):
    # SIGALRM handler: turn the alarm into an exception the callers catch.
    raise AssertionError


def WriteLocalDownloadURL(downfile, downurled2k):
    # Append one download link per line.
    with open(downfile, 'a+') as urlfile:
        urlfile.write(downurled2k + '\n')


def GetDownloadURL(sourceurl, titleurl, titlename, update_file, headers):
    downurlstr = " ".join(titleurl)
    downnamestr = " ".join(titlename)
    # headers must be passed by keyword; the second positional argument is params.
    r = requests.get(sourceurl + downurlstr, headers=headers)
    pattern = re.compile('autocomplete="on">(.*)/</textarea></div>')
    downurled2k = re.findall(pattern, r.text)
    downurled2kstr = " ".join(downurled2k)
    WriteLocalDownloadURL(update_file, downurled2kstr)
    print(downnamestr, downurled2kstr)
```

```python
def ReadLocalFiles():
    # Parse main.conf (key=value lines, '#' starts a comment) into a dict.
    returndict = {}
    with open('main.conf') as localfiles:
        for readline in localfiles:
            readline = readline.strip()
            # Skip blank lines and comments instead of stopping at the first blank line.
            if not readline or readline.startswith('#'):
                continue
            try:
                # Split on the first '=' only, since a URL may itself contain '='.
                key, value = readline.split('=', 1)
                returndict[key] = value
            except ValueError:
                print("Please Check your conf %s" % readline)
                sys.exit(1)
    return returndict
```
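To make the conf format concrete, here is a small sketch of the same `key=value` parsing applied to a string rather than a file. The three keys are the ones `main()` reads; the values are placeholders and not the author's real conf, and `parse_conf` is a hypothetical name.

```python
def parse_conf(text):
    """Parse key=value lines into a dict, ignoring blanks and '#' comments."""
    result = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith('#'):
            continue
        # partition splits on the first '=' only, so '=' inside a URL survives.
        key, sep, value = line.partition('=')
        if not sep:
            raise ValueError("bad conf line: %r" % line)
        result[key.strip()] = value.strip()
    return result


# Placeholder values for illustration only.
sample_conf = """# placeholder values, not the real conf
url=http://example.com/index.php?id=1
download_page=3
download_local_files=download_url.txt
"""
conf = parse_conf(sample_conf)
print(conf)
```

Note that every value comes back as a string, which is why the script converts `download_page` with `int()` before using it.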

```python
def GetListURLinfo(sourceurl, title, getpagenumber, total, update_file, headers):
    # Clamp the number of list pages to fetch.
    if total >= 100:
        total = 100
    if total <= 1:
        total = 2
        getpagenumber = total  # assumed to belong to this branch
    signal.signal(signal.SIGALRM, handler)
    for number in range(0, total):
        try:
            signal.alarm(3)  # give each list page 3 seconds
            url = sourceurl + title + '-' + str(random.randint(1, getpagenumber)) + '.html'
            r = requests.get(url, headers=headers)
            pattern = re.compile('<div class="info"><h2>(.*)</a><em></em></h2>')
            r.encoding = chardet.detect(r.content)['encoding']
            allurl = re.findall(pattern, r.text)
            for lineurl in allurl:
                titlename = []  # defined up front so the timeout message can print it
                try:
                    signal.alarm(3)  # give each detail page 3 seconds
                    pattern = re.compile('<a href="(.*)" title')
                    titleurl = re.findall(pattern, lineurl)
                    pattern = re.compile('title="(.*)" target=')
                    titlename = re.findall(pattern, lineurl)
                    GetDownloadURL(sourceurl, titleurl, titlename, update_file, headers)
                except AssertionError:
                    print(lineurl, titlename, "Timeout Error: the request did not finish within 3s")
                finally:
                    signal.alarm(0)
        except AssertionError:
            print("GetListURLinfo Timeout Error")
        finally:
            # Cancel any pending alarm so it cannot fire after the loop.
            signal.alarm(0)
```
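The link and title extraction can also be tried offline. The sketch below runs on a made-up HTML snippet shaped like the markup the script's patterns expect, which is an assumption about the site, and `extract_items` is a hypothetical name. It uses non-greedy groups so that two entries on the same line are not merged into one match, which a greedy `(.*)` would do.

```python
import re

# One pattern captures both the href and the title of each entry.
ITEM_RE = re.compile(r'<a href="(.*?)" title="(.*?)" target=')


def extract_items(html):
    """Return a list of (href, title) tuples found in the list page."""
    return ITEM_RE.findall(html)


# Made-up markup; the real site's HTML may differ.
sample = (
    '<div class="info"><h2><a href="/view/1.html" title="First" '
    'target="_blank">First</a><em></em></h2>'
    '<div class="info"><h2><a href="/view/2.html" title="Second" '
    'target="_blank">Second</a><em></em></h2>'
)
print(extract_items(sample))
```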

```python
def GetTitleInfo(url, down_page, update_file, headers):
    # Pick one of the 8 list categories at random and read its page count.
    title = '/list/' + str(random.randint(1, 8))
    titleurl = url + title + '.html'
    r = requests.get(titleurl, headers=headers)
    r.encoding = chardet.detect(r.content)['encoding']
    # The pager text on the site reads "当前:<current>/<total>页".
    pattern = re.compile(' 当前:.*/(.*)页 ')
    getpagenumber = re.findall(pattern, r.text)
    getpagenumber = " ".join(getpagenumber)
    GetListURLinfo(url, title, int(getpagenumber), int(down_page), update_file, headers)
```
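If the pager text is missing, `int()` on an empty string raises `ValueError` and the whole run stops. A hedged sketch of a more forgiving parse follows; the pager string format is inferred from the script's regex, and `parse_page_count` and its `default` parameter are hypothetical.

```python
import re

# Pager text assumed to look like "当前:1/25页" (current page / total pages).
PAGE_RE = re.compile(r'当前:\s*\d+/(\d+)页')


def parse_page_count(text, default=1):
    """Return the total page count from the pager, or default if not found."""
    match = PAGE_RE.search(text)
    return int(match.group(1)) if match else default


print(parse_page_count(' 当前:1/25页 '))
print(parse_page_count('no pager here'))
```

Falling back to a single page keeps `random.randint(1, getpagenumber)` valid even when the pager cannot be read.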

```python
def write_logs(time, logs):
    # Append a timestamped message to the local 'logs' file.
    # Note: the 'time' parameter shadows the time module inside this function.
    loginfo = str(time) + logs
    try:
        with open('logs', 'a+') as logfile:
            logfile.write(loginfo)
    except OSError:
        print("Write logs error,code:154")


def DeleteHisFiles(update_file):
    # Empty the previous run's output file, if there is one.
    if os.path.isfile(update_file):
        try:
            with open(update_file, 'r+') as download_files:
                download_files.truncate()
        except OSError:
            print("Delete " + update_file + " Error --code:166")
    else:
        print("Build New downfiles")
```

```python
def main():
    headers = {
        "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_5) AppleWebKit 537.36 (KHTML, like Gecko) Chrome",
        "Accept": "text/html,application/xhtml+xml,application/xml; q=0.9,image/webp,*/*;q=0.8",
    }
    readconf = ReadLocalFiles()
    try:
        file_url = readconf['url']
        down_page = readconf['download_page']
        update_file = readconf['download_local_files']
    except KeyError:
        print("Get local conf error,please check it")
        sys.exit(-1)
    DeleteHisFiles(update_file)
    # Follow the site's redirect, if any, and remember the new address.
    untreatedurl = file_url
    treatedurl = gettrueurl(untreatedurl)
    SaveLocalUrl(untreatedurl, treatedurl)
    url = treatedurl
    GetTitleInfo(url, int(down_page), update_file, headers)


if __name__ == "__main__":
    main()
```

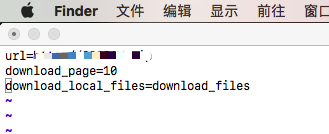

The corresponding main.conf is as follows:

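I wrote this code out of curiosity about crawlers. If you are interested in it, message me privately and I can provide the complete conf. After all, I work in ops too and have a reputation to keep: I never use servers to download movies, haha...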