1. Volume

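Volumes decouple data from images and let containers share data. Keeping data out of the image also keeps the image small.

Kubernetes abstracts storage into an object, the volume, which holds this data.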

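Commonly used volume types:

emptyDir: a local temporary volume. Its data is deleted together with the Pod.

hostPath: a directory on the node. The data stays on that node after the Pod is deleted.

nfs: an NFS shared volume. The data stays on the NFS server after the Pod is deleted.

configmap: configuration files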

Others: https://kubernetes.io/zh-cn/docs/concepts/storage/volumes/

1.1 emptyDir

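When a Pod is assigned to a node, its emptyDir volume is created first. The volume exists for as long as the Pod keeps running on that node, and it is empty when created. The containers in the Pod can read and write files in it. When the Pod is deleted, the emptyDir is deleted permanently. This makes it suitable for unimportant data such as caches or logs.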

```yaml
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /cache
          name: empty-volume
      volumes:
      - name: empty-volume
        emptyDir: {}
```

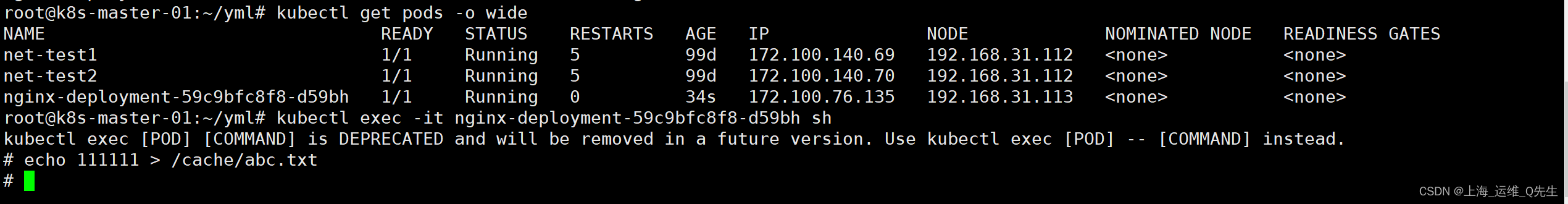

After the Pod is created, write a file to /cache/abc.txt.

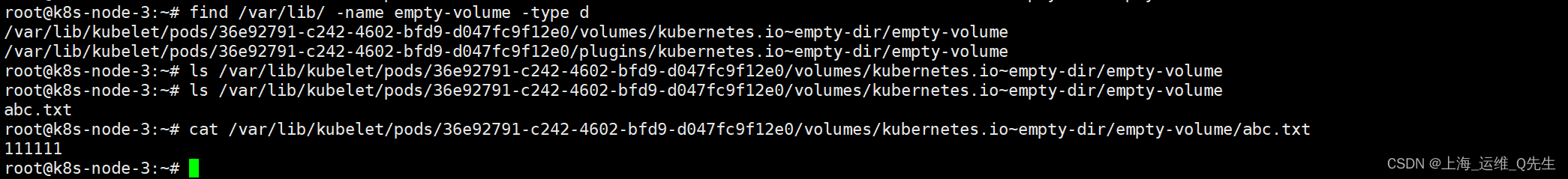

Right after creation the directory is empty. Once the file has been written, its contents can also be read on the node.

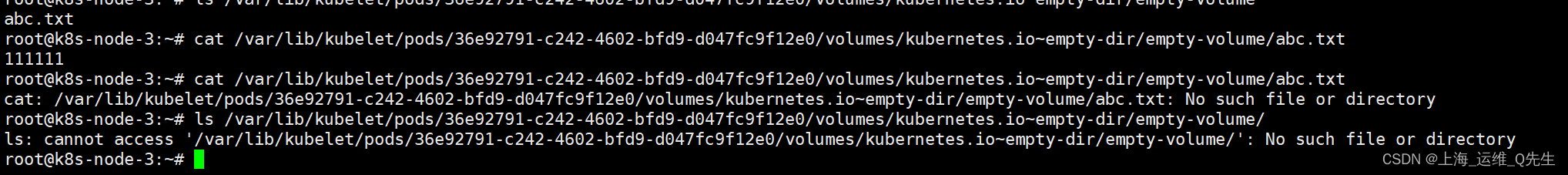

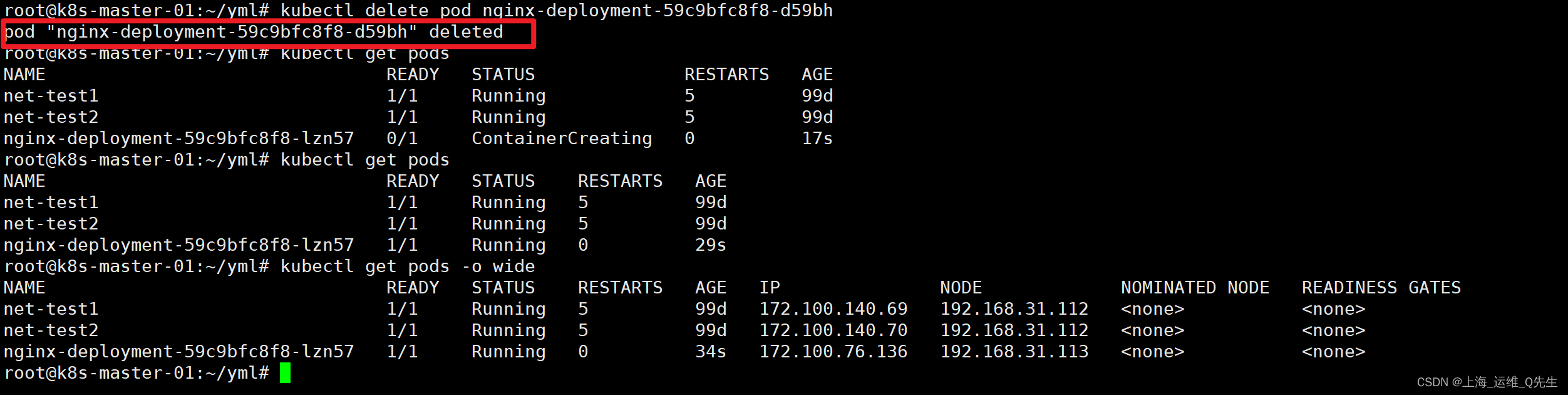

When the Pod is deleted, the emptyDir disappears with it.

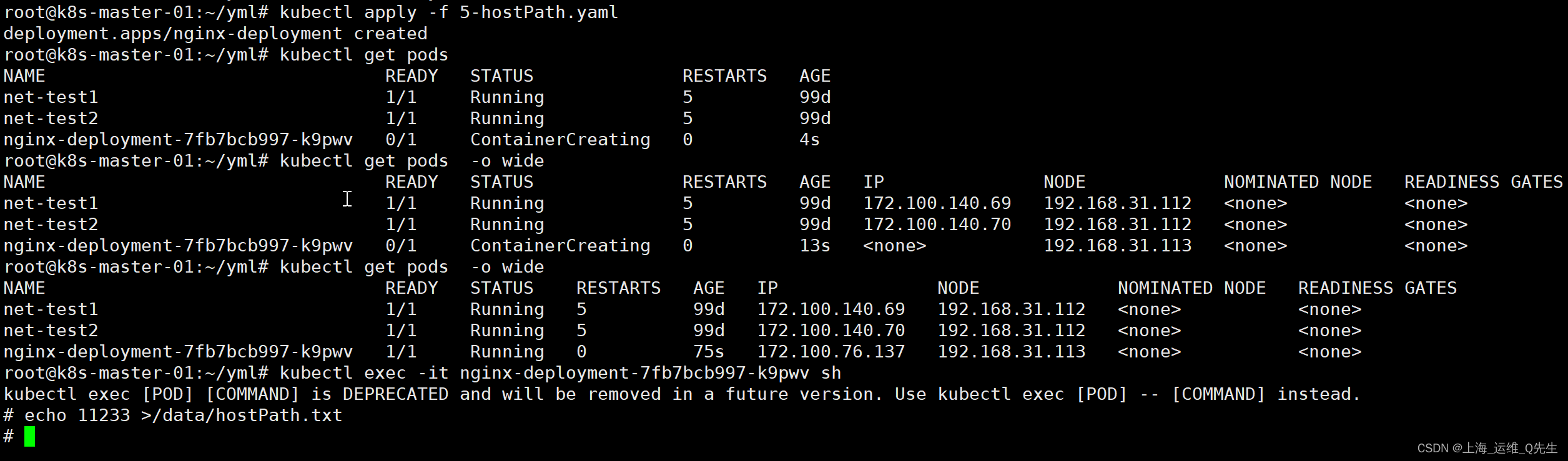

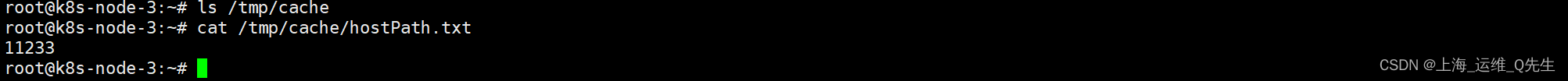
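The introduction mentions sharing data between containers. Mounting one emptyDir into two containers of the same Pod is a common way to do that. Below is a minimal sketch that is not part of the original setup. The Pod name, the busybox writer loop and the `sizeLimit` value are illustrative assumptions.

```yaml
# Hypothetical example: two containers in one Pod share a single emptyDir volume.
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-share-demo      # hypothetical name
spec:
  containers:
  - name: writer
    image: busybox
    # Appends the current time to the shared file every 5 seconds.
    command: ["sh", "-c", "while true; do date >> /shared/index.html; sleep 5; done"]
    volumeMounts:
    - name: shared-data
      mountPath: /shared
  - name: web
    image: nginx
    ports:
    - containerPort: 80
    volumeMounts:
    - name: shared-data
      mountPath: /usr/share/nginx/html   # nginx serves the file written by "writer"
      readOnly: true
  volumes:
  - name: shared-data
    emptyDir:
      sizeLimit: 100Mi           # optional size cap (illustrative value)
```

Both containers see the same directory. As with the Deployment above, the data is gone once the Pod is deleted.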

1.2 hostPath

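This works much like docker's -v: even if the container is deleted, the data under the host path is kept. However, the data lives only on that node. If the Pod is rescheduled to another node, it can no longer see the data, which amounts to data loss.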

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /data
          name: cache-volume
      volumes:
      - name: cache-volume
        hostPath:
          path: /tmp/cache
```

Apply the manifest to create the hostPath volume.
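Because hostPath data stays on a single node, one way to avoid the "node switch" problem is to pin the Pod to that node. The sketch below adds a `nodeSelector` on the well-known `kubernetes.io/hostname` label and sets the hostPath `type` to `DirectoryOrCreate`, so the directory is created if it is missing. It assumes the target node's hostname label is `k8s-node-1` (the node name seen in the NFS test below).

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      nodeSelector:
        kubernetes.io/hostname: k8s-node-1   # assumption: label value of the target node
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /data
          name: cache-volume
      volumes:
      - name: cache-volume
        hostPath:
          path: /tmp/cache
          type: DirectoryOrCreate   # create /tmp/cache on the node if it does not exist
```

The trade-off is that the Pod can no longer be scheduled anywhere else. If that node goes down, the Pod stays Pending.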

1.3 nfs

1.3.1 NFS environment setup

```bash
apt update
apt install nfs-server
mkdir /data/k8s/ -p
echo '/data/k8s *(rw,sync,no_root_squash)' >> /etc/exports
systemctl enable --now nfs-server
systemctl restart nfs-server
```

1.3.2 Verify from a node that the NFS export is reachable

```
root@k8s-node-1:~# apt install nfs-common
root@k8s-node-1:~# showmount -e 192.168.31.109
Export list for 192.168.31.109:
/data/k8s *
root@k8s-node-1:~#
```

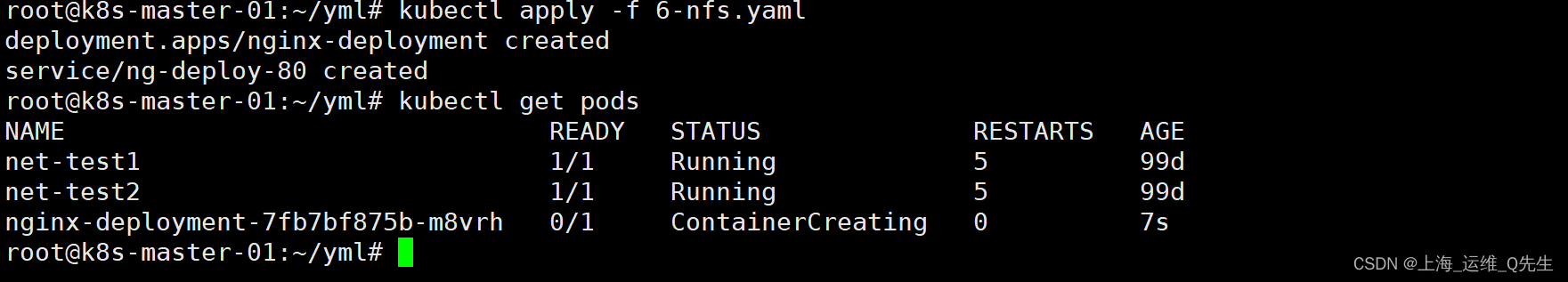

1.3.3 YAML with a single NFS volume

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html/mysite
          name: my-nfs-volume
      volumes:
      - name: my-nfs-volume
        nfs:
          server: 192.168.31.109
          path: /data/k8s
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30016
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80
```

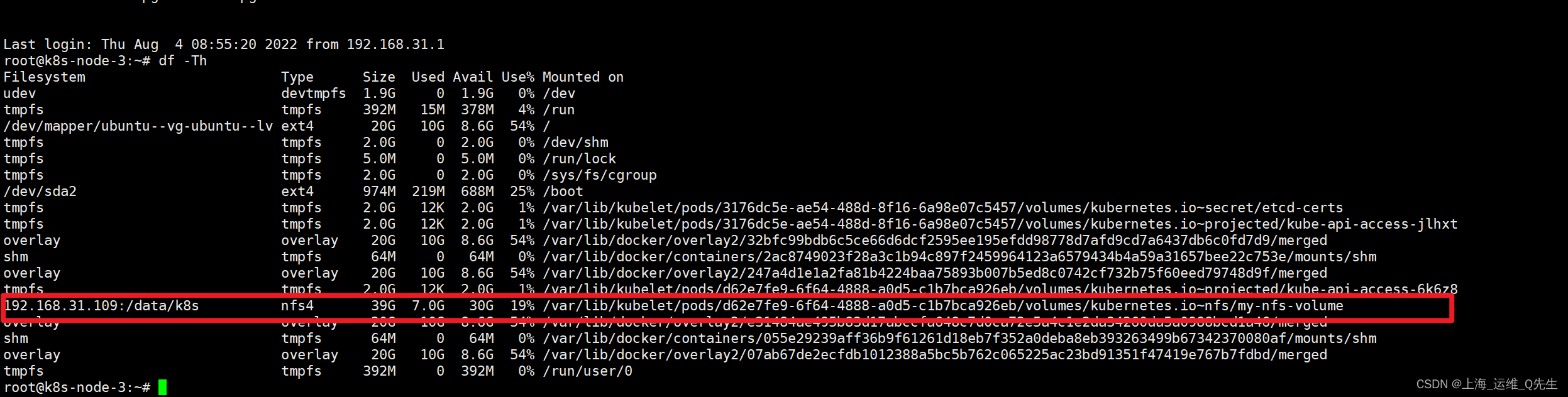

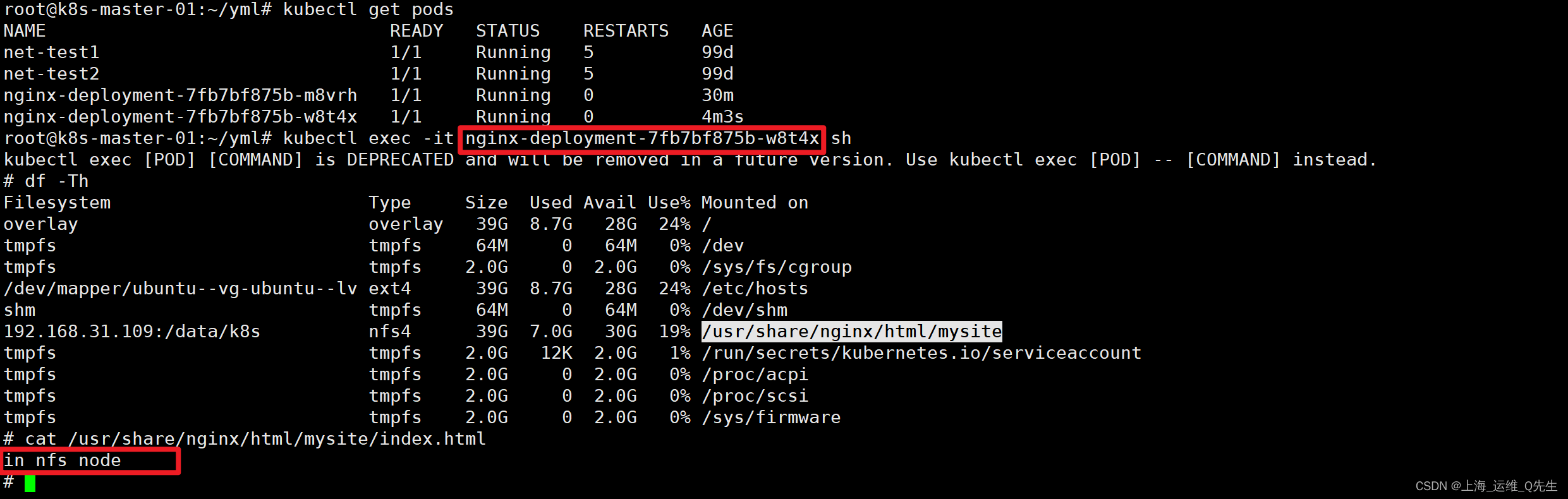

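On the host, the NFS share is first mounted into a per-Pod volume directory managed by kubelet. It is then bind-mounted into the container.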

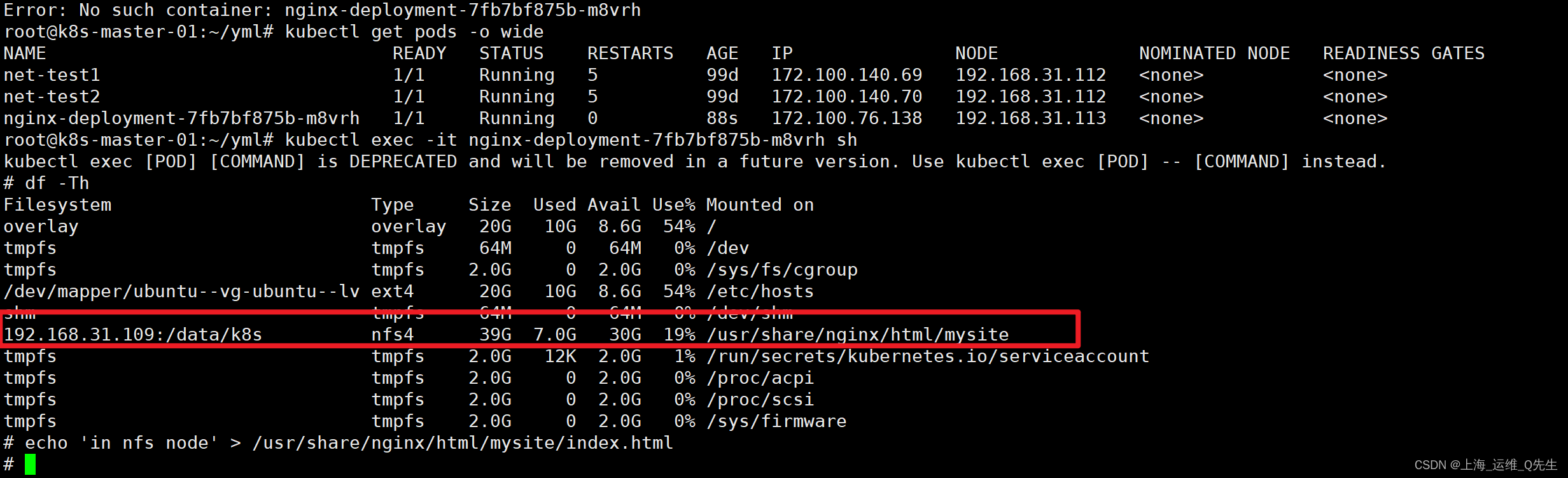

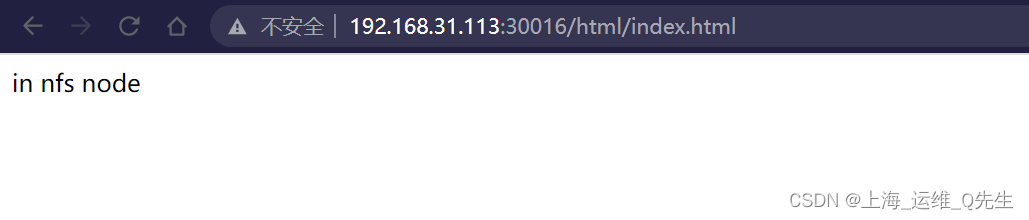

Inside the Pod, the NFS share is already mounted.

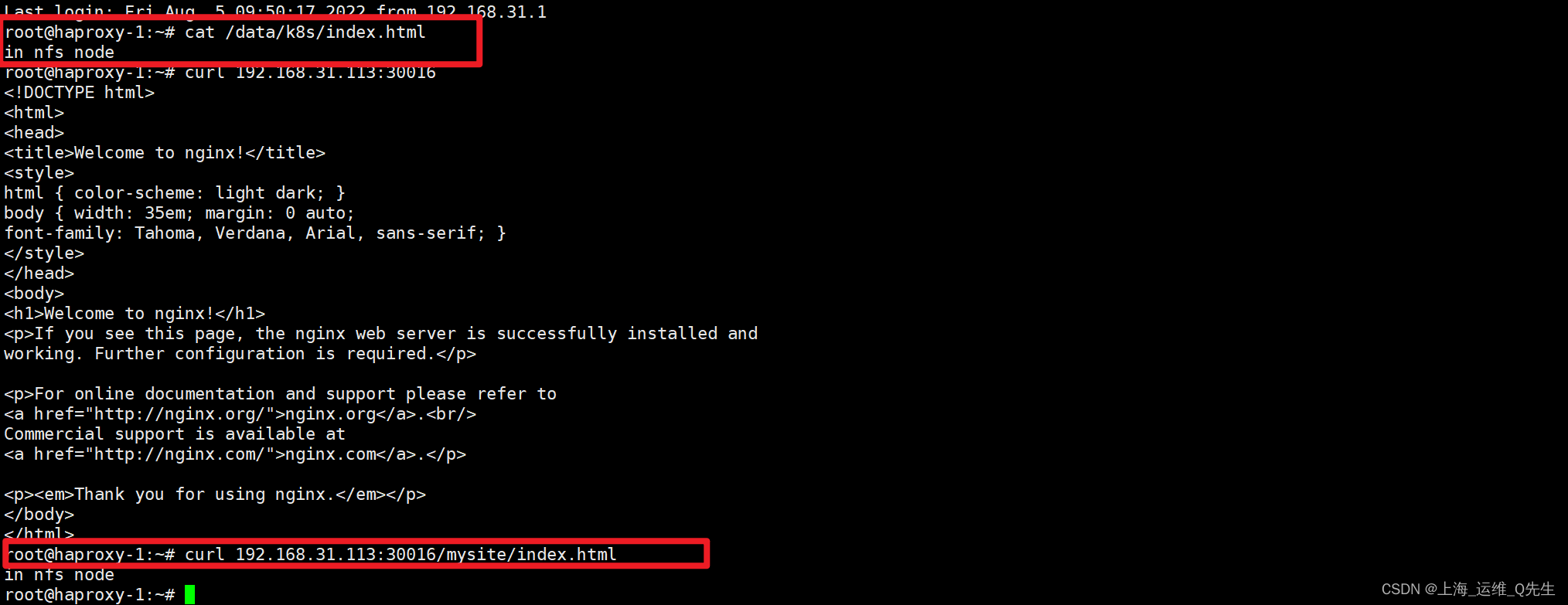

The file is also visible on the NFS server.

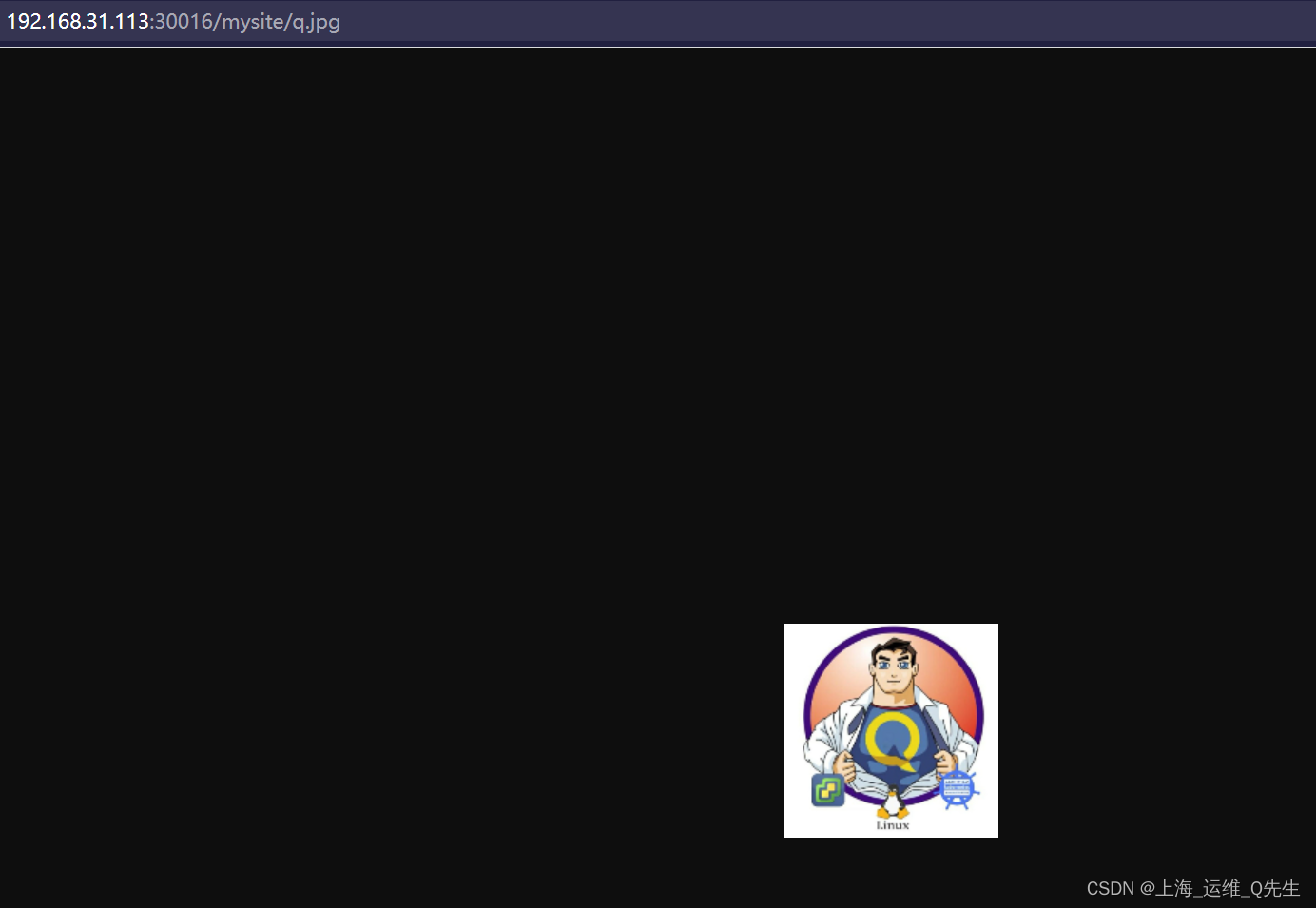

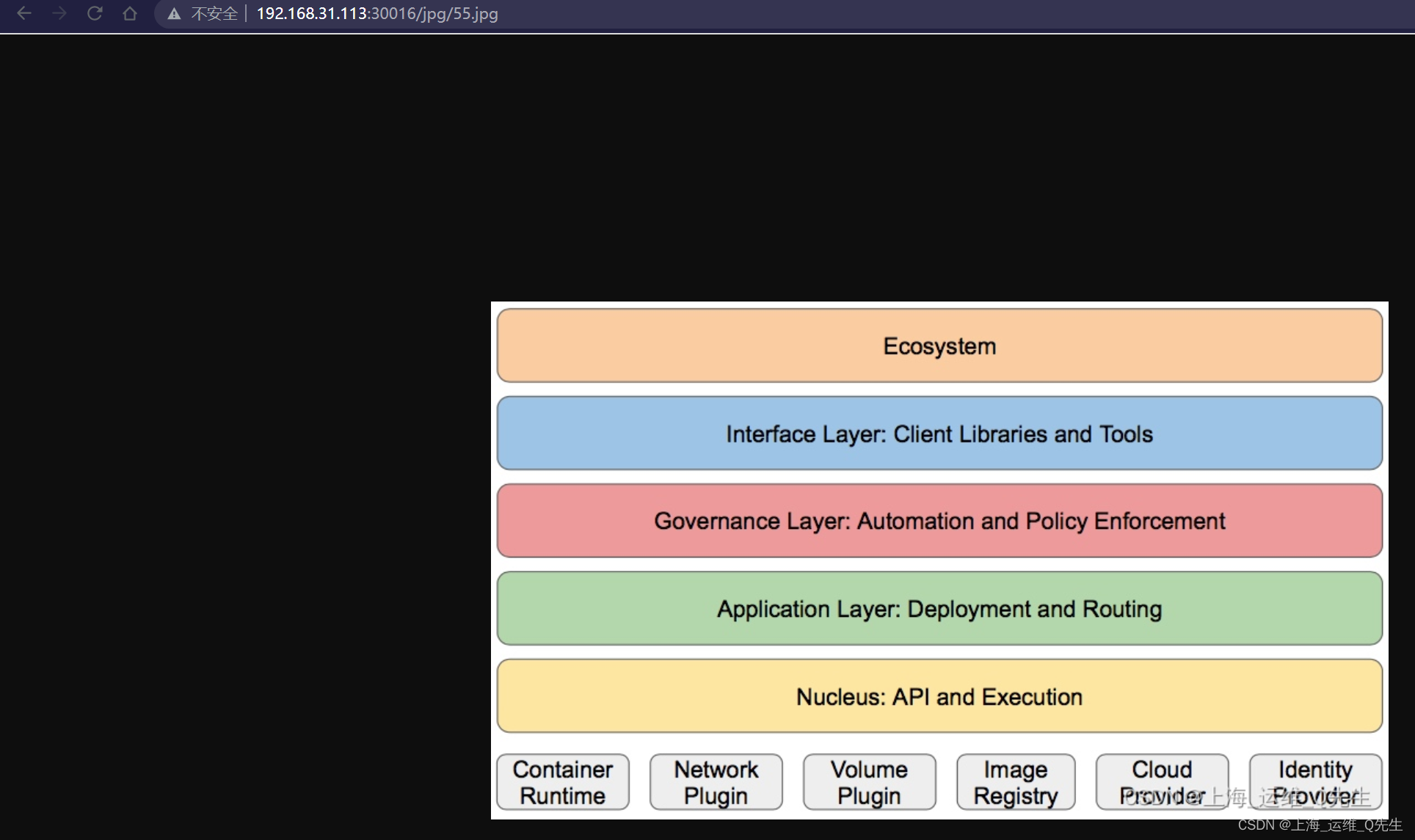

A share like this usually holds images, so let's put an image there and try it.

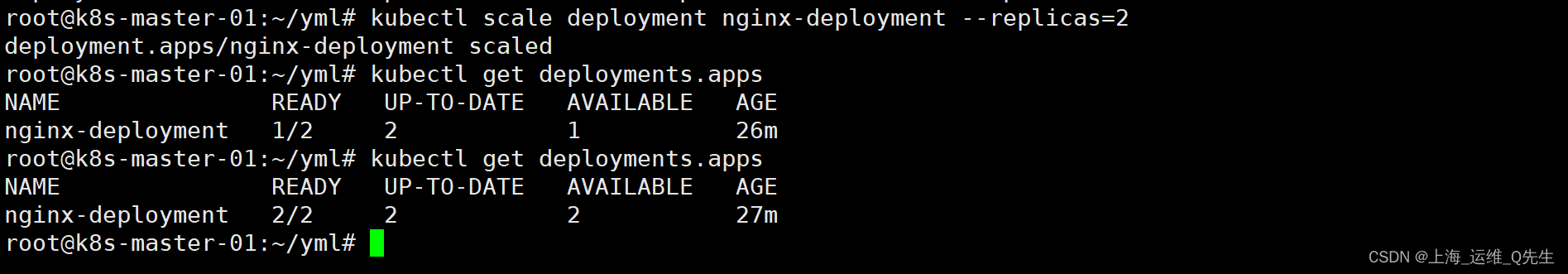

Scale the Deployment to 2 replicas.

The newly created Pod automatically mounts the same share, and its data is exactly the same.
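Once several replicas mount the same export, an nginx that only serves files does not need write access to it. The fragment below is a sketch of the Pod template `spec` from the single-NFS Deployment with read-only mounts added. Only the two `readOnly` lines are new. The scaling step itself can be done with `kubectl scale deployment nginx-deployment --replicas=2` or by editing `replicas` in the manifest.

```yaml
# Fragment: spec.template.spec of the 1.3.3 Deployment, with read-only mounts.
spec:
  containers:
  - name: ng-deploy-80
    image: nginx
    ports:
    - containerPort: 80
    volumeMounts:
    - mountPath: /usr/share/nginx/html/mysite
      name: my-nfs-volume
      readOnly: true      # the container cannot write through this mount
  volumes:
  - name: my-nfs-volume
    nfs:
      server: 192.168.31.109
      path: /data/k8s
      readOnly: true      # the NFS export is mounted read-only
```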

1.3.4 YAML with multiple NFS volumes

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html/html
          name: nfs-volume-html
        - mountPath: /usr/share/nginx/html/jpg
          name: nfs-volume-jpg
        - mountPath: /usr/share/nginx/html/yml
          name: nfs-volume-yml
      volumes:
      - name: nfs-volume-html
        nfs:
          server: 192.168.31.109
          path: /data/k8s
      - name: nfs-volume-jpg
        nfs:
          server: 192.168.31.110
          path: /data/k8s
      - name: nfs-volume-yml
        nfs:
          server: 192.168.31.104
          path: /data/k8s
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30016
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80
```

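Create the /data/k8s directory on each of 192.168.31.109, 192.168.31.110 and 192.168.31.104, and map each one to a different directory in the Pod.

The .yml file is downloaded as a plain file instead of being displayed. This is presumably because nginx's mime.types has no entry for .yml, so nginx falls back to `default_type application/octet-stream`.

The end result is a Pod that mounts three different NFS paths.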
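If the three directories live on one NFS server instead of three, another option is a single volume mounted several times with `subPath`. The sketch below assumes hypothetical subdirectories `html`, `jpg` and `yml` under /data/k8s on 192.168.31.109. It is an alternative layout, not what the original test used.

```yaml
# Fragment: spec.template.spec with one NFS volume split into subdirectories via subPath.
spec:
  containers:
  - name: ng-deploy-80
    image: nginx
    ports:
    - containerPort: 80
    volumeMounts:
    - mountPath: /usr/share/nginx/html/html
      name: nfs-volume
      subPath: html       # hypothetical: /data/k8s/html on the server
    - mountPath: /usr/share/nginx/html/jpg
      name: nfs-volume
      subPath: jpg        # hypothetical: /data/k8s/jpg
    - mountPath: /usr/share/nginx/html/yml
      name: nfs-volume
      subPath: yml        # hypothetical: /data/k8s/yml
  volumes:
  - name: nfs-volume
    nfs:
      server: 192.168.31.109
      path: /data/k8s
```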

1.4 Configmap

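Why it exists: a ConfigMap decouples configuration from the image. You put the configuration into a ConfigMap and reference that ConfigMap from the Pod spec, which injects the configuration into the Pod.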
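Besides being mounted as files, a ConfigMap's keys can also be injected as environment variables with `envFrom`. The following is a minimal sketch. The ConfigMap name `app-env`, the key `LOG_LEVEL` and the busybox Pod are hypothetical.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-env              # hypothetical name
data:
  LOG_LEVEL: "info"          # hypothetical key/value
---
apiVersion: v1
kind: Pod
metadata:
  name: configmap-env-demo   # hypothetical name
spec:
  containers:
  - name: demo
    image: busybox
    # Prints the injected variable, then keeps the container running.
    command: ["sh", "-c", "echo LOG_LEVEL=$LOG_LEVEL; sleep 3600"]
    envFrom:
    - configMapRef:
        name: app-env        # every key in app-env becomes an environment variable
```

Environment variables are read only when the container starts. File mounts like the one below suit whole configuration files such as an nginx server block.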

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  default: |
    server {
      listen 80;
      server_name www.mysite.com;
      index index.html;
      location / {
        root /data/nginx/html;
        if (!-e $request_filename) {
          rewrite ^/(.*) /index.html last;
        }
      }
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /data/nginx/html
          name: my-nfs-volume
        - name: nginx-config
          mountPath: /etc/nginx/conf.d/
      volumes:
      - name: nginx-config
        configMap:
          name: nginx-config
          items:
          - key: default
            path: mysite.conf
      - name: my-nfs-volume
        nfs:
          server: 192.168.31.109
          path: /data/k8sdata
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30019
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80
```

Requesting a page that does not exist now returns the home page. This shows that the ConfigMap configuration has taken effect.

```
root@k8s-master-01:~/yml# curl 192.168.31.113:30019
in nfs node
root@k8s-master-01:~/yml# curl 192.168.31.113:30019/dak.dkaj
in nfs node
root@k8s-master-01:~/yml# curl 192.168.31.113:30019/dak.jpg
in nfs node
```

Next, look at the files inside the Pod. The content of /etc/nginx/conf.d/mysite.conf is exactly the `default` key of the ConfigMap.

```
root@k8s-master-01:~/yml# kubectl exec -it nginx-deployment-7c94b5fcb7-rnj7g sh
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
# cat /etc/nginx/nginx.conf
user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log notice;
pid /var/run/nginx.pid;
events {
    worker_connections 1024;
}
http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log /var/log/nginx/access.log main;
    sendfile on;
    #tcp_nopush on;
    keepalive_timeout 65;
    #gzip on;
    include /etc/nginx/conf.d/*.conf;
}
# cat /etc/nginx/conf.d/mysite.conf
server {
  listen 80;
  server_name www.mysite.com;
  index index.html;
  location / {
    root /data/nginx/html;
    if (!-e $request_filename) {
      rewrite ^/(.*) /index.html last;
    }
  }
}
```
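The 1.4 manifest mounts the ConfigMap over the whole /etc/nginx/conf.d/ directory. As a result, any files the nginx image ships there (such as its default.conf) are hidden inside the Pod.

To add mysite.conf while keeping the rest of that directory, you can mount a single file with `subPath`. Below is a sketch of the replacement `volumeMounts` entry. The `volumes` section stays as in 1.4. The Kubernetes documentation notes that a ConfigMap mounted via `subPath` does not receive updates when the ConfigMap changes.

```yaml
# Fragment: replaces the nginx-config entry in the container's volumeMounts.
volumeMounts:
- mountPath: /data/nginx/html
  name: my-nfs-volume
- name: nginx-config
  mountPath: /etc/nginx/conf.d/mysite.conf   # mount one file, not the whole directory
  subPath: mysite.conf                       # the file name produced by items[].path
```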

1.5 pv/pvc

PersistentVolume/PersistentVolumeClaim

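PVs and PVCs decouple Pods from storage. When the storage changes, you only modify the PV and PVC definitions, not the Pod. They also separate permissions between storage administration and application deployment.

A PV connects to the backing storage. It is a cluster-wide resource and does not take a namespace.

A PVC connects to the Pod and must be created in a namespace.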
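The sketch below reuses the NFS export from 1.3 as the backing storage to show the split. The PV describes the storage and has no namespace. The PVC requests storage in a namespace, and the Pod only references the PVC by name. The names, the 5Gi size, the `Retain` policy and the `default` namespace are illustrative assumptions. `storageClassName: ""` on the PVC makes it bind to a statically created PV instead of falling back to a default StorageClass.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-demo              # hypothetical; cluster-scoped, no namespace
spec:
  capacity:
    storage: 5Gi                 # illustrative size
  accessModes:
  - ReadWriteMany                # NFS can be mounted read-write by many nodes
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 192.168.31.109
    path: /data/k8s
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc-demo             # hypothetical
  namespace: default             # PVCs are namespaced
spec:
  storageClassName: ""           # bind to a static PV, no dynamic provisioning
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: pvc-demo                 # hypothetical
  namespace: default             # must match the PVC's namespace
spec:
  containers:
  - name: web
    image: nginx
    volumeMounts:
    - mountPath: /usr/share/nginx/html
      name: data
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: nfs-pvc-demo    # the Pod knows only the claim, not the NFS details
```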

This part will be covered in more detail later, together with Ceph.