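Sometimes we need to add a new layer to Caffe. The project I am currently working on needs an L2 Normalization layer, which Caffe surprisingly does not provide, so I had to add one myself.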

The most important part is therefore implementing Forward_cpu (Forward_gpu) and Backward_cpu (Backward_gpu).

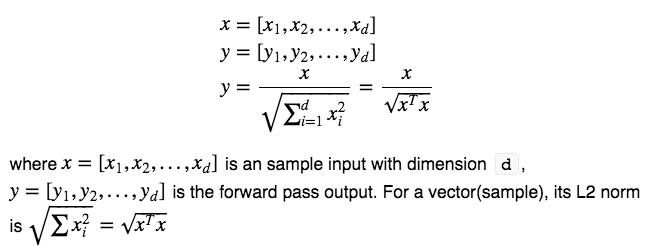

1. L2 Normalization Forward Pass

1.1 Formula Derivation
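As a compact restatement of what the forward code computes, here is a sketch in LaTeX. The symbols $x$ (one sample, i.e. one row of length $d$ in `bottom`) and $y$ (the matching row of `top`) are notation introduced here for illustration, not taken from the original figures.

$$
y = \frac{x}{\lVert x \rVert_2}, \qquad
y_j = \frac{x_j}{\sqrt{\sum_{k=1}^{d} x_k^2}}, \quad j = 1, \dots, d .
$$

Each of the $n$ samples in the batch is normalized independently, so every output row has unit L2 norm (assuming $x \neq 0$).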

1.2 Implementation

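    template <typename Dtype>
    void NormalizationLayer<Dtype>::Forward_gpu(const vector<Blob<Dtype>*>& bottom,
        const vector<Blob<Dtype>*>& top) {
      const Dtype* bottom_data = bottom[0]->gpu_data();
      Dtype* top_data = top[0]->mutable_gpu_data();
      // squared_ is a member Blob, assumed to have the same shape as bottom[0].
      Dtype* squared_data = squared_.mutable_gpu_data();
      Dtype normsqr;
      int n = bottom[0]->num();        // number of samples in the batch
      int d = bottom[0]->count() / n;  // number of elements per sample
      // Element-wise square of the whole input.
      caffe_gpu_powx(n*d, bottom_data, Dtype(2), squared_data);
      for (int i=0; i<n; ++i) {
        // The squares are non-negative, so their absolute sum is ||x||^2.
        caffe_gpu_asum<Dtype>(d, squared_data+i*d, &normsqr);
        // top = bottom / ||x||; an all-zero sample is not guarded against.
        caffe_gpu_scale<Dtype>(d, Dtype(pow(normsqr, -0.5)), bottom_data+i*d, top_data+i*d);
      }
    }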
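To sanity-check the GPU kernel calls above, it can help to compare against a plain CPU reference. The following is a minimal sketch in standalone C++ that does not depend on Caffe; the function name `l2_normalize_rows` and the use of `std::vector` are assumptions made for illustration and are not part of the original layer.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper, not part of Caffe: L2-normalizes each of the n rows
// (each of length d) of a row-major buffer, mirroring the loop in Forward_gpu.
template <typename Dtype>
std::vector<Dtype> l2_normalize_rows(const std::vector<Dtype>& bottom,
                                     int n, int d) {
  assert(n > 0 && d > 0);
  assert(bottom.size() == static_cast<std::size_t>(n) * d);
  std::vector<Dtype> top(bottom.size());
  for (int i = 0; i < n; ++i) {
    const Dtype* x = bottom.data() + i * d;
    // Sum of squares of this row, i.e. ||x||^2.
    Dtype normsqr = 0;
    for (int j = 0; j < d; ++j) normsqr += x[j] * x[j];
    // Like the GPU code, this does not guard against an all-zero row.
    const Dtype scale = Dtype(1) / std::sqrt(normsqr);
    for (int j = 0; j < d; ++j) top[i * d + j] = scale * x[j];
  }
  return top;
}
```

For example, calling it on the two rows (3, 4) and (0, 5) with `n = 2, d = 2` gives rows close to (0.6, 0.8) and (0, 1), up to floating-point rounding.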

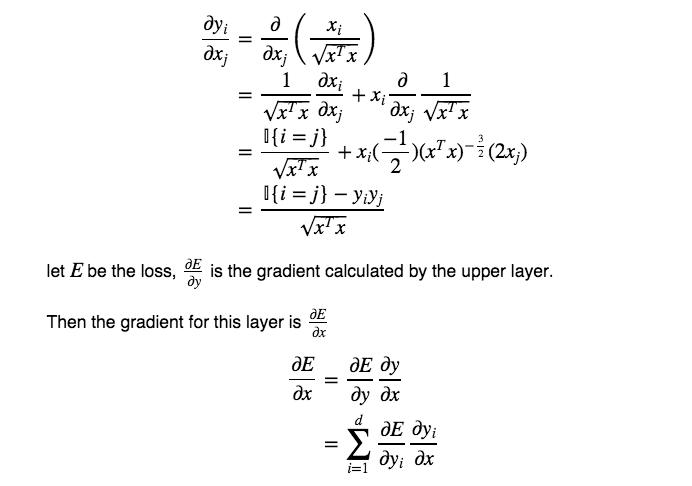

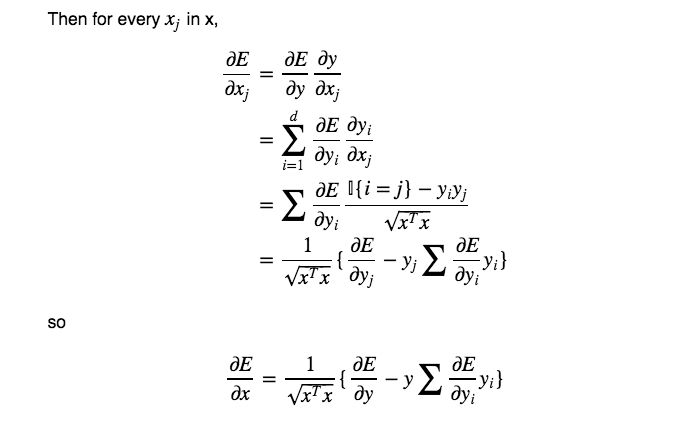

2. L2 Normalization Backward Propagation

2.1 Formula Derivation

First, consider the gradient of the layer's output with respect to its input, ignoring the gradient coming from the upper layer:
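The derivation can be sketched in LaTeX as follows. The notation is introduced here for illustration: $x$ is one input sample, $y = x / \lVert x \rVert_2$ is its normalized output, $g = \partial L / \partial y$ is the gradient from the upper layer (`top_diff`), and $\delta_{jk}$ is the Kronecker delta.

$$
\frac{\partial y_j}{\partial x_k}
= \frac{\delta_{jk}}{\lVert x \rVert_2} - \frac{x_j x_k}{\lVert x \rVert_2^{3}}
= \frac{\delta_{jk} - y_j y_k}{\lVert x \rVert_2}
$$

Applying the chain rule with the upper-layer gradient then gives

$$
\frac{\partial L}{\partial x_k}
= \sum_{j} g_j \, \frac{\partial y_j}{\partial x_k}
= \frac{g_k - y_k \,(y \cdot g)}{\lVert x \rVert_2} .
$$

Read this way, the result is the upper-layer gradient with its component along $y$ removed, then divided by the input norm.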

2.2 Implementation

    template <typename Dtype>
    void NormalizationLayer<Dtype>::Backward_gpu(const vector<Blob<Dtype>*>& top,
        const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom) {
      const Dtype* top_diff = top[0]->gpu_diff();
      const Dtype* top_data = top[0]->gpu_data();
      const Dtype* bottom_data = bottom[0]->gpu_data();
      Dtype* bottom_diff = bottom[0]->mutable_gpu_diff();
      int n = top[0]->num();        // number of samples in the batch
      int d = top[0]->count() / n;  // number of elements per sample
      Dtype a;
      for (int i=0; i<n; ++i) {
        // a = y . g (normalized output dotted with the upper-layer gradient)
        caffe_gpu_dot(d, top_data+i*d, top_diff+i*d, &a);
        // bottom_diff = a * y
        caffe_gpu_scale(d, a, top_data+i*d, bottom_diff+i*d);
        // bottom_diff = g - a * y
        caffe_gpu_sub(d, top_diff+i*d, bottom_diff+i*d, bottom_diff+i*d);
        // a = x . x = ||x||^2
        caffe_gpu_dot(d, bottom_data+i*d, bottom_data+i*d, &a);
        // bottom_diff = (g - a * y) / ||x||
        caffe_gpu_scale(d, Dtype(pow(a, -0.5)), bottom_diff+i*d, bottom_diff+i*d);
      }
    }
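The same steps can be written as a plain CPU reference, which is handy for checking the GPU version on small inputs. This is a minimal standalone sketch that does not depend on Caffe; the function name `l2_normalize_backward` and the `std::vector` interface are assumptions made for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper, not part of Caffe: computes bottom_diff for row-wise
// L2 normalization, following the same steps as the Backward_gpu loop.
template <typename Dtype>
std::vector<Dtype> l2_normalize_backward(const std::vector<Dtype>& bottom,
                                         const std::vector<Dtype>& top_diff,
                                         int n, int d) {
  assert(n > 0 && d > 0);
  assert(bottom.size() == static_cast<std::size_t>(n) * d);
  assert(top_diff.size() == bottom.size());
  std::vector<Dtype> bottom_diff(bottom.size());
  for (int i = 0; i < n; ++i) {
    const Dtype* x = bottom.data() + i * d;
    const Dtype* g = top_diff.data() + i * d;
    Dtype* dx = bottom_diff.data() + i * d;
    // ||x||^2 and 1 / ||x|| for this row (no guard against an all-zero row).
    Dtype normsqr = 0;
    for (int j = 0; j < d; ++j) normsqr += x[j] * x[j];
    const Dtype inv_norm = Dtype(1) / std::sqrt(normsqr);
    // a = y . g, where y = x / ||x|| is the normalized output.
    Dtype a = 0;
    for (int j = 0; j < d; ++j) a += (x[j] * inv_norm) * g[j];
    // dx = (g - a * y) / ||x||
    for (int j = 0; j < d; ++j) {
      dx[j] = (g[j] - a * x[j] * inv_norm) * inv_norm;
    }
  }
  return bottom_diff;
}
```

One useful property to check: because the component of the gradient along the input direction is removed, each row of the result should be orthogonal to the corresponding input row. For the input (3, 4) with upper-layer gradient (1, 0), the result is approximately (0.128, -0.096).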

The full set of changes is available at the following link:

https://github.com/freesouls/caffe/tree/master/src/caffe/layers