CamVid Dataset

Official site: http://mi.eng.cam.ac.uk/research/projects/VideoRec/CamVid/

Class labels: Object Recognition in Video Dataset (cam.ac.uk)

1. Introduction

1.1 Download

CamVid (Cambridge-Driving Labeled Video Database) | Kaggle

1.1 简介

CamVid全称:The Cambridge-driving Labeled Video Database,该数据集由剑桥大学工程系于 2008 年发布,相关论文有《Segmentation and Recognition Using Structure from Motion Point Clouds》,是第一个具有目标类别语义标签的视频集合。数据库提供32个ground truth语义标签,将每个像素与语义类别之一相关联。该数据库解决了对实验数据的需求,以定量评估新兴算法。数据是从驾驶汽车的角度拍摄的,驾驶场景增加了观察目标的数量和异质性。

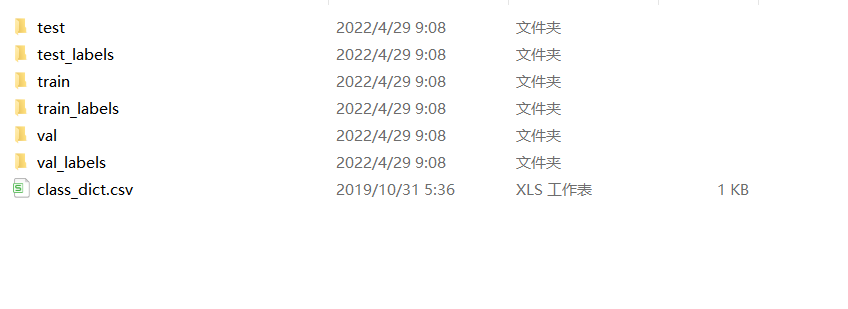

1.2 格式介绍

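The contents of class_dict.csv are listed below.

Of the 32 labelled classes, 11 are used for training here. Together with the background (void) class, that makes 12 classes.

The class_11 column marks membership in this 11-class subset: 1 means the class is part of it, 0 means it is not and is treated as background.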

| name | r | g | b | class_11 |
|---|---|---|---|---|
| Animal | 64 | 128 | 64 | 0 |
| Archway | 192 | 0 | 128 | 0 |
| Bicyclist | 0 | 128 | 192 | 1 |
| Bridge | 0 | 128 | 64 | 0 |
| Building | 128 | 0 | 0 | 1 |
| Car | 64 | 0 | 128 | 1 |
| CartLuggagePram | 64 | 0 | 192 | 0 |
| Child | 192 | 128 | 64 | 0 |
| Column_Pole | 192 | 192 | 128 | 1 |
| Fence | 64 | 64 | 128 | 1 |
| LaneMkgsDriv | 128 | 0 | 192 | 0 |
| LaneMkgsNonDriv | 192 | 0 | 64 | 0 |
| Misc_Text | 128 | 128 | 64 | 0 |
| MotorcycleScooter | 192 | 0 | 192 | 0 |
| OtherMoving | 128 | 64 | 64 | 0 |
| ParkingBlock | 64 | 192 | 128 | 0 |
| Pedestrian | 64 | 64 | 0 | 1 |
| Road | 128 | 64 | 128 | 1 |
| RoadShoulder | 128 | 128 | 192 | 0 |
| Sidewalk | 0 | 0 | 192 | 1 |
| SignSymbol | 192 | 128 | 128 | 1 |
| Sky | 128 | 128 | 128 | 1 |
| SUVPickupTruck | 64 | 128 | 192 | 0 |
| TrafficCone | 0 | 0 | 64 | 0 |
| TrafficLight | 0 | 64 | 64 | 0 |
| Train | 192 | 64 | 128 | 0 |
| Tree | 128 | 128 | 0 | 1 |
| Truck_Bus | 192 | 128 | 192 | 0 |
| Tunnel | 64 | 0 | 64 | 0 |
| VegetationMisc | 192 | 192 | 0 | 0 |
| Void | 0 | 0 | 0 | 0 |
| Wall | 64 | 192 | 0 | 0 |
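
As a minimal sketch of how the class_11 column can be turned into training indices, the snippet below parses a three-row excerpt of the table with Python's standard csv module. The names SAMPLE_CSV, VOID_INDEX and build_colour_to_index are illustrative assumptions and are not part of the dataset. Index 11 for classes outside the subset follows the 12-class convention used in these notes.

```python
import csv
import io

# Hypothetical three-row excerpt of class_dict.csv, for illustration only.
SAMPLE_CSV = """name,r,g,b,class_11
Animal,64,128,64,0
Bicyclist,0,128,192,1
Building,128,0,0,1
"""

VOID_INDEX = 11  # assumed index shared by every class outside the 11-class subset


def build_colour_to_index(csv_text):
    """Map each (r, g, b) colour to a training index in the range 0..11."""
    mapping = {}
    next_index = 0
    for row in csv.DictReader(io.StringIO(csv_text)):
        colour = (int(row["r"]), int(row["g"]), int(row["b"]))
        if int(row["class_11"]) == 1:
            # Subset classes are numbered in the order they appear in the file.
            mapping[colour] = next_index
            next_index += 1
        else:
            mapping[colour] = VOID_INDEX
    return mapping


colour_to_index = build_colour_to_index(SAMPLE_CSV)
print(colour_to_index)
```

Because the indices depend on row order, the CSV rows should not be reordered between training and evaluation.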

2. Building the dataloader

import glob
import os
from torchvision import transforms
import torch.nn as nn
import torch
from torch.nn import functional as F
from PIL import Image
import numpy as np
import pandas as pd
import random
import numbers
import torchvision
import matplotlib.pyplot as plt
from torch.utils.data import DataLoader, Dataset
from torchvision.transforms import InterpolationMode

def bool_to_num(num):
    number = np.zeros(num.shape)
    height = num.shape[0]
    width = num.shape[1]
    # channel = num.shape[2]
    for h in range(height):
        for w in range(width):
            # for c in range(channel):
            if num[h][w] == True:
                number[h][w] = 1
            else:
                number[h][w] = 0
    return number

def poly_lr_scheduler(optimizer, init_lr, iter, lr_decay_iter=1,
                      max_iter=300, power=0.9):
    """Polynomial decay of learning rate
        :param init_lr is base learning rate
        :param iter is a current iteration
        :param lr_decay_iter how frequently decay occurs, default is 1
        :param max_iter is number of maximum iterations
        :param power is a polynomial power
    """
    # if iter % lr_decay_iter or iter > max_iter:
    #     return optimizer
    lr = init_lr * (1 - iter / max_iter) ** power
    # Only the first parameter group is updated.
    optimizer.param_groups[0]['lr'] = lr
    return lr
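
To make the decay rule concrete, here is a small standalone sketch of the same formula, lr = init_lr * (1 - iter / max_iter) ** power, evaluated at the start, middle and end of training. The helper name poly_lr and the base rate 0.01 are assumptions chosen for illustration. No optimizer is involved.

```python
def poly_lr(init_lr, iteration, max_iter=300, power=0.9):
    """Return the polynomially decayed learning rate for one iteration."""
    return init_lr * (1 - iteration / max_iter) ** power


# The rate starts at init_lr and falls to zero at max_iter.
for iteration in (0, 150, 300):
    print(iteration, poly_lr(0.01, iteration))
```

With power below 1 the curve stays above a straight line, so the rate drops slowly at first and faster near the end.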

def get_label_info(csv_path):
    # return label -> {label_name: [r_value, g_value, b_value, class_11]}
    ann = pd.read_csv(csv_path)
    label = {}
    for iter, row in ann.iterrows():
        label_name = row['name']
        r = row['r']
        g = row['g']
        b = row['b']
        class_11 = row['class_11']
        label[label_name] = [int(r), int(g), int(b), class_11]
    return label

def get_label_info_2():
    return {
        'Animal': [64, 128, 64, 0],
        'Archway': [192, 0, 128, 0],
        'Bicyclist': [0, 128, 192, 1],
        'Bridge': [0, 128, 64, 0],
        'Building': [128, 0, 0, 1],
        'Car': [64, 0, 128, 1],
        'CartLuggagePram': [64, 0, 192, 0],
        'Child': [192, 128, 64, 0],
        'Column_Pole': [192, 192, 128, 1],
        'Fence': [64, 64, 128, 1],
        'LaneMkgsDriv': [128, 0, 192, 0],
        'LaneMkgsNonDriv': [192, 0, 64, 0],
        'Misc_Text': [128, 128, 64, 0],
        'MotorcycleScooter': [192, 0, 192, 0],
        'OtherMoving': [128, 64, 64, 0],
        'ParkingBlock': [64, 192, 128, 0],
        'Pedestrian': [64, 64, 0, 1],
        'Road': [128, 64, 128, 1],
        'RoadShoulder': [128, 128, 192, 0],
        'Sidewalk': [0, 0, 192, 1],
        'SignSymbol': [192, 128, 128, 1],
        'Sky': [128, 128, 128, 1],
        'SUVPickupTruck': [64, 128, 192, 0],
        'TrafficCone': [0, 0, 64, 0],
        'TrafficLight': [0, 64, 64, 0],
        'Train': [192, 64, 128, 0],
        'Tree': [128, 128, 0, 1],
        'Truck_Bus': [192, 128, 192, 0],
        'Tunnel': [64, 0, 64, 0],
        'VegetationMisc': [192, 192, 0, 0],
        'Void': [0, 0, 0, 0],
        'Wall': [64, 192, 0, 0]
    }

def one_hot_it(label, label_info):
    """
    Convert an RGB label image into a map of class indices.

    Args:
        label: RGB label image as an array of shape [H, W, 3].
        label_info: dict mapping each class name to [r, g, b, class_11].

    Returns:
        Array of shape [H, W] holding, per pixel, the position of its class in label_info.
    """
    # return semantic_map -> [H, W]
    semantic_map = np.zeros(label.shape[:-1])
    for index, info in enumerate(label_info):
        # Compare only the RGB part; the fourth entry is the class_11 flag
        # and would break broadcasting against a 3-channel image.
        color = label_info[info][:3]
        equality = np.equal(label, color)
        class_map = np.all(equality, axis=-1)
        semantic_map[class_map] = index
    return semantic_map

def one_hot_it_v11(label, label_info):
    """
    Convert an RGB label image into a class-index map over the 11-class subset.

    Args:
        label: RGB label image as an array of shape [H, W, 3].
        label_info: dict mapping each class name to [r, g, b, class_11].

    Returns:
        Array of shape [H, W] with values 0..10 for the 11 classes and 11 for void.
    """
    # return semantic_map -> [H, W]
    semantic_map = np.zeros(label.shape[:-1])
    # from 0 to 11, and 11 means void
    class_index = 0
    for index, info in enumerate(label_info):
        color = label_info[info][:3]
        class_11 = label_info[info][3]
        if class_11 == 1:
            # The class belongs to the 11-class subset: assign its own index.
            equality = np.equal(label, color)
            class_map = np.all(equality, axis=-1)
            semantic_map[class_map] = class_index
            class_index += 1
        else:
            # The class is outside the subset: treat it as background (void).
            equality = np.equal(label, color)
            class_map = np.all(equality, axis=-1)
            semantic_map[class_map] = 11
    return semantic_map
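
The colour-matching step can be checked on a tiny input. The sketch below applies the same rule to a hypothetical 2x2 label image and a three-entry excerpt of the label table. Both are made up for illustration, and the result is stored as an integer array rather than the float array used above.

```python
import numpy as np

# Hypothetical excerpt of the label table: name -> [r, g, b, class_11].
label_info = {
    "Bicyclist": [0, 128, 192, 1],
    "Building": [128, 0, 0, 1],
    "Void": [0, 0, 0, 0],
}

# A 2x2 RGB label image: Building, Bicyclist / Void, Building.
label = np.array(
    [[[128, 0, 0], [0, 128, 192]],
     [[0, 0, 0], [128, 0, 0]]],
    dtype=np.uint8,
)

semantic_map = np.zeros(label.shape[:-1], dtype=np.int64)
class_index = 0
for name, (r, g, b, class_11) in label_info.items():
    # True where all three channels match this class colour.
    class_map = np.all(label == np.array([r, g, b]), axis=-1)
    if class_11 == 1:
        semantic_map[class_map] = class_index
        class_index += 1
    else:
        semantic_map[class_map] = 11  # background / void

print(semantic_map)
```

Bicyclist is the first subset class and gets index 0, Building gets 1, and the Void pixel is mapped to 11.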

def one_hot_it_v11_dice(label, label_info):
    # return semantic_map -> [H, W, class_num]
    semantic_map = []
    void = np.zeros(label.shape[:2])
    dis_all = np.zeros(label.shape[:2])
    for index, info in enumerate(label_info):
        color = label_info[info][:3]
        class_11 = label_info[info][3]
        if class_11 == 1:
            equality = np.equal(label, color)
            class_map = np.all(equality, axis=-1)
            dis_all[class_map] = 1
            semantic_map.append(class_map)
        else:
            equality = np.equal(label, color)
            class_map = np.all(equality, axis=-1)
            # plt.imshow(class_map)
            # plt.show()
            void[class_map] = 1
    # plt.imshow(dis_all)
    # plt.show()
    # plt.imshow(void)
    # plt.show()
    semantic_map.append(void)
    semantic_map = np.stack(semantic_map, axis=-1).astype(np.float32)
    return semantic_map

def reverse_one_hot(image):
    """
    Convert a one-hot encoded tensor of shape [num_classes, H, W] into a
    2D tensor with a single channel, where each pixel value is the
    class key.

    # Arguments
        image: The one-hot format image

    # Returns
        A 2D array with the same width and height as the input, but
        with a depth size of 1, where each pixel value is the classified
        class key.
    """
    image = image.permute(1, 2, 0)
    x = torch.argmax(image, dim=-1)
    return x

def colour_code_segmentation(image, label_values):
    """
    Given a 1-channel array of class keys, colour code the segmentation results.

    # Arguments
        image: single channel array where each value represents the class key.
        label_values

    # Returns
        Colour coded image for segmentation visualization
    """
    label_values = [label_values[key][:3] for key in label_values if label_values[key][3] == 1]
    label_values.append([0, 0, 0])
    colour_codes = np.array(label_values)
    x = colour_codes[image.astype(int)]
    return x
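
The colour coding relies on NumPy integer-array indexing: indexing a palette of shape [num_classes, 3] with an [H, W] index map yields an [H, W, 3] image. A minimal sketch with a hypothetical three-colour palette (two classes plus black for void):

```python
import numpy as np

# Hypothetical palette: row i holds the RGB colour of class i; the last row is void.
palette = np.array([[0, 128, 192], [128, 0, 0], [0, 0, 0]])

# A 2x2 map of class indices.
class_map = np.array([[1, 0], [2, 1]])

# Each index is replaced by the matching palette row.
coloured = palette[class_map]
print(coloured.shape)
```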

def compute_global_accuracy(pred, label):
    pred = pred.flatten()
    label = label.flatten()
    total = len(label)
    count = 0.0
    for i in range(total):
        if pred[i] == label[i]:
            count = count + 1.0
    return float(count) / float(total)

def fast_hist(a, b, n):
    '''
    a and b are predict and mask respectively
    n is the number of classes
    '''
    k = (a >= 0) & (a < n)
    # Cast both arrays to int: np.bincount rejects float input.
    return np.bincount(n * a[k].astype(int) + b[k].astype(int), minlength=n ** 2).reshape(n, n)

def per_class_iu(hist):
    epsilon = 1e-5
    return (np.diag(hist) + epsilon) / (hist.sum(1) + hist.sum(0) - np.diag(hist) + epsilon)
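
A worked example may help with reading the confusion matrix. The sketch below redefines fast_hist so that it runs on its own, uses six made-up pixels and three classes, and omits the epsilon term for readability. Row i of the matrix counts pixels whose first argument equals i, and column j counts pixels whose second argument equals j.

```python
import numpy as np


def fast_hist(a, b, n):
    """Confusion matrix of shape [n, n]; rows index a, columns index b."""
    k = (a >= 0) & (a < n)
    return np.bincount(
        n * a[k].astype(int) + b[k].astype(int), minlength=n ** 2
    ).reshape(n, n)


# Six hypothetical pixels: predictions and ground-truth mask.
pred = np.array([0, 0, 1, 1, 2, 2])
mask = np.array([0, 1, 1, 1, 2, 0])

hist = fast_hist(pred, mask, 3)
intersection = np.diag(hist)
union = hist.sum(1) + hist.sum(0) - intersection
iou = intersection / union

print(hist)
print(iou, iou.mean())
```

Here the per-class IoU values are 1/3, 2/3 and 1/2, so the mean IoU is 0.5.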

class RandomCrop(object):
    """Crop the given PIL Image at a random location.

    Args:
        size (sequence or int): Desired output size of the crop. If size is an
            int instead of sequence like (h, w), a square crop (size, size) is
            made.
        seed: Value used to seed ``random`` before the crop position is drawn,
            so that an image and its label can receive the same crop.
        padding (int or sequence, optional): Optional padding on each border
            of the image. Default is 0, i.e no padding. If a sequence of length
            4 is provided, it is used to pad left, top, right, bottom borders
            respectively.
        pad_if_needed (boolean): It will pad the image if smaller than the
            desired size to avoid raising an exception.
    """

    def __init__(self, size, seed, padding=0, pad_if_needed=False):
        if isinstance(size, numbers.Number):
            self.size = (int(size), int(size))
        else:
            self.size = size
        self.padding = padding
        self.pad_if_needed = pad_if_needed
        self.seed = seed

    @staticmethod
    def get_params(img, output_size, seed):
        """Get parameters for ``crop`` for a random crop.

        Args:
            img (PIL Image): Image to be cropped.
            output_size (tuple): Expected output size of the crop.

        Returns:
            tuple: params (i, j, h, w) to be passed to ``crop`` for random crop.
        """
        random.seed(seed)
        w, h = img.size
        th, tw = output_size
        if w == tw and h == th:
            return 0, 0, h, w
        i = random.randint(0, h - th)
        j = random.randint(0, w - tw)
        return i, j, th, tw

    def __call__(self, img):
        """
        Args:
            img (PIL Image): Image to be cropped.

        Returns:
            PIL Image: Cropped image.
        """
        if self.padding > 0:
            img = torchvision.transforms.functional.pad(img, self.padding)
        # pad the width if needed
        if self.pad_if_needed and img.size[0] < self.size[1]:
            img = torchvision.transforms.functional.pad(img, (int((1 + self.size[1] - img.size[0]) / 2), 0))
        # pad the height if needed
        if self.pad_if_needed and img.size[1] < self.size[0]:
            img = torchvision.transforms.functional.pad(img, (0, int((1 + self.size[0] - img.size[1]) / 2)))
        i, j, h, w = self.get_params(img, self.size, self.seed)
        return torchvision.transforms.functional.crop(img, i, j, h, w)

    def __repr__(self):
        return self.__class__.__name__ + '(size={0}, padding={1})'.format(self.size, self.padding)
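
The reason for passing a seed is that the image and its label must be cropped at the same position. The following sketch isolates that idea using only the standard library. The function name crop_params and the 1200x900 input size are assumptions for illustration, and no PIL image is involved.

```python
import random


def crop_params(width, height, out_h, out_w, seed):
    """Draw a crop box (top, left, height, width) after re-seeding the RNG."""
    random.seed(seed)
    if width == out_w and height == out_h:
        return 0, 0, height, width
    top = random.randint(0, height - out_h)
    left = random.randint(0, width - out_w)
    return top, left, out_h, out_w


# One seed per sample, reused for the image and for its label.
seed = random.random()
image_box = crop_params(1200, 900, 720, 960, seed)
label_box = crop_params(1200, 900, 720, 960, seed)
print(image_box == label_box)
```

Re-seeding the global generator keeps the two crops aligned, at the cost of resetting the random state for any other code that uses the random module.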

def cal_miou(miou_list, csv_path):
    # return miou_dict -> {label_name: iou} for the 11 classes, plus the mean IoU
    ann = pd.read_csv(csv_path)
    miou_dict = {}
    cnt = 0
    for iter, row in ann.iterrows():
        label_name = row['name']
        class_11 = int(row['class_11'])
        if class_11 == 1:
            miou_dict[label_name] = miou_list[cnt]
            cnt += 1
    return miou_dict, np.mean(miou_list)

class OHEM_CrossEntroy_Loss(nn.Module):
    def __init__(self, threshold, keep_num):
        super(OHEM_CrossEntroy_Loss, self).__init__()
        self.threshold = threshold
        self.keep_num = keep_num
        self.loss_function = nn.CrossEntropyLoss(reduction='none')

    def forward(self, output, target):
        loss = self.loss_function(output, target).view(-1)
        loss, loss_index = torch.sort(loss, descending=True)
        threshold_in_keep_num = loss[self.keep_num]
        if threshold_in_keep_num > self.threshold:
            loss = loss[loss > self.threshold]
        else:
            loss = loss[:self.keep_num]
        return torch.mean(loss)
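
The selection rule of this online hard example mining (OHEM) loss can be illustrated without PyTorch. The sketch below applies the same logic to a plain list of made-up per-pixel losses. The function name ohem_mean is hypothetical, and keep_num is assumed to be smaller than the number of losses.

```python
def ohem_mean(losses, threshold, keep_num):
    """Average only the hard examples, following the rule used above."""
    ordered = sorted(losses, reverse=True)
    # keep_num must be a valid index into the sorted losses.
    if ordered[keep_num] > threshold:
        # Many hard examples: keep every loss above the threshold.
        kept = [value for value in ordered if value > threshold]
    else:
        # Few hard examples: keep the keep_num largest losses.
        kept = ordered[:keep_num]
    return sum(kept) / len(kept)


losses = [0.1, 2.0, 0.3, 1.5, 0.05, 0.9]
print(ohem_mean(losses, threshold=0.7, keep_num=2))
print(ohem_mean(losses, threshold=0.7, keep_num=4))
```

In the first call three losses exceed the threshold, so all three are averaged. In the second call the four largest losses are kept, including one below the threshold.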

def group_weight(weight_group, module, norm_layer, lr):
    group_decay = []
    group_no_decay = []
    for m in module.modules():
        if isinstance(m, nn.Linear):
            group_decay.append(m.weight)
            if m.bias is not None:
                group_no_decay.append(m.bias)
        elif isinstance(m, (nn.Conv2d, nn.Conv3d)):
            group_decay.append(m.weight)
            if m.bias is not None:
                group_no_decay.append(m.bias)
        elif isinstance(m, norm_layer) or isinstance(m, nn.GroupNorm):
            if m.weight is not None:
                group_no_decay.append(m.weight)
            if m.bias is not None:
                group_no_decay.append(m.bias)

    assert len(list(module.parameters())) == len(group_decay) + len(
        group_no_decay)
    weight_group.append(dict(params=group_decay, lr=lr))
    weight_group.append(dict(params=group_no_decay, weight_decay=.0, lr=lr))
    return weight_group

def augmentation():
    # augment images with spatial transformation: Flip, Affine, Rotation, etc...
    # see https://github.com/aleju/imgaug for more details
    pass

def augmentation_pixel():
    # augment images with pixel intensity transformation: GaussianBlur, Multiply, etc...
    pass

class CamVid(Dataset):
    """
    The dataset is small, so data augmentation is needed.
    """

    def __init__(self, image_path, label_path, csv_path, scale, loss='crossentropy', mode='train'):
        super().__init__()
        self.mode = mode
        self.image_list = []
        if not isinstance(image_path, list):
            image_path = [image_path]
        for image_path_ in image_path:
            self.image_list.extend(glob.glob(os.path.join(image_path_, '*.png')))
        self.image_list.sort()
        self.label_list = []
        if not isinstance(label_path, list):
            label_path = [label_path]
        for label_path_ in label_path:
            self.label_list.extend(glob.glob(os.path.join(label_path_, '*.png')))
        self.label_list.sort()
        self.label_info = get_label_info(csv_path)
        self.to_tensor = transforms.Compose([
            transforms.ToTensor(),
            # transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),
        ])
        # Output image size as (height, width)
        self.image_size = scale
        # Candidate factors for random rescaling
        self.scale = [0.5, 1, 1.25, 1.5, 1.75, 2]
        self.loss = loss

    def __getitem__(self, index):
        seed = random.random()
        # Load the image
        img = Image.open(self.image_list[index])
        # Pick a random scale factor
        scale = random.choice(self.scale)
        scale = (int(self.image_size[0] * scale), int(self.image_size[1] * scale))
        if self.mode == 'train':
            img = transforms.Resize(scale, InterpolationMode.BICUBIC)(img)
            # Random crop
            img = RandomCrop(self.image_size, seed, pad_if_needed=True)(img)
        img = np.array(img)
        img = Image.fromarray(img)
        img = self.to_tensor(img).float()
        # Load the label image
        label = Image.open(self.label_list[index])
        # In training mode, apply the same rescale and crop to the label;
        # nearest-neighbour interpolation keeps the class colours intact
        if self.mode == 'train':
            label = transforms.Resize(scale, InterpolationMode.NEAREST)(label)
            label = RandomCrop(self.image_size, seed, pad_if_needed=True)(label)
        label = np.array(label)
        if self.loss == 'dice':
            # One channel per class: [12, H, W], float
            label = one_hot_it_v11_dice(label, self.label_info).astype(np.uint8)
            label = np.transpose(label, [2, 0, 1]).astype(np.float32)
            label = torch.from_numpy(label)
            return img, label
        elif self.loss == 'crossentropy':
            # Class-index map: [H, W], long
            label = one_hot_it_v11(label, self.label_info)
            label = torch.from_numpy(label).long()
            return img, label

    def __len__(self):
        return len(self.image_list)

def get_dataloader(mode=True, batch_size=2):
    path = r"E:\note\cv\data\CamVid"
    train_path = os.path.join(path, "train")
    val_path = os.path.join(path, "val")
    train_labels_path = os.path.join(path, "train_labels")
    val_labels_path = os.path.join(path, "val_labels")
    class_dict_path = os.path.join(path, "class_dict.csv")
    test_path = os.path.join(path, "test")
    test_labels_path = os.path.join(path, "test_labels")
    if mode:
        train_dataset = CamVid([train_path, val_path],
                               [train_labels_path, val_labels_path], class_dict_path,
                               (720, 960), loss='crossentropy', mode='train')
        loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True, drop_last=True, )
    else:
        train_dataset = CamVid([test_path],
                               [test_labels_path], class_dict_path,
                               (720, 960), loss='crossentropy', mode='val')
        loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True, drop_last=True, )
    return loader

def show_image(image):
    plt.figure()
    image = image.numpy().transpose(1, 2, 0)
    print(image)
    plt.imshow(image)
    plt.show()

def show_label(mask):
    plt.figure()
    image = mask.numpy()
    print(np.unique(np.array(image)))
    plt.imshow(image, cmap="gray")
    plt.title("label")
    plt.show()

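if __name__ == '__main__':
    train_dataloader = get_dataloader(True, 2)
    for images, labels in train_dataloader:
        print(images.shape)
        print(labels.shape)
        show_image(images[0])
        # With loss='crossentropy' each label is already an [H, W] index map,
        # so it is shown directly; argmax(dim=0) is only needed for 'dice' labels.
        show_label(labels[0])
        break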

References