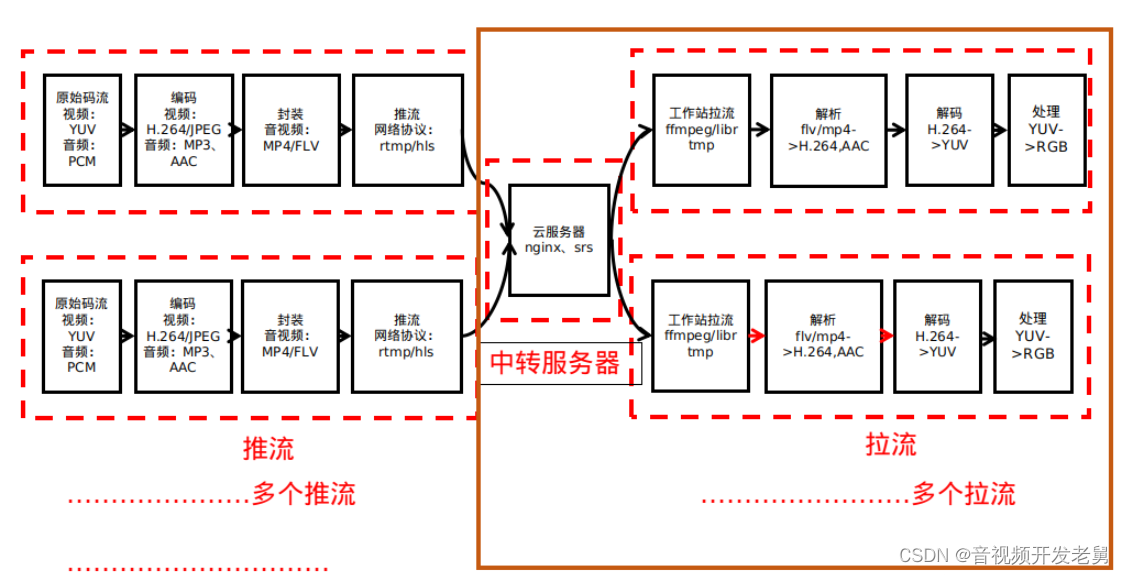

The diagram above shows the FFmpeg multithreaded stream pulling covered below (orange boxes):

Environment: Ubuntu 18.04, FFmpeg 4.1.5.

The project consists of these files:

main.cpp

transdata.cpp

transdata.h

Source code

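The main program, main.cpp, has two parts.

- main() allocates the pthread thread IDs, starts the threads and waits for them to finish.
- The thread function athread() does the actual work.

The stream each user pulls is selected by user ID: for user ID 1, for example, `1` is appended to the stream URL. main.cpp: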

```cpp
#include <iostream>
#include <vector>
#include <pthread.h>
#include <unistd.h>

#include "transdata.h"

using namespace std;

vector<Transdata> user_tran;

void *athread(void *ptr)
{
    int num = *(int *)ptr;

    // Initialize; retry until the stream can be opened
    while ((user_tran[num].Transdata_init(num)) < 0)
    {
        cout << "init error " << endl;
        sleep(1);  // avoid a busy loop while the stream is not available yet
    }

    cout << "My UserId is :" << num << endl;

    // do something you want
    user_tran[num].Transdata_Recdata();

    user_tran[num].Transdata_free();

    return NULL;
}

int main(int argc, char** argv)
{
    int ret;

    // Allocate one Transdata per user (this acts as "registration")
    for (int i = 0; i < 5; i++)
    {
        user_tran.emplace_back();
        user_tran[i].User_ID = i;
    }

    // Start five threads. user_tran is not resized after this point,
    // so the pointers to User_ID stay valid while the threads run.
    for (int i = 0; i < 5; i++)
    {
        int *num_tran = &user_tran[i].User_ID;
        ret = pthread_create(&user_tran[i].thread_id, NULL, athread, (void *)num_tran);
        if (ret != 0) return -1;  // pthread_create returns 0 on success, an error number otherwise
    }

    for (int i = 0; i < 5; i++)
    {
        pthread_join(user_tran[i].thread_id, NULL);  // wait for thread i to finish
    }

    return 0;
}
```
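For comparison, the same one-thread-per-user structure can be sketched with C++11 `std::thread`. With `std::thread`, each thread receives its user ID by value instead of through a pointer into `user_tran`. This is a minimal sketch under stated assumptions, not the code used above. `kBaseUrl`, `make_stream_url` and `run_user_threads` are hypothetical names. The `work` callable stands in for the `Transdata_init` / `Transdata_Recdata` / `Transdata_free` sequence in `athread`.

```cpp
#include <cassert>
#include <string>
#include <thread>
#include <vector>

// Hypothetical base address; same value as str2 in transdata.h.
const std::string kBaseUrl = "rtmp://localhost:1935/rtmplive/test";

// Per-user stream URL: base address + user ID (".../test1" for user 1).
std::string make_stream_url(int user_id) {
    return kBaseUrl + std::to_string(user_id);
}

// Starts one thread per user ID 0..user_count-1 and joins them all.
// `work` is called as work(id); the ID is copied into each thread, so no
// pointer into a shared container has to stay valid while threads run.
template <typename Work>
void run_user_threads(int user_count, Work work) {
    assert(user_count >= 0);
    std::vector<std::thread> threads;
    threads.reserve(user_count);
    for (int id = 0; id < user_count; ++id) {
        threads.emplace_back(work, id);
    }
    for (std::thread& t : threads) {
        t.join();  // wait for every worker, like the pthread_join loop
    }
}
```

For example, `run_user_threads(5, [](int id) { /* pull make_stream_url(id) */ });` starts workers for IDs 0 to 4 and returns once all of them have finished. Link with `-pthread` on Linux.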


transdata.cpp and transdata.h implement the stream-pulling functions.

transdata.cpp:

```cpp
#include "transdata.h"

Transdata::Transdata() {}

Transdata::~Transdata() {}

int Transdata::Transdata_free()
{
    // These FFmpeg functions accept NULL and reset the pointer to NULL after freeing
    av_bsf_free(&bsf_ctx);
    avcodec_free_context(&pCodecCtx);
    avformat_close_input(&ifmt_ctx);
    av_frame_free(&pframe);

    if (ret < 0 && ret != AVERROR_EOF)
    {
        printf("Error occurred.\n");
        return -1;
    }
    return 0;
}

int Transdata::Transdata_Recdata()
{
    // You can plug in your own LOG function here
    //LOGD("Transdata_Recdata entry %d",User_ID);
    int count = 0;

    while (av_read_frame(ifmt_ctx, &pkt) >= 0)
    {
        if (pkt.stream_index == videoindex) {
            // H.264 filter: convert AVCC (length-prefixed) packets to Annex B
            if (av_bsf_send_packet(bsf_ctx, &pkt) < 0) {
                cout << " av_bsf_send_packet error! " << endl;
                av_packet_unref(&pkt);
                continue;
            }
            if (av_bsf_receive_packet(bsf_ctx, &pkt) < 0) {
                cout << " av_bsf_receive_packet error! " << endl;
                av_packet_unref(&pkt);
                continue;
            }

            if (++count == 10) {  // print every 10th video packet
                printf("My id is %d,Write Video Packet. size:%d\tpts:%lld\n",
                       User_ID, pkt.size, (long long)pkt.pts);
                count = 0;
            }

            // Decode the AVPacket
            if (pkt.size) {
                ret = avcodec_send_packet(pCodecCtx, &pkt);
                if (ret < 0) {
                    std::cout << "avcodec_send_packet: " << ret << std::endl;
                    av_packet_unref(&pkt);
                    continue;
                }
                // One packet can produce zero or more frames: drain them all
                while ((ret = avcodec_receive_frame(pCodecCtx, pframe)) >= 0) {
                    avframeToCvmat(pframe);  // convert to BGR and display
                    // Added later: reset the frame to a clean state before each reuse,
                    // see https://www.cnblogs.com/leisure_chn/p/10404502.html
                    av_frame_unref(pframe);
                }
                if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)
                    ret = 0;  // the decoder needs more input: not an error
                else
                    std::cout << "avcodec_receive_frame: " << ret << std::endl;
            }
        }
        // Free the AVPacket (non-video packets included)
        av_packet_unref(&pkt);
    }
    return 0;
}

// AVFrame -> cv::Mat
void Transdata::avframeToCvmat(const AVFrame *frame)
{
    int width = frame->width;
    int height = frame->height;
    cv::Mat image(height, width, CV_8UC3);
    int cvLinesizes[1];
    cvLinesizes[0] = (int)image.step1();

    // Convert the decoded pixel format (e.g. YUV420P) to BGR24, OpenCV's channel order
    SwsContext *conversion = sws_getContext(width, height, (AVPixelFormat)frame->format,
                                            width, height, AV_PIX_FMT_BGR24,
                                            SWS_FAST_BILINEAR, NULL, NULL, NULL);
    if (!conversion) return;
    sws_scale(conversion, frame->data, frame->linesize, 0, height, &image.data, cvLinesizes);
    sws_freeContext(conversion);

    imshow(Simg_index, image);
    startWindowThread();  // start OpenCV's window event thread (GTK backend)
    waitKey(1);
    image.release();
}

int Transdata::Transdata_init(int num) {
    User_ID = num;                // user ID
    Simg_index = to_string(num);  // window name used by imshow
    cout << "Simg_index is : " << Simg_index << endl;

    // Stream URL = server address + user ID
    std::string video_name = str2 + to_string(num);
    const char *video_filename = video_name.c_str();  // string -> const char*
    cout << video_filename << endl;

    ifmt_ctx = avformat_alloc_context();

    // Register (deprecated since FFmpeg 4.0, harmless in 4.1)
    av_register_all();
    // Network; ideally called once from main() before the threads start
    avformat_network_init();

    // Input
    if ((ret = avformat_open_input(&ifmt_ctx, video_filename, NULL, NULL)) < 0) {
        printf("Could not open input file.\n");
        return -1;
    }
    if ((ret = avformat_find_stream_info(ifmt_ctx, NULL)) < 0) {
        printf("Failed to retrieve input stream information\n");
        return -1;
    }

    videoindex = -1;
    for (unsigned int s = 0; s < ifmt_ctx->nb_streams; s++) {
        if (ifmt_ctx->streams[s]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoindex = s;
            codecpar = ifmt_ctx->streams[s]->codecpar;
            break;
        }
    }

    // Find the H.264 decoder
    pCodec = avcodec_find_decoder(AV_CODEC_ID_H264);
    if (pCodec == NULL) {
        printf("Couldn't find Codec.\n");
        return -1;
    }
    pCodecCtx = avcodec_alloc_context3(pCodec);
    if (!pCodecCtx) {
        fprintf(stderr, "Could not allocate video codec context\n");
        return -1;
    }
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {
        printf("Couldn't open codec.\n");
        return -1;
    }
    pframe = av_frame_alloc();
    if (!pframe) {
        printf("Could not allocate video frame\n");
        return -1;
    }

    // Find and set up the bitstream filter
    buffersrc = av_bsf_get_by_name("h264_mp4toannexb");
    if (!buffersrc || av_bsf_alloc(buffersrc, &bsf_ctx) < 0) {
        printf("av_bsf_alloc is error\n");
        return -1;
    }
    if (codecpar == NULL) {
        printf("codecpar is NULL\n");
        return -1;
    }
    if (avcodec_parameters_copy(bsf_ctx->par_in, codecpar) < 0) {
        printf("avcodec_parameters_copy is error\n");
        return -1;
    }
    if (av_bsf_init(bsf_ctx) < 0) {
        printf("av_bsf_init is error\n");
        return -1;
    }
    return 0;
}
```

transdata.h:

```cpp
#ifndef VERSION1_0_TRANSDATA_H
#define VERSION1_0_TRANSDATA_H

#include <iostream>
#include <string>
#include <pthread.h>

extern "C"
{
#include "libavformat/avformat.h"
#include <libavutil/mathematics.h>
#include <libavutil/time.h>
#include <libavutil/samplefmt.h>
#include <libavcodec/avcodec.h>
#include <libavfilter/buffersink.h>
#include <libavfilter/buffersrc.h>
#include "libavutil/avconfig.h"
#include <libavutil/imgutils.h>
#include "libswscale/swscale.h"
}

#include "opencv2/core.hpp"
#include <opencv2/opencv.hpp>
//#include "LogUtils.h"

using namespace std;
using namespace cv;

class Transdata
{
public:
    Transdata();
    ~Transdata();

    // Every pointer starts as NULL: Transdata_free() passes them to FFmpeg's
    // free functions, which accept NULL but not uninitialized garbage
    AVFormatContext *ifmt_ctx = NULL;
    AVPacket pkt;
    AVFrame *pframe = NULL;
    int ret = 0, i = 0;
    int videoindex = -1;
    AVCodecContext *pCodecCtx = NULL;
    AVCodec *pCodec = NULL;
    const AVBitStreamFilter *buffersrc = NULL;
    AVBSFContext *bsf_ctx = NULL;
    AVCodecParameters *codecpar = NULL;

    std::string str2 = "rtmp://localhost:1935/rtmplive/test";
    //std::string str2 = "rtmp://47.100.110.164:1935/live/test";
    //const char *in_filename = "rtmp://localhost:1935/rtmplive";  // RTMP address
    //const char *in_filename = "rtmp://58.200.131.2:1935/livetv/hunantv";  // Hunan TV (Mango TV) RTMP address

    cv::Mat image_test;

    int Transdata_init(int num);
    int Transdata_Recdata();
    int Transdata_free();
    void avframeToCvmat(const AVFrame *frame);

    int User_ID = 0;
    string Simg_index;
    pthread_t thread_id;
};

#endif // VERSION1_0_TRANSDATA_H
```


Problems encountered

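① Adding the user ID to the stream URL. FFmpeg takes the URL as a `const char *`, but the ID is an `int`. Concatenating onto a `const char *` directly is awkward. Instead, I convert the ID to a `std::string` with `to_string()` and append it to the server address, which is also a `std::string`. I then get the `const char *` with `c_str()`. At least I don't know of a simpler way.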

Example:

```cpp
int num = 5;
string str2 = "test";
string str3 = to_string(num);
std::string video_name = str2 + str3;
const char *video_filename = video_name.c_str();  // string -> const char*
```

`video_filename` is then `test5`.
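Two other ways to build the same string are `std::ostringstream` and `snprintf`. The sketch below shows all three side by side. The helper names are hypothetical, and each helper returns `test5` for the example above.

```cpp
#include <cassert>
#include <cstdio>
#include <sstream>
#include <string>

// Option 1: std::to_string + operator+ (the approach used above).
std::string url_with_to_string(const std::string& base, int id) {
    return base + std::to_string(id);
}

// Option 2: std::ostringstream, handy when mixing several types.
std::string url_with_stream(const std::string& base, int id) {
    std::ostringstream os;
    os << base << id;
    return os.str();
}

// Option 3: C-style snprintf into a caller-provided buffer.
// Returns false if the result did not fit (the output is then truncated).
bool url_with_snprintf(char* out, std::size_t out_size, const char* base, int id) {
    assert(out != nullptr && out_size > 0);
    int n = std::snprintf(out, out_size, "%s%d", base, id);
    return n >= 0 && static_cast<std::size_t>(n) < out_size;
}
```

The pointer returned by `c_str()` is only valid while its `std::string` is alive and unmodified. Keep `video_name` in scope until `avformat_open_input()` has returned. A line like `const char *p = url_with_to_string("test", 5).c_str();` would leave `p` dangling, because the temporary string is destroyed at the end of that statement.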

② After running for a while, the imshow window freezes and stops updating.

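Searching online shows that many people run into this problem, and OpenCV's official stream-capture demo does not handle it either. As far as I can tell, it happens when images are received faster than they can be displayed. The proper design puts receiving and displaying in two separate threads. The receiving thread pushes each image into a queue, and the display thread takes images from it. If images arrive too fast and the display thread finds two waiting, it discards the older one and takes only the newest, so the two sides no longer conflict.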
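The drop-old-frames idea can be sketched as a single-slot mailbox guarded by a mutex and a condition variable. The receiving thread overwrites the slot, and the display thread always takes the newest frame. This is a generic sketch, not an OpenCV API, and `LatestFrameSlot` is a hypothetical name. If `T` is `cv::Mat`, store a freshly allocated image for each frame, as `avframeToCvmat` does, because copies of a `cv::Mat` share their pixel data.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <utility>

// Single-slot mailbox: put() overwrites any frame that has not been taken
// yet, so take() always returns the newest frame and stale ones are dropped.
// T must be default-constructible and movable (cv::Mat is both).
template <typename T>
class LatestFrameSlot {
public:
    // Receiving thread: store a new frame. Returns true if an older,
    // not-yet-displayed frame was dropped.
    bool put(T frame) {
        bool dropped;
        {
            std::lock_guard<std::mutex> lock(mutex_);
            dropped = has_frame_;
            slot_ = std::move(frame);
            has_frame_ = true;
        }
        cv_.notify_one();
        return dropped;
    }

    // Display thread: wait for a frame and move it into `out`.
    // Returns false once close() was called and no frame is pending.
    bool take(T& out) {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [this] { return has_frame_ || closed_; });
        if (!has_frame_) return false;
        out = std::move(slot_);
        has_frame_ = false;
        return true;
    }

    // Wakes up a waiting take() when the stream ends.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        cv_.notify_all();
    }

private:
    std::mutex mutex_;
    std::condition_variable cv_;
    T slot_{};
    bool has_frame_ = false;
    bool closed_ = false;
};
```

In the player, the receiving thread would call `put()` after converting each frame. A single display thread would loop on `take()`, `imshow()` and `waitKey()`; this is usually the main thread, since OpenCV's HighGUI functions are generally not safe to call from several threads.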

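OpenCV has a function, startWindowThread(), that I expected to do something like this. The official API documentation says little about it, but after I added it the freezing did stop. Strictly speaking, it does not implement the frame queue described above. It starts a separate thread that processes window events, which mainly matters for the GTK backend, and one call before the display loop is enough.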

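The original loop, which freezes:

```cpp
while (1)
{
    RecImage();
    imshow("test0", img);
    waitKey(1);
}
```

After the change:

```cpp
startWindowThread();  // start a dedicated thread that handles window events
while (1)
{
    RecImage();
    imshow("test0", img);
    waitKey(1);
}
```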

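③ Segmentation faults of all kinds. Always remember to allocate memory before using it and to free it afterwards. If a segmentation fault does occur, don't panic: go through every variable you have defined from start to finish, and you can usually find the cause. Other ways to track it down:

- debug with gdb;
- compare memory usage before and after the program starts;
- add a logging library and save logs.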
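One habit that prevents many of these crashes is the free-and-null convention that FFmpeg itself follows. `av_frame_free()`, `avcodec_free_context()`, `av_bsf_free()` and `avformat_close_input()` take a pointer to the pointer, accept NULL, and set the pointer to NULL after freeing. If every pointer member is also initialized to NULL, cleanup code is safe to run on a half-initialized object, or twice. Below is a minimal sketch of the same idea for a hypothetical `Resource` type; `resource_alloc` and `resource_free` are illustrative names, not FFmpeg functions.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical resource standing in for an AVFrame, AVCodecContext, etc.
struct Resource {
    unsigned char* data;
    std::size_t size;
};

// Allocates a zero-filled resource of `size` bytes.
Resource* resource_alloc(std::size_t size) {
    assert(size > 0);
    return new Resource{new unsigned char[size](), size};
}

// Frees *r and sets it to nullptr. Like av_frame_free(), it accepts a
// pointer that is already nullptr, so calling it twice, or on a member
// that was initialized to nullptr but never allocated, is harmless.
void resource_free(Resource** r) {
    if (r == nullptr || *r == nullptr) return;
    delete[] (*r)->data;
    delete *r;
    *r = nullptr;
}
```

Copying an object that holds such raw pointers, for example into a `std::vector`, gives two objects pointing at the same resources. Only one of them may free those resources.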