加州大学欧文分校的这项研究,让我们更期待未来更先进的彩色夜视仪。

其实是很容易想到的图像增强手段,

在一些军事大片中,士兵头戴夜视仪搜索前进似乎是少不了的场景。使用红外光在黑夜中观察的夜视系统通常将视物渲染成单色图像。

图源:flir.com

不过,在最近的一项研究中,加州大学欧文分校的科学家们借助深度学习 AI 技术设计了一新方法,有了这种方法,红外视觉有助于在无光条件下看到场景中的可见颜色。

研究共同一作、加州大学欧文分校工程师、外科医生和视觉科学家 Andrew Browne 表示,「世界上很多地方都以人们赖以做出决策的方式进行颜色编码,比如信号灯。」

夜视系统是个特例。使用红外光照亮黑夜的夜视系统通常仅以绿色渲染场景,而无法显示出在正常光线下可见的颜色。一些较新的夜视系统使用超灵敏相机放大可见光,但这些相机几乎不能显示出漆黑环境中没有光可放大的颜色。

因此,在这项研究中,研究者推断,赋予物体可见光的每种染料和颜料不仅反射了一组可见波长,而且可能反射一组红外波长。那么,如果可以训练一个能够识别每种染料和颜料的红外指纹的夜视系统,则能够使用与每种染料和颜料相关的可见光来显示图像。

效果图

目前,相关论文已在期刊 PLOS ONE 上发表。

论文地址:https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0265185

这项研究是从不能感知的近红外照明中预测人类可见光谱场景的第一步。接下来的工作可以极大地促进各种应用,比如夜视系统和对可见光敏感的生物样本研究。

研究概述

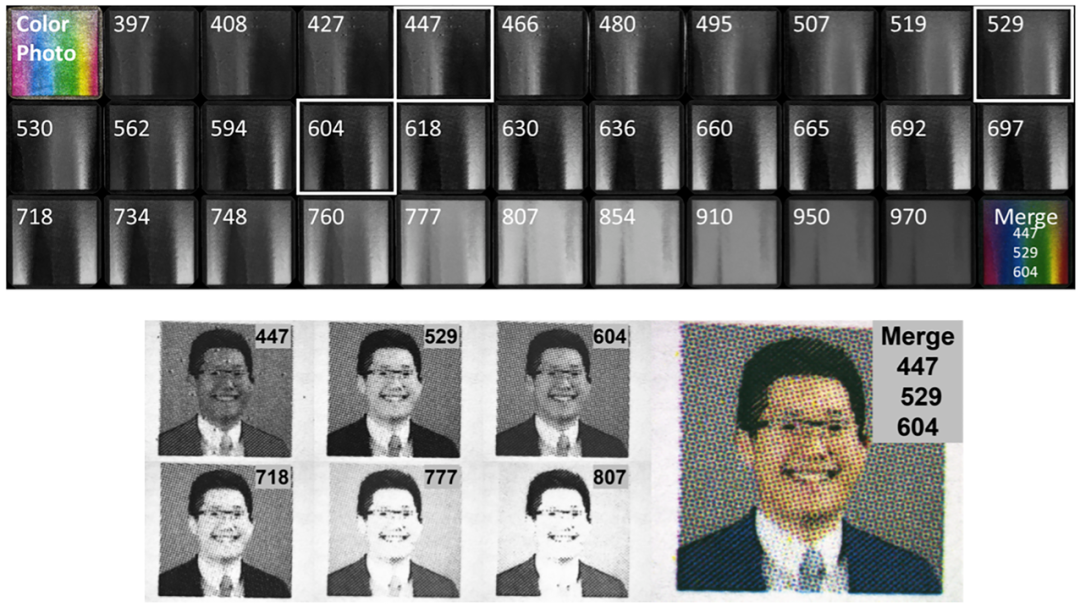

人类可以感知 400-700nm 可见光谱中的光。一些夜视系统使用人类无法感知的红外光,将渲染后的图像转换到数字显示器上,最后在可见光谱中呈现单色图像。

研究者想要开发一种由优化深度学习架构驱动的成像算法,从而可以使用场景中的红外光谱光照来预测该场景中的可见光谱渲染,就好像人类使用可见光谱光感知它一样。当人类处于完全「黑暗」并只有红外光照射时,他们能够以数字方式渲染可见光谱场景。

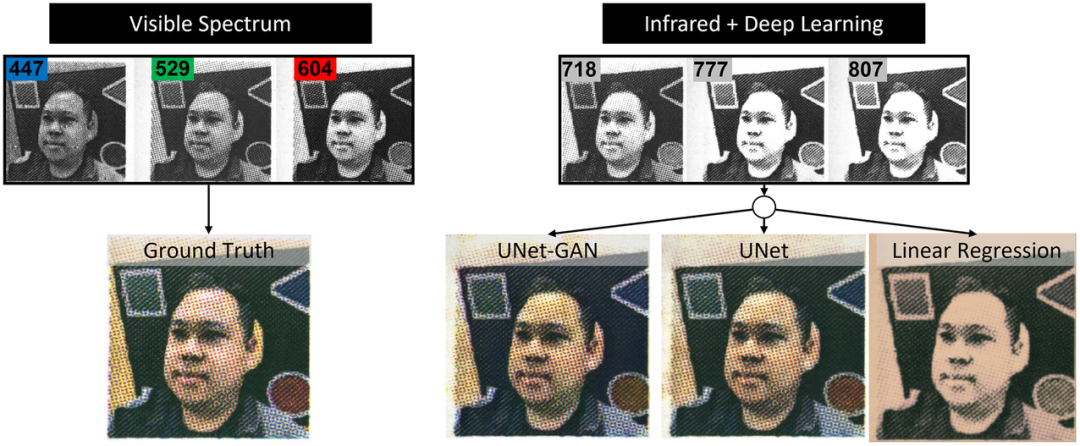

图像处理目标。仅使用红外光照显示的图像与使用深度学习处理 NIR 数据后的可见光谱图像比较。

Andrew Browne 表示,「单色相机对它所看到的场景中反射的任何光子都很敏感。因此,我们使用可调光源将光照射到场景上,并使用单色相机捕捉在所有不同照明颜色下从该场景反射的光子。」

模型:

这里直接看原文:

Baseline and network architectures

To predict RGB color images from individual or combinations of wavelength illuminations, we evaluated the performance of the following architectures: a baseline linear regression, a U-Net inspired CNN (UNet), and a U-Net augmented with adversarial loss (UNet-GAN).

Linear regression baseline.

We implemented a simple linear regression model as a baseline to compare the results of the deep neural architectures and assess their performances. As input to the linear model, we evaluated patches of several sizes and predicted target patches separately for each color channel (R, G, and B).

Conventional U-Net.

A traditional U-Net is a fully convolutional network that consists of a down-sampling path (encoder) that helps to capture the context and an up-sampling path (decoder) that is responsible for precise localization. The down-sampling path repeatedly applies a series of operations which consists of three 3 × 3 convolutions followed by rectified linear unit (ReLU) activation and a 2 × 2 max pooling operation. At the end of one series of operations, the number of feature maps is doubled and the size of feature maps is halved. The up-sampling path repeatedly performs another series of operations consisting of an up-convolution operation of the feature maps by a 2 × 2 filter, a concatenation with the corresponding feature map from the down-sampling path, and two 3 × 3 convolutions, each followed by a ReLU activation. In the output layer, a 3 × 3 convolution is performed with a logistic activation function to produce the output. Padding is used to ensure that the size of output matches the size of the input.

VGG network as a U-Net encoder (UNet).

VGG neural networks [40], named after the Visual Geometry Group that first developed and trained them, were initially introduced to investigate the effect of the convolutional network depth on its accuracy in large-scale image recognition settings. There are several variants of VGG networks like VGG11, VGG16, and VGG19, consisting of 11, 16, and 19 layers of depth, respectively. VGG networks process input images through a stack of convolutional layers with a fixed filter size of 3 × 3 and a stride of 1. Convolutional layers are alternated with max-pooling filters to down-sample the input representation. In [41], using VGG11 with weights pre-trained on ImageNet as U-Net encoder [42] was reported to improve the performance in binary segmentation. In this study, we tried a U-Net architecture with a VGG16 encoder [40] using both randomly initialized weights and weights pre-trained on ImageNet. We refer to this architecture simply as U-Net.

U-Net with adversarial objective (U-Net-GAN).

To enhance the performance of the U-Net architecture, we also explored a Conditional Generative Adversarial Network approach (CGAN). A conventional CGAN consists of two main components: a generator and a discriminator. The task of the generator is to produce an image indistinguishable from a real image and “fool” the discriminator. The task of the discriminator is to distinguish between real and fake images produced by the generator, given the reference input images. In this work, we examined the performances of two U-Net-based architectures: 1) a stand-alone U-Net implementation as described in the previous section and 2) a CGAN architecture with a U-Net generator along with an adversarial discriminator. For the second approach, we used a PatchGAN discriminator as described in [7], which learns to determine whether an image is fake or real by looking at local patches of 70 × 70 pixels, rather than the entire image. This is advantageous because a smaller PatchGAN has fewer parameters, runs faster, and can be applied on arbitrarily large images. We refer to this latter architecture (U-Net generator and PatchGAN discriminator) as U-Net-GAN.

Experimental settings and training

For all the experiments, following standard machine learning practice, we divided the dataset into 3 parts and reserved 140 images for training, 40 for validation and 20 for testing. To compare performances between different models, we evaluated several common metrics for image reconstruction including Mean Square Error (MSE), Structural Similarity Index Measure (SSIM), Peak Signal-to-Noise Ratio (PSNR), Angular Error (AE), DeltaE and Frechet Inception Distance (FID). FID is a metric that determines how distant real and generated images are in terms of feature vectors calculated using the Inception v3 classification model [43]. Lower FID scores usually indicate higher image quality. FID has been employed in many image generation tasks including NIR colorization study [21]. However, Mehri et al. used a single-channel NIR input whereas we feed three stacked images of different NIR wavelengths. Therefore, we cannot entirely compare FID values between our studies as the settings are different. We report FID in the main manuscript and comprehensively present additional metrics in the Supplementary section.

Linear regression baseline.

For the linear regression model, the input images were divided into patches. We explored several patch sizes of 6 × 6, 12 × 12, 24 × 24 and 64 × 64 pixels. Every patch from the selected input infrared images was used to predict the corresponding R, G, and B patch of the target image. A different linear model was used for each color channel and the results for one image were computed as the average over the three channels.

U-Net and U-Net-GAN.

To train the proposed deep architectures, we divided the input images into random patches of size 256 × 256. Random cropping of the patches was used as a data augmentation technique. Both inputs and outputs were normalized to [−1, 1]. The deep architectures were trained for 100000 iterations with a learning rate starting at 1 × 10−4 and cosine learning decay. Given the fully convolutional nature of the proposed architectures, the entire images of size 2048 × 2048 were fed for prediction at inference time. As a loss function for neural networks, i.e. U-Net and U-Net-GAN, we used mean absolute error (MAE).

Human grader evaluation of model performance.

We performed a human evaluation of model performance. We randomly sampled 10 images (ground truth and the 3 prediction output by UNet-GAN, UNet and Linear regression). We provided a blinded multiple choice test to 5 graders to select which of the 3 outputs subjectively appeared most similar to the ground truth image. We subsequently randomly selected 10 patches (portion of images) and asked graders to compare similarity of predicted patches from UNet-GAN and UNet with the corresponding ground truth patch.

Results

In this section, we first describe our spectral reflectance findings for CMY printed inks imaged under different illuminant wavelengths and then report and discuss the performances for the different architectures to accurately predict RGB images from input images acquired by single and combinations of different wavelength illumination.

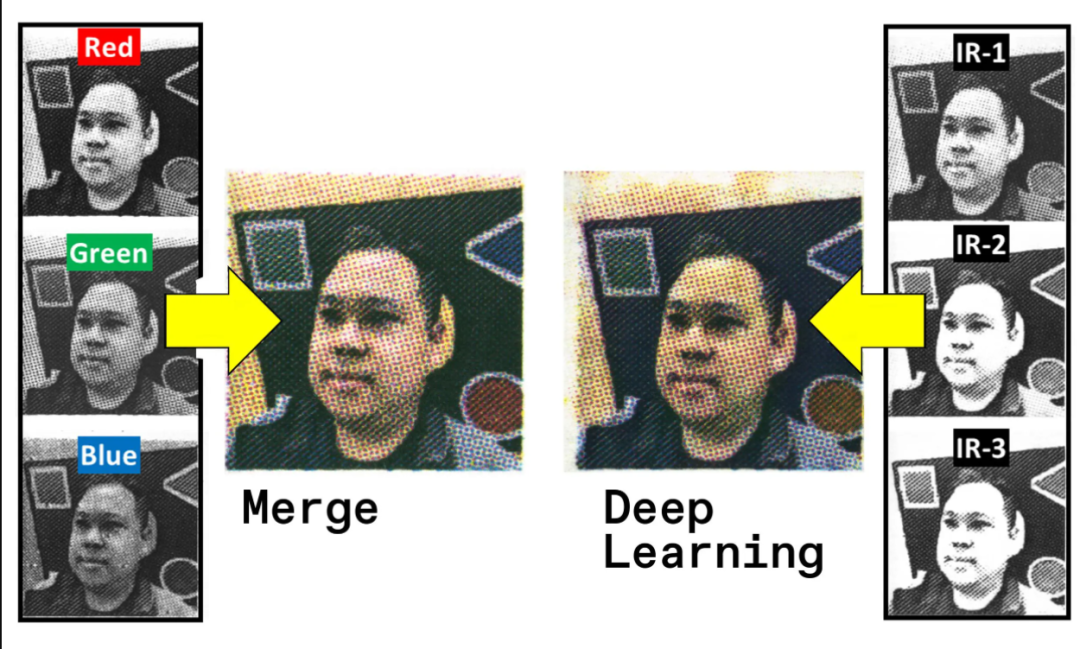

为此,研究者采用了一个对可见光和近红外光敏感的单色相机,在覆盖标准可见红光(604nm)、绿光(529nm)和蓝光(447nm)以及红外波长(718、777 和 807 nm)的多光谱照明下采集面部打印图像的图像数据集。接着,他们对具有类 U-Net 架构的卷积神经网络进行优化,以仅从近红外图像中预测可见光谱图像。

人脸肖像库中的示例图像。

接着,研究者将三张红外图像与彩色图像配对,以训练一个人工智能神经网络来对场景中的颜色进行预测。在经过训练并提升性能之后,该神经网络能够从三张看起来非常接近真实物体的红外线图像中重建彩色图像。下图左为用可见光谱的真彩色,图右为深度学习算法加持下的彩色。

Andrew Browne 说到,「当我们增加红外通道或红外颜色数量时,它会提供更多数据,我们也能更好地预测实际看起来非常接近真实图像的应是什么。我们在这项研究中提出的方法可以用来获取三种不同红外颜色的图像,这三种颜色人眼无法看到。」

不过,研究者只在打印的彩色照片上测试了他们的算法和技术。他们正在寻求将这些算法和技术应用于视频,并最终应用于真实世界的物体和人类主体。

参考链接:

https://spectrum.ieee.org/night-vision-infrared

https://www.popsci.com/technology/ai-infrared-night-vision-in-color/

IJCAI 2022 - Neural MMO 海量 AI 团队生存挑战赛

4月14日,由超参数科技发起,联合学界MIT、清华大学深圳国际研究生院以及知名数据科学挑战平台 AIcrowd 共同主办的「IJCAI 2022-Neural MMO 海量 AI 团队生存挑战赛」正式启动。

本届赛事以「寻找未来开放大世界的最强 AI 团队」为主题,通过在 Neural MMO 的大规模多智能体环境中探索、搜寻和战斗,获得比其他参赛者更高的成就。比赛还设置新的规则,评估智能体面对新地图和不同对手的策略鲁棒性,在 AI 团队中引入合作和角色分工,丰富了比赛内容,增强了趣味性。