Contents

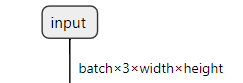

1. Input node

// const char* INPUT_BLOB_NAME = "data";

// static const int INPUT_H = 224;

// static const int INPUT_W = 224;

// DataType dt = DataType::kFLOAT

// Create input tensor of shape { 3, INPUT_H, INPUT_W } with name INPUT_BLOB_NAME

ITensor* data = network->addInput(INPUT_BLOB_NAME, dt, Dims3{3, INPUT_H, INPUT_W});

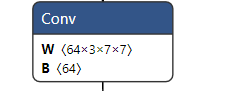

assert(data);

2. Conv node

This conv has no bias. In the figure, the letter "B" refers to the BN layer, and 64 means 64 output channels.
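As a quick sanity check on the shapes, here is a minimal sketch of the usual output-size formula for a convolution or pooling window, assuming explicit symmetric padding and round-down division. The helper name `windowOutputSize` is hypothetical and not part of TensorRT.

```cpp
#include <cassert>

// Output length along one spatial axis for a conv or pooling window,
// assuming symmetric padding and floor (round-down) division.
int windowOutputSize(int in, int kernel, int stride, int padding) {
    assert(stride > 0 && in + 2 * padding >= kernel);
    return (in + 2 * padding - kernel) / stride + 1;
}
```

With the 224 input and the 7x7 kernel, stride 2, padding 3 used for conv1, `windowOutputSize(224, 7, 2, 3)` gives 112; feeding that into the 3x3, stride 2, padding 1 max pool of section 5 gives 56.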

// std::map<std::string, Weights> weightMap = loadWeights("./resnet18.wts");

// Weights emptywts{DataType::kFLOAT, nullptr, 0};

IConvolutionLayer* conv1 = network->addConvolutionNd(*data, 64, DimsHW{7, 7}, weightMap["conv1.weight"], emptywts);

assert(conv1);

conv1->setStrideNd(DimsHW{2, 2});

conv1->setPaddingNd(DimsHW{3, 3});

3. BN layer

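I am not sure why the ONNX graph has no separate BN layer; most likely the exporter folded it into the preceding conv. With the TensorRT API it is built from a scale layer.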

IScaleLayer* addBatchNorm2d(INetworkDefinition *network, std::map<std::string, Weights>& weightMap, ITensor& input, std::string lname, float eps) {
    // Per-channel BN parameters loaded from the .wts file
    float *gamma = (float*)weightMap[lname + ".weight"].values;
    float *beta = (float*)weightMap[lname + ".bias"].values;
    float *mean = (float*)weightMap[lname + ".running_mean"].values;
    float *var = (float*)weightMap[lname + ".running_var"].values;
    int len = weightMap[lname + ".running_var"].count;
    std::cout << "len " << len << std::endl;

    // scale = gamma / sqrt(var + eps)
    float *scval = reinterpret_cast<float*>(malloc(sizeof(float) * len));
    for (int i = 0; i < len; i++) {
        scval[i] = gamma[i] / sqrt(var[i] + eps);
    }
    Weights scale{DataType::kFLOAT, scval, len};

    // shift = beta - mean * gamma / sqrt(var + eps)
    float *shval = reinterpret_cast<float*>(malloc(sizeof(float) * len));
    for (int i = 0; i < len; i++) {
        shval[i] = beta[i] - mean[i] * gamma[i] / sqrt(var[i] + eps);
    }
    Weights shift{DataType::kFLOAT, shval, len};

    // power = 1, so the scale layer computes x * scale + shift
    float *pval = reinterpret_cast<float*>(malloc(sizeof(float) * len));
    for (int i = 0; i < len; i++) {
        pval[i] = 1.0;
    }
    Weights power{DataType::kFLOAT, pval, len};

    // Keep the buffers in weightMap so they stay reachable until the engine is built
    weightMap[lname + ".scale"] = scale;
    weightMap[lname + ".shift"] = shift;
    weightMap[lname + ".power"] = power;

    IScaleLayer* scale_1 = network->addScale(input, ScaleMode::kCHANNEL, shift, scale, power);
    assert(scale_1);
    return scale_1;
}
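The function above relies on batch norm in inference mode being the same as a per-channel `(x * scale + shift) ^ power` with power fixed at 1. The following is a minimal sketch that checks this identity for a single value in plain C++, without TensorRT. The function names `batchNormRef` and `batchNormAsScale` are hypothetical and only serve the illustration.

```cpp
#include <cassert>
#include <cmath>

// Reference batch norm for one value in inference mode.
float batchNormRef(float x, float gamma, float beta, float mean, float var, float eps) {
    return gamma * (x - mean) / std::sqrt(var + eps) + beta;
}

// The same value computed the way a scale layer does it:
// (x * scale + shift) ^ power, with power fixed at 1.
float batchNormAsScale(float x, float gamma, float beta, float mean, float var, float eps) {
    const float scale = gamma / std::sqrt(var + eps);
    const float shift = beta - mean * gamma / std::sqrt(var + eps);
    const float power = 1.0f;
    return std::pow(x * scale + shift, power);
}
```

Both functions should agree up to floating-point rounding for any input, which is why the precomputed `scale` and `shift` arrays are enough to reproduce BN.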

IScaleLayer* bn1 = addBatchNorm2d(network, weightMap, *conv1->getOutput(0), "bn1", 1e-5);

4. Relu node

IActivationLayer* relu1 = network->addActivation(*bn1->getOutput(0), ActivationType::kRELU);

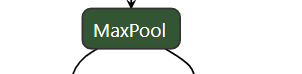

assert(relu1);

5. MaxPool node

IPoolingLayer* pool1 = network->addPoolingNd(*relu1->getOutput(0), PoolingType::kMAX, DimsHW{ 3, 3 });

assert(pool1);

pool1->setStrideNd(DimsHW{ 2, 2 });

pool1->setPaddingNd(DimsHW{ 1, 1 });

6. BasicBlock

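This is the block characteristic of resnet18. Its input is the result of the previous ReLU activation (for the first block, the maxpool output), and its output is again a ReLU activation.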

IActivationLayer* basicBlock(INetworkDefinition* network, std::map<std::string, Weights>& weightMap, ITensor& input, int inch, int outch, int stride, std::string lname) {
    Weights emptywts{ DataType::kFLOAT, nullptr, 0 };

    // Main path: conv3x3 (with stride) -> BN -> ReLU
    IConvolutionLayer* conv1 = network->addConvolutionNd(input, outch, DimsHW{ 3, 3 }, weightMap[lname + "conv1.weight"], emptywts);
    assert(conv1);
    conv1->setStrideNd(DimsHW{ stride, stride });
    conv1->setPaddingNd(DimsHW{ 1, 1 });
    IScaleLayer* bn1 = addBatchNorm2d(network, weightMap, *conv1->getOutput(0), lname + "bn1", 1e-5);
    IActivationLayer* relu1 = network->addActivation(*bn1->getOutput(0), ActivationType::kRELU);
    assert(relu1);

    // Main path: conv3x3 -> BN
    IConvolutionLayer* conv2 = network->addConvolutionNd(*relu1->getOutput(0), outch, DimsHW{ 3, 3 }, weightMap[lname + "conv2.weight"], emptywts);
    assert(conv2);
    conv2->setPaddingNd(DimsHW{ 1, 1 });
    IScaleLayer* bn2 = addBatchNorm2d(network, weightMap, *conv2->getOutput(0), lname + "bn2", 1e-5);

    IElementWiseLayer* ew1;
    if (inch != outch) {
        // Shortcut with a 1x1 conv + BN when the channel count changes
        IConvolutionLayer* conv3 = network->addConvolutionNd(input, outch, DimsHW{ 1, 1 }, weightMap[lname + "downsample.0.weight"], emptywts);
        assert(conv3);
        conv3->setStrideNd(DimsHW{ stride, stride });
        IScaleLayer* bn3 = addBatchNorm2d(network, weightMap, *conv3->getOutput(0), lname + "downsample.1", 1e-5);
        ew1 = network->addElementWise(*bn3->getOutput(0), *bn2->getOutput(0), ElementWiseOperation::kSUM);
    }
    else {
        // Identity shortcut
        ew1 = network->addElementWise(input, *bn2->getOutput(0), ElementWiseOperation::kSUM);
    }

    // ReLU after the residual sum
    IActivationLayer* relu2 = network->addActivation(*ew1->getOutput(0), ActivationType::kRELU);
    assert(relu2);
    return relu2;
}
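The text only shows the first two calls to `basicBlock`, but the later use of `relu9` suggests eight blocks in total. The sketch below lists the arguments I would expect for all eight, assuming the standard ResNet-18 layout and torchvision-style weight names; only the two `layer1` entries come from this article, the other six are an assumption. `BlockConfig`, `resnet18Blocks` and `spatialSizeAfterBlocks` are hypothetical names.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Arguments of one basicBlock call (illustrative struct, not a TensorRT type).
struct BlockConfig {
    int inch;
    int outch;
    int stride;
    std::string lname;
};

// Assumed ResNet-18 layout: 4 stages of 2 blocks each; the first block of
// stages 2 to 4 doubles the channels and uses stride 2.
std::vector<BlockConfig> resnet18Blocks() {
    return {
        {64, 64, 1, "layer1.0."},   {64, 64, 1, "layer1.1."},
        {64, 128, 2, "layer2.0."},  {128, 128, 1, "layer2.1."},
        {128, 256, 2, "layer3.0."}, {256, 256, 1, "layer3.1."},
        {256, 512, 2, "layer4.0."}, {512, 512, 1, "layer4.1."},
    };
}

// Spatial size after all blocks; each block starts with a 3x3 conv, padding 1.
int spatialSizeAfterBlocks(int size) {
    for (const BlockConfig& b : resnet18Blocks()) {
        size = (size + 2 * 1 - 3) / b.stride + 1;
    }
    return size;
}
```

Starting from the 56x56 maxpool output, this layout ends at 7x7 with 512 channels, which would be the feature map the global average pooling later works on.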

IActivationLayer* relu2 = basicBlock(network, weightMap, *pool1->getOutput(0), 64, 64, 1, "layer1.0.");

// Second residual block

IActivationLayer* relu3 = basicBlock(network, weightMap, *relu2->getOutput(0), 64, 64, 1, "layer1.1.");

7. GlobalAvgPooling

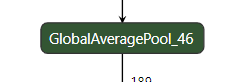

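I could not find a built-in global average pooling in the TensorRT C++ API, so it is implemented here based on what it does: an average pooling whose kernel size equals the height and width of the last feature map.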
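To make the idea concrete, here is a minimal plain C++ sketch of what such a pooling computes on a CHW tensor stored as a flat array: one mean per channel. `globalAvgPool` is a hypothetical helper for illustration, not a TensorRT call.

```cpp
#include <cassert>
#include <vector>

// Average pooling with a kernel that covers the whole H x W plane:
// the result is a single mean value per channel.
std::vector<float> globalAvgPool(const std::vector<float>& chw, int c, int h, int w) {
    assert(static_cast<int>(chw.size()) == c * h * w);
    std::vector<float> out(c, 0.0f);
    for (int ch = 0; ch < c; ++ch) {
        float sum = 0.0f;
        for (int i = 0; i < h * w; ++i) {
            sum += chw[ch * h * w + i];
        }
        out[ch] = sum / static_cast<float>(h * w);
    }
    return out;
}
```

Because the kernel already spans the full plane, the stride has no effect on the result, which is why setting it to 1 in the TensorRT code is harmless.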

Dims last_conv_dims = relu9->getOutput(0)->getDimensions();

int nb_dims = last_conv_dims.nbDims;

Dims k_size = DimsHW{ last_conv_dims.d[nb_dims - 2], last_conv_dims.d[nb_dims - 1] };

IPoolingLayer* pool2 = network->addPoolingNd(*relu9->getOutput(0), PoolingType::kAVERAGE, k_size );

assert(pool2);

pool2->setStrideNd(DimsHW{ 1, 1 });

8. Flatten

TensorRT does not need a separate flatten; the fully connected layer is attached directly (see below).

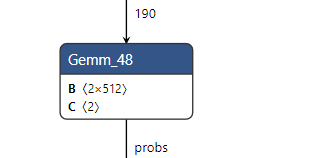

9. Gemm node (fully connected layer)

IFullyConnectedLayer* fc1 = network->addFullyConnected(*pool2->getOutput(0), OUTPUT_SIZE, weightMap["backbone.backbone.fc.weight"], weightMap["backbone.backbone.fc.bias"]);

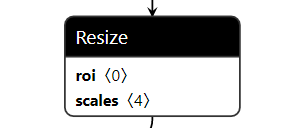

assert(fc1);

10. Resize node

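The `scales<4>` shown on the ONNX Resize node should mean a 4x enlargement (2x in both height and width), although it may simply indicate that the scales tensor has 4 elements, one per dimension. The code below enlarges 4x in this sense; downscaling requires some changes.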
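For the downscaling case, one option is to compute the output dimensions from explicit scale factors instead of hard-coding `* 2`. The following is a minimal sketch on a plain `std::vector<int>` shape, assuming the ONNX convention of rounding the scaled size down; `scaleSpatialDims` is a hypothetical helper, and the result would still have to be copied into a `Dims` before calling `setOutputDimensions`.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Scales the last two axes (H and W) of a CHW or NCHW shape.
// Factors above 1 upsample, factors below 1 downsample.
std::vector<int> scaleSpatialDims(std::vector<int> dims, float scaleH, float scaleW) {
    assert(dims.size() >= 2 && scaleH > 0.0f && scaleW > 0.0f);
    const std::size_t n = dims.size();
    dims[n - 2] = static_cast<int>(std::floor(dims[n - 2] * scaleH));
    dims[n - 1] = static_cast<int>(std::floor(dims[n - 1] * scaleW));
    return dims;
}
```

For example, `scaleSpatialDims({1, 64, 28, 28}, 2.0f, 2.0f)` yields `{1, 64, 56, 56}`, and using `0.5f` for both factors on `{64, 28, 28}` yields `{64, 14, 14}`.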

IResizeLayer* upsample(ITensor* input_tensor, INetworkDefinition* network) {
    IResizeLayer* resize_tensor = network->addResize(*input_tensor);
    // Check the layer before it is used
    assert(resize_tensor);
    Dims origin_shape = input_tensor->getDimensions();
    Dims upsample_dim;
    if (origin_shape.nbDims == 4) {
        // NCHW: double H and W
        upsample_dim = { origin_shape.nbDims, origin_shape.d[0], origin_shape.d[1], origin_shape.d[2] * 2, origin_shape.d[3] * 2 };
    }
    else {
        // CHW: double H and W
        upsample_dim = { origin_shape.nbDims, origin_shape.d[0], origin_shape.d[1] * 2, origin_shape.d[2] * 2 };
    }
    resize_tensor->setOutputDimensions(upsample_dim);
    resize_tensor->setResizeMode(ResizeMode::kLINEAR);
    resize_tensor->setAlignCorners(true);
    return resize_tensor;
}

11. Concat node

IConcatenationLayer* conCat(ITensor* tensor_x, ITensor* tensor_y, INetworkDefinition* network) {
    ITensor* inputTensors[] = { tensor_x, tensor_y };
    IConcatenationLayer* cat = network->addConcatenation(inputTensors, 2);
    assert(cat);
    return cat;
}
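The function above does not set an axis. As far as I understand, the concatenation layer then defaults to the channel axis (number of dimensions minus three, or zero for tensors with fewer than three dimensions), so the two inputs must match in every other dimension. The sketch below computes the resulting shape under that assumption; `concatShape` is a hypothetical helper in plain C++.

```cpp
#include <cassert>
#include <vector>

// Shape of the concatenation of two tensors along the assumed default
// axis (dims - 3, or 0 for fewer than 3 dims). All other axes must match.
std::vector<int> concatShape(const std::vector<int>& a, const std::vector<int>& b) {
    assert(a.size() == b.size() && !a.empty());
    const int n = static_cast<int>(a.size());
    const int axis = n >= 3 ? n - 3 : 0;
    std::vector<int> out = a;
    for (int i = 0; i < n; ++i) {
        if (i == axis) {
            out[i] = a[i] + b[i];
        } else {
            assert(a[i] == b[i]);
        }
    }
    return out;
}
```

For instance, concatenating shapes `{64, 56, 56}` and `{128, 56, 56}` gives `{192, 56, 56}`, which is the usual case when an upsampled feature map is merged with a skip connection.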