The test tool is designed as two parts, a PC client and an Android agent app. The architecture is as follows:

It implements the following features:

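- Device screenshots

- Device screen mirroring

- Basic device information

- Real-time display of the device's Logcat output

- Performance data for the target app: CPU, memory, FPS, network traffic, and more

- Running native test scripts and collecting the resulting test data

- Collecting exception logs from the device, such as ANR traces and tombstones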

Part 1: PC Client

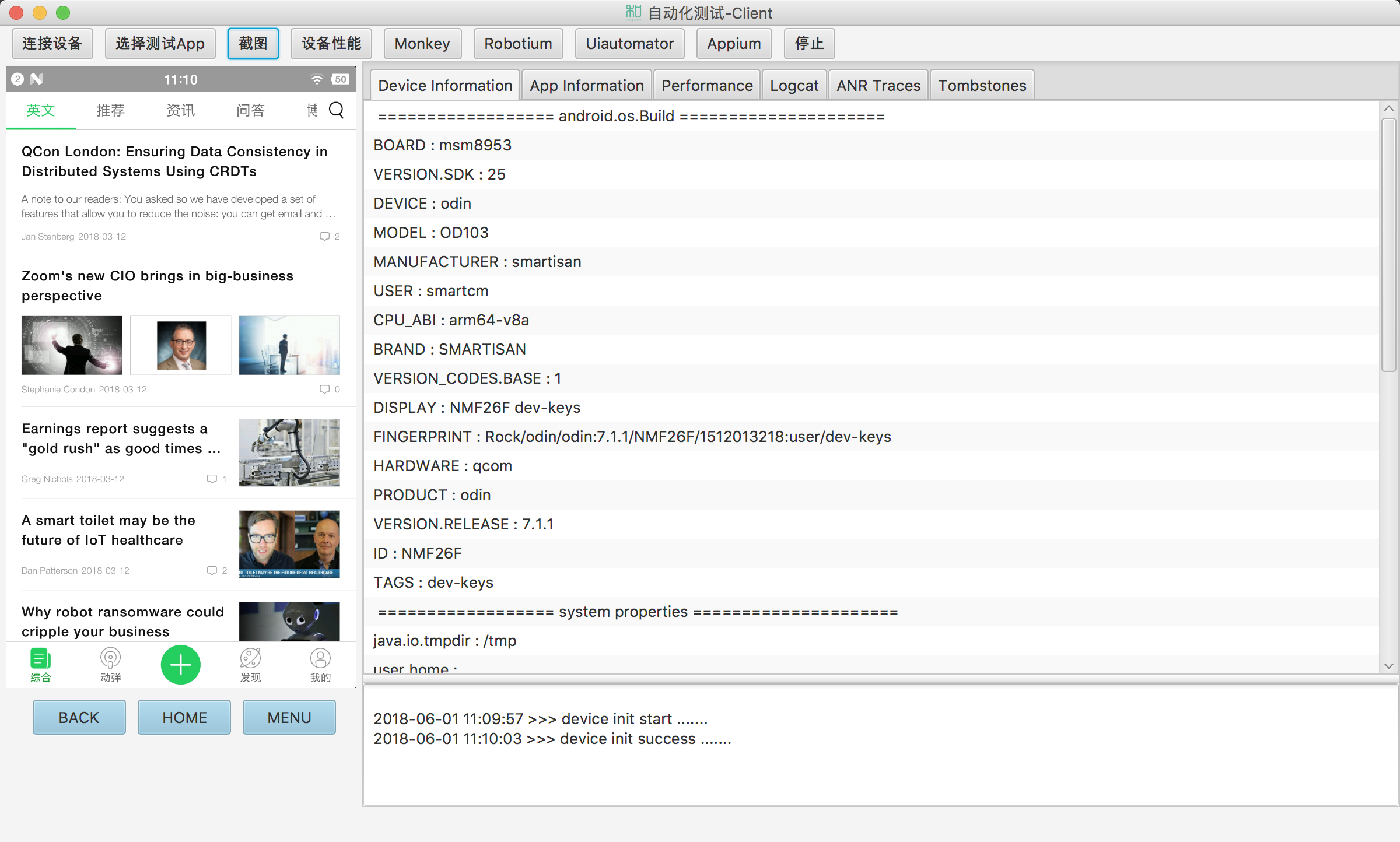

Without further ado, here is a screenshot of the client (the UI is rather ugly, so please bear with it).

Next, a brief walkthrough of how the PC client works with the agent.

1.1 Connecting the device and initializing the device environment

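Connect the Android device to the computer over USB and make sure it is recognized. After launching the PC client, click Connect Device and select the connected device; the client then automatically installs the agent APK on the Android device.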
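Without ddmlib, device discovery and installation can also be scripted around the adb command line. A minimal sketch, assuming adb is on the PATH: it parses the output of adb devices, keeps only entries whose state is device (skipping offline or unauthorized ones), and builds an adb install -r command for the selected serial. AdbDevices and its methods are hypothetical names, not part of the project.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper: parses `adb devices` output and builds per-device install commands. */
final class AdbDevices {
    private AdbDevices() {}

    /** Returns serials whose state column is "device" (skips "offline", "unauthorized", ...). */
    static List<String> parseSerials(String adbDevicesOutput) {
        List<String> serials = new ArrayList<>();
        for (String line : adbDevicesOutput.split("\\R")) {
            String[] cols = line.trim().split("\\s+");
            // Data lines look like "emulator-5554\tdevice"; the header line has four words.
            if (cols.length == 2 && cols[1].equals("device")) {
                serials.add(cols[0]);
            }
        }
        return serials;
    }

    /** Command for `adb -s <serial> install -r <apk>` (-r reinstalls an existing app). */
    static List<String> installCommand(String serial, String apkPath) {
        return Arrays.asList("adb", "-s", serial, "install", "-r", apkPath);
    }
}
```

The returned lists can be handed directly to java.lang.ProcessBuilder.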

1.2 Starting the agent with an adb shell command

First, run adb forward to map port 53516 on the PC to port 53516 on the device:

```java
@Override
public void createForward(int localPort, String remotePort) throws ShellCommandUnresponsiveException {
    try {
        // Equivalent to `adb forward tcp:53516 tcp:53516`.
        // Note: the localPort/remotePort arguments are ignored; Config.PORT_53516 is used for both ends.
        this.deviceInfo.getDevice().createForward(Config.PORT_53516, Config.PORT_53516);
    } catch (IOException | AdbCommandRejectedException | TimeoutException e) {
        Logger.error("create forward error.", e);
    }
}
```
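For scripts that do not use ddmlib, the same port mapping is available from the command line as adb forward tcp:53516 tcp:53516. A sketch assuming adb is on the PATH; PortForward is a hypothetical helper, and the --remove variant is included so a script can clean up the mapping when it disconnects.

```java
import java.io.IOException;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper: command-line equivalent of IDevice.createForward(53516, 53516). */
final class PortForward {
    private PortForward() {}

    /** adb -s <serial> forward tcp:<local> tcp:<remote> */
    static List<String> forwardCommand(String serial, int localPort, int remotePort) {
        return Arrays.asList("adb", "-s", serial, "forward",
                "tcp:" + localPort, "tcp:" + remotePort);
    }

    /** adb -s <serial> forward --remove tcp:<local> */
    static List<String> removeCommand(String serial, int localPort) {
        return Arrays.asList("adb", "-s", serial, "forward", "--remove", "tcp:" + localPort);
    }

    /** Runs the command, echoing adb's output, and returns its exit code (0 on success). */
    static int run(List<String> command) throws IOException, InterruptedException {
        Process p = new ProcessBuilder(command).inheritIO().start();
        return p.waitFor();
    }
}
```

Typical use would be PortForward.run(PortForward.forwardCommand(serial, 53516, 53516)) right after the agent APK is installed.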

Next, start the agent by running the main method of the agent's Main class. The launch command is:

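```java
// The install directory ("live.itrip.agent-1") is assigned at install time and can differ between devices.
private final static String AGENT_CLASS_PATH = "CLASSPATH=/data/app/live.itrip.agent-1/base.apk exec app_process /system/bin live.itrip.agent.Main";
```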

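Run this command under adb shell. Note that once the agent starts, the adb shell session blocks, so the command must be executed in a separate process: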

```java
public static StringBuffer executeCommandByProcess(String command) {
    StringBuffer stringBuffer = new StringBuffer();
    try {
        Process process = Runtime.getRuntime().exec(command);
        // Read the process's standard output until the process exits;
        // try-with-resources closes the reader even if reading fails.
        try (BufferedReader in = new BufferedReader(new InputStreamReader(process.getInputStream()))) {
            String line;
            while ((line = in.readLine()) != null) {
                stringBuffer.append(line).append("\n");
            }
        }
    } catch (IOException ex) {
        ex.printStackTrace();
    }
    return stringBuffer;
}
```
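Two details are worth handling here. The hard-coded /data/app/live.itrip.agent-1/base.apk path changes between installs, and it can be looked up with adb shell pm path live.itrip.agent, which prints a line of the form package:<path>. And because the agent never exits, reading its output blocks forever, so that reading belongs on its own thread. A sketch of both, under those assumptions; AgentLauncher and its methods are hypothetical.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical helpers for locating and launching the agent via app_process. */
final class AgentLauncher {
    private AgentLauncher() {}

    /** Extracts the APK path from `pm path <pkg>` output ("package:/data/app/.../base.apk"). */
    static String parsePmPath(String pmOutput) {
        for (String line : pmOutput.split("\\R")) {
            String trimmed = line.trim();
            if (trimmed.startsWith("package:")) {
                return trimmed.substring("package:".length());
            }
        }
        throw new IllegalArgumentException("no package: line in pm output");
    }

    /** adb -s <serial> shell CLASSPATH=<apk> exec app_process /system/bin live.itrip.agent.Main */
    static List<String> launchCommand(String serial, String apkPath) {
        return Arrays.asList("adb", "-s", serial, "shell", "CLASSPATH=" + apkPath,
                "exec", "app_process", "/system/bin", "live.itrip.agent.Main");
    }

    /** Forwards each line of a (possibly endless) stream to a consumer on a daemon thread. */
    static Thread pumpLines(InputStream in, Consumer<String> sink) {
        Thread t = new Thread(() -> {
            try (BufferedReader r = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8))) {
                String line;
                while ((line = r.readLine()) != null) {
                    sink.accept(line);
                }
            } catch (IOException ignored) {
                // Stream closed, e.g. because the agent process was killed.
            }
        }, "agent-output");
        t.setDaemon(true);
        t.start();
        return t;
    }
}
```

In use, launchCommand(...) goes to a ProcessBuilder, and process.getInputStream() is handed to pumpLines so the calling thread (for example the JavaFX thread) never blocks.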

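1.3 Opening an HTTP connection to the agent server and receiving the screen stream it pushes

The client requests http://localhost:53516/h264.

The stream is H.264-encoded, so the client decodes it into BufferedImage frames and then displays them in the client's ImageView. Latency from the device screen to the PC client display is roughly 100 ms.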

```java
public void screenLive(ImageView imageView) {
    String url = String.format("%s:%s/h264", Config.HTTP_URL, Config.PORT_53516);
    ThreadExecutor.execute(() -> {
        // Wait 2 s before connecting (presumably to give the agent time to start).
        try {
            Thread.sleep(2000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return;
        }
        Thread.currentThread().setName("thread-ScreenLive");
        // Blocks, decoding frames into the ImageView until this thread is interrupted.
        H264Decoder.streamToImageView(url, imageView, 0);
    });
    Logger.debug("threadLive.start:" + System.currentTimeMillis());
}

public static void streamToImageView(String url,
                                     final ImageView imageView,
                                     final int numBuffers) {
    Java2DFrameConverter converter = new Java2DFrameConverter();
    try (final FrameGrabber grabber = new FFmpegFrameGrabber(url)) {
        double frameRate = 25000000D;
        String codeFormatH264 = "h264";
        int bitRate = 96;
        String presetUltraFast = "ultrafast";
        grabber.setFrameRate(frameRate);
        grabber.setFormat(codeFormatH264);
        grabber.setVideoBitrate(bitRate);
        grabber.setVideoOption("preset", presetUltraFast);
        grabber.setNumBuffers(numBuffers);
        // Scale decoded frames to the ImageView's size.
        grabber.setImageWidth((int) imageView.getFitWidth());
        grabber.setImageHeight((int) imageView.getFitHeight());
        grabber.start();
        while (!Thread.interrupted()) {
            final Frame frame = grabber.grab();
            if (frame != null) {
                final BufferedImage bufferedImage = converter.convert(frame);
                if (bufferedImage != null) {
                    bufferedImage.flush();
                    // JavaFX nodes may only be modified on the FX application thread.
                    Platform.runLater(() -> imageView.setImage(SwingFXUtils.toFXImage(bufferedImage, null)));
                }
            }
        }
    } catch (FrameGrabber.Exception e) {
        Logger.error(e.getMessage(), e);
    }
}
```

1.4 Getting a device screenshot: http://localhost:53516/screenshot.jpg

```java
/**
 * Takes a screenshot by loading the agent's /screenshot.jpg endpoint.
 *
 * @return Image
 */
public Image screenshot() {
    String imageSource = String.format("%s:%s/screenshot.jpg", Config.HTTP_URL, Config.PORT_53516);
    return new Image(imageSource);
}
```
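javafx.scene.image.Image loads the URL by itself. If a screenshot is needed outside JavaFX, for example to save it into a test report, plain JDK classes can fetch the same endpoint. A sketch under that assumption; ScreenshotFetcher is a hypothetical name, and baseUrl stands for whatever Config.HTTP_URL plus the port resolve to (http://localhost:53516 once the port is forwarded).

```java
import java.awt.image.BufferedImage;
import java.io.File;
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import javax.imageio.ImageIO;

/** Hypothetical helper: fetches /screenshot.jpg from the agent without JavaFX. */
final class ScreenshotFetcher {
    private ScreenshotFetcher() {}

    static BufferedImage fetch(String baseUrl) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(baseUrl + "/screenshot.jpg").openConnection();
        conn.setConnectTimeout(3000);
        conn.setReadTimeout(10000);
        try (InputStream in = conn.getInputStream()) { // throws IOException on HTTP errors
            BufferedImage img = ImageIO.read(in);
            if (img == null) {
                throw new IOException("response was not a decodable image");
            }
            return img;
        } finally {
            conn.disconnect();
        }
    }

    /** Saves the current screenshot as a JPEG file. */
    static void saveTo(String baseUrl, File out) throws IOException {
        ImageIO.write(fetch(baseUrl), "jpg", out);
    }
}
```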

1.5 Getting basic device information: http://localhost:53516/device.json

```java
public static ObservableList<String> getDeviceInformations(DeviceInfo deviceInfo) {
    String deviceUrl = String.format("%s:%s/device.json", Config.HTTP_URL, Config.PORT_53516);
    String strJson = HttpUtils.httpGet(deviceUrl);
    ObservableList<String> items = FXCollections.observableArrayList();
    JSONObject jsonObject = new JSONObject(strJson);
    // Flatten each section into "key : value" lines under a banner line.
    appendSection(items, jsonObject, "android.os.Build", "android.os.Build");
    appendSection(items, jsonObject, "systemProperties", "system properties");
    appendSection(items, jsonObject, "cpuinfo", "cpuinfo");
    if (jsonObject.has("display")) {
        // Screen geometry is stored on DeviceInfo rather than listed.
        JSONObject display = jsonObject.getJSONObject("display");
        deviceInfo.setScreenWidth(display.getInt("screenWidth"));
        deviceInfo.setScreenHeight(display.getInt("screenHeight"));
        deviceInfo.setNavShow(display.getBoolean("nav"));
    }
    return items;
}

private static void appendSection(ObservableList<String> items, JSONObject root, String key, String title) {
    if (!root.has(key)) {
        return;
    }
    items.add(String.format(" ================== %s =====================", title));
    JSONObject section = root.getJSONObject(key);
    Iterator<String> keys = section.keys();
    while (keys.hasNext()) {
        String name = keys.next();
        String value = section.get(name).toString();
        items.add(String.format("%s : %s", name, value));
    }
}
```

That covers the main workflow and features of the PC client.

Note: the PC client is implemented with JavaFX.

Part 2: Agent

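The agent is the data-collection side and the source of all the test tool's data, so it has many features to implement. This article first covers screenshots and capturing the screen video stream.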

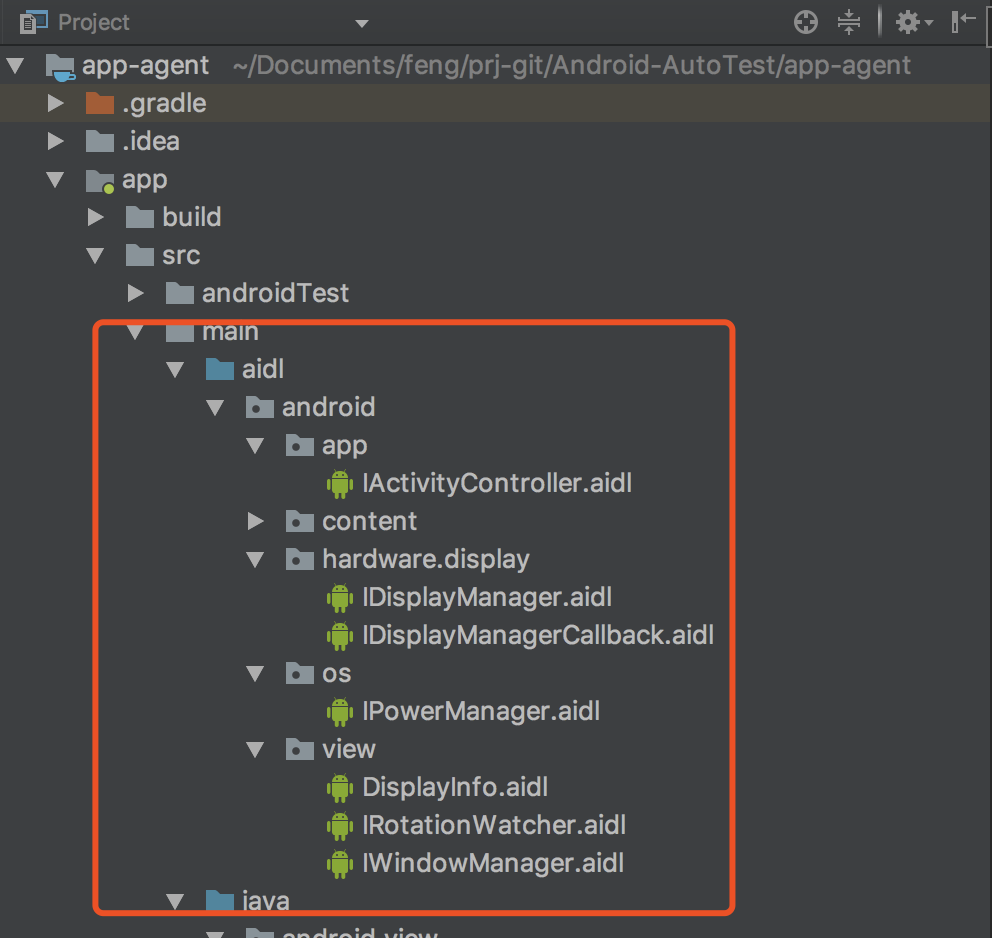

The main techniques used in the agent are AIDL and Java reflection:

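AIDL (Android Interface Definition Language) is Android's language for describing inter-process communication interfaces; with it we can define the interfaces that processes use to talk to each other. For how to use AIDL, please search online; I won't go into it here.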

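2.1 The agent Main class

The main method of Main creates and starts an AsyncHttpServer listening on port 53516, and dispatches each request by its HTTP path to a different handler, for example device information, screenshots, or the screen video stream.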

```java
public class Main {
    private static final String PROCESS_NAME = "itrip-agent";
    private static final int PORT_53516 = 53516;
    public static Looper looper;
    public static boolean isImeRunning;
    private static StdOutDevice currentDevice;
    public static double resolution = 0.0d;

    public static void main(String[] args) throws Exception {
        LogUtils.e("main started...");
        // Set the process name (argv[0]) to "itrip-agent".
        ProcessUtils.setArgV0(PROCESS_NAME);
        AsyncHttpServer httpServer = new AsyncHttpServer() {
            @Override
            protected boolean onRequest(AsyncHttpServerRequest request, AsyncHttpServerResponse response) {
                return super.onRequest(request, response);
            }
        };
        // A main() started via app_process has no Looper, so create one for this thread.
        Looper.prepare();
        looper = Looper.myLooper();
        init();
        AsyncServer server = new AsyncServer();
        // device information
        httpServer.get("/device.json", new DeviceInfoRequestCallback());
        // screenshot
        httpServer.get("/screenshot.jpg", new ScreenshotRequestCallback());
        // live screen stream (H.264)
        httpServer.get("/h264", new ScreenLiveRequestCallback(currentDevice));
        httpServer.listen(server, PORT_53516);
        LogUtils.e("main entry started...");
        Looper.loop();
    }

    private static void init() throws NoSuchMethodException {
        MotionEvent.class.getDeclaredMethod("obtain", new Class[0]).setAccessible(true);
    }

    // True when the key map reports both a BACK and a HOME key.
    public static boolean hasNavBar() {
        return KeyCharacterMap.deviceHasKey(KeyEvent.KEYCODE_BACK)
                && KeyCharacterMap.deviceHasKey(KeyEvent.KEYCODE_HOME);
    }
}
```
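The routing in main() is essentially a path-to-handler table. To develop the PC client without a phone attached, a stand-in can expose the same paths on the desktop. This is our own assumption, not part of the agent: the sketch uses the JDK's built-in com.sun.net.httpserver.HttpServer instead of AndroidAsync, serves canned JSON for /device.json, and answers 501 for routes it does not mock. MockAgent is a hypothetical name.

```java
import com.sun.net.httpserver.HttpExchange;
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;

/** Hypothetical desktop mock of the agent's HTTP routes, for testing the PC client. */
final class MockAgent {
    private final HttpServer server;

    MockAgent(int port, String deviceJson) throws IOException {
        server = HttpServer.create(new InetSocketAddress("127.0.0.1", port), 0);
        // Mirrors httpServer.get("/device.json", ...) in the agent.
        server.createContext("/device.json", exchange ->
                send(exchange, 200, "application/json;charset=utf-8", deviceJson));
        // Longest-prefix matching sends every other path (/screenshot.jpg, /h264) here.
        server.createContext("/", exchange ->
                send(exchange, 501, "text/plain", "not mocked: " + exchange.getRequestURI().getPath()));
    }

    private static void send(HttpExchange exchange, int status, String type, String body) throws IOException {
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        exchange.getResponseHeaders().set("Content-Type", type);
        exchange.getResponseHeaders().set("Cache-Control", "no-cache");
        exchange.sendResponseHeaders(status, bytes.length);
        try (OutputStream os = exchange.getResponseBody()) {
            os.write(bytes);
        }
    }

    /** Starts serving and returns the bound port (useful when constructed with port 0). */
    int start() {
        server.start();
        return server.getAddress().getPort();
    }

    void stop() {
        server.stop(0);
    }
}
```

Started on port 53516, it should be reachable by the client code above unchanged, assuming Config.HTTP_URL points at localhost.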

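2.2 Getting basic device information

Getting basic device information is straightforward: the agent simply reads it from the Android system (android.os.Build, system properties, /proc/cpuinfo, and /proc/stat).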

```java
package live.itrip.agent.callback;

import android.graphics.Point;
import android.os.Build;
import android.util.Log;

import com.koushikdutta.async.http.server.AsyncHttpServerRequest;
import com.koushikdutta.async.http.server.AsyncHttpServerResponse;
import com.koushikdutta.async.http.server.HttpServerRequestCallback;

import org.json.JSONObject;

import java.io.File;
import java.util.Map;
import java.util.Properties;
import java.util.Scanner;

import live.itrip.agent.Main;
import live.itrip.agent.common.HttpErrorCode;
import live.itrip.agent.util.LogUtils;
import live.itrip.agent.virtualdisplay.SurfaceControlVirtualDisplayFactory;

/**
 * Created on 2017/9/7.
 *
 * @author JianF
 */
public class DeviceInfoRequestCallback implements HttpServerRequestCallback {

    public DeviceInfoRequestCallback() {
    }

    @Override
    public void onRequest(AsyncHttpServerRequest request, AsyncHttpServerResponse response) {
        response.getHeaders().set("Cache-Control", "no-cache");
        LogUtils.i("device info request received");
        try {
            response.setContentType("application/json;charset=utf-8");
            JSONObject object = new JSONObject();
            /*
             * Build.BOARD            board
             * Build.BOOTLOADER       bootloader version
             * Build.BRAND            brand (vendor of the customized Android build)
             * Build.TIME             build time
             * Build.VERSION.SDK_INT  API level
             * Build.MODEL            model
             * Build.SUPPORTED_ABIS   supported CPU ABIs (instruction sets)
             * Build.DEVICE           device name
             * Build.ID               build ID (changelist number or label)
             */
            JSONObject build = new JSONObject();
            build.put("PRODUCT", Build.PRODUCT);
            build.put("CPU_ABI", Build.CPU_ABI);
            build.put("TAGS", Build.TAGS);
            build.put("VERSION_CODES.BASE", Build.VERSION_CODES.BASE);
            build.put("MODEL", Build.MODEL);
            build.put("VERSION.SDK", Build.VERSION.SDK_INT);
            build.put("VERSION.RELEASE", Build.VERSION.RELEASE);
            build.put("DEVICE", Build.DEVICE);
            build.put("DISPLAY", Build.DISPLAY);
            build.put("BRAND", Build.BRAND);
            build.put("BOARD", Build.BOARD);
            build.put("FINGERPRINT", Build.FINGERPRINT);
            build.put("ID", Build.ID);
            build.put("MANUFACTURER", Build.MANUFACTURER);
            build.put("USER", Build.USER);
            build.put("HARDWARE", Build.HARDWARE);
            object.put("android.os.Build", build);

            // Java system properties of the agent process
            JSONObject objProperties = new JSONObject();
            Properties properties = System.getProperties();
            for (Map.Entry<Object, Object> entry : properties.entrySet()) {
                objProperties.put(entry.getKey().toString(), entry.getValue());
            }
            object.put("systemProperties", objProperties);

            object.put("cpuinfo", getCpuInfo());
            object.put("stat", getStatInfo());

            // Display size and navigation-key info, read by the PC client
            Point displaySize = SurfaceControlVirtualDisplayFactory.getCurrentDisplaySize();
            JSONObject jsonDisplay = new JSONObject();
            jsonDisplay.put("type", "displaySize");
            jsonDisplay.put("screenWidth", displaySize.x);
            jsonDisplay.put("screenHeight", displaySize.y);
            jsonDisplay.put("nav", Main.hasNavBar());
            object.put("display", jsonDisplay);

            response.send(object.toString());
        } catch (Exception e) {
            response.code(HttpErrorCode.ERROR_CODE_500);
            response.send(e.toString());
        }
    }

    /** Parses /proc/stat, whose lines are whitespace-separated ("cpu  2255 34 2290 ..."). */
    private JSONObject getStatInfo() {
        JSONObject object = new JSONObject();
        try (Scanner s = new Scanner(new File("/proc/stat"))) {
            while (s.hasNextLine()) {
                String[] vals = s.nextLine().trim().split("\\s+", 2);
                if (vals.length > 1) {
                    object.put(vals[0], vals[1]);
                }
            }
        } catch (Exception e) {
            Log.e("getStatInfo", Log.getStackTraceString(e));
        }
        return object;
    }

    /**
     * Parses /proc/cpuinfo ("key\t: value" lines). Keys repeat once per core,
     * so later duplicates get a numeric suffix ("processor 1", "processor 2", ...).
     */
    private JSONObject getCpuInfo() {
        JSONObject object = new JSONObject();
        try (Scanner s = new Scanner(new File("/proc/cpuinfo"))) {
            while (s.hasNextLine()) {
                String[] vals = s.nextLine().split(": ", 2);
                if (vals.length > 1) {
                    String key = vals[0].trim();
                    String uniqueKey = key;
                    int suffix = 1;
                    while (object.has(uniqueKey)) {
                        uniqueKey = key + " " + suffix++;
                    }
                    object.put(uniqueKey, vals[1].trim());
                }
            }
        } catch (Exception e) {
            Log.e("getCpuInfo", Log.getStackTraceString(e));
        }
        return object;
    }
}
```
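The two /proc files use different layouts, which is easy to trip over: /proc/cpuinfo has key : value lines that repeat for every core, while /proc/stat lines are whitespace-separated with the name first and no colon. A JDK-only sketch of both parsers that can be unit-tested on the desktop against sample text; ProcParsers is a hypothetical name and the sample values in the test are illustrative.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical, desktop-testable versions of the agent's /proc parsers. */
final class ProcParsers {
    private ProcParsers() {}

    /** /proc/cpuinfo: "key\t: value"; repeated keys get " 1", " 2", ... suffixes. */
    static Map<String, String> parseCpuInfo(String text) {
        Map<String, String> out = new LinkedHashMap<>();
        for (String line : text.split("\\R")) {
            int colon = line.indexOf(':');
            if (colon < 0) {
                continue; // blank separator lines between cores
            }
            String key = line.substring(0, colon).trim();
            String value = line.substring(colon + 1).trim();
            String unique = key;
            for (int n = 1; out.containsKey(unique); n++) {
                unique = key + " " + n;
            }
            out.put(unique, value);
        }
        return out;
    }

    /** /proc/stat: "name v1 v2 ...", separated by whitespace. */
    static Map<String, String> parseStat(String text) {
        Map<String, String> out = new LinkedHashMap<>();
        for (String line : text.split("\\R")) {
            String[] parts = line.trim().split("\\s+", 2);
            if (parts.length == 2) {
                out.put(parts[0], parts[1]);
            }
        }
        return out;
    }
}
```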

2.3 Taking a screenshot

To take a screenshot, first obtain the device's WindowManager service:

```java
public static synchronized IWindowManager getWindowManager() throws ClassNotFoundException,
        NoSuchMethodException, InvocationTargetException, IllegalAccessException {
    if (windowManager == null) {
        // ServiceManager is a hidden class: look up getService(String) via reflection
        // and wrap the returned binder with our IWindowManager AIDL stub.
        Method getServiceMethod = Class.forName(ANDROID_OS_SERVICE_MANAGER)
                .getDeclaredMethod(STRING_GET_SERVICE, String.class);
        windowManager = IWindowManager.Stub.asInterface(
                (IBinder) getServiceMethod.invoke(null, Context.WINDOW_SERVICE));
    }
    return windowManager;
}
```

IWindowManager is the interface from the AIDL file we defined ourselves.

With the WindowManager service in hand, the next step is to get the screen size:

```java
public static Point getCurrentDisplaySize(boolean rotate) {
    try {
        Point displaySize = new Point();
        IWindowManager wm = InternalApi.getWindowManager();
        // Natural (unrotated) size of display 0.
        wm.getInitialDisplaySize(0, displaySize);
        int rotation = wm.getRotation();
        // Swap width and height only when asked to and the screen is sideways
        // (1 = Surface.ROTATION_90, 3 = Surface.ROTATION_270).
        if (rotate && (rotation == 1 || rotation == 3)) {
            int swap = displaySize.x;
            displaySize.x = displaySize.y;
            displaySize.y = swap;
        }
        return displaySize;
    } catch (Exception e) {
        throw new AssertionError(e);
    }
}
```
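The swap rule on its own: getInitialDisplaySize reports the natural size, and width and height only trade places when the screen is at 90 or 270 degrees. A pure-Java sketch of that rule for testing on the desktop, with rotation values following android.view.Surface (0 = ROTATION_0 through 3 = ROTATION_270); DisplaySizes is a hypothetical name.

```java
/** Hypothetical pure-Java version of the width/height swap in getCurrentDisplaySize(true). */
final class DisplaySizes {
    static final int ROTATION_0 = 0, ROTATION_90 = 1, ROTATION_180 = 2, ROTATION_270 = 3;

    private DisplaySizes() {}

    /** Returns {width, height} of the screen as currently oriented. */
    static int[] orientedSize(int naturalWidth, int naturalHeight, int rotation) {
        boolean sideways = rotation == ROTATION_90 || rotation == ROTATION_270;
        return sideways ? new int[]{naturalHeight, naturalWidth}
                        : new int[]{naturalWidth, naturalHeight};
    }
}
```

For a 1080 x 1920 phone, ROTATION_90 and ROTATION_270 give 1920 x 1080, while ROTATION_180 keeps 1080 x 1920.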

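Then, through reflection, call the screenshot method of the system class android.view.Surface (Android 4.2 and earlier) or android.view.SurfaceControl to capture the screen: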

```java
public static synchronized Method getMethodScreenshot() throws ClassNotFoundException, NoSuchMethodException {
    // The hidden screenshot(int, int) method lives on Surface up to Android 4.2 (API 17)
    // and on SurfaceControl from Android 4.3 (API 18).
    String surfaceClassName;
    if (Build.VERSION.SDK_INT <= Build.VERSION_CODES.JELLY_BEAN_MR1) {
        surfaceClassName = "android.view.Surface";
    } else {
        surfaceClassName = "android.view.SurfaceControl";
    }
    Class<?> clazzSurface = Class.forName(surfaceClassName);
    return clazzSurface.getDeclaredMethod("screenshot", Integer.TYPE, Integer.TYPE);
}
```

Once we have the screenshot Bitmap, we rotate it to match the current screen orientation and push it to the PC client:

```java
public static Bitmap screenshot(IWindowManager wm) throws Exception {
    // Capture at the natural (unrotated) display size.
    Point size = SurfaceControlVirtualDisplayFactory.getCurrentDisplaySize(false);
    Bitmap b = (Bitmap) InternalApi.getMethodScreenshot().invoke(null, size.x, size.y);
    int rotation = wm.getRotation();
    if (rotation == 0) {
        return b;
    }
    // ROTATION_90 / 180 / 270 -> rotate the capture by -90 / -180 / -270 degrees.
    Matrix m = new Matrix();
    m.postRotate(-90.0f * rotation);
    return Bitmap.createBitmap(b, 0, 0, size.x, size.y, m, false);
}
```
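The Matrix step turns a capture taken in the natural orientation into what the user sees, rotating by -90 degrees (counterclockwise on screen) per rotation step. If raw captures are ever post-processed on the PC side, the same transform can be reproduced with java.awt.image.BufferedImage. A minimal sketch assuming rotation uses the Surface.ROTATION_* values 0 to 3; ImageRotation is a hypothetical name, and it copies pixels directly instead of using AffineTransform so that the pixel mapping is explicit.

```java
import java.awt.image.BufferedImage;

/** Hypothetical desktop equivalent of Matrix.postRotate(-90f * rotation) on a capture. */
final class ImageRotation {
    private ImageRotation() {}

    /** One counterclockwise quarter turn: src (x, y) lands at dst (y, w - 1 - x). */
    static BufferedImage rotateCcw90(BufferedImage src) {
        int w = src.getWidth(), h = src.getHeight();
        BufferedImage dst = new BufferedImage(h, w, BufferedImage.TYPE_INT_ARGB);
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                dst.setRGB(y, w - 1 - x, src.getRGB(x, y));
            }
        }
        return dst;
    }

    /** Applies `rotation` counterclockwise quarter turns; rotation 0 returns the input as-is. */
    static BufferedImage rotateByScreenRotation(BufferedImage capture, int rotation) {
        BufferedImage out = capture;
        for (int i = 0; i < (rotation & 3); i++) {
            out = rotateCcw90(out);
        }
        return out;
    }
}
```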

That is the screenshot flow inside the agent. There are of course other approaches, such as the screencap command in adb shell; use whichever suits your needs best.

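2.4 Getting the screen stream

To keep latency low, pushing a live screen stream from the agent is considerably more complex than taking screenshots. If screen latency does not matter, or the requirement is loose, you can simply take and push screenshots continuously; that approach is not covered here. Below is a brief look at how the agent captures and pushes the data.

As Main shows, a request to /h264 is dispatched to the ScreenLiveRequestCallback class: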

```java
public class ScreenLiveRequestCallback implements HttpServerRequestCallback {
    private StdOutDevice currentDevice;

    public ScreenLiveRequestCallback(StdOutDevice currentDevice) {
        this.currentDevice = currentDevice;
    }

    @Override
    public void onRequest(AsyncHttpServerRequest request, AsyncHttpServerResponse response) {
        LogUtils.i("h264 new http connection accepted.");
        try {
            // Wake the device screen.
            WebSocketInputHandler.turnScreenOn(InternalApi.getInputManager(),
                    InternalApi.getInjectInputEventMethod(),
                    InternalApi.getPowerManager());
        } catch (Exception e) {
            e.printStackTrace();
        }
        response.getHeaders().set("Access-Control-Allow-Origin", "*");
        response.getHeaders().set("Connection", "close");
        try {
            // The response sink is handed to the encoder, which streams frames into it.
            final StdOutDevice device = writer(new BufferedDataSink(response), InternalApi.getWindowManager());
            // Stop encoding when the client disconnects.
            response.setClosedCallback(new CompletedCallback() {
                @Override
                public void onCompleted(Exception ex) {
                    LogUtils.i("Connection terminated.");
                    if (ex != null) {
                        LogUtils.e("Error", ex);
                    }
                    device.stop();
                }
            });
        } catch (ClassNotFoundException | NoSuchMethodException | InvocationTargetException | IllegalAccessException e) {
            e.printStackTrace();
        }
    }

    private StdOutDevice writer(BufferedDataSink sink, IWindowManager wm) {
        // One stream at a time: stop any previous encoder first.
        if (this.currentDevice != null) {
            this.currentDevice.stop();
            this.currentDevice = null;
        }
        Point encodeSize = getEncodeSize();
        SurfaceControlVirtualDisplayFactory factory = new SurfaceControlVirtualDisplayFactory();
        StdOutDevice device = new StdOutDevice(encodeSize.x, encodeSize.y, sink);
        if (Main.resolution != 0.0d) {
            // device.setUseEncodingConstraints(false);
        }
        this.currentDevice = device;
        LogUtils.i("registering virtual display");
        if (device.supportsSurface()) {
            // API 19+: mirror the screen into the encoder's input Surface via a virtual display.
            device.registerVirtualDisplay(factory, 0);
        } else {
            // Legacy path (below API 19): EncoderFeeder drives the codec and follows rotation changes.
            LogUtils.i("Using legacy path");
            device.createDisplaySurface();
            final EncoderFeeder feeder = new EncoderFeeder(device.getMediaCodec(),
                    device.getEncodingDimensions().x, device.getEncodingDimensions().y,
                    device.getColorFormat());
            IRotationWatcher watcher = new IRotationWatcher.Stub() {
                @Override
                public void onRotationChanged(int rotation) throws RemoteException {
                    feeder.setRotation(rotation);
                }
            };
            try {
                int displayId = 1;
                wm.watchRotation(watcher, displayId);
            } catch (RemoteException e) {
                LogUtils.e(e.getMessage());
            }
            feeder.feed();
        }
        LogUtils.i("virtual display registered");
        return device;
    }

    /**
     * Default (Main.resolution == 0): halve the display size once if either side is at
     * least 1280 px, then keep halving while either side exceeds 1280 px.
     * Otherwise scale both sides by Main.resolution.
     */
    private Point getEncodeSize() {
        Point encodeSize = SurfaceControlVirtualDisplayFactory.getCurrentDisplaySize();
        if (Main.resolution == 0.0d) {
            if (encodeSize.x >= 1280 || encodeSize.y >= 1280) {
                encodeSize.x /= 2;
                encodeSize.y /= 2;
            }
            while (encodeSize.x > 1280 || encodeSize.y > 1280) {
                encodeSize.x /= 2;
                encodeSize.y /= 2;
            }
        } else {
            encodeSize.x = (int) (encodeSize.x * Main.resolution);
            encodeSize.y = (int) (encodeSize.y * Main.resolution);
        }
        return encodeSize;
    }
}
```
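To make the default sizing rule in getEncodeSize() concrete: with Main.resolution at 0, the display size is halved once if either side reaches 1280 px, then halved again while either side still exceeds 1280 px. A standalone copy of that branch for experimenting on the desktop (EncodeSize is a hypothetical name), with a few common phone resolutions in the note below.

```java
/** Standalone copy of the default branch of getEncodeSize() (Main.resolution == 0). */
final class EncodeSize {
    private EncodeSize() {}

    static int[] defaultEncodeSize(int width, int height) {
        int x = width, y = height;
        // First halving uses >=, so a side of exactly 1280 px is also halved.
        if (x >= 1280 || y >= 1280) {
            x /= 2;
            y /= 2;
        }
        while (x > 1280 || y > 1280) {
            x /= 2;
            y /= 2;
        }
        return new int[]{x, y};
    }
}
```

With this rule a 1080 x 1920 screen is encoded at 540 x 960, a 1440 x 2560 screen at 720 x 1280, a 720 x 1280 screen at 360 x 640 (because of the >= in the first check), and a 480 x 800 screen is left unchanged.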

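The writer method takes a BufferedDataSink parameter, which pushes data to the PC client. It also creates a StdOutDevice object and passes the BufferedDataSink to it, so that encoded output is pushed to the client in real time.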

Now let's look at the StdOutDevice class:

```java
public class StdOutDevice extends EncoderDevice {
    private int bitrate = 500000;
    private BufferedDataSink sink;

    /** Drains encoded H.264 output and writes it, framed, to the HTTP response sink. */
    class Writer extends EncoderRunnable {
        Writer(MediaCodec venc) {
            super(venc);
        }

        @Override
        protected void encode() {
            Log.i(StdOutDevice.this.LOGTAG, "Writer started.");
            ByteBuffer[] encouts = null;
            boolean doneCoding = false;
            boolean hasReceivedBuffer = false;
            while (!doneCoding) {
                BufferInfo info = new BufferInfo();
                // Block (timeout -1) until the encoder has output or a status change.
                int bufIndex = StdOutDevice.this.encoder.dequeueOutputBuffer(info, -1);
                if (bufIndex >= 0) {
                    if (!hasReceivedBuffer) {
                        hasReceivedBuffer = true;
                        Log.i(StdOutDevice.this.LOGTAG, "Got first buffer");
                    }
                    if (encouts == null) {
                        encouts = StdOutDevice.this.encoder.getOutputBuffers();
                    }
                    ByteBuffer b = encouts[bufIndex];
                    // Packet layout, little-endian: [int32 length = 8 + payload][int64 ptsUs][payload]
                    ByteBuffer copy = ByteBufferList.obtain(info.size + 12).order(ByteOrder.LITTLE_ENDIAN);
                    copy.putInt(info.size + 8);
                    copy.putLong(info.presentationTimeUs);
                    b.position(info.offset);
                    b.limit(info.offset + info.size);
                    copy.put(b);
                    copy.flip();
                    b.clear();
                    StdOutDevice.this.sink.write(new ByteBufferList(copy));
                    int rem = StdOutDevice.this.sink.remaining();
                    if (rem != 0) {
                        Log.i(StdOutDevice.this.LOGTAG, "Buffered: " + rem);
                    }
                    StdOutDevice.this.encoder.releaseOutputBuffer(bufIndex, false);
                    doneCoding = (info.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0;
                } else if (bufIndex == MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED) {
                    encouts = null;
                    Log.i(StdOutDevice.this.LOGTAG, "MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED");
                } else if (bufIndex == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
                    Log.i(StdOutDevice.this.LOGTAG, "MediaCodec.INFO_OUTPUT_FORMAT_CHANGED");
                    MediaFormat outputFormat = StdOutDevice.this.encoder.getOutputFormat();
                    Log.i(StdOutDevice.this.LOGTAG, "output width: " + outputFormat.getInteger(MediaFormat.KEY_WIDTH));
                    Log.i(StdOutDevice.this.LOGTAG, "output height: " + outputFormat.getInteger(MediaFormat.KEY_HEIGHT));
                }
            }
            StdOutDevice.this.sink.end();
            Log.i(StdOutDevice.this.LOGTAG, "Writer done");
        }
    }

    public StdOutDevice(int width, int height, BufferedDataSink sink) {
        super("stdout", width, height);
        this.sink = sink;
    }

    public int getBitrate() {
        return this.bitrate;
    }

    @TargetApi(19)
    public void setBitrate(int bitrate) {
        Log.i(this.LOGTAG, "Bitrate: " + bitrate);
        this.bitrate = bitrate;
        Bundle bundle = new Bundle();
        bundle.putInt(MediaCodec.PARAMETER_KEY_VIDEO_BITRATE, bitrate); // "video-bitrate"
        this.encoder.setParameters(bundle);
    }

    @TargetApi(19)
    public void requestSyncFrame() {
        Bundle bundle = new Bundle();
        bundle.putInt(MediaCodec.PARAMETER_KEY_REQUEST_SYNC_FRAME, 0); // "request-sync"
        this.encoder.setParameters(bundle);
    }

    @Override
    protected EncoderRunnable onSurfaceCreated(MediaCodec venc) {
        return new Writer(venc);
    }
}
```

StdOutDevice extends EncoderDevice and implements the encode method.
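Note that Writer.encode() does not send a bare H.264 stream: each encoder output buffer is prefixed with a 12-byte little-endian header, a 4-byte length (which counts the 8-byte timestamp plus the payload) followed by the 8-byte presentation time in microseconds. A client that wants the raw encoded packets has to strip this framing. A sketch of such a reader using only java.io and java.nio, assuming the framing exactly as written by Writer.encode(); H264PacketReader is a hypothetical name.

```java
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical reader for the agent's [int32 len][int64 ptsUs][payload] packets (little-endian). */
final class H264PacketReader {
    static final class Packet {
        final long presentationTimeUs;
        final byte[] data;

        Packet(long presentationTimeUs, byte[] data) {
            this.presentationTimeUs = presentationTimeUs;
            this.data = data;
        }
    }

    private final DataInputStream in;

    H264PacketReader(InputStream in) {
        this.in = new DataInputStream(in);
    }

    /** Returns the next packet, or null at a clean end of stream. */
    Packet next() throws IOException {
        int first = in.read();
        if (first < 0) {
            return null; // no more packets
        }
        byte[] lenBytes = new byte[4];
        lenBytes[0] = (byte) first;
        in.readFully(lenBytes, 1, 3); // EOFException if the header is truncated
        int length = ByteBuffer.wrap(lenBytes).order(ByteOrder.LITTLE_ENDIAN).getInt();
        if (length < 8) {
            throw new IOException("corrupt packet length " + length);
        }
        byte[] rest = new byte[length]; // 8-byte timestamp + payload
        in.readFully(rest);
        ByteBuffer buf = ByteBuffer.wrap(rest).order(ByteOrder.LITTLE_ENDIAN);
        long ptsUs = buf.getLong();
        byte[] payload = new byte[length - 8];
        buf.get(payload);
        return new Packet(ptsUs, payload);
    }
}
```

Wrapping the /h264 response stream in this reader yields one Packet per encoder output buffer, with its timestamp available for latency measurements.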

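EncoderDevice is responsible for:

- Reading the device's encoder capabilities from /system/etc/media_profiles.xml

- Creating the Surface

- Creating the MediaCodec encoder: MediaCodec.createEncoderByType("video/avc");

- In StdOutDevice's encode() method, the encoded video data is read from encoder.getOutputBuffers() and pushed to the PC client.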
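The media_profiles.xml step can be tried out on the desktop with the JDK's SAX parser, since android.sax is not available there. The sketch below assumes the element layout shown in the comment of the VideoEncoderCap class (a VideoEncoderCap element with name="h264" and maxFrameWidth, maxFrameHeight, maxBitRate, maxFrameRate attributes); EncoderCaps and H264Cap are hypothetical names.

```java
import java.io.IOException;
import java.io.StringReader;
import javax.xml.parsers.ParserConfigurationException;
import javax.xml.parsers.SAXParserFactory;
import org.xml.sax.Attributes;
import org.xml.sax.InputSource;
import org.xml.sax.SAXException;
import org.xml.sax.helpers.DefaultHandler;

/** Hypothetical desktop parser for the h264 <VideoEncoderCap> entry of media_profiles.xml. */
final class EncoderCaps {
    static final class H264Cap {
        int maxFrameWidth, maxFrameHeight, maxBitRate, maxFrameRate;
    }

    private EncoderCaps() {}

    /** Returns the h264 encoder limits, or null if the XML declares none. */
    static H264Cap parseH264(String xml) throws IOException, SAXException, ParserConfigurationException {
        final H264Cap[] found = new H264Cap[1];
        DefaultHandler handler = new DefaultHandler() {
            @Override
            public void startElement(String uri, String localName, String qName, Attributes a) {
                // Non-namespace-aware parsing: the element name arrives in qName.
                if ("VideoEncoderCap".equals(qName) && "h264".equals(a.getValue("name"))) {
                    H264Cap cap = new H264Cap();
                    cap.maxFrameWidth = Integer.parseInt(a.getValue("maxFrameWidth"));
                    cap.maxFrameHeight = Integer.parseInt(a.getValue("maxFrameHeight"));
                    cap.maxBitRate = Integer.parseInt(a.getValue("maxBitRate"));
                    cap.maxFrameRate = Integer.parseInt(a.getValue("maxFrameRate"));
                    found[0] = cap;
                }
            }
        };
        SAXParserFactory.newInstance().newSAXParser().parse(new InputSource(new StringReader(xml)), handler);
        return found[0];
    }
}
```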

The EncoderDevice class:

```java
public abstract class EncoderDevice {
    private static final String MIME_AVC = "video/avc";

    public final String LOGTAG = getClass().getSimpleName();
    private int colorFormat;
    private Point encSize;
    private int height;
    public String name;
    private boolean useSurface = true;
    private VirtualDisplayFactory vdf;
    public MediaCodec encoder;
    private VirtualDisplay virtualDisplay;
    private int width;

    /** Runs encode() on the encoder thread, then releases the codec. */
    protected abstract class EncoderRunnable implements Runnable {
        MediaCodec venc;

        protected abstract void encode() throws Exception;

        public EncoderRunnable(MediaCodec venc) {
            this.venc = venc;
        }

        protected void cleanup() {
            EncoderDevice.this.destroyDisplaySurface(this.venc);
            this.venc = null;
        }

        @Override
        public final void run() {
            try {
                encode();
            } catch (Exception e) {
                Log.e(EncoderDevice.this.LOGTAG, "Encoder error", e);
            }
            cleanup();
            Log.i(EncoderDevice.this.LOGTAG, "=======ENCODING COMPLETE=======");
        }
    }

    protected abstract EncoderRunnable onSurfaceCreated(MediaCodec mediaCodec);

    /** Creates the encoder and mirrors the screen into its input Surface through a virtual display. */
    public void registerVirtualDisplay(VirtualDisplayFactory vdf, int densityDpi) {
        assert this.virtualDisplay == null;
        Surface surface = createDisplaySurface();
        if (surface == null) {
            Log.e(this.LOGTAG, "Unable to create surface");
            return;
        }
        Log.i(this.LOGTAG, "Created surface");
        this.vdf = vdf;
        this.virtualDisplay = vdf.createVirtualDisplay(this.name, this.width, this.height, densityDpi, 3, surface, null);
    }

    public EncoderDevice(String name, int width, int height) {
        this.width = width;
        this.height = height;
        this.name = name;
    }

    public void stop() {
        signalEnd();
        this.encoder = null;
        releaseVirtualDisplay();
    }

    private void releaseVirtualDisplay() {
        if (this.virtualDisplay != null) {
            this.virtualDisplay.release();
            this.virtualDisplay = null;
        }
        if (this.vdf != null) {
            this.vdf.release();
            this.vdf = null;
        }
    }

    private void destroyDisplaySurface(MediaCodec venc) {
        if (venc == null) {
            return;
        }
        try {
            venc.stop();
            venc.release();
        } catch (Exception e) {
            LogUtils.e(e.getMessage(), e);
        }
        // Only tear down the display if this codec is still the active one.
        if (this.encoder == venc) {
            this.encoder = null;
            releaseVirtualDisplay();
        }
    }

    /** Signals end of input (Surface-input mode); the encoder then emits an END_OF_STREAM buffer. */
    private void signalEnd() {
        if (this.encoder != null) {
            try {
                this.encoder.signalEndOfInputStream();
            } catch (Exception e) {
                LogUtils.e(e.getMessage(), e);
            }
        }
    }

    private void setSurfaceFormat(MediaFormat video) {
        // COLOR_FormatSurface (2130708361 = 0x7F000789): input frames arrive through a Surface.
        this.colorFormat = CodecCapabilities.COLOR_FormatSurface;
        video.setInteger(MediaFormat.KEY_COLOR_FORMAT, CodecCapabilities.COLOR_FormatSurface);
    }

    private int selectColorFormat(MediaCodecInfo codecInfo, String mimeType) throws Exception {
        CodecCapabilities capabilities = codecInfo.getCapabilitiesForType(mimeType);
        Log.i(this.LOGTAG, "Available color formats: " + capabilities.colorFormats.length);
        for (int colorFormat : capabilities.colorFormats) {
            if (isRecognizedFormat(colorFormat)) {
                Log.i(this.LOGTAG, "Using: " + colorFormat);
                return colorFormat;
            }
            Log.i(this.LOGTAG, "Not using: " + colorFormat);
        }
        throw new Exception("Unable to find suitable color format");
    }

    private static boolean isRecognizedFormat(int colorFormat) {
        switch (colorFormat) {
            case CodecCapabilities.COLOR_FormatYUV420Planar:               // 19
            case CodecCapabilities.COLOR_FormatYUV420PackedPlanar:         // 20
            case CodecCapabilities.COLOR_FormatYUV420SemiPlanar:           // 21
            case CodecCapabilities.COLOR_FormatYUV420PackedSemiPlanar:     // 39
            case CodecCapabilities.COLOR_TI_FormatYUV420PackedSemiPlanar:  // 2130706688
                return true;
            default:
                return false;
        }
    }

    public void useSurface(boolean useSurface) {
        this.useSurface = useSurface;
    }

    public boolean supportsSurface() {
        return VERSION.SDK_INT >= 19 && this.useSurface;
    }

    public MediaCodec getMediaCodec() {
        return this.encoder;
    }

    public Point getEncodingDimensions() {
        return this.encSize;
    }

    public int getColorFormat() {
        return this.colorFormat;
    }

    public Surface createDisplaySurface() {
        signalEnd();
        this.encoder = null;
        // Remember the first AVC encoder and log the capabilities of every AVC encoder.
        MediaCodecInfo codecInfo = null;
        try {
            int numCodecs = MediaCodecList.getCodecCount();
            for (int i = 0; i < numCodecs; i++) {
                MediaCodecInfo found = MediaCodecList.getCodecInfoAt(i);
                if (!found.isEncoder()) {
                    continue;
                }
                for (String type : found.getSupportedTypes()) {
                    if (!type.equalsIgnoreCase(MIME_AVC)) {
                        continue;
                    }
                    if (codecInfo == null) {
                        codecInfo = found;
                    }
                    Log.i(this.LOGTAG, found.getName());
                    CodecCapabilities caps = found.getCapabilitiesForType(MIME_AVC);
                    for (int colorFormat : caps.colorFormats) {
                        Log.i(this.LOGTAG, "colorFormat: " + colorFormat);
                    }
                    for (CodecProfileLevel profileLevel : caps.profileLevels) {
                        Log.i(this.LOGTAG, "profile/level: " + profileLevel.profile + "/" + profileLevel.level);
                    }
                }
            }
        } catch (Exception e) {
            LogUtils.e(e.getMessage(), e);
        }

        try {
            // Read the H.264 encoder limits declared in media_profiles.xml.
            String xml = StreamUtility.readFile("/system/etc/media_profiles.xml");
            RootElement root = new RootElement("MediaSettings");
            Element encoderElement = root.requireChild("VideoEncoderCap");
            ArrayList<VideoEncoderCap> encoders = new ArrayList<>();
            encoderElement.setElementListener(new XmlListener(encoders));
            Parsers.parse(new StringReader(xml), root.getContentHandler());
            if (encoders.size() != 1) {
                throw new Exception("Expected exactly one h264 VideoEncoderCap, found " + encoders.size());
            }
            VideoEncoderCap videoEncoderCap = encoders.get(0);
            int bitrate = videoEncoderCap.maxBitRate;
            int maxFrameRate = videoEncoderCap.maxFrameRate;
            fitToEncoderLimits(videoEncoderCap.maxFrameWidth, videoEncoderCap.maxFrameHeight);

            Log.i(this.LOGTAG, "Width: " + this.width + " Height: " + this.height);
            this.encSize = new Point(this.width, this.height);
            MediaFormat mMediaFormat = MediaFormat.createVideoFormat(MIME_AVC, this.width, this.height);
            Log.i(this.LOGTAG, "Bitrate: " + bitrate);
            mMediaFormat.setInteger(MediaFormat.KEY_BIT_RATE, bitrate);
            mMediaFormat.setInteger(MediaFormat.KEY_FRAME_RATE, maxFrameRate);
            Log.i(this.LOGTAG, "Frame rate: " + maxFrameRate);
            // Surface-input mode: repeat the previous frame if no new one arrives within one frame interval.
            mMediaFormat.setLong(MediaFormat.KEY_REPEAT_PREVIOUS_FRAME_AFTER,
                    TimeUnit.MILLISECONDS.toMicros(1000 / maxFrameRate));
            // Key frame interval, in seconds.
            mMediaFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 30);
            Log.i(this.LOGTAG, "Creating encoder");
            try {
                if (supportsSurface()) {
                    setSurfaceFormat(mMediaFormat);
                } else {
                    this.colorFormat = selectColorFormat(codecInfo, MIME_AVC);
                    mMediaFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, this.colorFormat);
                }
                this.encoder = MediaCodec.createEncoderByType(MIME_AVC);
                Log.i(this.LOGTAG, "Configuring encoder");
                this.encoder.configure(mMediaFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
                // createInputSurface() must be called between configure() and start(), and only
                // applies when the format uses COLOR_FormatSurface, i.e. when supportsSurface().
                Surface surface = null;
                if (supportsSurface()) {
                    Log.i(this.LOGTAG, "Creating input surface");
                    surface = this.encoder.createInputSurface();
                }
                Log.i(this.LOGTAG, "Starting Encoder");
                this.encoder.start();
                // The subclass's EncoderRunnable drains encoder output on its own thread.
                new Thread(onSurfaceCreated(this.encoder), "Encoder").start();
                Log.i(this.LOGTAG, "Encoder ready");
                return surface;
            } catch (Exception e) {
                Log.e(this.LOGTAG, "Exception creating encoder", e);
            }
        } catch (Exception e) {
            Log.e(this.LOGTAG, "Error getting media profiles", e);
        }
        return null;
    }

    /**
     * Scales width/height down, keeping the aspect ratio, so that the longer screen side
     * fits the larger encoder limit and the shorter side fits the smaller one.
     */
    private void fitToEncoderLimits(int maxWidth, int maxHeight) {
        int max = Math.max(maxWidth, maxHeight);
        int min = Math.min(maxWidth, maxHeight);
        double ratio;
        if (this.width > this.height) {
            if (this.width > max) {
                ratio = (double) max / this.width;
                this.width = max;
                this.height = (int) (this.height * ratio);
            }
            if (this.height > min) {
                ratio = (double) min / this.height;
                this.height = min;
                this.width = (int) (this.width * ratio);
            }
        } else {
            if (this.height > max) {
                ratio = (double) max / this.height;
                this.height = max;
                this.width = (int) (this.width * ratio);
            }
            if (this.width > min) {
                ratio = (double) min / this.width;
                this.width = min;
                this.height = (int) (this.height * ratio);
            }
        }
    }

    /** Collects <VideoEncoderCap name="h264" .../> entries while parsing media_profiles.xml. */
    private class XmlListener implements ElementListener {
        final ArrayList<VideoEncoderCap> encoders;

        XmlListener(ArrayList<VideoEncoderCap> mList) {
            this.encoders = mList;
        }

        @Override
        public void end() {
        }

        @Override
        public void start(Attributes attributes) {
            if (TextUtils.equals(attributes.getValue("name"), "h264")) {
                this.encoders.add(new VideoEncoderCap(attributes));
            }
        }
    }

    private static class VideoEncoderCap {
        // <VideoEncoderCap name="h264" enabled="true"
        //     minBitRate="64000" maxBitRate="40000000"
        //     minFrameWidth="176" maxFrameWidth="1920"
        //     minFrameHeight="144" maxFrameHeight="1080"
        //     minFrameRate="15" maxFrameRate="30" />
        String name;
        boolean enabled;
        int maxBitRate;
        int maxFrameHeight;
        int maxFrameRate;
        int maxFrameWidth;

        VideoEncoderCap(Attributes attributes) {
            this.maxFrameWidth = Integer.parseInt(attributes.getValue("maxFrameWidth"));
            this.maxFrameHeight = Integer.parseInt(attributes.getValue("maxFrameHeight"));
            this.maxBitRate = Integer.parseInt(attributes.getValue("maxBitRate"));
            this.maxFrameRate = Integer.parseInt(attributes.getValue("maxFrameRate"));
            this.name = attributes.getValue("name");
            this.enabled = Boolean.parseBoolean(attributes.getValue("enabled"));
        }
    }
}
```
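The width/height clamping in createDisplaySurface() keeps the aspect ratio while fitting the longer side to the larger encoder limit and the shorter side to the smaller one. A standalone copy of that logic for experimenting on the desktop (FitToEncoder is a hypothetical name), checked against the 1920 x 1080 h264 limits from the media_profiles.xml example in the VideoEncoderCap comment.

```java
/** Standalone copy of the aspect-preserving clamp applied in EncoderDevice.createDisplaySurface(). */
final class FitToEncoder {
    private FitToEncoder() {}

    static int[] fit(int width, int height, int maxFrameWidth, int maxFrameHeight) {
        int max = Math.max(maxFrameWidth, maxFrameHeight);
        int min = Math.min(maxFrameWidth, maxFrameHeight);
        if (width > height) {
            // Landscape: clamp width to max, then height to min, scaling the other side along.
            if (width > max) { height = (int) (height * ((double) max / width)); width = max; }
            if (height > min) { width = (int) (width * ((double) min / height)); height = min; }
        } else {
            // Portrait or square: clamp height to max, then width to min.
            if (height > max) { width = (int) (width * ((double) max / height)); height = max; }
            if (width > min) { height = (int) (height * ((double) min / width)); width = min; }
        }
        return new int[]{width, height};
    }
}
```

With those limits, a 1440 x 2560 portrait size becomes 1080 x 1920, 2560 x 1440 becomes 1920 x 1080, 1200 x 1600 becomes 1080 x 1440, and 720 x 1280 is left as-is.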

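At this point, the agent's device-information collection, screenshot, and screen-stream features are in place. This is just one possible implementation, offered as a reference for your spare time. The project code will be tidied up and published later.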