Usage overview

- Real-time data synchronization flow: HBase -> Solr

- Real-time data query flow: Solr -> HBase
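The query flow above can be read as a two-step lookup: Solr returns the ids of the matching documents, and each id is then used to fetch the full row from HBase. Below is a minimal sketch of the second step, assuming the Solr document id is the HBase row key. The helper name `ids_to_hbase_gets` is hypothetical; the table name is the one used in the examples later in this article.

```shell
#!/bin/bash
# Hypothetical helper: turn Solr document ids (one per line on stdin)
# into HBase shell `get` commands for the given table.
# Assumption: each Solr id is the HBase row key.
ids_to_hbase_gets() {
  local table="$1" id
  while IFS= read -r id; do
    # Skip blank lines
    [ -z "$id" ] && continue
    printf "get '%s', '%s'\n" "$table" "$id"
  done
}

# Example: ids as they might come back from a Solr query
printf '015\n016\n' | ids_to_hbase_gets HBase_Indexer_Test
```

The generated lines are plain HBase shell commands, so they could be piped into `hbase shell` or pasted into an interactive session.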

hbase-indexer: indexing HBase data into Solr

Official documentation: https://github.com/NGDATA/hbase-indexer/wiki/Tutorial

1. Server side: add the service in CDH (configured with 1 GB of memory)

[root@hadoop1jms]# ps -ef |grep hbase-index

hbase 105985 22652 0 Feb12 ? 00:18:43 /usr/java/jdk1.8.0_211-amd64/bin/java -Dproc_server -XX:OnOutOfMemoryError=kill -9 %p -Djava.net.preferIPv4Stack=true \

-Xms1048576 -Xmx1048576 -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -XX:+CMSParallelRemarkEnabled -XX:+HeapDumpOnOutOfMemoryError \

-XX:HeapDumpPath=/tmp/ks_indexer_ks_indexer-HBASE_INDEXER-144a0ada48aafa3485e33bb69df57f5c_pid105985.hprof -XX:OnOutOfMemoryError=/opt/cm-5.12.2/lib64/cmf/service/common/killparent.sh \

-Dhbaseindexer.log.dir=/opt/cloudera/parcels/CDH-5.12.0-1.cdh5.12.0.p0.29/lib/hbase-solr/bin/../logs -Dhbaseindexer.log.file=hbase-indexer.log \

-Dhbaseindexer.home.dir=/opt/cloudera/parcels/CDH-5.12.0-1.cdh5.12.0.p0.29/lib/hbase-solr/bin/.. -Dhbaseindexer.id.str= -Dhbaseindexer.root.logger=INFO,console \

-Djava.library.path=/opt/cloudera/parcels/CDH-5.12.0-1.cdh5.12.0.p0.29/lib/hadoop/lib/native \

com.ngdata.hbaseindexer.Main

[zk: localhost:2181(CONNECTED) 3] ls /ngdata/hbaseindexer

[masters, indexer, indexerprocess, indexer-trash]

2. Client side: the hbase-indexer command-line tool

[root@hadoop1 ~]$ which hbase-indexer

/usr/bin/hbase-indexer

[root@hadoop1 ~]# readlink -f /usr/bin/hbase-indexer

/opt/cloudera/parcels/CDH-5.12.0-1.cdh5.12.0.p0.29/bin/hbase-indexer

[root@hadoop1 ~]$ cat /opt/cloudera/parcels/CDH-5.12.0-1.cdh5.12.0.p0.29/bin/hbase-indexer

#!/bin/bash

# Reference: http://stackoverflow.com/questions/59895/can-a-bash-script-tell-what-directory-its-stored-in

SOURCE="${BASH_SOURCE[0]}"

BIN_DIR="$( dirname "$SOURCE" )"

while [ -h "$SOURCE" ]

do

SOURCE="$(readlink "$SOURCE")"

[[ $SOURCE != /* ]] && SOURCE="$DIR/$SOURCE"

BIN_DIR="$( cd -P "$( dirname "$SOURCE" )" && pwd )"

done

BIN_DIR="$( cd -P "$( dirname "$SOURCE" )" && pwd )"

LIB_DIR=$BIN_DIR/../lib

# Autodetect JAVA_HOME if not defined

. $LIB_DIR/bigtop-utils/bigtop-detect-javahome

exec $LIB_DIR/hbase-solr/bin/hbase-indexer "$@"

[root@hadoop1 ~]$ cat /opt/cloudera/parcels/CDH-5.12.0-1.cdh5.12.0.p0.29/lib/hbase-solr/bin/hbase-indexer

...

# figure out which class to run

if [ "$COMMAND" = "server" ] ; then

CLASS='com.ngdata.hbaseindexer.Main'

elif [ "$COMMAND" = "daemon" ] ; then

CLASS='com.ngdata.hbaseindexer.Main daemon'

elif [ "$COMMAND" = "add-indexer" ] ; then

CLASS='com.ngdata.hbaseindexer.cli.AddIndexerCli'

elif [ "$COMMAND" = "update-indexer" ] ; then

CLASS='com.ngdata.hbaseindexer.cli.UpdateIndexerCli'

elif [ "$COMMAND" = "delete-indexer" ] ; then

CLASS='com.ngdata.hbaseindexer.cli.DeleteIndexerCli'

elif [ "$COMMAND" = "list-indexers" ] ; then

CLASS='com.ngdata.hbaseindexer.cli.ListIndexersCli'

...

# Exporting classpath since passing the classpath with -cp seems to choke daemon mode

export CLASSPATH

# Exec unless HBASE_INDEXER_NOEXEC is set.

if [ "${HBASE_INDEXER_NOEXEC}" != "" ]; then

"$JAVA" -Dproc_$COMMAND {-XX:OnOutOfMemoryError="kill -9 %p" $JAVA_HEAP_MAX $HBASE_INDEXER_OPTS $CLASS "$@"

else

exec "$JAVA" -Dproc_$COMMAND -XX:OnOutOfMemoryError="kill -9 %p" $JAVA_HEAP_MAX $HBASE_INDEXER_OPTS $CLASS "$@"

fi

2.1 Client configuration file

Configuration documentation: https://github.com/NGDATA/hbase-indexer/wiki/Indexer-configuration

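- Make sure replication is enabled on the HBase table. REPLICATION_SCOPE is set per column family, so use the family you actually index (the indexer config below reads cf1).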

alter 'HBase_Indexer_Test' , { NAME => 'f', REPLICATION_SCOPE => '1' }

#1, Create the indexer configuration file

[root@test-c6 ~]# cat /var/lib/solr/hbase_2solr/indexerconf.xml

<indexer table="HBase_Indexer_Test" read-row="never">

<field name="HBase_Indexer_Test_cf1_name" value="cf1:name" type="string"/>

<field name="HBase_Indexer_Test_cf1_job" value="cf1:job" type="string"/>

</indexer>

#1.2 Quickly generate the configuration from schema.xml

[root@test-c6 ~]# cat schema.xml

<field name="id" type="string" indexed="true" stored="true" />

<field name="name" type="string" indexed="true" stored="true" />

#

[root@test-c6 ~]# sed 's@name="\(.*\)" type=.*@ name="\1" \t value="f:\1" \t type="string" \/\>@' schema.xml

<field name="id" value="f:id" type="string" />

<field name="name" value="f:name" type="string" />
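The sed one-liner above only produces the `<field>` lines. A minimal sketch of generating the complete file, wrapped in the `<indexer>` element, might look like the following. It assumes every Solr field maps to a column qualifier of the same name in a single column family and that every field is indexed as `string`. The helper name `schema_to_indexerconf` and its two arguments are hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: build an indexer config from the <field> lines of a
# Solr schema.xml read on stdin.
# Assumption: each Solr field maps to a qualifier of the same name in one
# column family, and every field is mapped with type="string".
schema_to_indexerconf() {
  local table="$1" family="$2"
  printf '<indexer table="%s" read-row="never">\n' "$table"
  # Keep only <field name=...> lines and rewrite them to name/value/type
  sed -n "s@.*<field name=\"\([^\"]*\)\" type=.*@  <field name=\"\1\" value=\"${family}:\1\" type=\"string\"/>@p"
  printf '</indexer>\n'
}

# Example with the two schema fields shown above
schema_to_indexerconf HBase_Indexer_Test f <<'EOF'
<field name="id" type="string" indexed="true" stored="true" />
<field name="name" type="string" indexed="true" stored="true" />
EOF
```

Redirecting the output to a file such as /var/lib/solr/hbase_2solr/indexerconf.xml gives a starting point that can then be edited by hand.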

2.2 Create a real-time indexer

2.2.1 SolrCloud

- Make sure replication is enabled on the HBase table

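alter 'HBase_Indexer_Test' , { NAME => 'f', REPLICATION_SCOPE => '1' }

- Note: real-time indexing only applies to data written after the indexer is created. Historical data already in the HBase table has to be added with batch indexing.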

# Add the indexer from the command line

# Short-form options

hbase-indexer add-indexer \

--name hbase_2solr \

-c /var/lib/solr/hbase_2solr/indexerconf.xml \

-cp solr.zk=test-c6:2181/solr \

-cp solr.collection=hbase_2solr \

-z test-c6:2181

# Long-form options

#hbase-indexer add-indexer \

#--name hbase_2solr \

#--indexer-conf /var/lib/solr/hbase_2solr/indexerconf.xml \

#--connection-param solr.zk=test-c6:2181/solr \

#--connection-param solr.collection=hbase_2solr \

#--zookeeper test-c6:2181

#2, Check the indexer status

[root@test-c6 ~]# hbase-indexer list-indexers --zookeeper test-c6:2181

Number of indexes: 1

hbase_2solr

+ Lifecycle state: ACTIVE

+ Incremental indexing state: SUBSCRIBE_AND_CONSUME

+ Batch indexing state: INACTIVE

+ SEP subscription ID: Indexer_collection_hbase_2solr_indexer

+ SEP subscription timestamp: 2020-11-27T15:17:04.569+08:00

+ Connection type: solr

+ Connection params:

+ solr.zk = test-c6:2181/solr

+ solr.collection = hbase_2solr

+ Indexer config:

795 bytes, use -dump to see content

+ Indexer component factory: com.ngdata.hbaseindexer.conf.DefaultIndexerComponentFactory

+ Additional batch index CLI arguments:

(none)

+ Default additional batch index CLI arguments:

(none)

+ Processes

+ 1 running processes

+ 0 failed processes
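The status listing above can also be checked from a script. The sketch below treats an indexer as healthy when its lifecycle state is ACTIVE, its incremental indexing state is SUBSCRIBE_AND_CONSUME, and it reports 0 failed processes. These criteria are an assumption drawn from the sample output, not an official definition, and the helper name `indexer_is_healthy` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: read `hbase-indexer list-indexers` output on stdin and
# return 0 only if the named indexer looks healthy.
# Assumption: the text layout matches the listing shown above, where each
# indexer block starts with its name and ends with the "failed processes" line.
indexer_is_healthy() {
  awk -v name="$1" '
    { gsub(/^[ \t]+|[ \t]+$/, "") }            # trim indentation
    $0 == name { in_block = 1; next }           # block for our indexer starts
    !in_block  { next }                         # ignore other indexers
    /Lifecycle state: ACTIVE$/ { life = 1 }
    /Incremental indexing state: SUBSCRIBE_AND_CONSUME$/ { incr = 1 }
    /failed processes$/ { failed = $2 + 0; seen = 1; exit }
    END { exit !(life && incr && seen && failed == 0) }
  '
}

# Example with an abbreviated copy of the listing above
indexer_is_healthy hbase_2solr <<'EOF' && echo "hbase_2solr is healthy"
hbase_2solr
+ Lifecycle state: ACTIVE
+ Incremental indexing state: SUBSCRIBE_AND_CONSUME
+ 1 running processes
+ 0 failed processes
EOF
```

Against a live cluster the same check would be fed from the real command, for example `hbase-indexer list-indexers --zookeeper test-c6:2181 | indexer_is_healthy hbase_2solr`.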

2.2.2 Standalone Solr

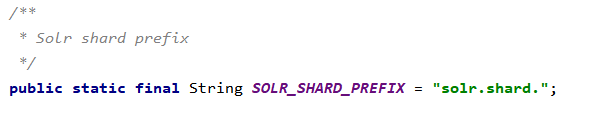

The parameter names are taken from how the source code parses them.

Add an indexer for Solr over HTTP. Make sure replication is enabled on the HBase table:

alter 'HBase_Indexer_Test' , { NAME => 'f', REPLICATION_SCOPE => '1' }

hbase-indexer add-indexer \

--name test2 \

-c /var/lib/solr/index.conf \

-cp solr.mode=classic \

-cp solr.shard.1=http://192.168.56.1:8089/solr/test

2.3 Create a batch index: hadoop jar hbase-indexer-mr-job.jar

- Make sure replication is enabled on the HBase table

alter 'HBase_Indexer_Test' , { NAME => 'f', REPLICATION_SCOPE => '1' }

# --reducers INTEGER 0 indicates that no reducers should be used, and documents

# should be sent directly from the mapper tasks to live Solr servers

# (Re)index a table with direct writes to SolrCloud

# Based on an hbase-indexer configuration file

hadoop jar /opt/cloudera/parcels/CDH/lib/hbase-solr/tools/hbase-indexer-mr-job.jar \

--hbase-indexer-file /var/lib/solr/hbase_2solr/indexerconf.xml \

--zk-host localhost:2181/solr \

--collection hbase_2solr \

--reducers 0

# (Re)index a table based on an indexer config stored in ZK

# Based on the name of an indexer already created with hbase-indexer

hadoop jar /opt/cloudera/parcels/CDH/lib/hbase-solr/tools/hbase-indexer-mr-job.jar \

--hbase-indexer-zk localhost:2181 \

--hbase-indexer-name hbase_2solr \

--reducers 0

2.4 Verify that the HBase data has been indexed by Solr

- Insert data from the HBase shell

hbase(main):023:0> put "HBase_Indexer_Test",'015','cf1:job', 'java3'

hbase(main):023:0> put "HBase_Indexer_Test",'015','cf1:name', 'bb'

- Check the Key-Value Store Indexer and Solr logs

#Key-Value Store Indexer

[root@test-c6 ~]# tailf /var/log/hbase-solr/lily-hbase-indexer-cmf-ks_indexer-HBASE_INDEXER-test-c6.log.out

2020-11-27 15:31:16,890 INFO org.kitesdk.morphline.api.MorphlineContext: Importing commands

2020-11-27 15:31:17,341 INFO org.kitesdk.morphline.api.MorphlineContext: Done importing commands

- Query Solr