
Lucene demo walkthrough (how to build an index and how to search it): https://lucene.apache.org/core/8_6_0/demo/index.html

1. Download Lucene and get the demo jars

https://lucene.apache.org/core/downloads.html

2. Source code in detail

Configure pom.xml

```xml
<dependency>
    <groupId>org.apache.lucene</groupId>
    <artifactId>lucene-demo</artifactId>
    <version>8.6.0</version>
</dependency>
<dependency>
    <groupId>org.apache.lucene</groupId>
    <artifactId>lucene-core</artifactId>
    <version>8.6.0</version>
</dependency>
<dependency>
    <groupId>org.apache.lucene</groupId>
    <artifactId>lucene-queryparser</artifactId>
    <version>8.6.0</version>
</dependency>
```

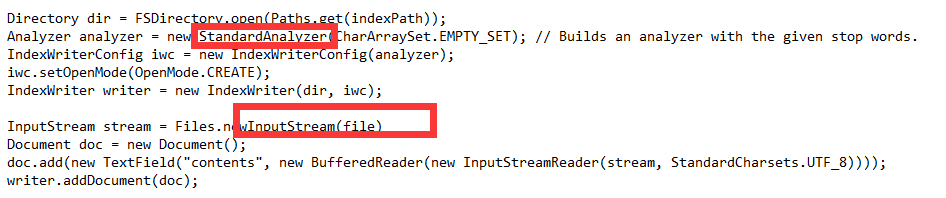

org.apache.lucene.demo.IndexFiles: StandardAnalyzer at index time

- Uses StandardAnalyzer to analyze (tokenize) the file contents

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.nio.file.FileVisitResult;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.SimpleFileVisitor;
import java.nio.file.attribute.BasicFileAttributes;
import java.util.Date;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.LongPoint;
import org.apache.lucene.document.StringField;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.IndexWriterConfig.OpenMode;
import org.apache.lucene.index.Term;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;

// Index all text files under a directory.
public class IndexFiles {

    /** Index all text files under a directory. */
    public static void main(String[] args) throws IOException {
        // Path of the source documents: absolute or relative
        // cmd> ls D:\download\lucene-7.7.3\demo\txt\
        //      123.txt  456.txt  hello123.txt  hello12345678.txt
        String docsPath = "D:\\download\\lucene-7.7.3\\demo\\txt";
        // Where the index is stored: absolute or relative
        String indexPath = "D:\\download\\lucene-7.7.3\\demo\\index1";
        // true: create a new index; false: update an existing one
        boolean create = true;

        final Path docDir = Paths.get(docsPath);
        Date start = new Date();
        System.out.println("Indexing to directory '" + indexPath + "'...");

        Analyzer analyzer = new StandardAnalyzer();
        IndexWriterConfig iwc = new IndexWriterConfig(analyzer);
        if (create) {
            // Create a new index in the directory, removing any previously indexed documents
            iwc.setOpenMode(OpenMode.CREATE);
        } else {
            // Add new documents to an existing index (or create one if none exists)
            iwc.setOpenMode(OpenMode.CREATE_OR_APPEND);
        }

        // try-with-resources closes the writer, then the directory
        try (Directory indexPathDir = FSDirectory.open(Paths.get(indexPath));
             IndexWriter writer = new IndexWriter(indexPathDir, iwc)) {
            indexDocs(writer, docDir);
            // NOTE: to maximize search performance you can optionally call
            // writer.forceMerge(1) here. This can be a very costly operation, so it is
            // generally only worth it when the index is relatively static
            // (i.e. you are done adding documents to it).
        }

        Date end = new Date();
        System.out.println(end.getTime() - start.getTime() + " total milliseconds");
    }

    /**
     * Indexes the given file using the given writer, or if a directory is given,
     * recurses over files and directories found under the given directory.
     *
     * NOTE: This method indexes one document per input file. This is slow. For good
     * throughput, put multiple documents into your input file(s). An example of this is
     * in the benchmark module, which can create "line doc" files, one document per line,
     * using the WriteLineDocTask.
     *
     * @param writer Writer to the index where the given file/dir info will be stored
     * @param path The file to index, or the directory to recurse into to find files to index
     */
    static void indexDocs(final IndexWriter writer, Path path) throws IOException {
        if (Files.isDirectory(path)) {
            Files.walkFileTree(path, new SimpleFileVisitor<Path>() {
                @Override
                public FileVisitResult visitFile(Path file, BasicFileAttributes attrs) throws IOException {
                    try {
                        indexDoc(writer, file, attrs.lastModifiedTime().toMillis());
                    } catch (IOException ignore) {
                        // don't index files that can't be read.
                    }
                    return FileVisitResult.CONTINUE;
                }
            });
        } else {
            indexDoc(writer, path, Files.getLastModifiedTime(path).toMillis());
        }
    }

    /** Indexes a single document */
    static void indexDoc(IndexWriter writer, Path file, long lastModified) throws IOException {
        try (InputStream stream = Files.newInputStream(file)) {
            // make a new, empty document
            Document doc = new Document();

            // Add the path of the file as a field named "path". Use a field that is
            // indexed (i.e. searchable) but not tokenized into separate words:
            Field pathField = new StringField("path", file.toString(), Field.Store.YES);
            doc.add(pathField);

            // Add the last modified date of the file as a field named "modified".
            // A LongPoint is indexed for efficient range filtering (PointRangeQuery), not stored.
            doc.add(new LongPoint("modified", lastModified));

            // Add the contents of the file to a field named "contents". Specify a Reader,
            // so that the text of the file is tokenized and indexed, but not stored.
            // The file is assumed to be UTF-8 encoded.
            doc.add(new TextField("contents",
                    new BufferedReader(new InputStreamReader(stream, StandardCharsets.UTF_8))));

            if (writer.getConfig().getOpenMode() == OpenMode.CREATE) {
                // New index, so we just add the document (no old document can be there):
                System.out.println("adding " + file);
                writer.addDocument(doc);
            } else {
                // Existing index (an old copy of this document may have been indexed), so
                // we use updateDocument instead to replace the old one matching the exact
                // path, if present:
                System.out.println("updating " + file);
                writer.updateDocument(new Term("path", file.toString()), doc);
            }
        }
    }
}
```
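To see the difference between `addDocument` (used with `OpenMode.CREATE`) and `updateDocument` (used otherwise) without touching the disk, here is a minimal sketch that indexes into an in-memory `ByteBuffersDirectory` from lucene-core 8.x. It reuses the same three fields as `IndexFiles`. The class `UpdateByPathDemo`, its methods, and the sample paths and timestamps are hypothetical. The sketch also shows that the `modified` `LongPoint` field is queried with `LongPoint.newRangeQuery`, not through `QueryParser`.

```java
import java.io.IOException;

import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.LongPoint;
import org.apache.lucene.document.StringField;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.MatchAllDocsQuery;
import org.apache.lucene.search.Query;
import org.apache.lucene.store.ByteBuffersDirectory;
import org.apache.lucene.store.Directory;

// Hypothetical demo class: in-memory index using the same fields as IndexFiles
public class UpdateByPathDemo {

    // Builds a document with "path", "modified" and "contents", like IndexFiles.indexDoc
    static Document makeDoc(String path, long modified, String contents) {
        Document doc = new Document();
        doc.add(new StringField("path", path, Field.Store.YES));
        doc.add(new LongPoint("modified", modified));
        doc.add(new TextField("contents", contents, Field.Store.NO));
        return doc;
    }

    // Returns {live doc count, live docs with modified in [0, 2500]}
    public static int[] run() throws IOException {
        try (Directory dir = new ByteBuffersDirectory();
             IndexWriter writer = new IndexWriter(dir, new IndexWriterConfig(new StandardAnalyzer()))) {
            writer.addDocument(makeDoc("a.txt", 1000L, "hello world"));
            // Re-index "a.txt": deletes every doc whose "path" term is "a.txt", then adds the new one
            writer.updateDocument(new Term("path", "a.txt"), makeDoc("a.txt", 2000L, "hello again"));
            writer.addDocument(makeDoc("b.txt", 3000L, "another file"));
            writer.commit();

            try (DirectoryReader reader = DirectoryReader.open(dir)) {
                IndexSearcher searcher = new IndexSearcher(reader);
                int total = searcher.count(new MatchAllDocsQuery());
                // Range query on the point field; the deleted copy (modified=1000) must not match
                Query range = LongPoint.newRangeQuery("modified", 0L, 2500L);
                int inRange = searcher.count(range);
                return new int[] {total, inRange};
            }
        }
    }

    public static void main(String[] args) throws IOException {
        int[] r = run();
        System.out.println("total=" + r[0] + " modifiedIn[0,2500]=" + r[1]);
    }
}
```

Running `main` should print `total=2 modifiedIn[0,2500]=1`. The first copy of `a.txt` was deleted by `updateDocument`, so only its replacement falls inside the range.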

org.apache.lucene.demo.SearchFiles: StandardAnalyzer at query time

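```java
import java.io.IOException;
import java.nio.file.Paths;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.queryparser.classic.QueryParser;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.FSDirectory;

// Search demo.
public class SearchFiles {

    public static void main(String[] args) throws Exception {
        // Where the index is stored: absolute or relative
        String index = "D:\\download\\lucene-7.7.3\\demo\\index1";
        // The text to search for
        String queryString = "hello";
        // The index field to search in
        String field = "contents";
        // Page size (hits per page)
        int hitsPerPage = 10;
        // Whether to print raw details (doc id and score) for each hit
        boolean raw = true;

        try (IndexReader reader = DirectoryReader.open(FSDirectory.open(Paths.get(index)))) {
            IndexSearcher searcher = new IndexSearcher(reader);
            // Use the same analyzer at query time as at index time
            Analyzer analyzer = new StandardAnalyzer();
            QueryParser parser = new QueryParser(field, analyzer);
            Query query = parser.parse(queryString);
            System.out.println("Searching for: " + query.toString(field));
            doSearch(searcher, query, hitsPerPage, raw);
        }
    }

    // Run the query and print the matching documents
    public static void doSearch(IndexSearcher searcher, Query query, int hitsPerPage, boolean raw) throws IOException {
        // Collect enough docs to show 5 pages
        TopDocs results = searcher.search(query, 5 * hitsPerPage);
        ScoreDoc[] hits = results.scoreDocs;
        // For large result sets this may be a lower bound (see results.totalHits.relation)
        int numTotalHits = Math.toIntExact(results.totalHits.value);
        System.out.println(numTotalHits + " total matching documents");
        System.out.println("\n\n>>>>>>>> doc details >>>>>>>>>");

        // Only the top 5 * hitsPerPage hits were collected, so never index past hits.length
        int end = Math.min(numTotalHits, hits.length);
        for (int i = 0; i < end; i++) {
            if (raw) {
                // output raw format, e.g. doc=2 score=0.29767057
                System.out.println("doc=" + hits[i].doc + " score=" + hits[i].score);
            }
            Document doc = searcher.doc(hits[i].doc);
            String path = doc.get("path");
            if (path != null) {
                // e.g. 1. D:\download\lucene-7.7.3\demo\txt\hello123.txt
                System.out.println((i + 1) + ". " + path);
                // IndexFiles never adds a "title" field, so this only prints for other indexes
                String title = doc.get("title");
                if (title != null) {
                    System.out.println("   Title: " + title);
                }
            } else {
                System.out.println((i + 1) + ". No path for this document");
            }
            System.out.println();
        }
    }
}
```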
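`doSearch` collects only `5 * hitsPerPage` hits, so any page beyond the fifth needs a fresh search. The page arithmetic can be kept separate from Lucene. The plain-Java sketch below computes which slice of `scoreDocs` belongs to a 0-based page. The names `HitPager`, `hitsNeeded`, and `pageBounds` are hypothetical, not part of the Lucene demo.

```java
// Hypothetical helper: page arithmetic for a TopDocs.scoreDocs array
public class HitPager {

    // Number of top hits to request (searcher.search(query, n)) so that 0-based `page` is covered
    public static int hitsNeeded(int page, int hitsPerPage) {
        return (page + 1) * hitsPerPage;
    }

    // Returns {start, end} (end exclusive) into scoreDocs for 0-based `page`.
    // end is capped by both the total number of matches and the number of hits actually collected.
    public static int[] pageBounds(int page, int hitsPerPage, int numTotalHits, int collected) {
        int start = page * hitsPerPage;
        int end = Math.min(Math.min(numTotalHits, collected), start + hitsPerPage);
        if (start > end) {
            start = end; // page past the last available hit: empty range
        }
        return new int[] {start, end};
    }

    public static void main(String[] args) {
        // 23 matches, 10 per page, 50 collected (5 * hitsPerPage, as in SearchFiles)
        for (int page = 0; page < 4; page++) {
            int[] b = pageBounds(page, 10, 23, 50);
            System.out.println("page " + (page + 1) + ": hits[" + b[0] + ", " + b[1] + ")");
        }
    }
}
```

If `hits.length` is smaller than `hitsNeeded(page, hitsPerPage)` and more matches exist, re-run `searcher.search(query, hitsNeeded(page, hitsPerPage))` before slicing. For deep paging, `IndexSearcher.searchAfter` avoids re-collecting all earlier pages.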

StandardAnalyzer tokenization example (the same analysis is applied at index time and at query time)

```java
import java.io.IOException;
import java.io.StringReader;

import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;

public class AnalyzerTest {

    @org.junit.Test
    public void analyzeTest() throws IOException {
        // Stop words, one per line (surrounding whitespace is trimmed when loaded)
        StringReader stopwords = new StringReader("the \n bigger");
        StringReader stringReader = new StringReader("The quick BIGGER brown fox jumped over the bigger lazy dog. ");

        // StandardAnalyzer chains StandardTokenizer -> LowerCaseFilter -> StopFilter, roughly:
        //   final StandardTokenizer src = new StandardTokenizer();
        //   TokenStream tok = new LowerCaseFilter(src);
        //   tok = new StopFilter(tok, stopwords);
        try (StandardAnalyzer analyzer = new StandardAnalyzer(stopwords);
             TokenStream tokenStream = analyzer.tokenStream("contents", stringReader)) {
            CharTermAttribute term = tokenStream.addAttribute(CharTermAttribute.class);
            tokenStream.reset();
            while (tokenStream.incrementToken()) {
                System.out.print("[" + term.toString() + "] ");
            }
            // [quick] [brown] [fox] [jumped] [over] [lazy] [dog]
            tokenStream.end();
        }
    }
}
```
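The chain in the commented-out lines can be turned into a working custom `Analyzer`. This is a minimal sketch, assuming lucene-core 8.6.0, where `LowerCaseFilter`, `StopFilter`, and `CharArraySet` live in `org.apache.lucene.analysis`. The class and method names are hypothetical. With the same stop words it should emit the same terms as the `StandardAnalyzer` test: `[quick, brown, fox, jumped, over, lazy, dog]`.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.CharArraySet;
import org.apache.lucene.analysis.LowerCaseFilter;
import org.apache.lucene.analysis.StopFilter;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.Tokenizer;
import org.apache.lucene.analysis.standard.StandardTokenizer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;

// Hypothetical demo class: hand-built StandardTokenizer -> LowerCaseFilter -> StopFilter chain
public class ChainAnalyzerDemo {

    public static Analyzer chainAnalyzer(CharArraySet stopwords) {
        return new Analyzer() {
            @Override
            protected TokenStreamComponents createComponents(String fieldName) {
                Tokenizer src = new StandardTokenizer();      // split text into words
                TokenStream tok = new LowerCaseFilter(src);   // lowercase each term
                tok = new StopFilter(tok, stopwords);         // drop stop words
                return new TokenStreamComponents(src, tok);
            }
        };
    }

    // Runs the analyzer over `text` and collects the emitted terms
    public static List<String> tokens(Analyzer analyzer, String text) throws IOException {
        List<String> out = new ArrayList<>();
        try (TokenStream ts = analyzer.tokenStream("contents", text)) {
            CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
            ts.reset();
            while (ts.incrementToken()) {
                out.add(term.toString());
            }
            ts.end();
        }
        return out;
    }

    public static void main(String[] args) throws IOException {
        CharArraySet stop = new CharArraySet(Arrays.asList("the", "bigger"), true);
        try (Analyzer analyzer = chainAnalyzer(stop)) {
            System.out.println(tokens(analyzer, "The quick BIGGER brown fox jumped over the bigger lazy dog. "));
        }
    }
}
```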

3. Download Chinese dictionaries: synonyms and stop words

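- Link: https://github.com/fighting41love/funNLP

- To download a GitHub file directly, replace github.com with raw.githubusercontent.com and remove the blob/ path segment:

```shell
url="https://github.com/guotong1988/chinese_dictionary/blob/master/dict_synonym.txt"
echo "$url" | sed -e 's@github.com@raw.githubusercontent.com@' -e 's@blob/@@'
# https://raw.githubusercontent.com/guotong1988/chinese_dictionary/master/dict_synonym.txt
```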
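The same URL rewrite can be done in Java if you prefer to download from code. Below is a JDK-only sketch. `GithubRawDownload`, `toRawUrl`, and `download` are hypothetical names. The rewrite assumes a standard `https://github.com/<owner>/<repo>/blob/<branch>/<path>` URL.

```java
import java.io.IOException;
import java.io.InputStream;
import java.net.URI;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

// Hypothetical helper mirroring the sed command above
public class GithubRawDownload {

    // github.com -> raw.githubusercontent.com, then drop the "blob/" segment
    public static String toRawUrl(String blobUrl) {
        return blobUrl
                .replaceFirst("^https://github\\.com/", "https://raw.githubusercontent.com/")
                .replaceFirst("/blob/", "/");
    }

    // Downloads the raw file to `target`, overwriting an existing file
    public static void download(String blobUrl, Path target) throws IOException {
        try (InputStream in = URI.create(toRawUrl(blobUrl)).toURL().openStream()) {
            Files.copy(in, target, StandardCopyOption.REPLACE_EXISTING);
        }
    }

    public static void main(String[] args) {
        String url = "https://github.com/guotong1988/chinese_dictionary/blob/master/dict_synonym.txt";
        System.out.println(toRawUrl(url));
    }
}
```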

Convert the synonym dictionary to Solr format (one comma-separated group per line)

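```java
import java.io.BufferedReader;
import java.io.BufferedWriter;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;

// Class name is arbitrary
public class SynonymToSolr {

    public static void main(String[] args) throws IOException {
        // Input: downloaded synonym dictionary; output: Solr-format synonym file
        // (folder name "solr-近义词,停用词" means "solr - synonyms, stop words")
        String file = "D:\\download\\solr-近义词,停用词\\synonym.txt";
        String outfile = "D:\\download\\solr-近义词,停用词\\synonym2.txt";

        // Read and write as UTF-8 explicitly (assumes the dictionary is UTF-8; change it if the file is GBK).
        // FileReader/FileWriter would silently use the platform default charset.
        try (BufferedReader reader = Files.newBufferedReader(Paths.get(file), StandardCharsets.UTF_8);
             BufferedWriter writer = Files.newBufferedWriter(Paths.get(outfile), StandardCharsets.UTF_8)) {
            // Synonym lines look like:
            //   Aa01A01= 人 士 人物 人士 人氏 人选
            //   Aa01A02= 人类 生人 全人类
            String line;
            while ((line = reader.readLine()) != null) {
                String[] arr = line.split("=");
                if (arr.length < 2) continue;      // skip lines without a "code=" prefix
                String words = arr[1].trim();
                if (words.isEmpty()) continue;     // skip entries with no words
                // Replace each run of whitespace between words with a single comma
                String solrLine = String.join(",", words.split("\\s+"));
                System.out.println(solrLine);
                writer.write(solrLine);
                writer.write("\n");
            }
        }
    }
}
```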

Sample output (synonym2.txt):

```text
人,士,人物,人士,人氏,人选
人类,生人,全人类
人手,人员,人口,人丁,口,食指
劳力,劳动力,工作者
```
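Each output line is a group of equivalent synonyms in Solr's comma-separated format. With `expand=true`, Solr's synonym filter maps every word in a group to all the others. The plain-Java sketch below (hypothetical class `SolrSynonymGroups`) builds that word-to-group map from the converted lines, which can help spot-check the file before loading it into Solr. It only approximates Solr's behavior: it skips blank lines and `#` comment lines, and it ignores the explicit `=>` mapping syntax.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical helper: approximate expand=true semantics for comma-separated synonym lines
public class SolrSynonymGroups {

    // word -> every word it shares a group with (including itself)
    public static Map<String, Set<String>> expand(List<String> lines) {
        Map<String, Set<String>> map = new LinkedHashMap<>();
        for (String line : lines) {
            String trimmed = line.trim();
            if (trimmed.isEmpty() || trimmed.startsWith("#")) continue; // blank or comment line
            Set<String> group = new LinkedHashSet<>();
            for (String w : trimmed.split(",")) {
                if (!w.trim().isEmpty()) group.add(w.trim());
            }
            for (String w : group) {
                // A word that appears in several groups gets the union of those groups
                map.computeIfAbsent(w, k -> new LinkedHashSet<>()).addAll(group);
            }
        }
        return map;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("人,士,人物,人士,人氏,人选", "人类,生人,全人类");
        System.out.println(expand(lines).get("人物"));
    }
}
```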