- Activation Maximization:

- Network Inversion:

- Deconvolutional Neural Networks (DeconvNet):

- Network Dissection:

Related papers are listed at the end.

To visualize the architecture of a CNN itself, the Netron tool is recommended.

Activation Maximization:

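Use backpropagation to generate an input image that maximizes the activation of a specific neuron in an arbitrary layer.

There are three variants: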

- Without regularization:

$$x^* = \mathop{\arg\max}\limits_{x}\, a_{i,l}(\theta, x)$$

- With regularization:

$$x^* = \mathop{\arg\max}\limits_{x}\, \big(a_{i,l}(\theta, x) - \lambda(x)\big)$$

- Combined with a GAN-style generator (Deep Generator Network Activation Maximization, DGN-AM):

$$x^* = \mathop{\arg\max}\limits_{x}\, \big(a_{i,l}(\theta, G(x)) - \lambda(x)\big)$$

Here $a_{i,l}(\theta, x)$ is the activation of neuron $i$ in layer $l$ of a network with parameters $\theta$ for input $x$, and $\lambda(x)$ is a regularization term that penalizes unnatural-looking images. In DGN-AM, $x$ is the input code of the generator $G$, so the resulting visualization is the image $G(x^*)$.
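The three variants can be illustrated with a minimal NumPy sketch under loudly simplified assumptions: a toy two-layer ReLU MLP stands in for the CNN, $\lambda(x)$ is taken to be an L2 penalty `lam * ||x||^2`, and the "generator" for the DGN-AM case is a hypothetical fixed linear map `G` rather than a trained deep generator. The gradient of $a_{i,l}$ with respect to the input is computed by hand-written backpropagation. The weights and the helpers `forward`, `activation_and_grad` and `maximize` are illustrative, not taken from the cited papers.

```python
import numpy as np

def forward(x, params):
    """Toy 2-layer ReLU MLP standing in for a CNN (hypothetical)."""
    W1, b1, W2, b2 = params
    z1 = W1 @ x + b1
    h1 = np.maximum(z1, 0.0)
    z2 = W2 @ h1 + b2
    h2 = np.maximum(z2, 0.0)
    return z1, z2, h2

def activation_and_grad(x, params, i):
    """Return a_{i,2}(theta, x) and d a / d x via manual backpropagation."""
    W1, _, W2, _ = params
    z1, z2, h2 = forward(x, params)
    g_z2 = 1.0 if z2[i] > 0 else 0.0   # ReLU derivative at the target neuron
    g_z1 = g_z2 * W2[i] * (z1 > 0)     # back through W2 and the hidden ReLU
    return h2[i], W1.T @ g_z1          # back through W1 to the input

def maximize(params, i, dim, lam=0.0, lr=0.1, steps=200, G=None):
    """Gradient ascent on a_{i,2}(theta, G(x)) - lam * ||x||^2.

    `dim` is the size of the optimized variable. With G=None that variable
    is the image itself (plain or regularized AM); otherwise it is a code
    fed through the linear map G. Returns the resulting image.
    """
    code = np.zeros(dim)
    for _ in range(steps):
        image = code if G is None else G @ code
        _, g_img = activation_and_grad(image, params, i)
        g_code = g_img if G is None else G.T @ g_img   # chain rule through G
        code = code + lr * (g_code - 2.0 * lam * code)
    return code if G is None else G @ code

# Hypothetical toy weights: 3 inputs, 4 hidden units, 2 output neurons.
W1 = np.array([[1.0, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]])
b1 = np.full(4, 0.1)
W2 = np.array([[1.0, 0.5, 0.5, 0.2], [0, 1, 1, -1]])
b2 = np.array([0.1, 0.1])
params = (W1, b1, W2, b2)

x_plain = maximize(params, i=0, dim=3)                           # no regularizer
x_reg = maximize(params, i=0, dim=3, lam=0.5)                    # L2-regularized
x_dgn = maximize(params, i=0, dim=3, lam=0.5, G=2.0 * np.eye(3)) # DGN-AM style
print(x_reg, activation_and_grad(x_reg, params, 0)[0])
```

In this toy model the unregularized result keeps growing with the number of steps, because scaling up `x` keeps increasing the ReLU activation; the L2 term stops that and makes the optimum finite.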

Network Inversion:

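Reconstruct an input image from the activations of a layer: given the representation $A(x_0)$ of an image $x_0$, find an image whose representation matches it, which shows what information about the input the layer retains.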

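- Regularizer-based:

$$x^* = \mathop{\arg\min}\limits_{x}\, \big(C \cdot \mathcal{L}(A(x), A(x_0)) + \lambda(x)\big)$$

- UpconvNet-based:

$$W^* = \mathop{\arg\min}\limits_{W} \sum_i \lVert x_i - D(A(x_i), W) \rVert^2$$

Here $A(\cdot)$ is the representation computed by the layer, $x_0$ is the image being inverted, $\mathcal{L}$ is a loss between two representations (for example the squared Euclidean distance), $C$ trades off that loss against the regularizer $\lambda(x)$, and $D(\cdot, W)$ is an up-convolutional decoder with weights $W$ trained on images $x_i$. Both objectives are losses and are therefore minimized; once trained, the decoder inverts a new representation in a single forward pass.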
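Both approaches can be sketched in NumPy, assuming a hypothetical one-layer ReLU feature extractor $A(x) = \mathrm{ReLU}(Wx + b)$, $\mathcal{L}$ = squared Euclidean distance, and an L2 regularizer $\lambda(x)$ = `lam * ||x||^2`. The UpconvNet is replaced here by a linear decoder fitted in closed form with `np.linalg.lstsq`, a deliberate simplification of training a deep up-convolutional network; all function names are illustrative.

```python
import numpy as np

def features(x, W, b):
    """A(x): hypothetical one-layer ReLU feature extractor."""
    return np.maximum(W @ x + b, 0.0)

def invert(A0, W, b, C=1.0, lam=0.0, lr=0.1, steps=300):
    """Regularizer-based inversion: gradient descent on
    C * ||A(x) - A0||^2 + lam * ||x||^2, starting from x = 0."""
    x = np.zeros(W.shape[1])
    for _ in range(steps):
        z = W @ x + b
        residual = np.maximum(z, 0.0) - A0
        # Backprop the loss through the ReLU mask and W; add the L2 gradient.
        grad = 2.0 * C * (W.T @ (residual * (z > 0))) + 2.0 * lam * x
        x = x - lr * grad
    return x

def fit_linear_decoder(X, W, b):
    """Stand-in for UpconvNet training: least-squares fit of a linear decoder
    D(a) = [a, 1] @ D_mat minimizing sum_i ||x_i - D(A(x_i))||^2."""
    F = np.array([features(x, W, b) for x in X])
    F1 = np.hstack([F, np.ones((len(X), 1))])      # append a bias column
    D_mat, *_ = np.linalg.lstsq(F1, X, rcond=None)
    return D_mat

def decode(a, D_mat):
    """Apply the fitted linear decoder to one representation."""
    return np.append(a, 1.0) @ D_mat

W = np.array([[1.0, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]])
b = np.ones(4)
x0 = np.array([0.5, -0.5, 2.0])
A0 = features(x0, W, b)                      # target representation A(x_0)

x_inv = invert(A0, W, b)                     # no regularizer
x_inv_reg = invert(A0, W, b, lam=0.1)        # L2 pulls the solution toward 0
train_X = np.random.default_rng(0).uniform(0, 1, size=(50, 3))
D_mat = fit_linear_decoder(train_X, W, b)
x_dec = decode(A0, D_mat)                    # one-shot inversion
```

Because every unit stays active for this choice of `W`, `b` and `x0`, the unregularized inversion recovers `x0`, while `lam > 0` shrinks the reconstruction toward zero. Inverting real deep layers is usually not unique, which is where the regularizer matters most.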

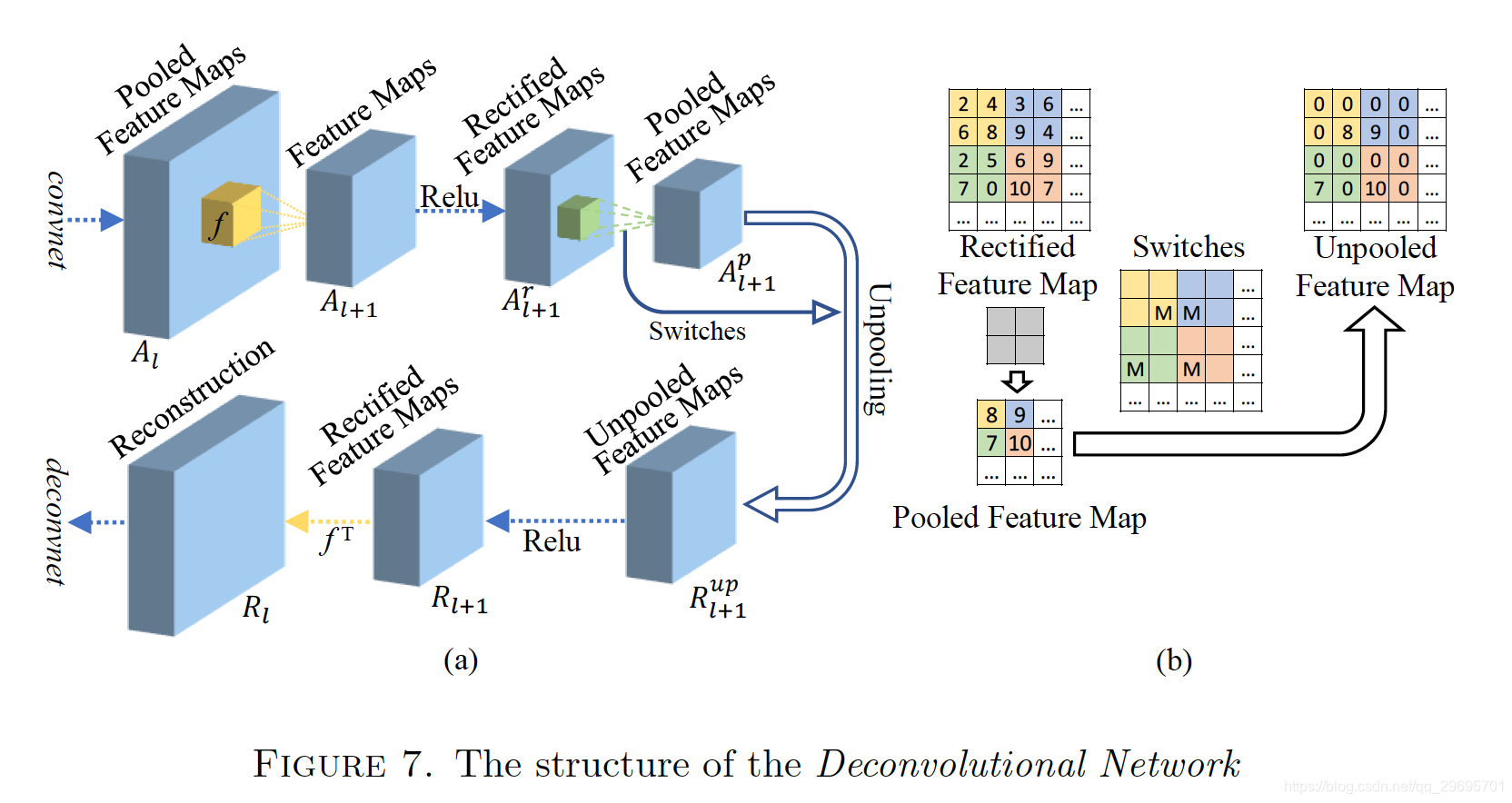

Deconvolutional Neural Networks (DeconvNet):

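Given an input image, DeconvNet reconstructs a new image from the feature maps of all neurons in an arbitrary layer, highlighting the features captured at that layer of the CNN.

The feature maps $a_{i,l}$ of layer $l$ can be flattened into a single one-dimensional feature vector $A_l$, so that the convolution becomes a linear mapping $f_l$ from one vector to another:

$$A_{l+1} = A_l * f_l$$

The deconvolution step therefore applies the transpose of that mapping to carry a reconstruction $R_{l+1}$ back down to layer $l$:

$$R_l = R_{l+1} * f_l^T$$

DeconvNet also uses two other operations: a Reversed Rectification Layer, which passes the reconstruction through a ReLU, and a Reversed Max-pooling (Unpooling) Layer, which puts each value back at the location of the maximum (the "switches") recorded during the forward pass.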
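The three operations can be illustrated with a minimal 1D, single-channel NumPy sketch. It assumes stride-1 valid cross-correlation without bias, a non-overlapping max-pool of width 2, and the row-vector convention $A_{l+1} = A_l * f_l$ with $f_l$ written as an explicit matrix `F`. It projects one pooled activation back to input space; the real DeconvNet does the same on 2D multi-channel feature maps. Helper names are illustrative.

```python
import numpy as np

def conv_matrix(k, n):
    """F of shape (n, n-m+1) so that x @ F is the valid cross-correlation of x with k."""
    m = len(k)
    F = np.zeros((n, n - m + 1))
    for j in range(n - m + 1):
        F[j:j + m, j] = k
    return F

def max_pool(h, size=2):
    """Non-overlapping max pooling that also records the argmax positions ("switches")."""
    blocks = h.reshape(-1, size)
    switches = blocks.argmax(axis=1) + np.arange(len(blocks)) * size
    return blocks.max(axis=1), switches

def unpool(r, switches, length):
    """Reversed max pooling: put each value back at its recorded max location."""
    out = np.zeros(length)
    out[switches] = r
    return out

def deconv_reconstruct(x, k, keep):
    """Forward conv -> ReLU -> pool, then map pooled unit `keep` back to input space."""
    F = conv_matrix(k, len(x))
    h = np.maximum(x @ F, 0.0)            # A_{l+1} = A_l * f_l, then ReLU
    p, switches = max_pool(h)
    r = np.zeros_like(p)
    r[keep] = p[keep]                     # isolate one pooled activation
    r_h = unpool(r, switches, len(h))     # reversed max pooling
    r_h = np.maximum(r_h, 0.0)            # reversed rectification
    return r_h @ F.T                      # R_l = R_{l+1} * f_l^T

x = np.array([0.0, 1, 3, 2, -1, 0, 4, 1, 0])
k = np.array([1.0, -1.0])                 # a simple difference (edge) filter
R = deconv_reconstruct(x, k, keep=1)
print(R)
```

Multiplying by `F.T` gives the same result as a full convolution with the kernel, `np.convolve(r, k, mode="full")`, which is why this step is called deconvolution or transposed convolution.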

Reference: https://blog.csdn.net/qq_16234613/article/details/79387345

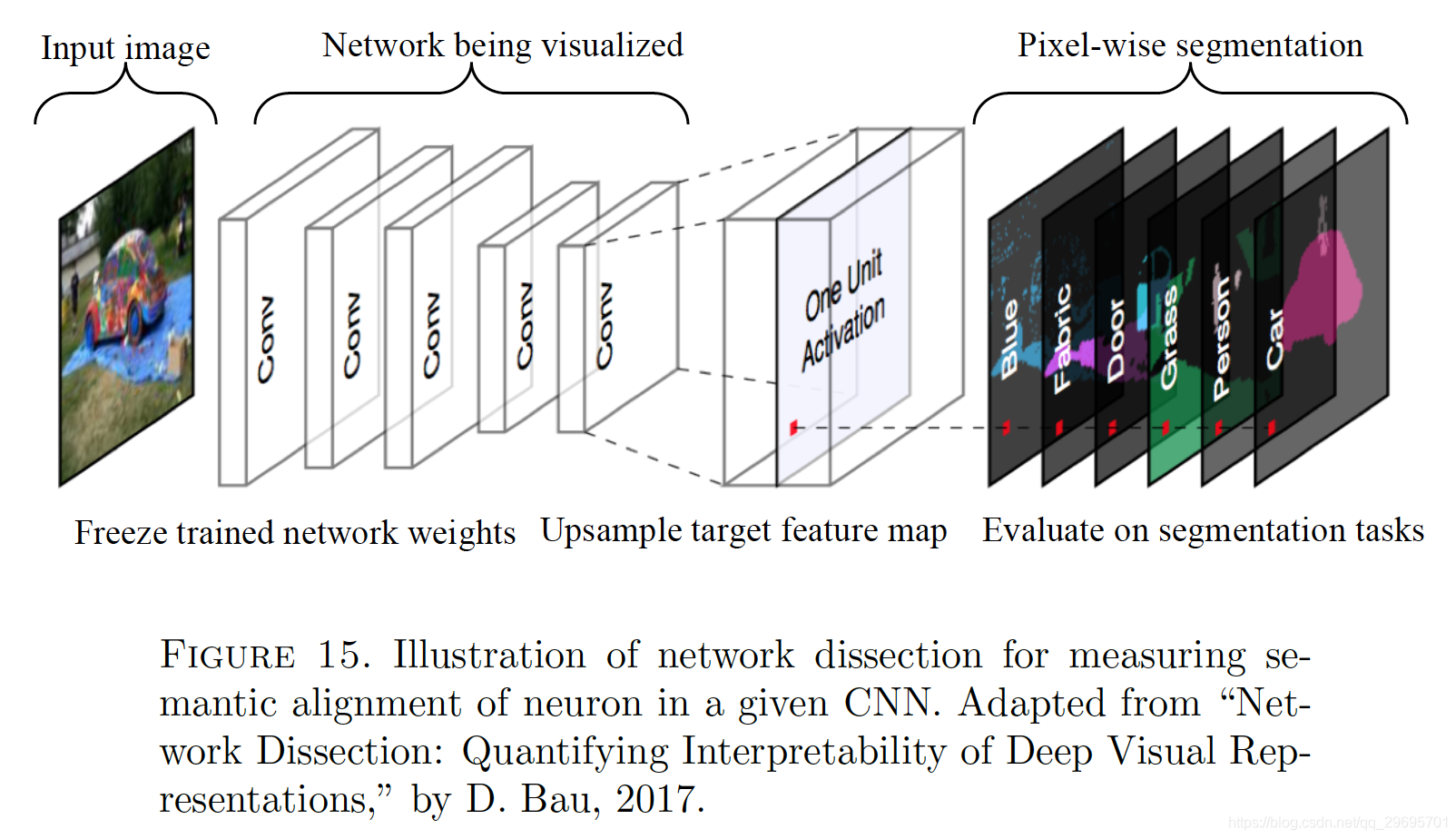

Network Dissection:

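Evaluates how strongly individual convolutional units, or groups of units, correlate with specific semantic concepts.

Code: https://github.com/CSAILVision/NetDissect

Project page: http://netdissect.csail.mit.edu/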
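The core scoring step described in the Network Dissection paper can be sketched as follows: each unit's activation maps are thresholded at a dataset-wide top quantile (the paper keeps the top 0.5%), and the resulting binary masks are compared with each concept's segmentation masks using an intersection-over-union summed over the whole dataset. This sketch assumes the activation maps are already upsampled to mask resolution and uses toy arrays; it is not the NetDissect code, and `dissect_unit` is a hypothetical name.

```python
import numpy as np

def dissect_unit(acts, concept_masks, top=0.005):
    """IoU between one unit's thresholded activations and each concept.

    acts: (N, H, W) activation maps of unit k over N images, assumed to be
          upsampled to the resolution of the concept masks already.
    concept_masks: dict mapping concept name -> (N, H, W) boolean masks L_c.
    top: fraction of dataset-wide activations kept (0.005 in the paper).
    """
    T_k = np.quantile(acts, 1.0 - top)     # threshold so that P(a_k > T_k) ~= top
    M_k = acts > T_k                       # binary unit masks M_k(x)
    scores = {}
    for name, L_c in concept_masks.items():
        inter = np.logical_and(M_k, L_c).sum()   # summed over all images
        union = np.logical_or(M_k, L_c).sum()
        scores[name] = inter / union if union else 0.0
    return scores

# Toy data: 2 images of 10x10; the unit fires only in the top-left 2x5 patch.
rng = np.random.default_rng(0)
acts = rng.uniform(0.0, 0.05, size=(2, 10, 10))
acts[:, :2, :5] = 1.0
masks = {name: np.zeros((2, 10, 10), dtype=bool) for name in ("A", "B", "C")}
masks["A"][:, :2, :5] = True     # same region as the unit
masks["B"][:, 5:, :] = True      # disjoint region
masks["C"][:, :2, :] = True      # twice as large, contains the unit's region
scores = dissect_unit(acts, masks, top=0.1)
print(scores)
```

The toy call uses a looser `top=0.1` so that the tiny example has more than one active pixel; on a real dataset the paper's 0.5% threshold applies.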

References:

- Activation Maximization:

  - D. Erhan, Y. Bengio, A. Courville and P. Vincent, Visualizing higher-layer features of a deep network, Technical report, University of Montreal, 1341 (2009), p3.

  - G. E. Hinton, S. Osindero and Y.-W. Teh, A fast learning algorithm for deep belief nets, Neural Computation, 18 (2006), 1527-1554.

  - P. Vincent, H. Larochelle, I. Lajoie, Y. Bengio and P.-A. Manzagol, Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion, Journal of Machine Learning Research, 11 (2010), 3371-3408.

  - K. Simonyan, A. Vedaldi and A. Zisserman, Deep inside convolutional networks: Visualising image classification models and saliency maps, arXiv preprint, arXiv:1312.6034.

  - J. Yosinski, J. Clune, A. Nguyen, T. Fuchs and H. Lipson, Understanding neural networks through deep visualization, arXiv preprint, arXiv:1506.06579.

  - A. Nguyen, J. Yosinski and J. Clune, Multifaceted feature visualization: Uncovering the different types of features learned by each neuron in deep neural networks, arXiv preprint, arXiv:1602.03616.

  - A. Nguyen, A. Dosovitskiy, J. Yosinski, T. Brox and J. Clune, Synthesizing the preferred inputs for neurons in neural networks via deep generator networks, in Proceedings of the Advances in Neural Information Processing Systems, 2016, 3387-3395.

- Network Inversion:

  - A. Mahendran and A. Vedaldi, Understanding deep image representations by inverting them, in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2015, 5188-5196.

  - A. Mahendran and A. Vedaldi, Visualizing deep convolutional neural networks using natural pre-images, International Journal of Computer Vision, 120 (2016), 233-255.

- Deconvolutional Neural Networks (DeconvNet):

  - M. D. Zeiler and R. Fergus, Visualizing and understanding convolutional networks, in Proceedings of the European Conference on Computer Vision, 2014, 818-833.

- Network Dissection:

  - D. Bau, B. Zhou, A. Khosla, A. Oliva and A. Torralba, Network dissection: Quantifying interpretability of deep visual representations, in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, 3319-3327.

  - R. Fong and A. Vedaldi, Net2vec: Quantifying and explaining how concepts are encoded by filters in deep neural networks, arXiv preprint, arXiv:1801.03454.