Overview

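1) Ceph cluster: Nautilus release, with monitors at 192.168.39.19-21

2) Kubernetes cluster: v1.14.2

3) Integration method: dynamic provisioning through a StorageClass

4) The ceph-common package is installed on every node of the Kubernetes cluster.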

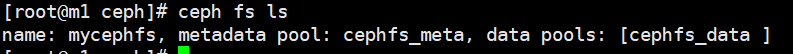

1) Step 1: Create a CephFS filesystem in Ceph

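```shell
# 1: Deploy the MDS daemons
ceph-deploy --overwrite-conf mds create node1 datanode2

# 2: Create the data and metadata pools, then the CephFS filesystem
# ceph osd pool create <pool> <pg_num> <pgp_num>
ceph osd pool create cephfs_data 8 8
ceph osd pool create cephfs_meta 2 2
# ceph fs new <fs_name> <metadata_pool> <data_pool>
ceph fs new mycephfs cephfs_meta cephfs_data
```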
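Before moving on, it can help to confirm that the filesystem exists and that an MDS has become active. The helper below is a minimal sketch: the name `cephfs_ready` is hypothetical, it assumes the host has the ceph CLI and an admin keyring, and its string matching assumes the usual Nautilus output of `ceph fs ls` (`name: mycephfs, metadata pool: ...`) and `ceph mds stat` (a rank in `up:active`).

```shell
# Hypothetical helper (not part of the original setup): succeeds only if the
# named CephFS filesystem exists and at least one MDS reports up:active.
# Assumes the ceph CLI and an admin keyring on the current host.
cephfs_ready() {
  local fs_name=${1:-mycephfs}
  # `ceph fs ls` prints one line per filesystem: "name: <fs>, metadata pool: ..."
  ceph fs ls | grep -q "name: ${fs_name}," || return 1
  # `ceph mds stat` summarizes the MDS ranks and their states
  ceph mds stat | grep -q 'up:active'
}
```

Usage: `cephfs_ready mycephfs && echo "mycephfs is ready"`.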

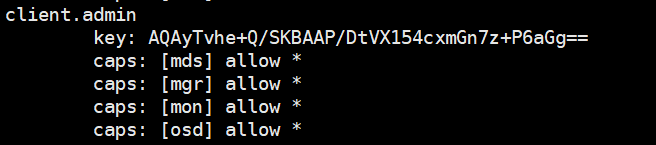

2) Step 2: Create a Secret in Kubernetes that holds the Ceph admin user's key

```shell
# Create a Secret object that stores the key of the Ceph admin user
kubectl create secret generic ceph-admin --type="kubernetes.io/rbd" \
  --from-literal=key=AQAyTvhe+Q/SKBAAP/DtVX154cxmGn7z+P6aGg== \
  --namespace=kube-system
```
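Instead of pasting the key by hand, it can be read straight from Ceph with `ceph auth get-key client.admin`, which prints a user's key. This is a sketch assuming a host that has both the Ceph admin keyring and a kubeconfig for the cluster; `create_ceph_admin_secret` is a hypothetical name, and the Secret name, type and namespace are the ones used above.

```shell
# Hypothetical helper: read client.admin's key from Ceph and create the same
# Secret as above (name ceph-admin, namespace kube-system).
create_ceph_admin_secret() {
  local key
  key=$(ceph auth get-key client.admin) || return 1
  # refuse to create a Secret with an empty key
  [ -n "$key" ] || return 1
  kubectl create secret generic ceph-admin \
    --type="kubernetes.io/rbd" \
    --from-literal=key="$key" \
    --namespace=kube-system
}
```

Afterwards, `kubectl -n kube-system get secret ceph-admin` should list the Secret.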

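3) Step 3: Deploy the CephFS provisioner (`cephfs-provisioner` from the kubernetes-incubator external-storage project, an out-of-tree external provisioner rather than a CSI driver)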

[root@master rbac]# cat serviceaccount.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cephfs-provisioner
  namespace: kube-system
```

[root@master rbac]# cat role.yaml

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: cephfs-provisioner
  namespace: kube-system
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["create", "get", "delete"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
```

[root@master rbac]# cat rolebinding.yaml

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: cephfs-provisioner
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: cephfs-provisioner
subjects:
  - kind: ServiceAccount
    name: cephfs-provisioner
```

[root@master rbac]# cat clusterrole.yaml

```yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cephfs-provisioner
  namespace: kube-system
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["kube-dns","coredns"]
    verbs: ["list", "get"]
```

[root@master rbac]# cat clusterrolebinding.yaml

```yaml
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cephfs-provisioner
subjects:
  - kind: ServiceAccount
    name: cephfs-provisioner
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cephfs-provisioner
  apiGroup: rbac.authorization.k8s.io
```

[root@master rbac]# cat deployment.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cephfs-provisioner
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cephfs-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: cephfs-provisioner
    spec:
      containers:
        - name: cephfs-provisioner
          # mirror of quay.io/external_storage/cephfs-provisioner
          image: "quay-mirror.qiniu.com/external_storage/cephfs-provisioner:latest"
          env:
            # must equal the "provisioner" field of the StorageClass
            - name: PROVISIONER_NAME
              value: ceph.com/cephfs
            # namespace in which the provisioner stores the secrets it creates
            - name: PROVISIONER_SECRET_NAMESPACE
              value: kube-system
          command:
            - "/usr/local/bin/cephfs-provisioner"
          args:
            - "-id=cephfs-provisioner-1"
      serviceAccount: cephfs-provisioner
```
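The six manifests above still have to be applied, RBAC objects first so the ServiceAccount and its permissions exist when the Deployment starts. A minimal sketch, assuming the files sit in the current directory under the names shown; `deploy_cephfs_provisioner` is a hypothetical helper name.

```shell
# Hypothetical helper: apply the manifests listed above (assumed to be in the
# current directory) and wait until the provisioner Deployment is rolled out.
deploy_cephfs_provisioner() {
  local f
  for f in serviceaccount.yaml role.yaml rolebinding.yaml \
           clusterrole.yaml clusterrolebinding.yaml deployment.yaml; do
    kubectl apply -f "$f" || return 1
  done
  # blocks until the Deployment's new pods are available
  kubectl -n kube-system rollout status deployment/cephfs-provisioner
}
```

If the rollout does not finish, `kubectl -n kube-system logs deployment/cephfs-provisioner` is a reasonable next place to look.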

4) Step 4: Create the StorageClass

[root@master rbac]# cat storageclass.yaml

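```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: cephfs
# must equal PROVISIONER_NAME in deployment.yaml
provisioner: ceph.com/cephfs
parameters:
  monitors: 192.168.39.19:6789,192.168.39.20:6789,192.168.39.21:6789
  # Ceph user and Secret (created in step 2) used by the provisioner
  adminId: admin
  adminSecretName: ceph-admin
  adminSecretNamespace: kube-system
  # CephFS directory under which the dynamically provisioned volumes are created
  claimRoot: /volumes/kubernetes
```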
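The StorageClass's `provisioner` field must match the `PROVISIONER_NAME` the provisioner runs with (`ceph.com/cephfs` in both manifests here); otherwise no provisioner picks up claims of this class and they stay Pending. A sketch of a consistency check using kubectl's JSONPath output, assuming the object names used in this article; `check_provisioner_name` is a hypothetical name.

```shell
# Hypothetical helper: compare the StorageClass provisioner with the
# PROVISIONER_NAME env var of the cephfs-provisioner Deployment.
check_provisioner_name() {
  local sc_prov dep_prov
  sc_prov=$(kubectl get storageclass cephfs -o jsonpath='{.provisioner}') || return 1
  dep_prov=$(kubectl -n kube-system get deployment cephfs-provisioner \
    -o jsonpath='{.spec.template.spec.containers[0].env[?(@.name=="PROVISIONER_NAME")].value}') || return 1
  if [ -n "$sc_prov" ] && [ "$sc_prov" = "$dep_prov" ]; then
    echo "provisioner names match: $sc_prov"
  else
    echo "mismatch: StorageClass=$sc_prov Deployment=$dep_prov" >&2
    return 1
  fi
}
```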

5) Step 5: Create the PVC

[root@master rbac]# cat test-pvc.yaml

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: cephfs-claim
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: cephfs
  resources:
    requests:
      storage: 32Mi
```
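Binding can be checked from the command line as well. A PVC reports binding in `.status.phase` rather than as a status condition, so the sketch below simply polls that field. It assumes the claim lives in the current namespace; `wait_pvc_bound`, the retry count and the 2-second interval are illustrative choices, not part of the original setup.

```shell
# Hypothetical helper: poll a PVC until its phase is Bound.
# Arguments: claim name (default cephfs-claim), number of checks (default 30).
wait_pvc_bound() {
  local name=${1:-cephfs-claim} tries=${2:-30} i=0 phase=""
  while [ "$i" -lt "$tries" ]; do
    phase=$(kubectl get pvc "$name" -o jsonpath='{.status.phase}')
    if [ "$phase" = "Bound" ]; then
      echo "$name is Bound"
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "$name not Bound after $tries checks (last phase: ${phase:-unknown})" >&2
  return 1
}
```

Usage: `kubectl apply -f test-pvc.yaml && wait_pvc_bound cephfs-claim`.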

The PVC is automatically bound to a PV object created by the provisioner, as shown in the figure:

6) Step 6: Create a pod that uses the PVC

[root@master rbac]# cat pod-busybox-cephfs.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: cephfs-busybox
spec:
  containers:
    - name: ceph-busybox
      image: busybox:1.27
      command: ["sleep", "60000"]
      volumeMounts:
        - name: ceph-vol1
          mountPath: /usr/share/busybox
          readOnly: false
  volumes:
    - name: ceph-vol1
      persistentVolumeClaim:
        claimName: cephfs-claim
```
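Creating the pod and waiting for it can be combined, since a pod exposes a `Ready` condition that `kubectl wait` understands. A minimal sketch, assuming the manifest file name shown above; the helper name and the 120-second timeout are illustrative.

```shell
# Hypothetical helper: create the pod and block until its Ready condition is True
# (or the timeout expires, in which case kubectl wait returns non-zero).
deploy_busybox_pod() {
  kubectl apply -f pod-busybox-cephfs.yaml || return 1
  kubectl wait --for=condition=Ready pod/cephfs-busybox --timeout=120s
}
```

If the pod stays in ContainerCreating, `kubectl describe pod cephfs-busybox` lists the mount errors among its events.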

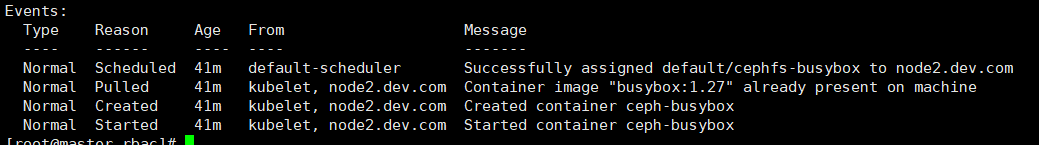

The pod is created successfully; its events are shown in the figure:

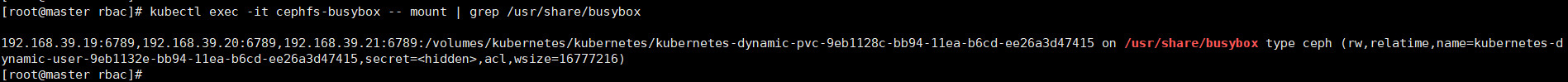

Exec into the pod and inspect its mounts; the container has mounted the remote CephFS:
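This check can also be scripted. The sketch assumes the pod name and mount path from step 6 and relies on busybox `mount` printing entries as `<source> on <dir> type <fstype> (<options>)`. Depending on which client the node uses, the filesystem type shows up as `ceph` (kernel client) or `fuse.ceph-fuse`, so both are accepted; `show_cephfs_mount` is a hypothetical name.

```shell
# Hypothetical helper: print the CephFS mount entry inside the pod, or fail
# if the given directory is not mounted from CephFS.
show_cephfs_mount() {
  local pod=${1:-cephfs-busybox} dir=${2:-/usr/share/busybox}
  # `mount` runs inside the container; grep filters its output locally
  kubectl exec "$pod" -- mount |
    grep -E " on ${dir} type (ceph|fuse\.ceph-fuse) "
}
```

`kubectl exec cephfs-busybox -- df -h /usr/share/busybox` additionally shows the size reported for the mounted volume.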