
Environment

| Hostname | IP address | Deployed software |
|---|---|---|
| master01 | 20.0.0.11 | kube-apiserver kube-controller-manager kube-scheduler etcd |
| node1 | 20.0.0.12 | kubelet kube-proxy docker flannel etcd |
| node2 | 20.0.0.13 | kubelet kube-proxy docker flannel etcd |

Initial setup

Set the hostname (run the matching command on each machine)

hostnamectl set-hostname master01

hostnamectl set-hostname node01

hostnamectl set-hostname node02

Disable the firewall and SELinux (on all three nodes)

systemctl stop firewalld && systemctl disable firewalld

setenforce 0 && sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
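Because the edit to /etc/selinux/config only takes effect after a reboot, a mistyped pattern can go unnoticed. The sketch below dry-runs the intended substitution on a scratch file; the sample file contents are an assumption about what a default config looks like, not something read from the real system.

```shell
# Dry-run the SELinux edit on a scratch file (sample contents are assumed,
# not read from the real /etc/selinux/config).
scratch=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$scratch"

# Intended substitution, printed to stdout instead of edited in place.
result=$(sed 's/SELINUX=enforcing/SELINUX=disabled/g' "$scratch")
selinux_mode=$(printf '%s\n' "$result" | grep '^SELINUX=' | cut -d= -f2)

echo "SELINUX would be set to: $selinux_mode"
rm -f "$scratch"
```

On a real node the same check can be done after the edit with `grep '^SELINUX=' /etc/selinux/config`.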

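Deploying the etcd cluster from the master node

Create the k8s directory and a directory for the certificates, then install cfssl, the tool used to create the certificates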

[root@master01 ~]# mkdir k8s

[root@master01 ~]# cd k8s/

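[root@master01 k8s]# mkdir etcd-cert    # directory for the certificates

Write a cfssl.sh script that downloads the cfssl certificate tools from the official site straight into /usr/local/bin, so that they are on the PATH, and makes them executable.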

[root@localhost k8s]# vim cfssl.sh

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

[root@localhost k8s]# bash cfssl.sh    # run the script and wait for the downloads to finish

[root@master01 ~]# cd /usr/local/bin/

[root@master01 bin]# ll

total 18808

-rw-r--r--. 1 root root 10376657 Jan 16 2020 cfssl

-rw-r--r--. 1 root root 6595195 Jan 16 2020 cfssl-certinfo

-rw-r--r--. 1 root root 2277873 Jan 16 2020 cfssljson

[root@master01 bin]# chmod +x *    # add execute permission

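#cfssl: the certificate generation tool

#cfssl-certinfo: displays certificate information

#cfssljson: takes the JSON output of cfssl and writes the certificate files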
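Since every later step depends on these three tools, a quick check that they resolve on the PATH can save some confusion. This is a minimal sketch; the `missing_tools` helper is a hypothetical name introduced here, not part of cfssl.

```shell
# Print the name of every tool that cannot be found on the PATH.
# (missing_tools is a hypothetical helper, not part of cfssl.)
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

missing=$(missing_tools cfssl cfssljson cfssl-certinfo)
if [ -z "$missing" ]; then
    echo "all cfssl tools are installed"
else
    echo "not found on PATH:" $missing
fi
```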

Creating the certificates

Define the CA configuration

[root@master01 ~]# cd k8s/etcd-cert/

[root@master01 etcd-cert]# cat > ca-config.json <<EOF

> {

> "signing": {

> "default": {

> "expiry": "87600h"

> },

> "profiles": {

> "www": {

> "expiry": "87600h",

> "usages": [

> "signing",

> "key encipherment",

> "server auth",

> "client auth"

> ]

> }

> }

> }

> }

> EOF

[root@master01 etcd-cert]# ls

ca-config.json

Create the CA certificate signing request

[root@master01 etcd-cert]# cat > ca-csr.json <<EOF

> {

> "CN": "etcd CA",

> "key": {

> "algo": "rsa",

> "size": 2048

> },

> "names": [

> {

> "C": "CN",

> "L": "Beijing",

> "ST": "Beijing"

> }

> ]

> }

> EOF

[root@master01 etcd-cert]# ls

ca-config.json ca-csr.json

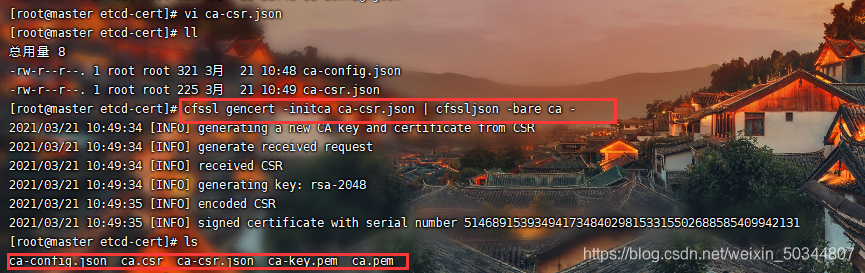

Generate the CA certificate, producing ca-key.pem and ca.pem

[root@master etcd-cert]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

Define the server certificate request, listing the three etcd nodes so that they can authenticate each other

[root@master01 etcd-cert]# cat > server-csr.json <<EOF

> {

> "CN": "etcd",

> "hosts": [

> "20.0.0.11",

> "20.0.0.12",

> "20.0.0.13"

> ],

> "key": {

> "algo": "rsa",

> "size": 2048

> },

> "names": [

> {

> "C": "CN",

> "L": "BeiJing",

> "ST": "BeiJing"

> }

> ]

> }

> EOF

[root@master01 etcd-cert]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem server-csr.json
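A node whose address is missing from the `hosts` list will fail TLS verification when it talks to its peers, so it is worth checking the file before signing. The following is a rough sketch; `check_hosts` is a hypothetical helper, and it only does a plain-text match rather than parsing the JSON.

```shell
# Report any node address that is absent from a CSR file's "hosts" list.
# usage: check_hosts <csr-file> <ip>...   (hypothetical helper)
check_hosts() {
    csr_file=$1
    shift
    status=0
    for ip in "$@"; do
        # Match the quoted address literally, so 20.0.0.1 does not match 20.0.0.11.
        if ! grep -qF "\"$ip\"" "$csr_file"; then
            echo "missing from $csr_file: $ip"
            status=1
        fi
    done
    return "$status"
}

# Run only where the file exists, e.g. inside ~/k8s/etcd-cert.
if [ -f server-csr.json ]; then
    check_hosts server-csr.json 20.0.0.11 20.0.0.12 20.0.0.13 && echo "all etcd nodes are listed"
fi
```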

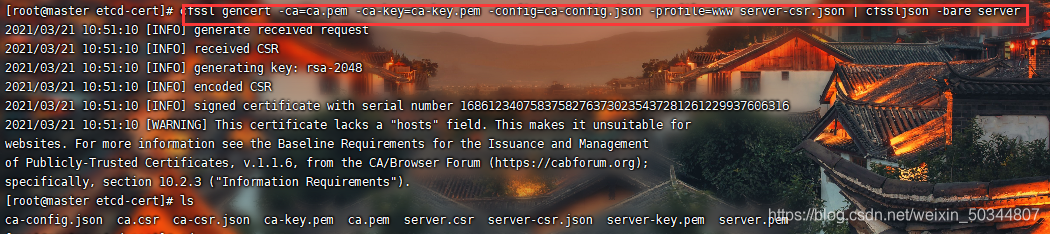

Generate the etcd certificate, producing server-key.pem and server.pem

[root@master01 etcd-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

Download and unpack the etcd binary package

etcd download page: https://github.com/etcd-io/etcd/releases


[root@master01 ~]# cd k8s/

[root@master01 k8s]# ls

etcd-cert etcd-v3.3.10-linux-amd64.tar.gz

[root@master01 k8s]# tar zxvf etcd-v3.3.10-linux-amd64.tar.gz

Create the directories and move the etcd executables into place

[root@master01 etcd-v3.3.10-linux-amd64]# ls

Documentation etcd etcdctl README-etcdctl.md README.md READMEv2-etcdctl.md

[root@master01 ~]# mkdir -p /opt/etcd/{cfg,bin,ssl}

[root@master01 ~]# cd /opt/etcd/

[root@master01 etcd]# ls

bin cfg ssl

[root@master01 ~]# cd k8s/etcd-v3.3.10-linux-amd64/

[root@master01 etcd-v3.3.10-linux-amd64]# mv etcd etcdctl /opt/etcd/bin/

[root@master01 ~]# cd /opt/etcd/bin/

[root@master01 bin]# ls

etcd etcdctl

Copy the certificates

[root@master01 ~]# cd k8s/etcd-cert/

[root@master01 etcd-cert]# cp *.pem /opt/etcd/ssl/

[root@master01 ~]# cd /opt/etcd/ssl/

[root@master01 ssl]# ls

ca-key.pem ca.pem server-key.pem server.pem
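The etcd service will not start if any of these four files is absent, so the copy can be verified in one go. A minimal sketch, with `missing_files` as a hypothetical helper name:

```shell
# Print every expected file that is absent from a directory.
# usage: missing_files <dir> <file>...   (hypothetical helper)
missing_files() {
    dir=$1
    shift
    for f in "$@"; do
        [ -f "$dir/$f" ] || printf '%s\n' "$f"
    done
}

absent=$(missing_files /opt/etcd/ssl ca.pem ca-key.pem server.pem server-key.pem)
if [ -z "$absent" ]; then
    echo "all certificates copied"
else
    echo "missing from /opt/etcd/ssl:" $absent
fi
```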

Copy the prepared etcd.sh script into the k8s directory

[root@master01 ~]# cd k8s/

[root@master01 k8s]# ls

etcd-cert etcd.sh etcd-v3.3.10-linux-amd64 etcd-v3.3.10-linux-amd64.tar.gz

Run the startup script

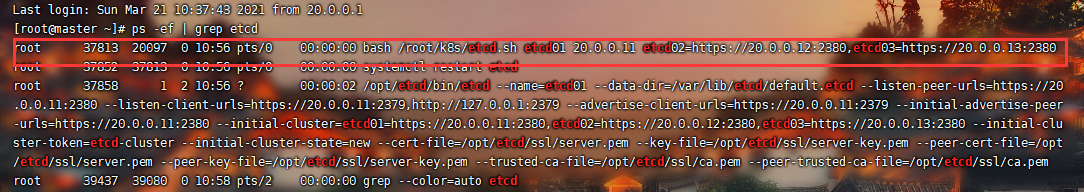

[root@master01 ~]# bash /root/k8s/etcd.sh etcd01 20.0.0.11 etcd02=https://20.0.0.12:2380,etcd03=https://20.0.0.13:2380

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.    # the command now hangs, waiting for the other nodes to join

Port 2380 is used for communication between cluster members

Port 2379 is the port exposed to clients
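The two ports show up in two different strings used throughout this walkthrough: the peer list handed to etcd and the endpoint list handed to clients such as etcdctl. The sketch below builds both from the node addresses; the function names are hypothetical and exist only for illustration.

```shell
PEER_PORT=2380     # member-to-member traffic
CLIENT_PORT=2379   # client-facing traffic (etcdctl, flannel)

# usage: initial_cluster name=ip [name=ip ...]   (hypothetical helper)
initial_cluster() {
    list=""
    for pair in "$@"; do
        name=${pair%%=*}
        ip=${pair#*=}
        list="${list:+$list,}${name}=https://${ip}:${PEER_PORT}"
    done
    printf '%s\n' "$list"
}

# usage: client_endpoints ip [ip ...]   (hypothetical helper)
client_endpoints() {
    list=""
    for ip in "$@"; do
        list="${list:+$list,}https://${ip}:${CLIENT_PORT}"
    done
    printf '%s\n' "$list"
}

initial_cluster etcd01=20.0.0.11 etcd02=20.0.0.12 etcd03=20.0.0.13
client_endpoints 20.0.0.11 20.0.0.12 20.0.0.13
```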

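Open another terminal session and you will see that the etcd process is already running

Joining the nodes to the etcd cluster (internal communication)

Copy the certificates and other files from the master node to the nodes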

[root@master01 ~]# scp -r /opt/etcd/ root@20.0.0.12:/opt/

[root@master01 ~]# scp -r /opt/etcd/ root@20.0.0.13:/opt/

Copy the systemd unit file to the other nodes

[root@master01 ~]# scp /usr/lib/systemd/system/etcd.service root@20.0.0.12:/usr/lib/systemd/system/

[root@master01 ~]# scp /usr/lib/systemd/system/etcd.service root@20.0.0.13:/usr/lib/systemd/system/

Operations on node1 and node2

Run on both node1 and node2:

[root@node01 ~]# yum -y install tree

[root@node01 ~]# tree /opt/etcd/

/opt/etcd/

├── bin

│ ├── etcd

│ └── etcdctl

├── cfg

│ └── etcd

└── ssl

├── ca-key.pem

├── ca.pem

├── server-key.pem

Edit the configuration file on node1 and node2

[root@node1 ~]# cd /opt/etcd/cfg/

[root@node1 cfg]# ls

etcd

[root@node1 cfg]# vi etcd

#[Member]

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://20.0.0.12:2380"

ETCD_LISTEN_CLIENT_URLS="https://20.0.0.12:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://20.0.0.12:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://20.0.0.12:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://20.0.0.11:2380,etcd02=https://20.0.0.12:2380,etcd03=https://20.0.0.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@node01 ~]# systemctl start etcd

[root@node02 ~]# cd /opt/etcd/cfg/

[root@node02 cfg]# ls

etcd

[root@node02 cfg]# vi etcd

#[Member]

ETCD_NAME="etcd03"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://20.0.0.13:2380"

ETCD_LISTEN_CLIENT_URLS="https://20.0.0.13:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://20.0.0.13:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://20.0.0.13:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://20.0.0.11:2380,etcd02=https://20.0.0.12:2380,etcd03=https://20.0.0.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@node02 ~]# systemctl start etcd
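The two node configs differ from the copy that came from the master only in the member name and in the node's own address; the `ETCD_INITIAL_CLUSTER` line stays identical everywhere. As an alternative to editing by hand, the change could be sketched with `sed` as below. `retarget_etcd_cfg` is a hypothetical helper, and it assumes the copied file still carries the master's name and address.

```shell
# Print a copy of an etcd config retargeted at another member.
# usage: retarget_etcd_cfg <cfg-file> <new-name> <old-ip> <new-ip>
# (hypothetical helper; assumes <cfg-file> was copied from the master)
retarget_etcd_cfg() {
    old_re=$(printf '%s' "$3" | sed 's/\./\\./g')   # escape dots for sed
    # Rewrite the name, then swap the address on every line except the
    # shared ETCD_INITIAL_CLUSTER member list.
    sed -e "s/^ETCD_NAME=.*/ETCD_NAME=\"$2\"/" \
        -e '/^ETCD_INITIAL_CLUSTER=/!'"s/$old_re/$4/g" \
        "$1"
}

# Demo on a scratch file holding two lines in the master's format.
demo=$(mktemp)
printf '%s\n' \
    'ETCD_NAME="etcd01"' \
    'ETCD_LISTEN_PEER_URLS="https://20.0.0.11:2380"' > "$demo"
retarget_etcd_cfg "$demo" etcd02 20.0.0.11 20.0.0.12
rm -f "$demo"
```

The function writes to stdout, so the result can be reviewed before it replaces /opt/etcd/cfg/etcd.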

Run the startup script on the master again so that it joins the cluster

[root@master01 ~]# bash /root/k8s/etcd.sh etcd01 20.0.0.11 etcd02=https://20.0.0.12:2380,etcd03=https://20.0.0.13:2380

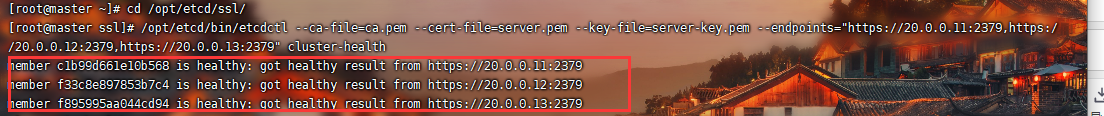

Check the cluster health from master01

[root@master01 ~]# cd /opt/etcd/ssl/

[root@master ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://20.0.0.11:2379,https://20.0.0.12:2379,https://20.0.0.13:2379" cluster-health

member c1b99d661e10b568 is healthy: got healthy result from https://20.0.0.11:2379

member f33c8e897853b7c4 is healthy: got healthy result from https://20.0.0.12:2379

member f895995aa044cd94 is healthy: got healthy result from https://20.0.0.13:2379

cluster is healthy

All three members report healthy, so the etcd cluster has been set up successfully
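When this check is scripted rather than read by eye, the per-member lines can simply be counted. The sketch below works on the `cluster-health` output shown above; `healthy_members` is a hypothetical helper, and the sample text is copied from that run.

```shell
# Count members reported healthy in `etcdctl cluster-health` output (stdin).
# (healthy_members is a hypothetical helper.)
healthy_members() {
    grep -c 'is healthy: got healthy result'
}

# Sample output copied from the run above.
sample='member c1b99d661e10b568 is healthy: got healthy result from https://20.0.0.11:2379
member f33c8e897853b7c4 is healthy: got healthy result from https://20.0.0.12:2379
member f895995aa044cd94 is healthy: got healthy result from https://20.0.0.13:2379
cluster is healthy'

count=$(printf '%s\n' "$sample" | healthy_members)
if [ "$count" -eq 3 ]; then
    echo "all 3 members healthy"
else
    echo "only $count of 3 members healthy"
fi
```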

Installing Docker

docker-ce must be installed on both node1 and node2

Docker CE environment deployment

Configuring the flannel network

On the master

Write the subnet range to be allocated into etcd, for flannel to use

[root@master1 ~]# cd /opt/etcd/ssl/

[root@master1 ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://20.0.0.11:2379,https://20.0.0.12:2379,https://20.0.0.13:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

Read back the value that was written

[root@master1 ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://20.0.0.11:2379,https://20.0.0.12:2379,https://20.0.0.13:2379" get /coreos.com/network/config

{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}

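flannel carves the `Network` range into one smaller subnet per host; the `--bip=172.17.18.1/24` value that appears later in this walkthrough indicates a /24 per node. As a rough sketch of the arithmetic (the function name is hypothetical), the upper bound on host subnets in a /16 is:

```shell
# Upper bound on per-host subnets of prefix length $2 inside a network of
# prefix length $1. (subnet_count is a hypothetical helper.)
subnet_count() {
    echo $(( 1 << ($2 - $1) ))
}

echo "a /16 holds up to $(subnet_count 16 24) subnets of size /24"
```

This is only an upper bound; which subnets flannel actually hands out depends on its configuration.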
Deploying the nodes

Copy the flannel package to every node

[root@node1 ~]# ll

total 9484

-rw-------. 1 root root 1434 Mar 21 10:18 anaconda-ks.cfg

-rw-r--r--. 1 root root 9706487 Mar 21 11:17 flannel-v0.10.0-linux-amd64.tar.gz

Unpack it on every node

[root@node2 ~]# tar zxvf flannel-v0.10.0-linux-amd64.tar.gz

[root@node01 ~]# tar zxvf flannel-v0.10.0-linux-amd64.tar.gz

flanneld

mk-docker-opts.sh

README.md

Create the Kubernetes working directories

[root@node01 ~]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

[root@node01 ~]# ls /opt/kubernetes/

bin cfg ssl

Move the flanneld binary and the helper script into the bin directory

[root@node01 ~]# mv mk-docker-opts.sh flanneld /opt/kubernetes/bin/

[root@node01 ~]# ls /opt/kubernetes/bin/

flanneld mk-docker-opts.sh

Copy the prepared flannel.sh script into root's home directory

[root@localhost ~]# vim flannel.sh

#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \

-etcd-cafile=/opt/etcd/ssl/ca.pem \

-etcd-certfile=/opt/etcd/ssl/server.pem \

-etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld
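The first line of flannel.sh relies on the shell's `${1:-default}` expansion: the first argument is used when it is given, otherwise the fallback applies. Since the fallback is plain HTTP on localhost, it would not reach the TLS-protected cluster built above, which is why the endpoints are passed explicitly when the script is run. A small sketch of that behaviour, wrapped in a hypothetical function so it can be tried safely:

```shell
# Same expansion as the first line of flannel.sh, wrapped in a function.
# (etcd_endpoints is a hypothetical name used only for this demo.)
etcd_endpoints() {
    printf '%s\n' "${1:-http://127.0.0.1:2379}"
}

etcd_endpoints                           # no argument: prints the fallback
etcd_endpoints https://20.0.0.11:2379    # argument given: printed as-is
```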

[root@node2 ~]# ll

total 9496

-rw-------. 1 root root 1434 Mar 21 10:18 anaconda-ks.cfg

-rw-r--r--. 1 root root 836 Mar 21 11:20 flannel.sh

-rw-r--r--. 1 root root 9706487 Mar 21 11:17 flannel-v0.10.0-linux-amd6

-rw-rw-r--. 1 1001 1001 4298 Dec 24 2017 README.md

Enable the flannel network

[root@node1 ~]# bash flannel.sh https://20.0.0.11:2379,https://20.0.0.12:2379,https://20.0.0.13:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

[root@node1 ~]# ls /opt/kubernetes/cfg/

flanneld

[root@node1 ~]# ip add

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:94:33:de brd ff:ff:ff:ff:ff:ff

inet 20.0.0.13/8 brd 20.255.255.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::67cc:c126:8cf8:a1e1/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:e5:cc:6e:0b brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 3a:70:86:bf:61:4e brd ff:ff:ff:ff:ff:ff

inet 172.17.18.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::3870:86ff:febf:614e/64 scope link

valid_lft forever preferred_lft fore

[root@node2 ~]# cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.18.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"
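These are plain `KEY="value"` lines, which is why the same file can later be handed to Docker's systemd unit. The sketch below recreates the three lines shown above in a scratch file and loads them into a shell, just to illustrate how they combine into dockerd flags; it does not touch /run/flannel/subnet.env, and joining the three variables by hand is an illustration rather than what the unit file does.

```shell
# Recreate the lines shown above in a scratch location, then load them
# into the current shell.
env_file=$(mktemp)
cat > "$env_file" <<'EOF'
DOCKER_OPT_BIP="--bip=172.17.18.1/24"
DOCKER_OPT_IPMASQ="--ip-masq=false"
DOCKER_OPT_MTU="--mtu=1450"
EOF

. "$env_file"
docker_flags="$DOCKER_OPT_BIP $DOCKER_OPT_IPMASQ $DOCKER_OPT_MTU"
echo "flags derived from flannel: $docker_flags"
rm -f "$env_file"
```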

Configure Docker to use the flannel network

[root@node01 ~]# vi /usr/lib/systemd/system/docker.service

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

# added: load the variables written by flannel
EnvironmentFile=/run/flannel/subnet.env

# added: $DOCKER_NETWORK_OPTIONS
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

[root@node01 ~]# systemctl daemon-reload

[root@node01 ~]# systemctl restart docker
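After the restart, docker0 should have moved from its default 172.17.0.1/16 address into the /24 that flannel assigned to this node. A minimal sketch of that comparison follows; `same_slash24` is a hypothetical helper, and the two addresses are taken from the outputs shown earlier rather than read from the live interfaces.

```shell
# True when two IPv4 addresses share their first three octets (same /24).
# (same_slash24 is a hypothetical helper.)
same_slash24() {
    [ "${1%.*}" = "${2%.*}" ]
}

flannel_addr=172.17.18.0   # flannel.1 address from the `ip add` output
bridge_addr=172.17.18.1    # docker0 address expected from --bip
if same_slash24 "$flannel_addr" "$bridge_addr"; then
    echo "docker0 is inside the flannel subnet"
else
    echo "docker0 is still on its old network; check docker.service"
fi
```

On a real node the two values would come from `ip -4 addr show flannel.1` and `ip -4 addr show docker0`.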

Test: ping across to the other node's docker0 network; success shows that flannel is routing between the nodes

[root@node01 ~]# docker run -it centos:7 /bin/bash

[root@7487a904517f /]# yum -y install net-tools

[root@7487a904517f /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.30.2 netmask 255.255.255.0 broadcast 172.17.30.255

ether 02:42:ac:11:1e:02 txqueuelen 0 (Ethernet)

RX packets 16715 bytes 14617448 (13.9 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 7591 bytes 413808 (404.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

The containers should be able to ping each other

[root@522b63687986 /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.18.2 netmask 255.255.255.0 broadcast 172.17.18.255

ether 02:42:ac:11:12:02 txqueuelen 0 (Ethernet)

RX packets 18346 bytes 14665035 (13.9 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 9347 bytes 508094 (496.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@522b63687986 /]# ping 172.17.2.2    # ping node02

PING 172.17.2.2 (172.17.2.2) 56(84) bytes of data.

64 bytes from 172.17.2.2: icmp_seq=1 ttl=62 time=0.571 ms

64 bytes from 172.17.2.2: icmp_seq=2 ttl=62 time=0.539 ms

node2 should also try to ping node1

The ping succeeds, so the flannel component is working and containers on the two nodes can communicate normally