This guide uses Ubuntu as the host operating system.

I. Installing Docker

- Open a terminal

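If the installation reports that a resource is locked (another apt/dpkg process holds the lock), reboot and try again.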

- Install the required tool:

sudo apt install curl

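1. The download is large, so make sure you have a stable network connection.

2. The option --mirror Aliyun tells the install script to download from the Alibaba Cloud (Aliyun) mirror.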

- Download and run the install script:

curl -fsSL https://get.docker.com | sudo bash -s docker --mirror Aliyun

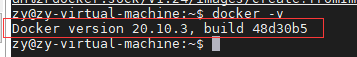

- When the installation finishes, check the version:

docker -v

II. Creating the Container

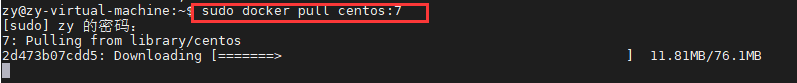

- Pull the image:

sudo docker pull centos:7

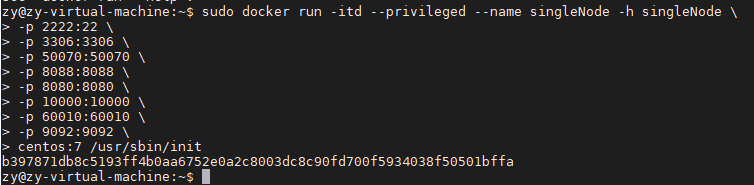

- Create and start the container:

sudo docker run -itd --privileged --name singleNode -h singleNode \
  -p 2222:22 \
  -p 3306:3306 \
  -p 50070:50070 \
  -p 8088:8088 \
  -p 8080:8080 \
  -p 10000:10000 \
  -p 60010:60010 \
  -p 9092:9092 \
  centos:7 /usr/sbin/init

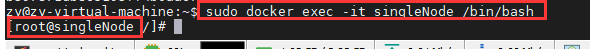
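The command above maps host ports to container ports one-to-one. The exception is SSH, which is published on host port 2222, presumably so it does not collide with the Ubuntu host's own sshd. The sketch below keeps the mappings in one table and prints the resulting command for review instead of running it. The guide does not say what 8080, 60010 or 9092 are for, so the service names next to those ports are assumptions based on common defaults. `port_table` and `port_flags` are hypothetical helper names.

```shell
# Hypothetical helpers: keep the host:container mappings in one table.
# The service labels are assumptions based on common default ports.
port_table() {
  cat <<'EOF'
2222:22      SSH (container sshd, published on 2222 to avoid the host's port 22)
3306:3306    MySQL
50070:50070  HDFS NameNode web UI (Hadoop 2.x)
8088:8088    YARN ResourceManager web UI
8080:8080    spare web UI port (not used by the steps in this guide)
10000:10000  HiveServer2
60010:60010  HBase Master web UI (older HBase releases)
9092:9092    Kafka broker
EOF
}

# Turn the first column of the table into "-p host:container" flags.
port_flags() {
  port_table | while read -r mapping rest; do
    printf '%s %s ' -p "$mapping"
  done
}

# Print (do not run) the docker run command built from the table.
echo "sudo docker run -itd --privileged --name singleNode -h singleNode $(port_flags)centos:7 /usr/sbin/init"
```

To add a port later, append one line to the table instead of editing the long command by hand. Docker only applies `-p` mappings when a container is created, so the container must be recreated for a change to take effect.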

- Enter the container:

sudo docker exec -it singleNode /bin/bash

- You are now inside the container.

III. Building the Big Data Environment Inside Docker

- Install basic packages

yum clean all

yum -y install unzip bzip2-devel vim bashname

- Configure passwordless SSH login

yum install -y openssh openssh-server openssh-clients openssl openssl-devel

ssh-keygen -t rsa -f ~/.ssh/id_rsa -P ''

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

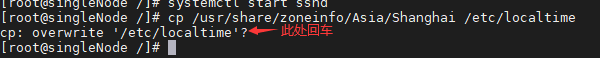

# Start the SSH service

systemctl start sshd
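If you run the key step above a second time, ssh-keygen stops to ask before overwriting id_rsa, and the `cat >>` line adds a duplicate key. The following is a minimal idempotent sketch. It assumes you run it as the user that Hadoop's start scripts will SSH as (root in this container). `setup_ssh_self` is a hypothetical name. The directory argument exists only so the function can be tried on a scratch directory. The function also sets the permissions sshd expects: with the default `StrictModes yes`, sshd ignores an authorized_keys file that is group- or world-writable.

```shell
# Hypothetical helper: passwordless SSH to the local machine, safe to re-run.
setup_ssh_self() {
  local ssh_dir=${1:-$HOME/.ssh}
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  # Generate a key only if one does not exist yet (avoids the overwrite prompt).
  if [ ! -f "$ssh_dir/id_rsa" ]; then
    ssh-keygen -q -t rsa -f "$ssh_dir/id_rsa" -N '' || return 1
  fi
  touch "$ssh_dir/authorized_keys"
  # Append the public key only if that exact line is not already present.
  if ! grep -qxF "$(cat "$ssh_dir/id_rsa.pub")" "$ssh_dir/authorized_keys"; then
    cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
  fi
  # sshd with StrictModes rejects group/world-writable key files.
  chmod 600 "$ssh_dir/authorized_keys"
}

# Usage inside the container: setup_ssh_self
```

After it runs, `ssh singleNode hostname` should print singleNode without asking for a password. The first connection still asks you to confirm the host key.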

- Set the time zone

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

- If a firewall is running, stop and disable it:

systemctl stop firewalld

systemctl disable firewalld

- Create a directory:

#Holds the extracted packages

mkdir -p /opt/install

- Exit the container:

exit

- Upload the big data component packages to a directory named software on the Ubuntu host

- Copy the component packages from Ubuntu into the container

sudo docker cp /home/zy/software/ singleNode:/opt/
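Every later step expects a specific archive name under /opt/software. A missing or differently named file therefore only shows up partway through the installation. A small pre-flight check on the Ubuntu host can catch this before `docker cp` runs. `check_software` is a hypothetical helper. The file list simply repeats the names used in the install steps of this guide.

```shell
# File names taken from the install steps of this guide (one per line).
REQUIRED="MySQL-5.5.40-1.linux2.6.x86_64.rpm-bundle.tar
jdk-8u171-linux-x64.tar.gz
hadoop-2.6.0-cdh5.14.2.tar_2.gz
hive-1.1.0-cdh5.14.2.tar.gz
sqoop-1.4.6-cdh5.14.2.tar.gz
mysql-connector-java-5.1.31.jar
java-json.jar"

# Hypothetical helper: report every expected file missing from DIR.
# Returns non-zero if anything is missing.
check_software() {
  local dir=$1 f missing=0
  for f in $REQUIRED; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $f"
      missing=1
    fi
  done
  return "$missing"
}

# Usage on the Ubuntu host:
#   check_software /home/zy/software && sudo docker cp /home/zy/software/ singleNode:/opt/
```

/opt already exists in the container, so `docker cp` copies the software directory itself into it. That is why the later steps find the archives under /opt/software.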

- Re-enter the container

sudo docker exec -it singleNode /bin/bash

Installing MySQL

- Go to the package directory

cd /opt/software

- Extract the bundle (it is a plain .tar archive, so the z flag is not needed)

tar xvf MySQL-5.5.40-1.linux2.6.x86_64.rpm-bundle.tar -C /opt/install

- Install dependencies

yum -y install libaio perl

- Install the server and the client

#First go to the directory MySQL was extracted to

cd /opt/install

#Install the server

rpm -ivh MySQL-server-5.5.40-1.linux2.6.x86_64.rpm

#Install the client

rpm -ivh MySQL-client-5.5.40-1.linux2.6.x86_64.rpm

- Start and configure MySQL

#Step 1: start the service

systemctl start mysql

#Step 2: set the root password

/usr/bin/mysqladmin -u root password 'root'

#Step 3: log in to MySQL

mysql -uroot -proot

#Step 4: let root connect from any host, then delete the anonymous and host-specific accounts

> update mysql.user set host='%' where host='localhost';

> delete from mysql.user where host<>'%' or user='';

> flush privileges;

#Done; exit the client

quit
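Step 4 can also be run without the interactive prompt by feeding the same statements to the mysql client on standard input. The following is a minimal sketch. `remote_root_sql` is a hypothetical helper that only prints the SQL shown above. The password `root` is the one set in step 2.

```shell
# Hypothetical helper: print the step-4 statements so they can be piped
# into the mysql client instead of typed at the mysql> prompt.
remote_root_sql() {
  cat <<'SQL'
UPDATE mysql.user SET host='%' WHERE host='localhost';
DELETE FROM mysql.user WHERE host<>'%' OR user='';
FLUSH PRIVILEGES;
SQL
}

# Usage inside the container (after steps 1 and 2):
#   remote_root_sql | mysql -uroot -proot
#   mysql -uroot -proot -e "SELECT user, host FROM mysql.user;"
```

If everything worked, the final query should list a single root account with host `%`.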

Installing the JDK

- Extract the package

tar zxvf /opt/software/jdk-8u171-linux-x64.tar.gz -C /opt/install/

- Create a symbolic link

#Name it java

ln -s /opt/install/jdk1.8.0_171 /opt/install/java

- Configure environment variables:

vi /etc/profile

#Add the following lines

export JAVA_HOME=/opt/install/java

export PATH=$JAVA_HOME/bin:$PATH

- Apply the configuration:

source /etc/profile

- Check the Java version:

java -version
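Each install step in this guide appends lines to /etc/profile by hand. Running a step twice leaves duplicate export lines: harmless for JAVA_HOME, but PATH keeps growing. The following is a minimal sketch of an append-only-once helper. `append_once` is a hypothetical name, not a system command. The single quotes keep `$JAVA_HOME` and `$PATH` unexpanded, so they are written literally, just as in the manual edit.

```shell
# Hypothetical helper: append LINE to FILE unless that exact line is present.
append_once() {
  local file=$1 line=$2
  touch "$file"
  # -x: whole-line match, -F: literal text (no regex), --: line may start with "-"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage inside the container (as root):
#   append_once /etc/profile 'export JAVA_HOME=/opt/install/java'
#   append_once /etc/profile 'export PATH=$JAVA_HOME/bin:$PATH'
#   source /etc/profile
```

The same helper works for the Hadoop, Hive and Sqoop variables added later.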

Installing Hadoop

- Extract the package

tar zxvf /opt/software/hadoop-2.6.0-cdh5.14.2.tar_2.gz -C /opt/install/

- Create a symbolic link

ln -s /opt/install/hadoop-2.6.0-cdh5.14.2 /opt/install/hadoop

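- Configure core-site.xml (the Hadoop configuration files are in /opt/install/hadoop/etc/hadoop)

cd /opt/install/hadoop/etc/hadoop

vi core-site.xml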

-------------------------------------------

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://singleNode:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/install/hadoop/data/tmp</value>

</property>

</configuration>

-------------------------------------------
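Instead of editing core-site.xml in vi, you can write the same content non-interactively with a quoted heredoc, which keeps the XML exactly as typed. The following is a minimal sketch. `write_core_site` is a hypothetical helper, and it overwrites any existing core-site.xml. The directory defaults to /opt/install/hadoop/etc/hadoop, where this Hadoop layout keeps its configuration files.

```shell
# Hypothetical helper: write core-site.xml into CONF_DIR (overwrites it).
write_core_site() {
  local conf_dir=${1:-/opt/install/hadoop/etc/hadoop}
  mkdir -p "$conf_dir"
  # Quoted 'EOF' disables $-expansion, so the XML is written verbatim.
  cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://singleNode:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/install/hadoop/data/tmp</value>
  </property>
</configuration>
EOF
}

# Usage inside the container: write_core_site
```

The host name singleNode resolves inside the container because it was created with `-h singleNode`.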

- Configure hdfs-site.xml

vi hdfs-site.xml

-------------------------------------------

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

-------------------------------------------
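Hand-typed XML is easy to break: a dropped `>` on a closing tag makes Hadoop fail to parse the file when it starts. One way to avoid this is to generate the file from name/value pairs. The following is a minimal sketch. `write_site_xml` is a hypothetical helper that overwrites the target file. It writes values verbatim without XML escaping, which is fine for the host names, numbers and paths used in this guide.

```shell
# Hypothetical helper: write_site_xml FILE name1 value1 [name2 value2 ...]
# Generates a Hadoop-style <configuration> file in which every tag is closed.
write_site_xml() {
  local file=$1
  shift
  {
    printf '<?xml version="1.0"?>\n<configuration>\n'
    while [ $# -ge 2 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    printf '</configuration>\n'
  } > "$file"
}

# Usage inside the container:
#   write_site_xml /opt/install/hadoop/etc/hadoop/hdfs-site.xml dfs.replication 1
```

The same call shape covers mapred-site.xml and yarn-site.xml: pass each property as a name followed by its value.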

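- Configure mapred-site.xml (Hadoop reads mapred-site.xml, not the .template file, so copy the template first)

cp mapred-site.xml.template mapred-site.xml

vi mapred-site.xml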

-------------------------------------------

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>singleNode:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>singleNode:19888</value>

</property>

</configuration>

-------------------------------------------

- Configure yarn-site.xml

vi yarn-site.xml

-------------------------------------------

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>singleNode</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>

</configuration>

-------------------------------------------

- Configure hadoop-env.sh

vi hadoop-env.sh

-------------------------------------------

export JAVA_HOME=/opt/install/java

-------------------------------------------

- Configure mapred-env.sh

vi mapred-env.sh

-------------------------------------------

export JAVA_HOME=/opt/install/java

-------------------------------------------

- Configure yarn-env.sh

vi yarn-env.sh

-------------------------------------------

export JAVA_HOME=/opt/install/java

-------------------------------------------
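All three scripts get the same `export JAVA_HOME=...` line. Each file already contains its own JAVA_HOME line: an active one in hadoop-env.sh, a commented-out one in the other two. The following is a minimal sketch that makes the edit in one pass. `set_java_home` is a hypothetical helper. It puts the export at the top of each file because, in Hadoop 2.x, yarn-env.sh exits with an error partway through if JAVA_HOME is still unset. A line appended at the end could therefore come too late.

```shell
# Hypothetical helper: set_java_home JAVA_HOME_DIR FILE...
# Drops any active "export JAVA_HOME=" line (commented ones are kept) and
# writes a single export as the first line of each file.
set_java_home() {
  local java_home=$1 f
  shift
  for f in "$@"; do
    {
      printf 'export JAVA_HOME=%s\n' "$java_home"
      # grep -v exits 1 when every line was filtered out; not an error here.
      grep -v '^[[:space:]]*export JAVA_HOME=' "$f" || true
    } > "$f.tmp" && mv "$f.tmp" "$f"   # the scripts are sourced, so mode bits do not matter
  done
}

# Usage inside the container:
#   cd /opt/install/hadoop/etc/hadoop
#   set_java_home /opt/install/java hadoop-env.sh mapred-env.sh yarn-env.sh
```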

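- Configure slaves: the default slaves file already lists localhost, which is enough for this single-node setup

- Add the Hadoop environment variables to /etc/profile

vi /etc/profile

#Add the following lines

export HADOOP_HOME=/opt/install/hadoop

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

- Apply the configuration:

source /etc/profile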

- Format HDFS

hdfs namenode -format

- Start the Hadoop services

start-all.sh

- Open the web UI in a browser

#Address: the Ubuntu host's IP and the mapped port 50070

192.168.**.**:50070
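Before you open the web UI, `jps` shows whether all the daemons came up. On this single-node setup, start-all.sh should leave five Hadoop processes running. HDFS runs NameNode, DataNode and SecondaryNameNode, and YARN runs ResourceManager and NodeManager. The following is a minimal sketch of a check. `check_hadoop_daemons` is a hypothetical helper. It reads `jps` output on standard input and names whatever is missing.

```shell
# Hypothetical helper: read `jps` output on stdin and report missing daemons.
# Returns non-zero if any expected daemon is absent.
check_hadoop_daemons() {
  local procs d missing=0
  # jps prints "<pid> <main class>"; keep only the class names.
  procs=$(awk '{print $2}')
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # -x: exact match, so "NameNode" does not match "SecondaryNameNode".
    if ! printf '%s\n' "$procs" | grep -qx "$d"; then
      echo "missing: $d"
      missing=1
    fi
  done
  return "$missing"
}

# Usage inside the container:
#   jps | check_hadoop_daemons && echo "all Hadoop daemons are up"
```

If a daemon is missing, its log under /opt/install/hadoop/logs is the place to look next.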

Installing Hive

- Extract the package

tar zxvf /opt/software/hive-1.1.0-cdh5.14.2.tar.gz -C /opt/install/

- Create a symbolic link

ln -s /opt/install/hive-1.1.0-cdh5.14.2 /opt/install/hive

- Edit the configuration files:

#Go to the configuration directory

cd /opt/install/hive/conf/

- Edit hive-site.xml

vi hive-site.xml

-------------------------------------------

<configuration>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/home/hadoop/hive/warehouse</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://singleNode:3306/hive?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

</property>

<property>

<name>hive.exec.scratchdir</name>

<value>/home/hadoop/hive/data/hive-${user.name}</value>

<description>Scratch space for Hive jobs</description>

</property>

<property>

<name>hive.exec.local.scratchdir</name>

<value>/home/hadoop/hive/data/${user.name}</value>

<description>Local scratch space for Hive jobs</description>

</property>

</configuration>

-------------------------------------------

- Edit hive-env.sh (Hive reads hive-env.sh, not the .template file, so copy the template first)

cp hive-env.sh.template hive-env.sh

vi hive-env.sh

-------------------------------------------

HADOOP_HOME=/opt/install/hadoop

-------------------------------------------

- Add the MySQL JDBC driver

cp /opt/software/mysql-connector-java-5.1.31.jar /opt/install/hive/lib/

- Add environment variables

vi /etc/profile

#Add the following lines

export HIVE_HOME=/opt/install/hive

export PATH=$HIVE_HOME/bin:$PATH

- Apply the configuration:

source /etc/profile

- Start the metastore and HiveServer2 in the background

nohup hive --service metastore &

nohup hive --service hiveserver2 &

- Check the processes with jps (the metastore and HiveServer2 each show up as a RunJar process)
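`nohup ... &` returns immediately, but the metastore and especially HiveServer2 can take a while before they accept connections, so a client started right away may be refused. The following is a minimal sketch that polls a TCP port. `wait_for_port` is a hypothetical helper. It relies on bash's `/dev/tcp` redirection; other shells simply report the port as closed. The ports 9083 (metastore) and 10000 (HiveServer2) are the defaults, assumed here because hive-site.xml above does not change them.

```shell
# Hypothetical helper: wait_for_port HOST PORT [TIMEOUT_SECONDS]
# Returns 0 once HOST:PORT accepts a TCP connection, 1 after TIMEOUT seconds.
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-60} i=0
  while [ "$i" -lt "$timeout" ]; do
    # Opening /dev/tcp/HOST/PORT connects in bash; the subshell closes it again.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  return 1
}

# Usage inside the container (bash):
#   wait_for_port singleNode 9083 120 && wait_for_port singleNode 10000 120 \
#     && beeline -u jdbc:hive2://singleNode:10000 -n root
```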

Installing Sqoop

- Extract the package

tar zxvf /opt/software/sqoop-1.4.6-cdh5.14.2.tar.gz -C /opt/install/

- Create a symbolic link

ln -s /opt/install/sqoop-1.4.6-cdh5.14.2 /opt/install/sqoop

- Edit sqoop-env.sh (Sqoop reads sqoop-env.sh, so copy it from the template first)

cd /opt/install/sqoop/conf/

cp sqoop-env-template.sh sqoop-env.sh

vi sqoop-env.sh

-------------------------------------------

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/opt/install/hadoop

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/opt/install/hadoop

#Set the path to where bin/hive is available

export HIVE_HOME=/opt/install/hive

-------------------------------------------

- Add the dependency JARs

cp /opt/software/mysql-connector-java-5.1.31.jar /opt/install/sqoop/lib/

cp /opt/software/java-json.jar /opt/install/sqoop/lib/

- Add environment variables

vi /etc/profile

#Add the following lines

export SQOOP_HOME=/opt/install/sqoop

export PATH=$SQOOP_HOME/bin:$PATH

- Apply the configuration:

source /etc/profile

- Check the version

sqoop version
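`sqoop version` only proves the binary runs. Listing the MySQL databases also exercises the JDBC driver copied into sqoop/lib and the root account opened up during the MySQL step. The following is a minimal sketch. `sqoop_smoke_test` is a hypothetical wrapper around Sqoop's `list-databases` tool, and the connection details are the ones used earlier in this guide. Passing `--password` on the command line is convenient on a test box but exposes the password in the process list. Sqoop's `-P` option prompts for it instead.

```shell
# Hypothetical wrapper: list MySQL databases through Sqoop as a smoke test.
# Host, port, user and password are the values used earlier in this guide.
sqoop_smoke_test() {
  if ! command -v sqoop >/dev/null 2>&1; then
    echo "sqoop is not on PATH; run: source /etc/profile" >&2
    return 1
  fi
  sqoop list-databases \
    --connect jdbc:mysql://singleNode:3306/ \
    --username root \
    --password root
}

# Usage inside the container: sqoop_smoke_test
```

Once the Hive metastore has connected to MySQL, the list should include the hive database. It is created by `createDatabaseIfNotExist=true` in the JDBC URL in hive-site.xml.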