Manual Deployment of All OpenStack Train ("T" Release) Components

Deploying Keystone

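For the base environment, see the article on OpenStack base environment deployment.

When deploying OpenStack components, the identity service (Keystone) must be installed first.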

Create the database and database user

Control node

mysql -u root -p123123

MariaDB [(none)]> create database keystone;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';

MariaDB [(none)]> flush privileges;

MariaDB [(none)]> exit
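The same create-and-grant pattern is repeated later for Glance, Placement, Nova, and Neutron. As a rough sketch, the statements can be generated by a small shell function. The name service_db_sql is a hypothetical helper introduced here, and IF NOT EXISTS is an addition that the original session does not use.

```shell
# Print the SQL for one service database (dry run: nothing is sent to the server).
# Arguments: database name, database user, password.
# service_db_sql is a hypothetical helper name, not part of MariaDB or OpenStack.
service_db_sql() {
  db=$1
  user=$2
  pass=$3
  cat <<EOF
CREATE DATABASE IF NOT EXISTS $db;
GRANT ALL PRIVILEGES ON $db.* TO '$user'@'localhost' IDENTIFIED BY '$pass';
GRANT ALL PRIVILEGES ON $db.* TO '$user'@'%' IDENTIFIED BY '$pass';
FLUSH PRIVILEGES;
EOF
}

# Show the statements for the keystone database.
service_db_sql keystone keystone KEYSTONE_DBPASS
```

To apply the statements, the output could be piped into the mysql client, for example `service_db_sql keystone keystone KEYSTONE_DBPASS | mysql -u root -p`.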

Install and configure Keystone, the database connection, and Apache

yum -y install openstack-keystone httpd mod_wsgi

cp -a /etc/keystone/keystone.conf{,.bak}

grep -Ev "^$|#" /etc/keystone/keystone.conf.bak > /etc/keystone/keystone.conf
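The grep filter above removes blank lines and every line that contains a `#` character. A minimal sketch on a throwaway file shows the effect; the sample contents are made up for illustration and do not come from keystone.conf.

```shell
# Build a small sample config in a temp file (contents are hypothetical).
sample=$(mktemp)
printf '%s\n' \
  '[DEFAULT]' \
  '# a full-line comment' \
  '' \
  'debug = false' \
  'password = abc#123' > "$sample"

# Same filter as in the guide: -E extended regex, -v invert the match.
# It drops ANY line containing "#", not only comment lines,
# so the "password" line above is removed as well.
filtered=$(grep -Ev '^$|#' "$sample")
printf '%s\n' "$filtered"
rm -f "$sample"
```

If a value may legitimately contain `#`, a stricter pattern such as `'^[[:space:]]*(#|$)'` only drops lines that are empty or begin with a comment marker.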

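The mod_wsgi package lets Apache host Python WSGI applications. The OpenStack components, including their APIs, are written in Python, but clients talk to Apache, which hands each request to the Python application for processing. These packages are installed on the control node only.

Keystone reaches MySQL through the pymysql driver; the connection URL specifies the user name, password, database host name, and database name.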

openstack-config --set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:KEYSTONE_DBPASS@ct/keystone

Set the token provider. Keystone itself issues the tokens; fernet selects the token format it uses.

openstack-config --set /etc/keystone/keystone.conf token provider fernet

Fernet: a secure message format based on symmetric authenticated encryption

Initialize the identity service database

su -s /bin/sh -c "keystone-manage db_sync" keystone

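Initialize the Fernet key repositories (the commands below generate the keys and store them in the fernet-keys and credential-keys directories under /etc/keystone/, where they are used to encrypt data)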

cd /etc/keystone/

ll

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Bootstrap the identity service

keystone-manage bootstrap --bootstrap-password ADMIN_PASS \
--bootstrap-admin-url http://ct:5000/v3/ \
--bootstrap-internal-url http://ct:5000/v3/ \
--bootstrap-public-url http://ct:5000/v3/ \
--bootstrap-region-id RegionOne #specify a region name

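This step initializes OpenStack: it writes the admin user's information to the user table in MySQL and records the URLs and related data in the corresponding tables.

admin-url is the management network (for example, the internal OpenStack management network of a public cloud), used for operations such as scaling out or deleting virtual machines. If the public network and the management network were the same network, heavy business traffic could make it impossible to scale out virtual machines from the OpenStack control plane, which is why a separate management network is needed.

internal-url is the internal network used for data transfer, for example virtual machines accessing storage, databases, and middleware such as ZooKeeper. It cannot be reached from outside and is for access within the enterprise only.

public-url is the public network that users can reach (as in a public cloud). This environment does not have these separate networks, so all three URLs share one network.

Port 5000 is the port on which Keystone provides authentication.

In a high-availability setup, a listen entry for this port must be added on the HAProxy server.

The URLs for each network must use the domain name of the controller node, which in high-availability mode is usually the domain name of the HAProxy VIP.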

Configure Apache

echo "ServerName controller" >> /etc/httpd/conf/httpd.conf

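Create the configuration file. The openstack-keystone package ships wsgi-keystone.conf under /usr/share/keystone/; the file defines the virtual host and listens on port 5000, and mod_wsgi acts as the gateway between Apache and Python.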

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

Start the service

systemctl enable httpd

systemctl start httpd

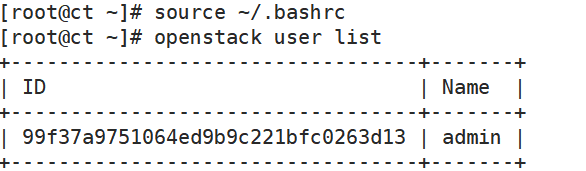

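Configure environment variables for the admin account

These environment variables are used when creating roles and projects. Creating them requires credentials, so the user name, password, and other authentication details are declared as environment variables. The openstack client reads them and authenticates as admin on every call, which allows OpenStack to be operated non-interactively.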

cat >> ~/.bashrc << EOF

export OS_USERNAME=admin #console login user name

export OS_PASSWORD=ADMIN_PASS #console login password

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://ct:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

source ~/.bashrc
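Before running openstack commands, it can help to confirm that the variables are actually present in the current shell. The following is a minimal sketch; check_os_env is a hypothetical helper name and is not part of the OpenStack client.

```shell
# Report which of the required OS_* variables are unset or empty.
# Prints "ok" when all are set; otherwise prints the missing names and returns 1.
# check_os_env is a hypothetical helper, not an OpenStack command.
check_os_env() {
  missing=""
  for name in OS_USERNAME OS_PASSWORD OS_PROJECT_NAME \
              OS_USER_DOMAIN_NAME OS_PROJECT_DOMAIN_NAME OS_AUTH_URL; do
    # Indirect lookup of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    [ -n "$value" ] || missing="$missing $name"
  done
  if [ -n "$missing" ]; then
    echo "missing:$missing"
    return 1
  fi
  echo "ok"
}
```

Calling `check_os_env` right after `source ~/.bashrc` gives a quick indication of whether the heredoc was written and loaded as intended.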

The openstack command can now be used, for example:

openstack user list

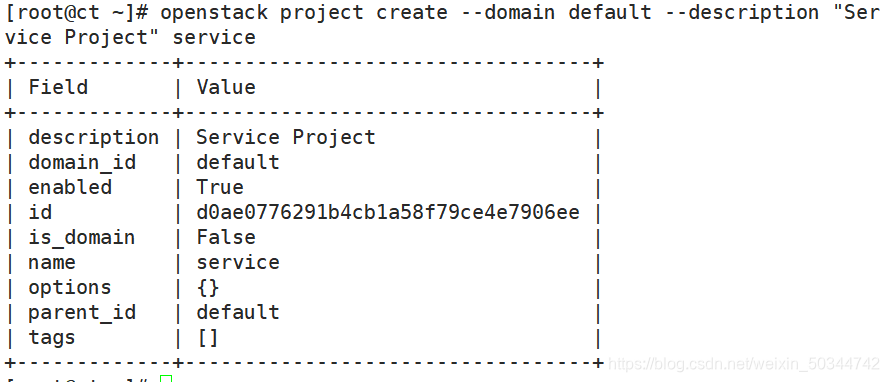

Create OpenStack domains, projects, users, and roles

Create a project named service in the specified domain, with a description (domains can be listed with openstack domain list)

openstack project create --domain default --description "Service Project" service

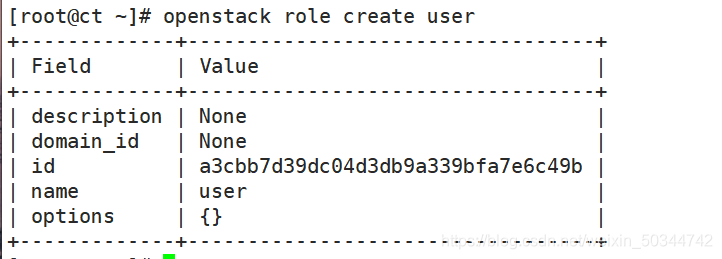

Create a role

openstack role create user

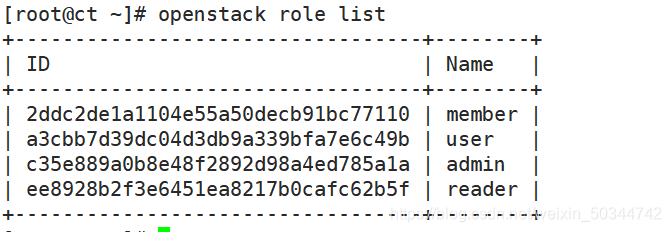

List the OpenStack roles

openstack role list

admin: administrator

member: tenant (project member)

user: regular user

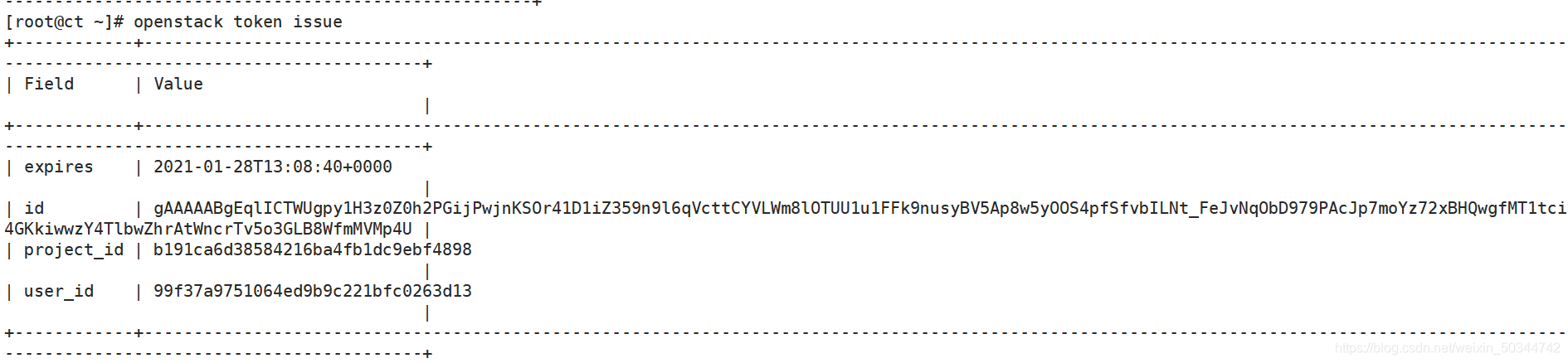

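Verify the identity service by checking that a token can be obtained without typing a password (the credentials come from the environment variables)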

openstack token issue

Deploying Glance

Create the database and database user

[root@ct ~]# mysql -u root -p123123

MariaDB [(none)]> CREATE DATABASE glance;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';

MariaDB [(none)]> flush privileges;

MariaDB [(none)]> exit

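Create the user and edit the configuration files

Create the OpenStack glance user. Before creating users, the admin environment variables must be loaded (already defined in ~/.bashrc)

Control node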

openstack user create --domain default --password GLANCE_PASS glance

#Create the glance user

openstack role add --project service --user glance admin

#Add the glance user to the service project with the admin role; registering the Glance API requires admin rights on the service project

openstack service create --name glance --description "OpenStack Image" image

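#Create a service entry named glance of type image; afterwards it can be checked with openstack service list

Create the image service API endpoints. OpenStack registers three endpoint interfaces for each service: admin, internal, and public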

openstack endpoint create --region RegionOne image public http://ct:9292

openstack endpoint create --region RegionOne image internal http://ct:9292

openstack endpoint create --region RegionOne image admin http://ct:9292
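Each service in this guide registers the same URL under the three interfaces, so the commands differ only in the interface name. A small dry-run sketch of that pattern follows; print_endpoint_cmds is a hypothetical helper that only prints the commands and does not execute them.

```shell
# Print the three endpoint-creation commands for one service (dry run).
# Arguments: service type, endpoint URL, optional region (defaults to RegionOne).
# print_endpoint_cmds is a hypothetical helper name.
print_endpoint_cmds() {
  svc_type=$1
  url=$2
  region=${3:-RegionOne}
  for iface in public internal admin; do
    echo "openstack endpoint create --region $region $svc_type $iface $url"
  done
}

# Reproduces the three Glance commands shown above.
print_endpoint_cmds image http://ct:9292
```

Once the printed commands look right, they could be executed by piping the output into `sh`, with the admin environment variables loaded.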

Install the package and edit the Glance configuration files.

yum -y install openstack-glance

cp -a /etc/glance/glance-api.conf{,.bak}

grep -Ev '^$|#' /etc/glance/glance-api.conf.bak > /etc/glance/glance-api.conf

Set the glance-api.conf options

openstack-config --set /etc/glance/glance-api.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@ct/glance

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken www_authenticate_uri http://ct:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_url http://ct:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken memcached_servers ct:11211

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken password GLANCE_PASS

openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone

openstack-config --set /etc/glance/glance-api.conf glance_store stores file,http

openstack-config --set /etc/glance/glance-api.conf glance_store default_store file

openstack-config --set /etc/glance/glance-api.conf glance_store filesystem_store_datadir /var/lib/glance/images/

View the configuration file

cat /etc/glance/glance-api.conf

[DEFAULT]

[cinder]

[cors]

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@ct/glance

[file]

[glance.store.http.store]

[glance.store.rbd.store]

[glance.store.sheepdog.store]

[glance.store.swift.store]

[glance.store.vmware_datastore.store]

[glance_store]

stores = file,http #store types; file: local files, http: images kept on other storage and fetched through API calls

default_store = file #default store

filesystem_store_datadir = /var/lib/glance/images/ ##local directory where images are stored

[image_format]

[keystone_authtoken]

www_authenticate_uri = http://ct:5000 ##URI of the Keystone service used for authentication

auth_url = http://ct:5000

memcached_servers = ct:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service #the glance user has the admin role on the service project

username = glance

password = GLANCE_PASS

[oslo_concurrency]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[paste_deploy]

flavor = keystone #use Keystone as the authentication provider

[profiler]

[store_type_location_strategy]

[task]

[taskflow_executor]

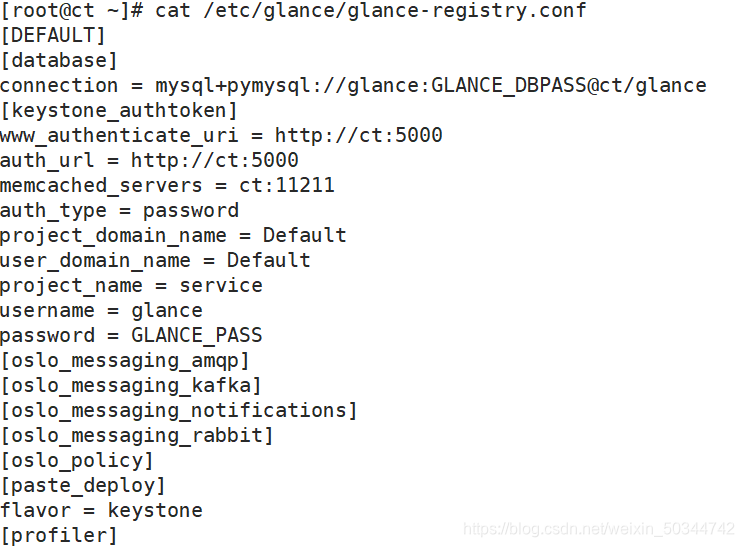
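Instead of reading the whole listing, a single option can be read back to confirm that it landed in the right section. The following awk-based sketch is one way to do that; ini_get is a hypothetical helper and assumes simple `key = value` lines without inline comments.

```shell
# Print the value of KEY inside [SECTION] of an ini-style FILE (first match wins).
# Usage: ini_get FILE SECTION KEY
# ini_get is a hypothetical helper, not part of openstack-config.
ini_get() {
  awk -v section="$2" -v key="$3" '
    # A line starting with "[" opens a new section; remember whether it is ours.
    /^\[/ { in_section = ($0 == ("[" section "]")); next }
    in_section {
      # Split "key = value" on the first "=" and trim surrounding whitespace.
      pos = index($0, "=")
      if (pos == 0) next
      k = substr($0, 1, pos - 1)
      v = substr($0, pos + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) { print v; exit }
    }
  ' "$1"
}
```

For example, `ini_get /etc/glance/glance-api.conf glance_store default_store` would be expected to print the store configured above.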

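Edit the glance-registry.conf configuration file (the registry service is deprecated upstream, so this step only applies when the installed package still ships the file)

cp -a /etc/glance/glance-registry.conf{,.bak}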

grep -Ev '^$|#' /etc/glance/glance-registry.conf.bak > /etc/glance/glance-registry.conf

openstack-config --set /etc/glance/glance-registry.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@ct/glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken www_authenticate_uri http://ct:5000

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_url http://ct:5000

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken memcached_servers ct:11211

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken password GLANCE_PASS

openstack-config --set /etc/glance/glance-registry.conf paste_deploy flavor keystone

Database and service setup

Initialize the Glance database and create its tables (this only needs to be done once)

su -s /bin/sh -c "glance-manage db_sync" glance

Start the Glance service

systemctl enable openstack-glance-api.service

systemctl start openstack-glance-api.service

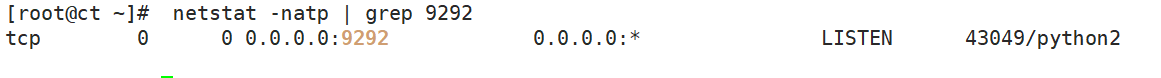

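Check the listening port (the Glance API uses 9292, as registered in the endpoints above)

Give the openstack-glance-api.service service write access to the storage location (-h: act on symbolic links themselves rather than on the files they point to)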

chown -hR glance:glance /var/lib/glance/

First upload the cirros image to /root on the control node, then import it into Glance, and finally check that it was created

openstack image create --file cirros-0.3.5-x86_64-disk.img --disk-format qcow2 --container-format bare --public cirros

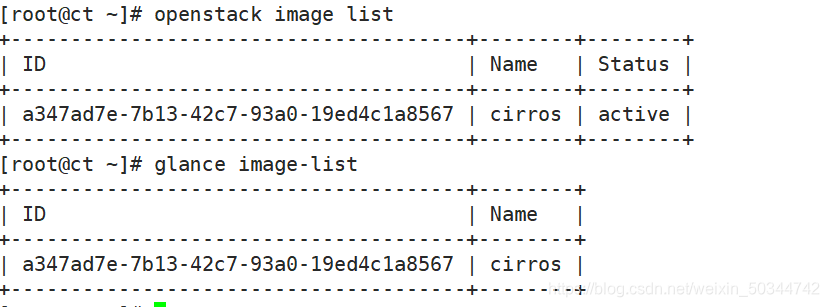

List the images

openstack image list

glance image-list

Deploying Placement

Create the database and database user

[root@ct ~]# mysql -uroot -p123123

MariaDB [(none)]> CREATE DATABASE placement;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'PLACEMENT_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'PLACEMENT_DBPASS';

MariaDB [(none)]> flush privileges;

MariaDB [(none)]> exit;

Create the service user and the API endpoints

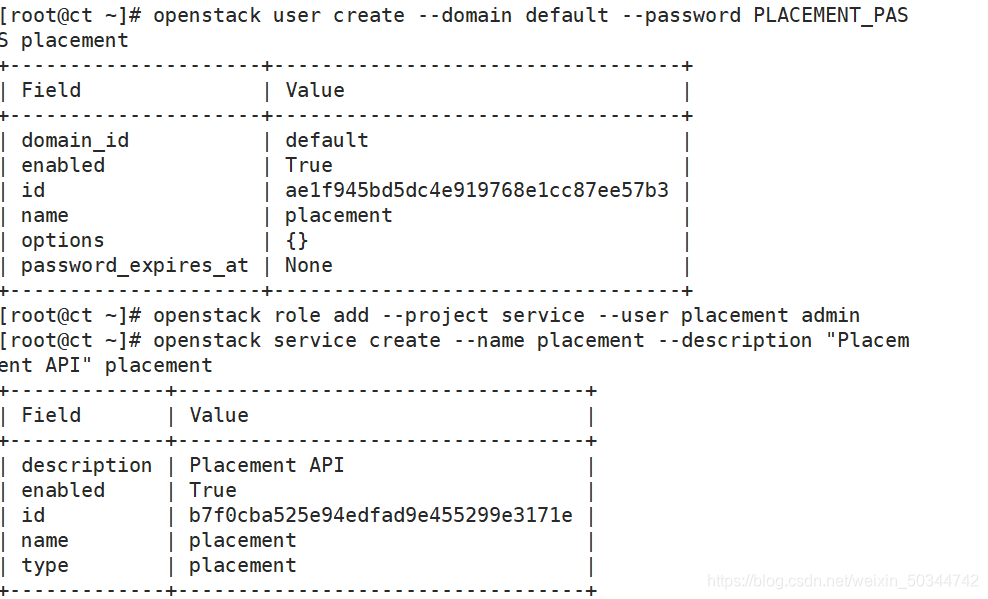

Create the placement user with the admin role on the service project

openstack user create --domain default --password PLACEMENT_PASS placement

openstack role add --project service --user placement admin

Create a service entry named placement of type placement, then register its API endpoints; the registration is stored in MySQL

openstack service create --name placement --description "Placement API" placement

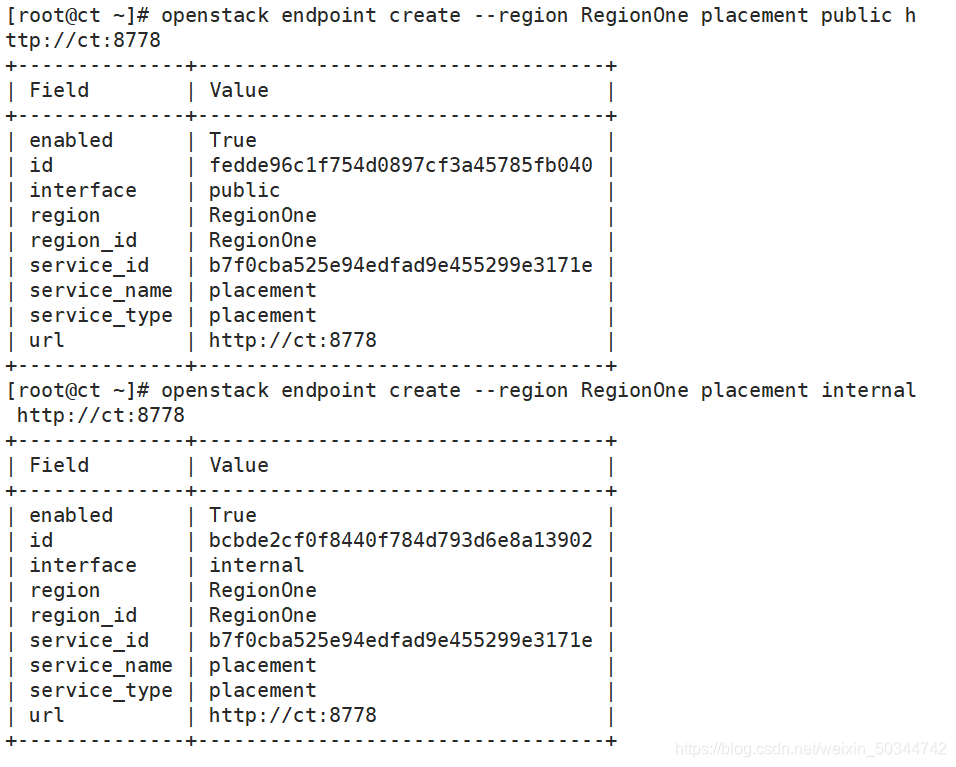

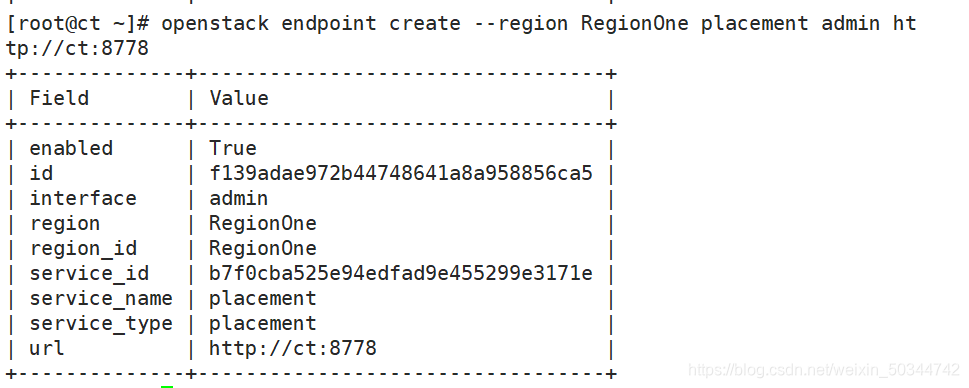

openstack endpoint create --region RegionOne placement public http://ct:8778

openstack endpoint create --region RegionOne placement internal http://ct:8778

openstack endpoint create --region RegionOne placement admin http://ct:8778

Install Placement

yum -y install openstack-placement-api

Edit the Placement configuration file

Set the options

cp /etc/placement/placement.conf{,.bak}

grep -Ev '^$|#' /etc/placement/placement.conf.bak > /etc/placement/placement.conf

openstack-config --set /etc/placement/placement.conf placement_database connection mysql+pymysql://placement:PLACEMENT_DBPASS@ct/placement

openstack-config --set /etc/placement/placement.conf api auth_strategy keystone

openstack-config --set /etc/placement/placement.conf keystone_authtoken auth_url http://ct:5000/v3

openstack-config --set /etc/placement/placement.conf keystone_authtoken memcached_servers ct:11211

openstack-config --set /etc/placement/placement.conf keystone_authtoken auth_type password

openstack-config --set /etc/placement/placement.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/placement/placement.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/placement/placement.conf keystone_authtoken project_name service

openstack-config --set /etc/placement/placement.conf keystone_authtoken username placement

openstack-config --set /etc/placement/placement.conf keystone_authtoken password PLACEMENT_PASS

View the Placement configuration file

cat /etc/placement/placement.conf

[DEFAULT]

[api]

auth_strategy = keystone

[cors]

[keystone_authtoken]

auth_url = http://ct:5000/v3 #Keystone address

memcached_servers = ct:11211 #session (token) data is cached in memcached

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = PLACEMENT_PASS

[oslo_policy]

[placement]

[placement_database]

connection = mysql+pymysql://placement:PLACEMENT_DBPASS@ct/placement

[profiler]

Populate the database

su -s /bin/sh -c "placement-manage db sync" placement

Edit the Apache configuration

cd /etc/httpd/conf.d/

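vim 00-placement-api.conf #append the block below at the end of the file

# Workaround for a packaging bug: this block must be added to enable access to the Placement API,

# otherwise requests to the API through Apache return 403.

# It allows Apache to access /usr/bin; without it /usr/bin/placement-api cannot be served.

# Apache only accepts comments on their own lines, so they are kept off the directive lines.

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

# Apache versions older than 2.4

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>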

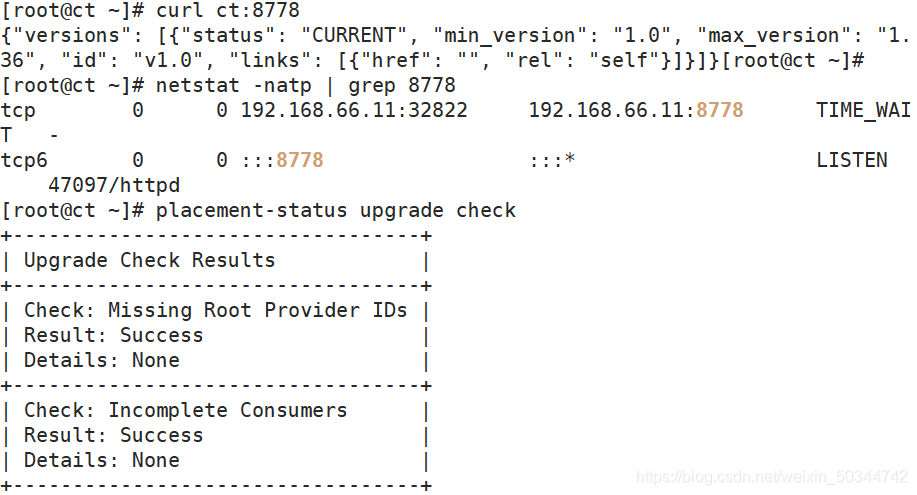

Restart Apache and test

systemctl restart httpd

curl ct:8778 #test access with curl

netstat -natp | grep 8778 #check the listening port (netstat or lsof)

placement-status upgrade check #check the Placement status

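Deploying Nova

Environment

| Node | Services |
|---|---|
| Control node ct | nova-api (main Nova service), nova-scheduler (Nova scheduling service), nova-conductor (Nova database service, provides database access), nova-novncproxy (Nova VNC service, provides instance consoles) |
| Compute nodes c1, c2 | nova-compute (Nova compute service) |

Control node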

[root@ct ~]# mysql -uroot -p123123

MariaDB [(none)]> CREATE DATABASE nova_api;

MariaDB [(none)]> CREATE DATABASE nova;

MariaDB [(none)]> CREATE DATABASE nova_cell0;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> flush privileges;

MariaDB [(none)]> exit

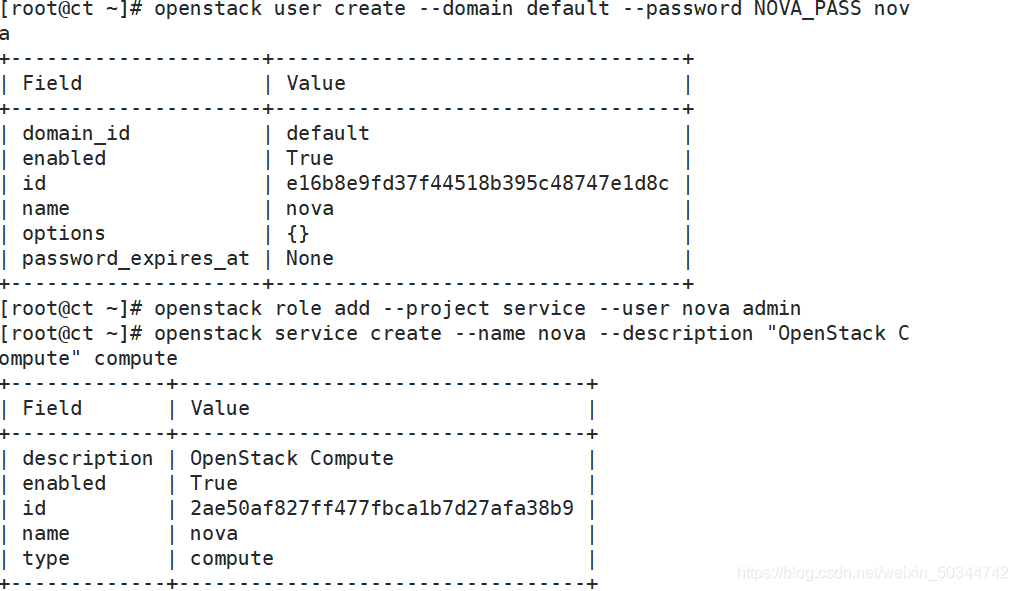

Create the nova user and add it to the service project with the admin role

openstack user create --domain default --password NOVA_PASS nova

openstack role add --project service --user nova admin

Create the nova service

openstack service create --name nova --description "OpenStack Compute" compute

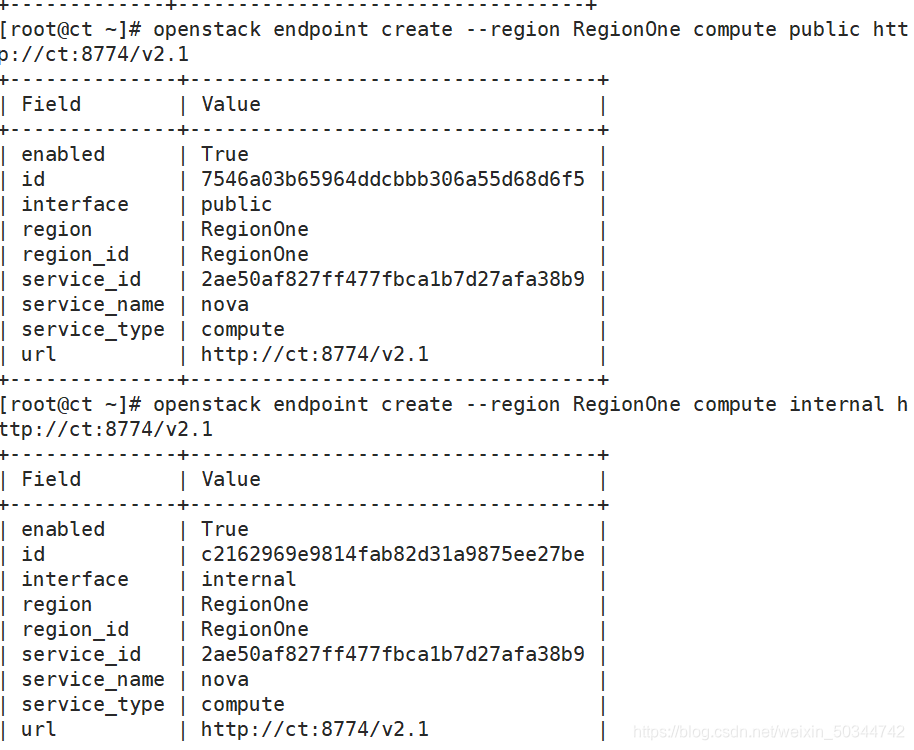

Associate endpoints with the Nova service

openstack endpoint create --region RegionOne compute public http://ct:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://ct:8774/v2.1

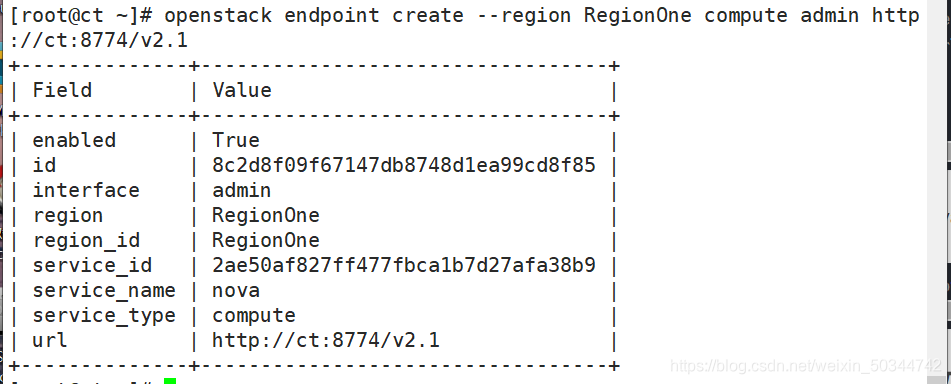

openstack endpoint create --region RegionOne compute admin http://ct:8774/v2.1

Install the Nova components (nova-api, nova-conductor, nova-novncproxy, nova-scheduler)

yum -y install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler

Edit the Nova configuration files

Edit nova.conf

cp -a /etc/nova/nova.conf{,.bak}

grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.66.11 #set to the IP address of ct

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@ct

openstack-config --set /etc/nova/nova.conf api_database connection mysql+pymysql://nova:NOVA_DBPASS@ct/nova_api

openstack-config --set /etc/nova/nova.conf database connection mysql+pymysql://nova:NOVA_DBPASS@ct/nova

openstack-config --set /etc/nova/nova.conf placement_database connection mysql+pymysql://placement:PLACEMENT_DBPASS@ct/placement

openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://ct:5000/v3

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers ct:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf vnc enabled true

openstack-config --set /etc/nova/nova.conf vnc server_listen '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf glance api_servers http://ct:9292

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

openstack-config --set /etc/nova/nova.conf placement project_name service

openstack-config --set /etc/nova/nova.conf placement auth_type password

openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

openstack-config --set /etc/nova/nova.conf placement auth_url http://ct:5000/v3

openstack-config --set /etc/nova/nova.conf placement username placement

openstack-config --set /etc/nova/nova.conf placement password PLACEMENT_PASS

View the nova.conf configuration file

cat /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata #API types to enable

my_ip = 192.168.66.11 #local IP address

use_neutron = true #obtain IP addresses through Neutron

firewall_driver = nova.virt.firewall.NoopFirewallDriver

transport_url = rabbit://openstack:RABBIT_PASS@ct #RabbitMQ connection

[api]

auth_strategy = keystone #authenticate through Keystone

[api_database]

connection = mysql+pymysql://nova:NOVA_DBPASS@ct/nova_api

[barbican]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[database]

connection = mysql+pymysql://nova:NOVA_DBPASS@ct/nova

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://ct:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken] #Keystone authentication settings

auth_url = http://ct:5000/v3 #URL used for authentication

memcached_servers = ct:11211 #memcached address:port

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

[libvirt]

[metrics]

[mks]

[neutron]

[notifications]

[osapi_v21]

[oslo_concurrency] #lock path

lock_path = /var/lib/nova/tmp #locks serialize the steps of instance creation: an operation must finish before the next one starts, so the steps run one at a time rather than in parallel

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://ct:5000/v3

username = placement

password = PLACEMENT_PASS

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

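[vnc] #if this section is misconfigured, the instance console cannot be reached

enabled = true

server_listen = $my_ip #VNC listen address

server_proxyclient_address = $my_ip #proxy client address; this is the local address on the management network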

[workarounds]

[wsgi]

[xenserver]

[xvp]

[zvm]

[placement_database]

connection = mysql+pymysql://placement:PLACEMENT_DBPASS@ct/placement

Initialize the databases

su -s /bin/sh -c "nova-manage api_db sync" nova #initialize the nova_api database

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova #register the cell0 database; Nova divides its resources into cells, which group compute nodes logically

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova #create the cell1 cell

su -s /bin/sh -c "nova-manage db sync" nova #initialize the nova database; check /var/log/nova/nova-manage.log to confirm that it succeeded

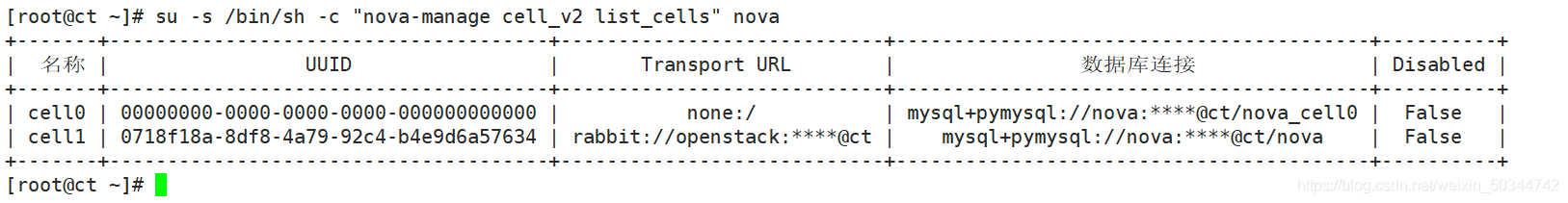

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova #verify that cell0 and cell1 are registered

Start the Nova services

systemctl enable openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl start openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

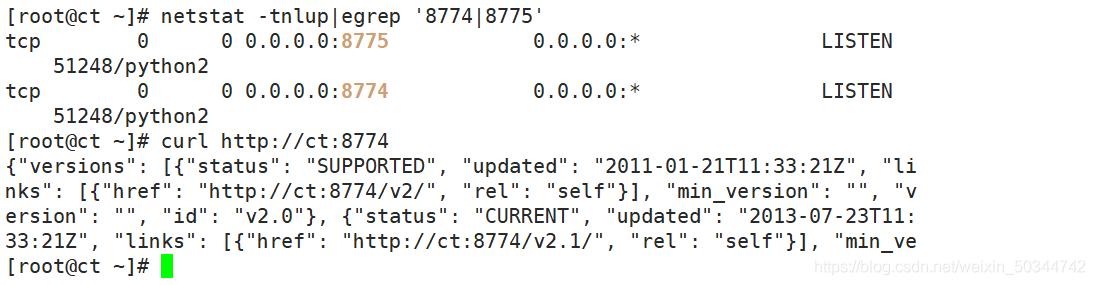

netstat -tnlup|egrep '8774|8775'

curl http://ct:8774

Compute nodes

Apart from the IP address, the configuration is identical on C1 and C2

Install nova-compute

yum -y install openstack-nova-compute

Edit the configuration file

cp -a /etc/nova/nova.conf{,.bak}

grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@ct

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.66.12 #set to the internal IP address of the node being configured

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://ct:5000/v3

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers ct:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf vnc enabled true

openstack-config --set /etc/nova/nova.conf vnc server_listen 0.0.0.0

openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://192.168.66.11:6080/vnc_auto.html

openstack-config --set /etc/nova/nova.conf glance api_servers http://ct:9292

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

openstack-config --set /etc/nova/nova.conf placement project_name service

openstack-config --set /etc/nova/nova.conf placement auth_type password

openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

openstack-config --set /etc/nova/nova.conf placement auth_url http://ct:5000/v3

openstack-config --set /etc/nova/nova.conf placement username placement

openstack-config --set /etc/nova/nova.conf placement password PLACEMENT_PASS

openstack-config --set /etc/nova/nova.conf libvirt virt_type qemu

View the configuration file

cat /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:RABBIT_PASS@ct

my_ip = 192.168.66.12

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api]

auth_strategy = keystone

[api_database]

[barbican]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[database]

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://ct:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

auth_url = http://ct:5000/v3

memcached_servers = ct:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

[libvirt]

virt_type = qemu

[metrics]

[mks]

[neutron]

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://ct:5000/v3

username = placement

password = PLACEMENT_PASS

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://192.168.66.11:6080/vnc_auto.html

#The IP address must be set explicitly; otherwise, once the deployment is complete, instances cannot be reached through the web UI console

[workarounds]

[wsgi]

[xenserver]

[xvp]

[zvm]

Start the services

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl start libvirtd.service openstack-nova-compute.service

Verification

Check that the compute nodes have registered with the controller through the message queue; run this on the controller node

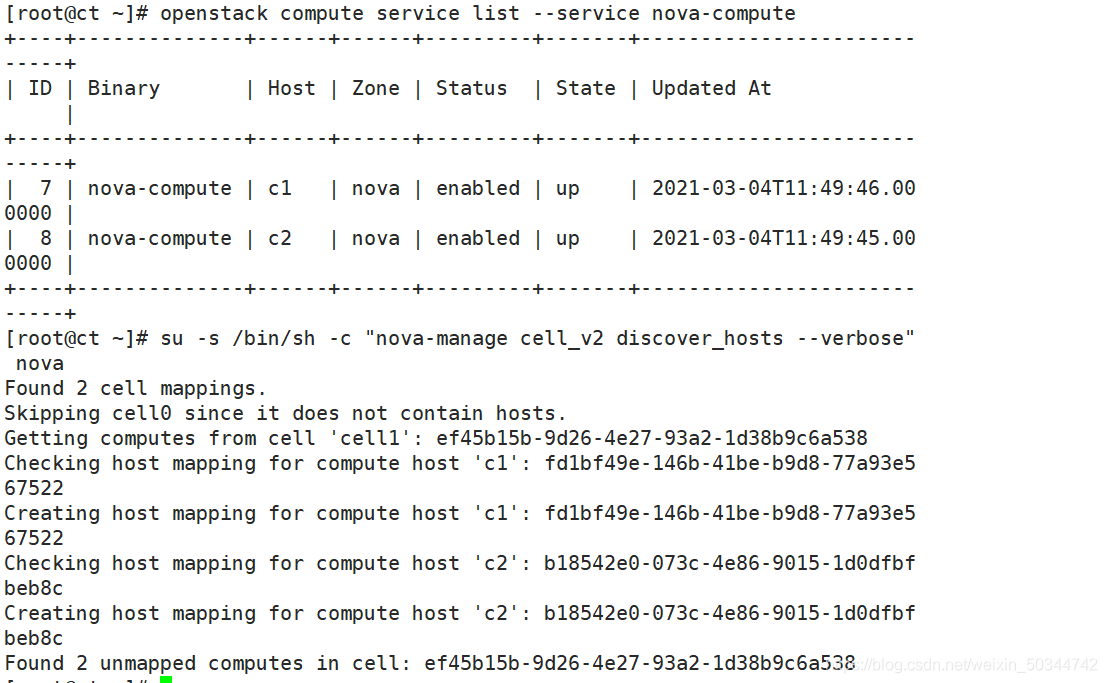

openstack compute service list --service nova-compute

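Scan for the compute nodes currently available in OpenStack. Discovered nodes are mapped into a cell, after which virtual machines can be created in that cell. In effect, OpenStack groups compute nodes internally by assigning them to cells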

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

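By default, a scan has to be run on the control node every time a compute node is added, which is tedious, so periodic discovery can be enabled in the main Nova configuration file on the control node

vim /etc/nova/nova.conf

[scheduler]

discover_hosts_in_cells_interval = 300 #scan every 300 seconds

systemctl restart openstack-nova-api.service openstack-nova-scheduler.service #the periodic discovery task runs in the scheduler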

验证计算节点服务

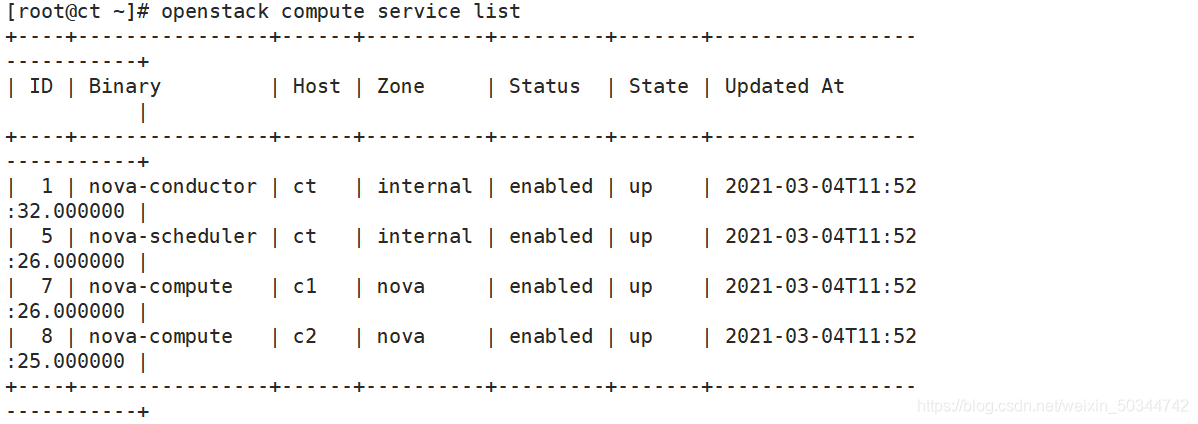

检查 nova 的各个服务是否都是正常,以及 compute 服务是否注册成功

openstack compute service list

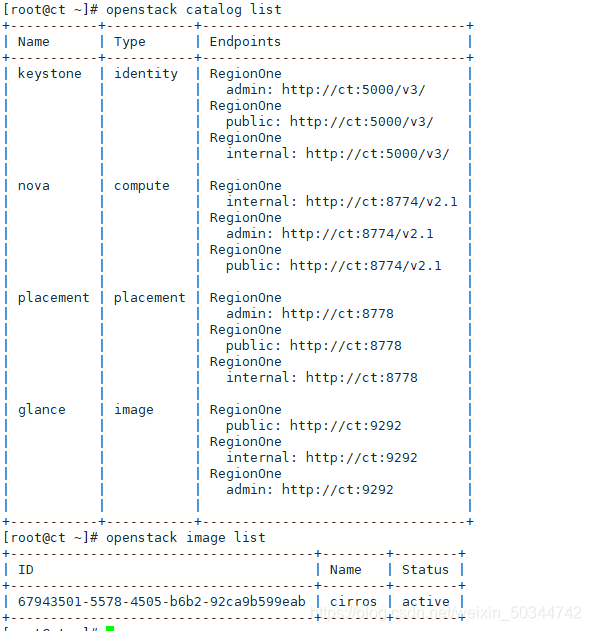

Check that each component's API works and that images can be retrieved

openstack catalog list

openstack image list

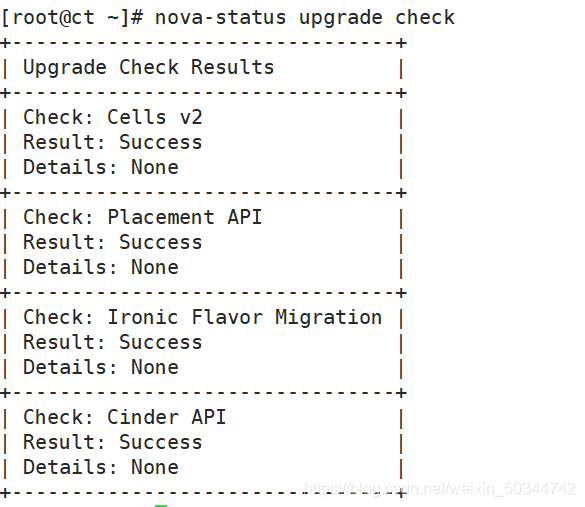

Check that the cells API and the Placement API are healthy; if either one fails, virtual machines cannot be created later

nova-status upgrade check

Deploying Neutron

Control node

Create the neutron database and grant privileges

Create the database

mysql -u root -p123123

MariaDB [(none)]> CREATE DATABASE neutron;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';

MariaDB [(none)]> flush privileges;

MariaDB [(none)]> exit

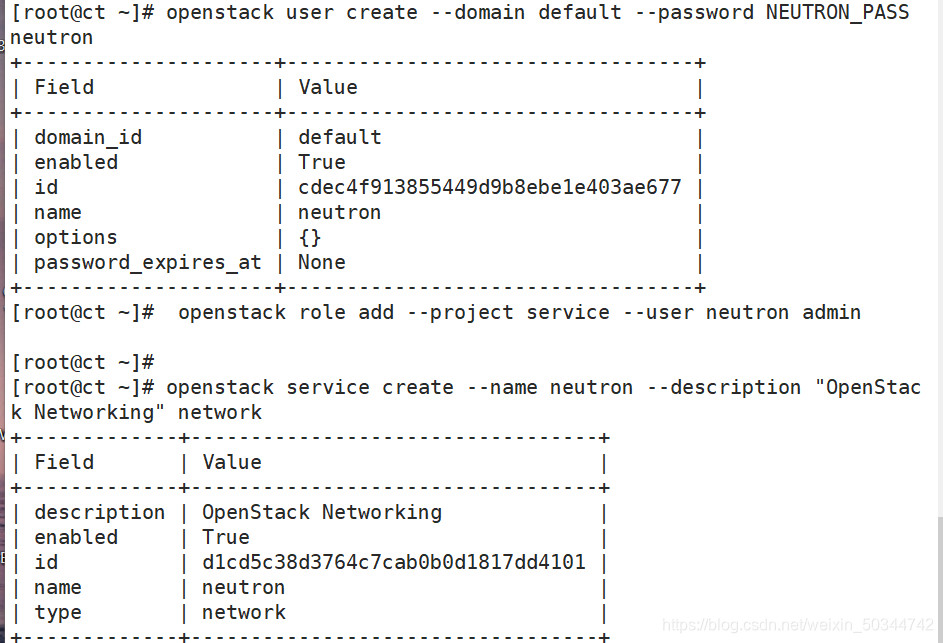

Create the user that authenticates with Keystone

openstack user create --domain default --password NEUTRON_PASS neutron

Add the neutron user to the service project with the admin role

openstack role add --project service --user neutron admin

Create the neutron service, of type network

openstack service create --name neutron --description "OpenStack Networking" network

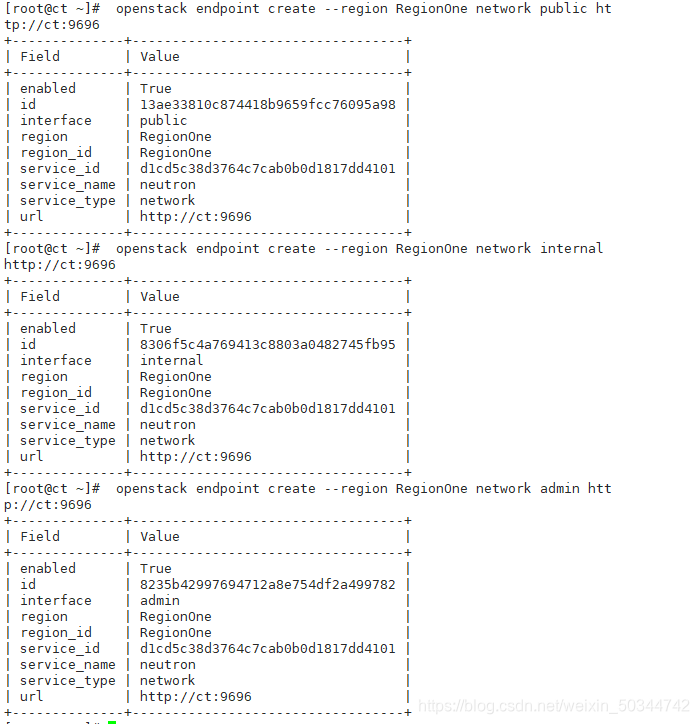

Register the API with the neutron service by adding its endpoints

openstack endpoint create --region RegionOne network public http://ct:9696

openstack endpoint create --region RegionOne network internal http://ct:9696

openstack endpoint create --region RegionOne network admin http://ct:9696

Install the packages for provider (bridged) networking

yum -y install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables conntrack-tools

Edit the main configuration file

cp -a /etc/neutron/neutron.conf{,.bak}

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf database connection mysql+pymysql://neutron:NEUTRON_DBPASS@ct/neutron

openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2

openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins router

openstack-config --set /etc/neutron/neutron.conf DEFAULT allow_overlapping_ips true

openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@ct

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes true

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes true

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken www_authenticate_uri http://ct:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://ct:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers ct:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

openstack-config --set /etc/neutron/neutron.conf nova auth_url http://ct:5000

openstack-config --set /etc/neutron/neutron.conf nova auth_type password

openstack-config --set /etc/neutron/neutron.conf nova project_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova user_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova region_name RegionOne

openstack-config --set /etc/neutron/neutron.conf nova project_name service

openstack-config --set /etc/neutron/neutron.conf nova username nova

openstack-config --set /etc/neutron/neutron.conf nova password NOVA_PASS

View the file

cat /etc/neutron/neutron.conf

[DEFAULT]

core_plugin = ml2 #enable the layer-2 plugin

service_plugins = router #enable the layer-3 plugin

allow_overlapping_ips = true

transport_url = rabbit://openstack:RABBIT_PASS@ct #RabbitMQ connection

auth_strategy = keystone #authenticate through Keystone

notify_nova_on_port_status_changes = true #notify Nova when the status of a port changes

notify_nova_on_port_data_changes = true #notify Nova when the data of a port changes

[cors]

[database] #database connection

connection = mysql+pymysql://neutron:NEUTRON_DBPASS@ct/neutron

[keystone_authtoken] #Keystone authentication settings

www_authenticate_uri = http://ct:5000

auth_url = http://ct:5000

memcached_servers = ct:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

[oslo_concurrency] #lock path

lock_path = /var/lib/neutron/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[privsep]

[ssl]

[nova] #Neutron needs to send data back to Nova

auth_url = http://ct:5000 #authenticate against Keystone as nova

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova #the nova user name and password are used to authenticate with Keystone

password = NOVA_PASS

ML2 plugin configuration file ml2_conf.ini

cp -a /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/ml2_conf.ini.bak > /etc/neutron/plugins/ml2/ml2_conf.ini

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers flat,vlan,vxlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types vxlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers linuxbridge,l2population

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 extension_drivers port_security

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_flat flat_networks provider

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_vxlan vni_ranges 1:1000

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_ipset true

View the file

cat /etc/neutron/plugins/ml2/ml2_conf.ini

[DEFAULT]

[ml2]

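type_drivers = flat,vlan,vxlan #driver types; flat (bridged) networks, VLAN, and VXLAN, so the layer-2 network supports bridging and VLAN-based segmentation

tenant_network_types = vxlan #tenant network type (vxlan)

mechanism_drivers = linuxbridge,l2population #enable the Linux bridge and layer-2 population mechanisms (l2population simplifies the network topology and reduces broadcast traffic)

extension_drivers = port_security #enable the port security extension driver, which enforces access control with iptables; note that security groups restrict some ports and can keep some services from working

[ml2_type_flat]

flat_networks = provider #configure the public (provider) virtual network as a flat network

[ml2_type_vxlan]

vni_ranges = 1:1000 #VXLAN network identifier (VNI) range for private networks

[securitygroup]

enable_ipset = true #enable ipset to make security group rules more efficient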

Linux bridge network provider configuration file

cp -a /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:eth1 #eth1 is the NIC name

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan local_ip 192.168.66.11 #IP address of the control node

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan l2_population true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

View the result

cat /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[linux_bridge]

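physical_interface_mappings = provider:eth1 #Maps the flat network name from the previous file (provider) to physical NIC eth1; instances later attached to the external network reach the outside through eth1. On other hosts the NIC may be named bond0, br0, and so on

[vxlan] #Enable the VXLAN overlay network, set the IP address of the physical interface that carries overlay traffic, and enable layer-2 population

enable_vxlan = true #Lets users create their own self-service (layer 3) networks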

local_ip = 192.168.66.11

l2_population = true

[securitygroup] #Enable security groups and configure the Linux bridge iptables firewall driver

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
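The backup-and-strip step uses `grep -Ev '^$|#'`, which drops every line that contains `#` anywhere, including a setting whose value happens to contain `#`. With the stock sample files the result is often the same, but a narrower pattern that removes only blank lines and lines whose first non-blank character is `#` is safer. A minimal sketch, where `strip_comments` is a hypothetical helper name:

```shell
# strip_comments FILE: print FILE without blank lines and full-line comments.
# Lines that merely contain a "#" somewhere in a value are kept.
strip_comments() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Demo on a temporary file to compare the two patterns.
sample=$(mktemp)
printf '# a comment\n\n[DEFAULT]\npassword = a#b\n' > "$sample"
grep -Ev '^$|#' "$sample"     # keeps only: [DEFAULT]
strip_comments "$sample"      # keeps: [DEFAULT] and password = a#b
rm -f "$sample"
```

The helper would be used in place of the grep command, for example `strip_comments file.ini.bak > file.ini`.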

Modify kernel parameters

echo 'net.bridge.bridge-nf-call-iptables=1' >> /etc/sysctl.conf

echo 'net.bridge.bridge-nf-call-ip6tables=1' >> /etc/sysctl.conf

modprobe br_netfilter #modprobe loads a module into the kernel or removes one from it; modprobe -r removes

sysctl -p

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1
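Because `echo ... >> /etc/sysctl.conf` appends unconditionally, running the steps a second time leaves duplicate lines in the file. One possible way to make the step repeatable is to append a line only when it is not already present. This is a sketch; `ensure_line` is a hypothetical helper, and the demo writes to a temporary file rather than /etc/sysctl.conf.

```shell
# ensure_line FILE LINE: append LINE to FILE unless an identical line exists.
ensure_line() {
    # -x matches the whole line, -F treats the text as a fixed string.
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Demo: calling the helper twice still leaves a single copy of the line.
conf=$(mktemp)
ensure_line "$conf" 'net.bridge.bridge-nf-call-iptables=1'
ensure_line "$conf" 'net.bridge.bridge-nf-call-iptables=1'
grep -c '' "$conf"    # prints: 1
rm -f "$conf"
```

On the node itself the first argument would be /etc/sysctl.conf, followed by `modprobe br_netfilter` and `sysctl -p` as above.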

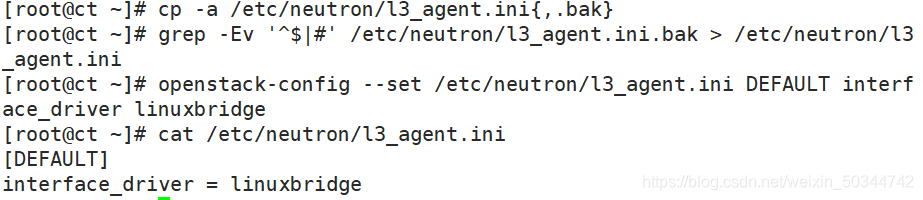

Configure the Linux bridge interface driver and the external network bridge

cp -a /etc/neutron/l3_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/l3_agent.ini.bak > /etc/neutron/l3_agent.ini

openstack-config --set /etc/neutron/l3_agent.ini DEFAULT interface_driver linuxbridge

cat /etc/neutron/l3_agent.ini

dhcp_agent configuration file and its contents

cp -a /etc/neutron/dhcp_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/dhcp_agent.ini.bak > /etc/neutron/dhcp_agent.ini

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT interface_driver linuxbridge

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT dhcp_driver neutron.agent.linux.dhcp.Dnsmasq

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT enable_isolated_metadata true

cat /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = linuxbridge #Default interface driver: Linux bridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq #DHCP driver

enable_isolated_metadata = true #Enable metadata for isolated networks

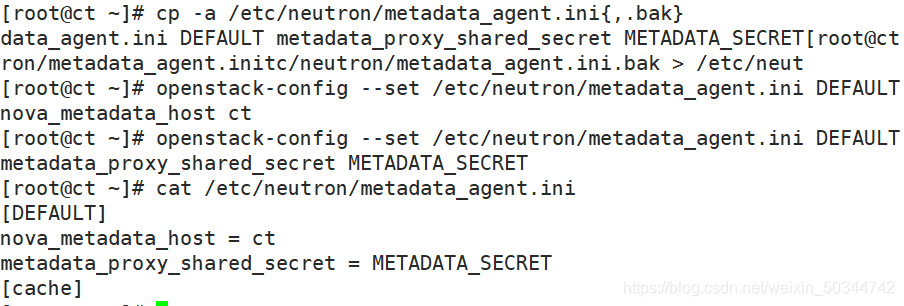

Configure the metadata agent (common to both bridged and self-service networks)

cp -a /etc/neutron/metadata_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/metadata_agent.ini.bak > /etc/neutron/metadata_agent.ini

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_host ct

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret METADATA_SECRET

cat /etc/neutron/metadata_agent.ini

nova configuration file, for interaction with neutron

openstack-config --set /etc/nova/nova.conf neutron url http://ct:9696

openstack-config --set /etc/nova/nova.conf neutron auth_url http://ct:5000

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_name default

openstack-config --set /etc/nova/nova.conf neutron user_domain_name default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password NEUTRON_PASS

openstack-config --set /etc/nova/nova.conf neutron service_metadata_proxy true

openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret METADATA_SECRET
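METADATA_SECRET is a placeholder, and the same value has to appear in both metadata_agent.ini and nova.conf. A random value can be generated once and reused for both files. The sketch below assumes only that /dev/urandom, head, od, and tr are available; `gen_secret` is a hypothetical helper name.

```shell
# gen_secret: print 16 random bytes as 32 lowercase hex characters.
gen_secret() {
    head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Generate the value once so both services receive exactly the same secret.
METADATA_SECRET=$(gen_secret)
echo "$METADATA_SECRET"

# The value would then replace the placeholder in both commands, for example:
#   openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret "$METADATA_SECRET"
#   openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret "$METADATA_SECRET"
```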

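Create the symbolic link for the ML2 plug-in file

The Networking service initialization scripts expect /etc/neutron/plugin.ini to be a symbolic link to the ML2 plug-in configuration file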

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Initialize the database

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Restart the Compute API service (nova-api, on the controller node)

systemctl restart openstack-nova-api.service

Start the neutron services and enable them at boot

systemctl enable neutron-server.service \
neutron-linuxbridge-agent.service neutron-dhcp-agent.service \
neutron-metadata-agent.service

systemctl start neutron-server.service \
neutron-linuxbridge-agent.service neutron-dhcp-agent.service \
neutron-metadata-agent.service

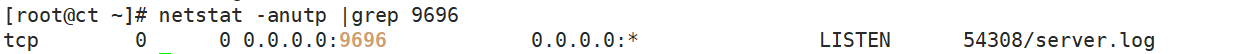

netstat -anutp |grep 9696

Because the layer 3 (L3) networking service was configured, the L3 agent must be started as well

systemctl enable neutron-l3-agent.service

systemctl restart neutron-l3-agent.service

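Compute nodes

The steps are identical on every compute node apart from the IP address

yum -y install openstack-neutron-linuxbridge ebtables ipset conntrack-tools

#ipset: an iptables extension that matches against sets of addresses instead of a single IP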

Edit the neutron.conf file

cp -a /etc/neutron/neutron.conf{,.bak}

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@ct

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken www_authenticate_uri http://ct:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://ct:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers ct:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp
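The long run of `openstack-config --set` lines for one section can be driven from a list of key=value pairs, which makes the values easier to review. This is a sketch under stated assumptions: `set_section` and the DRY_RUN variable are hypothetical names introduced here, and with DRY_RUN set the commands are only printed, not executed.

```shell
# set_section FILE SECTION key=value...: run one "openstack-config --set" per pair.
set_section() {
    file=$1
    section=$2
    shift 2
    for pair in "$@"; do
        # ${DRY_RUN:+echo} expands to "echo" only when DRY_RUN is non-empty,
        # so the command is printed instead of being executed.
        ${DRY_RUN:+echo} openstack-config --set "$file" "$section" "${pair%%=*}" "${pair#*=}"
    done
}

# Print the commands for part of the keystone_authtoken section.
DRY_RUN=1
set_section /etc/neutron/neutron.conf keystone_authtoken \
    www_authenticate_uri=http://ct:5000 \
    auth_url=http://ct:5000 \
    memcached_servers=ct:11211 \
    auth_type=password
```

Unsetting DRY_RUN would apply the values for real on a node where openstack-config is installed.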

View the result

cat /etc/neutron/neutron.conf

[DEFAULT] #The neutron server and its agents also communicate through RabbitMQ

transport_url = rabbit://openstack:RABBIT_PASS@ct

auth_strategy = keystone #Authentication strategy: keystone

[cors]

[database]

[keystone_authtoken] #Keystone authentication settings

www_authenticate_uri = http://ct:5000

auth_url = http://ct:5000

memcached_servers = ct:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

[oslo_concurrency] #Lock path (used to coordinate concurrent processes)

lock_path = /var/lib/neutron/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[privsep]

[ssl]

Configure the Linux bridge agent

cp -a /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:eth1

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan local_ip 192.168.66.12

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan l2_population true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

cat /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[linux_bridge]

physical_interface_mappings = provider:eth1

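# Binds the external network directly to the specified physical NIC on this node. The compute node does not define the network name itself; it only takes instructions from the controller node. The external network name configured on the controller applies to the whole OpenStack environment, so here it is simply bound to this node's eth1 physical NIC (which could also be bond0 or br0)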

[vxlan]

enable_vxlan = true #Enable VXLAN networks

local_ip = 192.168.66.12

l2_population = true #L2 Population is the component that improves the scalability of VXLAN networks

[securitygroup]

enable_security_group = true #Enable security groups

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver #Security group driver

Modify kernel parameters

echo 'net.bridge.bridge-nf-call-iptables=1' >> /etc/sysctl.conf #Bridged instance traffic is filtered by iptables on its way out through the physical host

echo 'net.bridge.bridge-nf-call-ip6tables=1' >> /etc/sysctl.conf

modprobe br_netfilter

sysctl -p

nova.conf configuration file

openstack-config --set /etc/nova/nova.conf neutron auth_url http://ct:5000

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_name default

openstack-config --set /etc/nova/nova.conf neutron user_domain_name default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password NEUTRON_PASS

cat /etc/nova/nova.conf #The modified fields are shown below

[neutron]

auth_url = http://ct:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

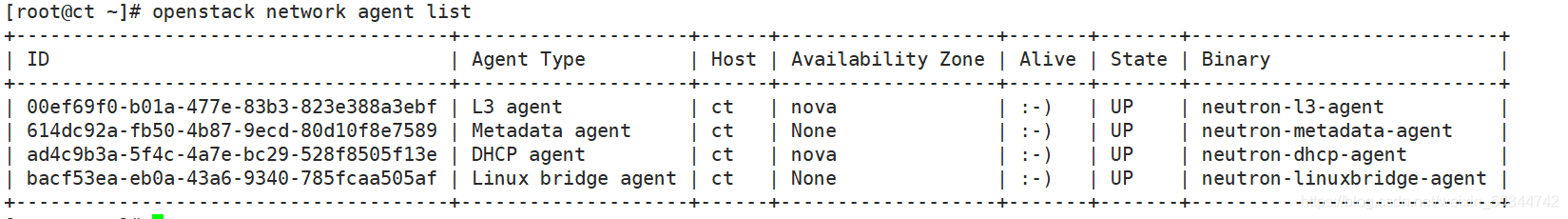

Verify

openstack extension list --network

openstack network agent list

Deploy Dashboard

The controller node ct already runs httpd, and the Dashboard also needs httpd, so here the Dashboard is installed on node c1 together with its own httpd

yum -y install openstack-dashboard httpd

local_settings: the Dashboard's local configuration file

vim /etc/openstack-dashboard/local_settings #Edit the Dashboard's local configuration file

import os #Import a Python module

from django.utils.translation import ugettext_lazy as _

from openstack_dashboard.settings import HORIZON_CONFIG

DEBUG = False #Debugging disabled

ALLOWED_HOSTS = ['*'] #Line 39. Only the host names and IP addresses listed here may be used to reach the dashboard; ['*'] allows any host

LOCAL_PATH = '/tmp'

SECRET_KEY='506910006d24703abeb2'

SESSION_ENGINE = 'django.contrib.sessions.backends.cache' #Session engine

CACHES = {

#Uncomment lines 95-100

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'ct:11211', #memcached address and port

}

}

#The settings above store session data in memcached; sessions can also be stored elsewhere

EMAIL_BACKEND = 'django.core.mail.backends.console.EmailBackend'

OPENSTACK_HOST = "ct" #Line 118

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True #Enable domain support in the dashboard

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 3,

}

#The block above sets the OpenStack API versions

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

OPENSTACK_NEUTRON_NETWORK = {

#Line 132

'enable_auto_allocated_network': False,

'enable_distributed_router': False,

'enable_fip_topology_check': False,

'enable_ha_router': False,

'enable_lb': False,

'enable_firewall': False,

'enable_vpn': False,

'enable_ipv6': True,

'enable_quotas': True,

'enable_rbac_policy': True,

'enable_router': True,

'default_dns_nameservers': [],

'supported_provider_types': ['*'],

'segmentation_id_range': {

},

'extra_provider_types': {

},

'supported_vnic_types': ['*'],

'physical_networks': [],

}

#The block above defines the network types in use; ['*'] means all types are allowed

TIME_ZONE = "Asia/Shanghai" #Change at line 157

Regenerate openstack-dashboard.conf and restart the Apache service

cd /usr/share/openstack-dashboard

python manage.py make_web_conf --apache > /etc/httpd/conf.d/openstack-dashboard.conf

systemctl enable httpd.service

systemctl restart httpd.service

Restart the memcached service on the ct node

systemctl restart memcached.service

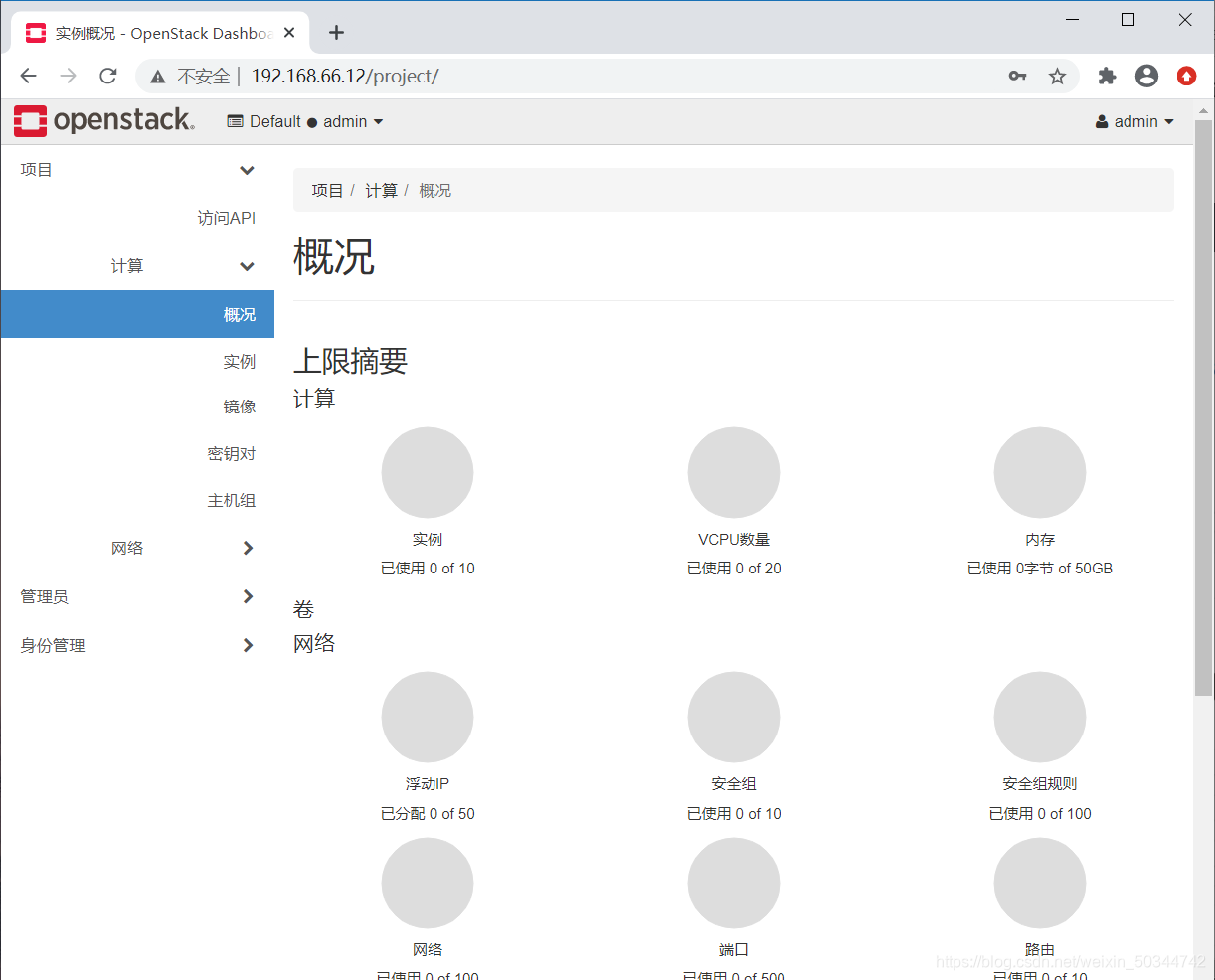

Open http://192.168.66.12 to reach the Dashboard login page.

On the login page, fill in: "Domain: default, User name: admin, Password: ADMIN_PASS" (as defined in ~/.bashrc)

Then log in

Deploy cinder

Controller node

Create the database instance and database user

mysql -uroot -p123123

MariaDB [(none)]> CREATE DATABASE cinder;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'CINDER_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'CINDER_DBPASS';

MariaDB [(none)]> flush privileges;

MariaDB [(none)]> exit

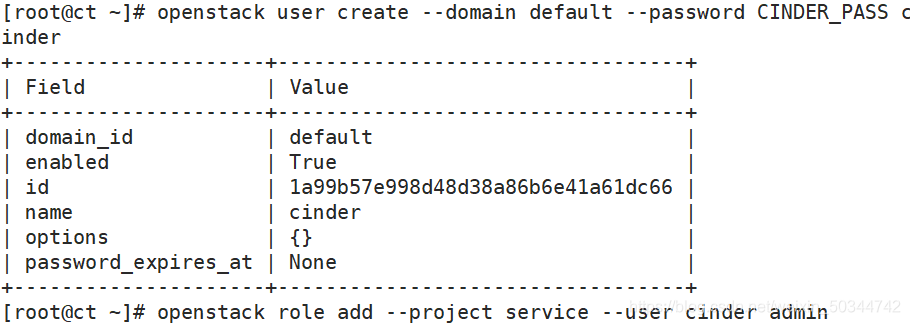

Create the user and edit the configuration files

Create the cinder user with password CINDER_PASS, add it to the service project, and grant it the admin role

openstack user create --domain default --password CINDER_PASS cinder

openstack role add --project service --user cinder admin

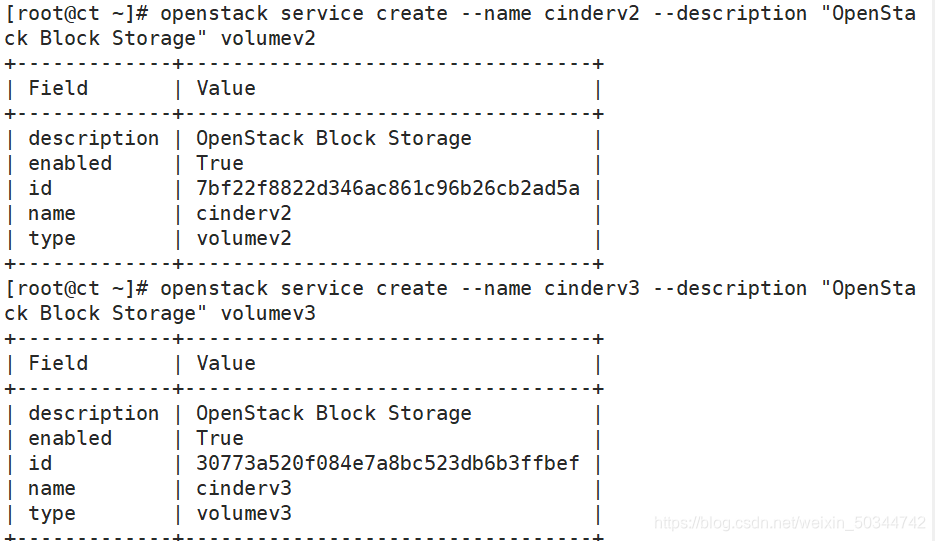

cinder exposes two coexisting API versions, v2 and v3, so a service entity is created for each

openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2

openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

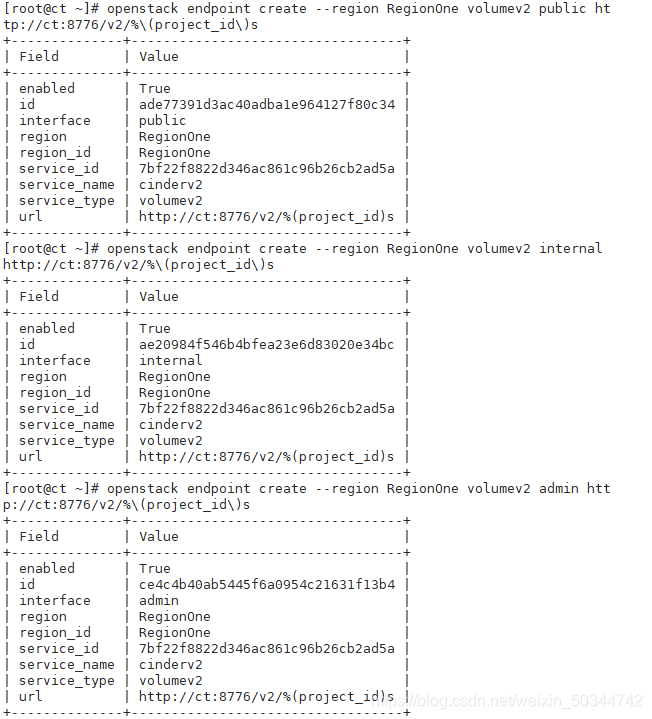

Create endpoints for the v2 and v3 APIs

#Create endpoints for the v2 API

openstack endpoint create --region RegionOne volumev2 public http://ct:8776/v2/%\(project_id\)s

openstack endpoint create --region RegionOne volumev2 internal http://ct:8776/v2/%\(project_id\)s

openstack endpoint create --region RegionOne volumev2 admin http://ct:8776/v2/%\(project_id\)s

# Create endpoints for the v3 API

openstack endpoint create --region RegionOne volumev3 public http://ct:8776/v3/%\(project_id\)s

openstack endpoint create --region RegionOne volumev3 internal http://ct:8776/v3/%\(project_id\)s

openstack endpoint create --region RegionOne volumev3 admin http://ct:8776/v3/%\(project_id\)s
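The six endpoint commands differ only in API version and interface, so they can be generated from two nested loops. The sketch below only prints the commands so they can be reviewed first; `endpoint_commands` is a hypothetical helper name, and the URL is single-quoted in the output instead of backslash-escaping the parentheses.

```shell
# endpoint_commands: print one "openstack endpoint create" line for each
# combination of API version (v2, v3) and interface (public, internal, admin).
endpoint_commands() {
    for version in v2 v3; do
        for interface in public internal admin; do
            printf "openstack endpoint create --region RegionOne volume%s %s '%s'\n" \
                "$version" "$interface" "http://ct:8776/$version/%(project_id)s"
        done
    done
}

endpoint_commands
```

After review, the output could be piped to `sh` in a shell where the admin credentials are already loaded.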

Install and configure the cinder service

yum -y install openstack-cinder

cp /etc/cinder/cinder.conf{,.bak}

grep -Ev '#|^$' /etc/cinder/cinder.conf.bak>/etc/cinder/cinder.conf

openstack-config --set /etc/cinder/cinder.conf database connection mysql+pymysql://cinder:CINDER_DBPASS@ct/cinder

openstack-config --set /etc/cinder/cinder.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@ct

openstack-config --set /etc/cinder/cinder.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken www_authenticate_uri http://ct:5000

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_url http://ct:5000

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken memcached_servers ct:11211

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_type password

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken project_name service

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken username cinder

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken password CINDER_PASS

openstack-config --set /etc/cinder/cinder.conf DEFAULT my_ip 192.168.66.11 #Set to the IP address of ct

openstack-config --set /etc/cinder/cinder.conf oslo_concurrency lock_path /var/lib/cinder/tmp

View the result

cat /etc/cinder/cinder.conf

[DEFAULT]

transport_url = rabbit://openstack:RABBIT_PASS@ct #RabbitMQ connection

auth_strategy = keystone #Authentication method

my_ip = 192.168.66.11 #Internal network IP

[backend]

[backend_defaults]

[barbican]

[brcd_fabric_example]

[cisco_fabric_example]

[coordination]

[cors]

[database] #Database connection

connection = mysql+pymysql://cinder:CINDER_DBPASS@ct/cinder

[fc-zone-manager]

[healthcheck]

[key_manager]

[keystone_authtoken] #Keystone authentication settings

www_authenticate_uri = http://ct:5000 #Keystone address

auth_url = http://ct:5000

memcached_servers = ct:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder #Authenticate to keystone with the cinder account (user name and password)

password = CINDER_PASS

[nova]

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp #Lock path

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[privsep]

[profiler]

[sample_castellan_source]

[sample_remote_file_source]

[service_user]

[ssl]

[vault]

Synchronize the cinder database (populate the Block Storage database), update the Nova configuration file, and restart the service

su -s /bin/sh -c "cinder-manage db sync" cinder

openstack-config --set /etc/nova/nova.conf cinder os_region_name RegionOne

systemctl restart openstack-nova-api.service

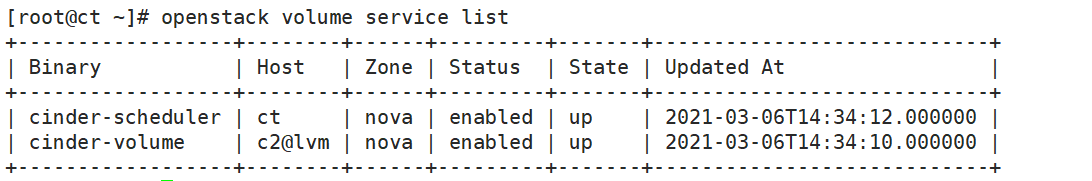

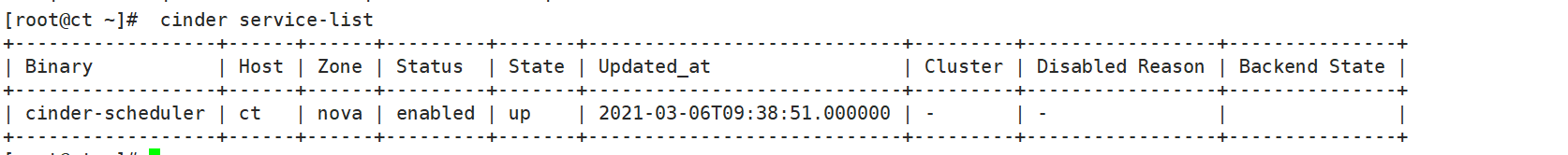

Enable and start the Cinder services, then verify on the controller node

systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

cinder service-list

Configure Cinder on compute node c2 (storage node)

Install and start the LVM service

yum -y install openstack-cinder targetcli python-keystone

yum -y install lvm2 device-mapper-persistent-data

systemctl enable lvm2-lvmetad.service

systemctl start lvm2-lvmetad.service

Create the LVM physical volume and volume group

Attach a new disk, sdc, and then reboot node c2

pvcreate /dev/sdc

vgcreate cinder-volumes /dev/sdc

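Edit the LVM configuration file (restrict it to the sdc disk) and restart the LVM service

vim /etc/lvm/lvm.conf

filter = [ "a/sdc/","r/.*/" ] #Line 141: uncomment and edit the filter rule

# a means accept, r means reject

# LVM is allowed to scan only sdc and no other disk, which indirectly means that volumes created for OpenStack instances live only on sdc

# Instances are limited to the logical volumes on sdc; without this setting, other services on the host might also access the data in those volumes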

systemctl restart lvm2-lvmetad.service
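The filter has to accept every device that LVM legitimately uses on the node: if the operating system disk of the storage node is itself on LVM, that device needs an accept entry too, otherwise only sdc is needed. The sketch below builds the filter line from a list of device names; `lvm_filter_line` is a hypothetical helper, and sda in the second call is only an assumed name for an OS disk.

```shell
# lvm_filter_line DEV...: print an lvm.conf filter that accepts each DEV
# and rejects every other device.
lvm_filter_line() {
    entries=""
    for dev in "$@"; do
        entries="$entries\"a/$dev/\", "
    done
    printf 'filter = [ %s"r/.*/" ]\n' "$entries"
}

lvm_filter_line sdc        # prints: filter = [ "a/sdc/", "r/.*/" ]
lvm_filter_line sda sdc    # prints: filter = [ "a/sda/", "a/sdc/", "r/.*/" ]
```

The printed line is what would go into the devices section of /etc/lvm/lvm.conf.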

Configure the cinder module

cp /etc/cinder/cinder.conf{,.bak}

grep -Ev '#|^$' /etc/cinder/cinder.conf.bak>/etc/cinder/cinder.conf

openstack-config --set /etc/cinder/cinder.conf database connection mysql+pymysql://cinder:CINDER_DBPASS@ct/cinder

openstack-config --set /etc/cinder/cinder.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@ct

openstack-config --set /etc/cinder/cinder.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/cinder/cinder.conf DEFAULT my_ip 192.168.66.13

openstack-config --set /etc/cinder/cinder.conf DEFAULT enabled_backends lvm

openstack-config --set /etc/cinder/cinder.conf DEFAULT glance_api_servers http://ct:9292

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken www_authenticate_uri http://ct:5000

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_url http://ct:5000

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken memcached_servers ct:11211

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_type password

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken project_name service

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken username cinder

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken password CINDER_PASS

openstack-config --set /etc/cinder/cinder.conf lvm volume_driver cinder.volume.drivers.lvm.LVMVolumeDriver

openstack-config --set /etc/cinder/cinder.conf lvm volume_group cinder-volumes

openstack-config --set /etc/cinder/cinder.conf lvm target_protocol iscsi

openstack-config --set /etc/cinder/cinder.conf lvm target_helper lioadm

openstack-config --set /etc/cinder/cinder.conf oslo_concurrency lock_path /var/lib/cinder/tmp

View the result

cat /etc/cinder/cinder.conf

[DEFAULT]

transport_url = rabbit://openstack:RABBIT_PASS@ct

auth_strategy = keystone

my_ip = 192.168.66.13

enabled_backends = lvm

glance_api_servers = http://ct:9292

[backend]

[backend_defaults]

[barbican]

[brcd_fabric_example]

[cisco_fabric_example]

[coordination]

[cors]

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@ct/cinder

[fc-zone-manager]

[healthcheck]

[key_manager]

[keystone_authtoken]

www_authenticate_uri = http://ct:5000

auth_url = http://ct:5000

memcached_servers = ct:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = CINDER_PASS

[nova]

[oslo_concurrency] #Lock path

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[privsep]

[profiler]

[sample_castellan_source]

[sample_remote_file_source]

[service_user]

[ssl]

[vault]

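[lvm] #LVM driver settings for the LVM back end

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver #LVM driver, used to create the logical volumes

volume_group = cinder-volumes #Volume group (VG)

target_protocol = iscsi #Volumes are exported over the iSCSI protocol to provide block storage

target_helper = lioadm #iSCSI management tool

#volume_backend_name=Openstack-lvm Optional: when several back ends of different types exist, this names the current one so that OpenStack can target a specific back end and tell them apart later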

Enable and start the cinder volume service

systemctl enable openstack-cinder-volume.service target.service

systemctl start openstack-cinder-volume.service target.service

Verify

List the volume services

openstack volume service list