K8s官方文档 https://kubernetes.io/zh/docs/home/

目录

0 环境准备

| IP | hostname | 角色 |

|---|---|---|

| 10.238.162.33 | k8s-master | master |

| 10.238.162.32 | k8s-node1 | node1 |

| 10.238.162.34 | k8s-node2 | node2 |

1-9步需要在每个节点上执行

1 修改服务器主机名

用命令修改 重启永久生效

hostnamectl set-hostname k8s-node12添加IP与主机名对应关系

vim /etc/hosts

10.238.162.33 k8s-master

10.238.162.32 k8s-node1

10.238.162.34 k8s-node23 关闭防火墙

systemctl stop firewalld

systemctl disable firewalld4 关闭seliunx

临时关闭

setenforce 0

修改配置文件关闭 永久关闭

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config 5 关闭swap

临时关闭

swapoff -a

修改配置文件 永久关闭

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

free 查看6 将桥接的IPV4流量传递到iptables 的链

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system7 安装docker

安装docker可以参考我之前的文章

8 添加阿里云YUM软件源

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[k8s]

name=k8s

enabled=1

gpgcheck=0

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

EOF9 安装kubeadm,kubelet和kubectl

yum install kubelet kubeadm kubectl -y

设置开机自启

systemctl enable kubelet 可以看到版本为19.3

10 部署 K8S (Master)

10.1 初始化kubeadm

kubeadm init --apiserver-advertise-address=10.238.162.33 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=1.19.3 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16初始化master 日志输出如下

W1019 20:15:08.000424 26380 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.19.3

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Hostname]: hostname "k8s-master" could not be reached

[WARNING Hostname]: hostname "k8s-master": lookup k8s-master on 10.210.12.10:53: no such host

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.238.162.33]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [10.238.162.33 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [10.238.162.33 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 22.008757 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.19" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: m3l2yo.2yhgic0b7075lqmo

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.238.162.33:6443 --token m3l2yo.2yhgic0b7075lqmo \

--discovery-token-ca-cert-hash sha256:540b281f0c4f217b048316aebeeb14645dc304e51c34950fbd0e0759376c24fd 初始化成功后 提示截图

# docker images

1.19.2的版本

1.19.3的版本

从初始化成功后的日志输出中复制命令直接执行 (master节点)

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

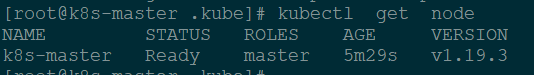

sudo chown $(id -u):$(id -g) $HOME/.kube/configkubectl get nodes (master) status为not ready

1.19.2版本

1.19.3版本

11 安装pod网络 插件(CNI)(master)

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml日志输出如下

Warning: rbac.authorization.k8s.io/v1beta1 ClusterRole is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRole

clusterrole.rbac.authorization.k8s.io/flannel created

Warning: rbac.authorization.k8s.io/v1beta1 ClusterRoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRoleBinding

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created查看部署是否成功

kubectl get pods -n kube-system

再次查看状态 已经为ready

12 Node节点加入集群(两个node节点)

kubeadm join 10.238.162.33:6443 --token m0gktc.qswu1kodm46ynf7r --discovery-token-ca-cert-hash sha256:1da9191e4d7c01bc42fe83ac58e52f7693977718a69c6ff6da5eab4455c17cb0

kubeadm join 10.238.162.33:6443 --token m3l2yo.2yhgic0b7075lqmo --discovery-token-ca-cert-hash sha256:540b281f0c4f217b048316aebeeb14645dc304e51c34950fbd0e0759376c24fd 日志输出

# kubeadm join 10.238.162.33:6443 --token m0gktc.qswu1kodm46ynf7r --discovery-token-ca-cert-hash sha256:1da9191e4d7c01bc42fe83ac58e52f7693977718a69c6ff6da5eab4455c17cb0

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Hostname]: hostname "k8s-node1" could not be reached

[WARNING Hostname]: hostname "k8s-node1": lookup k8s-node1 on 10.210.12.10:53: no such host

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.在master上运行验证 kubectl get nodes 发现node节点已经加入

常用命令

报错以解决:

错误1

unknown flag: --kubernetes-version v1.15.1

To see the stack trace of this error execute with --v=5 or higher

错误2 初始化命令格式错误 调整格式后

unknown command "\u00a0" for "kubeadm init"

错误3

node节点加入master节点报错 还是格式错误

报错4 node节点重新加入时报错

kubeadm join 10.238.162.33:6443 --token m3l2yo.2yhgic0b7075lqmo --discovery-token-ca-cert-hash sha256:540b281f0c4f217b048316aebeeb14645dc304e51c34950fbd0e0759376c24fd

报错

error execution phase kubelet-start: error uploading crisocket: timed out waiting for the condition

解决

# kubeadm reset

[root@k8s-node2 ~]# kubeadm reset

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

W1019 20:42:16.800042 29915 removeetcdmember.go:79] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] No etcd config found. Assuming external etcd

[reset] Please, manually reset etcd to prevent further issues

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

[reset] Deleting contents of stateful directories: [/var/lib/kubelet /var/lib/dockershim /var/run/kubernetes /var/lib/cni]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file.参考