services

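1. Kubernetes network communication

- Kubernetes plugs in network plugins through the CNI interface to implement networking. Popular plugins include flannel and calico.

CNI plugin configuration location: # cat /etc/cni/net.d/10-flannel.conflist

The plugins rely on the following approaches:

Virtual bridge: virtual NICs attached to a virtual bridge; multiple containers communicate through the shared virtual device.

Multiplexing: MacVLAN; multiple containers share one physical NIC to communicate.

Hardware switching: SR-IOV; one physical NIC is virtualized into multiple interfaces. This approach performs best.

Container-to-container: containers in the same pod communicate over the loopback interface (lo).

Pod-to-pod:

Pods on the same node forward packets through the cni bridge (visible with brctl show).

Pods on different nodes need the network plugin to communicate.

Pod-to-Service: implemented with iptables or IPVS. IPVS cannot fully replace iptables: IPVS only handles load balancing, so kube-proxy still relies on iptables for tasks such as packet filtering and SNAT/masquerading.

Pod-to-external network: iptables MASQUERADE.

Service to clients outside the cluster: Ingress, NodePort, LoadBalancer.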

2. services

2.1 简介

- Service可以看作是一组提供相同服务的Pod对外的访问接口。借助Service,应用可以方便地实现服务发现和负载均衡。

service默认只支持4层负载均衡能力,没有7层功能。(可以通过Ingress实现)

service的类型:(前三种是集群外部访问内部资源)

ClusterIP:默认值,k8s系统给service自动分配的虚拟IP,只能在集群内部访问。

NodePort:将Service通过指定的Node上的端口暴露给外部,访问任意一个NodeIP:nodePort都将路由到ClusterIP。

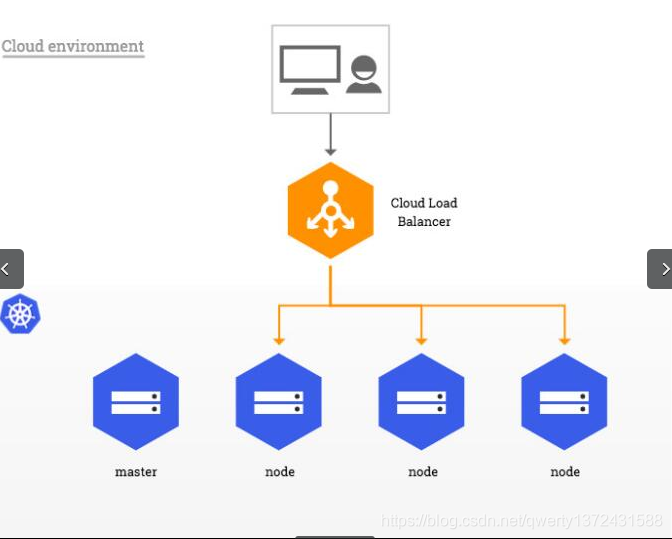

LoadBalancer:在 NodePort 的基础上,借助 cloud provider 创建一个外部的负载均衡器,并将请求转发到 <NodeIP>:NodePort,此模式只能在云服务器上使用。

ExternalName:将服务通过 DNS CNAME 记录方式转发到指定的域名(通过 spec.externlName 设定)。[集群内部访问外部,通过内部调用外部资源]
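To make the four types concrete, here is a minimal sketch that builds Service manifests as plain Python dicts. The helper name `build_service` and its parameters are hypothetical, chosen only for illustration; the field names (`type`, `selector`, `ports`, `externalName`) are the ones used in the manifests of this article.

```python
# Hypothetical helper: build a minimal Service manifest as a plain dict.
VALID_TYPES = {"ClusterIP", "NodePort", "LoadBalancer", "ExternalName"}


def build_service(name, svc_type="ClusterIP", selector=None,
                  port=80, target_port=80, external_name=None):
    if svc_type not in VALID_TYPES:
        raise ValueError(f"unknown Service type: {svc_type}")
    spec = {"type": svc_type}
    if svc_type == "ExternalName":
        # ExternalName has no selector or ports; it is only a DNS CNAME.
        if not external_name:
            raise ValueError("ExternalName services need spec.externalName")
        spec["externalName"] = external_name
    else:
        spec["selector"] = dict(selector or {})
        spec["ports"] = [
            {"protocol": "TCP", "port": port, "targetPort": target_port}
        ]
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": spec,
    }


print(build_service("myservice", "NodePort", {"app": "myapp"}))
print(build_service("exsvc", "ExternalName", external_name="www.baidu.com"))
```

The resulting dicts could be dumped to YAML and applied with kubectl; that step is left out to keep the sketch dependency-free.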

2.2 IPVS模式的service

- Service 是由 kube-proxy 组件,加上 iptables 来共同实现的.

kube-proxy 通过 iptables 处理 Service 的过程,需要在宿主机上设置相当多的 iptables 规则,如果宿主机有大量的Pod,不断刷新iptables规则,会消耗大量的CPU资源。

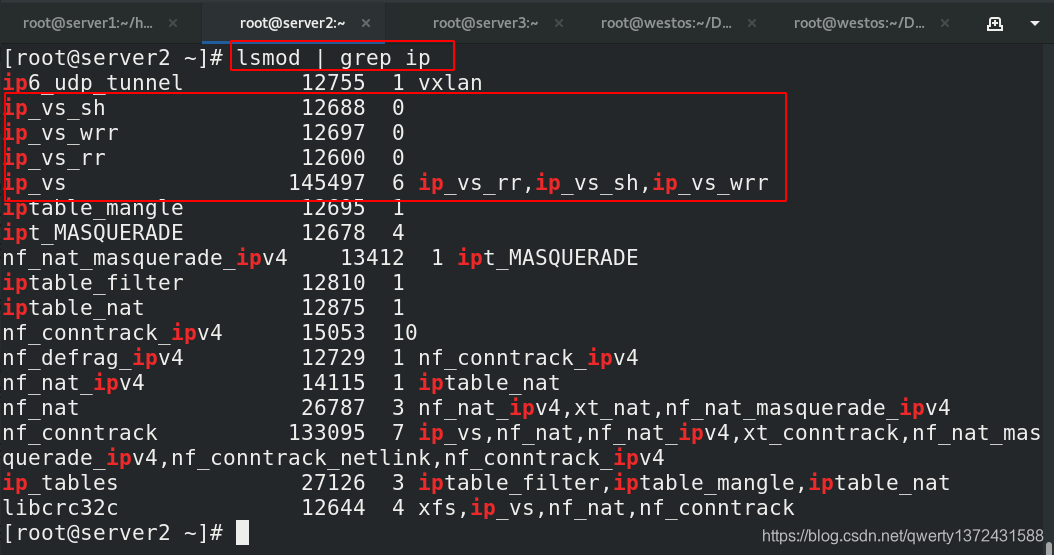

IPVS模式的service,可以使K8s集群支持更多量级的Pod。
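As a rough mental model, an IPVS virtual server maps one Service address to a list of real servers and picks one per connection with a scheduler. The sketch below models only round-robin scheduling (the ip_vs_rr module that appears in the lsmod output of this section). The class name `VirtualServer` and the backend pod addresses are hypothetical.

```python
from itertools import cycle


class VirtualServer:
    """Toy model of one IPVS virtual server with round-robin scheduling."""

    def __init__(self, vip, port, backends):
        self.vip = vip
        self.port = port
        self.backends = list(backends)
        self._rr = cycle(self.backends)  # endless round-robin iterator

    def pick(self):
        """Return the backend that would receive the next connection."""
        return next(self._rr)


# Hypothetical pod endpoints behind the Service address.
vs = VirtualServer(
    "10.100.154.215", 80,
    ["10.244.1.10:80", "10.244.2.11:80", "10.244.2.12:80"],
)
picks = [vs.pick() for _ in range(6)]
print(picks)
```

Six consecutive picks walk through the three backends twice, which is the pattern to look for when repeatedly calling the Service address with curl.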

2.2.1 Checking IPVS before IPVS mode is enabled

[root@server2 ~]# lsmod | grep ip ## the ip_vs modules are loaded but IPVS is not in use yet; iptables is still used

ip6_udp_tunnel 12755 1 vxlan

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145497 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

2.2.2 部署ipvs模式

[root@server2 ~]# yum install -y ipvsadm ##安装ipvsadm,每个节点都需要安装。每个节点操作一样

[root@server3 ~]# yum install -y ipvsadm

[root@server4 ~]# yum install -y ipvsadm

[root@server2 ~]# kubectl get pod -n kube-system | grep kube-proxy ##部署之前查看一下

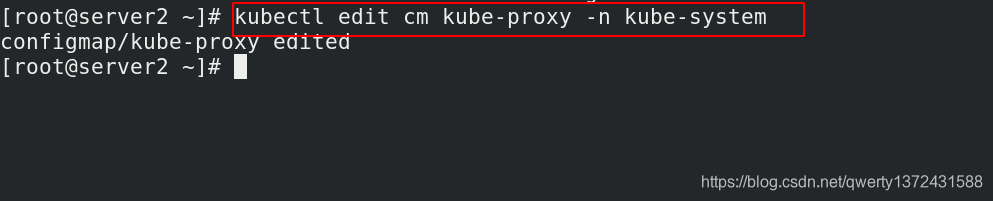

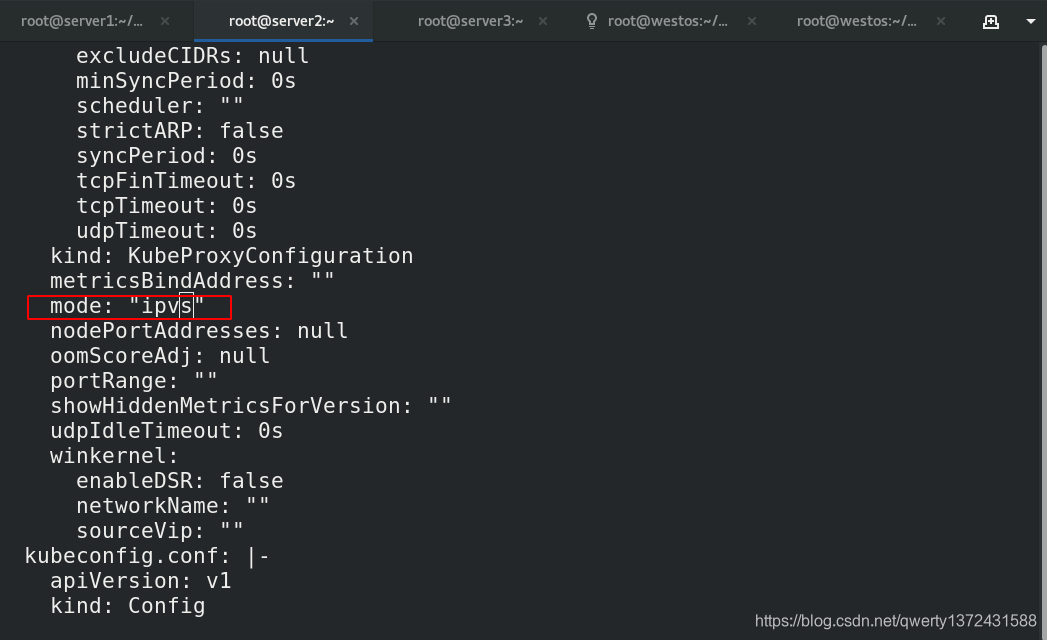

[root@server2 ~]# kubectl edit cm kube-proxy -n kube-system ##进入修改mode为ipvs

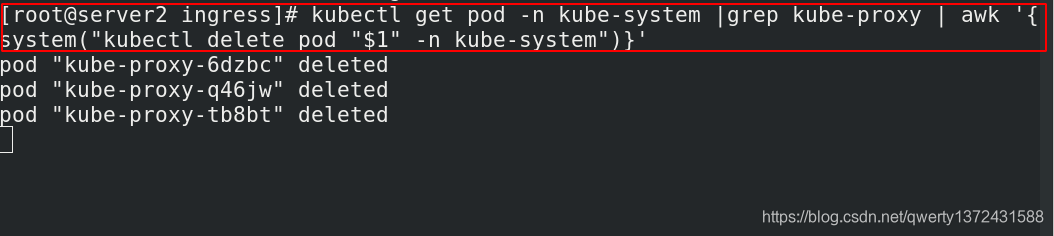

[root@server2 ~]# kubectl get pod -n kube-system |grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}' ##更新kube-proxy pod

[root@server2 ~]# kubectl get pod -n kube-system | grep kube-proxy ##部署之后查看是否发生变化

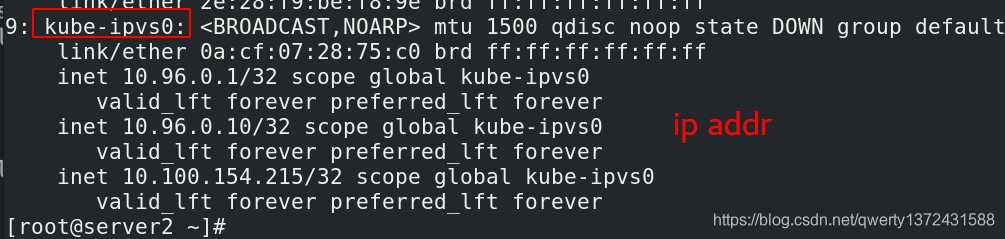

#IPVS模式下,kube-proxy会在service创建后,在宿主机上添加一个虚拟网卡:kube-ipvs0,并分配service IP。

[root@server2 ~]# ip addr ##查看ip

2.2.3 测试(观察是否是动态负载均衡变化)

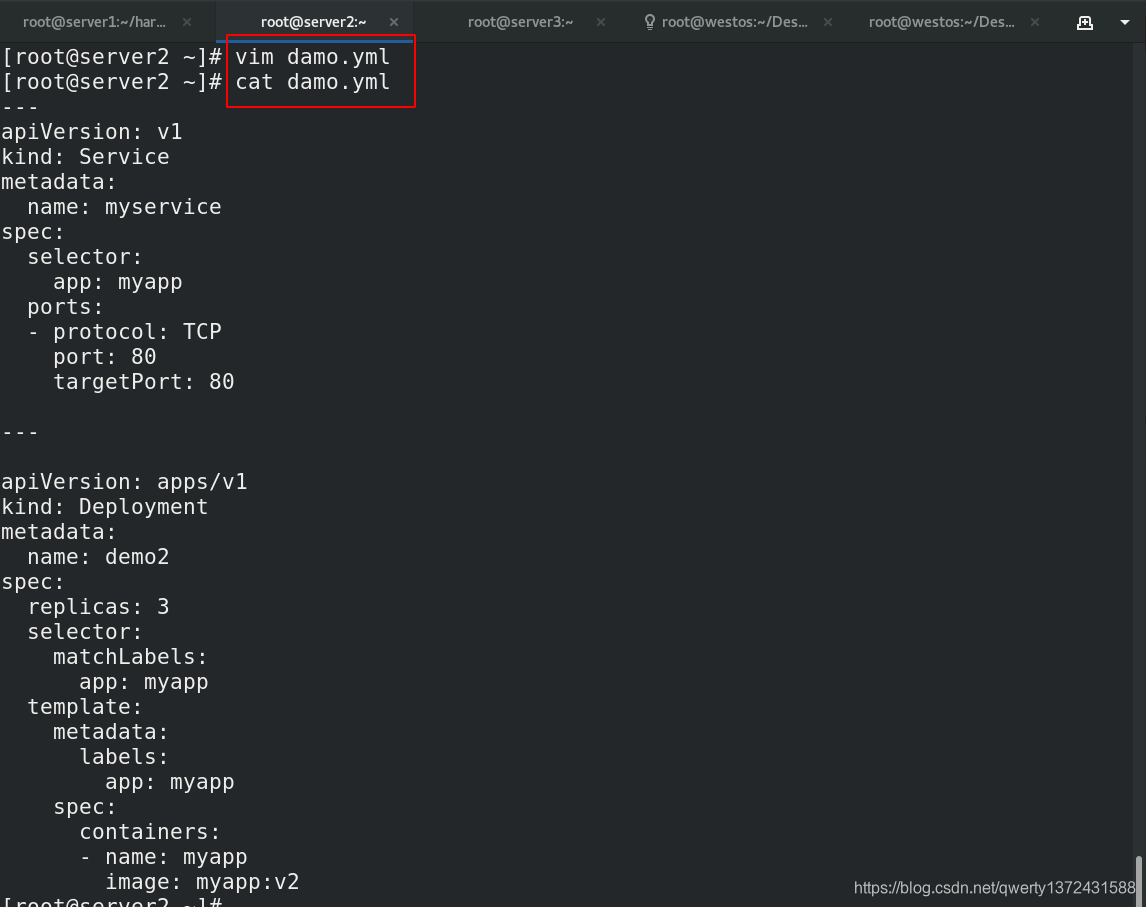

[root@server2 ~]# vim damo.yml ##编辑测试文件

[root@server2 ~]# cat damo.yml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: myapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo2
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
        - name: myapp
          image: myapp:v2

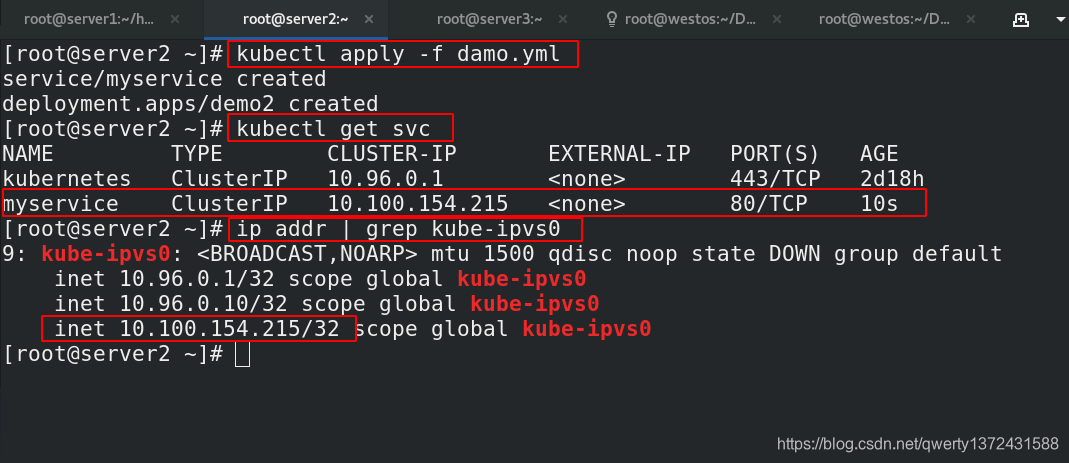

[root@server2 ~]# kubectl apply -f damo.yml ## create the Service and the pods

service/myservice created

deployment.apps/demo2 created

[root@server2 ~]# kubectl get svc ## list the Services

NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1        <none>        443/TCP   2d18h
myservice    ClusterIP   10.100.154.215   <none>        80/TCP    10s

[root@server2 ~]# ip addr | grep kube-ipvs0 ## check the addresses bound to kube-ipvs0

9: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default

inet 10.96.0.1/32 scope global kube-ipvs0

inet 10.96.0.10/32 scope global kube-ipvs0

inet 10.100.154.215/32 scope global kube-ipvs0

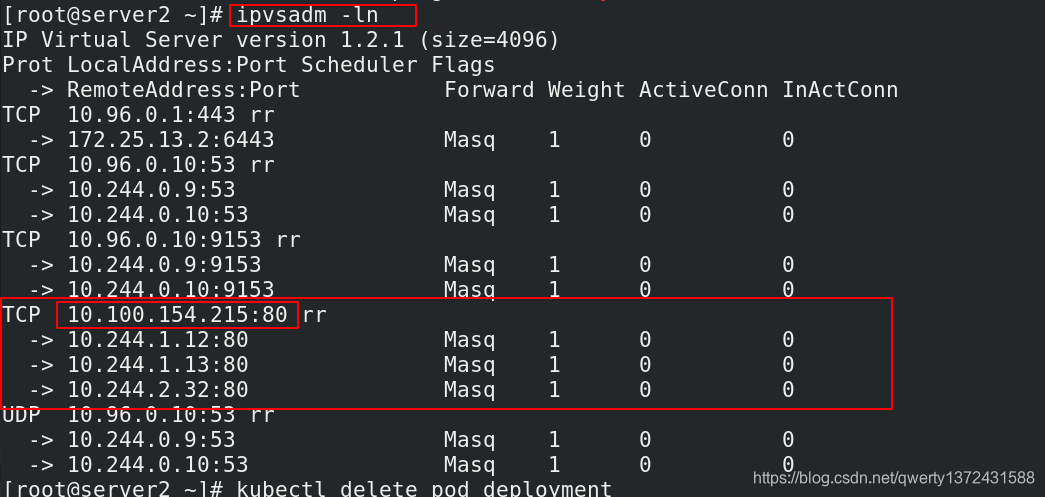

[root@server2 ~]# ipvsadm -ln ## view the load-balancing rules

[root@server2 ~]# vim damo.yml ## change replicas to 6

[root@server2 ~]# cat damo.yml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: myapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo2
spec:
  replicas: 6
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
        - name: myapp
          image: myapp:v2

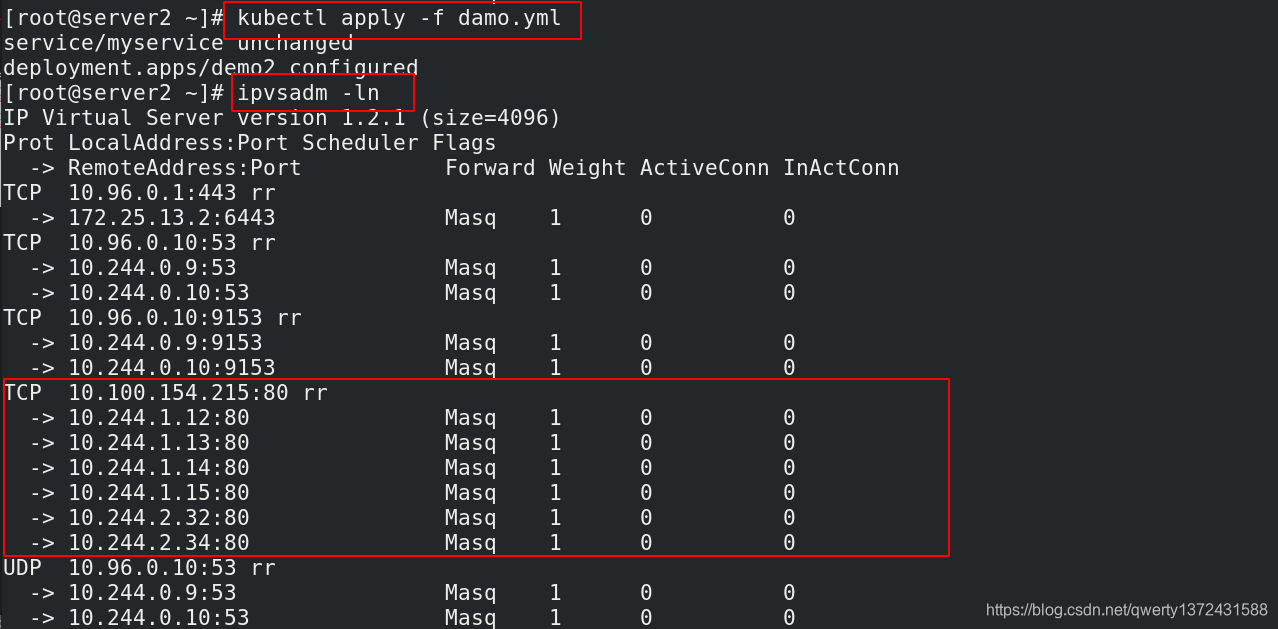

[root@server2 ~]# kubectl apply -f damo.yml ##更新pod

service/myservice unchanged

deployment.apps/demo2 configured

[root@server2 ~]# ipvsadm -ln ##再次查看负载均衡

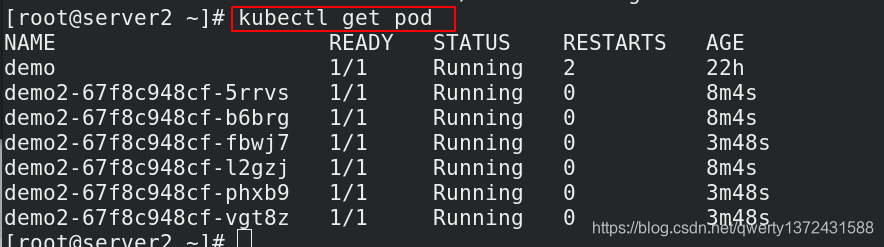

[root@server2 ~]# kubectl get pod ##查看对应pod信息

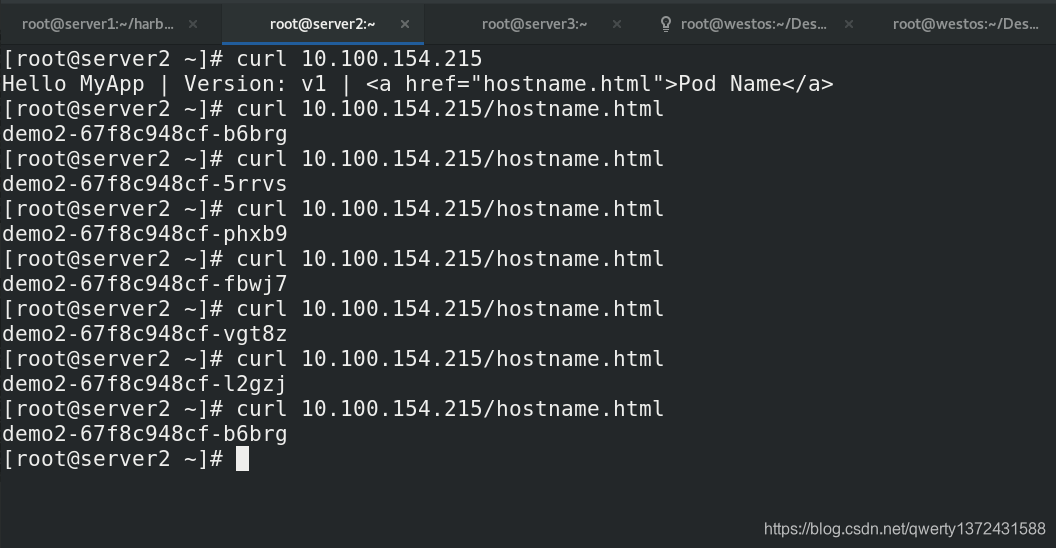

[root@server2 ~]# curl 10.100.154.215 ##查看是否负载均衡

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@server2 ~]# curl 10.100.154.215/hostname.html

2.3 k8s提供的dns服务插件

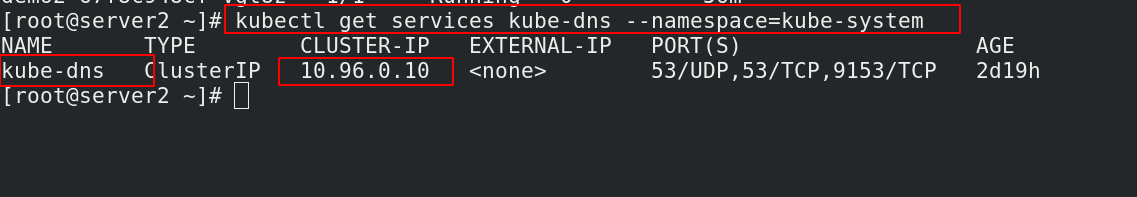

[root@server2 ~]# kubectl get services kube-dns --namespace=kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 2d19h

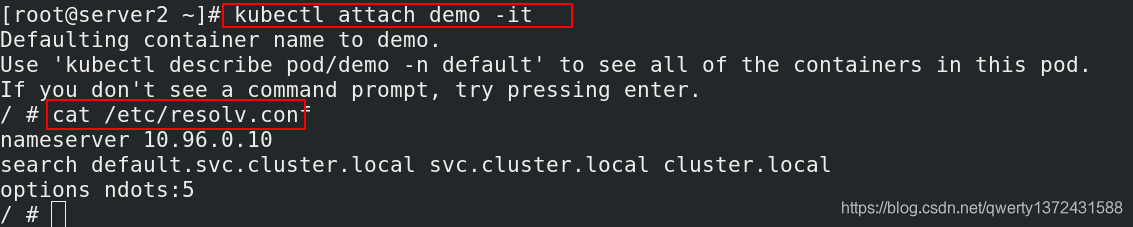

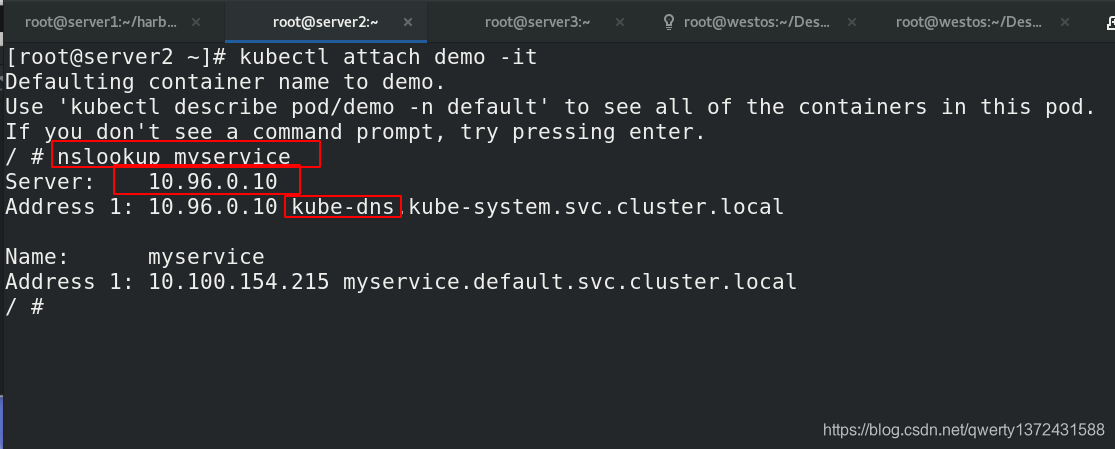

[root@server2 ~]# kubectl attach demo -it ##如果有demo就直接进

[root@server2 ~]# kubectl run demo --image=busyboxplus -it ##没有demo创建demo

[root@server2 ~]# yum install bind-utils -y ##安装dig工具

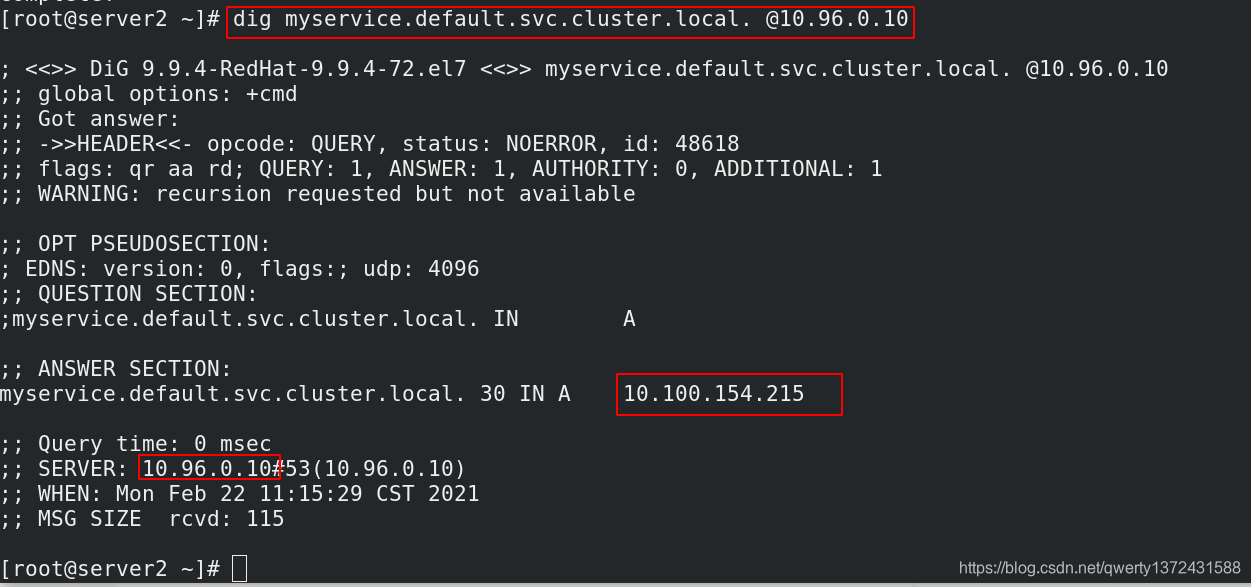

[root@server2 ~]# dig myservice.default.svc.cluster.local. @10.96.0.10 ##通过dig进行测试

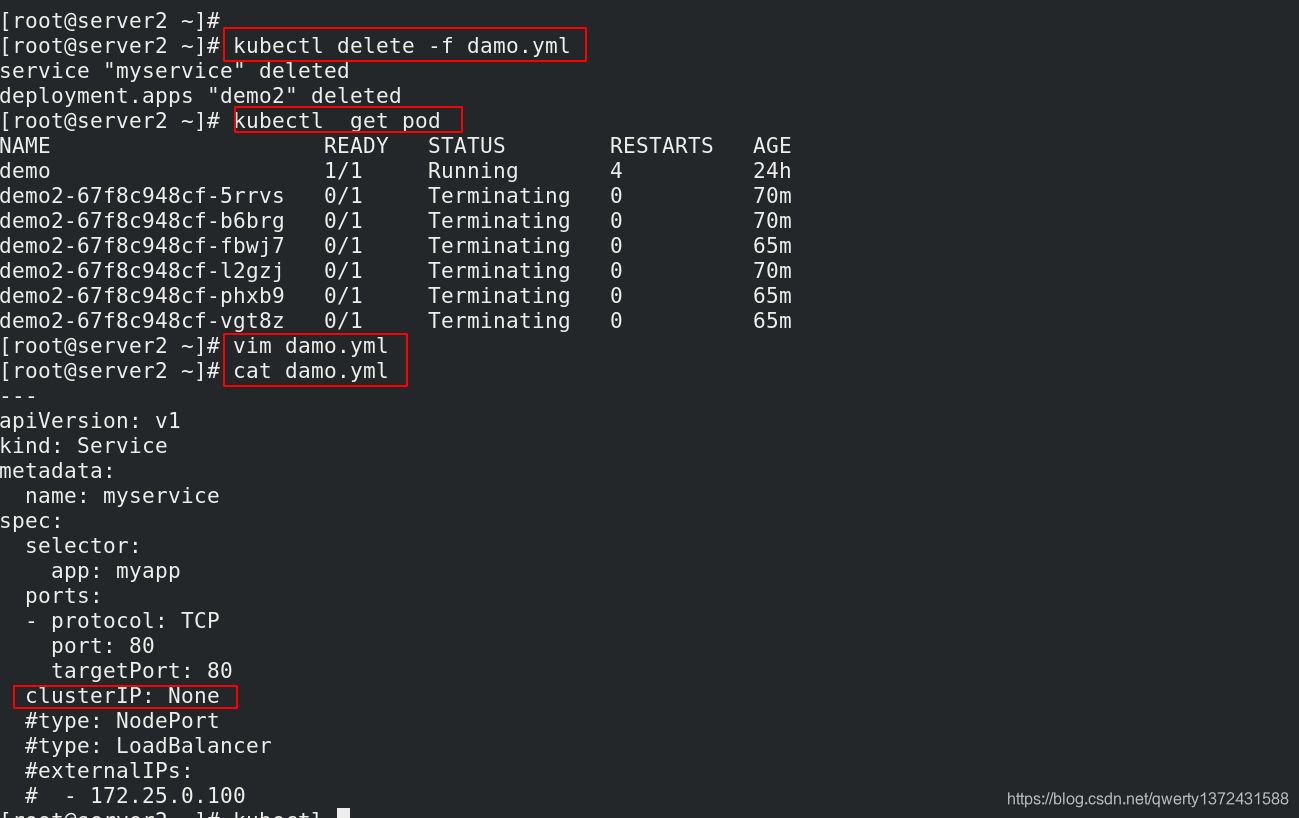

2.4 Headless Service

- A headless Service is not assigned a VIP. Instead, its DNS record resolves directly to the IP addresses of the pods it fronts.

Domain name format: $(servicename).$(namespace).svc.cluster.local
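The name format above can be sketched in a few lines. `service_fqdn` and `resolve_headless` are hypothetical helpers, and the record table is made-up data; the point is only that a headless Service name maps straight to pod IPs instead of a single cluster IP. The default cluster domain `cluster.local` is taken from the dig commands in this article.

```python
def service_fqdn(service, namespace="default", cluster_domain="cluster.local"):
    """Build the in-cluster DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolve_headless(records, fqdn):
    """Toy lookup: a headless Service name returns the pod IPs directly."""
    return sorted(records.get(fqdn, []))


# Hypothetical A records such as a cluster DNS server might hold.
records = {
    service_fqdn("myservice"): ["10.244.2.40", "10.244.1.30"],
}

print(service_fqdn("myservice"))
print(resolve_headless(records, service_fqdn("myservice")))
```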

[root@server2 ~]# kubectl delete -f damo.yml ## clean up the environment

[root@server2 ~]# vim damo.yml

[root@server2 ~]# cat damo.yml ## headless Service example

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: myapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  clusterIP: None ## headless Service
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo2
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
        - name: myapp
          image: myapp:v2

[root@server2 ~]# kubectl apply -f damo.yml ## apply

service/myservice created

[root@server2 ~]# kubectl get svc ## no IP is assigned in the CLUSTER-IP column

NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   2d19h
myservice    ClusterIP   None         <none>        80/TCP    24s

[root@server2 ~]# ip addr | grep kube-ipvs0 ## no address was added to kube-ipvs0 for this Service

9: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default

inet 10.96.0.1/32 scope global kube-ipvs0

inet 10.96.0.10/32 scope global kube-ipvs0

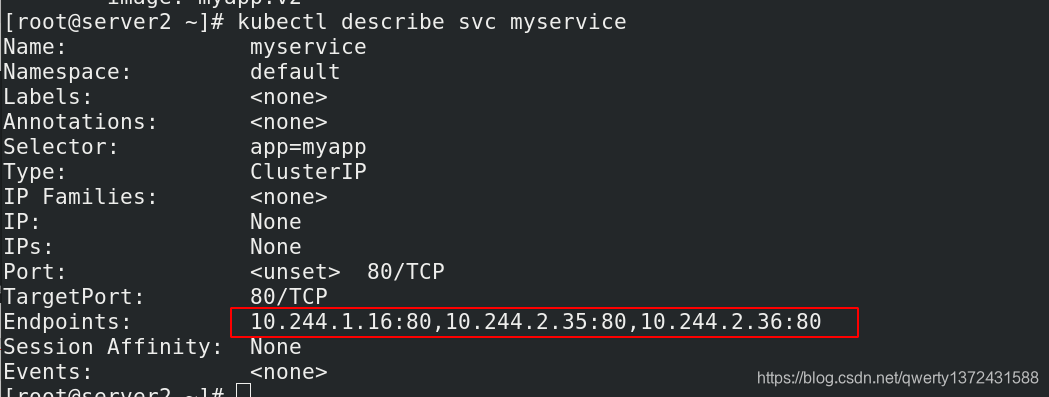

[root@server2 ~]# kubectl describe svc myservice ##有对应的Endpoints:

2.5 从外部访问service的三种方式

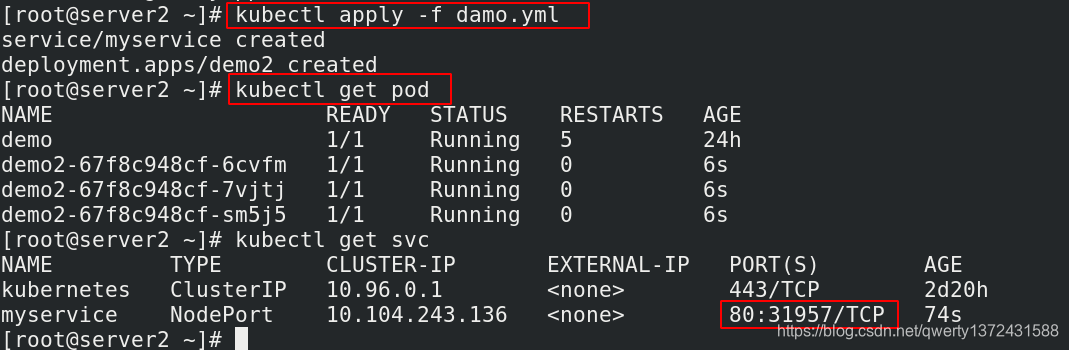

2.5.1 NodePort方式

[root@server2 ~]# kubectl delete -f damo.yml ##删除

[root@server2 ~]# cat damo.yml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: myapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  #clusterIP: None
  type: NodePort ## changed to NodePort
  #type: LoadBalancer
  #externalIPs:
  #  - 172.25.0.100
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo2
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
        - name: myapp
          image: myapp:v2

[root@server2 ~]# kubectl apply -f damo.yml ##创建pod

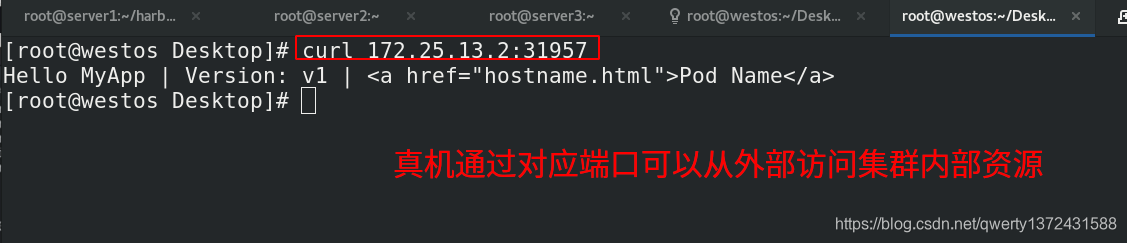

[root@server2 ~]# kubectl get svc ##查看外部访问需要的端口是31957

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d20h

myservice NodePort 10.104.243.136 <none> 80:31957/TCP 74s
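The PORT(S) column packs the Service port and the allocated node port into one string. As a small illustration, the sketch below parses that column and checks the node port against 30000-32767, the default NodePort range in Kubernetes (clusters can be configured differently). `parse_ports` and `in_default_nodeport_range` are hypothetical helper names, not part of kubectl.

```python
# Hypothetical helpers for reading the PORT(S) column of `kubectl get svc`.
DEFAULT_NODEPORT_RANGE = (30000, 32767)  # Kubernetes default; may be overridden


def parse_ports(column):
    """Parse e.g. '80:31957/TCP' or '53/UDP,53/TCP' into a list of dicts."""
    entries = []
    for item in column.split(","):
        ports, protocol = item.strip().split("/")
        port, _, node_port = ports.partition(":")
        entries.append({
            "port": int(port),
            "nodePort": int(node_port) if node_port else None,
            "protocol": protocol,
        })
    return entries


def in_default_nodeport_range(node_port):
    low, high = DEFAULT_NODEPORT_RANGE
    return low <= node_port <= high


entry = parse_ports("80:31957/TCP")[0]
print(entry, in_default_nodeport_range(entry["nodePort"]))
```

With the values above, a client outside the cluster would reach the Service at any node address on port 31957.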

2.5.2 LoadBalancer

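2.5.2.1 Without MetalLB

- The second way to access a Service from outside is meant for Kubernetes running on a public cloud. In that case you can declare a Service of type LoadBalancer.

- After the Service is submitted, Kubernetes calls the CloudProvider to create a load balancer on the public cloud and configures the IP addresses of the proxied pods as its backends.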

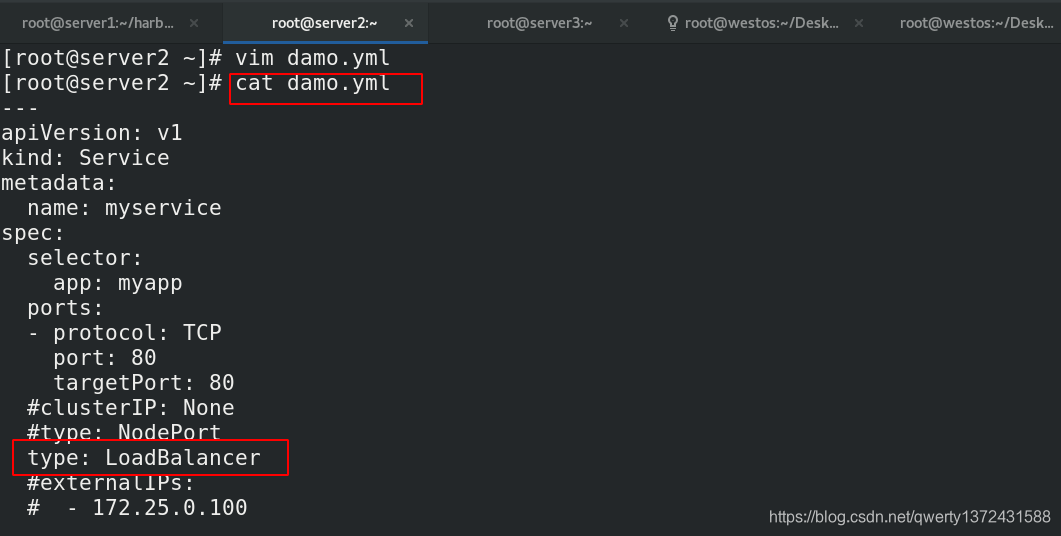

[root@server2 ~]# cat damo.yml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: myapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  #clusterIP: None
  #type: NodePort
  type: LoadBalancer
  #externalIPs:
  #  - 172.25.0.100
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo2
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
        - name: myapp
          image: myapp:v2

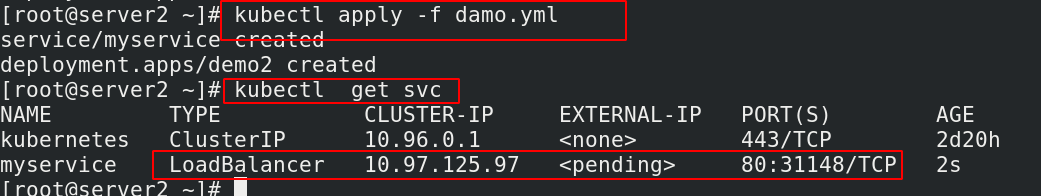

[root@server2 ~]# kubectl apply -f damo.yml ## apply the manifest

service/myservice configured

deployment.apps/demo2 unchanged

[root@server2 ~]# kubectl get svc ## list the Services; EXTERNAL-IP stays <pending> because nothing in this cluster provides load balancers yet

NAME         TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP      10.96.0.1      <none>        443/TCP        2d20h
myservice    LoadBalancer   10.97.125.97   <pending>     80:31148/TCP   2s


2.5.2.2 通过Metallb模拟云端环境

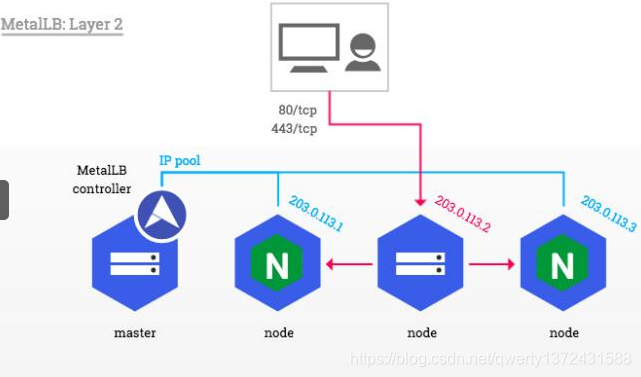

- 针对layer2模式进行分析

metallb会根据配置的地址池为LoadBalancer类型的service分配external-ip(vip)

一旦MetalLB为service分配了一个外部IP地址,它需要使集群之外的网络知道该VIP“存在”在集群中。MetalLB使用标准路由协议来实现这一点:ARP、NDP或BGP。

在layer2模式下,MetalLB的某一个speaker(这个speaker由metallb controller选择)会响应对service VIP的ARP请求或IPv6的NDP请求,因此从局域网层面来看,speaker所在的机器是有多个IP地址的,其中包含VIP。
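The address assignment step can be pictured with a toy allocator. This is only a sketch under stated assumptions: `AddressPool` is a hypothetical class, it hands out addresses in ascending order, and real MetalLB allocation order is not guaranteed to match. The range is the one configured later in this walkthrough.

```python
import ipaddress


def pool_addresses(first, last):
    """Yield every IPv4 address from first to last, inclusive."""
    start = int(ipaddress.IPv4Address(first))
    end = int(ipaddress.IPv4Address(last))
    for value in range(start, end + 1):
        yield str(ipaddress.IPv4Address(value))


class AddressPool:
    """Toy allocator: one external IP per LoadBalancer Service."""

    def __init__(self, first, last):
        self._free = list(pool_addresses(first, last))
        self._assigned = {}

    def allocate(self, service):
        # A Service that already holds an address keeps it.
        if service in self._assigned:
            return self._assigned[service]
        if not self._free:
            raise RuntimeError("address pool exhausted")
        ip = self._free.pop(0)
        self._assigned[service] = ip
        return ip


pool = AddressPool("172.25.13.100", "172.25.13.200")
print(pool.allocate("nginx-svc"), pool.allocate("demo-svc"))
```

When the pool runs out, the toy raises an error; in a real cluster a Service without an assigned address would simply keep showing a pending external IP.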

## 1. metallb配置

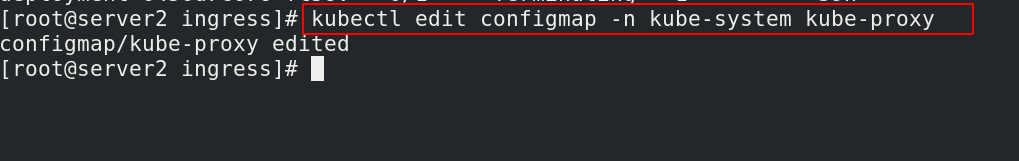

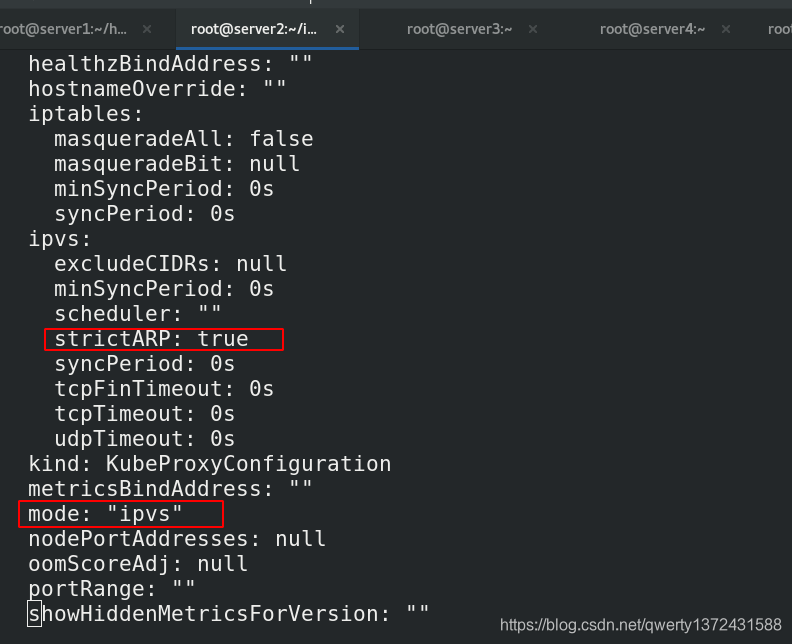

[root@server2 ingress]# kubectl edit configmap -n kube-system kube-proxy ##修改配置文件

[root@server2 ~]# kubectl get pod -n kube-system |grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}' //更新kube-proxy pod

[root@server2 ~]# mkdir metallb ##建立文件夹方便实验

[root@server2 ~]# cd metallb/

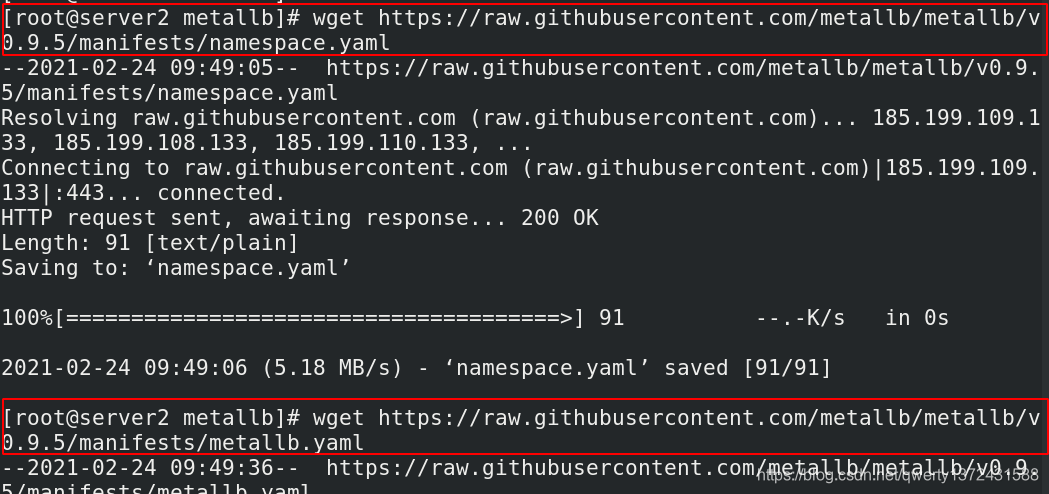

[root@server2 metallb]# wget https://raw.githubusercontent.com/metallb/metallb/v0.9.5/manifests/namespace.yaml

[root@server2 metallb]# wget https://raw.githubusercontent.com/metallb/metallb/v0.9.5/manifests/metallb.yaml ## download the two MetalLB manifests

[root@server2 metallb]# ls

metallb.yaml namespace.yaml

##拉取镜像并上传镜像到自己的私有仓库(在自己的仓库主机上执行)

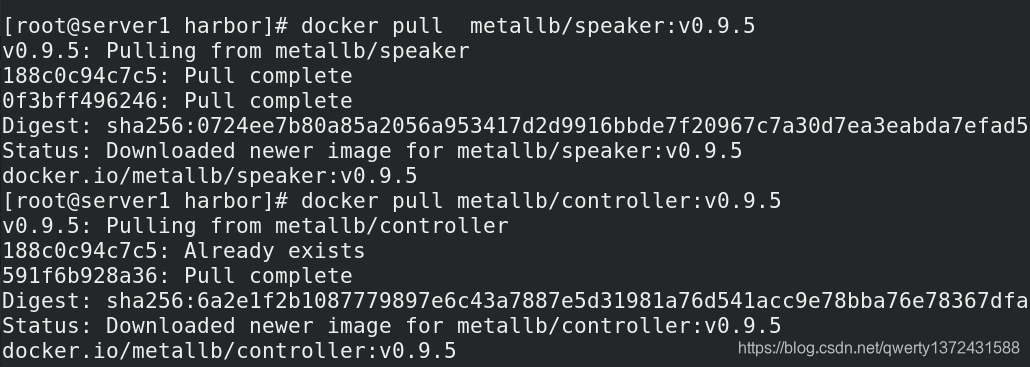

[root@server1 harbor]# docker pull metallb/speaker:v0.9.5 ##通过查看metallb.yml配置文件,拉取相应的镜像

[root@server1 harbor]# docker pull metallb/controller:v0.9.5

##创建yml文件内容

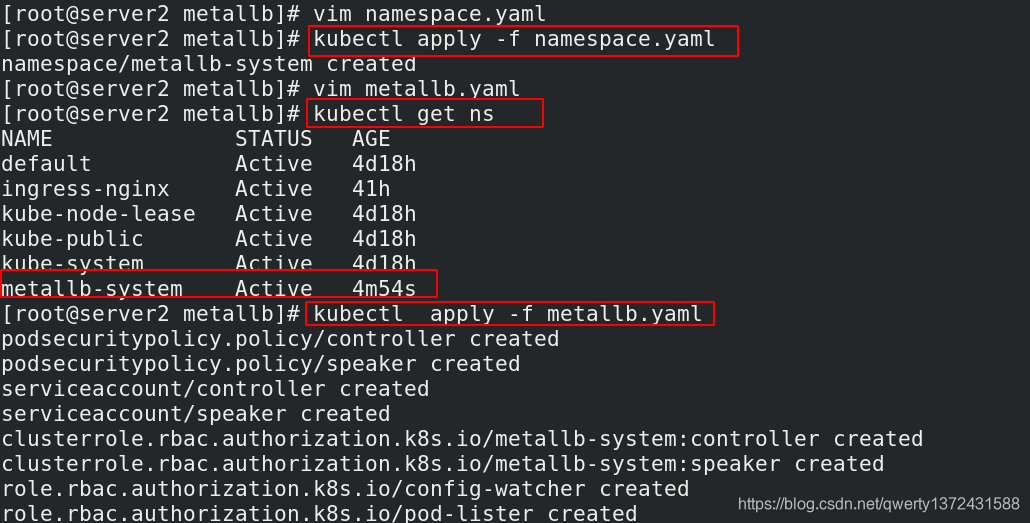

[root@server2 metallb]# kubectl apply -f namespace.yaml ##创建namespace

[root@server2 metallb]# vim metallb.yaml

[root@server2 metallb]# kubectl get ns ## check that the namespace was created

NAME              STATUS   AGE
default           Active   4d18h
ingress-nginx     Active   41h
kube-node-lease   Active   4d18h
kube-public       Active   4d18h
kube-system       Active   4d18h
metallb-system    Active   4m54s

[root@server2 metallb]# kubectl apply -f metallb.yaml ## deploy MetalLB

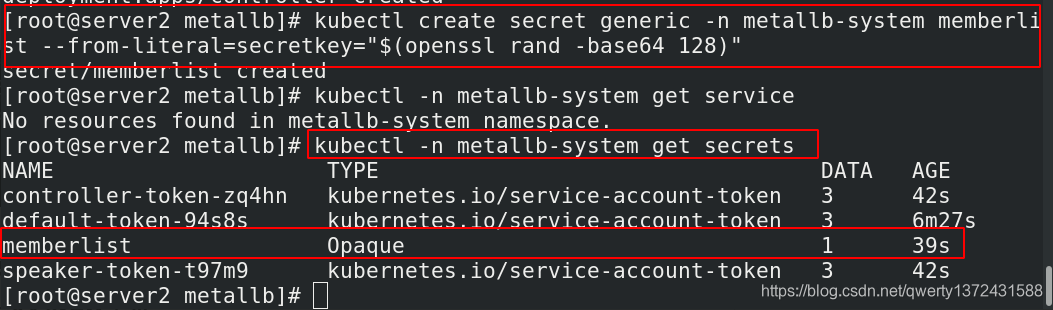

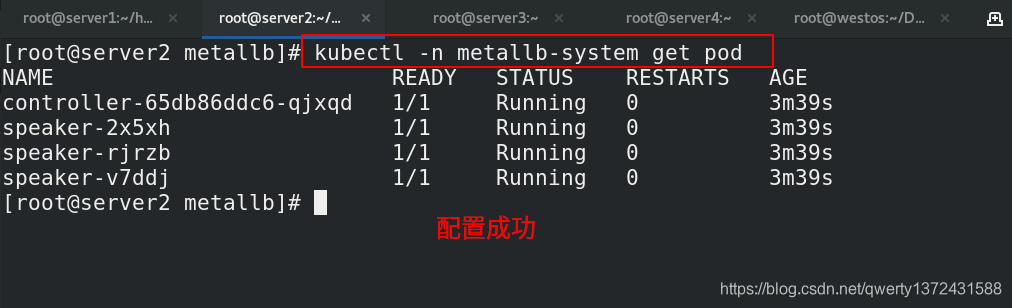

[root@server2 metallb]# kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)" ## create the memberlist secret (needed only on first install; do not repeat afterwards)

[root@server2 metallb]# kubectl -n metallb-system get secrets ## list the secrets

[root@server2 metallb]# kubectl -n metallb-system get pod ## all pods Running means the deployment succeeded


## 2. Testing

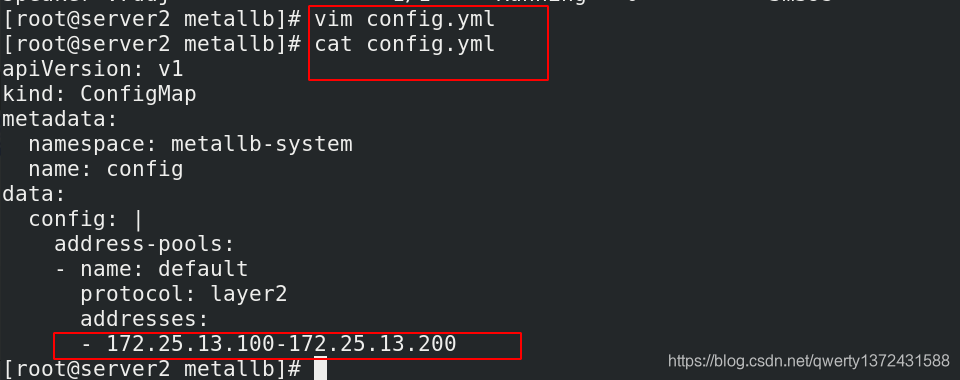

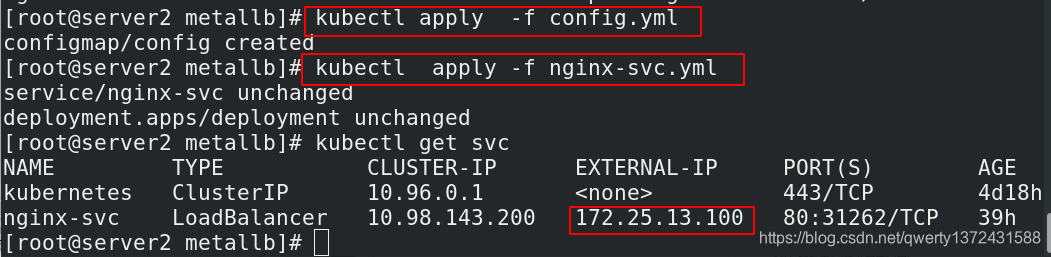

[root@server2 metallb]# vim config.yml

[root@server2 metallb]# cat config.yml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 172.25.13.100-172.25.13.200 ## test range: .100 to .200

[root@server2 metallb]# kubectl apply -f config.yml ## apply the config; addresses are then assigned from this pool

configmap/config created

## 测试1

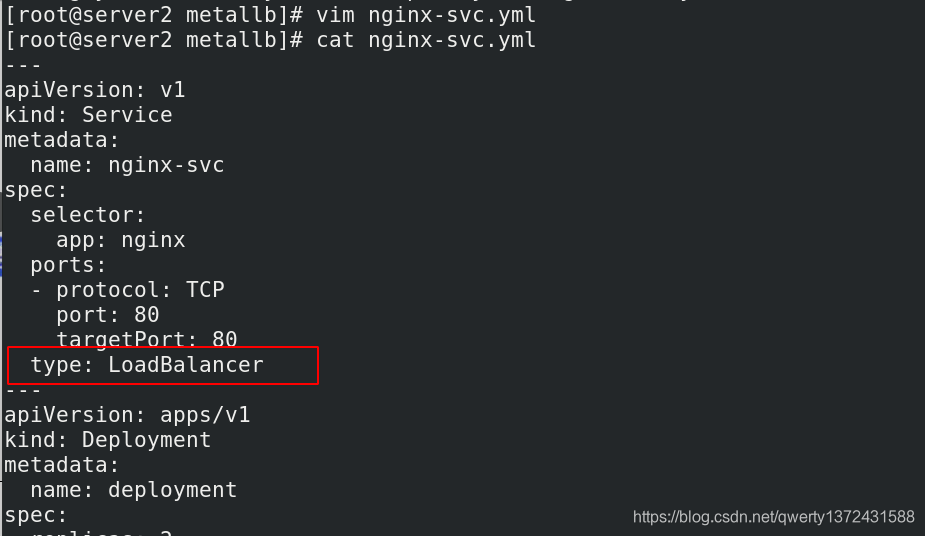

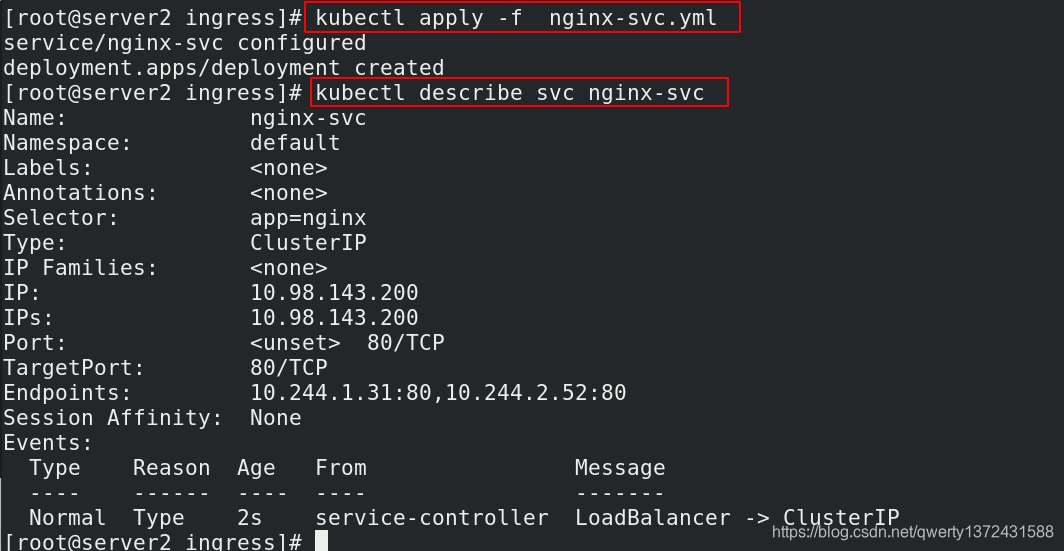

[root@server2 metallb]# vim nginx-svc.yml ##测试1

[root@server2 metallb]# cat nginx-svc.yml

---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  selector:
    app: nginx
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  type: LoadBalancer
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: myapp:v1

[root@server2 metallb]# kubectl apply -f nginx-svc.yml

service/nginx-svc unchanged

deployment.apps/deployment unchanged

[root@server2 metallb]# kubectl get svc ## 172.25.13.100 was assigned successfully

NAME         TYPE           CLUSTER-IP      EXTERNAL-IP     PORT(S)        AGE
kubernetes   ClusterIP      10.96.0.1       <none>          443/TCP        4d18h
nginx-svc    LoadBalancer   10.98.143.200   172.25.13.100   80:31262/TCP   39h

## Test 2

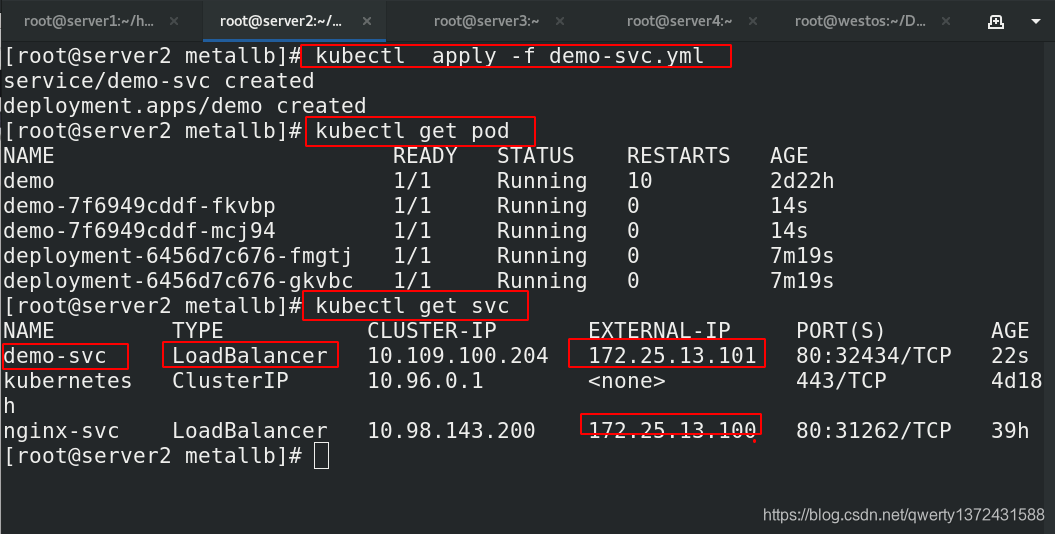

[root@server2 metallb]# cat demo-svc.yml

---
apiVersion: v1
kind: Service
metadata:
  name: demo-svc
spec:
  selector:
    app: demo
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  type: LoadBalancer
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: demo
  template:
    metadata:
      labels:
        app: demo
    spec:
      containers:
        - name: demo
          image: myapp:v2

[root@server2 metallb]# kubectl apply -f demo-svc.yml

[root@server2 metallb]# kubectl get pod ##查看是否running

[root@server2 metallb]# kubectl get svc ##查看服务启动效果

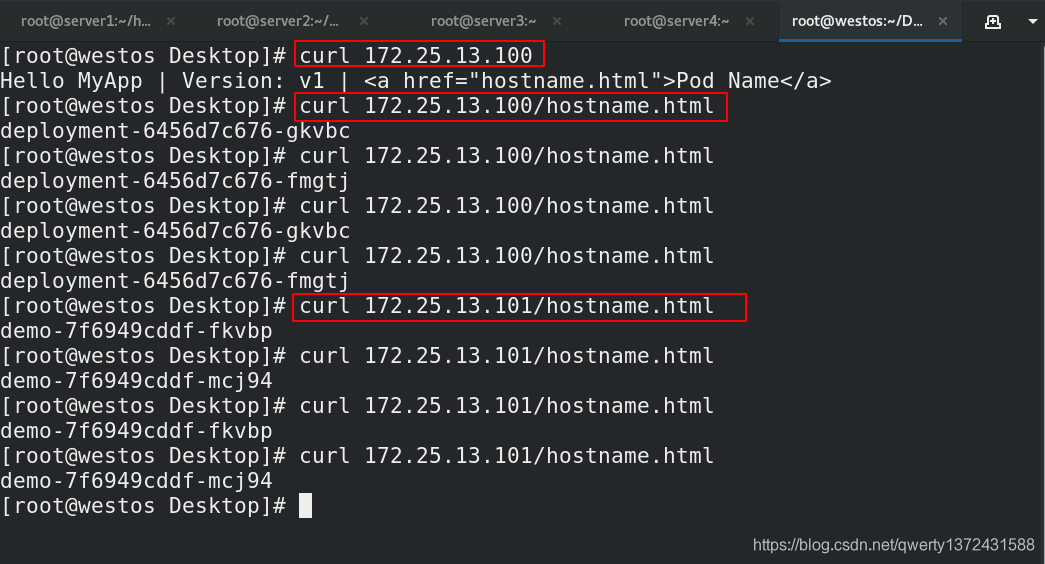

## 真机测试效果

[root@westos Desktop]# curl 172.25.13.100

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@westos Desktop]# curl 172.25.13.100/hostname.html

deployment-6456d7c676-gkvbc

[root@westos Desktop]# curl 172.25.13.100/hostname.html

deployment-6456d7c676-fmgtj

[root@westos Desktop]# curl 172.25.13.101/hostname.html

demo-7f6949cddf-fkvbp

[root@westos Desktop]# curl 172.25.13.101/hostname.html

demo-7f6949cddf-mcj94

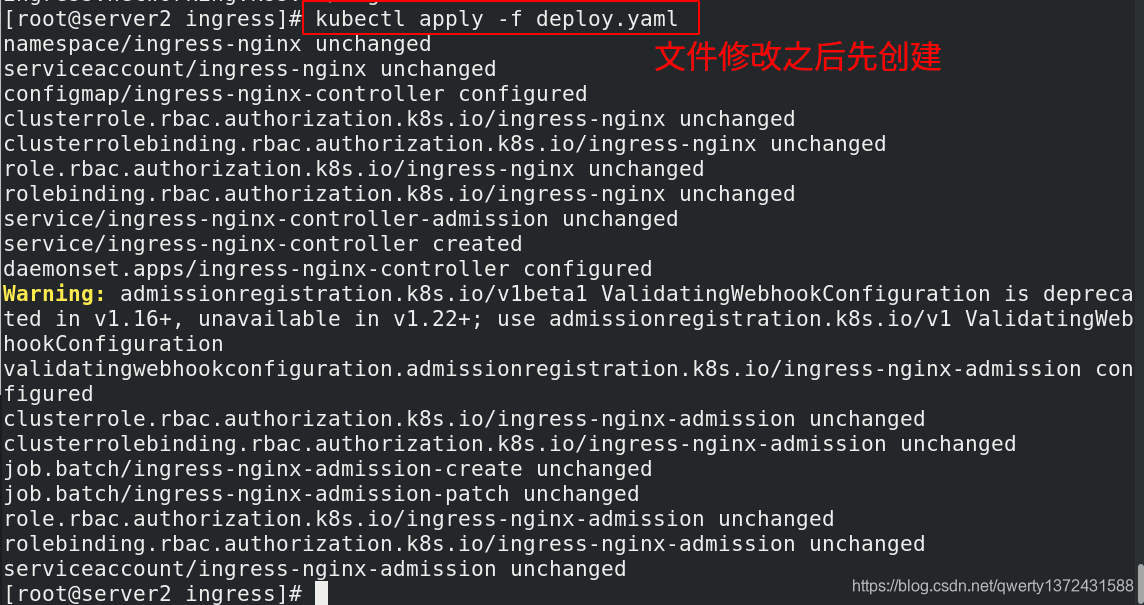

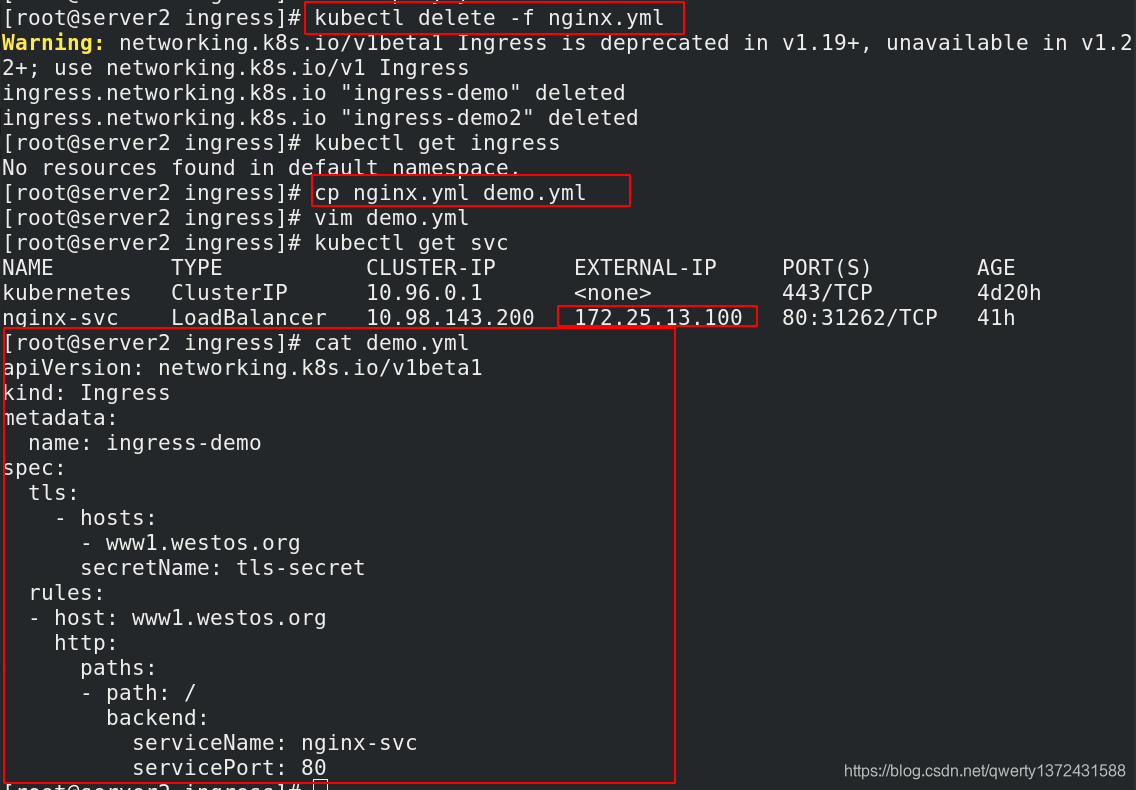

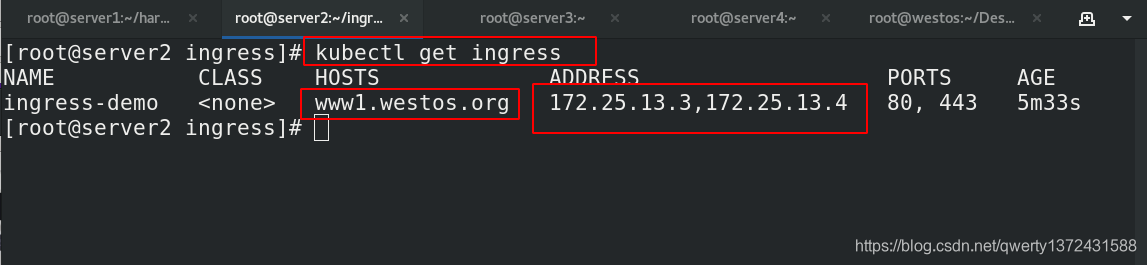

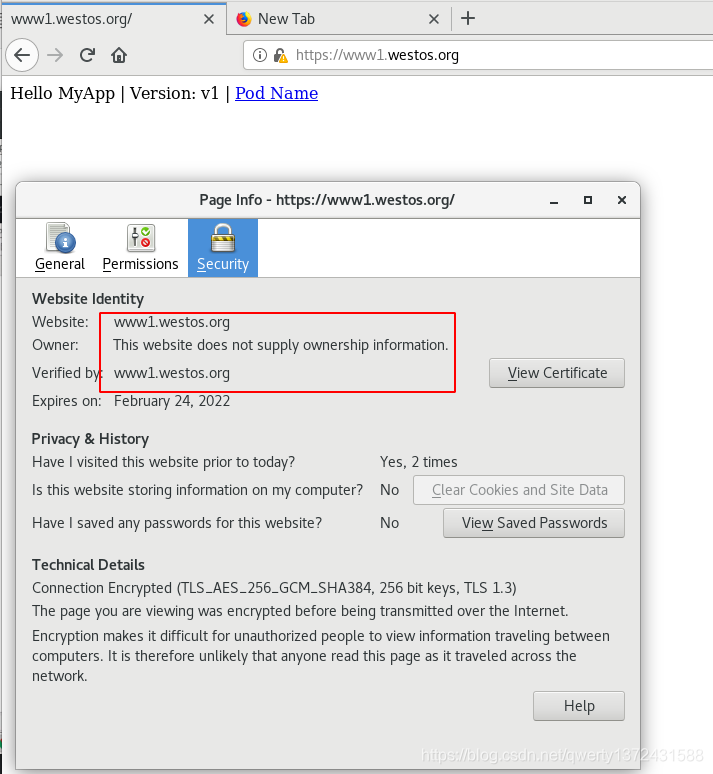

2.5.2.3 Ingress combined with MetalLB (optional)

[root@server2 ~]# cd ingress/

[root@server2 ingress]# vim deploy.yaml ## the modified parts of the file are shown below

## deploy.yaml, lines 273-300: the controller Service with type LoadBalancer
# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.4.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.33.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: LoadBalancer
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller

## deploy.yaml, lines 329-334: hostNetwork and nodeSelector commented out in the controller pod template
    spec:
      #hostNetwork: true
      #nodeSelector:
      #  kubernetes.io/hostname: server4
      dnsPolicy: ClusterFirst
      containers:

## Test manifest

[root@server2 ingress]# cat demo.yml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-demo
spec:
  tls:
    - hosts:
        - www1.westos.org
      secretName: tls-secret
  rules:
    - host: www1.westos.org
      http:
        paths:
          - path: /
            backend:
              serviceName: nginx-svc ## this Service must exist; if it does not, run: kubectl apply -f nginx-svc.yml
              servicePort: 80

[root@server2 ingress]# kubectl delete -f nginx.yml

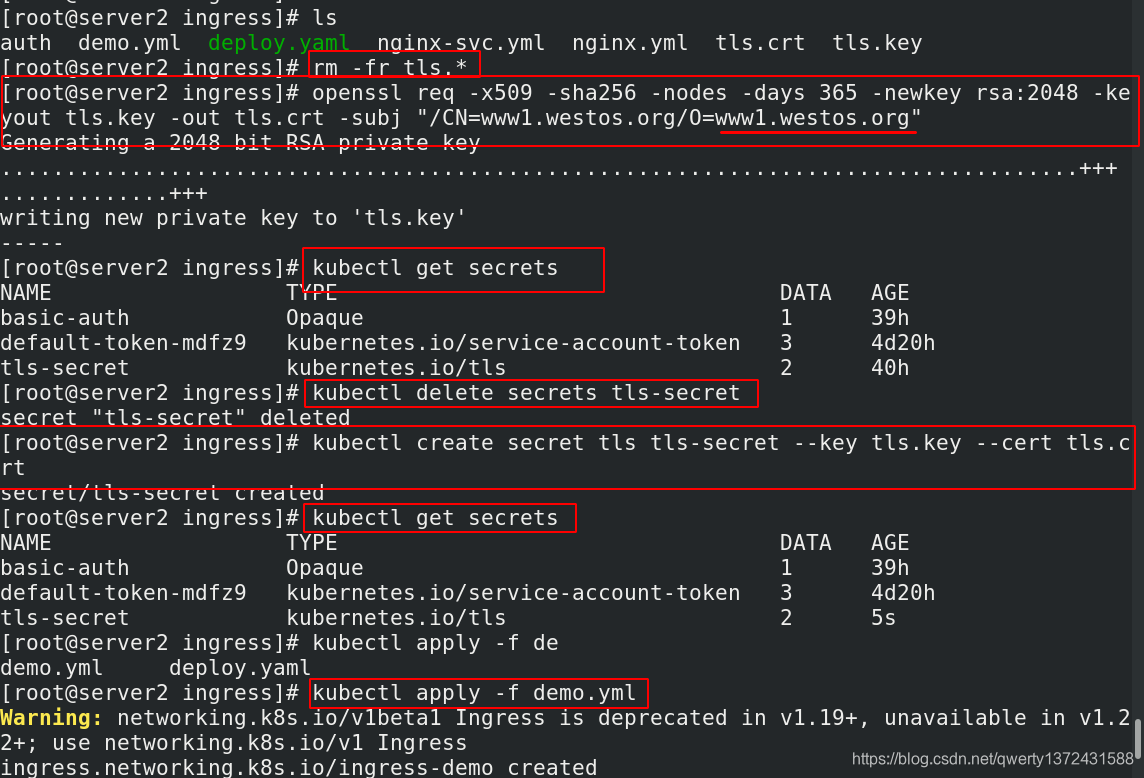

## Regenerate the certificate

[root@server2 ingress]# ls

auth demo.yml deploy.yaml nginx-svc.yml nginx.yml tls.crt tls.key

[root@server2 ingress]# rm -fr tls.*

[root@server2 ingress]# openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=www1.westos.org/O=www1.westos.org" ## regenerate the certificate

[root@server2 ingress]# kubectl get secrets

[root@server2 ingress]# kubectl delete secrets tls-secret ## delete the old TLS secret

[root@server2 ingress]# kubectl create secret tls tls-secret --key tls.key --cert tls.crt ## store the new certificate as a secret

[root@server2 ingress]# kubectl get secrets

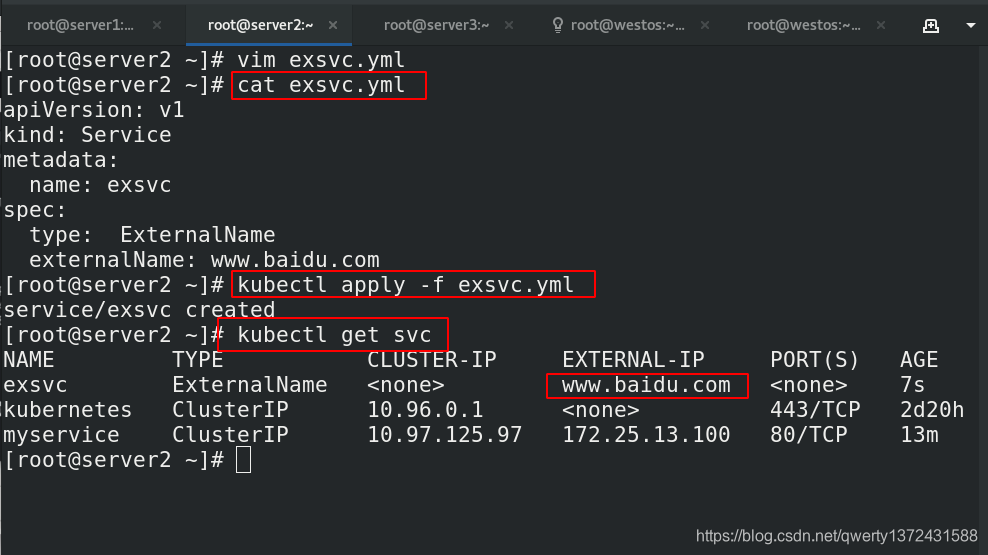

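2.5.3 ExternalName (accessing external resources from inside the cluster)

The third approach is ExternalName. Unlike NodePort and LoadBalancer, it works in the opposite direction: it lets workloads inside the cluster reach an external name.

2.5.3.1 ExternalName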

[root@server2 ~]# vim exsvc.yml

[root@server2 ~]# cat exsvc.yml

apiVersion: v1
kind: Service
metadata:
  name: exsvc
spec:
  type: ExternalName
  externalName: www.baidu.com

[root@server2 ~]# kubectl apply -f exsvc.yml

service/exsvc created

[root@server2 ~]# kubectl get svc

NAME         TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)   AGE
exsvc        ExternalName   <none>         www.baidu.com   <none>    7s
kubernetes   ClusterIP      10.96.0.1      <none>          443/TCP   2d20h
myservice    ClusterIP      10.97.125.97   172.25.13.100   80/TCP    13m

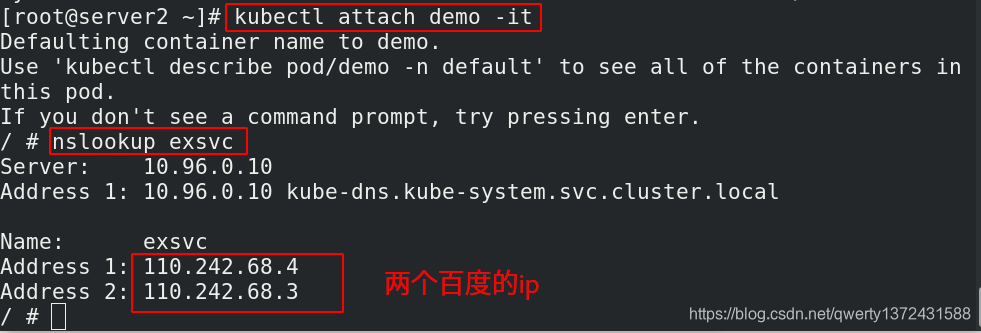

[root@server2 ~]# kubectl attach demo -it ##

/ # nslookup exsvc

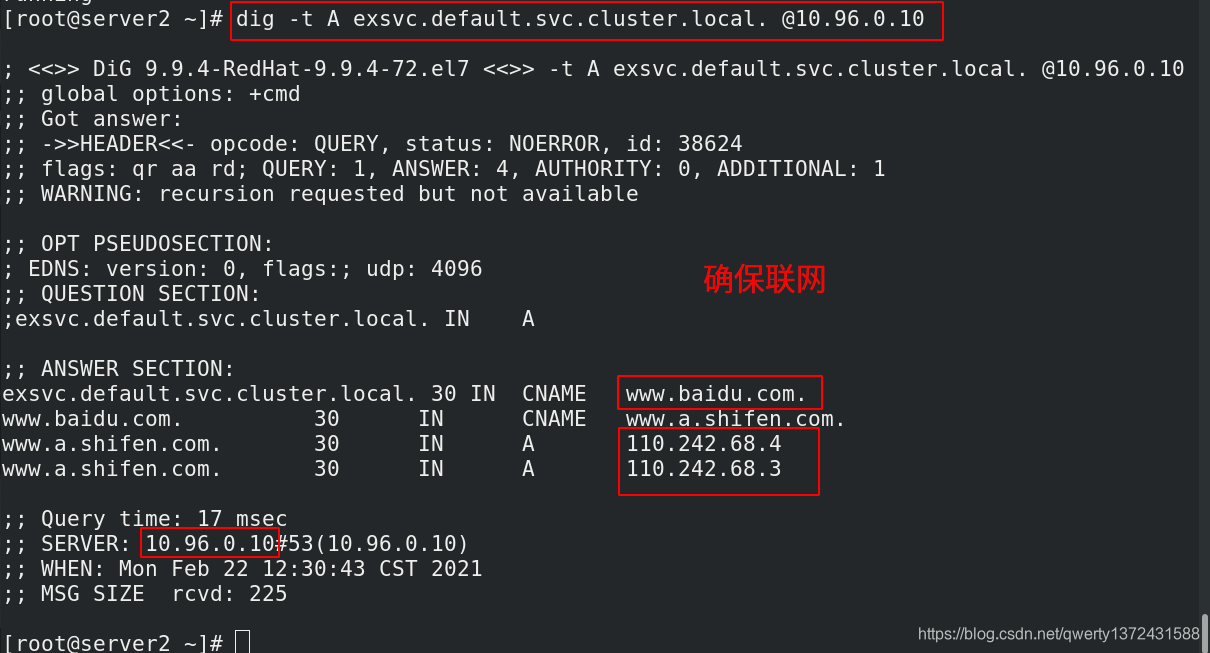

[root@server2 ~]# dig -t A exsvc.default.svc.cluster.local. @10.96.0.10 ##要想访问到,要确保联网

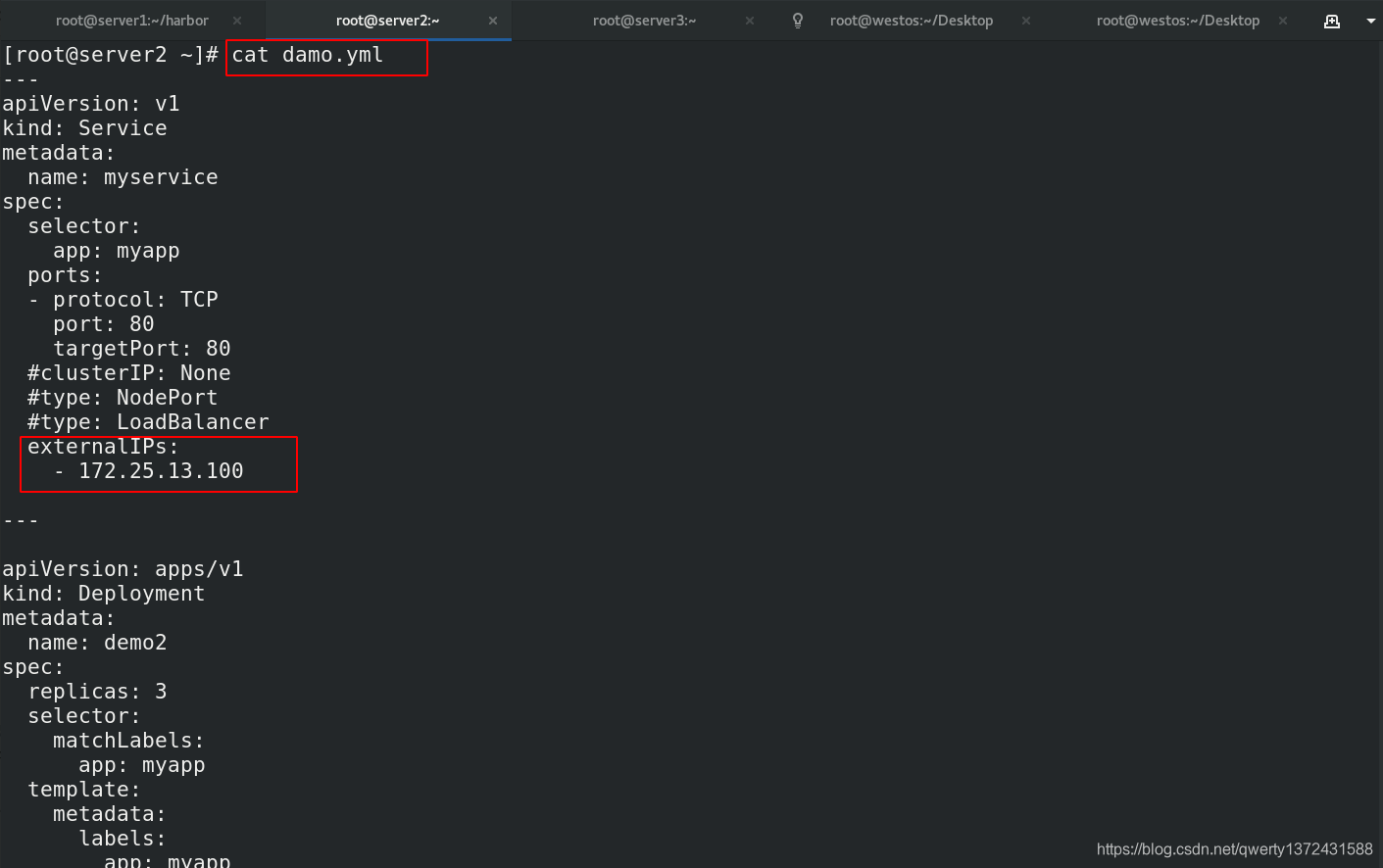

2.5.3.2 Assigning an external IP directly

[root@server2 ~]# vim damo.yml

[root@server2 ~]# cat damo.yml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: myapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  #clusterIP: None
  #type: NodePort
  #type: LoadBalancer
  externalIPs: ## the external IP to assign
    - 172.25.13.100
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo2
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
        - name: myapp
          image: myapp:v2

[root@server2 ~]# kubectl apply -f damo.yml

service/myservice configured

deployment.apps/demo2 unchanged

[root@server2 ~]# kubectl get svc ##查看分配的公有ip为172.25.0.100

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d20h

myservice ClusterIP 10.97.125.97 172.25.13.100 80/TCP 5m7s

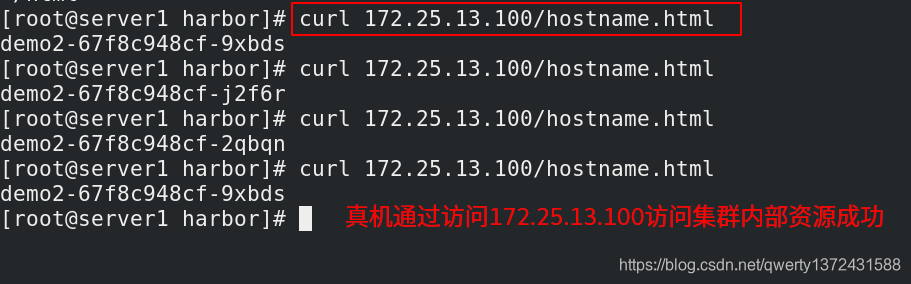

[root@westos Desktop]# curl 172.25.13.100/hostname.html ##真机访问172.25.13.100

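3. Pod-to-pod communication

3.1 Pods on the same node

- Pods on the same node forward packets through the cni bridge (visible with brctl show).

3.2 Pods on different nodes need the network plugin (in detail)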

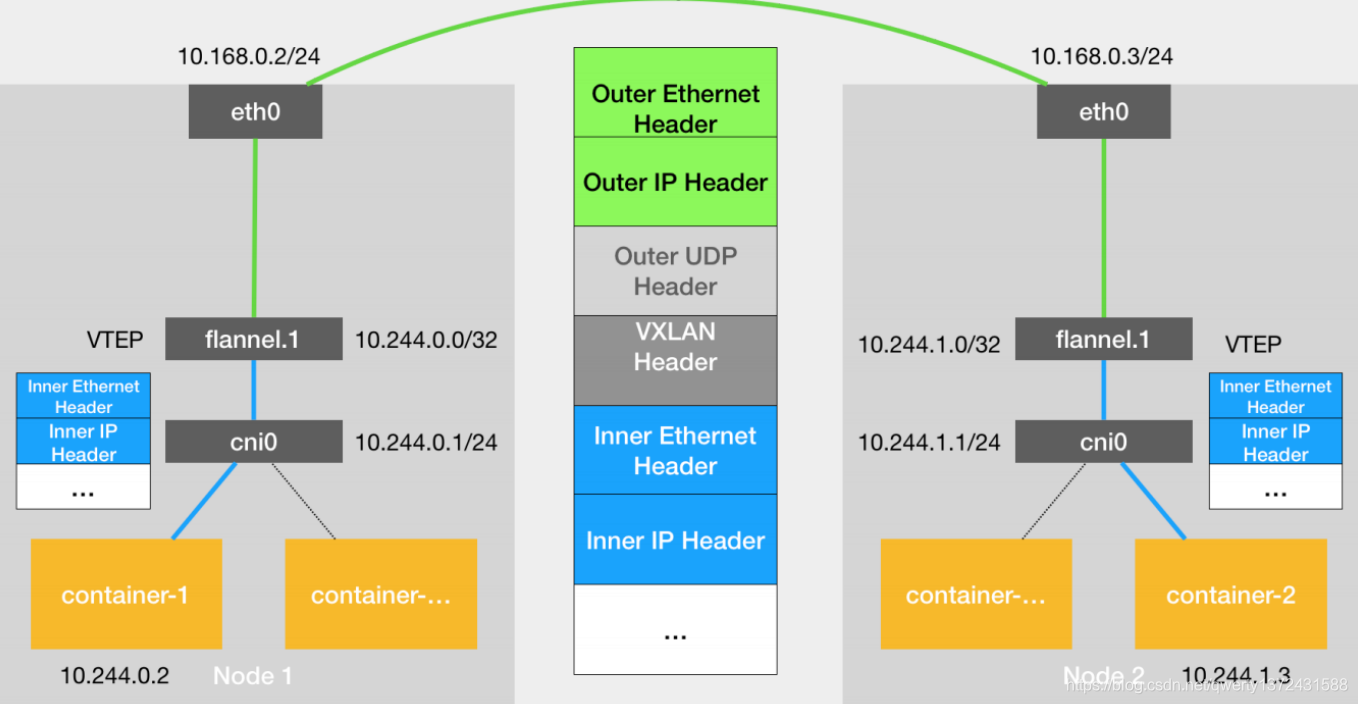

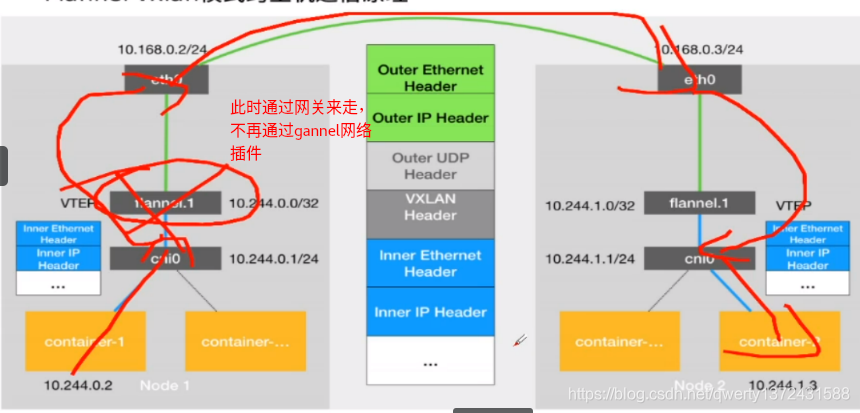

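3.2.1 How Flannel vxlan mode communicates across hosts

- The flannel network

VXLAN (Virtual Extensible LAN) is a network virtualization technology supported natively by Linux. VXLAN does encapsulation and decapsulation entirely in kernel space and uses this "tunnel" mechanism to build an overlay network.

VTEP: VXLAN Tunnel End Point. In Flannel the default VNI is 1, which is why the VTEP device on every host is named flannel.1.

cni0: a bridge device. A veth pair is created for every pod: one end is eth0 inside the pod, the other end is a port (NIC) on the cni0 bridge.

flannel.1: the VXLAN device (a virtual NIC acting as the VTEP) that encapsulates and decapsulates vxlan packets. Pod traffic between different nodes is sent from this overlay device to the peer through the tunnel.

flanneld: flannel runs flanneld as an agent on every host. It obtains a small subnet for the host from the cluster's network address space, and all container IPs on that host are allocated from it. flanneld also watches the Kubernetes cluster database and provides flannel.1 with the MAC, IP, and other network information needed for encapsulation.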

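- How the flannel network works

When a container sends an IP packet, the packet goes through the veth pair to the cni bridge and is then routed to the local flannel.1 device.

VTEP devices talk to each other with layer 2 frames. After the source VTEP receives the original IP packet, it adds the destination VTEP's MAC address, producing an inner frame addressed to the destination VTEP.

The inner frame cannot travel on the hosts' layer 2 network by itself. The Linux kernel has to wrap it in an ordinary host frame that carries the inner frame out through the host's eth0.

Linux puts a VXLAN header in front of the inner frame. The header carries an important field, the VNI, which a VTEP uses to decide whether a frame is meant for it.

The flannel.1 device knows only the MAC address of the peer flannel.1 device, not the address of the host it lives on. In the Linux kernel, a network device forwards according to the FDB (forwarding database); the FDB entries for flannel.1 are maintained by the flanneld process.

The kernel then puts a layer 2 header in front of the outer IP packet and fills in the MAC address of the target node, which it takes from the host's ARP table.

Now flannel.1 can send the frame out through eth0, and it crosses the host network to the eth0 device of the target node. The kernel network stack on the target host sees that the frame has a VXLAN header with VNI 1, decapsulates it to obtain the inner frame, and hands it to the local flannel.1 device according to the VNI. flannel.1 unwraps it and, following the routing table, sends the packet to the cni bridge, from which it reaches the target container.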
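The lookup chain described above (route, then ARP, then FDB) can be traced with a toy model. All table contents are hypothetical except the addresses that appear in this article's test (pod 10.244.2.39 and host 172.25.13.4); the MAC address in particular is invented, and `encapsulate` is an illustrative name, not a flannel or kernel API.

```python
import ipaddress

# Hypothetical tables on the sending node.
ROUTES = {"10.244.2.0/24": {"gateway": "10.244.2.0", "dev": "flannel.1"}}
ARP = {"10.244.2.0": "5a:11:22:33:44:55"}   # remote flannel.1 IP -> its MAC
FDB = {"5a:11:22:33:44:55": "172.25.13.4"}  # remote flannel.1 MAC -> host IP
VNI = 1                                     # Flannel's default VNI


def encapsulate(dst_pod_ip):
    """Return the headers a VXLAN-encapsulated packet would carry."""
    dst = ipaddress.ip_address(dst_pod_ip)
    for cidr, route in ROUTES.items():
        if dst in ipaddress.ip_network(cidr):
            inner_mac = ARP[route["gateway"]]  # inner frame: peer VTEP MAC
            outer_ip = FDB[inner_mac]          # outer packet: peer host IP
            return {
                "outer_dst_ip": outer_ip,
                "vni": VNI,
                "inner_dst_mac": inner_mac,
                "inner_dst_ip": dst_pod_ip,
            }
    raise LookupError(f"no overlay route for {dst_pod_ip}")


print(encapsulate("10.244.2.39"))
```

On a real node the three tables correspond to what `ip route`, `ip n`, and `bridge fdb` display.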

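- flannel supports several backends:

1. vxlan

vxlan: packet encapsulation; the default.

DirectRouting: direct routing; uses vxlan across subnets and host-gw behaviour within the same subnet.

2. host-gw: host gateway. Performs well, but only works when all nodes are on the same layer 2 network and does not cross networks. With thousands of pods it is prone to broadcast storms, so it is not recommended.

3. UDP: poor performance; not recommended.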
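The DirectRouting rule (host-gw style routing inside one subnet, vxlan across subnets) amounts to a subnet membership check. A minimal sketch, assuming the nodes sit on a /24 network; `choose_backend` is a hypothetical name and the second remote address is made up.

```python
import ipaddress


def choose_backend(local_host_cidr, remote_host_ip):
    """Pick the forwarding method DirectRouting would use for a remote node."""
    local_net = ipaddress.ip_interface(local_host_cidr).network
    if ipaddress.ip_address(remote_host_ip) in local_net:
        return "host-gw"  # same layer 2 segment: plain route via the peer host
    return "vxlan"        # different subnet: fall back to encapsulation


print(choose_backend("172.25.13.2/24", "172.25.13.4"))
print(choose_backend("172.25.13.2/24", "172.25.14.4"))
```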

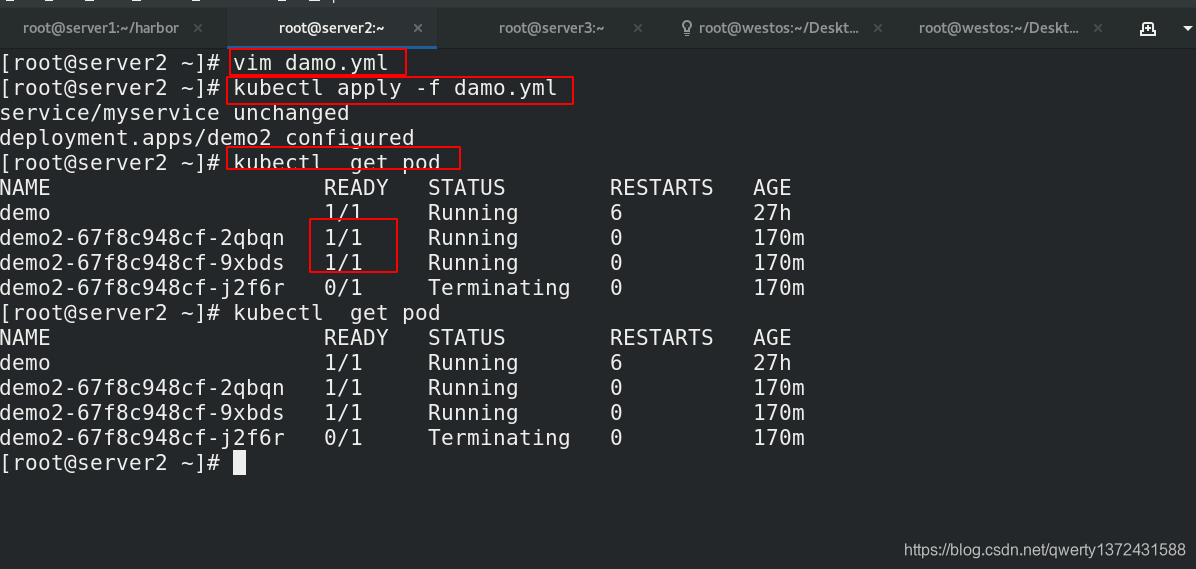

3.2.2 vxlan mode (the default)

[root@server2 ~]# vim damo.yml

[root@server2 ~]# cat damo.yml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: myapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  #clusterIP: None
  #type: NodePort
  #type: LoadBalancer
  externalIPs:
    - 172.25.13.100
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo2
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
        - name: myapp
          image: myapp:v2

[root@server2 ~]# kubectl apply -f damo.yml

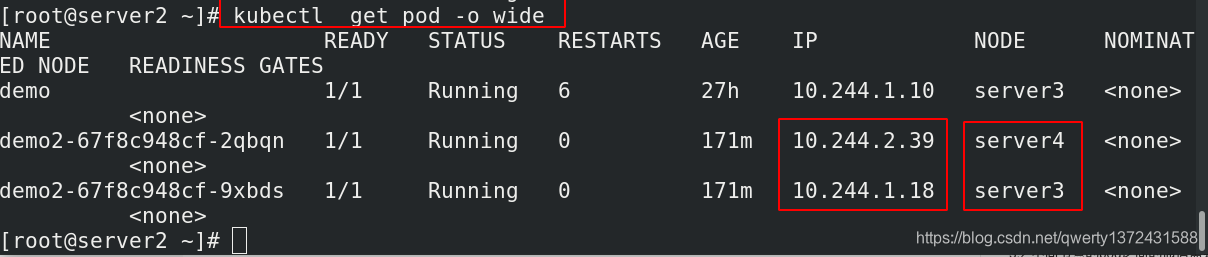

[root@server2 ~]# kubectl get pod -o wide ## show detailed pod information

## The following commands can be run on any node

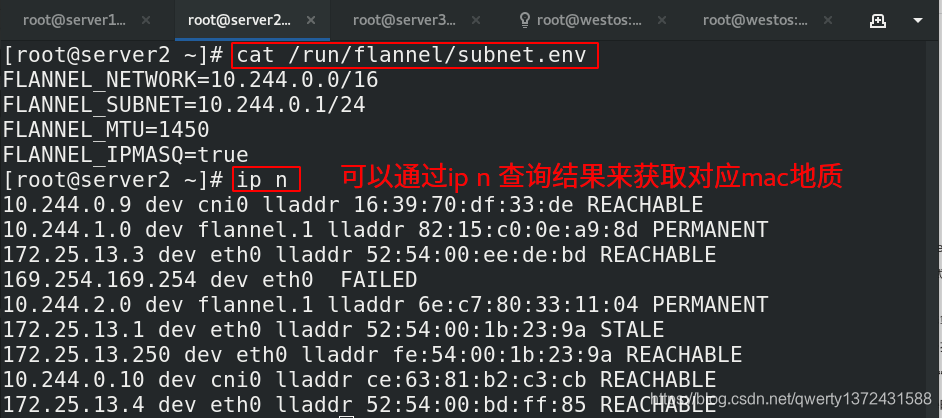

[root@server2 ~]# cat /run/flannel/subnet.env

[root@server2 ~]# ip n

[root@server2 ~]# ip addr ## find the MAC address of flannel.1

[root@server4 ~]# bridge fdb

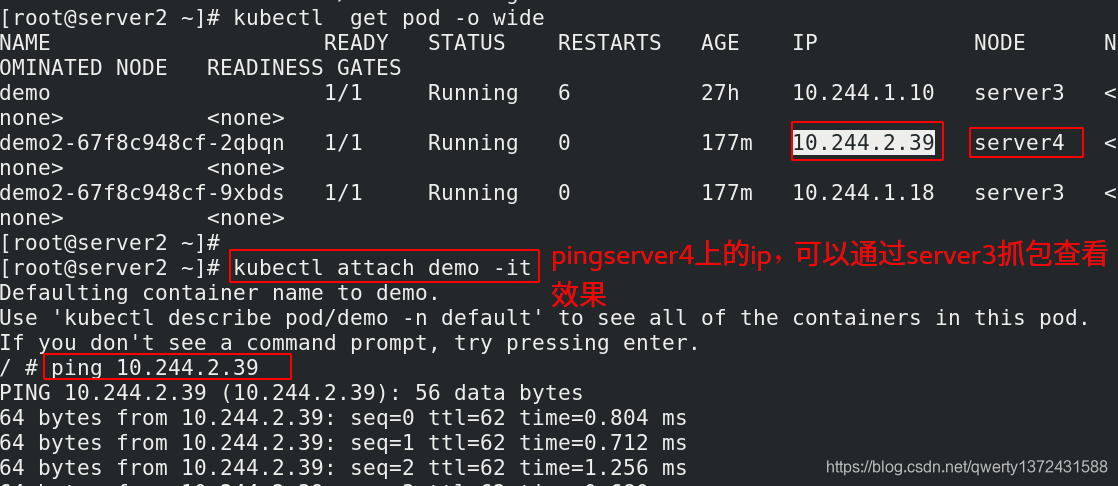

[root@server2 ~]# kubectl attach demo -it

/ # ping 10.244.2.39

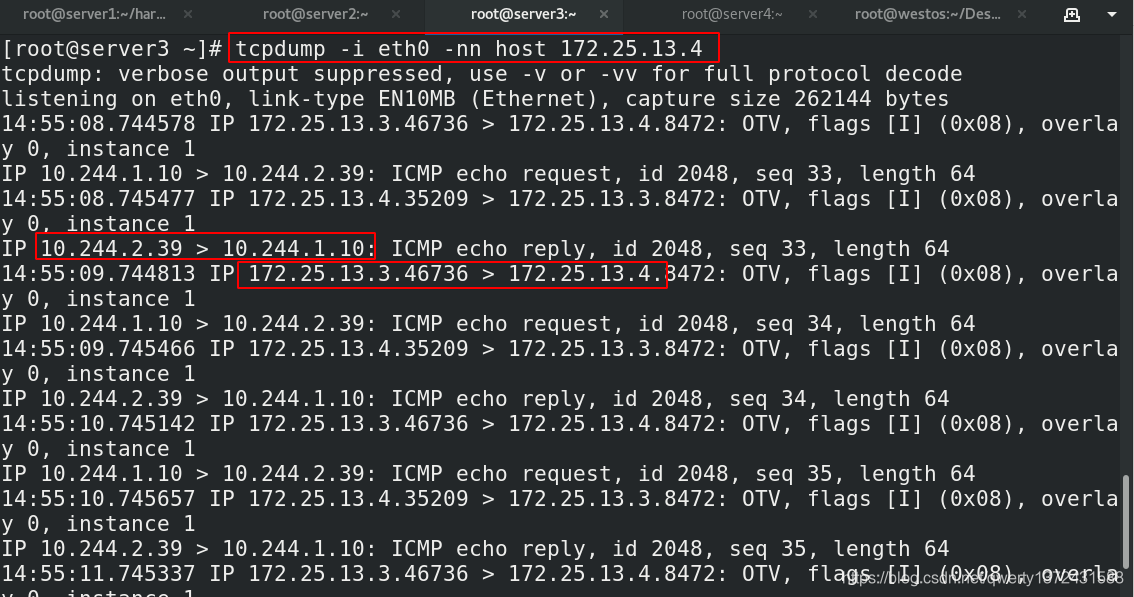

[root@server3 ~]# tcpdump -i eth0 -nn host 172.25.13.4

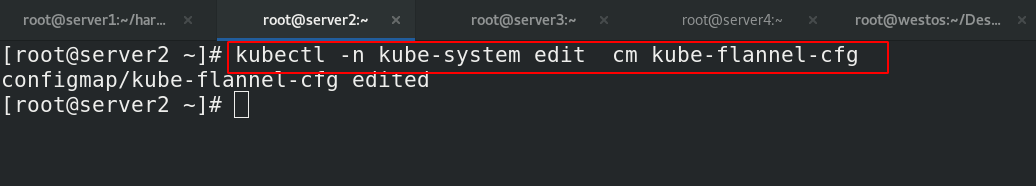

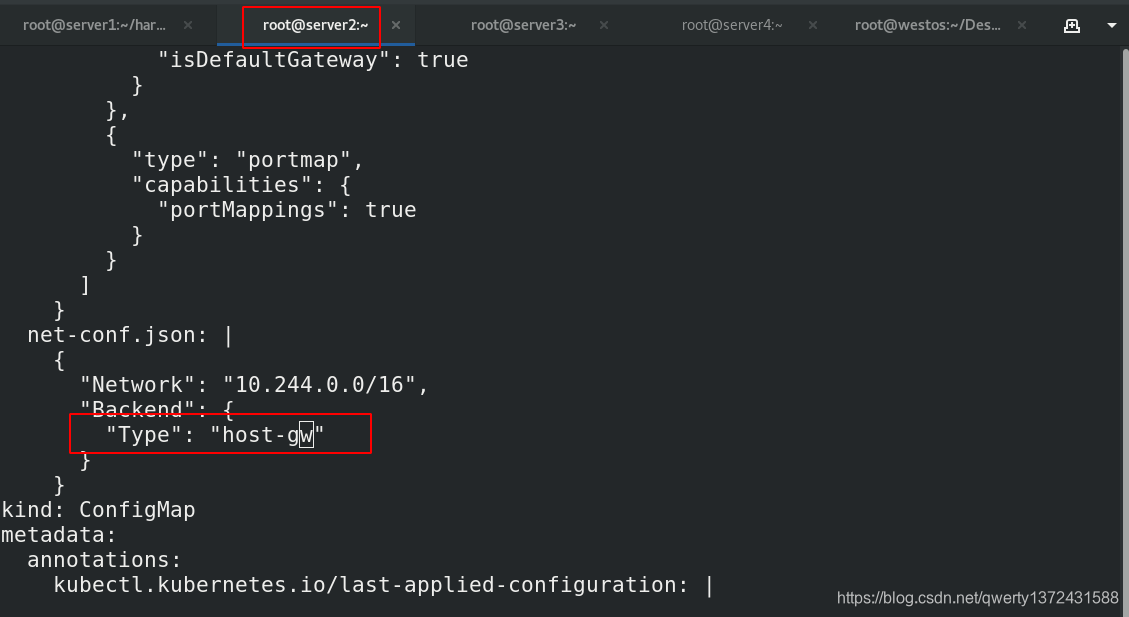

3.2.3 host-gw模式

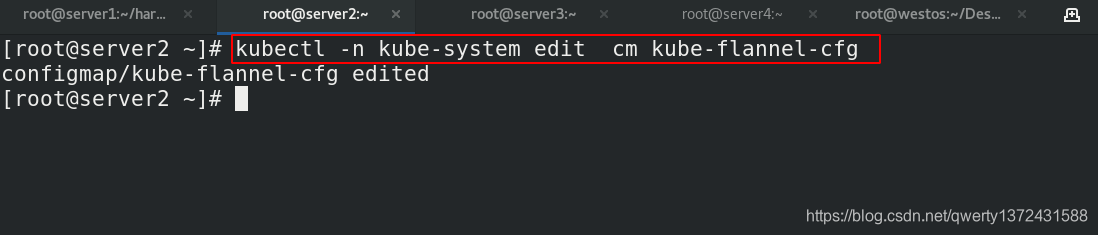

[root@server2 ~]# kubectl -n kube-system edit cm kube-flannel-cfg ##修改模式为host-gw

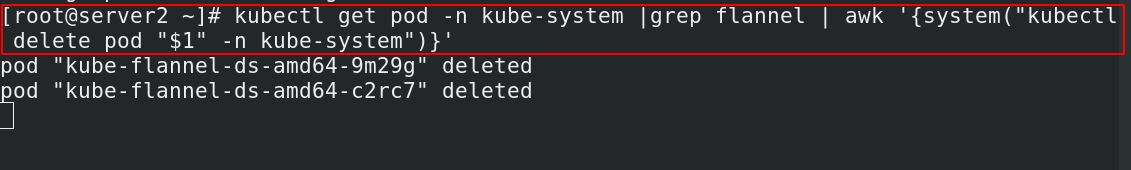

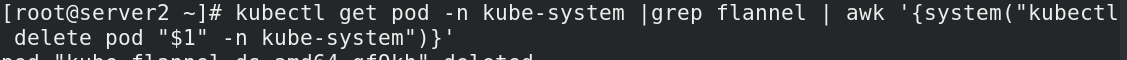

[root@server2 ~]# kubectl get pod -n kube-system |grep flannel | awk '{system("kubectl delete pod "$1" -n kube-system")}' ##类似于之前,重启生效,是一个删除在生成的过程

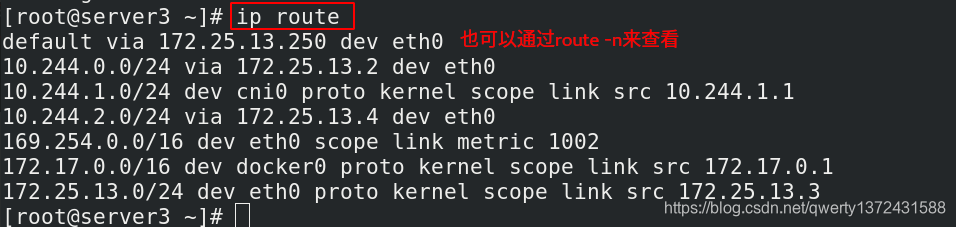

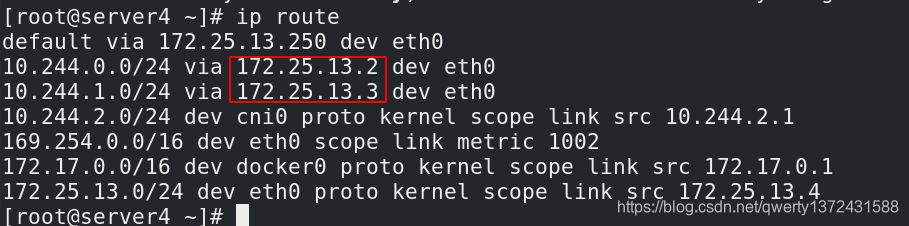

[root@server3 ~]# ip route ##每个节点都可以通过这条命令查看route,也可以用route -n

3.2.4 Directrouting

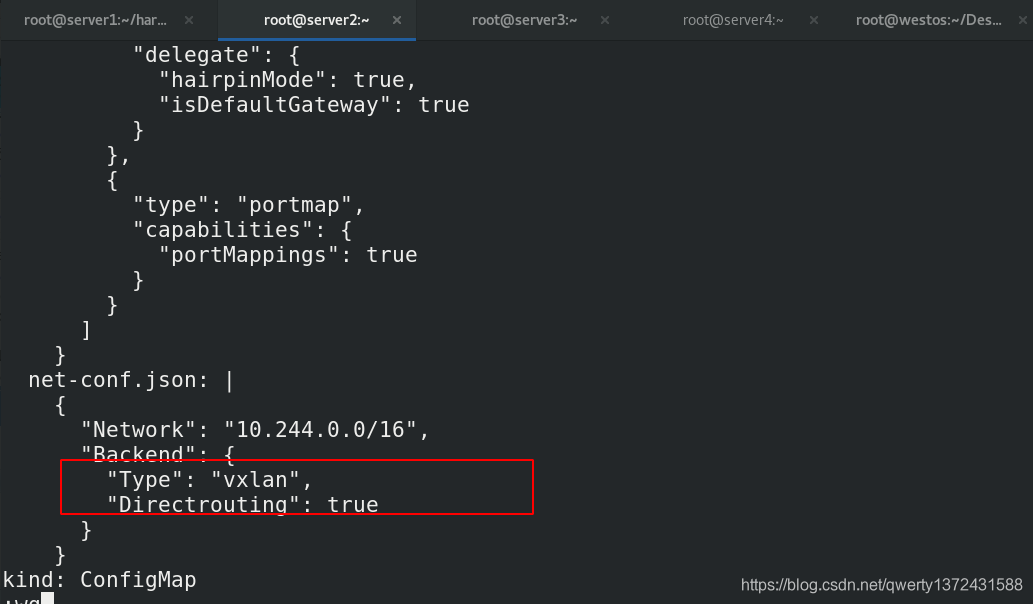

[root@server2 ~]# kubectl -n kube-system edit cm kube-flannel-cfg ## change the backend settings to enable DirectRouting

[root@server2 ~]# kubectl get pod -n kube-system |grep flannel | awk '{system("kubectl delete pod "$1" -n kube-system")}' ## recreate the pods so the change takes effect

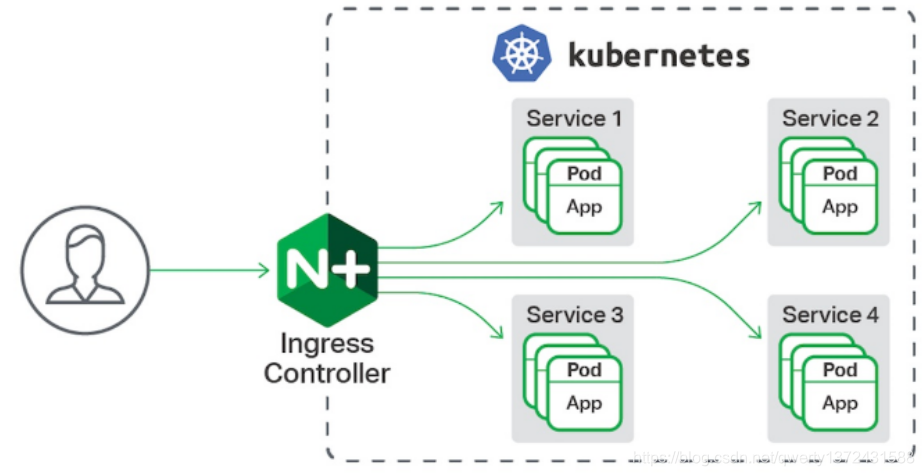

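4. Ingress

- Ingress is the global load-balancing service in Kubernetes that proxies requests to different backend Services.

Ingress consists of two parts: the Ingress controller and the Ingress service.

The Ingress controller provides the proxying described by the Ingress objects you define. The reverse proxies commonly used in the industry, such as Nginx, HAProxy, Envoy, and Traefik, all maintain a dedicated Ingress controller for Kubernetes.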
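What an Ingress controller does with the rules can be sketched as host and path matching. The rule below mirrors the Ingress object used earlier in this article (host www1.westos.org, path /, backend nginx-svc:80); the `route` function and its longest-prefix tie-break are assumptions made for illustration, not the actual matching logic of any particular controller.

```python
# Rules in the shape of the Ingress object shown earlier in this article.
RULES = [
    {"host": "www1.westos.org", "path": "/", "service": "nginx-svc", "port": 80},
]


def route(host, path, rules=RULES):
    """Return (service, port) for a request, or None if no rule matches."""
    best = None
    for rule in rules:
        if rule["host"] == host and path.startswith(rule["path"]):
            # Prefer the most specific (longest) matching path prefix.
            if best is None or len(rule["path"]) > len(best["path"]):
                best = rule
    return (best["service"], best["port"]) if best else None


print(route("www1.westos.org", "/hostname.html"))
print(route("unknown.example", "/"))
```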

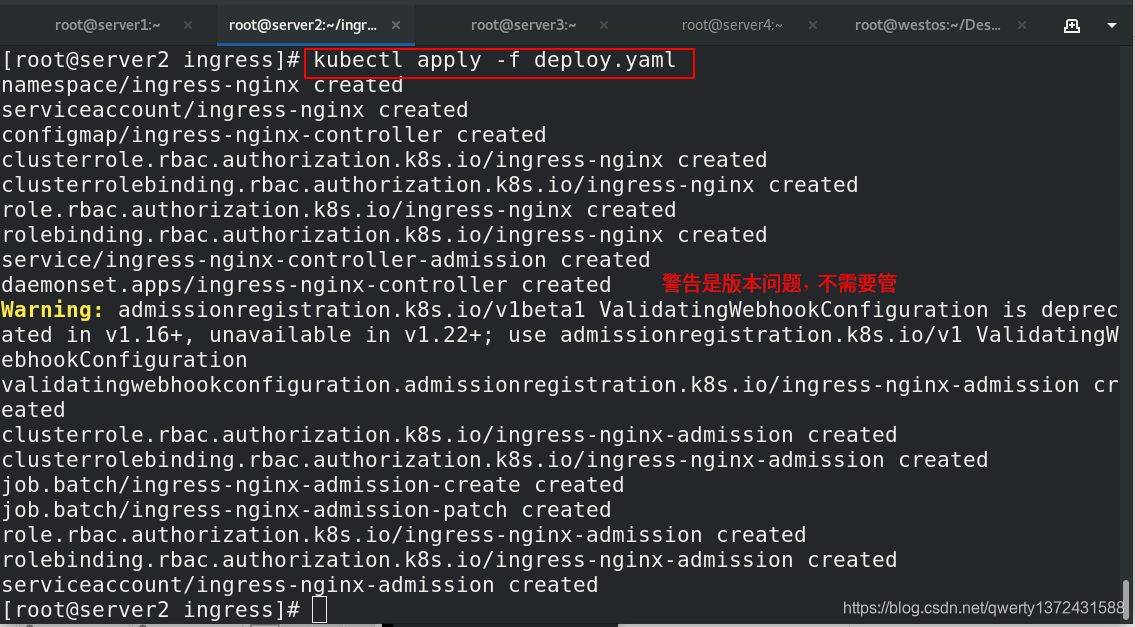

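4.1 Deploying the Ingress service

- Use a DaemonSet with a nodeSelector to deploy the ingress controller to specific nodes, and use hostNetwork so the pod shares the host node's network. The service is then reachable directly on ports 80/443 of the host.

The advantage is that the request path is the simplest and performance is better than in NodePort mode.

The drawback is that, because the pod uses the host's network and ports directly, each node can run only one ingress-controller pod.

This setup suits high-concurrency production environments.

[root@server2 ~]# mkdir ingress ## working directory for this experiment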

[root@server2 ~]# cd ingress/

[root@server2 ingress]# pwd

/root/ingress

[root@server2 ingress]# ll

total 20

-rwxr-xr-x 1 root root 17728 Feb 22 15:59 deploy.yaml ## my local copy; it can also be downloaded from the official site with wget

[root@server1 ~]# docker load -i ingress-nginx.tar ## loads two images: quay.io/kubernetes-ingress-controller/nginx-ingress-controller and jettech/kube-webhook-certgen. They can also be pulled over the network; here I use a local archive

[root@server1 ~]# docker tag quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.33.0 reg.westos.org/library/nginx-ingress-controller:0.33.0

[root@server1 ~]# docker tag jettech/kube-webhook-certgen:v1.2.0 reg.westos.org/library/kube-webhook-certgen:v1.2.0

[root@server1 ~]# docker push reg.westos.org/library/nginx-ingress-controller:0.33.0 ## push to the harbor registry for the experiment

[root@server1 ~]# docker push reg.westos.org/library/kube-webhook-certgen:v1.2.0

## Environment cleanup

[root@server2 ~]# kubectl delete -f damo.yml ## first delete the Service with 172.25.13.100 from the previous experiment, then continue

[root@server2 ~]# vim damo.yml

[root@server2 ~]# cat damo.yml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: myapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  type: ClusterIP ## the type has to be changed here
  #clusterIP: None
  #type: NodePort
  #type: LoadBalancer
  #externalIPs:
  #  - 172.25.13.100
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo2
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
        - name: myapp
          image: myapp:v2

[root@server2 ~]# kubectl apply -f damo.yml

## Deployment

[root@server2 ingress]# cat deploy.yaml ## differs slightly from the upstream file: adjusted to use a DaemonSet controller and hostNetwork

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx

---

# Source: ingress-nginx/templates/controller-serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.4.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.33.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.4.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.33.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:

---

# Source: ingress-nginx/templates/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.4.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.33.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - update
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - extensions
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch

---

# Source: ingress-nginx/templates/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.4.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.33.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.4.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.33.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - configmaps
      - pods
      - secrets
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - update
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      - ingress-controller-leader-nginx
    verbs:
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ''
    resources:
      - endpoints
    verbs:
      - create
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch

---

# Source: ingress-nginx/templates/controller-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.4.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.33.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-service-webhook.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.4.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.33.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  type: ClusterIP
  ports:
    - name: https-webhook
      port: 443
      targetPort: webhook
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-service.yaml
#apiVersion: v1
#kind: Service
#metadata:
#  labels:
#    helm.sh/chart: ingress-nginx-2.4.0
#    app.kubernetes.io/name: ingress-nginx
#    app.kubernetes.io/instance: ingress-nginx
#    app.kubernetes.io/version: 0.33.0
#    app.kubernetes.io/managed-by: Helm
#    app.kubernetes.io/component: controller
#  name: ingress-nginx-controller
#  namespace: ingress-nginx
#spec:
#  type: NodePort
#  ports:
#    - name: http
#      port: 80
#      protocol: TCP
#      targetPort: http
#    - name: https
#      port: 443
#      protocol: TCP
#      targetPort: https
#  selector:
#    app.kubernetes.io/name: ingress-nginx
#    app.kubernetes.io/instance: ingress-nginx
#    app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.4.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.33.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      hostNetwork: true
      nodeSelector:
        kubernetes.io/hostname: server4
      dnsPolicy: ClusterFirst
      containers:
        - name: controller
          image: nginx-ingress-controller:0.33.0
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --ingress-class=nginx
            - --configmap=ingress-nginx/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          livenessProbe:
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            timeoutSeconds: 1
            successThreshold: 1
            failureThreshold: 3
          readinessProbe:
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            timeoutSeconds: 1
            successThreshold: 1
            failureThreshold: 3
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission

---

# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml
apiVersion: admissionregistration.k8s.io/v1beta1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.4.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.33.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  name: ingress-nginx-admission
  namespace: ingress-nginx
webhooks:
  - name: validate.nginx.ingress.kubernetes.io
    rules:
      - apiGroups:
          - extensions
          - networking.k8s.io
        apiVersions:
          - v1beta1
        operations:
          - CREATE
          - UPDATE
        resources:
          - ingresses
    failurePolicy: Fail
    clientConfig:
      service:
        namespace: ingress-nginx
        name: ingress-nginx-controller-admission
        path: /extensions/v1beta1/ingresses

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-2.4.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.33.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
rules:
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - validatingwebhookconfigurations
    verbs:
      - get
      - update

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-2.4.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.33.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml

apiVersion: batch/v1

kind: Job

metadata:

name: ingress-nginx-admission-create

annotations:

helm.sh/hook: pre-install,pre-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-2.4.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.33.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

spec:

template:

metadata:

name: ingress-nginx-admission-create

labels:

helm.sh/chart: ingress-nginx-2.4.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.33.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

containers:

- name: create

image: kube-webhook-certgen:v1.2.0

imagePullPolicy: IfNotPresent

args:

- create

- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.ingress-nginx.svc

- --namespace=ingress-nginx

- --secret-name=ingress-nginx-admission

restartPolicy: OnFailure

serviceAccountName: ingress-nginx-admission

securityContext:

runAsNonRoot: true

runAsUser: 2000

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml

apiVersion: batch/v1

kind: Job

metadata:

name: ingress-nginx-admission-patch

annotations:

helm.sh/hook: post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-2.4.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.33.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

spec:

template:

metadata:

name: ingress-nginx-admission-patch

labels:

helm.sh/chart: ingress-nginx-2.4.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.33.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

containers:

- name: patch

image: kube-webhook-certgen:v1.2.0

imagePullPolicy: IfNotPresent

args:

- patch

- --webhook-name=ingress-nginx-admission

- --namespace=ingress-nginx

- --patch-mutating=false

- --secret-name=ingress-nginx-admission

- --patch-failure-policy=Fail

restartPolicy: OnFailure

serviceAccountName: ingress-nginx-admission

securityContext:

runAsNonRoot: true

runAsUser: 2000

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-2.4.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.33.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- secrets

verbs:

- get

- create

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-2.4.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.33.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-2.4.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.33.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

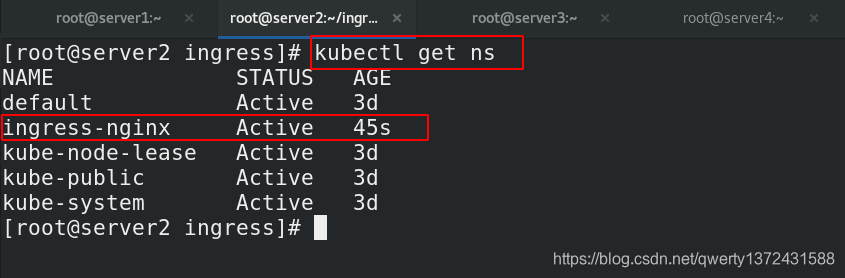

[root@server2 ingress]# kubectl apply -f deploy.yaml

[root@server2 ingress]# kubectl get ns ##安装成功后出现新的namespace:ingress-nginx

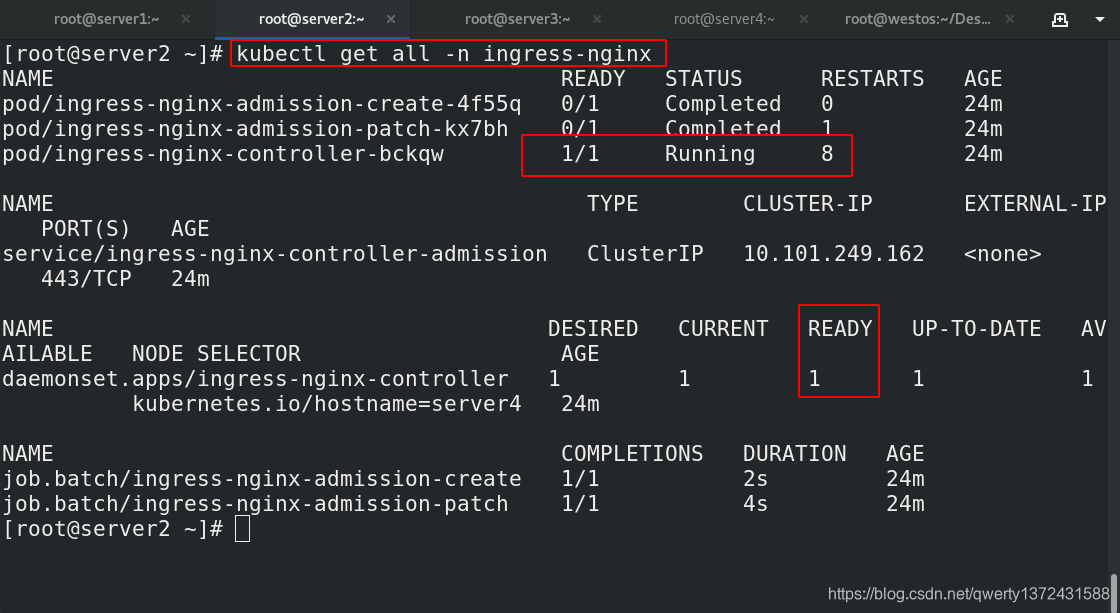

[root@server2 ingress]# kubectl get all -n ingress-nginx ##查看服务是否安装成功

[root@server2 ingress]# kubectl get all -o wide -n ingress-nginx ##查看新namespace详细信息

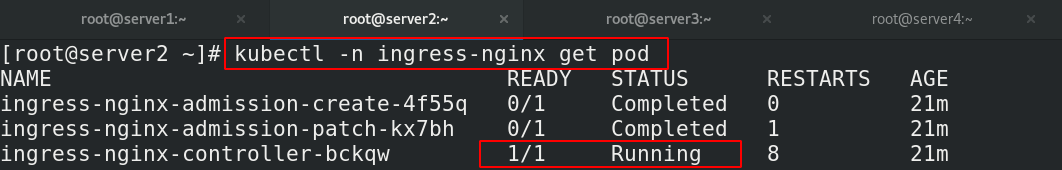

[root@server2 ingress]# kubectl -n ingress-nginx get pod ##查看是否运行成功,READY状态必须为1

4.2 Ingress configuration

4.2.1 Basic test manifests

4.2.1.1 One host

[root@server2 ~]# cd ingress/

[root@server2 ingress]# vim nginx.yml

[root@server2 ingress]# cat nginx.yml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-demo
spec:
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: myservice
          servicePort: 80

[root@server2 ingress]# kubectl apply -f nginx.yml ## create the Ingress

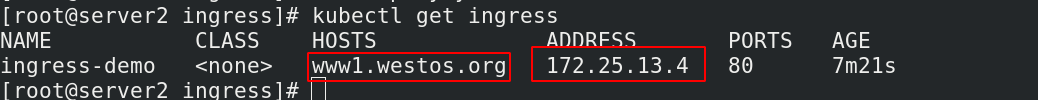

[root@server2 ingress]# kubectl get ingress ## list the Ingress that was just created

NAME           CLASS    HOSTS             ADDRESS   PORTS   AGE
ingress-demo   <none>   www1.westos.org             80      20s

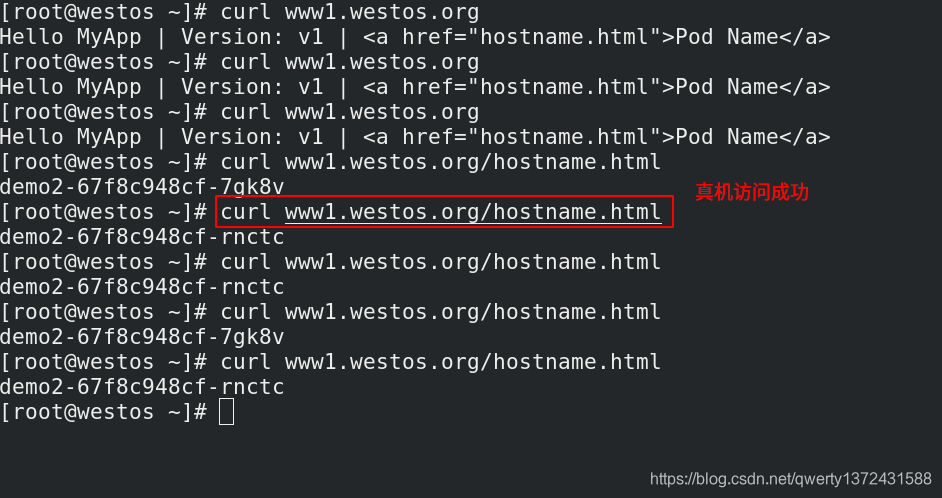

[root@westos ~]# vim /etc/hosts ## on the physical host, add entries so the test hostnames resolve

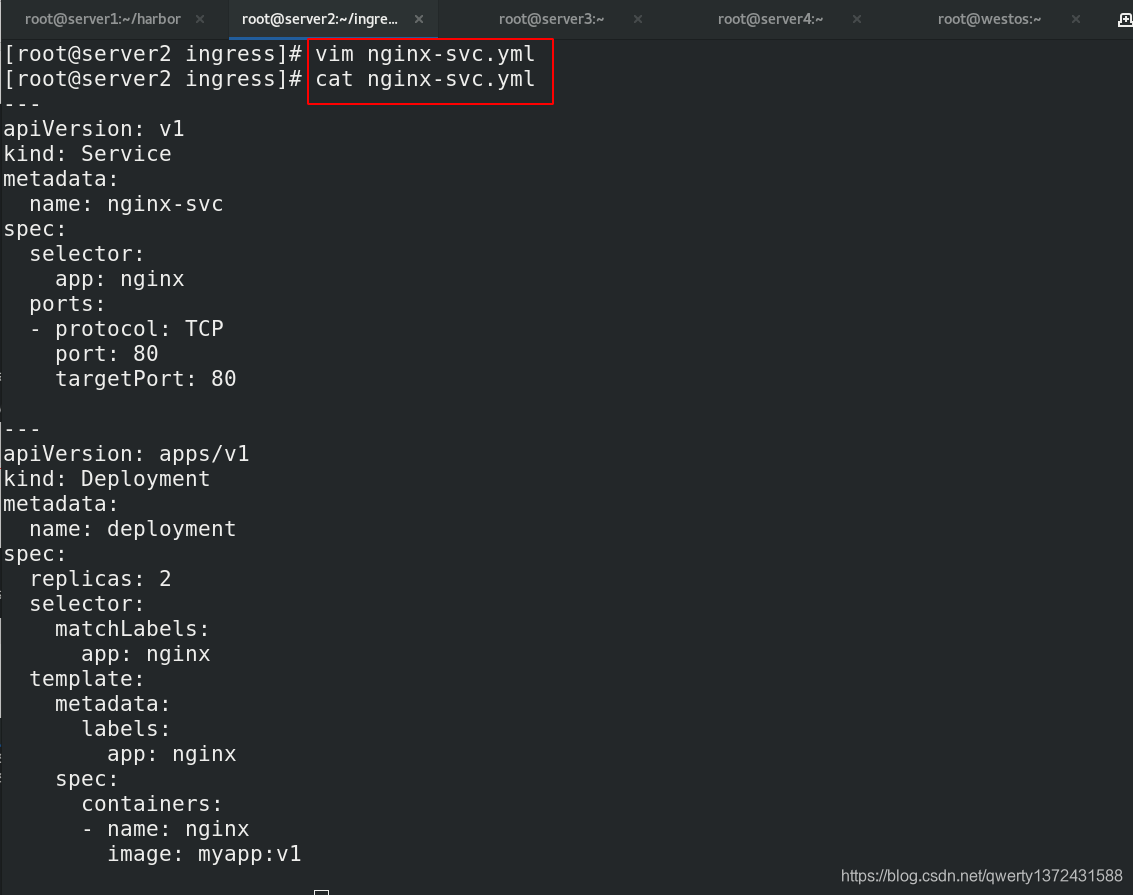

[root@server2 ingress]# cat nginx-svc.yml ## define a second Service so the later experiments can tell the two backends apart

---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: myapp:v1

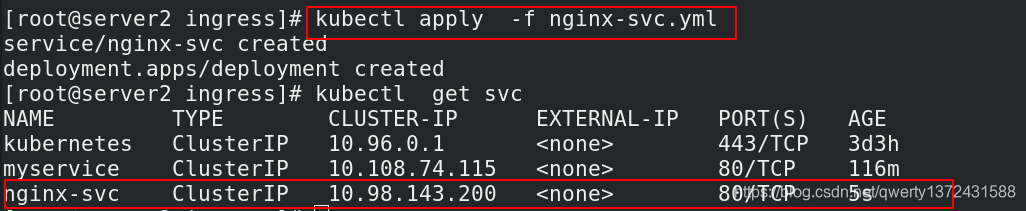

[root@server2 ingress]# kubectl apply -f nginx-svc.yml

[root@server2 ingress]# kubectl get svc ##查看新加的services

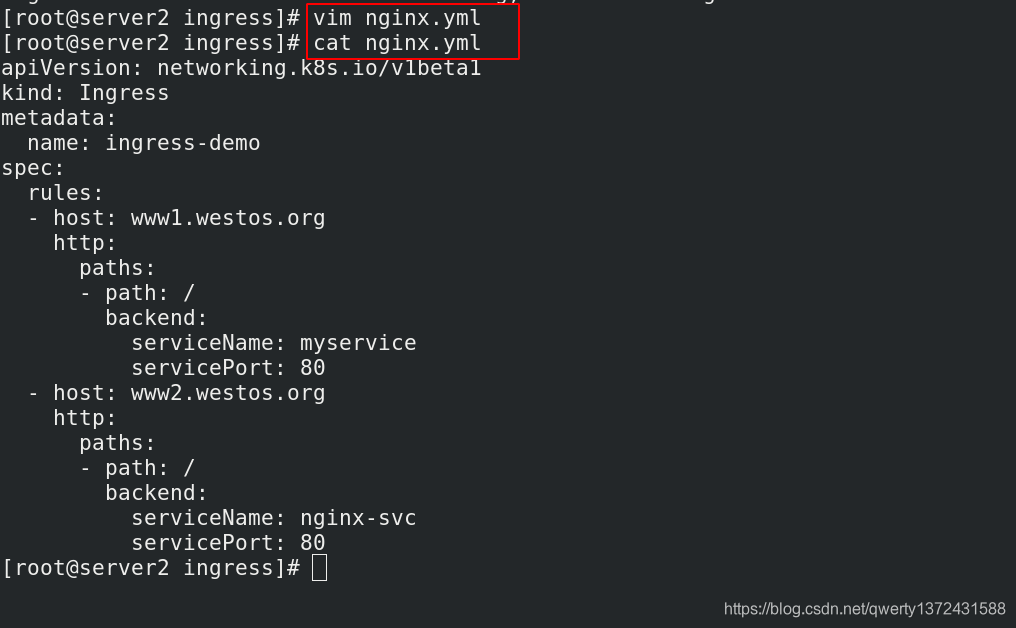

4.2.1.2 两个host

[root@server2 ingress]# vim nginx.yml

[root@server2 ingress]# cat nginx.yml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-demo
spec:
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: myservice
          servicePort: 80
  - host: www2.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: nginx-svc
          servicePort: 80

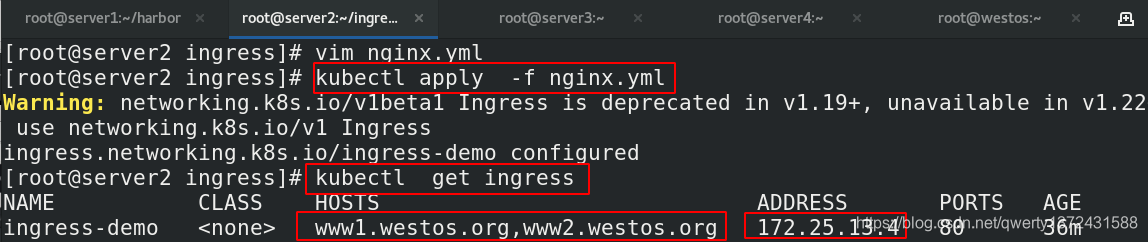

[root@server2 ingress]# kubectl apply -f nginx.yml ##

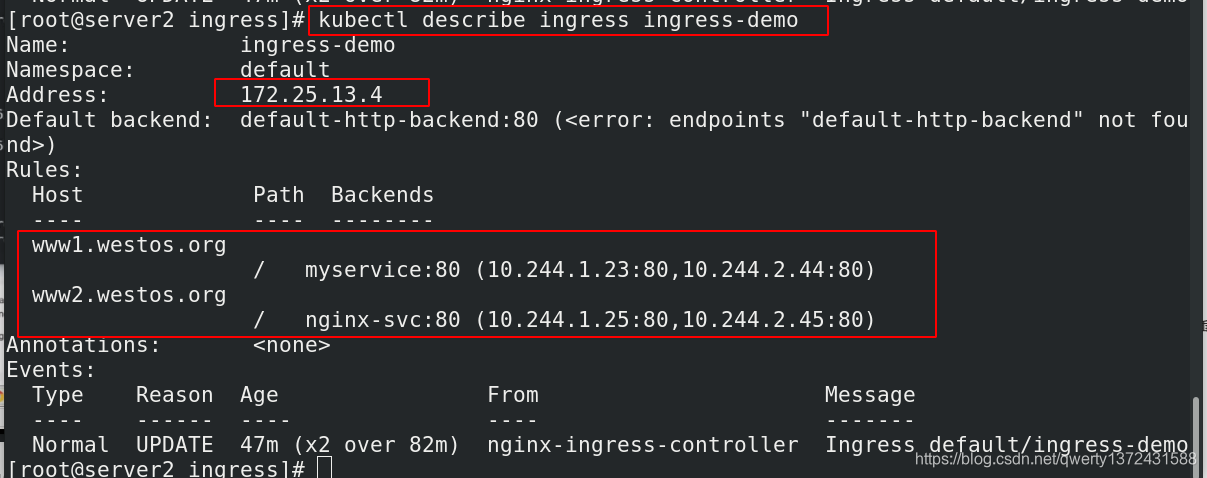

[root@server2 ingress]# kubectl get ingress ##查看ingress
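
The two-host manifest above amounts to a lookup on the request's Host header and path. The following Python sketch is only a simplified model of that lookup, not the controller's actual implementation; the `RULES` table and the `pick_backend` helper are hypothetical names introduced for illustration.

```python
# Simplified model of the two rules in nginx.yml:
# (host, path prefix) -> (service name, service port).
RULES = [
    ("www1.westos.org", "/", ("myservice", 80)),
    ("www2.westos.org", "/", ("nginx-svc", 80)),
]


def pick_backend(host, path, rules=RULES):
    """Return the backend of the longest matching path prefix for host, or None."""
    best = None
    for rule_host, prefix, backend in rules:
        if host == rule_host and path.startswith(prefix):
            # Prefer the most specific (longest) prefix when several match.
            if best is None or len(prefix) > len(best[0]):
                best = (prefix, backend)
    return best[1] if best else None


print(pick_backend("www1.westos.org", "/index.html"))
print(pick_backend("www2.westos.org", "/hostname.html"))
print(pick_backend("www3.westos.org", "/"))  # no rule for this host
```

A request whose host matches no rule returns `None` in this model; in a real cluster such a request is handled by whatever default backend the controller is configured with.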

4.2.2 证书加密

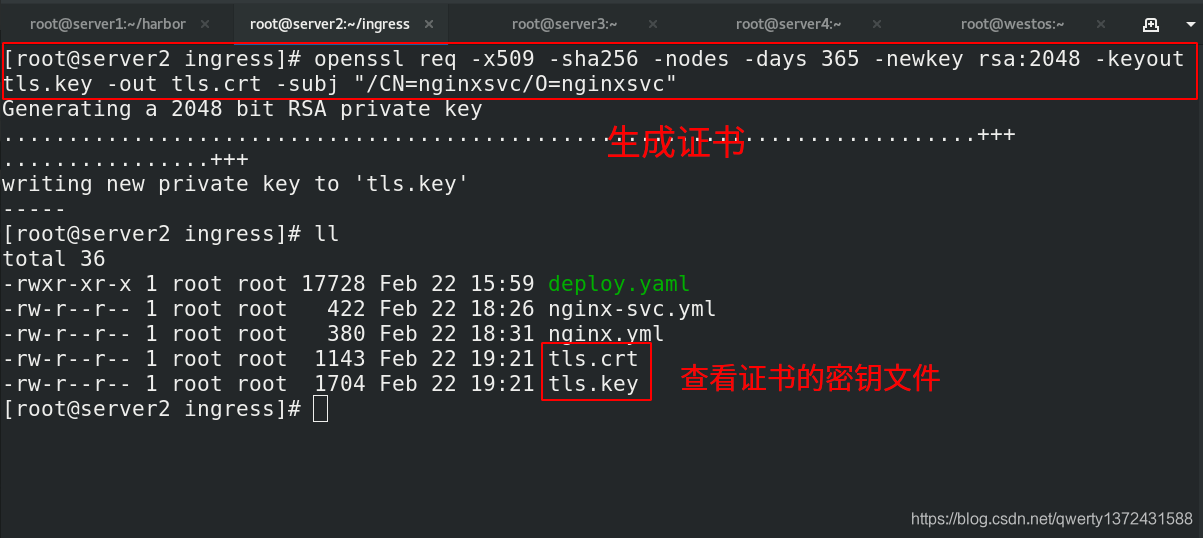

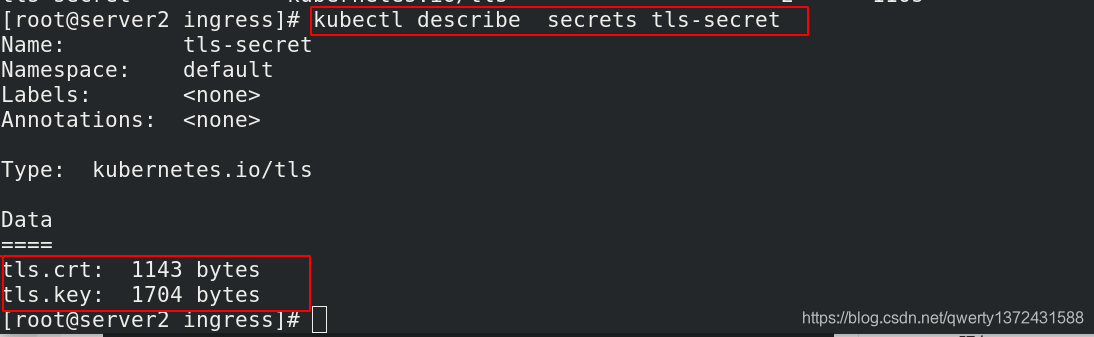

[root@server2 ingress]# openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=nginxsvc/O=nginxsvc" ## generate a self-signed certificate and private key

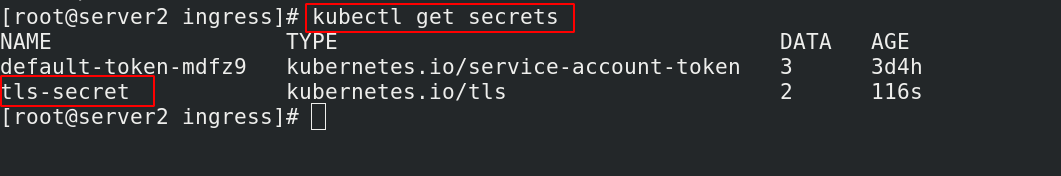

[root@server2 ingress]# kubectl create secret tls tls-secret --key tls.key --cert tls.crt ## store the key and certificate in a TLS Secret named tls-secret

[root@server2 ingress]# kubectl get secrets ## list the Secrets

[root@server2 ingress]# kubectl describe secrets tls-secret ## show the Secret's details

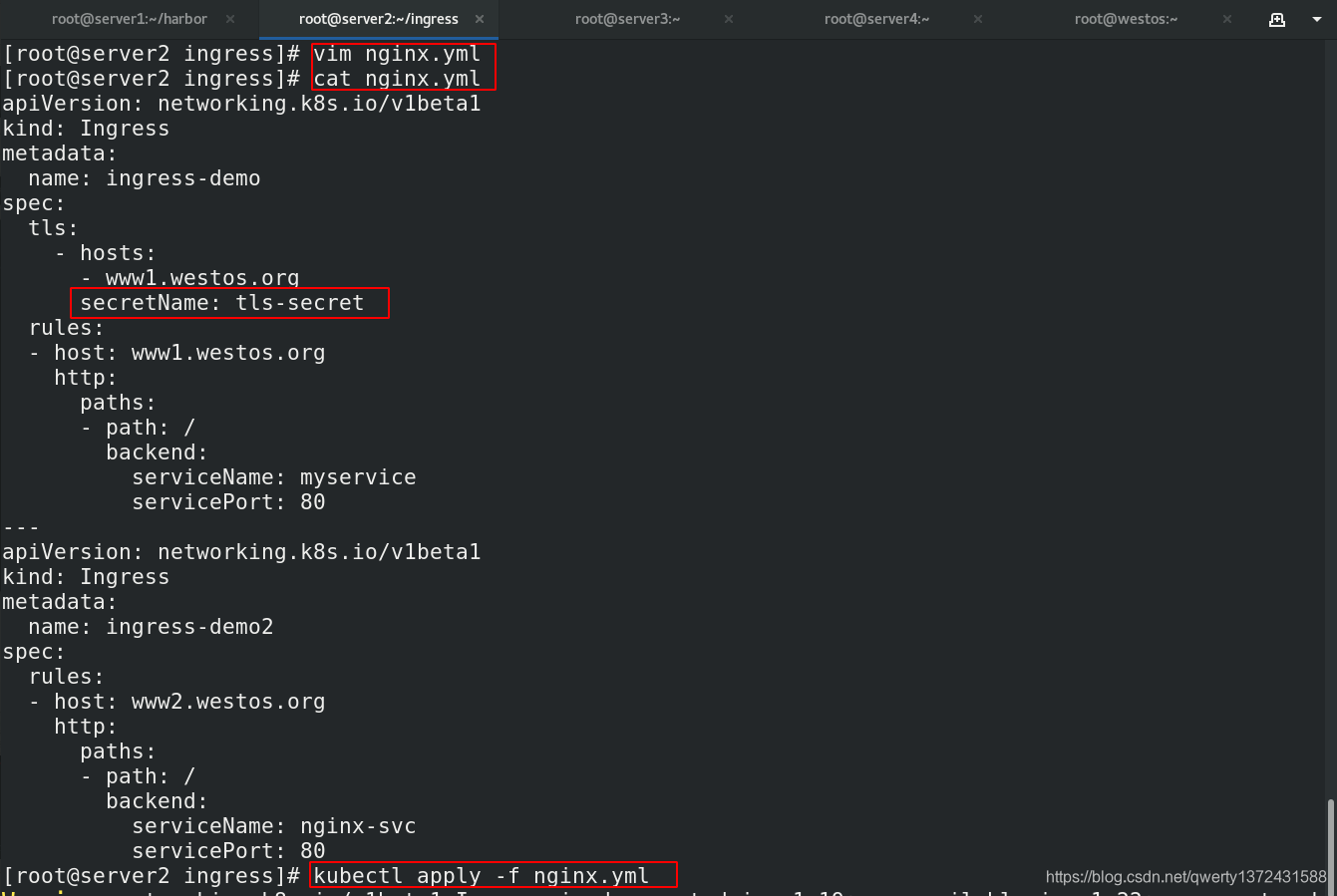

[root@server2 ingress]# vim nginx.yml

[root@server2 ingress]# cat nginx.yml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-demo
spec:
  tls:
  - hosts:
    - www1.westos.org
    secretName: tls-secret ## certificate used for www1.westos.org
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: myservice
          servicePort: 80
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-demo2
spec:
  rules:
  - host: www2.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: nginx-svc
          servicePort: 80

[root@server2 ingress]# kubectl apply -f nginx.yml ##创建

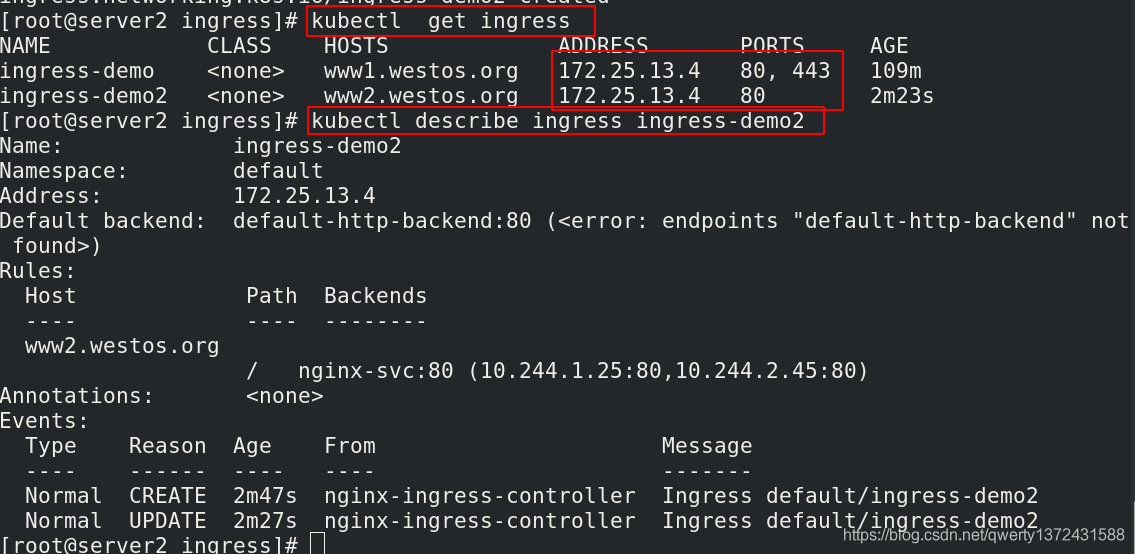

[root@server2 ingress]# kubectl get ingress ##可以发现有了443端口

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-demo <none> www1.westos.org 172.25.13.4 80, 443 109m

ingress-demo2 <none> www2.westos.org 172.25.13.4 80 2m23s

[root@server2 ingress]# kubectl describe ingress ingress-demo2

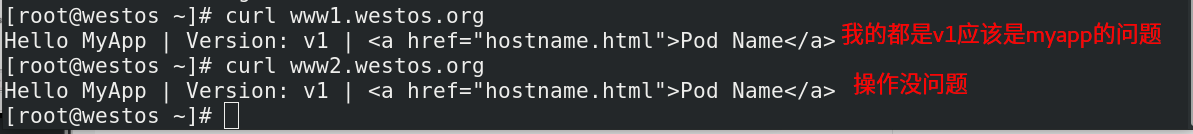

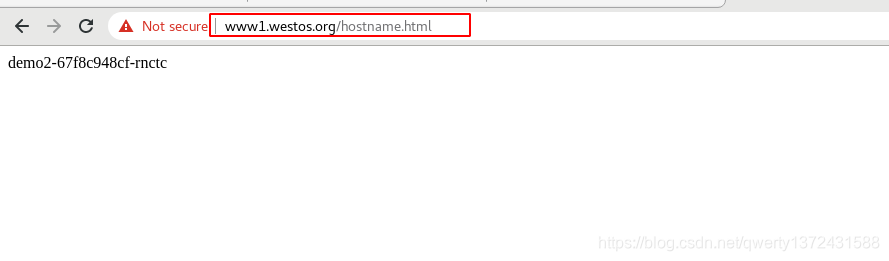

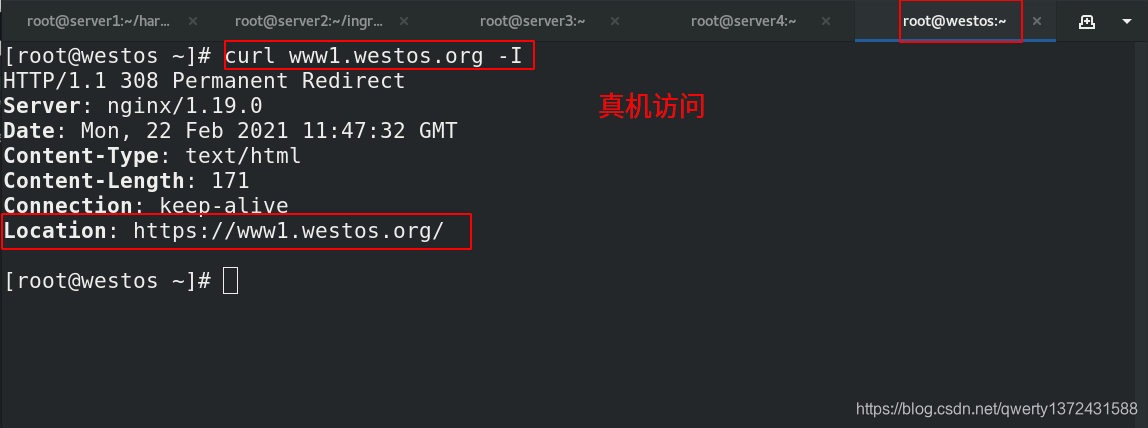

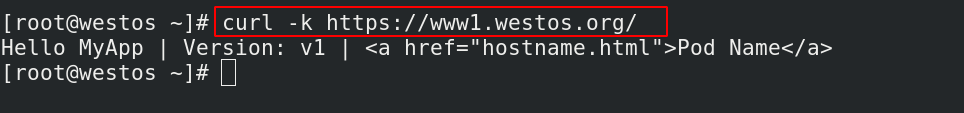

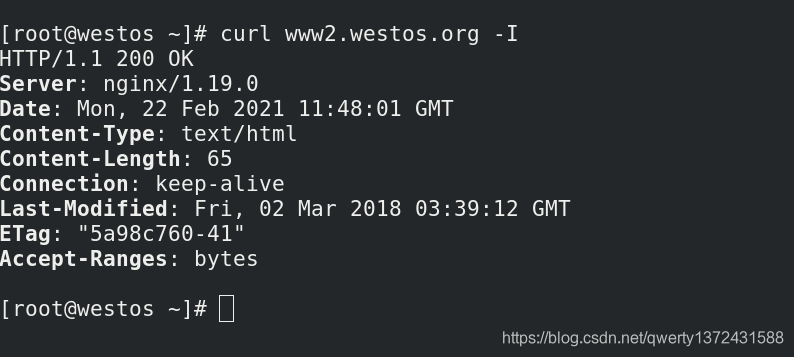

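[root@westos ~]# curl www1.westos.org -I ## request over HTTP from the physical host; the response headers show the redirect

[root@westos ~]# curl -k https://www1.westos.org/ ## request the HTTPS URL directly (-k skips verification of the self-signed certificate)

[root@westos ~]# curl www2.westos.org -I

4.2.3 TLS encryption with user authentication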

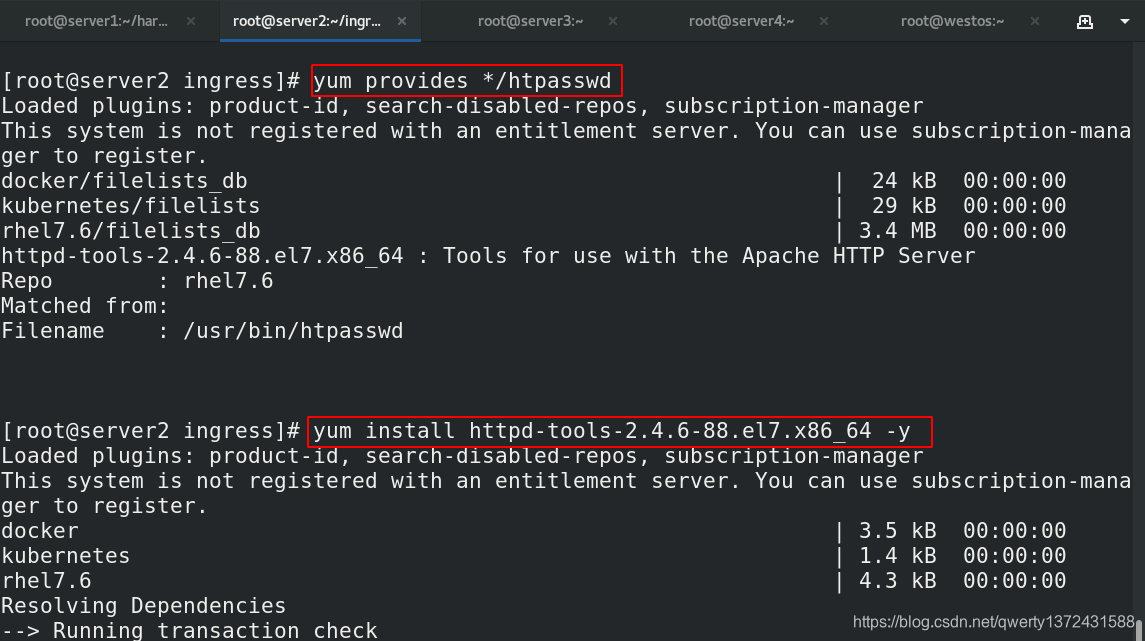

[root@server2 ingress]# yum provides */htpasswd ## find which package provides htpasswd

[root@server2 ingress]# yum install httpd-tools-2.4.6-88.el7.x86_64 -y ## install that package

[root@server2 ingress]# htpasswd -c auth zhy ## create the auth file with the first user

[root@server2 ingress]# htpasswd auth admin ## add a second user (without -c, so the file is not overwritten)

[root@server2 ingress]# cat auth ## show the stored users

zhy:$apr1$Q1LeQyt7$CWuCeqN2fo8/0cvE6nf.A.

admin:$apr1$AetqdllY$QAYkZ7Vc0W304Y3OpsUON.

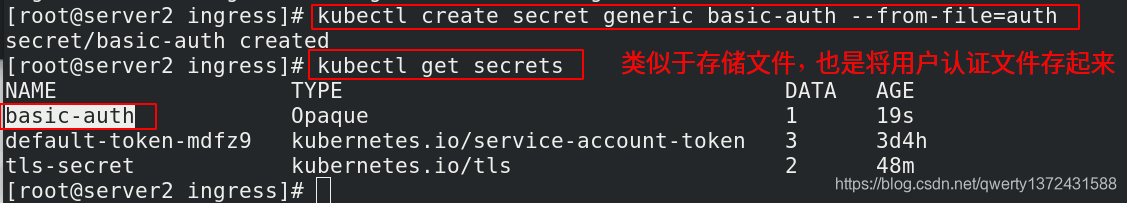

[root@server2 ingress]# kubectl create secret generic basic-auth --from-file=auth ## store the auth file in a Secret

[root@server2 ingress]# kubectl get secrets ## confirm the Secret exists

NAME                  TYPE                                  DATA   AGE
basic-auth            Opaque                                1      19s
default-token-mdfz9   kubernetes.io/service-account-token   3      3d4h
tls-secret            kubernetes.io/tls                     2      48m

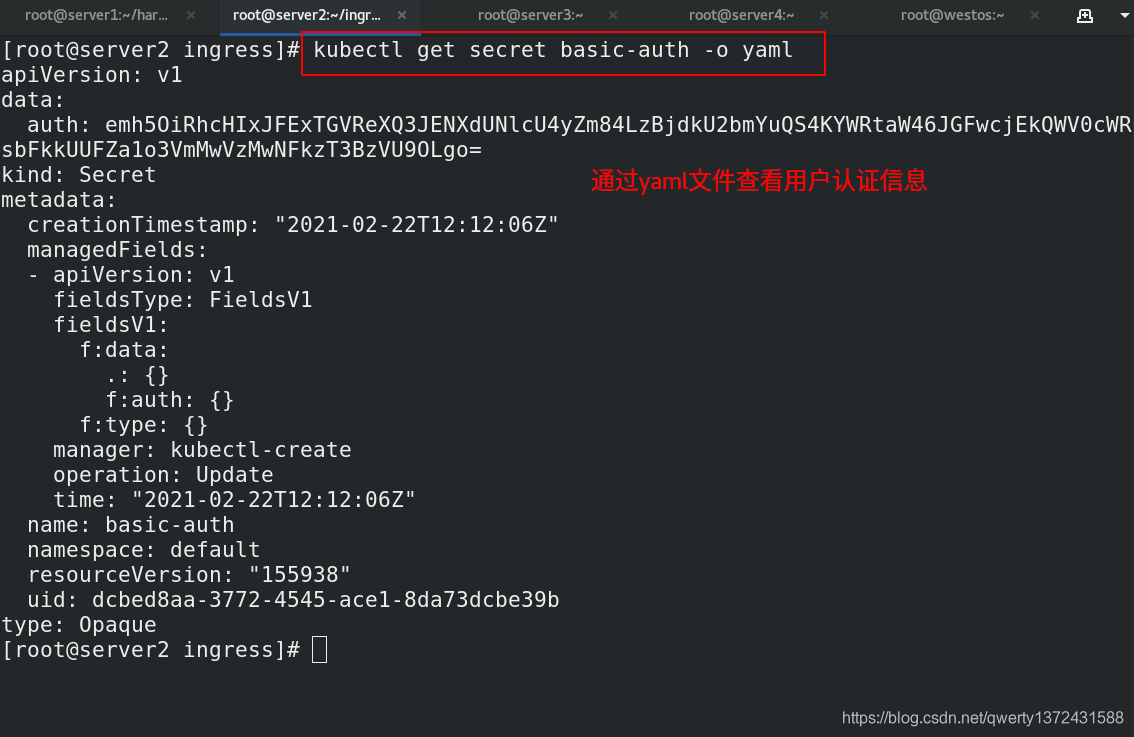

[root@server2 ingress]# kubectl get secret basic-auth -o yaml ## view the stored auth data as YAML
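
In that YAML output the `auth` key under `data` holds the file contents base64-encoded, because Secret values are stored that way. The Python sketch below round-trips the two htpasswd lines shown earlier to illustrate the encoding; the variable names are illustrative only, and the encoded string is computed here rather than copied from a real cluster.

```python
import base64

# The two lines written by htpasswd earlier in this section.
auth_file = (
    "zhy:$apr1$Q1LeQyt7$CWuCeqN2fo8/0cvE6nf.A.\n"
    "admin:$apr1$AetqdllY$QAYkZ7Vc0W304Y3OpsUON.\n"
)

# What ends up under data.auth in the Secret: the file, base64-encoded.
encoded = base64.b64encode(auth_file.encode("utf-8")).decode("ascii")

# Decoding the value recovers the original htpasswd file.
decoded = base64.b64decode(encoded).decode("utf-8")
users = [line.split(":", 1)[0] for line in decoded.splitlines() if line]

print(users)
```

Base64 is an encoding, not encryption, so anyone who can read the Secret can recover the password hashes.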

[root@server2 ingress]# cat nginx.yml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-demo
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - wxh'
spec:
  tls:
  - hosts:
    - www1.westos.org
    secretName: tls-secret
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: myservice
          servicePort: 80
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-demo2
spec:
  rules:
  - host: www2.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: nginx-svc
          servicePort: 80

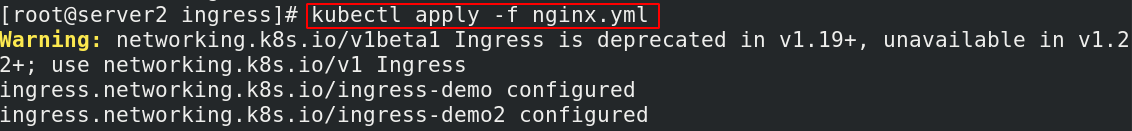

[root@server2 ingress]# kubectl apply -f nginx.yml ##创建

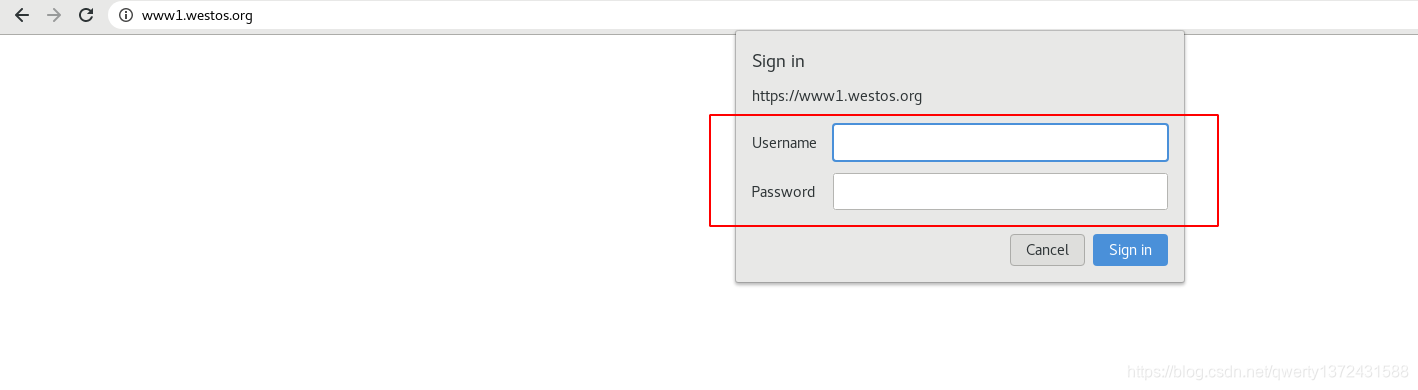

#然后网页访问www1.westos.org发现需要用户认证,登陆即可
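
After the login prompt, the browser sends the credentials in an `Authorization` header using the HTTP Basic scheme. The Python sketch below shows how that header is built; `basic_auth_header` is a hypothetical helper, and the password is a placeholder because the real passwords are not part of this walkthrough.

```python
import base64


def basic_auth_header(user, password):
    """Build the Authorization header for HTTP Basic authentication."""
    # Basic auth is base64("user:password"); it is not encrypted on its own,
    # which is why this section combines it with TLS.
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


# "placeholder-password" is an assumption for illustration only.
headers = basic_auth_header("zhy", "placeholder-password")
print(headers["Authorization"].split(" ", 1)[0])
```

The same check can be made from the command line with `curl -k -u <user> https://www1.westos.org/`, which prompts for the password and sends the equivalent header.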

4.2.4 简单设置重定向

[root@server2 ingress]# vim nginx.yml

[root@server2 ingress]# cat nginx.yml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-demo
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - wxh'
spec:
  tls:
  - hosts:
    - www1.westos.org
    secretName: tls-secret
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: myservice
          servicePort: 80
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-demo2
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /hostname.html ## rewrite matched requests to /hostname.html, so www2.westos.org/ serves that page directly
spec:
  rules:
  - host: www2.westos.org
    http:
      paths:
      - backend:
          serviceName: nginx-svc
          servicePort: 80
        path: / ## the root path being matched

4.2.5 URL rewriting (a more complex redirect)

[root@server2 ingress]# vim nginx.yml

[root@server2 ingress]# cat nginx.yml

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: ingress-demo

annotations:

nginx.ingress.kubernetes.io/auth-type: basic

nginx.ingress.kubernetes.io/auth-secret: basic-auth

nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - wxh'

spec:

tls:

- hosts:

- www1.westos.org

secretName: tls-secret

rules:

- host: www1.westos.org

http:

paths:

- path: /

backend:

serviceName: myservice

servicePort: 80

---

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: ingress-demo2

annotations:

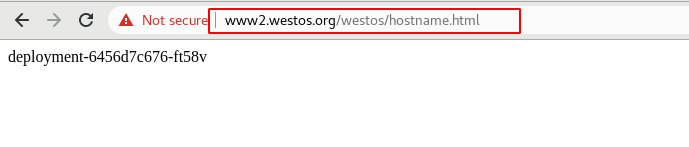

nginx.ingress.kubernetes.io/rewrite-target: /$2 ##定位到$2,指关键字后面的所有内容

spec:

rules:

- host: www2.westos.org

http:

paths:

- backend:

serviceName: nginx-svc

servicePort: 80

path: /westos(/|$)(.*) ##访问必须添加westos路径(即域名后面加westos,然后重新定向到别的路径)这关键字随意。

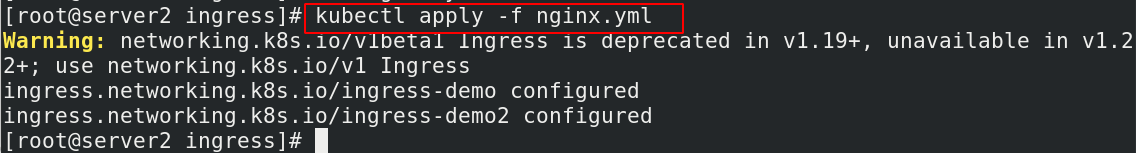

[root@server2 ingress]# kubectl apply -f nginx.yml

[root@server2 ingress]# kubectl describe ingress ingress-demo2 ## check that the rewrite took effect

5. Supplement
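
How the path `/westos(/|$)(.*)` and the target `/$2` fit together can be checked with an ordinary regular expression. The Python sketch below models only the capture-group substitution, under the assumption that the pattern is anchored at the start of the path; `rewrite` is a hypothetical helper and the controller's real matching may differ in details such as case sensitivity.

```python
import re

# Same expression as the Ingress path, anchored at the start of the request path.
PATH_PATTERN = re.compile(r"^/westos(/|$)(.*)")


def rewrite(path):
    """Return the path forwarded to the backend, or None if the rule does not match."""
    match = PATH_PATTERN.match(path)
    if match is None:
        return None
    # rewrite-target is /$2: a slash followed by the second capture group.
    return "/" + match.group(2)


print(rewrite("/westos/hostname.html"))  # /hostname.html
print(rewrite("/westos"))                # /
print(rewrite("/hostname.html"))         # None: the /westos prefix is required
```

The first group `(/|$)` exists only so that `/westos` matches as a whole path segment; a path such as `/westosx` does not match.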