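Staying home to avoid the pneumonia outbreak, I'm continuing with the worked examples. As the previous article showed, although filebeat does an initial filtering of the collected data, what shows up in kibana is still not clear or concise enough. This article tackles the second problem: how does logstash format messages before uploading them to elasticsearch?

As in the previous article, this is mainly a study of the logstash configuration file.

First, here is the official configuration documentation (version 7.5 at the time of writing) for anyone who wants to look things up: https://www.elastic.co/guide/en/logstash/current/configuration.html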

A basic logstash configuration file consists of three sections: input, filter, and output.

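I. input can use many different plugins as its source, such as kafka, beats, redis and so on. The configuration of each plugin is covered in the official documentation:

https://www.elastic.co/guide/en/logstash/current/input-plugins.html

1. A plugin can require that the value of a setting be of a certain type, such as boolean, list, or hash. The following value types are supported: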

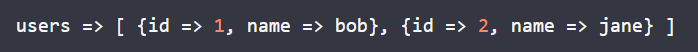

Array:

Lists:

Boolean:

![]()

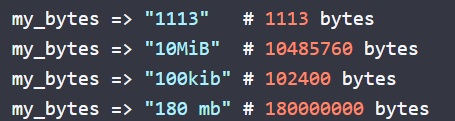

Bytes:

Codec:

![]()

Hash:

Number:

![]()

Password:

![]()

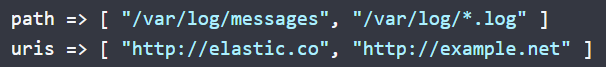

Uri:

![]()

Path:

![]()

String:

![]()

Escape sequences(转义序列):

![]()

2. I use kafka as the input source. The input configuration is as follows:

input {

kafka{

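#Add a unique ID to the plugin configuration. If no ID is specified, Logstash will generate one. It is strongly recommended to set this ID in your configuration. This is particularly useful when you have two or more plugins of the same type, for example two kafka inputs. Adding a named ID in this case helps when monitoring Logstash through the monitoring APIs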

id => "kafka_plugin_id"

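#Add a type field to all events handled by this input. Types are used mainly for filter activation. The type is stored as part of the event itself, so you can also use it to search in Kibana. If you try to set a type on an event that already has one (for example when you send an event from a shipper to an indexer), the new input will not override the existing type. A type set at the shipper stays with that event for its whole life, even when it is sent to another Logstash server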

type => "logstash-kafka-plugin"

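#The id string to pass to the server when making requests. Its purpose is to make it possible to track the source of requests beyond just ip/port by allowing a logical application name to be included. Default: logstash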

client_id => "logstash-kafka"

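#The identifier of the group this consumer belongs to. A consumer group is a single logical subscriber that happens to be made up of multiple processors. Messages in a topic are distributed to all Logstash instances with the same group_id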

group_id => "logstash-kafka"

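#What to do when there is no initial offset in Kafka or an offset is out of range. earliest: automatically reset the offset to the earliest offset. latest: automatically reset the offset to the latest offset. none: throw an exception to the consumer if no previous offset is found for the consumer's group. Anything else: throw an exception to the consumer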

auto_offset_reset => "latest"

#Ideally you should have as many threads as there are partitions for a perfect balance. More threads than partitions means that some threads will sit idle

consumer_threads => 5

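#Option to add Kafka metadata (such as topic and message size) to the event. This adds a kafka entry to the logstash event with attributes such as topic, consumer_group, partition, offset, and key. As the event dump later in this article shows, it is stored under [@metadata][kafka]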

decorate_events => true

#A list of topics to subscribe to. Default: ["logstash"]

topics => ["scm_log"]

#A list of URLs of Kafka instances to use for establishing the initial connection to the cluster. The list should be in the form host1:port1,host2:port2. Default: "localhost:9092"

bootstrap_servers => "127.0.0.1:9092,127.0.0.1:9093,127.0.0.1:9094"

}

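}

The metadata fields available from kafka are: topic, consumer_group, partition, offset, key, and timestamp, stored under [@metadata][kafka].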

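II. filter plugins

Official documentation: https://www.elastic.co/guide/en/logstash/current/filter-plugins.html

The most commonly used ones are:

1. grok (Grok is a great way to parse unstructured log data into something structured and queryable. It is a good fit for syslog logs, apache and other web server logs, and mysql logs)

Official grok documentation: https://www.elastic.co/guide/en/logstash/current/plugins-filters-grok.html

Some predefined patterns: https://github.com/logstash-plugins/logstash-patterns-core/blob/master/patterns/grok-patterns

There is also a fairly good article you can refer to: https://www.jianshu.com/p/d3042a08eb5e

For debugging grok patterns you can use http://grokdebug.herokuapp.com/

You can also use the Grok Debugger built into Kibana to check whether your patterns parse the logs correctly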

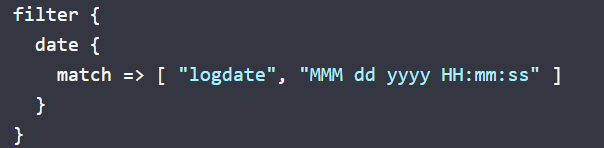
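To make the idea behind grok concrete, here is a minimal Python sketch (an illustration only, not Logstash code) that does the same kind of work with a plain regular expression with named groups. The regex is a hand-written approximation, not the real expansion of any grok pattern, and the function name `parse_line` is made up for this example. The sample line is a shortened version of the nginx error log used later in this article.

```python
import re

# Hand-written approximation of a grok-style expression: each named group
# plays the role of a %{PATTERN:field} capture.
LINE_RE = re.compile(
    r"\[(?P<errortime>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3})\]"
    r"\[(?P<errorlevel>[A-Za-z]+)\]\s+"
    r"(?P<errormsg>.+?), client: (?P<client>\d{1,3}(?:\.\d{1,3}){3})"
)


def parse_line(line):
    """Return a dict of captured fields, or None when the line does not match."""
    m = LINE_RE.search(line)
    return m.groupdict() if m else None


sample = (
    "[2019-11-20 11:26:30.573][ERROR] 26067#0: *17918 connect() failed "
    "(111: Connection refused) while connecting to upstream, "
    "client: 192.168.32.17, server: localhost"
)
fields = parse_line(sample)
print(fields)
```

The unstructured line becomes a dictionary with errortime, errorlevel, errormsg, and client keys, which is the "structured and queryable" result that grok aims for.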

2. date (the date filter parses dates from fields and then uses that date or timestamp as the logstash timestamp for the event.)

Configuration reference: https://www.elastic.co/guide/en/logstash/current/plugins-filters-date.html
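As a rough illustration of what the date filter does with a timestamp like the ones in this article's logs, here is a small Python sketch (not Logstash code). It assumes the log time is local Asia/Shanghai time and models that zone as a fixed UTC+8 offset; the helper name `to_event_timestamp` is made up for this example.

```python
from datetime import datetime, timedelta, timezone

# Assumption: Asia/Shanghai is modelled as a fixed UTC+8 offset (no DST).
SHANGHAI = timezone(timedelta(hours=8))


def to_event_timestamp(text, fmt="%Y-%m-%d %H:%M:%S.%f"):
    """Parse a local log time and return it as a UTC ISO 8601 string."""
    local = datetime.strptime(text, fmt).replace(tzinfo=SHANGHAI)
    utc = local.astimezone(timezone.utc)
    # Keep millisecond precision, similar to the @timestamp field.
    return utc.strftime("%Y-%m-%dT%H:%M:%S.") + f"{utc.microsecond // 1000:03d}Z"


print(to_event_timestamp("2019-11-20 20:25:22.573"))
```

The point is that the event timestamp then reflects when the log line was written, not when logstash happened to process it.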

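3. mutate (the mutate filter lets you perform general mutations on fields. You can rename, remove, replace, and modify fields in your events)

Configuration reference: https://www.elastic.co/guide/en/logstash/current/plugins-filters-mutate.html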
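The kind of field surgery mutate performs can be sketched in a few lines of Python (an illustration only, not Logstash code). The function name `mutate`, its parameters, and the `host` value are made up for this example, and the processing order used here (rename, then gsub, then remove) is a simplification.

```python
import re


def mutate(event, rename=None, remove_field=(), gsub=()):
    """Return a modified copy of event: rename, then gsub, then remove fields."""
    out = dict(event)
    for old, new in (rename or {}).items():
        if old in out:
            out[new] = out.pop(old)
    for field, pattern, replacement in gsub:
        # Only string fields can be rewritten with a regular expression.
        if isinstance(out.get(field), str):
            out[field] = re.sub(pattern, replacement, out[field])
    for field in remove_field:
        out.pop(field, None)
    return out


event = {
    "errormsg": "ORA-00920: invalid relational operator\n\n\t",
    "@version": "1",
    "host": "demo",  # hypothetical value, for illustration only
}
result = mutate(
    event,
    rename={"host": "hostname"},
    remove_field=["@version"],
    gsub=[("errormsg", r"\n\n\t", "")],
)
print(result)
```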

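III. output plugins

Now that we have a rough understanding of the logstash configuration file, let's get hands-on and rework our own configuration.

1. Strip out the useless information. Below is what filebeat sends to logstash. We will drop everything we don't need and keep only message.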

{

"@timestamp" => 2020-02-12T03:28:52.300Z,

"@metadata" => {

"kafka" => {

"timestamp" => 1581478130397,

"topic" => "scm_log",

"consumer_group" => "logstash-kafka",

"key" => nil,

"partition" => 0,

"offset" => 15908

}

},

"@version" => "1",

"message" => "{\"@timestamp\":\"2020-02-12T03:28:50.397Z\",\"@metadata\":{\"beat\":\"filebeat\",\"type\":\"_doc\",\"version\":\"7.5.2\"},\"fields\":{\"data-resource\":\"scm\"},\"ecs\":{\"version\":\"1.1.0\"},\"host\":{\"name\":\"DESKTOP-3IOIJPC\",\"id\":\"18552622-f5bf-4e96-9148-53d85b5a5b13\",\"hostname\":\"DESKTOP-3IOIJPC\",\"architecture\":\"x86_64\",\"os\":{\"name\":\"Windows 10 Home China\",\"kernel\":\"10.0.18362.592 (WinBuild.160101.0800)\",\"build\":\"18362.592\",\"platform\":\"windows\",\"version\":\"10.0\",\"family\":\"windows\"}},\"agent\":{\"type\":\"filebeat\",\"ephemeral_id\":\"f5d7437d-da3e-4bce-9a27-820335f5feb8\",\"hostname\":\"DESKTOP-3IOIJPC\",\"id\":\"21483606-ba2b-4d2b-878b-242aa321e300\",\"version\":\"7.5.2\"},\"log\":{\"offset\":0,\"file\":{\"path\":\"D:\\\\localinstall\\\\ELK\\\\data-log\\\\test.out\"}},\"message\":\"[2019-11-20 11:26:30.573][ERROR] 26067#0: *17918 connect() failed (111: Connection refused) while connecting to upstream, client: 192.168.32.17, server: localhost, request: \\\"GET /wss/ HTTP/1.1\\\", upstream: \\\"http://192.168.12.106:8010/\\\", host: \\\"192.168.12.106\\\"\",\"input\":{\"type\":\"log\"}}",

"type" => "logstash-kafka-plugin"

}
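Note that the message field in the event above is itself a JSON string produced by filebeat, so the original log line sits one level deeper. The following Python sketch (an illustration, not Logstash code) shows the idea of parsing that string and merging its keys into the event, which is roughly what a json filter with source => "message" does. The shortened payload and the helper name `expand_json` are made up for this example.

```python
import json

# Shortened stand-in for the event above: "message" holds a JSON string
# from filebeat, which in turn carries the original log line.
event = {
    "type": "logstash-kafka-plugin",
    "message": json.dumps({
        "@timestamp": "2020-02-12T03:28:50.397Z",
        "fields": {"data-resource": "scm"},
        "message": "[2019-11-20 11:26:30.573][ERROR] 26067#0: *17918 connect() failed",
        "input": {"type": "log"},
    }),
}


def expand_json(event, source="message"):
    """Parse event[source] as JSON and merge its keys into a copy of the event."""
    out = dict(event)
    out.update(json.loads(event[source]))
    return out


expanded = expand_json(event)
print(expanded["message"])
print(expanded["fields"]["data-resource"])
```

After the merge, the inner message (the raw log line) replaces the outer JSON string, which is why later filters can work on the log line directly.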

2. In filebeat.yml you can specify which fields to drop

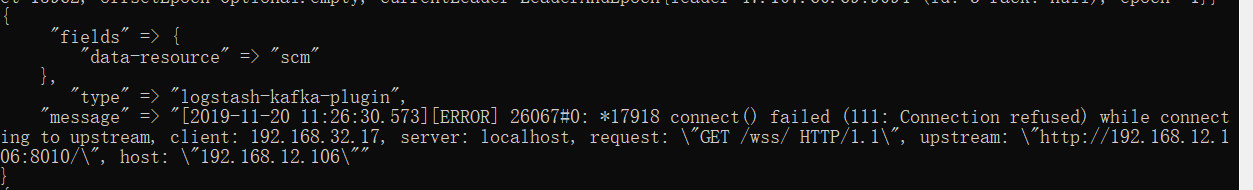

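3. Check the result: the message logstash receives no longer contains the fields dropped above. Note that, according to the official documentation, @timestamp and @metadata cannot be dropped here; we can remove them later in logstash.

4. Remove timestamp in logstash. The figure below shows the configuration, with pointers to which data each parameter removes.

Note: there is one mistake in the figure above: "tags" => [ [0] "_grokparsefailure" ]. This appeared only because my configuration file contained a grok{} block, which reported a grok parse failure. After removing it, tags disappeared.

5. Look at the logstash output: it is already very concise.

6. The log above was a fairly simple one. We also have more complex ones. The complete log looks like this: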

[2019-11-20 11:26:30.573][ERROR] 26067#0: *17918 connect() failed (111: Connection refused) while connecting to upstream, client: 192.168.32.17, server: localhost, request: "GET /wss/ HTTP/1.1", upstream: "http://192.168.12.106:8010/", host: "192.168.12.106" [2019-11-20 11:26:30.573][info] 26067#0: [2019-11-20 12:05:10.123][error] 26067#0: *17922 open() "/data/programs/nginx/html/ws" failed (2: No such file or directory), client: 192.168.32.17, server: localhost, request: "GET /ws HTTP/1.1", host: "192.168.12.106" [2019-11-20 20:25:22.573][DubboServerHandler-192.168.0.5:20904-thread-3][ERROR][?][]:{conn-10005, pstmt-20003} execute error. select count(1) from ( select t.RETAIL_INVOICE_ID as retailInvoiceId, t.RETAIL_INVOICE_CODE as retailInvoiceCode, t.BILL_CODE as billCode, t.CUSTOMER_ID as customerId, t.CURRENCY as currency , t.SALE_CHANNEL as saleChannel, t.REMARK as remark, t.COMPANY_ID as companyId, t.CREATED_BY as createdBy, t.CREATED_DATE as createdDate , t.MODIFIED_BY as modifiedBy, t.MODIFIED_DATE as modifiedDate, t.RD_STATUS as rdStatus, t.DATA_ID as dataId, t.COMPANY_NAME as companyName , case T.DATA_FROM when 1 then 'TMS' when 2 then '接口' when 3 then '手动导入' else null end as dataFromName where T.RD_STATUS = 1 and TSP.BL_SCM = 1 and t.DATE_INVOICE >= to_date('2019-11-13 00:00:00', 'yyyy-mm-dd hh24:mi:ss') and t.DATE_INVOICE <= to_date('2019-11-20 23:59:59', 'yyyy-mm-dd hh24:mi:ss') order by T.CREATED_DATE desc ) TOTAL java.sql.SQLSyntaxErrorException: ORA-00920: invalid relational operator at oracle.jdbc.driver.SQLStateMapping.newSQLException(SQLStateMapping.java:91) ~[mybatis-generator-core-1.3.2-ztd.jar:?] at oracle.jdbc.driver.DatabaseError.newSQLException(DatabaseError.java:133) ~[mybatis-generator-core-1.3.2-ztd.jar:?] at oracle.jdbc.driver.DatabaseError.throwSqlException(DatabaseError.java:206) ~[mybatis-generator-core-1.3.2-ztd.jar:?] at oracle.jdbc.driver.T4CTTIoer.processError(T4CTTIoer.java:455) ~[mybatis-generator-core-1.3.2-ztd.jar:?] 
at oracle.jdbc.driver.T4CTTIoer.processError(T4CTTIoer.java:413) ~[mybatis-generator-core-1.3.2-ztd.jar:?] at oracle.jdbc.driver.T4C8Oall.receive(T4C8Oall.java:1034) ~[mybatis-generator-core-1.3.2-ztd.jar:?] at oracle.jdbc.driver.T4CPreparedStatement.doOall8(T4CPreparedStatement.java:194) ~[mybatis-generator-core-1.3.2-ztd.jar:?] at oracle.jdbc.driver.T4CPreparedStatement.executeForDescribe(T4CPreparedStatement.java:791) ~[mybatis-generator-core-1.3.2-ztd.jar:?] at oracle.jdbc.driver.T4CPreparedStatement.executeMaybeDescribe(T4CPreparedStatement.java:866) ~[mybatis-generator-core-1.3.2-ztd.jar:?] at oracle.jdbc.driver.OracleStatement.doExecuteWithTimeout(OracleStatement.java:1186) ~[mybatis-generator-core-1.3.2-ztd.jar:?] at oracle.jdbc.driver.OraclePreparedStatement.executeInternal(OraclePreparedStatement.java:3387) ~[mybatis-generator-core-1.3.2-ztd.jar:?] at oracle.jdbc.driver.OraclePreparedStatement.executeQuery(OraclePreparedStatement.java:3431) ~[mybatis-generator-core-1.3.2-ztd.jar:?] at oracle.jdbc.driver.OraclePreparedStatementWrapper.executeQuery(OraclePreparedStatementWrapper.java:1491) ~[mybatis-generator-core-1.3.2-ztd.jar:?] 
at com.alibaba.druid.filter.FilterChainImpl.preparedStatement_executeQuery(FilterChainImpl.java:2830) ~[druid-1.1.5.jar:1.1.5] at com.alibaba.druid.filter.FilterEventAdapter.preparedStatement_executeQuery(FilterEventAdapter.java:465) ~[druid-1.1.5.jar:1.1.5] at com.alibaba.druid.filter.FilterChainImpl.preparedStatement_executeQuery(FilterChainImpl.java:2827) ~[druid-1.1.5.jar:1.1.5] at com.alibaba.druid.filter.FilterEventAdapter.preparedStatement_executeQuery(FilterEventAdapter.java:465) ~[druid-1.1.5.jar:1.1.5] at com.alibaba.druid.filter.FilterChainImpl.preparedStatement_executeQuery(FilterChainImpl.java:2827) ~[druid-1.1.5.jar:1.1.5] at com.alibaba.druid.proxy.jdbc.PreparedStatementProxyImpl.executeQuery(PreparedStatementProxyImpl.java:181) ~[druid-1.1.5.jar:1.1.5] at com.alibaba.druid.pool.DruidPooledPreparedStatement.executeQuery(DruidPooledPreparedStatement.java:228) ~[druid-1.1.5.jar:1.1.5] at com.baomidou.mybatisplus.plugins.PaginationInterceptor.count(PaginationInterceptor.java:189) ~[mybatis-plus-2.0.2.jar:?] at com.baomidou.mybatisplus.plugins.PaginationInterceptor.intercept(PaginationInterceptor.java:156) ~[mybatis-plus-2.0.2.jar:?] at org.apache.ibatis.plugin.Plugin.invoke(Plugin.java:61) ~[mybatis-3.4.2.jar:3.4.2] at com.sun.proxy.$Proxy184.query(Unknown Source) ~[?:?] at org.apache.ibatis.session.defaults.DefaultSqlSession.selectList(DefaultSqlSession.java:148) ~[mybatis-3.4.2.jar:3.4.2] at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_171] at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_171] at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_171] at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_171] at org.mybatis.spring.SqlSessionTemplate$SqlSessionInterceptor.invoke(SqlSessionTemplate.java:433) ~[mybatis-spring-1.3.1.jar:1.3.1] at com.sun.proxy.$Proxy57.selectList(Unknown Source) ~[?:?] 
at org.mybatis.spring.SqlSessionTemplate.selectList(SqlSessionTemplate.java:238) ~[mybatis-spring-1.3.1.jar:1.3.1] at org.apache.ibatis.binding.MapperMethod.executeForMany(MapperMethod.java:135) ~[mybatis-3.4.2.jar:3.4.2] at org.apache.ibatis.binding.MapperMethod.execute(MapperMethod.java:75) ~[mybatis-3.4.2.jar:3.4.2] at org.apache.ibatis.binding.MapperProxy.invoke(MapperProxy.java:59) ~[mybatis-3.4.2.jar:3.4.2] at com.sun.proxy.$Proxy131.findPage(Unknown Source) ~[?:?] at com.zhitengda.dolphin.modular.service.impl.invoice.retailinvoice.TabRetailInvoiceServiceImpl.findPage(TabRetailInvoiceServiceImpl.java:83) ~[classes/:?] at com.zhitengda.dolphin.modular.service.impl.invoice.retailinvoice.TabRetailInvoiceServiceImpl$$FastClassBySpringCGLIB$$2020b273.invoke(<generated>) ~[classes/:?] at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:204) ~[spring-core-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.invokeJoinpoint(CglibAopProxy.java:738) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:157) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.adapter.MethodBeforeAdviceInterceptor.invoke(MethodBeforeAdviceInterceptor.java:52) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:179) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.aspectj.AspectJAfterAdvice.invoke(AspectJAfterAdvice.java:47) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:179) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.aspectj.AspectJAfterThrowingAdvice.invoke(AspectJAfterThrowingAdvice.java:62) 
~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:179) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.adapter.MethodBeforeAdviceInterceptor.invoke(MethodBeforeAdviceInterceptor.java:52) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:179) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.aspectj.AspectJAfterAdvice.invoke(AspectJAfterAdvice.java:47) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:179) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.transaction.interceptor.TransactionInterceptor$1.proceedWithInvocation(TransactionInterceptor.java:99) ~[spring-tx-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.transaction.interceptor.TransactionAspectSupport.invokeWithinTransaction(TransactionAspectSupport.java:282) ~[spring-tx-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.transaction.interceptor.TransactionInterceptor.invoke(TransactionInterceptor.java:96) ~[spring-tx-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:179) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.interceptor.ExposeInvocationInterceptor.invoke(ExposeInvocationInterceptor.java:92) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:179) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:673) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at 
com.zhitengda.dolphin.modular.service.impl.invoice.retailinvoice.TabRetailInvoiceServiceImpl$$EnhancerBySpringCGLIB$$7183f17b.findPage(<generated>) ~[classes/:?] at com.zhitengda.dolphin.modular.service.impl.invoice.retailinvoice.TabRetailInvoiceServiceImpl$$FastClassBySpringCGLIB$$2020b273.invoke(<generated>) ~[classes/:?] at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:204) ~[spring-core-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.invokeJoinpoint(CglibAopProxy.java:738) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:157) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.transaction.interceptor.TransactionInterceptor$1.proceedWithInvocation(TransactionInterceptor.java:99) ~[spring-tx-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.transaction.interceptor.TransactionAspectSupport.invokeWithinTransaction(TransactionAspectSupport.java:282) ~[spring-tx-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.transaction.interceptor.TransactionInterceptor.invoke(TransactionInterceptor.java:96) ~[spring-tx-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:179) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:673) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at com.zhitengda.dolphin.modular.service.impl.invoice.retailinvoice.TabRetailInvoiceServiceImpl$$EnhancerBySpringCGLIB$$62d14ac0.findPage(<generated>) ~[classes/:?] at com.zhitengda.dolphin.modular.service.impl.invoice.retailinvoice.TabRetailInvoiceServiceImpl$$FastClassBySpringCGLIB$$2020b273.invoke(<generated>) ~[classes/:?] 
at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:204) ~[spring-core-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.invokeJoinpoint(CglibAopProxy.java:738) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:157) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.transaction.interceptor.TransactionInterceptor$1.proceedWithInvocation(TransactionInterceptor.java:99) ~[spring-tx-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.transaction.interceptor.TransactionAspectSupport.invokeWithinTransaction(TransactionAspectSupport.java:282) ~[spring-tx-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.transaction.interceptor.TransactionInterceptor.invoke(TransactionInterceptor.java:96) ~[spring-tx-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:179) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:673) ~[spring-aop-4.3.16.RELEASE.jar:4.3.16.RELEASE] at com.zhitengda.dolphin.modular.service.impl.invoice.retailinvoice.TabRetailInvoiceServiceImpl$$EnhancerBySpringCGLIB$$62d14ac0.findPage(<generated>) ~[classes/:?] 
at com.alibaba.dubbo.common.bytecode.Wrapper21.invokeMethod(Wrapper21.java) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.proxy.javassist.JavassistProxyFactory$1.doInvoke(JavassistProxyFactory.java:46) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.proxy.AbstractProxyInvoker.invoke(AbstractProxyInvoker.java:72) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.protocol.InvokerWrapper.invoke(InvokerWrapper.java:53) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.filter.ExceptionFilter.invoke(ExceptionFilter.java:64) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:69) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.monitor.support.MonitorFilter.invoke(MonitorFilter.java:75) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:69) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.filter.TimeoutFilter.invoke(TimeoutFilter.java:42) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:69) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.protocol.dubbo.filter.TraceFilter.invoke(TraceFilter.java:78) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:69) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.filter.ContextFilter.invoke(ContextFilter.java:61) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:69) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.filter.GenericFilter.invoke(GenericFilter.java:132) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:69) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.filter.ClassLoaderFilter.invoke(ClassLoaderFilter.java:38) ~[dubbo-2.5.7.jar:2.5.7] at 
com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:69) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.filter.EchoFilter.invoke(EchoFilter.java:38) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:69) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.rpc.protocol.dubbo.DubboProtocol$1.reply(DubboProtocol.java:98) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.remoting.exchange.support.header.HeaderExchangeHandler.handleRequest(HeaderExchangeHandler.java:98) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.remoting.exchange.support.header.HeaderExchangeHandler.received(HeaderExchangeHandler.java:170) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.remoting.transport.DecodeHandler.received(DecodeHandler.java:52) ~[dubbo-2.5.7.jar:2.5.7] at com.alibaba.dubbo.remoting.transport.dispatcher.ChannelEventRunnable.run(ChannelEventRunnable.java:81) ~[dubbo-2.5.7.jar:2.5.7] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_171] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_171] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_171]

7. Let's first parse the third error block above. The result I expect logstash to send to elasticsearch is:

errortime:[2019-11-20 20:25:22.573]

errorlevel:ERROR

errortype:java.sql.SQLSyntaxErrorException: ORA-00920: invalid relational operator

errorsql:select * ........

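errorclass:com.zhitengda.dolphin.modular.service.impl.invoice.retailinvoice.TabRetailInvoiceServiceImpl.findPage(TabRetailInvoiceServiceImpl.java:83) ~[classes/:?]

After a good while of experimenting, I got the patterns for the first, second, and third kinds of error working, debugging with kibana's grok debugger the whole time. First, a look at the result:

Then the result in kibana:

And expanded:

8. As you can see, we have basically achieved the goal. The configuration uses grok, mutate, json and other plugins to process the data. Here it is: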

filter {

json{

source => "message"

#Remove the @metadata entry found in the message JSON string

remove_field => ["@metadata"]

}

date{

match => ["timestamp","yyyy-MM-dd HH:mm:ss,SSS","ISO8601"]

locale => "en"

target => "@timestamp"

timezone => "Asia/Shanghai"

}

grok {

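#Multiple patterns: if the log contains several kinds of entries, you can list several patterns. They are tried from top to bottom until one matches. If none matches, parsing fails and the event is tagged "_grokparsefailure"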

match => {

"message" => [

#Pattern for the first and second kinds of error

"%{TIMESTAMP_ISO8601:errortime}(.+)\]\[%{LOGLEVEL:errorlevel}\]%{SPACE}(?<errormsg>(.+)), client:%{SPACE}%{IP:client}(.+)request:%{SPACE}%{QS:errormethod}",

#Pattern for the third kind of error. "(?m)" matches across multiple lines. Since filebeat has already merged the multiline entries, it is optional here

"(?m)%{TIMESTAMP_ISO8601:errortime}(.+)\]\[%{LOGLEVEL:errorlevel}(.+)(?<errormsg>ORA\-[0-9]+:(.+)\n\n\t)(.+)%{SPACE}at (?<errorclass>(com.zhitengda.%{JAVACLASS}))\.%{JAVAMETHOD:errormethod}\(%{JAVAFILE:errorfile}(?::%{NUMBER:errorline})?\)"

]

}

remove_field => ["message"]

}

mutate{

#Remove the @metadata and @version fields from the root of the event

remove_field =>["@metadata","@version"]

gsub =>["errormsg","\n\n\t",""]

}

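}

Writing the patterns is a bit of a hassle at first and takes some patience, but it goes quickly once you are familiar with them.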
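The "try several patterns from top to bottom" behaviour of the grok block above can be sketched in Python as follows (an illustration only, not Logstash code). The two regular expressions are simplified, hand-written stand-ins rather than translations of the real grok expressions, the function name `grok_like` is made up, and the Java sample is a shortened, hypothetical variant of the third error block.

```python
import re

# Simplified, hand-written stand-ins for the two grok expressions.
PATTERNS = [
    # nginx-style line: [time][LEVEL] message, client: ip
    re.compile(
        r"\[(?P<errortime>[^\]]+)\]\[(?P<errorlevel>[A-Za-z]+)\]\s+"
        r"(?P<errormsg>.+?), client: (?P<client>[\d.]+)"
    ),
    # Java-style block: [time][thread][LEVEL] ... ORA-xxxxx: text
    re.compile(
        r"\[(?P<errortime>[^\]]+)\]\[[^\]]*\]\[(?P<errorlevel>[A-Za-z]+)\]"
        r".*?(?P<errormsg>ORA-\d+:[^\n]+)",
        re.DOTALL,
    ),
]


def grok_like(message):
    """Try each pattern in order; the first match wins, otherwise tag a failure."""
    for pattern in PATTERNS:
        m = pattern.search(message)
        if m:
            return m.groupdict()
    return {"tags": ["_grokparsefailure"]}


nginx_line = (
    "[2019-11-20 11:26:30.573][ERROR] 26067#0: *17918 connect() failed "
    "(111: Connection refused) while connecting to upstream, "
    "client: 192.168.32.17, server: localhost"
)
java_block = (
    "[2019-11-20 20:25:22.573][DubboServerHandler-192.168.0.5:20904-thread-3]"
    "[ERROR][?][]:{conn-10005, pstmt-20003} execute error. select count(1) from dual\n"
    "java.sql.SQLSyntaxErrorException: ORA-00920: invalid relational operator\n"
    "\tat oracle.jdbc.driver.SQLStateMapping.newSQLException(SQLStateMapping.java:91)"
)

print(grok_like(nginx_line))
print(grok_like(java_block))
print(grok_like("a line that matches nothing"))
```

Ordering matters in this scheme: the more specific pattern should come first, because a later pattern is only consulted when every earlier one has failed.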

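One more thing: when parsing logs in the kibana grok debugger the line break is \r\n, but in logstash I found it is just \n, with no \r. Patterns that I had debugged in kibana kept failing once I put them into the logstash pipeline configuration, and it took me a while to track down the cause.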
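The line-break mismatch is easy to reproduce outside of Logstash. In this small Python sketch (an illustration with made-up sample text), a pattern that hard-codes \r\n matches only the Windows-style text, while writing the line break as \r?\n matches both:

```python
import re

body = "ORA-00920: invalid relational operator"
windows_text = body + "\r\n\tat oracle.jdbc.driver.SQLStateMapping"
unix_text = body + "\n\tat oracle.jdbc.driver.SQLStateMapping"

# Strict: requires a carriage return before the newline.
strict = re.compile(r"(?P<errormsg>ORA-\d+:[^\r\n]+)\r\n\tat ")
# Tolerant: the carriage return is optional.
tolerant = re.compile(r"(?P<errormsg>ORA-\d+:[^\r\n]+)\r?\n\tat ")

print(bool(strict.search(windows_text)), bool(strict.search(unix_text)))
print(bool(tolerant.search(windows_text)), bool(tolerant.search(unix_text)))
```

Writing line breaks in a tolerant form like this is one way to keep a pattern working in both the debugger and the pipeline.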

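OK, that's it for this article for now. After this chapter we basically know how to process our own log data with the plugins logstash supports and end up with exactly what we want.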