Hyperparameter Grid Search

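Because the candidate models are trained and cross-validated independently of one another, the search can be parallelized across a multicore processor, or even across distributed computing resources, to cut the running time.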
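The independence of the candidates can be illustrated with the standard library alone. In the sketch below, `evaluate` is a hypothetical stand-in for "cross-validate one parameter setting" (it is a toy formula, not a real model score), and a thread pool is used only for brevity; scikit-learn's own `n_jobs` mechanism relies on joblib instead.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

# Hypothetical grid with the same shape as a small SVC search.
gammas = [0.01, 0.1, 1.0, 10.0]
Cs = [0.1, 1.0, 10.0]
candidates = [{"gamma": g, "C": c} for g, c in product(gammas, Cs)]


def evaluate(params):
    """Toy stand-in for cross-validating one candidate (not a real score)."""
    return -abs(params["gamma"] - 0.1) - abs(params["C"] - 10.0)


# No candidate depends on another, so all of them can be scored concurrently.
with ThreadPoolExecutor(max_workers=4) as pool:
    scores = list(pool.map(evaluate, candidates))

best_score, best_params = max(zip(scores, candidates), key=lambda pair: pair[0])
print(len(candidates), best_params)
```

Since `pool.map` returns results in submission order, scores can be paired back with their candidates without extra bookkeeping.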

# Import the fetcher for the 20 newsgroups text dataset

from sklearn.datasets import fetch_20newsgroups

import numpy as np

news = fetch_20newsgroups(subset='all')

print(news.DESCR)

.. _20newsgroups_dataset:

The 20 newsgroups text dataset

------------------------------

The 20 newsgroups dataset comprises around 18000 newsgroups posts on

20 topics split in two subsets: one for training (or development)

and the other one for testing (or for performance evaluation). The split

between the train and test set is based upon a messages posted before

and after a specific date.

This module contains two loaders. The first one,

:func:`sklearn.datasets.fetch_20newsgroups`,

returns a list of the raw texts that can be fed to text feature

extractors such as :class:`sklearn.feature_extraction.text.CountVectorizer`

with custom parameters so as to extract feature vectors.

The second one, :func:`sklearn.datasets.fetch_20newsgroups_vectorized`,

returns ready-to-use features, i.e., it is not necessary to use a feature

extractor.

**Data Set Characteristics:**

================= ==========

Classes 20

Samples total 18846

Dimensionality 1

Features text

================= ==========

Usage

~~~~~

The :func:`sklearn.datasets.fetch_20newsgroups` function is a data

fetching / caching functions that downloads the data archive from

the original `20 newsgroups website`_, extracts the archive contents

in the ``~/scikit_learn_data/20news_home`` folder and calls the

:func:`sklearn.datasets.load_files` on either the training or

testing set folder, or both of them::

>>> from sklearn.datasets import fetch_20newsgroups

>>> newsgroups_train = fetch_20newsgroups(subset='train')

>>> from pprint import pprint

>>> pprint(list(newsgroups_train.target_names))

['alt.atheism',

'comp.graphics',

'comp.os.ms-windows.misc',

'comp.sys.ibm.pc.hardware',

'comp.sys.mac.hardware',

'comp.windows.x',

'misc.forsale',

'rec.autos',

'rec.motorcycles',

'rec.sport.baseball',

'rec.sport.hockey',

'sci.crypt',

'sci.electronics',

'sci.med',

'sci.space',

'soc.religion.christian',

'talk.politics.guns',

'talk.politics.mideast',

'talk.politics.misc',

'talk.religion.misc']

The real data lies in the ``filenames`` and ``target`` attributes. The target

attribute is the integer index of the category::

>>> newsgroups_train.filenames.shape

(11314,)

>>> newsgroups_train.target.shape

(11314,)

>>> newsgroups_train.target[:10]

array([ 7, 4, 4, 1, 14, 16, 13, 3, 2, 4])

It is possible to load only a sub-selection of the categories by passing the

list of the categories to load to the

:func:`sklearn.datasets.fetch_20newsgroups` function::

>>> cats = ['alt.atheism', 'sci.space']

>>> newsgroups_train = fetch_20newsgroups(subset='train', categories=cats)

>>> list(newsgroups_train.target_names)

['alt.atheism', 'sci.space']

>>> newsgroups_train.filenames.shape

(1073,)

>>> newsgroups_train.target.shape

(1073,)

>>> newsgroups_train.target[:10]

array([0, 1, 1, 1, 0, 1, 1, 0, 0, 0])

Converting text to vectors

~~~~~~~~~~~~~~~~~~~~~~~~~~

In order to feed predictive or clustering models with the text data,

one first need to turn the text into vectors of numerical values suitable

for statistical analysis. This can be achieved with the utilities of the

``sklearn.feature_extraction.text`` as demonstrated in the following

example that extract `TF-IDF`_ vectors of unigram tokens

from a subset of 20news::

>>> from sklearn.feature_extraction.text import TfidfVectorizer

>>> categories = ['alt.atheism', 'talk.religion.misc',

... 'comp.graphics', 'sci.space']

>>> newsgroups_train = fetch_20newsgroups(subset='train',

... categories=categories)

>>> vectorizer = TfidfVectorizer()

>>> vectors = vectorizer.fit_transform(newsgroups_train.data)

>>> vectors.shape

(2034, 34118)

The extracted TF-IDF vectors are very sparse, with an average of 159 non-zero

components by sample in a more than 30000-dimensional space

(less than .5% non-zero features)::

>>> vectors.nnz / float(vectors.shape[0])

159.01327...

:func:`sklearn.datasets.fetch_20newsgroups_vectorized` is a function which

returns ready-to-use token counts features instead of file names.

.. _`20 newsgroups website`: http://people.csail.mit.edu/jrennie/20Newsgroups/

.. _`TF-IDF`: https://en.wikipedia.org/wiki/Tf-idf

Filtering text for more realistic training

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

It is easy for a classifier to overfit on particular things that appear in the

20 Newsgroups data, such as newsgroup headers. Many classifiers achieve very

high F-scores, but their results would not generalize to other documents that

aren't from this window of time.

For example, let's look at the results of a multinomial Naive Bayes classifier,

which is fast to train and achieves a decent F-score::

>>> from sklearn.naive_bayes import MultinomialNB

>>> from sklearn import metrics

>>> newsgroups_test = fetch_20newsgroups(subset='test',

... categories=categories)

>>> vectors_test = vectorizer.transform(newsgroups_test.data)

>>> clf = MultinomialNB(alpha=.01)

>>> clf.fit(vectors, newsgroups_train.target)

MultinomialNB(alpha=0.01, class_prior=None, fit_prior=True)

>>> pred = clf.predict(vectors_test)

>>> metrics.f1_score(newsgroups_test.target, pred, average='macro')

0.88213...

(The example :ref:`sphx_glr_auto_examples_text_plot_document_classification_20newsgroups.py` shuffles

the training and test data, instead of segmenting by time, and in that case

multinomial Naive Bayes gets a much higher F-score of 0.88. Are you suspicious

yet of what's going on inside this classifier?)

Let's take a look at what the most informative features are:

>>> import numpy as np

>>> def show_top10(classifier, vectorizer, categories):

... feature_names = np.asarray(vectorizer.get_feature_names())

... for i, category in enumerate(categories):

... top10 = np.argsort(classifier.coef_[i])[-10:]

... print("%s: %s" % (category, " ".join(feature_names[top10])))

...

>>> show_top10(clf, vectorizer, newsgroups_train.target_names)

alt.atheism: edu it and in you that is of to the

comp.graphics: edu in graphics it is for and of to the

sci.space: edu it that is in and space to of the

talk.religion.misc: not it you in is that and to of the

You can now see many things that these features have overfit to:

- Almost every group is distinguished by whether headers such as

``NNTP-Posting-Host:`` and ``Distribution:`` appear more or less often.

- Another significant feature involves whether the sender is affiliated with

a university, as indicated either by their headers or their signature.

- The word "article" is a significant feature, based on how often people quote

previous posts like this: "In article [article ID], [name] <[e-mail address]>

wrote:"

- Other features match the names and e-mail addresses of particular people who

were posting at the time.

With such an abundance of clues that distinguish newsgroups, the classifiers

barely have to identify topics from text at all, and they all perform at the

same high level.

For this reason, the functions that load 20 Newsgroups data provide a

parameter called **remove**, telling it what kinds of information to strip out

of each file. **remove** should be a tuple containing any subset of

``('headers', 'footers', 'quotes')``, telling it to remove headers, signature

blocks, and quotation blocks respectively.

>>> newsgroups_test = fetch_20newsgroups(subset='test',

... remove=('headers', 'footers', 'quotes'),

... categories=categories)

>>> vectors_test = vectorizer.transform(newsgroups_test.data)

>>> pred = clf.predict(vectors_test)

>>> metrics.f1_score(pred, newsgroups_test.target, average='macro')

0.77310...

This classifier lost over a lot of its F-score, just because we removed

metadata that has little to do with topic classification.

It loses even more if we also strip this metadata from the training data:

>>> newsgroups_train = fetch_20newsgroups(subset='train',

... remove=('headers', 'footers', 'quotes'),

... categories=categories)

>>> vectors = vectorizer.fit_transform(newsgroups_train.data)

>>> clf = MultinomialNB(alpha=.01)

>>> clf.fit(vectors, newsgroups_train.target)

MultinomialNB(alpha=0.01, class_prior=None, fit_prior=True)

>>> vectors_test = vectorizer.transform(newsgroups_test.data)

>>> pred = clf.predict(vectors_test)

>>> metrics.f1_score(newsgroups_test.target, pred, average='macro')

0.76995...

Some other classifiers cope better with this harder version of the task. Try

running :ref:`sphx_glr_auto_examples_model_selection_grid_search_text_feature_extraction.py` with and without

the ``--filter`` option to compare the results.

.. topic:: Recommendation

When evaluating text classifiers on the 20 Newsgroups data, you

should strip newsgroup-related metadata. In scikit-learn, you can do this by

setting ``remove=('headers', 'footers', 'quotes')``. The F-score will be

lower because it is more realistic.

.. topic:: Examples

* :ref:`sphx_glr_auto_examples_model_selection_grid_search_text_feature_extraction.py`

* :ref:`sphx_glr_auto_examples_text_plot_document_classification_20newsgroups.py`

# Split the data (first 3000 documents only): 75% training, 25% test

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(news.data[:3000], news.target[:3000], test_size=0.25, random_state=33)
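As a quick sanity check on the split sizes, the sketch below repeats the same call on placeholder data. The `docs` and `labels` lists are made up for illustration and stand in for the 3000 newsgroup posts and their targets.

```python
from sklearn.model_selection import train_test_split

# Placeholder data standing in for the 3000 documents and their labels.
docs = [f"doc {i}" for i in range(3000)]
labels = [i % 20 for i in range(3000)]

X_tr, X_te, y_tr, y_te = train_test_split(
    docs, labels, test_size=0.25, random_state=33
)
print(len(X_tr), len(X_te))  # 2250 750
```

With 2250 training documents and `cv=3`, each cross-validation fold holds out 750 documents.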

# Import the support vector machine classifier

from sklearn.svm import SVC

# Import the TF-IDF text feature extractor

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.pipeline import Pipeline

# Chain the vectorizer and the classifier into a Pipeline

clf = Pipeline([('vect', TfidfVectorizer(stop_words='english')), ('svc', SVC())])
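The step names given to the Pipeline matter, because nested parameters are addressed as `<step name>__<parameter name>`. The sketch below rebuilds the same pipeline under the name `pipe` (chosen here for illustration) and shows that convention with `get_params` and `set_params`.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.pipeline import Pipeline
from sklearn.svm import SVC

pipe = Pipeline([("vect", TfidfVectorizer(stop_words="english")), ("svc", SVC())])

# Nested parameters are exposed as "<step name>__<parameter name>".
params = pipe.get_params()
print("svc__C" in params, "vect__stop_words" in params)

# set_params accepts the same names; a grid search sets candidates this way.
pipe.set_params(svc__C=10.0, svc__gamma=0.1)
print(pipe.named_steps["svc"].C, pipe.named_steps["svc"].gamma)
```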

# Hyperparameter grid: 4 gamma values x 3 C values = 12 combinations

parameters = {
    'svc__gamma': np.logspace(-2, 1, 4),
    'svc__C': np.logspace(-1, 1, 3),
}
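To see which candidates such a grid expands to, it can be enumerated with `ParameterGrid`. This is a small sketch using the same value ranges; the variable names `grid`, `candidates`, and `n_folds` are chosen here for illustration.

```python
import numpy as np
from sklearn.model_selection import ParameterGrid

grid = {
    "svc__gamma": np.logspace(-2, 1, 4),  # 0.01, 0.1, 1, 10
    "svc__C": np.logspace(-1, 1, 3),      # 0.1, 1, 10
}

# Every combination of one gamma value and one C value is a candidate.
candidates = list(ParameterGrid(grid))
n_folds = 3
print(len(candidates), len(candidates) * n_folds)  # 12 36
```

Twelve candidates evaluated on three folds each gives the 36 fits reported when the search runs.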

from sklearn.model_selection import GridSearchCV

# Configure the search; n_jobs=-1 uses all available CPU cores

gs = GridSearchCV(estimator=clf, param_grid=parameters, verbose=2, refit=True, cv=3, n_jobs=-1)

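Grid search function parameters:

class sklearn.model_selection.GridSearchCV(estimator, param_grid, scoring=None, fit_params=None, n_jobs=1, iid=True, refit=True, cv=None, verbose=0, pre_dispatch='2*n_jobs', error_score='raise', return_train_score='warn')

This signature comes from an older scikit-learn release; some defaults have changed since, and fit_params and iid have been removed in newer versions.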

-

estimator

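The estimator to use, constructed with every parameter other than the ones being tuned. The estimator must either provide a score method, or scoring must be passed:

estimator=RandomForestClassifier(min_samples_split=100, min_samples_leaf=20, max_depth=8, max_features='sqrt', random_state=10),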

-

param_grid

The values to search over for the parameters being optimized, given as a dict or a list of dicts, for example param_grid=param_test1 with param_test1 = {'n_estimators': range(10, 71, 10)}.

-

scoring=None

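The evaluation metric. The default is None, in which case the estimator's own score method is used; alternatively pass a value such as scoring='roc_auc'. The appropriate metric depends on the model. It can be a string (the scorer name) or a callable with the signature scorer(estimator, X, y). See Section 3 of this article for how to choose a value.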

-

fit_params=None

-

n_jobs=1

n_jobs: the number of parallel jobs. An int gives the number of jobs; -1 uses as many jobs as there are CPU cores; 1 is the default.

-

iid=True

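iid: defaults to True. When True, the data is assumed to be identically distributed across the folds, and the loss minimized is the total loss per sample rather than the mean loss across folds.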

-

refit=True

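Defaults to True. Once the search has finished, the estimator is refit on the entire dataset passed to fit (training and validation folds together) using the best parameters found by cross-validation. This refitted model is the one used for the final performance evaluation.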

-

cv=None

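The cross-validation strategy. With the default None, 3-fold cross-validation is used in the scikit-learn version documented here (newer releases default to 5-fold). It can be an int giving the number of folds, or an iterable that yields train/test splits.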

-

verbose=0

verbose: the logging verbosity, an int. 0: no output during training; 1: occasional output; >1: output for every sub-model.

-

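pre_dispatch='2*n_jobs'

Controls how many jobs are dispatched during parallel execution. When n_jobs is greater than 1, the data is copied for each dispatched job, which can cause out-of-memory (OOM) errors. Setting pre_dispatch caps the number of jobs dispatched up front, so the data is copied at most pre_dispatch times.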

-

error_score='raise'

-

return_train_score='warn'

If False, the cv_results_ attribute will not include training scores.
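Several of the parameters above can be seen together in a small search. The following is a minimal sketch on the iris dataset bundled with scikit-learn, not the newsgroups setup from this article; the scorer `macro_f1` and the chosen grid values are assumptions made for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import f1_score
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)


def macro_f1(estimator, X, y):
    """Callable scorer with the signature scorer(estimator, X, y)."""
    return f1_score(y, estimator.predict(X), average="macro")


search = GridSearchCV(
    estimator=SVC(kernel="rbf"),
    param_grid={"C": [0.1, 1.0, 10.0], "gamma": [0.01, 0.1]},
    scoring=macro_f1,  # a callable instead of a string such as 'accuracy'
    cv=StratifiedKFold(n_splits=3, shuffle=True, random_state=0),
    n_jobs=1,  # sequential here; -1 would use every CPU core
    refit=True,  # refit the best candidate on all of X, y afterwards
    return_train_score=True,  # adds mean_train_score etc. to cv_results_
)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

Passing an explicit splitter to `cv` keeps the fold assignment reproducible, which makes scores comparable between runs.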

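Back to GridSearchCV in sklearn: it systematically iterates over combinations of parameter values and uses cross-validation to determine which combination performs best.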

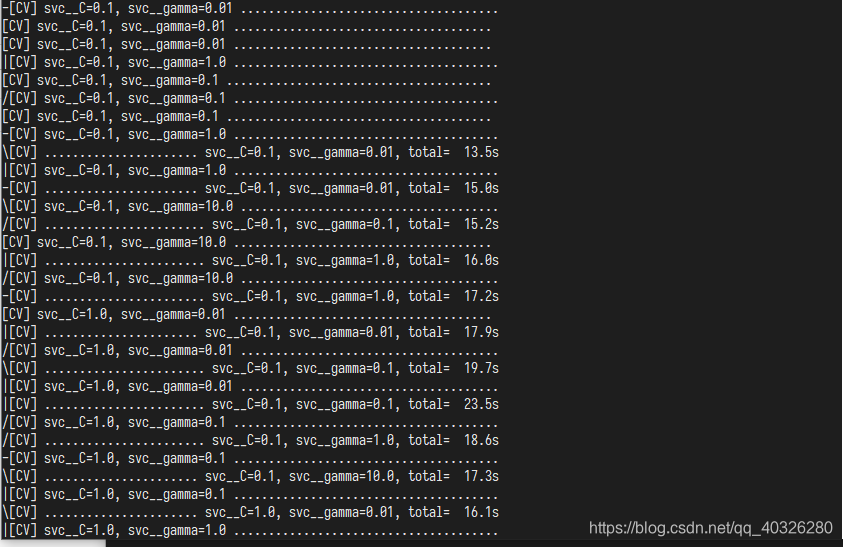

# %time only times the statement on its own line, so it must precede the call

%time gs_result = gs.fit(X_train, y_train)

Fitting 3 folds for each of 12 candidates, totalling 36 fits

[Parallel(n_jobs=-1)]: Using backend LokyBackend with 8 concurrent workers.

[Parallel(n_jobs=-1)]: Done 36 out of 36 | elapsed: 1.5min finished

Returned attributes

-

cv_results_ : dict of numpy (masked) ndarrays

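A dict whose keys are column headers and whose values are columns; it can be imported into a DataFrame. Note that the 'params' key stores a list of the parameter settings for all candidates.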

-

best_estimator_ : estimator

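The estimator chosen by the search, i.e. the one that gave the highest score (or the smallest loss, if specified) on the left-out data. Not available if refit=False.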

-

best_score_ : float

The mean cross-validated score of best_estimator_.

-

best_params_ : dict

The parameter setting that gave the best results on the held-out data.

-

best_index_ : int

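The index (into the cv_results_ arrays) of the best candidate parameter setting.

The dict at search.cv_results_['params'][search.best_index_] gives the parameter setting of the best model, the one with the highest mean score (search.best_score_).

-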

scorer_ : function

Scorer function used on the held out data to choose the best parameters for the model.

-

n_splits_ : int

The number of cross-validation splits (folds/iterations).

-

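grid_scores_

The evaluation results for each parameter setting (deprecated in favor of cv_results_ and absent from newer scikit-learn releases).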
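The relationships between these attributes can be checked on a small search. The sketch below again uses the bundled iris dataset with an arbitrary grid over C, chosen only for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
search = GridSearchCV(SVC(), {"C": [0.1, 1.0, 10.0]}, cv=3).fit(X, y)

results = search.cv_results_
best = search.best_index_

# best_index_ points into the per-candidate arrays of cv_results_.
print(results["params"][best] == search.best_params_)
print(results["mean_test_score"][best] == search.best_score_)

# With refit=True (the default), score delegates to best_estimator_.
print(abs(search.score(X, y) - search.best_estimator_.score(X, y)) < 1e-12)
```

If pandas is installed, `pandas.DataFrame(search.cv_results_)` turns the same dict into a table with one row per candidate.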

print(gs_result.score(X_test, y_test))

print(gs_result.best_params_, gs.best_score_)

0.8226666666666667

{'svc__C': 10.0, 'svc__gamma': 0.1} 0.7888888888888889