Setting Up a Kubernetes v1.20 Cluster on CentOS 7

1. Environment Preparation

1.1 Basic environment

| Item | Software and version |
|---|---|
| Host OS | macOS Mojave 10.14.6 |
| Virtualization software | VMware Fusion 10.0.0 |
| CentOS image | CentOS-7-x86_64-DVD-1908.iso |
| Docker | 1.13.1 |
| Kubernetes | 1.20.2 |

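Create two virtual machines and install CentOS on each. Minimum requirements per VM:

CPU: at least 2 cores

Memory: at least 4 GB

1.2 Network configuration

Configure the network first, then connect with SecureCRT for the remaining steps; the console built into VMware Fusion is inconvenient in many ways.

Assign the two VMs the static IP addresses 172.16.197.160 and 172.16.197.161 by editing the network configuration file on each: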

cd /etc/sysconfig/network-scripts/

vi ifcfg-ens33

Network configuration for 172.16.197.160:

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

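BOOTPROTO="static" # changed to static for a fixed IP address

IPADDR=172.16.197.160 # any free address within the valid range

GATEWAY=172.16.197.2 # the gateway recorded earlier

NETMASK=255.255.255.0 # the subnet mask recorded earlier

DNS1="10.13.7.64" # the DNS server recorded earlier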

DNS2="10.13.7.63"

DNS3="172.20.10.1"

DNS4="192.168.1.1"

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=fe4bddc9-aa46-46ed-8c39-56dfa3961460

DEVICE=ens33

ONBOOT=yes

Network configuration for 172.16.197.161:

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

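BOOTPROTO="static" # changed to static for a fixed IP address

IPADDR=172.16.197.161 # any free address within the valid range

GATEWAY=172.16.197.2 # the gateway recorded earlier

NETMASK=255.255.255.0 # the subnet mask recorded earlier

DNS1="10.13.7.64" # the DNS server recorded earlier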

DNS2="10.13.7.63"

DNS3="172.20.10.1"

DNS4="192.168.1.1"

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=fe4bddc9-aa46-46ed-8c39-56dfa3961460

DEVICE=ens33

ONBOOT=yes
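
The two files above differ only in IPADDR, so the file can also be generated rather than edited by hand. The following is a minimal sketch, not part of the original setup: the function name render_ifcfg and its argument order are made up for illustration, and it emits only the IPv4 keys needed for a static address.

```shell
#!/usr/bin/env bash
# Print a static-IP ifcfg file to stdout.
# render_ifcfg is a hypothetical helper, not a CentOS tool.
# Arguments: device, IP address, gateway, netmask, primary DNS server.
render_ifcfg() {
  local device="$1" ipaddr="$2" gateway="$3" netmask="$4" dns1="$5"
  cat <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=${device}
DEVICE=${device}
ONBOOT=yes
IPADDR=${ipaddr}
GATEWAY=${gateway}
NETMASK=${netmask}
DNS1=${dns1}
DEFROUTE=yes
EOF
}
```

For the second VM this could be used as render_ifcfg ens33 172.16.197.161 172.16.197.2 255.255.255.0 10.13.7.64 > /etc/sysconfig/network-scripts/ifcfg-ens33, followed by systemctl restart network.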

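On a fresh VM, the ifconfig command is not available for checking the network configuration. Installing it with yum install net-tools fails in turn, because yum itself reports: could not resolve host: mirrorlist.centos.org. See the article "yum Fails on CentOS 7 with could not resolve host: mirrorlist.centos.org" for the fix.

The VMs here run on VMware Fusion; for configuring a static IP address in that environment, see "Configuring a Static IP for CentOS 7 in VMware Fusion on Mac".

1.3 yum repository configuration

A Kubernetes yum mirror hosted in China needs to be added. Both the Tsinghua mirror and the Alibaba Cloud mirror work; the Alibaba Cloud mirror is used here: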

cat <<EOF > kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

sudo mv kubernetes.repo /etc/yum.repos.d/

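To speed up installation of the other packages, the CentOS base repositories can also be switched to a domestic mirror; I cover this in a separate article, "Configuring Domestic yum Mirrors on CentOS 7".

1.4 Changing the hostname

Change the hostname on each machine. Otherwise every machine is named localhost.localdomain, and the nodes cannot register with the master.

Set the hostname of the VM at 172.16.197.160 to k8s.master001: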

hostnamectl set-hostname k8s.master001

Set the hostname of the VM at 172.16.197.161 to k8s.node001:

hostnamectl set-hostname k8s.node001
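
The kubeadm output later in this walkthrough warns that the hostname k8s.master001 cannot be resolved through DNS. One common way to make the names resolvable, which the original steps do not include, is to map them in /etc/hosts on both machines. A minimal sketch, assuming a hypothetical helper named add_host_entry:

```shell
#!/usr/bin/env bash
# Append "IP hostname" to a hosts file unless the hostname already appears in it.
# add_host_entry is a hypothetical helper; the file path is passed in explicitly.
add_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  # -F treats the dots in the hostname literally, -w requires a whole-word match
  if ! grep -qwF -- "$name" "$hosts_file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}
```

Run as root on each VM, for example add_host_entry /etc/hosts 172.16.197.160 k8s.master001 and add_host_entry /etc/hosts 172.16.197.161 k8s.node001. Calling it twice with the same name leaves a single entry.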

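1.5 Adjusting security settings

Stop the firewall and disable it at boot:

systemctl stop firewalld && systemctl disable firewalld

Disable SELinux by setting SELINUX to disabled in /etc/selinux/config:

vi /etc/selinux/config

Reboot the machine for the change to take effect: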

reboot now
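
The SELinux edit can also be scripted instead of done in vi. This is a sketch under the assumption that the file contains a line beginning with SELINUX=, as the stock CentOS 7 file does; the function name is made up for illustration.

```shell
#!/usr/bin/env bash
# Set SELINUX=disabled in an SELinux config file, keeping a .bak copy of the original.
# disable_selinux_config is a hypothetical helper; the path defaults to the real config file.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  # Only the SELINUX= line matches; SELINUXTYPE= is left untouched
  sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

The change still takes effect only after the reboot. Running setenforce 0 switches SELinux to permissive mode for the current boot in the meantime.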

1.6修改系统配置

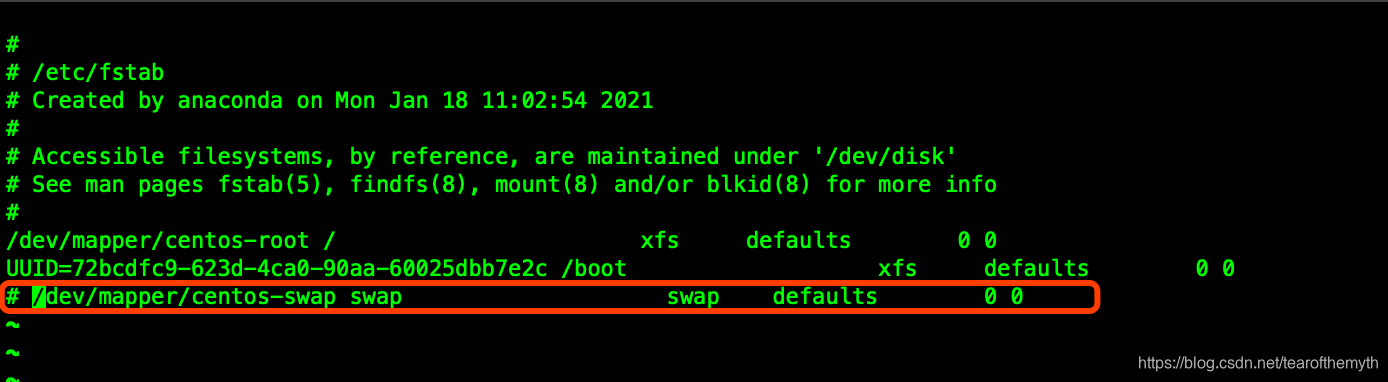

关闭swap,修改/etc/fstab,注释最后一行;

vi /etc/fstab

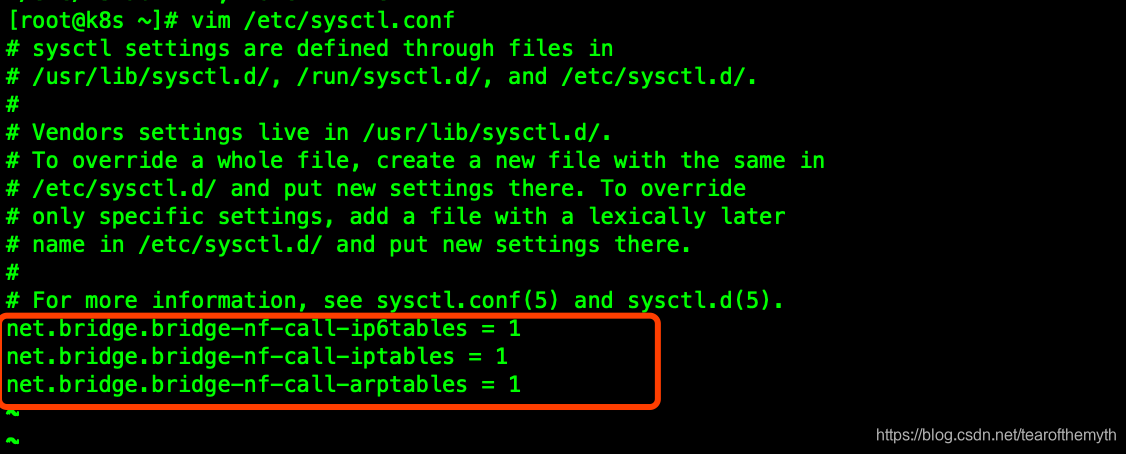
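
Because the swap entry is not always the last line of /etc/fstab, matching on the word swap is safer than counting lines. A minimal sketch, with a hypothetical function name:

```shell
#!/usr/bin/env bash
# Comment out every uncommented fstab line that has "swap" as a
# whitespace-delimited field, keeping a .bak copy of the original file.
# comment_out_swap is a hypothetical helper name.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -i.bak -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}
```

Lines that are already commented are skipped, so running it twice does not add a second #. To turn swap off immediately without waiting for a reboot, swapoff -a can be run as well.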

Enable bridge-nf by appending the following settings to the end of /etc/sysctl.conf:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-arptables = 1

Then reboot the machine with reboot now so that the changes take effect.
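
If these steps are repeated on several machines, appending blindly can leave duplicate keys in the file. The sketch below adds a key only when it is not already set; ensure_sysctl is a hypothetical helper, not a system command.

```shell
#!/usr/bin/env bash
# Append "key = value" to a sysctl config file unless the key is already set there.
# ensure_sysctl is a hypothetical helper name.
ensure_sysctl() {
  local conf="$1" key="$2" value="$3"
  # awk exits 0 only if some line has exactly this key to the left of "="
  if ! awk -F'=' -v k="$key" \
        '{ gsub(/[[:space:]]/, "", $1) } $1 == k { found = 1 } END { exit !found }' \
        "$conf" 2>/dev/null; then
    printf '%s = %s\n' "$key" "$value" >> "$conf"
  fi
}
```

For example: ensure_sysctl /etc/sysctl.conf net.bridge.bridge-nf-call-iptables 1. Running sysctl -p reloads the file without a reboot; if it reports the net.bridge keys as missing, the br_netfilter kernel module probably has to be loaded first.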

2. Installing Docker

2.1 Install Docker

yum -y install docker

2.2 Start Docker

Start Docker and enable it at boot:

systemctl enable docker && systemctl start docker

2.3 Configure a domestic registry mirror

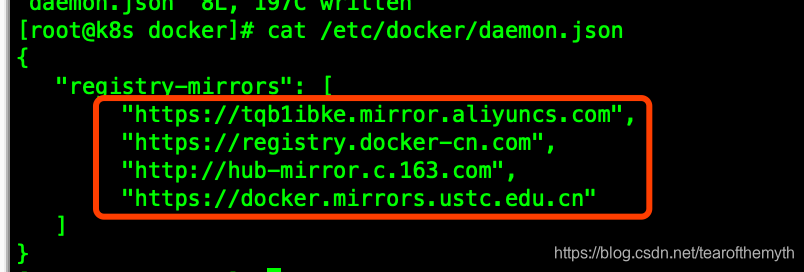

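Edit the Docker configuration file /etc/docker/daemon.json, creating it if it does not exist, and add the registry mirror setting.

In that setting, https://tqb1ibke.mirror.aliyuncs.com is an Alibaba Cloud registry accelerator address; after registering an Alibaba Cloud account you can look up the accelerator address assigned to you.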
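
The file content itself is not reproduced in the text. The sketch below assumes it is Docker's standard registry-mirrors setting with the accelerator address as the only entry; that assumption, and the helper name write_docker_mirror_config, are mine rather than the author's.

```shell
#!/usr/bin/env bash
# Write a daemon.json that contains a single registry mirror.
# Assumption: the intended setting is Docker's "registry-mirrors" key.
# write_docker_mirror_config is a hypothetical helper name.
# Note: this overwrites any existing file at the target path.
write_docker_mirror_config() {
  local mirror_url="$1" out="${2:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$out")"
  cat > "$out" <<EOF
{
  "registry-mirrors": ["${mirror_url}"]
}
EOF
}
```

Usage would be write_docker_mirror_config https://tqb1ibke.mirror.aliyuncs.com, substituting your own accelerator address. If daemon.json already holds other settings, merge the key by hand instead of overwriting the file.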

Restart the Docker service:

sudo systemctl daemon-reload

sudo systemctl restart docker

3. Installing Kubernetes (Master)

3.1 Install the components

yum -y install kubelet kubeadm kubectl kubernetes-cni

Start kubelet and enable it at boot:

systemctl enable kubelet && systemctl start kubelet

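3.2 Initialize the master node

Use kubeadm to set up the master node of the cluster. Because this walkthrough uses Flannel as the cluster's network plugin, the pod CIDR must be specified at initialization with the --pod-network-cidr flag: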

kubeadm init --image-repository registry.aliyuncs.com/google_containers --pod-network-cidr=10.244.0.0/16

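By default kubeadm pulls the Kubernetes images from a registry outside China; passing --image-repository registry.aliyuncs.com/google_containers makes it use the Alibaba Cloud registry instead.

After a short wait, the output shows that initialization has completed: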

[root@k8s manifests]# kubeadm init --image-repository registry.aliyuncs.com/google_containers --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.20.2

[preflight] Running pre-flight checks

[WARNING Hostname]: hostname "k8s.master001" could not be reached

[WARNING Hostname]: hostname "k8s.master001": lookup k8s.master001 on 10.13.7.64:53: no such host

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s.master001 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 172.16.197.160]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s.master001 localhost] and IPs [172.16.197.160 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s.master001 localhost] and IPs [172.16.197.160 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 16.003945 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.20" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s.master001 as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"

[mark-control-plane] Marking the node k8s.master001 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 2c1vo6.s4owm5tqggl08way

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.16.197.160:6443 --token 2c1vo6.s4owm5tqggl08way \

--discovery-token-ca-cert-hash sha256:7ef0f00150be68e05e5b39001a09899f2c2cd3426b254a10f86c81e57aed9c8c

Following the prompt above, run these commands in order:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

As the root user, running only the following command is sufficient:

export KUBECONFIG=/etc/kubernetes/admin.conf

Finally, save the kubeadm join command printed at the end of the output; it is needed when nodes join the cluster:

kubeadm join 172.16.197.160:6443 --token 2c1vo6.s4owm5tqggl08way --discovery-token-ca-cert-hash sha256:7ef0f00150be68e05e5b39001a09899f2c2cd3426b254a10f86c81e57aed9c8c

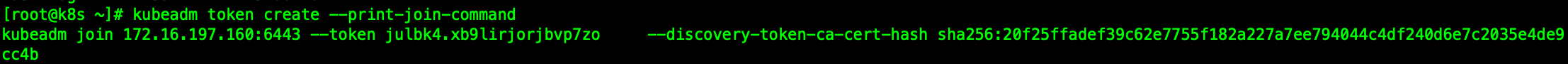

The token is valid for 24 hours by default. If it has expired or been lost, generate a new one together with the full join command:

kubeadm token create --print-join-command

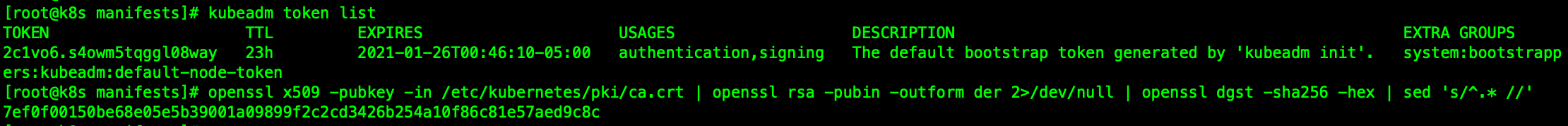

Alternatively, list the existing tokens with kubeadm token list, and compute the discovery-token-ca-cert-hash from the cluster CA certificate with the openssl pipeline below:

kubeadm token list

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

The output contains the token and the discovery-token-ca-cert-hash values.
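
With both values in hand, the join command can be reassembled. The sketch below does so with a basic format check; build_join_command is a hypothetical helper, not a kubeadm subcommand, and it expects the hash as the bare 64-character hex string that the openssl pipeline prints (a leading sha256: is also tolerated).

```shell
#!/usr/bin/env bash
# Assemble a "kubeadm join" command line from its three parts.
# build_join_command is a hypothetical helper name.
# Arguments: API server endpoint (host:port), bootstrap token, CA cert hash.
build_join_command() {
  local endpoint="$1" token="$2" ca_hash="$3"
  # Bootstrap tokens have the form <6 chars>.<16 chars>, lowercase letters and digits
  if ! printf '%s' "$token" | grep -qE '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "unexpected token format: $token" >&2
    return 1
  fi
  # Accept the hash with or without the sha256: prefix, then require 64 hex digits
  ca_hash="${ca_hash#sha256:}"
  if ! printf '%s' "$ca_hash" | grep -qE '^[0-9a-f]{64}$'; then
    echo "unexpected CA cert hash: $ca_hash" >&2
    return 1
  fi
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
    "$endpoint" "$token" "$ca_hash"
}
```

In most cases kubeadm token create --print-join-command is simpler, since it prints the complete command directly.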

3.3 Environment variables

The export command from the previous section does not persist and has to be run again after a reboot, so write it to .bash_profile:

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

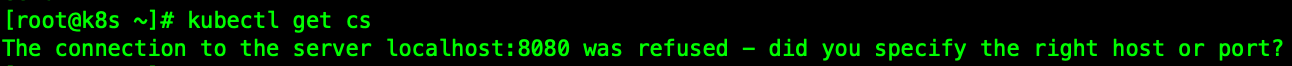

kubectl has to run with the kubernetes-admin credentials from admin.conf. Without the configuration above, kubectl fails with the following error:

The connection to the server localhost:8080 was refused - did you specify the right host or port?

3.4 Check component status

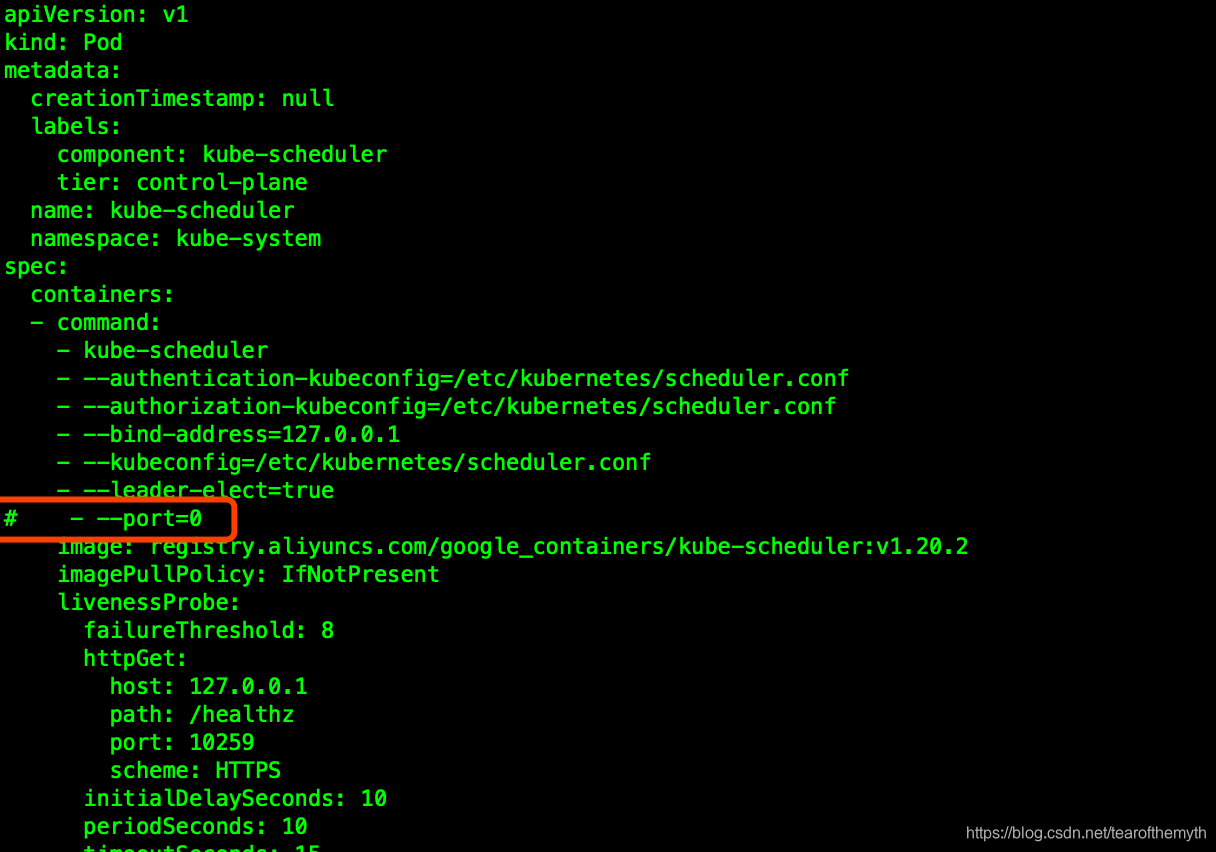

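Running kubectl get cs lists the control-plane components and their status. Here scheduler and controller-manager are reported as Unhealthy. The usual cause is that the kube-scheduler and kube-controller-manager manifests disable the insecure port by default with port=0. The fix is to change that setting in the following manifest files: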

/etc/kubernetes/manifests/kube-scheduler.yaml

/etc/kubernetes/manifests/kube-controller-manager.yaml

In each of the two files, find the line - --port=0 and comment it out:

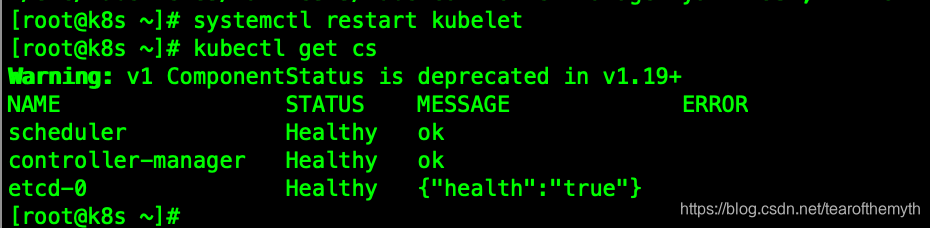
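
The same edit can be scripted. The sketch below writes through a temporary file outside the manifest directory, on the assumption that a backup copy left in /etc/kubernetes/manifests could be picked up by kubelet as another static pod manifest; the function name is made up for illustration.

```shell
#!/usr/bin/env bash
# Comment out the "- --port=0" argument in a static pod manifest, preserving indentation.
# comment_out_insecure_port is a hypothetical helper name.
comment_out_insecure_port() {
  local manifest="$1" tmp rc
  # The temporary copy lives in the system temp directory, not next to the manifest
  tmp="$(mktemp)" || return 1
  sed -E 's/^([[:space:]]*)- --port=0[[:space:]]*$/\1# - --port=0/' "$manifest" > "$tmp" \
    && cat "$tmp" > "$manifest"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

Apply it to both files, for example comment_out_insecure_port /etc/kubernetes/manifests/kube-scheduler.yaml. Lines that are already commented no longer match, so a second run changes nothing.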

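Then restart kubelet with systemctl restart kubelet and check the status again; the components now report a healthy status.

3.5 Install the Flannel network plugin

Install the Flannel plugin with the command from its official documentation: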

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

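The installation fails with the error: Unable to connect to the server: read tcp 172.16.197.160:52028->151.101.76.133:443: read: connection reset by peer. The yml file cannot be fetched because of network restrictions.

I have downloaded kube-flannel.yml and made it available for readers.

Upload the file to the server, then run the following from the directory that contains it: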

kubectl apply -f kube-flannel.yml

The command prints the following output:

[root@k8s ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

Warning: rbac.authorization.k8s.io/v1beta1 ClusterRole is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRole

clusterrole.rbac.authorization.k8s.io/flannel created

Warning: rbac.authorization.k8s.io/v1beta1 ClusterRoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRoleBinding

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

List the current pods with kubectl get pods -o wide --all-namespaces:

[root@k8s ~]# kubectl get pods -o wide --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-7f89b7bc75-5c6zv 0/1 Pending 0 50m <none> <none> <none> <none>

kube-system coredns-7f89b7bc75-lbnxs 0/1 Pending 0 50m <none> <none> <none> <none>

kube-system etcd-k8s.master001 1/1 Running 0 50m 172.16.197.160 k8s.master001 <none> <none>

kube-system kube-apiserver-k8s.master001 1/1 Running 0 50m 172.16.197.160 k8s.master001 <none> <none>

kube-system kube-controller-manager-k8s.master001 1/1 Running 0 37m 172.16.197.160 k8s.master001 <none> <none>

kube-system kube-flannel-ds-amd64-qhbzl 0/1 Init:0/1 0 80s 172.16.197.160 k8s.master001 <none> <none>

kube-system kube-proxy-5856c 1/1 Running 0 50m 172.16.197.160 k8s.master001 <none> <none>

kube-system kube-scheduler-k8s.master001 1/1 Running 0 36m 172.16.197.160 k8s.master001 <none> <none>

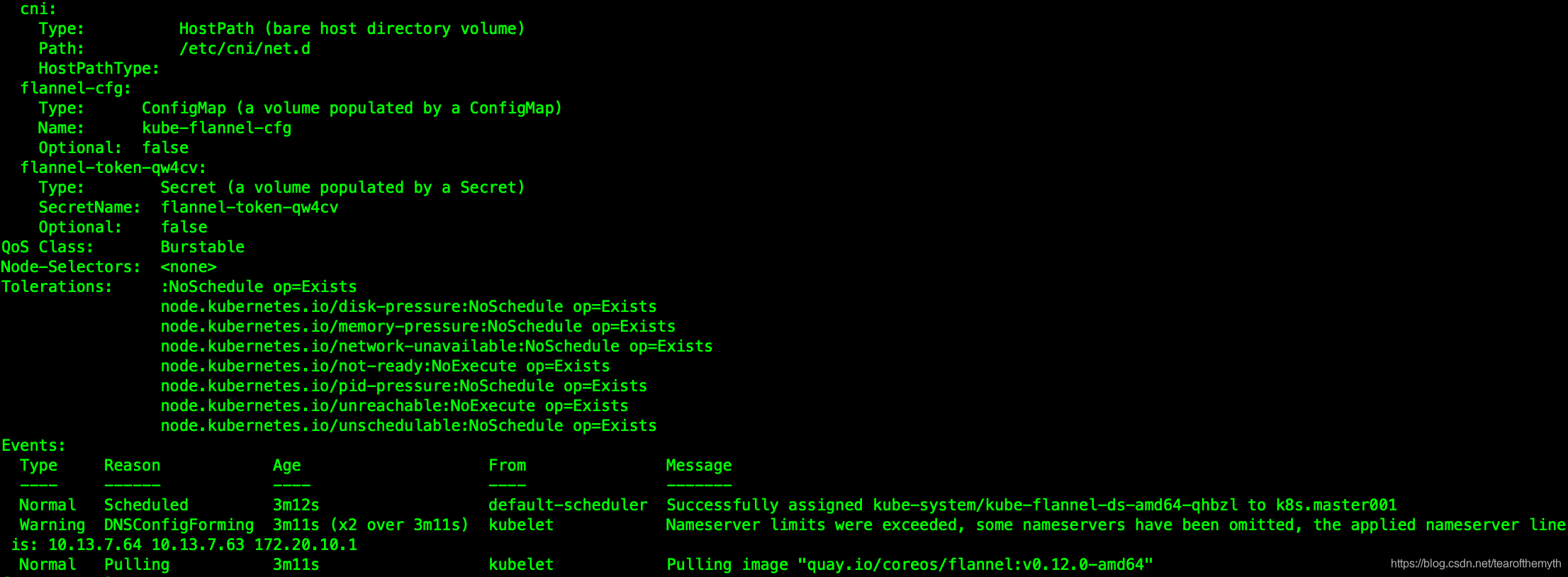

The pod kube-flannel-ds-amd64-qhbzl stays in the Init:0/1 state. Describing the pod reveals the problem:

kubectl describe pods kube-flannel-ds-amd64-qhbzl -n kube-system

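The events stop at Pulling image "quay.io/coreos/flannel:v0.12.0-amd64", so once again the image cannot be downloaded because of network restrictions. Either pull it from another registry and re-tag it, or export it on a machine that already has it and load it on this one; the details are omitted here.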

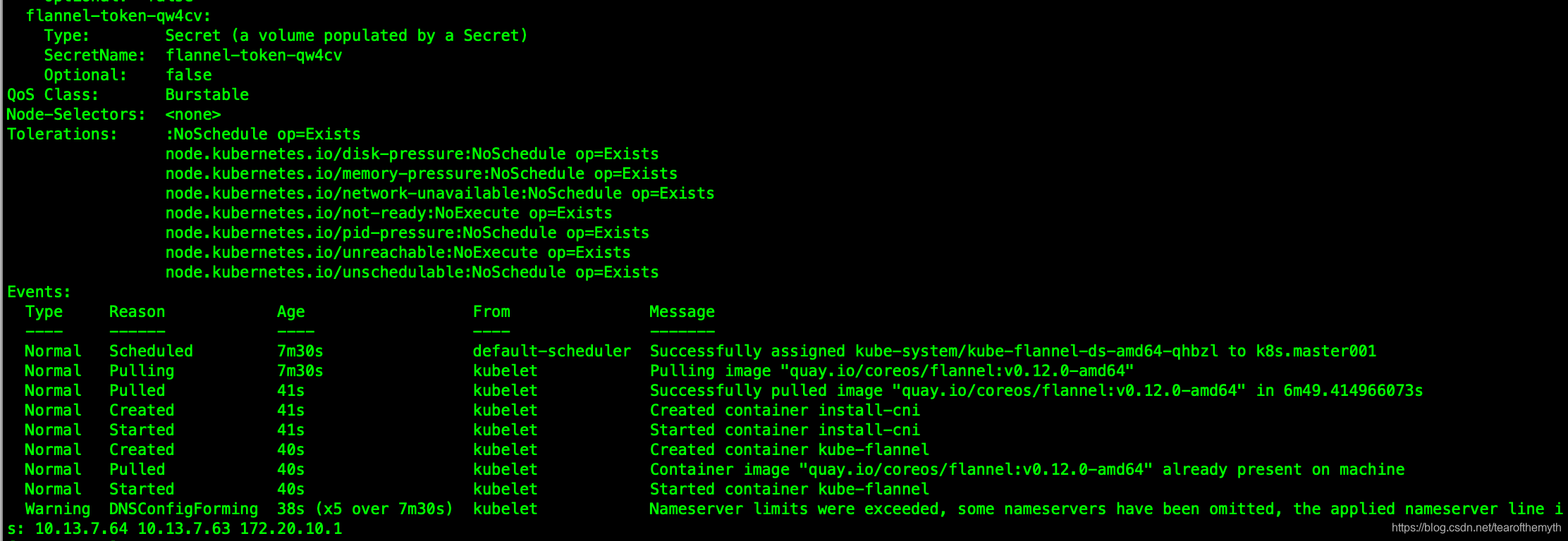
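
The export-and-load route could look roughly like the sketch below, which uses the standard docker pull, docker save, and docker load subcommands. The helper names and the tarball path in the usage example are hypothetical, and the DOCKER variable exists only so the commands can be dry-run with DOCKER=echo.

```shell
#!/usr/bin/env bash
# Move an image between machines with docker save / docker load.
# export_image and import_image are hypothetical helper names.
DOCKER="${DOCKER:-docker}"   # set DOCKER=echo to print the commands instead of running them

# On a machine that can reach the registry: pull the image and write it to a tarball.
export_image() {
  local image="$1" tarball="$2"
  "$DOCKER" pull "$image" && "$DOCKER" save -o "$tarball" "$image"
}

# On the cluster machine: load the tarball into the local image store.
import_image() {
  local tarball="$1"
  "$DOCKER" load -i "$tarball"
}
```

For example, run export_image quay.io/coreos/flannel:v0.12.0-amd64 flannel.tar on a machine with access, copy flannel.tar to the cluster machine, and run import_image flannel.tar there. The loaded image keeps its original name and tag, so no re-tagging is needed on this route.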

After the problem is resolved, the pod events show a normal start.

Then list the pods again:

[root@k8s ~]# kubectl get pods -o wide --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-7f89b7bc75-5c6zv 1/1 Running 0 57m 10.244.0.2 k8s.master001 <none> <none>

kube-system coredns-7f89b7bc75-lbnxs 1/1 Running 0 57m 10.244.0.3 k8s.master001 <none> <none>

kube-system etcd-k8s.master001 1/1 Running 0 57m 172.16.197.160 k8s.master001 <none> <none>

kube-system kube-apiserver-k8s.master001 1/1 Running 0 57m 172.16.197.160 k8s.master001 <none> <none>

kube-system kube-controller-manager-k8s.master001 1/1 Running 0 43m 172.16.197.160 k8s.master001 <none> <none>

kube-system kube-flannel-ds-amd64-qhbzl 1/1 Running 0 7m40s 172.16.197.160 k8s.master001 <none> <none>

kube-system kube-proxy-5856c 1/1 Running 0 57m 172.16.197.160 k8s.master001 <none> <none>

kube-system kube-scheduler-k8s.master001 1/1 Running 0 43m 172.16.197.160 k8s.master001 <none> <none>

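Once kube-flannel-ds-amd64-qhbzl is running, the coredns pods start automatically.

4. Installing Kubernetes (Node)

4.1 Prepare the environment

Configure the node by repeating the steps above:

Configure a static IP (1.2)

Configure the Kubernetes yum repository (1.3)

Change the hostname (1.4)

Adjust security settings (1.5)

Adjust system configuration (1.6)

Install Docker (Section 2)

Install kubeadm, kubelet, kubectl, and kubernetes-cni (3.1)

Copy admin.conf from the master node to the same path on the node, /etc/kubernetes/admin.conf, then run the following on the node: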

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

4.2 Join the cluster

Run the kubeadm join command on the node:

kubeadm join 172.16.197.160:6443 --token 2c1vo6.s4owm5tqggl08way --discovery-token-ca-cert-hash sha256:7ef0f00150be68e05e5b39001a09899f2c2cd3426b254a10f86c81e57aed9c8c

4.3 Verify the cluster

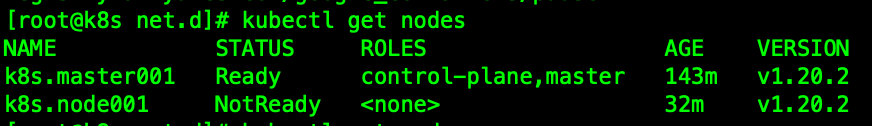

Running kubectl get nodes shows the new node in the NotReady state.

Running kubectl get pods -o wide --all-namespaces shows the pod kube-flannel-ds-amd64-84ql2 in the Init:0/1 state.

Running kubectl describe pods kube-flannel-ds-amd64-84ql2 -n kube-system shows that the image cannot be pulled:

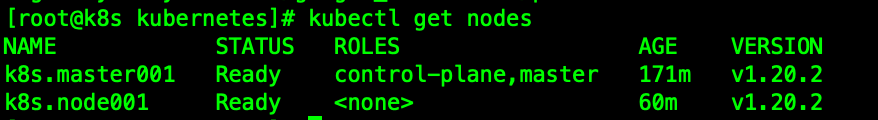

As before, obtain the image by other means. Checking the pods afterwards shows kube-flannel-ds-amd64-84ql2 in the Init:ImagePullBackOff state; restart the kubelet service:

systemctl restart kubelet

Check the pods again; all of them are Running.

Check the nodes; all of them are Ready.
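
The node check can be reduced to a filter over the kubectl get nodes table. The sketch below assumes the default column layout, with NAME first and STATUS second; not_ready_nodes is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Read `kubectl get nodes` output on stdin and print the names of nodes
# whose STATUS column is not exactly "Ready".
# A combined status such as "Ready,SchedulingDisabled" is therefore also reported.
# not_ready_nodes is a hypothetical helper name.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage: kubectl get nodes | not_ready_nodes. Empty output means every node reports Ready.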

This completes the Kubernetes cluster setup.