为什么有这么多线程模型和线程池策略?

在网络通信过程中,会发生很多事件,如连接事件,读取到消息的事件。当读取到这个事件的时候,我们是将事件直接在IO线程上执行,还是放到线程池中执行?是需要我们根据业务的场景来进行配置的,这样能最大限度的提高吞吐量

如果事件处理的逻辑能迅速完成,并且不会发起新的 IO 请求,比如只是在内存中记个标识,则直接在 IO 线程上处理更快,因为减少了线程池调度。

但如果事件处理逻辑较慢,或者需要发起新的 IO 请求,比如需要查询数据库,则必须派发到线程池,否则 IO 线程阻塞,将导致不能接收其它请求。

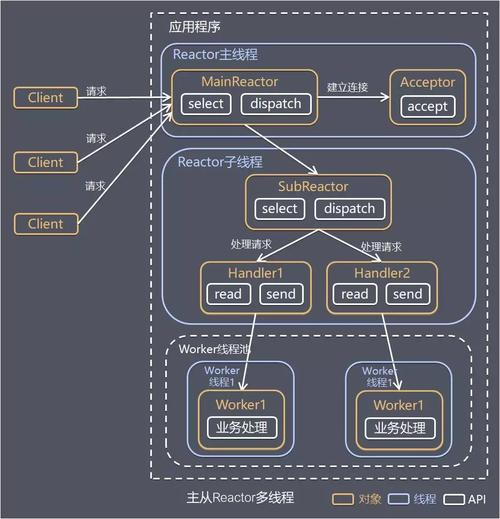

因为Dubbo通信默认使用的是Netty,这里的IO线程就是Reactor线程。所以上节我们介绍Reactor开发模式的时候,要把业务逻辑的执行放在Worker线程池中,不然并发上不去。

我就以Netty来演示一下不同的处理策略对QPS的影响,这样你就能搞懂Dubbo为什么要搞这么多线程模型和线程池策略。

在Reactor线程上直接处理请求

Server端启动引导类

public class Server {

private final int port;

public Server(int port) {

this.port = port;

}

public void start() throws InterruptedException {

EventLoopGroup bossGroup = new NioEventLoopGroup();

EventLoopGroup workerGroup = new NioEventLoopGroup();

try {

ServerBootstrap bootstrap = new ServerBootstrap();

bootstrap.group(bossGroup, workerGroup)

.channel(NioServerSocketChannel.class)

.childOption(ChannelOption.SO_REUSEADDR, true)

.childHandler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) throws Exception {

ChannelPipeline pipeline = ch.pipeline();

pipeline.addLast(new FixedLengthFrameDecoder(Long.BYTES));

pipeline.addLast(ServerBusinessHandler.INSTANCE);

}

});

ChannelFuture future = bootstrap.bind(port).sync();

System.out.println("服务端启动了");

future.channel().closeFuture().sync();

} finally {

bossGroup.shutdownGracefully().sync();

workerGroup.shutdownGracefully().sync();

}

}

public static void main(String[] args) throws InterruptedException {

new Server(8080).start();

}

}

Server端处理业务逻辑的handler,直接将客户端的读取返回。

@Slf4j

@ChannelHandler.Sharable

public class ServerBusinessHandler extends SimpleChannelInboundHandler<ByteBuf> {

public static final ChannelHandler INSTANCE = new ServerBusinessHandler();

@Override

protected void channelRead0(ChannelHandlerContext ctx, ByteBuf msg) throws Exception {

ByteBuf data = Unpooled.directBuffer();

data.writeBytes(msg);

Object result = getResult(data);

ctx.channel().writeAndFlush(result);

}

/**

* 90.0%的响应时间为1m,以此类推

*/

private Object getResult(ByteBuf data) {

// 90.0% == 1ms

// 95.0% == 10ms

// 99.0% == 100ms

// 99.9% == 1000ms

int level = ThreadLocalRandom.current().nextInt(1, 1000);

int time;

if (level <= 900) {

time = 1;

} else if (level <= 950) {

time = 10;

} else if (level <= 990) {

time = 100;

} else {

time = 1000;

}

try {

TimeUnit.MILLISECONDS.sleep(time);

} catch (InterruptedException e) {

e.printStackTrace();

}

return data;

}

}

客户端启动引导类,建立1000个连接

public class Client {

private final String host;

private final int port;

public Client(String host, int port) {

this.host = host;

this.port = port;

}

public void start() throws Exception {

EventLoopGroup group = new NioEventLoopGroup();

Bootstrap bootstrap = new Bootstrap();

bootstrap.group(group)

.channel(NioSocketChannel.class)

.option(ChannelOption.SO_REUSEADDR, true);

bootstrap.handler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) throws Exception {

ChannelPipeline pipeline = ch.pipeline();

pipeline.addLast(new FixedLengthFrameDecoder(Long.BYTES));

pipeline.addLast(ClientBusinessHandler.INSTANCE);

}

});

// 注意不要关闭

for (int i = 0; i < 1000; i++) {

bootstrap.connect(host, port).get();

}

}

public static void main(String[] args) throws Exception {

new Client("127.0.0.1", 8080).start();

}

}

Client端处理业务逻辑的handler,每隔1s向服务端发送当前时间戳

@Slf4j

@ChannelHandler.Sharable

public class ClientBusinessHandler extends SimpleChannelInboundHandler<ByteBuf> {

public static final ChannelHandler INSTANCE = new ClientBusinessHandler();

// 开始发起请求的时间

private static AtomicLong beginTime = new AtomicLong(0);

// 总的响应时间

private static AtomicLong totalResponseTime = new AtomicLong(0);

// 总的响应次数

private static AtomicLong totalRequest = new AtomicLong(0);

/**

* qps: 每秒的查询量

*/

public static final Thread thread = new Thread(() -> {

while (true) {

long duration = System.currentTimeMillis() - beginTime.get();

if (duration != 0) {

System.out.println("qps: " + 1000 * totalRequest.get() / duration

+ " avg response time: " + ((float) totalResponseTime.get()) / totalRequest.get());

try {

TimeUnit.SECONDS.sleep(2);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

});

@Override

protected void channelRead0(ChannelHandlerContext ctx, ByteBuf msg) throws Exception {

totalResponseTime.addAndGet(System.currentTimeMillis() - msg.readLong());

totalRequest.incrementAndGet();

if (beginTime.compareAndSet(0, System.currentTimeMillis())) {

thread.start();

}

}

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

// 每隔1秒发送一次请求

ctx.executor().scheduleAtFixedRate(() -> {

ByteBuf byteBuf = ctx.alloc().ioBuffer();

byteBuf.writeLong(System.currentTimeMillis());

ctx.channel().writeAndFlush(byteBuf);

}, 0, 1, TimeUnit.SECONDS);

}

}

测试的qps如下,qps在500左右,平均响应时间不断增加。

qps: 20000 avg response time: 92.2

qps: 550 avg response time: 284.71674

qps: 568 avg response time: 875.98816

qps: 513 avg response time: 1262.3419

qps: 496 avg response time: 1874.6201

qps: 501 avg response time: 2421.7954

qps: 485 avg response time: 2907.4338

qps: 457 avg response time: 3244.938

qps: 481 avg response time: 4103.203

qps: 480 avg response time: 4645.6143

qps: 484 avg response time: 5207.447

qps: 487 avg response time: 5727.8823

因为业务逻辑都用Reactor线程来执行,会阻塞新的连接请求,导致整体的响应速度较慢。我们可以把执行业务逻辑这部分放到线程池中执行。

用自定义的业务线程池处理请求

Server类中的启动类设置为如下

bootstrap.group(bossGroup, workerGroup)

.channel(NioServerSocketChannel.class)

.childOption(ChannelOption.SO_REUSEADDR, true)

.childHandler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) throws Exception {

ChannelPipeline pipeline = ch.pipeline();

pipeline.addLast(new FixedLengthFrameDecoder(Long.BYTES));

pipeline.addLast(ServerBusinessThreadPoolHandler.INSTANCE);

}

});

ServerBusinessThreadPoolHandler类定义如下

@Slf4j

@ChannelHandler.Sharable

public class ServerBusinessThreadPoolHandler extends SimpleChannelInboundHandler<ByteBuf> {

public static final ChannelHandler INSTANCE = new ServerBusinessThreadPoolHandler();

private static ExecutorService threadPool = Executors.newFixedThreadPool(16);

@Override

protected void channelRead0(ChannelHandlerContext ctx, ByteBuf msg) throws Exception {

ByteBuf data = Unpooled.directBuffer();

data.writeBytes(msg);

threadPool.submit(() -> {

Object result = getResult(data);

ctx.channel().writeAndFlush(result);

});

}

private Object getResult(ByteBuf data) {

// 90.0% == 1ms

// 95.0% == 10ms

// 99.0% == 100ms

// 99.9% == 1000ms

int level = ThreadLocalRandom.current().nextInt(1, 1000);

int time;

if (level <= 900) {

time = 1;

} else if (level <= 950) {

time = 10;

} else if (level <= 990) {

time = 100;

} else {

time = 1000;

}

try {

TimeUnit.MILLISECONDS.sleep(time);

} catch (InterruptedException e) {

e.printStackTrace();

}

return data;

}

}

测试的qps如下,qps在1000左右,平均响应时间在200ms左右

qps: 5000 avg response time: 163.6

qps: 932 avg response time: 204.34776

qps: 970 avg response time: 283.8759

qps: 1018 avg response time: 265.13214

qps: 1012 avg response time: 236.31958

qps: 1010 avg response time: 210.48587

qps: 1008 avg response time: 185.67007

qps: 1006 avg response time: 181.65208

qps: 1005 avg response time: 184.55135

qps: 997 avg response time: 196.81935

qps: 983 avg response time: 221.25288

用线程池来执行业务逻辑这个功能很常见,所以Netty也提供了类似的功能

用Netty提供的线程池处理请求

定义一个businessGroup来处理业务逻辑,这样ServerBusinessHandler所有方法的调用都会在线程池中执行。

public class Server {

private final int port;

public Server(int port) {

this.port = port;

}

public void start() throws InterruptedException {

EventLoopGroup bossGroup = new NioEventLoopGroup();

EventLoopGroup workerGroup = new NioEventLoopGroup();

EventLoopGroup businessGroup = new NioEventLoopGroup(1000);

try {

ServerBootstrap bootstrap = new ServerBootstrap();

bootstrap.group(bossGroup, workerGroup)

.channel(NioServerSocketChannel.class)

.childOption(ChannelOption.SO_REUSEADDR, true)

.childHandler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) throws Exception {

ChannelPipeline pipeline = ch.pipeline();

pipeline.addLast(new FixedLengthFrameDecoder(Long.BYTES));

// 增加handler的时候,指定businessGroup

pipeline.addLast(businessGroup, ServerBusinessHandler.INSTANCE);

}

});

ChannelFuture future = bootstrap.bind(port).sync();

System.out.println("服务端启动了");

future.channel().closeFuture().sync();

} finally {

bossGroup.shutdownGracefully().sync();

workerGroup.shutdownGracefully().sync();

}

}

public static void main(String[] args) throws InterruptedException {

new Server(8080).start();

}

}

可以看到qps在1000左右,但是响应时间更短。因此可以预估用Netty提供的线程池qps会更大,只是因为这个demo每秒的请求量为1000

qps: 1000 avg response time: 98.0

qps: 1049 avg response time: 17.517094

qps: 1024 avg response time: 16.01046

qps: 1016 avg response time: 16.015198

qps: 1012 avg response time: 15.585056

qps: 1010 avg response time: 15.070582

qps: 1009 avg response time: 15.440399

qps: 1006 avg response time: 15.048695

qps: 1006 avg response time: 15.080034

qps: 1005 avg response time: 15.242393

qps: 1005 avg response time: 15.867775

对比一下

| 线程模型 | qps | 响应时间 |

|---|---|---|

| 在Reactor线程上直接处理请求 | 500 | 随着时间不断增加 |

| 用自定义的业务线程池处理请求 | 1000 | 200ms |

| 用Netty提供的线程池处理请求 | 1000 | 20ms |

Dubbo中的线程模型和线程池策略

因为要适应不同的场景,所有Dubbo提供了多种线程模型和线程池策略,相关配置如下

<dubbo:protocol name="dubbo" dispatcher="all" threadpool="fixed" threads="100" />

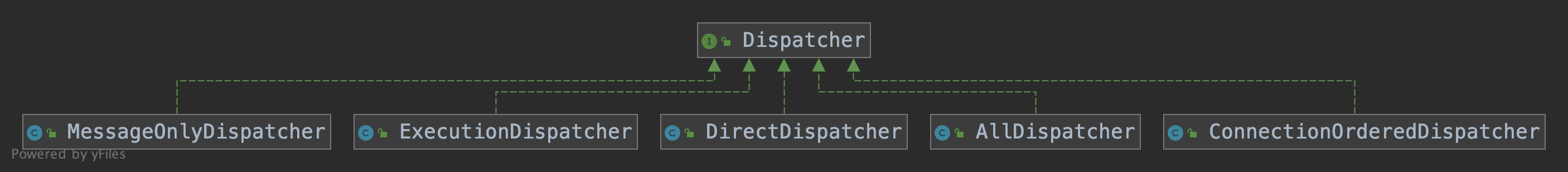

Dubbo提供的线程模型如下

| 类别 | 解释 |

|---|---|

| all | 所有消息都派发到线程池,包括请求,响应,连接事件,断开事件,心跳等 |

| direct | 所有消息都不派发到线程池,全部在 IO 线程上直接执行 |

| message | 只有请求响应消息派发到线程池,其它连接断开事件,心跳等消息,直接在 IO 线程上执行 |

| execution | 只有请求消息派发到线程池,不含响应,响应和其它连接断开事件,心跳等消息,直接在 IO 线程上执行 |

| connection | 在 IO 线程上,将连接断开事件放入队列,有序逐个执行,其它消息派发到线程池 |

看一下AllChannelHandler源码,其余类的源码类似,不再分析

public class AllChannelHandler extends WrappedChannelHandler {

public AllChannelHandler(ChannelHandler handler, URL url) {

super(handler, url);

}

/** 处理连接事件 */

@Override

public void connected(Channel channel) throws RemotingException {

ExecutorService cexecutor = getExecutorService();

try {

cexecutor.execute(new ChannelEventRunnable(channel, handler, ChannelState.CONNECTED));

} catch (Throwable t) {

throw new ExecutionException("connect event", channel, getClass() + " error when process connected event .", t);

}

}

/** 处理断开事件 */

@Override

public void disconnected(Channel channel) throws RemotingException {

ExecutorService cexecutor = getExecutorService();

try {

cexecutor.execute(new ChannelEventRunnable(channel, handler, ChannelState.DISCONNECTED));

} catch (Throwable t) {

throw new ExecutionException("disconnect event", channel, getClass() + " error when process disconnected event .", t);

}

}

/** 处理请求和响应消息,这里的 message 变量类型可能是 Request,也可能是 Response */

@Override

public void received(Channel channel, Object message) throws RemotingException {

ExecutorService cexecutor = getExecutorService();

try {

cexecutor.execute(new ChannelEventRunnable(channel, handler, ChannelState.RECEIVED, message));

} catch (Throwable t) {

// 省略部分代码

}

}

/** 处理异常信息 */

@Override

public void caught(Channel channel, Throwable exception) throws RemotingException {

ExecutorService cexecutor = getExecutorService();

try {

cexecutor.execute(new ChannelEventRunnable(channel, handler, ChannelState.CAUGHT, exception));

} catch (Throwable t) {

throw new ExecutionException("caught event", channel, getClass() + " error when process caught event .", t);

}

}

}

dubbo是如何确定使用哪种线程模型的?

public class NettyServer extends AbstractServer implements Server {

public NettyServer(URL url, ChannelHandler handler) throws RemotingException {

super(url, ChannelHandlers.wrap(handler, ExecutorUtil.setThreadName(url, SERVER_THREAD_POOL_NAME)));

}

}

public class ChannelHandlers {

private static ChannelHandlers INSTANCE = new ChannelHandlers();

protected ChannelHandlers() {

}

public static ChannelHandler wrap(ChannelHandler handler, URL url) {

return ChannelHandlers.getInstance().wrapInternal(handler, url);

}

protected static ChannelHandlers getInstance() {

return INSTANCE;

}

static void setTestingChannelHandlers(ChannelHandlers instance) {

INSTANCE = instance;

}

protected ChannelHandler wrapInternal(ChannelHandler handler, URL url) {

return new MultiMessageHandler(new HeartbeatHandler(ExtensionLoader.getExtensionLoader(Dispatcher.class)

.getAdaptiveExtension().dispatch(handler, url)));

}

}

创建NettyServe,初始化handler的时候,ChannelHandlers#warp方法最后会调用到wrapInternal方法,通过SPI确定了Dispatcher的类型,默认为all

@SPI(AllDispatcher.NAME)

public interface Dispatcher {

@Adaptive({

Constants.DISPATCHER_KEY, "dispather", "channel.handler"})

ChannelHandler dispatch(ChannelHandler handler, URL url);

}

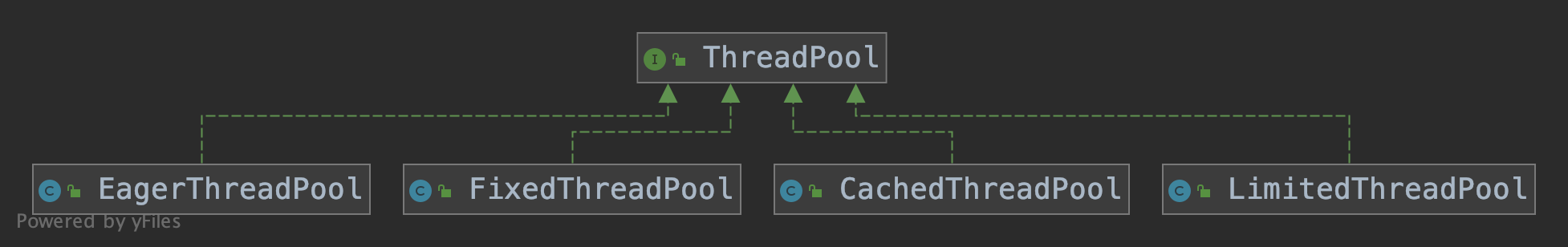

提供的线程池如下

| 类别 | 解释 |

|---|---|

| fixed | 固定大小线程池,启动时建立线程,不关闭,一直持有 |

| cached | 缓存线程池,空闲一分钟自动删除,需要时重建 |

| limited | 可伸缩线程池,但池中的线程数只会增长不会收缩。只增长不收缩的目的是为了避免收缩时突然来了大流量引起的性能问题 |

| eager | 优先创建Worker线程池。在任务数量大于corePoolSize但是小于maximumPoolSize时,优先创建Worker来处理任务。当任务数量大于maximumPoolSize时,将任务放入阻塞队列中。阻塞队列充满时抛出RejectedExecutionException。(相比于cached:cached在任务数量超过maximumPoolSize时直接抛出异常而不是将任务放入阻塞队列) |

public class FixedThreadPool implements ThreadPool {

@Override

public Executor getExecutor(URL url) {

// 获取线程池中线程的名称前缀

String name = url.getParameter(Constants.THREAD_NAME_KEY, Constants.DEFAULT_THREAD_NAME);

// 获取线程个数

int threads = url.getParameter(Constants.THREADS_KEY, Constants.DEFAULT_THREADS);

// 获取线程池队列大小

int queues = url.getParameter(Constants.QUEUES_KEY, Constants.DEFAULT_QUEUES);

// 线程数为0,使用SynchronousQueue。小于0,使用无界队列,大于0,使用有界队列

return new ThreadPoolExecutor(threads, threads, 0, TimeUnit.MILLISECONDS,

queues == 0 ? new SynchronousQueue<Runnable>() :

(queues < 0 ? new LinkedBlockingQueue<Runnable>()

: new LinkedBlockingQueue<Runnable>(queues)),

new NamedInternalThreadFactory(name, true), new AbortPolicyWithReport(name, url));

}

}

代码类似,都是创建线程池,只不过参数有可能不同

dubbo是如何确定使用哪种线程池的?

以AllChannelHandler为例

public class AllChannelHandler extends WrappedChannelHandler {

public AllChannelHandler(ChannelHandler handler, URL url) {

super(handler, url);

}

}

public WrappedChannelHandler(ChannelHandler handler, URL url) {

this.handler = handler;

this.url = url;

// 获取线程池类型

executor = (ExecutorService) ExtensionLoader.getExtensionLoader(ThreadPool.class).getAdaptiveExtension().getExecutor(url);

// 省略部分代码

}

在AllChannelHandler构造函数中确定了线程池类型,默认的类型为FixedThreadPool

@SPI("fixed")

public interface ThreadPool {

@Adaptive({

Constants.THREADPOOL_KEY})

Executor getExecutor(URL url);

}

参考博客

[1]http://dubbo.apache.org/zh-cn/docs/user/demos/thread-model.html