Webot 与ROS通讯

在前面的三篇博客中我们实现了使用RGB-D相机进行构建稠密的点云地图,由于RGB-D相机无法在室外使用,而自动驾驶的汽车多装配有双目相机。因此,在这最后一篇博客中我们实现使用双目相机构建稠密的点云地图。

ORB-SLAM2 在线构建稠密点云(一)

ORB-SLAM2 在线构建稠密点云(二)

ORB-SLAM2 在线构建稠密点云(三)

我们使用的框架还是不变,依旧是将ORB-SLAM作为位姿估计的节点,然后地图构建作为一个节点,只是在这个情况中我们需要增加一个双目立体匹配的节点,该节点是将双目相机获取到的图像实时的计算出一帧深度图像。最后再由建图节点完成拼接,以生产全局一致的点云地图。(增加了图中红色的模块)

1 ORB-SLAM2 双目在线运行

1.1 KITTI数据集运行

export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:/home/crp/crp/SLAM/ORB_SLAM2/Examples/ROS

rosrun ORB_SLAM2 Stereo Vocabulary/ORBvoc.txt Examples/Stereo/KITTI04-12.yaml false

1.2 Indemind 双目相机在线运行

双目相机我们使用的是Indemind的双目相机,这个相机是一个国产的高帧率全曝光相机,驱动安装方式可以参见博客(INDEMIND 双目相机使用教程)。

1.2.1 添加ROS节点文件

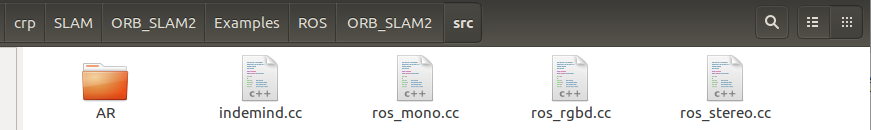

修改一下 Examples/ROS/ORB_SLAM2/src 目录下的文件。我们复制 ros_stereo.cc 文件然后重命名为 indemind.cc

在 indemind.cc 填入以下内容:

#include<iostream>

#include<algorithm>

#include<fstream>

#include<chrono>

#include <ros/ros.h>

#include <ros/spinner.h>

#include <sensor_msgs/CameraInfo.h>

#include <sensor_msgs/Image.h>

#include <sensor_msgs/PointCloud2.h>

#include <nav_msgs/Path.h>

#include <tf/transform_broadcaster.h>

#include <cv_bridge/cv_bridge.h>

#include <cv_bridge/cv_bridge.h>

#include <message_filters/subscriber.h>

#include <message_filters/time_synchronizer.h>

#include <message_filters/sync_policies/approximate_time.h>

#include<opencv2/core/core.hpp>

#include"../../../include/System.h"

using namespace std;

class ImageGrabber

{

public:

ros::NodeHandle nh;

ros::Publisher pub_rgb,pub_depth,pub_tcw,pub_camerapath;

size_t mcounter=0;

nav_msgs::Path camerapath;

ImageGrabber(ORB_SLAM2::System* pSLAM):mpSLAM(pSLAM),nh("~")

{

//创建ROS的发布节点

pub_rgb= nh.advertise<sensor_msgs::Image> ("Left/Image", 10);

pub_depth= nh.advertise<sensor_msgs::Image> ("Right/Image", 10);

pub_tcw= nh.advertise<geometry_msgs::PoseStamped> ("CameraPose", 10);

pub_camerapath= nh.advertise<nav_msgs::Path> ("Path", 10);

}

void GrabStereo(const sensor_msgs::Image::ConstPtr msgLeft,const sensor_msgs::Image::ConstPtr msgRight);

ORB_SLAM2::System* mpSLAM;

bool do_rectify;

cv::Mat M1l,M2l,M1r,M2r;

};

int main(int argc, char **argv)

{

ros::init(argc, argv, "STEREO");

ros::start();

if(argc != 4)

{

cerr << endl << "Usage: rosrun ORB_SLAM2 Stereo path_to_vocabulary path_to_settings do_rectify" << endl;

ros::shutdown();

return 1;

}

// Create SLAM system. It initializes all system threads and gets ready to process frames.

ORB_SLAM2::System SLAM(argv[1],argv[2],ORB_SLAM2::System::STEREO,true);

ImageGrabber igb(&SLAM);

stringstream ss(argv[3]);

ss >> boolalpha >> igb.do_rectify;

if(igb.do_rectify)

{

// Load settings related to stereo calibration

cv::FileStorage fsSettings(argv[2], cv::FileStorage::READ);

if(!fsSettings.isOpened())

{

cerr << "ERROR: Wrong path to settings" << endl;

return -1;

}

cv::Mat K_l, K_r, P_l, P_r, R_l, R_r, D_l, D_r;

fsSettings["LEFT.K"] >> K_l;

fsSettings["RIGHT.K"] >> K_r;

fsSettings["LEFT.P"] >> P_l;

fsSettings["RIGHT.P"] >> P_r;

fsSettings["LEFT.R"] >> R_l;

fsSettings["RIGHT.R"] >> R_r;

fsSettings["LEFT.D"] >> D_l;

fsSettings["RIGHT.D"] >> D_r;

int rows_l = fsSettings["LEFT.height"];

int cols_l = fsSettings["LEFT.width"];

int rows_r = fsSettings["RIGHT.height"];

int cols_r = fsSettings["RIGHT.width"];

if(K_l.empty() || K_r.empty() || P_l.empty() || P_r.empty() || R_l.empty() || R_r.empty() || D_l.empty() || D_r.empty() ||

rows_l==0 || rows_r==0 || cols_l==0 || cols_r==0)

{

cerr << "ERROR: Calibration parameters to rectify stereo are missing!" << endl;

return -1;

}

cv::initUndistortRectifyMap(K_l,D_l,R_l,P_l.rowRange(0,3).colRange(0,3),cv::Size(cols_l,rows_l),CV_32F,igb.M1l,igb.M2l);

cv::initUndistortRectifyMap(K_r,D_r,R_r,P_r.rowRange(0,3).colRange(0,3),cv::Size(cols_r,rows_r),CV_32F,igb.M1r,igb.M2r);

}

ros::NodeHandle nh;

message_filters::Subscriber<sensor_msgs::Image> left_sub(nh, "/indemind/left/image_raw", 1);

message_filters::Subscriber<sensor_msgs::Image> right_sub(nh, "/indemind/right/image_raw", 1);

typedef message_filters::sync_policies::ApproximateTime<sensor_msgs::Image, sensor_msgs::Image> sync_pol;

message_filters::Synchronizer<sync_pol> sync(sync_pol(10), left_sub,right_sub);

sync.registerCallback(boost::bind(&ImageGrabber::GrabStereo,&igb,_1,_2));

ros::spin();

// Stop all threads

SLAM.Shutdown();

// Save camera trajectory

SLAM.SaveKeyFrameTrajectoryTUM("KeyFrameTrajectory_TUM_Format.txt");

SLAM.SaveTrajectoryTUM("FrameTrajectory_TUM_Format.txt");

SLAM.SaveTrajectoryKITTI("FrameTrajectory_KITTI_Format.txt");

ros::shutdown();

return 0;

}

void ImageGrabber::GrabStereo(const sensor_msgs::Image::ConstPtr msgLeft,const sensor_msgs::Image::ConstPtr msgRight)

{

// Copy the ros image message to cv::Mat.

cv_bridge::CvImageConstPtr cv_ptrLeft;

try

{

cv_ptrLeft = cv_bridge::toCvShare(msgLeft);

}

catch (cv_bridge::Exception& e)

{

ROS_ERROR("cv_bridge exception: %s", e.what());

return;

}

cv_bridge::CvImageConstPtr cv_ptrRight;

try

{

cv_ptrRight = cv_bridge::toCvShare(msgRight);

}

catch (cv_bridge::Exception& e)

{

ROS_ERROR("cv_bridge exception: %s", e.what());

return;

}

bool isKeyFrame =true;

cv::Mat Tcw;

if(do_rectify)

{

cv::Mat imLeft, imRight;

cv::remap(cv_ptrLeft->image,imLeft,M1l,M2l,cv::INTER_LINEAR);

cv::remap(cv_ptrRight->image,imRight,M1r,M2r,cv::INTER_LINEAR);

Tcw=mpSLAM->TrackStereo(imLeft,imRight,cv_ptrLeft->header.stamp.toSec());

// cv::imshow("left",imLeft);

// cv::imshow("right",imRight);

// cv::waitKey(1);

}

else

{

Tcw=mpSLAM->TrackStereo(cv_ptrLeft->image,cv_ptrRight->image,cv_ptrLeft->header.stamp.toSec());

}

if (!Tcw.empty())

{

//cv::Mat Twc =Tcw.inv();

//cv::Mat TWC=orbslam->mpTracker->mCurrentFrame.mTcw.inv();

cv::Mat RWC= Tcw.rowRange(0,3).colRange(0,3);

cv::Mat tWC= Tcw.rowRange(0,3).col(3);

tf::Matrix3x3 M(RWC.at<float>(0,0),RWC.at<float>(0,1),RWC.at<float>(0,2),

RWC.at<float>(1,0),RWC.at<float>(1,1),RWC.at<float>(1,2),

RWC.at<float>(2,0),RWC.at<float>(2,1),RWC.at<float>(2,2));

tf::Vector3 V(tWC.at<float>(0), tWC.at<float>(1), tWC.at<float>(2));

tf::Quaternion q;

M.getRotation(q);

tf::Pose tf_pose(q,V);

double roll,pitch,yaw;

M.getRPY(roll,pitch,yaw);

//cout<<"roll: "<<roll<<" pitch: "<<pitch<<" yaw: "<<yaw;

// cout<<" t: "<<tWC.at<float>(0)<<" "<<tWC.at<float>(1)<<" "<<tWC.at<float>(2)<<endl;

if(roll == 0 || pitch==0 || yaw==0)

return ;

// ------

std_msgs::Header header ;

header.stamp =msgLeft->header.stamp;

header.seq = msgLeft->header.seq;

header.frame_id="camera";

//cout<<"depth type: "<< depth. type()<<endl;

sensor_msgs::Image::ConstPtr rgb_msg = msgLeft;

sensor_msgs::Image::ConstPtr depth_msg=msgRight;

geometry_msgs::PoseStamped tcw_msg;

tcw_msg.header=header;

tf::poseTFToMsg(tf_pose, tcw_msg.pose);

camerapath.header =header;

camerapath.poses.push_back(tcw_msg);

pub_tcw.publish(tcw_msg); //Tcw位姿信息

pub_camerapath.publish(camerapath); //相机轨迹

if( isKeyFrame)

{

pub_rgb.publish(rgb_msg);

pub_depth.publish(depth_msg);

}

}

else

{

cout<<"Twc is empty ..."<<endl;

}

}

修改 Examples/ROS/ORB_SLAM2 目录下的 CMakeLists.txt 文件,增加如下内容:

1.2.2 修改配置文件

a. 修改接收的topic为 "/indemind/left/image_raw " 和 “/indemind/right/image_raw”

b. 重新用 ./build_ros.sh 脚本编译

c. 在 ORB_SLAM2/Examples/Stereo 目录下新建文件 INDEMIND.yaml ,根据你使用的相机矫正参数,修改左右目相机的内参数

在配置参数的时候有几个坑需要大家注意,在github上对ORB_SLAM2的运行参数有说明(Calibration and setting suggestions #89 ),其次在CSDN博客 EwenWanW 博主的文章的文章也有解释。

- Camera.bf 中的b指基线baseline(单位:米),f 是焦距fx(x轴和y轴差距不大),bf=b*f,和ThDepth一起决定了深度点的范围:bf * ThDepth / fx,即大致为b * ThDepth。例如,在EuRoC.yaml中 fx为435.2,基线长度是11cm(也就是0.11m),因此的bf为47.9。又因为有效深度=bf * ThDepth / fx = 3.85米,因此计算出ThDepth为35;

其次,由于我用的是indemind的双目相机,这个相机的驱动是我自己在官方基础上修改的,输出的图像是去畸变的图像,因此需要把 INDEMIND.yaml 文件里面的,畸变参数 LEFT.D 、RIGHT.D 设置为0,其他参数保留相机的校准参数。我所使用的参数文件如下:(相机内参由官方提供)

%YAML:1.0

#--------------------------------------------------------------------------------------------

# Camera Parameters. Adjust them!

#--------------------------------------------------------------------------------------------

# Camera calibration and distortion parameters (OpenCV)

Camera.fx: 488.336

Camera.fy: 488.336

Camera.cx: 644.347

Camera.cy: 401.562

Camera.k1: 0.0

Camera.k2: 0.0

Camera.p1: 0.0

Camera.p2: 0.0

Camera.width: 1280

Camera.height: 800

# Camera frames per second

Camera.fps: 50.0

# stereo baseline times fx

Camera.bf: 58.60032

# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)

Camera.RGB: 1

# Close/Far threshold. Baseline times.

ThDepth: 29

#--------------------------------------------------------------------------------------------

# Stereo Rectification. Only if you need to pre-rectify the images.

# Camera.fx, .fy, etc must be the same as in LEFT.P

#--------------------------------------------------------------------------------------------

LEFT.height: 800

LEFT.width: 1280

LEFT.D: !!opencv-matrix

rows: 1

cols: 5

dt: d

data:[0.00, 0.0, 0.0, 0.0, 0.0]

LEFT.K: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [488.336, 0.0, 644.347, 0.0, 488.343, 401.5618, 0.0, 0.0, 1.0]

LEFT.R: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 0.99994682029098447, 0.0042379807775701547, 0.00940192048883879, -0.0041952912163475201, 0.99998082283423240, -0.00455560044138561203, -0.0094210467337519227, 0.0045159143814361286,

0.99994542370858408 ]

LEFT.P: !!opencv-matrix

rows: 3

cols: 4

dt: d

data: [518.5203729276, 0, 645.250058581, 0, 0, 518.5203729276, 416.3532389988, 0, 0, 0, 1, 0]

RIGHT.height: 800

RIGHT.width: 1280

RIGHT.D: !!opencv-matrix

rows: 1

cols: 5

dt: d

data:[0.0, 0.0, 0.0, 0.0, 0.0]

RIGHT.K: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [490.450, 0.0, 649.103, 0.0, 489.989, 429.680, 0.0, 0.0, 1]

RIGHT.R: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [0.99998560954804505, 0.00493522221472591, -0.0021033968993112787, -0.0049256307871090041, 0.99997755842320279, 0.0045410143491452538, 0.0021257606106615196, -0.0045305884453716748,

0.99998747737667426 ]

RIGHT.P: !!opencv-matrix

rows: 3

cols: 4

dt: d

data: [518.5203729276, 0, 645.250058581, -623.895187325, 0, 518.5203729276, 416.3532389988, 0, 0, 0, 1, 0]

#--------------------------------------------------------------------------------------------

# ORB Parameters

#--------------------------------------------------------------------------------------------

# ORB Extractor: Number of features per image

ORBextractor.nFeatures: 1200

# ORB Extractor: Scale factor between levels in the scale pyramid

ORBextractor.scaleFactor: 1.2

# ORB Extractor: Number of levels in the scale pyramid

ORBextractor.nLevels: 8

# ORB Extractor: Fast threshold

# Image is divided in a grid. At each cell FAST are extracted imposing a minimum response.

# Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST

# You can lower these values if your images have low contrast

ORBextractor.iniThFAST: 20

ORBextractor.minThFAST: 7

#--------------------------------------------------------------------------------------------

# Viewer Parameters

#--------------------------------------------------------------------------------------------

Viewer.KeyFrameSize: 0.05

Viewer.KeyFrameLineWidth: 1

Viewer.GraphLineWidth: 0.9

Viewer.PointSize:2

Viewer.CameraSize: 0.08

Viewer.CameraLineWidth: 3

Viewer.ViewpointX: 0

Viewer.ViewpointY: -0.7

Viewer.ViewpointZ: -1.8

Viewer.ViewpointF: 500

1.2.3 启动运行双目在线节点

打开两个终端输入以下内容,启动节点

export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:/home/crp/crp/SLAM/ORB_SLAM2/Examples/ROS

rosrun ORB_SLAM2 indemind Vocabulary/ORBvoc.txt Examples/Stereo/INDEMIND.yaml true

sudo -s

cd ~/catkin_ws

source devel/setup.bash

roslaunch indemind_stereo stereo.launch

该节点一共会发布4个topic, 以下是测试效果:

/STEREO/CameraPose 和 /STEREO/Path 分别是发布相机的位姿和轨迹

/STEREO/Left/Image 和 /STEREO/Right/Image 这是用于发布关键帧的图像帧

实际中我们发现indemind相机的效果不如ZED相机效果好。

2 添加ELAS 节点

elas库的配置方法请参考我的博客(双目相机计算稠密深度点云(一)),需将elas_ros和pointcloud_mapping放置于同一个ROS工作空间。

3 修改建图节点

由于elas发布的直接是图像对应的点云,因此我们需要在建图节点中新建一个cpp文件或者在原来的代码基础上修改为接收点云Topic和位姿Topic,示例代码如下:

void callback_pointcloud(const sensor_msgs::PointCloud2::ConstPtr msgPointCloud,

const geometry_msgs::PoseStamped::ConstPtr tcw )

{

geometry_msgs::PoseStamped Tcw=*tcw;

Eigen::Quaterniond q =Eigen::Quaterniond(Tcw.pose.orientation.w,Tcw.pose.orientation.x,Tcw.pose.orientation.y,Tcw.pose.orientation.z) ;

Eigen::AngleAxisd V6(q);

// V6.fromRotationMatrix<double,3,3>(q.toRotationMatrix());

Eigen::Isometry3d T = Eigen::Isometry3d::Identity( ); // 三维变换矩阵

T.rotate(V6); // 旋转部分赋值

T(0,3)= Tcw.pose.position.x;

T(1,3)= Tcw.pose.position.y;

T(2,3)= Tcw.pose.position.z;

// 已测试接受到的数据没有问题

//cout<<"T:"<<T.matrix()<<endl;

PointCloud::Ptr tem_cloud1( new PointCloud() );

pcl::fromROSMsg(*msgPointCloud, *tem_cloud1);

mvGlobalPointClouds.push_back(*tem_cloud1); //存储关键帧对应的点云序列

mvGlobalPointCloudsPose.push_back(T);

unique_lock<mutex> lck(keyframeMutex);

{

mGlobalPointCloudID++;

mbKeyFrameUpdate =true;

}

cout<<"receive a keyframe, id = "<<mGlobalPointCloudID<<" map size="<<tem_cloud1->points.size()<<endl; ;

}

pcl::PointCloud< PointT >::Ptr generatePointCloud( int index_i)

{

PointCloud::Ptr tmp( new PointCloud() );

*tmp = mvGlobalPointClouds[index_i];

Eigen::Isometry3d T = mvGlobalPointCloudsPose[index_i];

// 已测试接受到的数据没有问题

//cout<<"T:"<<T.matrix()<<endl;

if(tmp->empty() )

return nullptr;

//cout<<"T:"<<T.matrix()<<endl;

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

PointCloud::Ptr cloud_voxel_tem(new PointCloud);

tmp->is_dense = false;

voxel.setInputCloud( tmp );

//voxel.setLeafSize( mresolution, mresolution, mresolution);

voxel.filter( *cloud_voxel_tem );

//cloud_after_PassThrough->swap( *cloud_voxel_tem );

mLastGlobalPointCloudID++; //用于指示处理到哪一个位置

PointCloud::Ptr cloud1(new PointCloud);

pcl::transformPointCloud( *cloud_voxel_tem, *cloud1, T.matrix());

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>( t2-t1 );

cout<<GREEN<<"generate point cloud from kf-ID:"<<mLastGlobalPointCloudID<<", size="<<cloud1->points.size()

<<", cost time: "<<time_used.count()*1000<<" ms ."<<WHITE<<endl;

return cloud1;

}

/**

* 原来版本的显示函数

* 由于随着尺寸的增加以后,显示函数会异常退出

*/

void viewer(void)

{

pcl::visualization::CloudViewer pcl_viewer("viewer");

//pcl::visualization::PCLVisualizer viewer;

//viewer.setBackgroundColor(100, 100, 100); // rgb

size_t N=0,i=0;

bool KFUpdate=false;

cv::namedWindow("pointcloud");

//pcl::visualization::KeyboardEvent event;

//ros::AsyncSpinner spinner(2); // Use 1threads

spinner.start();

while(ros::ok())

{

{

unique_lock<mutex> lck_shutdown( shutDownMutex );

if (shutDownFlag)

{

break;

}

}

// keyframe is updated

KFUpdate=false;

{

unique_lock<mutex> lck( keyframeMutex );

N =mvGlobalPointCloudsPose.size();

KFUpdate=mbKeyFrameUpdate;

mbKeyFrameUpdate=false;

}

if(KFUpdate || N>lastKeyframeSize)

{

for ( i=lastKeyframeSize; i<N && i<(lastKeyframeSize+5); i++ )

{

PointCloud::Ptr cloud1( new PointCloud() );

cloud1 = generatePointCloud(i);

*globalMap += *cloud1;

//sensor_msgs::PointCloud2 local;

//pcl::toROSMsg(*cloud1,local);// 转换成ROS下的数据类型 最终通过topic发布

//local.header.stamp=ros::Time::now();

//local.header.frame_id ="world";

//pub_local_pointcloud.publish(local);

//pcl_viewer.showCloud( cloud1 );

}

int buff_length=150;

if(i > (buff_length+5))

{

unique_lock<mutex> lck( deletekeyframeMutex );

mvGlobalPointCloudsPose.erase(mvGlobalPointCloudsPose.begin(),mvGlobalPointCloudsPose.begin()+buff_length);

mvGlobalPointClouds.erase(mvGlobalPointClouds.begin(),mvGlobalPointClouds.begin()+buff_length);

i=i-buff_length;

cout<<RED<<"delete keyframe ...."<<WHITE<<endl;

}

// publish global pointcloud map in ROS topic

// sensor_msgs::PointCloud2 output;

// pcl::toROSMsg(*globalMap,output);// 转换成ROS下的数据类型 最终通过topic发布

// output.header.stamp=ros::Time::now();

// output.header.frame_id ="world";

// pub_global_pointcloud.publish(output);

pcl_viewer.showCloud( globalMap );

cout<<"show global map, size="<<globalMap->points.size()<<endl;

// if(((N-lastKeyframeSize)>2 )&& (globalMap->points.size()>0))

// if((N==i )&& (globalMap->points.size()>0))

// shutdown(); //处理到最后一帧的时候保存点云,写文件很耗时

lastKeyframeSize = i;

//viewer.addPointCloud(globalMap, "globalMap");

// viewer.spin();

}

// if (event.keyDown())

// {

// //打印出按下的按键信息

// cout << event.getKeySym() << endl;

// }

int key_value=cv::waitKey(1);

switch (key_value)

{

case 's':

cout<<"key_value:"<<key_value<<endl;

shutdown();

break;

// case 'S': cout<<"key_value:"<<key_value<<endl; break;

// case '\r':break;

// case 0x18:break;//cancel

// case 0x1B:break;//escape

default:break;

}

}

shutdown();

spinner.stop();

}

这里在之前的教程中我只更新了以下3个函数:

void viewer(void)

void callback_pointcloud(const sensor_msgs::PointCloud2::ConstPtr msgPointCloud,

const geometry_msgs::PoseStamped::ConstPtr tcw )

pcl::PointCloud< PointT >::Ptr generatePointCloud( int index_i)

改为了同时接收点云和位姿。

以下是使用双目相机在校园里面重建的效果,(使用的是关键帧保障ELAS的计算实时)

在KITTI数据集上的效果:

完整的代码将在后续贴出来!

参考资料

[1] Geiger A, Roser M, Urtasun R. Efficient large-scale stereo matching[C]//Asian conference on computer vision. Springer, Berlin, Heidelberg, 2010: 25-38.