Integrating the Baidu voice assistant into a Fragment: how to avoid the pitfalls

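Integrating into a Fragment is actually the more practical case, but I could not find a detailed tutorial for it, so I dug into the problem myself and, after a lot of trial and error, finally got it working.

First you still need to do the usual setup: import the SDK packages, adjust the versions, and so on. If any of that is unfamiliar, see the earlier post "百度语音识别集成到HelloWorld" (integrating Baidu speech recognition into HelloWorld). Once that step is done, go back to the Fragment you want to integrate into. Here is the layout of my Fragment: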

<?xml version="1.0" encoding="utf-8"?>
<FrameLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context=".VcFragment">

    <RelativeLayout
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        tools:ignore="UselessParent">

        <ImageView
            android:id="@+id/iv_vc"
            android:layout_width="100dp"
            android:layout_height="100dp"
            android:layout_alignParentEnd="true"
            android:layout_alignParentBottom="true"
            android:layout_marginEnd="154dp"
            android:layout_marginBottom="160dp"
            android:contentDescription="@string/to"
            android:src="@drawable/ic_mkf" />

        <TextView
            android:id="@+id/tv_vcResult"
            android:layout_width="match_parent"
            android:layout_height="wrap_content"
            android:layout_alignParentTop="true"
            android:layout_alignParentEnd="true"
            android:layout_centerVertical="true"
            android:layout_marginTop="163dp"
            android:layout_marginEnd="0dp"
            android:text="@string/ress"
            android:textAlignment="center"
            android:textColor="@color/colorPrimary"
            android:textSize="20sp"
            android:textStyle="bold" />

    </RelativeLayout>

</FrameLayout>

The logic code is as follows:

import android.Manifest;
import android.annotation.SuppressLint;
import android.content.pm.PackageManager;
import android.os.Bundle;
import android.os.Handler;
import android.os.Message;
import android.view.LayoutInflater;
import android.view.MotionEvent;
import android.view.View;
import android.view.ViewGroup;
import android.widget.ImageView;
import android.widget.TextView;
import android.widget.Toast;

import androidx.annotation.NonNull;
import androidx.annotation.Nullable;
import androidx.core.app.ActivityCompat;
import androidx.core.content.ContextCompat;
import androidx.fragment.app.Fragment;

import com.baidu.aip.asrwakeup3.core.recog.MyRecognizer;
import com.baidu.aip.asrwakeup3.core.recog.listener.IRecogListener;
import com.baidu.aip.asrwakeup3.core.recog.listener.MessageStatusRecogListener;
import com.baidu.speech.EventManager;
import com.baidu.speech.asr.SpeechConstant;

import org.json.JSONException;
import org.json.JSONObject;

import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Objects;

/**
 * A simple {@link Fragment} subclass.
 */
public class VcFragment extends Fragment {

    public VcFragment() {
        // Required empty public constructor
    }

    private TextView tv_vcResult;
    // public EventManager asr;
    protected MyRecognizer myRecognizer;
    protected Handler handler;
    protected String resultTxt = null;

    @SuppressLint({"ClickableViewAccessibility", "HandlerLeak"})
    @Override
    public View onCreateView(@NonNull LayoutInflater inflater, @Nullable ViewGroup container,
                             @Nullable Bundle savedInstanceState) {
        // Inflate the layout for this fragment
        View myview = inflater.inflate(R.layout.fragment_vc, container, false);

        // Create a Handler bound to the main thread to receive the recognizer's messages
        handler = new Handler(requireContext().getMainLooper()) {
            @Override
            public void handleMessage(Message msg) {
                super.handleMessage(msg);
                handleMsg(msg);
            }
        };

        tv_vcResult = myview.findViewById(R.id.tv_vcResult);
        ImageView iv_vc = myview.findViewById(R.id.iv_vc);

        // Press and hold the microphone icon to talk, release to stop
        iv_vc.setOnTouchListener(new View.OnTouchListener() {
            @Override
            public boolean onTouch(View v, MotionEvent event) {
                int action = event.getAction();
                if (action == MotionEvent.ACTION_DOWN) {
                    tv_vcResult.setText("Recognizing...");
                    // Toast.makeText(getContext(), "Recognizing....", Toast.LENGTH_SHORT).show();
                    start();
                } else if (action == MotionEvent.ACTION_UP || action == MotionEvent.ACTION_CANCEL) {
                    stop();
                }
                // Consume the gesture. The ImageView is not clickable, so returning false
                // on ACTION_DOWN would keep ACTION_UP from ever reaching this listener.
                return true;
            }
        });

        initSpeechRecog();
        initPermission();
        return myview;
    }

    // Initialize speech recognition
    private void initSpeechRecog() {
        IRecogListener listener = new MessageStatusRecogListener(handler);
        if (myRecognizer == null) {
            myRecognizer = new MyRecognizer(this.getContext(), listener);
        }
    }

    // Start recognition
    private void start() {
        final Map<String, Object> params = new LinkedHashMap<>();
        params.put(SpeechConstant.ACCEPT_AUDIO_VOLUME, false);
        params.put(SpeechConstant.PID, 1536); // Mandarin
        myRecognizer.start(params);
    }

    // Stop recognition
    private void stop() {
        myRecognizer.stop();
    }

    // Handle the callback messages posted by the recognizer
    private void handleMsg(Message msg) {
        if (msg.what == MessageStatusRecogListener.STATUS_FINISHED) {
            // String resultTxt = null;
            try {
                JSONObject msgObj = new JSONObject(msg.obj.toString());
                if (msg.arg2 == 1) {
                    String error = msgObj.getString("error");
                    System.out.println("error =>" + error);
                    // "0" means the recognition finished without error
                    if ("0".equals(error)) {
                        resultTxt = msgObj.getString("best_result");
                        tv_vcResult.setText(resultTxt);
                    }
                }
            } catch (JSONException e) {
                e.printStackTrace();
            }
        }
    }

    // Release resources
    @Override
    public void onDestroy() {
        super.onDestroy();
        if (myRecognizer != null) {
            myRecognizer.release(); // release the recognizer, otherwise the app will crash
            myRecognizer = null;
        }
    }

    // Request the permissions the recognizer needs
    private void initPermission() {
        String[] permissions = {
                Manifest.permission.RECORD_AUDIO,
                Manifest.permission.ACCESS_NETWORK_STATE,
                Manifest.permission.INTERNET,
                Manifest.permission.READ_PHONE_STATE,
                Manifest.permission.WRITE_EXTERNAL_STORAGE,
                Manifest.permission.MODIFY_AUDIO_SETTINGS,
                Manifest.permission.ACCESS_WIFI_STATE,
                Manifest.permission.CHANGE_WIFI_STATE
        };

        ArrayList<String> toApplyList = new ArrayList<>();
        for (String perm : permissions) {
            if (PackageManager.PERMISSION_GRANTED != ContextCompat.checkSelfPermission(requireContext(), perm)) {
                // Reaching here means this permission has not been granted yet
                toApplyList.add(perm);
            }
        }

        if (!toApplyList.isEmpty()) {
            // Request through the Fragment (not ActivityCompat) so that the result
            // is delivered to this Fragment's onRequestPermissionsResult
            requestPermissions(toApplyList.toArray(new String[0]), 123);
        }
    }

    @Override
    public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {
        // Callback for runtime permission requests on Android 6.0 and above; implement as needed.
    }
}
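
The permission callback in the listing is left empty. As a rough idea of what it could do, here is a minimal sketch in plain Java (so it runs outside Android) that works out which requested permissions were denied. The class name `PermissionResults` and the method `denied` are my own hypothetical names, not part of the Baidu SDK or Android; the constant only mirrors the value of `PackageManager.PERMISSION_GRANTED`.

```java
import java.util.ArrayList;
import java.util.List;

public class PermissionResults {
    // Same value as android.content.pm.PackageManager.PERMISSION_GRANTED (0),
    // copied here so the sketch does not depend on the Android SDK.
    public static final int PERMISSION_GRANTED = 0;

    /** Returns the permissions that were not granted, in the order they were requested. */
    public static List<String> denied(String[] permissions, int[] grantResults) {
        List<String> denied = new ArrayList<>();
        // Both arrays can be empty when the request is interrupted, so only
        // walk the part that both arrays actually cover.
        int n = Math.min(permissions.length, grantResults.length);
        for (int i = 0; i < n; i++) {
            if (grantResults[i] != PERMISSION_GRANTED) {
                denied.add(permissions[i]);
            }
        }
        return denied;
    }

    public static void main(String[] args) {
        String[] asked = {"android.permission.RECORD_AUDIO", "android.permission.READ_PHONE_STATE"};
        int[] results = {0, -1}; // first granted, second denied
        System.out.println(denied(asked, results)); // prints [android.permission.READ_PHONE_STATE]
    }
}
```

Inside `onRequestPermissionsResult` you could check that `requestCode` is 123, call a helper like this, and show a hint if `Manifest.permission.RECORD_AUDIO` is in the returned list, since recording cannot work without it. Keep in mind that the Fragment's callback only fires when the request is issued through the Fragment's own `requestPermissions`; a request made with `ActivityCompat.requestPermissions(getActivity(), ...)` is delivered to the host Activity instead.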

After this you can see the recognition results in your own logs, but the TextView does not react at all. What now?

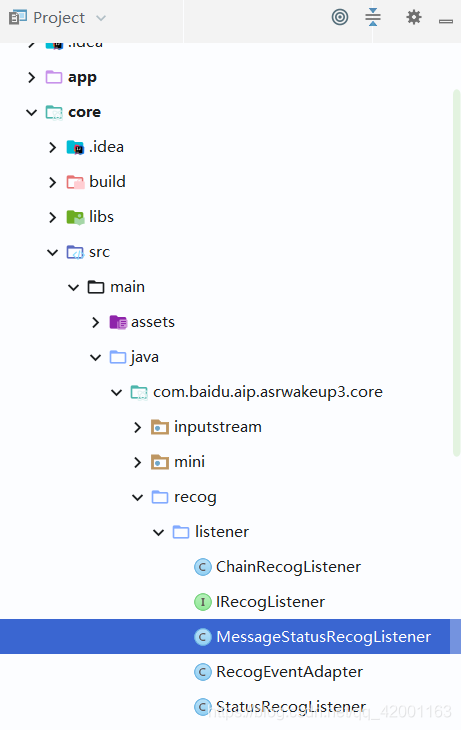

First switch the project view from Android to Project, then locate the following path:

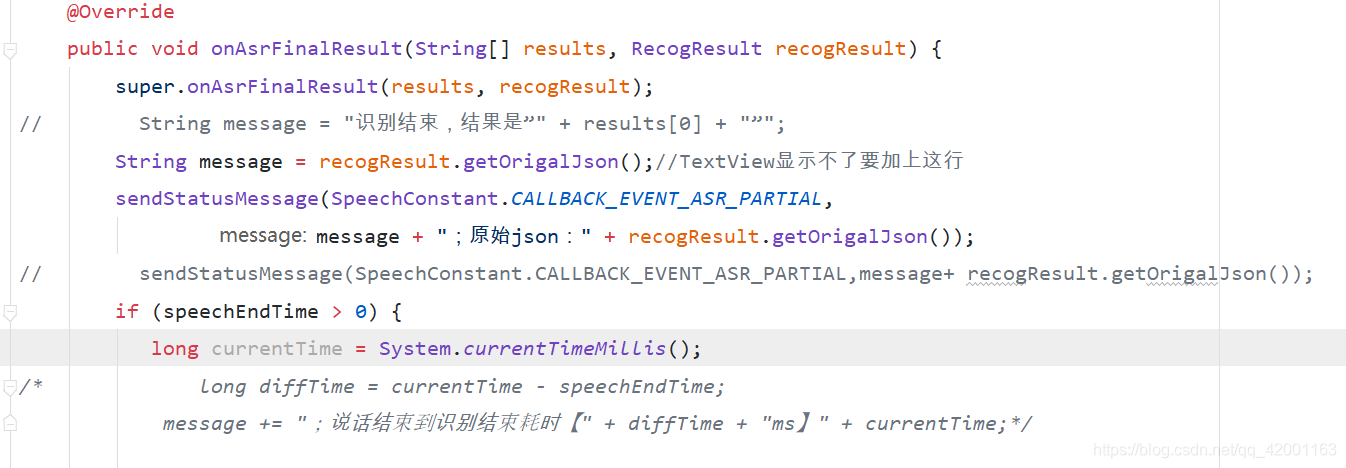

Changing two lines of code is enough, as shown in the figure below:

The result can be seen in the figure.