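I have written about text classification with word2vec word vectors before, but that implementation was based on Keras.

Today I will write the TensorFlow version of the code.

Let's appreciate its charm once more.

TensorFlow sits closer to the low level than Keras, which makes it easier to see how word vectors are applied to text classification.

This is a simplified example.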

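Links to my earlier text classification posts:

Text classification based on the word2vec word vector model (worked example with code, Keras version)

Short text classification: classifying power utility 95598 work orders with tf-idf

Worked example

Step 1: Import packages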

#!/usr/bin/env python3

# -*- coding: utf-8 -*-

# @Author: yudengwu

# @Date : 2020/9/6

import tensorflow as tf

import numpy as np

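Step 2: Data

For convenience I list only a handful of samples, and they are in English. For Chinese text, the sentences in the code should be the data after word segmentation and stop-word removal.

In this example some samples have length 3 and some have length 2, mirroring real-world texts of different lengths.

For Chinese text, the length is the number of words a sample contains (after segmentation and stop-word removal).

labels holds the class labels; this is a simple binary classification task.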

sentences = ["i love you","love you","he loves me", "she likes baseball", "i hate you","hate you","sorry for that", "this is awful"]

labels = [1,1,1,1,0,0,0,0] # 1 is good, 0 is not good.
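For Chinese text, as mentioned above, each sample has to be segmented and stripped of stop words before it can be used as an entry of sentences. The following is only a minimal sketch: the token lists and the stop-word set are hypothetical and hard-coded, whereas in practice they would come from a word-segmentation tool and a stop-word list of your choice.

```python
# Hypothetical pre-segmented Chinese samples (illustration only).
tokenized_samples = [
    ["我", "非常", "喜欢", "这", "部", "电影"],
    ["这", "个", "产品", "的", "质量", "很", "差"],
]
# Hypothetical stop-word set (illustration only).
stop_words = {"我", "这", "部", "个", "的", "很"}


def to_sentence(tokens, stop_words):
    """Drop stop words and join the remaining tokens with spaces."""
    return " ".join(t for t in tokens if t not in stop_words)


zh_sentences = [to_sentence(tokens, stop_words) for tokens in tokenized_samples]
print(zh_sentences)
# The length of a sample is its number of remaining words.
print([len(s.split()) for s in zh_sentences])
```

Because the result is a space-separated string per sample, the rest of the pipeline, which relies on split(), would work on it unchanged.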

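Step 3: Build the vocabulary

word_dict = {w: i+1 for i, w in enumerate(word_list)}

Dictionary indices normally start from 0 without adding 1, but here I add 1. Later I pad short texts with 0 so that all texts have the same length, and the padding 0 must be distinguishable from a real word index, hence the i+1 in the dictionary.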

word_list = " ".join(sentences).split()

word_list = list(set(word_list))  # unique words

word_dict = {w: i+1 for i, w in enumerate(word_list)}  # vocabulary: word -> index, starting at 1

print(word_dict)

vocab_size = len(word_dict)+1  # number of words + 1 for the padding index 0

print(vocab_size)

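word_dict:

{'she': 1, 'for': 2, 'baseball': 3, 'that': 4, 'me': 5, 'loves': 6, 'likes': 7, 'hate': 8, 'this': 9, 'you': 10, 'i': 11, 'awful': 12, 'is': 13, 'sorry': 14, 'love': 15, 'he': 16}

vocab_size:

17

vocab_size = number of words + 1. The dictionary holds 16 words, and the extra 1 accounts for the padding index 0, which also stands for unknown words and is used later. Because the vocabulary is built from a set, the index assigned to each word can differ from run to run, so the indices in later printouts may not match this one.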
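If you want the same word to get the same index on every run, one option is to sort the unique words before numbering them. This is only a sketch of that variation, not what the code in this post does; everything else (index 0 reserved for padding, vocab_size = word count + 1) follows the scheme above.

```python
sentences = ["i love you", "love you", "he loves me", "she likes baseball",
             "i hate you", "hate you", "sorry for that", "this is awful"]

# sorted() fixes the order, so the mapping is reproducible across runs.
word_list = sorted(set(" ".join(sentences).split()))
word_dict = {w: i + 1 for i, w in enumerate(word_list)}  # index 0 stays free for padding
vocab_size = len(word_dict) + 1                          # 16 words + 1 padding slot

print(word_dict["awful"], word_dict["you"], vocab_size)
```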

Step 4: Set the parameters

num_list = [len(one.split()) for one in sentences]  # [3, 2, 3, 3, 3, 2, 3, 3]

sequence_length = max(num_list)  # maximum text length: 3

These two lines compute the maximum text length.

# Text-CNN Parameter

num_list = [len(one.split()) for one in sentences]  # [3, 2, 3, 3, 3, 2, 3, 3]

sequence_length = max(num_list)  # maximum text length: 3

embedding_size = 2  # dimension of each word vector

num_classes = 2  # 0 or 1

filter_sizes = [2,2,2]  # n-gram window sizes

num_filters = 3  # number of filters per window size

Step 5: Prepare the inputs and outputs

inputs = []

for sen in sentences:

    c = [word_dict[n] for n in sen.split()]  # map each word to its index

    if len(c) <= sequence_length:

        c.extend([0 for i in range(sequence_length-len(c))])  # pad short texts with 0

    inputs.append(np.array(c))

outputs = []

for out in labels:

    outputs.append(np.eye(num_classes)[out])  # one-hot encoding, required by the softmax loss function

print('inputs',inputs)

print('outputs',outputs)

inputs [array([ 2, 15, 7]), array([15, 7, 0]), array([ 6, 14, 16]), array([13, 5, 8]), array([2, 3, 7]), array([3, 7, 0]), array([ 9, 10, 11]), array([ 4, 1, 12])]

outputs [array([0., 1.]), array([0., 1.]), array([0., 1.]), array([0., 1.]), array([1., 0.]), array([1., 0.]), array([1., 0.]), array([1., 0.])]
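The one-hot step relies on the fact that row k of an identity matrix is the one-hot vector for class k. As a small NumPy sketch of the same idea, indexing np.eye with the whole label list encodes all labels at once, and argmax undoes the encoding:

```python
import numpy as np

labels = [1, 1, 1, 1, 0, 0, 0, 0]
num_classes = 2

# Row k of np.eye(num_classes) is the one-hot vector for class k,
# so indexing with the label list encodes every label in one step.
one_hot = np.eye(num_classes)[labels]   # shape: (8, 2)

# argmax along the class axis recovers the integer labels.
recovered = one_hot.argmax(axis=1)
print(one_hot.shape, recovered.tolist())
```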

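With this processing we have represented the text as numeric data, which can be fed to any classification model for training.

This representation has a drawback: if one sample is long and all the others are short, the short texts are padded with many 0s, which leads to sparsity. We address this in the model.

This representation resembles a variant of the dictionary-based model.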

Step 6: Model

# Model (TensorFlow 1.x graph API)

X = tf.placeholder(tf.int32, [None, sequence_length])

Y = tf.placeholder(tf.int32, [None, num_classes])

W = tf.Variable(tf.random_uniform([vocab_size, embedding_size], -1.0, 1.0))  # one vector per word index

embedded_chars = tf.nn.embedding_lookup(W, X)  # [batch_size, sequence_length, embedding_size]

embedded_chars = tf.expand_dims(embedded_chars, -1)  # add channel(=1): [batch_size, sequence_length, embedding_size, 1]

pooled_outputs = []

for i, filter_size in enumerate(filter_sizes):

    filter_shape = [filter_size, embedding_size, 1, num_filters]

    W = tf.Variable(tf.truncated_normal(filter_shape, stddev=0.1))

    b = tf.Variable(tf.constant(0.1, shape=[num_filters]))

    conv = tf.nn.conv2d(embedded_chars,  # [batch_size, sequence_length, embedding_size, 1]

                        W,  # [filter_size(n-gram window), embedding_size, 1, num_filters(=3)]

                        strides=[1, 1, 1, 1],

                        padding='VALID')

    h = tf.nn.relu(tf.nn.bias_add(conv, b))

    pooled = tf.nn.max_pool(h,

                            ksize=[1, sequence_length - filter_size + 1, 1, 1],  # [batch_size, filter_height, filter_width, channel]

                            strides=[1, 1, 1, 1],

                            padding='VALID')

    pooled_outputs.append(pooled)  # dim of pooled: [batch_size, output_height(=1), output_width(=1), num_filters(=3)]

num_filters_total = num_filters * len(filter_sizes)

h_pool = tf.concat(pooled_outputs, 3)  # concatenate along the channel axis: [batch_size, 1, 1, num_filters_total]

h_pool_flat = tf.reshape(h_pool, [-1, num_filters_total])  # [batch_size, num_filters_total]

# Model-Training

Weight = tf.get_variable('W', shape=[num_filters_total, num_classes],

                         initializer=tf.contrib.layers.xavier_initializer())

Bias = tf.Variable(tf.constant(0.1, shape=[num_classes]))

model = tf.nn.xw_plus_b(h_pool_flat, Weight, Bias)

cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(logits=model, labels=Y))

optimizer = tf.train.AdamOptimizer(0.001).minimize(cost)

# Model-Predict

hypothesis = tf.nn.softmax(model)

predictions = tf.argmax(hypothesis, 1)

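Explanation: tf.random_uniform((4, 4), minval=low, maxval=high, dtype=tf.float32) returns a 4×4 matrix whose values are drawn uniformly between low and high.

tf.nn.embedding_lookup(params, ids) looks up the entries of params at the indices ids. In the code, W holds one vector for every word, and tf.nn.embedding_lookup(W, X) picks out only the vectors of the words that appear in the text. The embedding serves to reduce dimensionality: each word is represented by a short dense vector (here of length embedding_size) rather than a vocabulary-sized one-hot vector.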
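To make the lookup concrete, here is a rough NumPy analogue of what the lookup computes. It is a sketch for intuition, not TensorFlow code: the matrix is random, and the two encoded samples are hypothetical index sequences of the kind produced in Step 5.

```python
import numpy as np

vocab_size, embedding_size = 17, 2
rng = np.random.default_rng(0)
# Plays the role of W in the model: one row (word vector) per index, row 0 for padding.
W = rng.uniform(-1.0, 1.0, size=(vocab_size, embedding_size))

# Two hypothetical encoded samples; the second is padded with a trailing 0.
X = np.array([[2, 15, 7],
              [15, 7, 0]])

# Integer-array indexing returns the row of W for every index in X.
embedded = W[X]          # shape: (batch_size, sequence_length, embedding_size)
print(embedded.shape)
```

Note that the padding index 0 also has a row in W, so padded positions receive a vector like any other index.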

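At this point, the word vectors we obtained can be used in a classification model.

If the model part below is hard to follow, feel free to skip it; after all, the model can be swapped out.

tf.expand_dims(embedded_chars, -1) appends a dimension so that the input has the format a convolutional network expects.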
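The effect on the shape is easiest to see with NumPy's function of the same name, used here as a stand-in for the TensorFlow call (the batch of zeros is just a placeholder with this example's dimensions):

```python
import numpy as np

embedded = np.zeros((8, 3, 2))               # [batch_size, sequence_length, embedding_size]
with_channel = np.expand_dims(embedded, -1)  # append a channel axis of size 1
print(with_channel.shape)                    # (8, 3, 2, 1)
```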

The code below builds several convolution and pooling branches, whose results are then concatenated.

pooled_outputs = []

for i, filter_size in enumerate(filter_sizes):

    filter_shape = [filter_size, embedding_size, 1, num_filters]

    W = tf.Variable(tf.truncated_normal(filter_shape, stddev=0.1))

    b = tf.Variable(tf.constant(0.1, shape=[num_filters]))

    conv = tf.nn.conv2d(embedded_chars,  # [batch_size, sequence_length, embedding_size, 1]

                        W,  # [filter_size(n-gram window), embedding_size, 1, num_filters(=3)]

                        strides=[1, 1, 1, 1],

                        padding='VALID')

    h = tf.nn.relu(tf.nn.bias_add(conv, b))

    pooled = tf.nn.max_pool(h,

                            ksize=[1, sequence_length - filter_size + 1, 1, 1],  # [batch_size, filter_height, filter_width, channel]

                            strides=[1, 1, 1, 1],

                            padding='VALID')

    pooled_outputs.append(pooled)  # dim of pooled: [batch_size, output_height(=1), output_width(=1), num_filters(=3)]

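It has been a while since I last worked with TensorFlow convolutions, so some of these parameters are a little hard for me to read.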

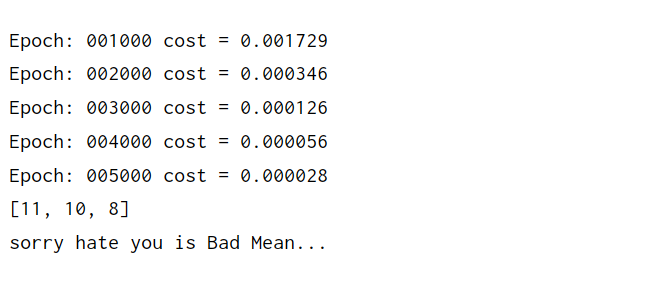
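To help read the shapes in the convolution and pooling calls, here is a NumPy sketch of one branch applied to a single sentence. It assumes the same sizes as this example (sequence_length=3, embedding_size=2, filter_size=2, num_filters=3); the input and filter values are random placeholders, not trained weights.

```python
import numpy as np

sequence_length, embedding_size = 3, 2
filter_size, num_filters = 2, 3

rng = np.random.default_rng(0)
sample = rng.normal(size=(sequence_length, embedding_size))            # one embedded sentence
filters = rng.normal(size=(filter_size, embedding_size, num_filters))  # placeholder weights
bias = np.full(num_filters, 0.1)

# 'VALID' convolution: slide a window of filter_size words over the sentence.
# Each filter spans the full embedding width, so the output width is 1.
steps = sequence_length - filter_size + 1
conv = np.empty((steps, num_filters))
for t in range(steps):
    window = sample[t:t + filter_size]                     # (filter_size, embedding_size)
    conv[t] = np.tensordot(window, filters, axes=([0, 1], [0, 1])) + bias

h = np.maximum(conv, 0.0)   # ReLU
pooled = h.max(axis=0)      # max over all window positions: one value per filter
print(conv.shape, pooled.shape)
```

The pooling window height sequence_length - filter_size + 1 is exactly the number of window positions, which is why each filter ends up contributing a single number per sentence.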

Step 7: Training

# Training

init = tf.global_variables_initializer()

sess = tf.Session()

sess.run(init)

for epoch in range(5000):

    _, loss = sess.run([optimizer, cost], feed_dict={X: inputs, Y: outputs})

    if (epoch + 1)%1000 == 0:

        print('Epoch:', '%06d' % (epoch + 1), 'cost =', '{:.6f}'.format(loss))
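The cost printed during training is the mean softmax cross-entropy between the model's logits and the one-hot labels. As a sketch of what that quantity is, here it is written out in NumPy; the function name and the example logits are made up for illustration.

```python
import numpy as np


def softmax_cross_entropy(logits, one_hot_labels):
    """Mean softmax cross-entropy over a batch, computed in a numerically stable way."""
    shifted = logits - logits.max(axis=1, keepdims=True)   # avoid overflow in exp
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-(one_hot_labels * log_probs).sum(axis=1).mean())


# Hypothetical logits for two samples, classes ordered as [bad, good].
logits = np.array([[0.0, 0.0],     # undecided prediction
                   [5.0, -5.0]])   # confident "bad"
targets = np.array([[0.0, 1.0],
                    [1.0, 0.0]])
loss = softmax_cross_entropy(logits, targets)
print(loss)
```

An undecided prediction costs log 2 per sample, while a confident correct prediction costs almost nothing, so the cost falls as the model becomes both correct and confident.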

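Step 8: Prediction

If a test text is longer than the longest text in the training set, it must be truncated.

Truncation is not demonstrated in this post's code.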

# Test

test_text = 'sorry hate you'

tests = []

c1 = [word_dict[n] for n in test_text.split()]  # map each word to its index

print(c1)

if len(c1) <= sequence_length:

    c1.extend([0 for i in range(sequence_length - len(c1))])  # pad short texts with 0

tests.append(np.array(c1))

predict = sess.run([predictions], feed_dict={X: tests})

result = predict[0][0]

if result == 0:

    print(test_text,"is Bad Mean...")

else:

    print(test_text,"is Good Mean!!")
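Truncation, and words that are missing from the vocabulary, could be handled with a small helper along these lines. This is a sketch under my own assumptions: the function name encode and the tiny demo vocabulary are hypothetical, and unknown words are mapped to index 0, in line with the role given to 0 in Step 3.

```python
def encode(text, word_dict, sequence_length, pad_id=0):
    """Map a sentence to exactly sequence_length indices.

    Unknown words map to pad_id, long inputs are truncated, short ones are padded.
    """
    ids = [word_dict.get(word, pad_id) for word in text.split()]
    ids = ids[:sequence_length]                       # truncate long texts
    ids += [pad_id] * (sequence_length - len(ids))    # pad short texts
    return ids


# Hypothetical mini-vocabulary, just for the demonstration.
demo_dict = {"sorry": 1, "hate": 2, "you": 3}
long_ids = encode("sorry i really hate you", demo_dict, 3)
short_ids = encode("hate you", demo_dict, 3)
print(long_ids, short_ids)
```

Keeping only the first sequence_length words is the simplest choice; keeping the last words instead would be an equally reasonable variation.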

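I based this code on an example from GitHub; the original is simpler than this version and does not handle texts of different lengths.

余登武, a computing newcomer with an electrical engineering background. If you have read this far, you presumably found this post useful, so please give it a like to show your support. Thanks.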