1. Downloading and using DataX

DataX, Alibaba's open-source data migration tool:

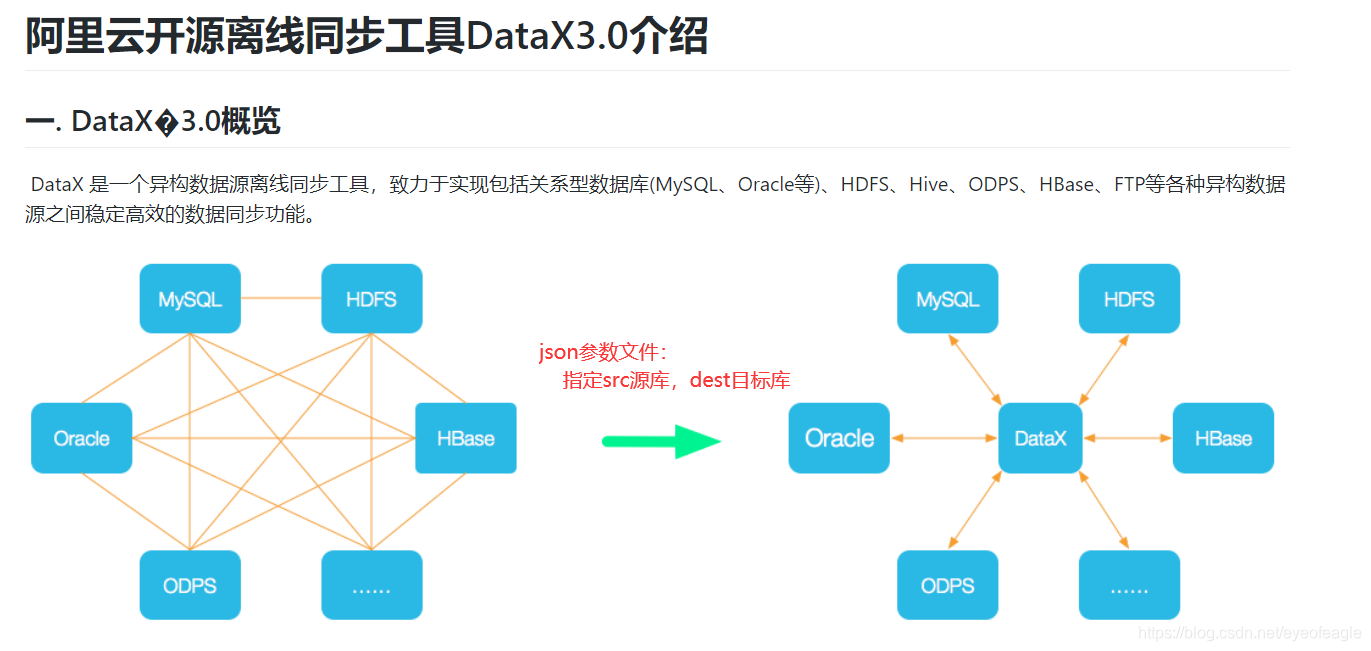

- DataX introduction and architecture design: https://github.com/alibaba/DataX/blob/master/introduction.md
- User guide: https://github.com/alibaba/DataX/blob/master/userGuid.md

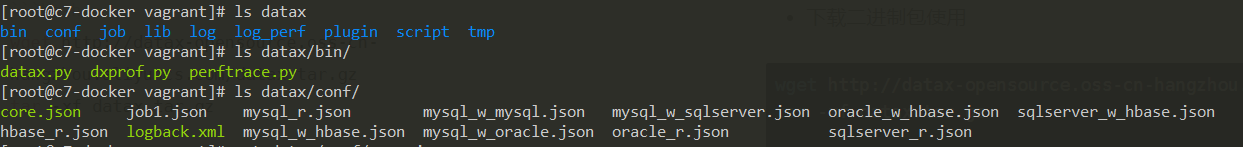

Using the binary package

Download the package and extract it into a directory on a Linux server: http://datax-opensource.oss-cn-hangzhou.aliyuncs.com/datax.tar.gz

- Directory layout

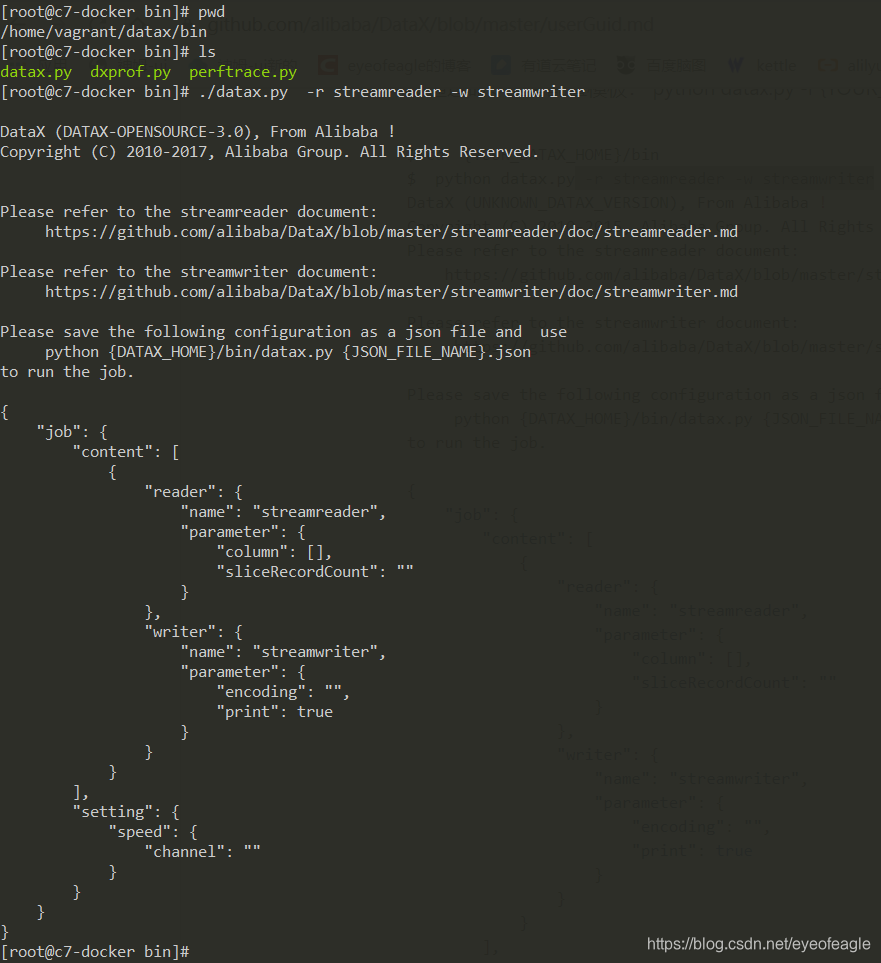
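The download-and-extract step can be scripted. The sketch below is a minimal example, assuming the archive unpacks to a single top-level `datax/` directory with the launcher at `bin/datax.py` (the test run in this post calls `./datax.py` from `bin`); the function name `extract_datax` is hypothetical.

```python
import tarfile
from pathlib import Path


def extract_datax(tar_path, dest_dir):
    """Extract datax.tar.gz into dest_dir and return the DataX home directory.

    Assumption: the archive unpacks to one top-level "datax/" directory
    containing the launcher at bin/datax.py.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(tar_path, "r:gz") as tar:
        # Use the safer "data" extraction filter where this Python supports it.
        if hasattr(tarfile, "data_filter"):
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)
    home = dest / "datax"
    launcher = home / "bin" / "datax.py"
    if not launcher.is_file():
        raise FileNotFoundError(f"DataX launcher not found: {launcher}")
    return home
```

For example, `extract_datax("datax.tar.gz", "/opt")` would return `/opt/datax` if the archive has the assumed layout, and raises `FileNotFoundError` otherwise, so a bad download fails early instead of at job time.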

- Test run: job parameters (JSON format)

Write the job parameters as JSON, then run a test job:

```
[root@c7-docker bin]# cat ../conf/job1.json
{
  "job": {
    "content": [
      {
        "reader": {
          "name": "streamreader",
          "parameter": {
            "sliceRecordCount": 10,
            "column": [
              {
                "type": "long",
                "value": "10"
              },
              {
                "type": "string",
                "value": "hello,你好,世界-DataX"
              }
            ]
          }
        },
        "writer": {
          "name": "streamwriter",
          "parameter": {
            "encoding": "UTF-8",
            "print": true
          }
        }
      }
    ],
    "setting": {
      "speed": {
        "channel": 5
      }
    }
  }
}
```
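If job files are generated rather than hand-written, the same structure (`job` → `content[]` with a `reader`/`writer` pair, plus `setting.speed.channel`) can be built as a dictionary. This is a minimal sketch that reproduces job1.json above; the helper names `stream_test_job` and `write_job` are hypothetical.

```python
import json
from pathlib import Path


def stream_test_job(slice_record_count=10, channels=5):
    """Build the streamreader -> streamwriter test job shown in job1.json above."""
    return {
        "job": {
            "content": [
                {
                    "reader": {
                        "name": "streamreader",
                        "parameter": {
                            # records generated per task (slice)
                            "sliceRecordCount": slice_record_count,
                            # every generated record carries these constant columns
                            "column": [
                                {"type": "long", "value": "10"},
                                {"type": "string", "value": "hello,你好,世界-DataX"},
                            ],
                        },
                    },
                    "writer": {
                        "name": "streamwriter",
                        # print=True echoes each record to stdout
                        "parameter": {"encoding": "UTF-8", "print": True},
                    },
                }
            ],
            "setting": {"speed": {"channel": channels}},
        }
    }


def write_job(job, path):
    """Write a job dict as UTF-8 JSON; ensure_ascii=False keeps Chinese text readable."""
    Path(path).write_text(json.dumps(job, ensure_ascii=False, indent=2), encoding="utf-8")
```

Calling `write_job(stream_test_job(), "../conf/job1.json")` should produce a file equivalent to the one above, which is then run with `./datax.py ../conf/job1.json`.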

```
[root@c7-docker bin]# ./datax.py ../conf/job1.json
DataX (DATAX-OPENSOURCE-3.0), From Alibaba !
Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.
2020-08-09 00:03:05.424 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl
2020-08-09 00:03:05.429 [main] INFO Engine - the machine info =>
osInfo: Oracle Corporation 1.8 25.211-b12
jvmInfo: Linux amd64 3.10.0-957.12.2.el7.x86_64
cpu num: 1
totalPhysicalMemory: -0.00G
freePhysicalMemory: -0.00G
maxFileDescriptorCount: -1
currentOpenFileDescriptorCount: -1
GC Names [Copy, MarkSweepCompact]
MEMORY_NAME | allocation_size | init_size
Eden Space | 273.06MB | 273.06MB
Code Cache | 240.00MB | 2.44MB
Survivor Space | 34.13MB | 34.13MB
Compressed Class Space | 1,024.00MB | 0.00MB
Metaspace | -0.00MB | 0.00MB
Tenured Gen | 682.69MB | 682.69MB
....
2020-08-09 00:03:05.469 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null
2020-08-09 00:03:05.471 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0
2020-08-09 00:03:05.471 [main] INFO JobContainer - DataX jobContainer starts job.
2020-08-09 00:03:05.472 [main] INFO JobContainer - Set jobId = 0
2020-08-09 00:03:05.504 [job-0] INFO JobContainer - jobContainer starts to do prepare ...
2020-08-09 00:03:05.518 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do prepare work .
2020-08-09 00:03:05.518 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do prepare work .
2020-08-09 00:03:05.518 [job-0] INFO JobContainer - jobContainer starts to do split ...
2020-08-09 00:03:05.519 [job-0] INFO JobContainer - Job set Channel-Number to 5 channels.
2020-08-09 00:03:05.519 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] splits to [5] tasks.
2020-08-09 00:03:05.520 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] splits to [5] tasks.
2020-08-09 00:03:05.541 [job-0] INFO JobContainer - jobContainer starts to do schedule ...
2020-08-09 00:03:05.560 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.
2020-08-09 00:03:05.561 [job-0] INFO JobContainer - Running by standalone Mode.
2020-08-09 00:03:05.585 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [5] channels for [5] tasks.
2020-08-09 00:03:05.588 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.
2020-08-09 00:03:05.588 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.
2020-08-09 00:03:05.611 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[3] attemptCount[1] is started
2020-08-09 00:03:05.623 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[2] attemptCount[1] is started
2020-08-09 00:03:05.630 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started
2020-08-09 00:03:05.660 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[1] attemptCount[1] is started
2020-08-09 00:03:05.672 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[4] attemptCount[1] is started
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
.......
2020-08-09 00:03:05.774 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[149]ms
2020-08-09 00:03:05.774 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[1] is successed, used[142]ms
2020-08-09 00:03:05.774 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[2] is successed, used[161]ms
2020-08-09 00:03:05.774 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[3] is successed, used[171]ms
2020-08-09 00:03:05.774 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[4] is successed, used[112]ms
2020-08-09 00:03:05.774 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.
2020-08-09 00:03:15.595 [job-0] INFO StandAloneJobContainerCommunicator - Total 50 records, 950 bytes | Speed 95B/s, 5 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%
2020-08-09 00:03:15.596 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.
2020-08-09 00:03:15.596 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do post work.
2020-08-09 00:03:15.596 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do post work.
2020-08-09 00:03:15.596 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.
2020-08-09 00:03:15.597 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /home/vagrant/datax/hook
2020-08-09 00:03:15.597 [job-0] INFO JobContainer -
[total cpu info] =>
averageCpu | maxDeltaCpu | minDeltaCpu
-1.00% | -1.00% | -1.00%
[total gc info] =>
NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime
Copy | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s
MarkSweepCompact | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s
2020-08-09 00:03:15.597 [job-0] INFO JobContainer - PerfTrace not enable!
2020-08-09 00:03:15.597 [job-0] INFO StandAloneJobContainerCommunicator - Total 50 records, 950 bytes | Speed 95B/s, 5 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%
2020-08-09 00:03:15.598 [job-0] INFO JobContainer -
任务启动时刻 : 2020-08-09 00:03:05
任务结束时刻 : 2020-08-09 00:03:15
任务总计耗时 : 10s
任务平均流量 : 95B/s
记录写入速度 : 5rec/s
读出记录总数 : 50
读写失败总数 : 0
[root@c7-docker bin]#
```

DataX prints the final summary in Chinese. It reports the job start time (2020-08-09 00:03:05), end time (2020-08-09 00:03:15), total elapsed time (10s), average throughput (95B/s), record write speed (5rec/s), total records read (50) and total read/write failures (0).
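The totals in this log can be checked against the job file. The reader was split into 5 tasks (one per channel) and 50 records were written, which is consistent with `sliceRecordCount = 10` records per task; the speeds are likewise consistent with the 10 s run (950 B / 10 s = 95 B/s, 50 records / 10 s = 5 records/s). The sketch below parses the `StandAloneJobContainerCommunicator` summary line and computes the expected count. The one-task-per-channel rule is an assumption drawn from this single run, and the function names are hypothetical.

```python
import re

# Matches the totals fragment of the summary line in the log above, e.g.
# "Total 50 records, 950 bytes | Speed 95B/s, 5 records/s | Error 0 records, 0 bytes".
# The byte speed is kept as text with whatever unit DataX printed (here "95B").
SUMMARY_RE = re.compile(
    r"Total (\d+) records, (\d+) bytes \| "
    r"Speed ([\d.]+\s*[A-Za-z]*)/s, (\d+) records/s \| "
    r"Error (\d+) records, (\d+) bytes"
)


def parse_summary(line):
    """Return the totals from a DataX summary log line, or None if it does not match."""
    m = SUMMARY_RE.search(line)
    if m is None:
        return None
    records, nbytes, speed, rps, err_records, err_bytes = m.groups()
    return {
        "records": int(records),
        "bytes": int(nbytes),
        "speed": speed + "/s",
        "records_per_s": int(rps),
        "error_records": int(err_records),
        "error_bytes": int(err_bytes),
    }


def expected_stream_records(job):
    """Expected streamreader output, ASSUMING one task per channel and
    sliceRecordCount records per task (as the 5 tasks / 50 records above suggest)."""
    reader = job["job"]["content"][0]["reader"]["parameter"]
    channels = job["job"]["setting"]["speed"]["channel"]
    return reader["sliceRecordCount"] * channels
```

A check like `parse_summary(line)["error_records"] == 0` is a simple way to flag failed rows when DataX runs are scripted.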

2. Extracting from relational databases into HBase

On the DataX home page, check 'Support Data Channels' --> HBase 1.1: Writer, and look up the HBase writer parameters there: https://github.com/alibaba/DataX

###### Check the HBase version on the target server

```
[root@hbase-server oracle-packs]$ hbase version
Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release
HBase 1.2.0-cdh5.12.0
Source code repository file:///data/jenkins/workspace/generic-package-rhel64-6-0/topdir/BUILD/hbase-1.2.0-cdh5.12.0 revision=Unknown
Compiled by jenkins on Thu Jun 29 04:42:07 PDT 2017
From source with checksum 6834049453a9459ccaf4cadbf9a54b2c
```
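The version check feeds the choice of writer plugin: this server reports HBase 1.2.0, and the jobs below use `hbase11xwriter`. The sketch parses the `hbase version` output and picks a plugin name. The version-to-plugin mapping (0.94.x to `hbase094xwriter`, 1.x to `hbase11xwriter`) is an assumption for illustration; confirm it against the DataX 'Support Data Channels' list before relying on it.

```python
import re


def hbase_version(output):
    """Extract (major, minor) from `hbase version` output, e.g. (1, 2) for 'HBase 1.2.0-cdh5.12.0'."""
    m = re.search(r"^HBase (\d+)\.(\d+)", output, re.MULTILINE)
    if m is None:
        raise ValueError("no 'HBase x.y' line in the output")
    return int(m.group(1)), int(m.group(2))


def pick_hbase_writer(version):
    """Choose a DataX HBase writer plugin for an HBase version.

    ASSUMED mapping (check the DataX plugin list):
    HBase 0.94.x -> hbase094xwriter, HBase 1.x -> hbase11xwriter.
    """
    major, minor = version
    if major == 1:
        return "hbase11xwriter"
    if (major, minor) == (0, 94):
        return "hbase094xwriter"
    raise ValueError(f"no writer mapped for HBase {major}.{minor} in this sketch")
```

The anchored regex skips the JVM warning and the `Source code repository ... hbase-1.2.0` line, matching only the line that starts with `HBase`.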

###### Run DataX on the client machine to extract data from relational databases (SQL Server, MySQL, Oracle) into HBase

###### SQL Server -> HBase: SQL Server reader (sqlserverreader) with the HBase writer (hbase11xwriter)

```
[root@c7-docker bin]# cat ../conf/sqlserver_w_hbase.json
{
  "job": {
    "setting": {
      "speed": {
        "channel": 5
      }
    },
    "content": [
      {
        "reader": {
          "name": "sqlserverreader",
          "parameter": {
            "username": "sa",
            "password": "XXXabc123$",
            "where": "",
            "connection": [
              {
                "querySql": [
                  "SELECT t1.id, t1.name, t2.job FROM master.dbo.t1 left join t2 on t1.id=t2.id"
                ],
                "jdbcUrl": [
                  "jdbc:sqlserver://192.168.56.101:1433"
                ]
              }
            ]
          }
        },
        "writer": {
          "name": "hbase11xwriter",
          "parameter": {
            "hbaseConfig": {
              "hbase.zookeeper.quorum": "192.168.56.101:2181"
            },
            "table": "t",
            "mode": "normal",
            "rowkeyColumn": [
              {
                "index": 0,
                "type": "string"
              }
            ],
            "column": [
              {
                "index": 1,
                "name": "f:name",
                "type": "string"
              },
              {
                "index": 2,
                "name": "f:job",
                "type": "string"
              }
            ],
            "encoding": "utf-8"
          }
        }
      }
    ]
  }
}
```

Keep the querySql value on a single line: a JSON string may not contain a raw line break.
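In the writer block, each `index` refers to a column position in the reader's query result: position 0 (`t1.id`) becomes the rowkey, and positions 1 and 2 (`name`, `job`) are written to `f:name` and `f:job`. The sketch below is a simplified illustration of that mapping, not DataX's source code. Skipping NULL cells is an assumption meant to mirror the writer's default null handling; check the `nullMode` option in the hbase11xwriter docs. The function name is hypothetical.

```python
def to_hbase_put(record, rowkey_columns, columns):
    """Simplified illustration (not DataX source code) of how hbase11xwriter's
    rowkeyColumn/column settings map one querySql row to an HBase put.

    record: one result row, e.g. (id, name, job) from the SQL Server query.
    Returns (rowkey, {"family:qualifier": value}).
    """
    # Concatenate the referenced result columns, in order, to form the rowkey.
    rowkey = "".join(str(record[c["index"]]) for c in rowkey_columns)
    cells = {}
    for c in columns:
        value = record[c["index"]]
        if value is None:
            # A LEFT JOIN miss yields NULL; this sketch skips the cell
            # (ASSUMED to match the writer's default null handling).
            continue
        cells[c["name"]] = str(value)  # "type": "string" in the job
    return rowkey, cells


# The rowkeyColumn and column settings from sqlserver_w_hbase.json above.
ROWKEY = [{"index": 0, "type": "string"}]
COLUMNS = [
    {"index": 1, "name": "f:name", "type": "string"},
    {"index": 2, "name": "f:job", "type": "string"},
]
```

For a row `(1, "tom", "dev")` this yields rowkey `"1"` with cells `f:name` and `f:job`; a row whose `t2.job` is NULL because of the left join yields only `f:name`.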

###### MySQL -> HBase: MySQL reader (mysqlreader) with the HBase writer (only the reader section is shown)

```
[root@c7-docker bin]# cat ../conf/mysql_w_hbase.json
...
"reader": {
  "name": "mysqlreader",
  "parameter": {
    "username": "root",
    "password": "123456",
    "connection": [
      {
        "querySql": [
          "select id,name from test.t1 where id > 2"
        ],
        "jdbcUrl": [
          "jdbc:mysql://192.168.56.161:3306"
        ]
      }
    ]
  }
},
...
```
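Since only the reader differs between source databases, one way to avoid copy-paste is to swap the reader into an existing job. The helpers below are hypothetical. One caveat follows from the index mapping: this MySQL query selects only `id` and `name` (positions 0 and 1), so a writer copied unchanged from the SQL Server job would still reference position 2 (`f:job`), and that column entry would need to be removed. `writer_indexes` makes that check explicit.

```python
import copy


def with_reader(job, reader):
    """Hypothetical helper: return a deep copy of `job` with the reader of its
    first content entry replaced, leaving the writer and settings untouched."""
    new_job = copy.deepcopy(job)
    new_job["job"]["content"][0]["reader"] = copy.deepcopy(reader)
    return new_job


def writer_indexes(job):
    """Result-column positions referenced by the writer's rowkeyColumn and column lists."""
    p = job["job"]["content"][0]["writer"]["parameter"]
    return sorted(c["index"] for c in p["rowkeyColumn"] + p["column"])


# The reader section from mysql_w_hbase.json above.
MYSQL_READER = {
    "name": "mysqlreader",
    "parameter": {
        "username": "root",
        "password": "123456",
        "connection": [
            {
                "querySql": ["select id,name from test.t1 where id > 2"],
                "jdbcUrl": ["jdbc:mysql://192.168.56.161:3306"],
            }
        ],
    },
}
```

Deep copies keep the template job unchanged, so the same writer/setting block can be reused for the SQL Server, MySQL and Oracle readers.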

###### Oracle -> HBase: Oracle reader (oraclereader) with the HBase writer (only the reader section is shown)

```
[root@c7-docker bin]# cat ../conf/oracle_w_hbase.json
....
"reader": {
  "name": "oraclereader",
  "parameter": {
    "username": "system",
    "password": "orcl",
    "connection": [
      {
        "querySql": [
          " select id,name,\"data\" from bi.t1 where id < 1000"
        ],
        "jdbcUrl": [
          "jdbc:oracle:thin:@192.168.56.101:1521:orcl"
        ]
      }
    ]
  }
},
....
```
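The Oracle query wraps the column name in double quotes (`"data"`), which must be written as `\"data\"` inside a JSON string. Generating the `querySql` entry with a JSON serializer handles this escaping automatically; the sketch below uses Python's standard `json` module, and the helper name `query_sql_json` is hypothetical.

```python
import json


def query_sql_json(sql):
    """Serialize a querySql list as it appears in a DataX job file;
    json.dumps escapes the quotes around an identifier such as "data"."""
    return json.dumps({"querySql": [sql]})


sql = 'select id,name,"data" from bi.t1 where id < 1000'
print(query_sql_json(sql))
# {"querySql": ["select id,name,\"data\" from bi.t1 where id < 1000"]}
```

Parsing the output back with `json.loads` returns the original SQL, quotes included, which is the text the reader sends to Oracle.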