AdaBoost MATLAB Implementation

Algorithm overview: AdaBoost (Adaptive Boosting) was proposed by Yoav Freund and Robert Schapire in 1995. Their later paper "A Short Introduction to Boosting" (1999) gives an accessible overview of the method.

Reference book: Machine Learning in Action (机器学习实战)

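The program generates a random data set with labels, trains weak classifiers with AdaBoost, and combines them into a single strong classifier. Drawing each threshold split line makes the way AdaBoost partitions the plane directly visible.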
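For orientation, one round of AdaBoost with threshold classifiers can be summarized as below. This is a sketch of the textbook formulation rather than a transcription of the paper. The symbols are chosen here for illustration: $D_t$ corresponds to `D` in the code, $\epsilon_t$ to `minErr`, $\alpha_t$ to `alpha`, and $h_t(x_i)$ to the weak classifier's prediction `bestLabel`.

```latex
\begin{aligned}
D_1(i) &= \frac{1}{m}, \qquad i = 1,\dots,m \\
\epsilon_t &= \sum_{i=1}^{m} D_t(i)\,\mathbb{1}\!\left[h_t(x_i) \neq y_i\right] \\
\alpha_t &= \frac{1}{2}\ln\frac{1-\epsilon_t}{\epsilon_t} \\
D_{t+1}(i) &= \frac{D_t(i)\,\exp\!\left(-\alpha_t\, y_i\, h_t(x_i)\right)}{Z_t},
\qquad Z_t = \sum_{i=1}^{m} D_t(i)\,\exp\!\left(-\alpha_t\, y_i\, h_t(x_i)\right) \\
H(x) &= \operatorname{sign}\!\left(\sum_{t=1}^{T} \alpha_t\, h_t(x)\right)
\end{aligned}
```

Samples that the current weak classifier gets wrong have $y_i h_t(x_i) = -1$, so their weight grows by a factor $e^{\alpha_t}$ before normalization, which pushes the next round to focus on them.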

Code implementation

Here is my MATLAB implementation of AdaBoost.

% Writen by: Weichen GU

% Date : 2019/03/09

function Adaboost

% Initialization

clc;

clear;

cla;

% Global range definition

global lowT;

global highT;

lowT = 0; highT = 3000;

% Generate data and labels

% [1] random labels

[data, label] = createData(50,2,lowT,highT);

% [2] Rectangle shape region labels

%label = (data(:,1)-data(:,2) > 0)*2 - 1;

% Generate data

%[data, ~] = createData(500,2,lowT,highT);

% [3] Circle shape region labels

%label = (((data(:,1)-1500).^2+(data(:,2)-1500).^2 -1000^2) > 0)*2 - 1;

% [4] Hyperbolic shape region labels

%label = (((data(:,1)-1500).^2-(data(:,2)-1500).^2 -300^2) > 0)*2 - 1;

% Draw the original figure of data with different labels

figure(1);

clf;

format rat

hold on

plot( data(label==1,1),data(label==1,2),'b+','LineWidth',3,'MarkerSize',8);

plot(data(label==-1,1),data(label==-1,2),'ro','LineWidth',3,'MarkerSize',8);

xlabel('x');ylabel('y');axis([lowT highT lowT highT]);

title('Orignal data');

hold off

% Draw the predicted data and labels

figure(2);

clf;

hold on

% Using Adaboost algorithm to train the data

[~,~,strongClassifierLabel]=AdaboostTrain(data,label,10000);

% Plot the predicted value and labels

plot( data(strongClassifierLabel==1,1),data(strongClassifierLabel==1,2),'b+','LineWidth',3,'MarkerSize',8);

plot(data(strongClassifierLabel==-1,1),data(strongClassifierLabel==-1,2),'ro','LineWidth',3,'MarkerSize',8);

xlabel('x');ylabel('y');axis([lowT highT lowT highT]);

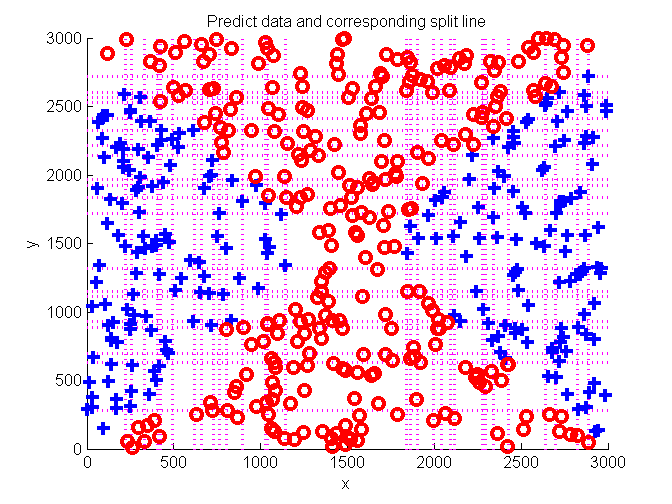

title('Predict data and corresponding split line');

hold off

end

```matlab
%--- This function creates the sample data ---%
function [data,label] = createData(m,n,lowT,highT)
    % Default parameters
    if ~nargin
        lowT = 1; highT = 100; m = 2; n = 10;
    end
    data = []; label = [];
    % Each row holds n distinct integers from [lowT, highT], so n must not
    % exceed the number of available values (the loop below would never end)
    if n > highT-lowT+1
        return;
    end
    for i = 1:1:m
        s = [];
        % Draw random integers until the row has n distinct values
        while numel(s) < n
            temp = randi([lowT, highT],1);
            if ~any(s==temp)
                s = [s temp];
            end
        end
        data = [data;s];
    end
    % Create random labels in {0,1}, then map them to {-1,1}
    label = randi([0, 1],m,1);
    label = label*2-1;
end
```
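The loop in `createData` keeps drawing until a row contains `n` distinct integers. As a possible alternative, MATLAB's built-in `randperm(k, n)` returns `n` distinct integers from `1..k` in a single call, so shifting its output by `lowT - 1` yields the same kind of row. This is only a sketch; the values of `lowT`, `highT`, and `n` below are illustrative.

```matlab
% Illustrative values, matching the range used in the main function
lowT = 0; highT = 3000; n = 2;

% randperm(k, n) picks n distinct integers from 1..k; shift them into [lowT, highT]
s = lowT - 1 + randperm(highT - lowT + 1, n);

% The row has n distinct values inside the requested range
disp(numel(unique(s)) == n && all(s >= lowT & s <= highT));
```

Unlike the rejection loop, this cannot spin indefinitely, because `randperm` raises an error when `n` exceeds the number of available values.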

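```matlab
%--- weakClassifier function ---%
function [retLabel] = weakClassifier(data,dimension,threshVal,threshMethod)
    % Weak classifier (single-threshold decision stump)
    % threshMethod: 0 - lowThresh  (values above threshVal are labeled +1)
    %               1 - highThresh (values above threshVal are labeled -1)
    if nargin < 4
        threshMethod = 0;
    end
    % Start with every sample labeled -1; size(data,1) is the sample count
    retLabel = -ones(size(data,1),1);
    data_temp = data(:,dimension);
    retLabel(data_temp>threshVal) = 1;
    % highThresh: flip the labels
    if(threshMethod==1)
        retLabel = -retLabel;
    end
end
```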
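To see what such a threshold classifier returns, here is a minimal self-contained sketch. Because `weakClassifier` is a local function and cannot be called from the command line, the example uses a hypothetical anonymous function `stump` with the same labeling rule. The data matrix and threshold are made up for illustration.

```matlab
% Hypothetical one-line stump: flip = 0 labels values above thresh as +1,
% flip = 1 reverses the labels
stump = @(X, dim, thresh, flip) (1 - 2*flip) * (2*(X(:, dim) > thresh) - 1);

X  = [1 5; 2 6; 3 7; 4 8];     % four samples, two features
p0 = stump(X, 1, 2.5, 0);      % [-1; -1;  1;  1]
p1 = stump(X, 1, 2.5, 1);      % [ 1;  1; -1; -1]
disp([p0 p1]);
```

Trying both orientations for each threshold matters because a stump that is wrong on most of the weight becomes a useful one once its labels are flipped.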

```matlab
%--- Select the stump with the lowest weighted error ---%
function [bestWeakClassifier, minErr, bestLabel]=selectBestStump(data, label,D)
    [m, n] = size(data);
    step = 2*m;                 % Number of threshold steps per dimension
    bestWeakClassifier = [];    % Best weak classifier in one loop
    bestLabel = zeros(m,1);     % Best predicted label
    minErr = inf;               % Initialize minimum error
    success = 0;                % Set once a stump is found, so its split line can be drawn
    for i=1:1:n
        minValue = min(data(:,i));              % Get the minimum value
        maxValue = max(data(:,i));              % and the maximum value
        stepValue = (maxValue-minValue)/step;   % Split the range into steps
        % Traverse the thresholds, going one step beyond both ends
        for j = -1:step+1
            threshVal = minValue+stepValue*j;
            % Low threshold (k = 0) and high threshold (k = 1)
            for k = 0:1:1
                % Get the predicted labels for this threshold
                predictLabel = weakClassifier(data,i,threshVal,k);
                % Mark misclassified samples with 1 and correct ones with 0
                errData = ones(m,1);
                errData(label==predictLabel)=0;
                % Weighted error: sum of the weights D of misclassified samples
                weightedErr = D'*errData;
                % Keep the stump with the minimum weighted error
                if (weightedErr<minErr)
                    success = 1;
                    dimension = i;
                    thresh_temp = threshVal;
                    minErr = weightedErr;
                    bestLabel = predictLabel;
                    bestWeakClassifier={'dimension' i;'thresh' threshVal};
                end
            end
        end
    end
    % Draw the split line (threshold) chosen in this loop
    if (success==1)
        drawline(dimension,thresh_temp,step);
    end
end
```
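The quantity minimized in `selectBestStump` is the weighted error `D'*errData`, not the plain misclassification rate. A small sketch with made-up numbers shows the difference; all values here are hypothetical.

```matlab
% Toy example: four samples, only the third one is misclassified
label        = [ 1;  1; -1; -1];
predictLabel = [ 1;  1;  1; -1];
D            = [0.1; 0.2; 0.3; 0.4];          % sample weights, summing to 1

errData     = double(predictLabel ~= label);  % 1 where the prediction is wrong
weightedErr = D' * errData;                   % 0.3, the weight of sample 3
unweighted  = mean(errData);                  % 0.25, ignores the weights
fprintf('weighted = %g, unweighted = %g\n', weightedErr, unweighted);
```

With uniform weights the two numbers coincide. Once AdaBoost has raised the weights of hard samples, a stump that misses even one heavily weighted point can score worse than one that misses several light ones.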

```matlab
%--- This function draws the split line found in every loop ---%
function [ok] = drawline(i,threshVal,step)
    global lowT; global highT;
    % i = 1: threshold on the horizontal axis, drawn as a vertical line
    if(i==1)
        cx = threshVal*ones(1,step+1);
        cy = lowT:(highT-lowT)/step:highT;
        plot(cx,cy,'m:','LineWidth',2)
    end
    % i = 2: threshold on the vertical axis, drawn as a horizontal line
    if(i==2)
        cy = threshVal*ones(1,step+1);
        cx = lowT:(highT-lowT)/step:highT;
        plot(cx,cy,'m:','LineWidth',2)
    end
    % Return value
    ok = 1;
end
```
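The training routine `AdaboostTrain` normalizes the weights by `Z_t = 2*sqrt(minErr*(1-minErr))`, and its comment notes that dividing by `sum(D)` works as well. A short derivation, sketched here under the assumption that the weights sum to 1 before the update, shows why the two agree. The notation ($\epsilon_t$ for `minErr`, $\alpha_t$ for `alpha`) is introduced for this note only.

```latex
\begin{aligned}
Z_t &= \sum_{i=1}^{m} D_t(i)\, e^{-\alpha_t y_i h_t(x_i)}
     = \underbrace{(1-\epsilon_t)}_{\text{correct}}\, e^{-\alpha_t}
     + \underbrace{\epsilon_t}_{\text{wrong}}\, e^{\alpha_t} \\
e^{\alpha_t} &= \sqrt{\frac{1-\epsilon_t}{\epsilon_t}}
\quad\text{since}\quad \alpha_t = \tfrac{1}{2}\ln\frac{1-\epsilon_t}{\epsilon_t} \\
Z_t &= (1-\epsilon_t)\sqrt{\frac{\epsilon_t}{1-\epsilon_t}}
     + \epsilon_t\sqrt{\frac{1-\epsilon_t}{\epsilon_t}}
     = 2\sqrt{\epsilon_t\,(1-\epsilon_t)}
\end{aligned}
```

The closed form therefore equals the sum of the updated weights, so both normalizations give the same `D` up to floating-point rounding.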

```matlab
%--- Simple realization of the Adaboost algorithm ---%
function [weakClassifiers,err,strongClassifier] = AdaboostTrain(data,label,numIteration)
    [m, ~] = size(data);
    weakClassifiers = {};
    D = ones(m,1)/m;                        % Start with uniform sample weights
    combinedWeakClassifier = zeros(m,1);
    for i = 1:1:numIteration
        [bestWeakClassifier, minErr, bestLabel]=selectBestStump(data, label,D);
        % Avoid dividing by zero when a stump has zero weighted error
        minErr = max(minErr, eps);
        alpha = 0.5*log((1-minErr)/minErr);
        bestWeakClassifier(3,:) = {'alpha' alpha};
        % Store weak classifier parameters
        weakClassifiers=[weakClassifiers;bestWeakClassifier];
        % Update D: misclassified samples gain weight, correct ones lose it
        D = D.*exp(-alpha*label.*bestLabel);
        Z_t = 2*sqrt(minErr*(1-minErr));
        % Normalize D, we can also divide D by sum(D)
        D = D/Z_t;
        % Combine weak classifiers
        combinedWeakClassifier = combinedWeakClassifier+alpha*bestLabel;
        % Generate strong classifier
        strongClassifier = sign(combinedWeakClassifier);
        % Error rate: fraction of misclassified training samples
        err = mean(strongClassifier ~= label);
        % Display error rate
        fprintf('Iteration %d: error rate = %g\n', i, err);
        % If error = 0, then return
        if(err==0)
            break;
        end
    end
end
```

Results

-

随机生成 5 个带标签的点集,并使用Adaboost得到含有阈值分割线的结果图。

-

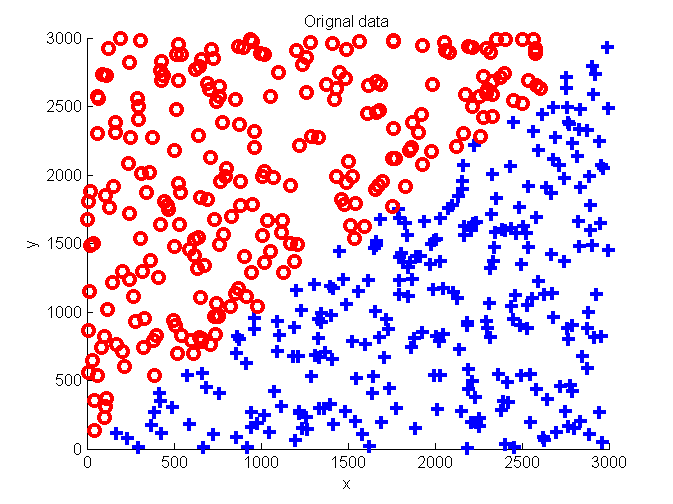

随机生成 100 个带标签的点集,并使用Adaboost得到含有阈值分割线的结果图。

-

生成 500 个带三角形区域标签的点集,并使用Adaboost得到含有阈值分割线的结果图。

-

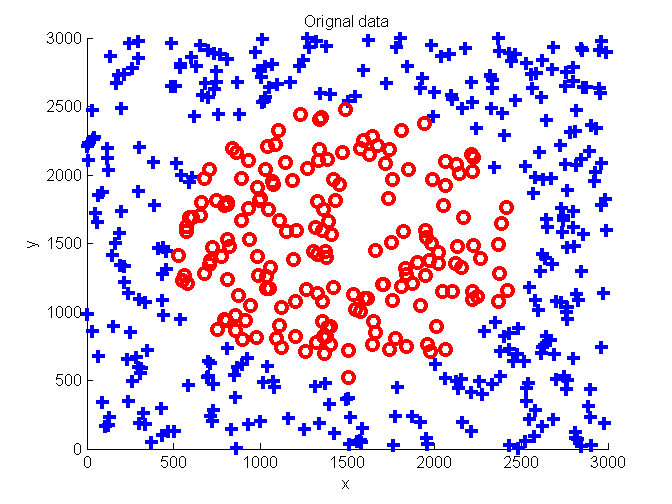

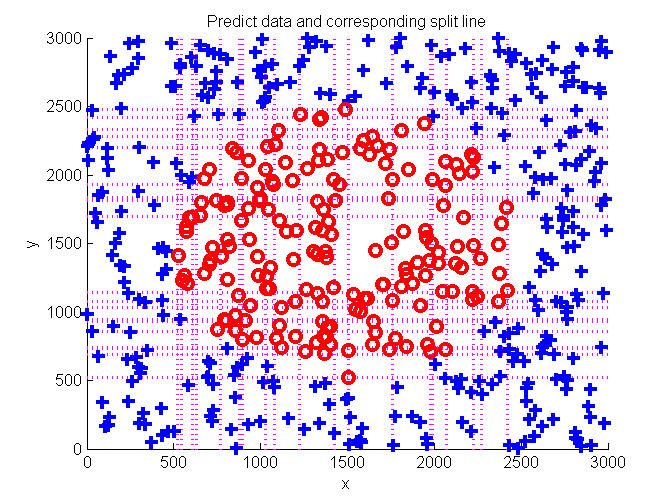

生成 500 个带圆形区域标签的点集,并使用Adaboost得到含有阈值分割线的结果图。

-

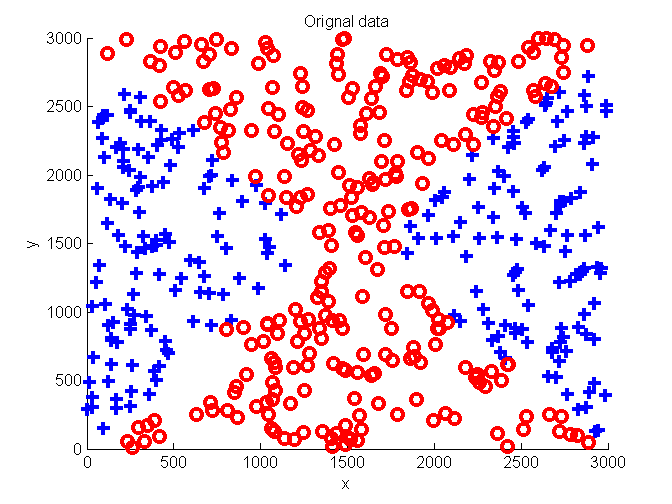

生成 500 个带双曲线区域标签的点集,并使用Adaboost得到含有阈值分割线的结果图。

Animated demo

The animated AdaBoost demo program can be downloaded from the following link:

https://download.csdn.net/download/weixin_43290523/11560664