简介

本身我对文本方面的比如自然语言处理什么的钻研的不多,这里是我之前写的邮件分类,用的方法其实是很简单的算法,同时这种处理方式可以说是最常用的文本处理技巧。

下下来一个是为了自己记录一下,当然如果您刚刚入门机器学习或者NLP,能给您一些帮助也最好不过了。

数据集

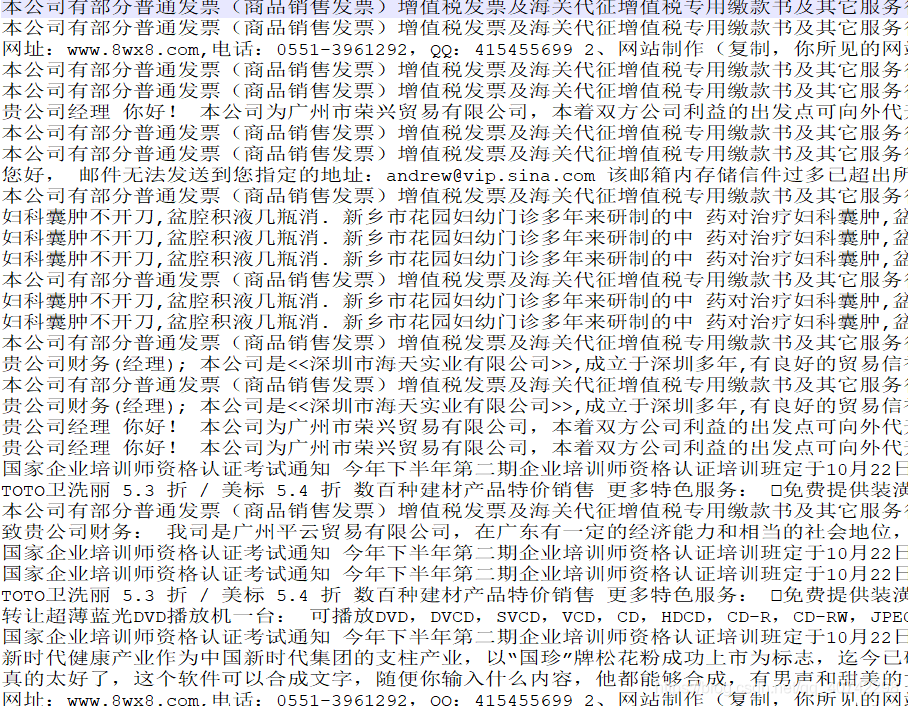

- 垃圾邮件

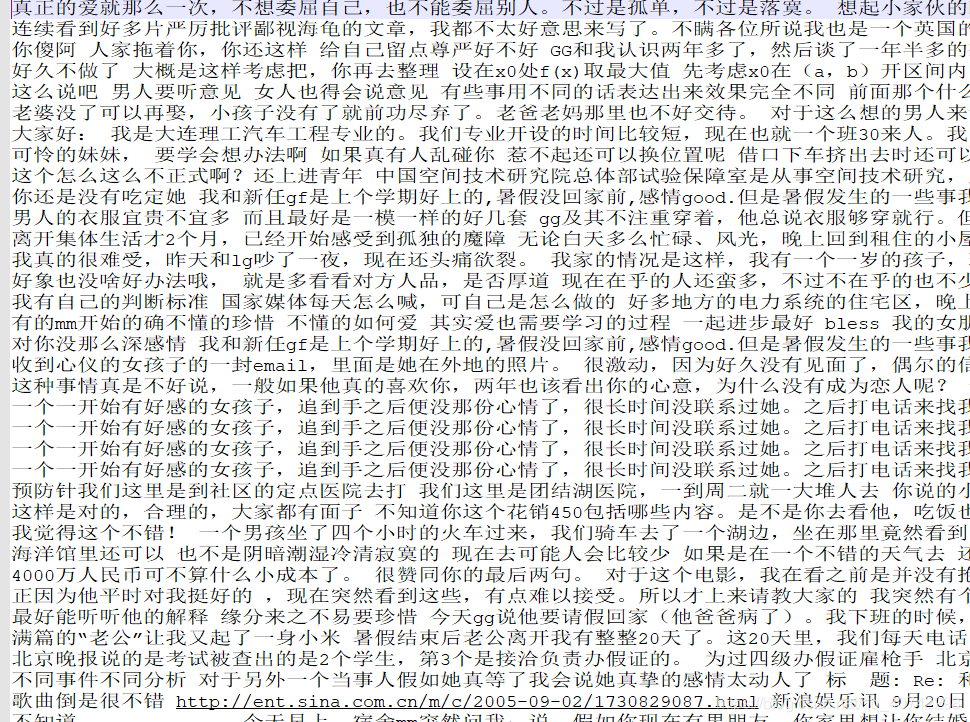

- 普通邮件

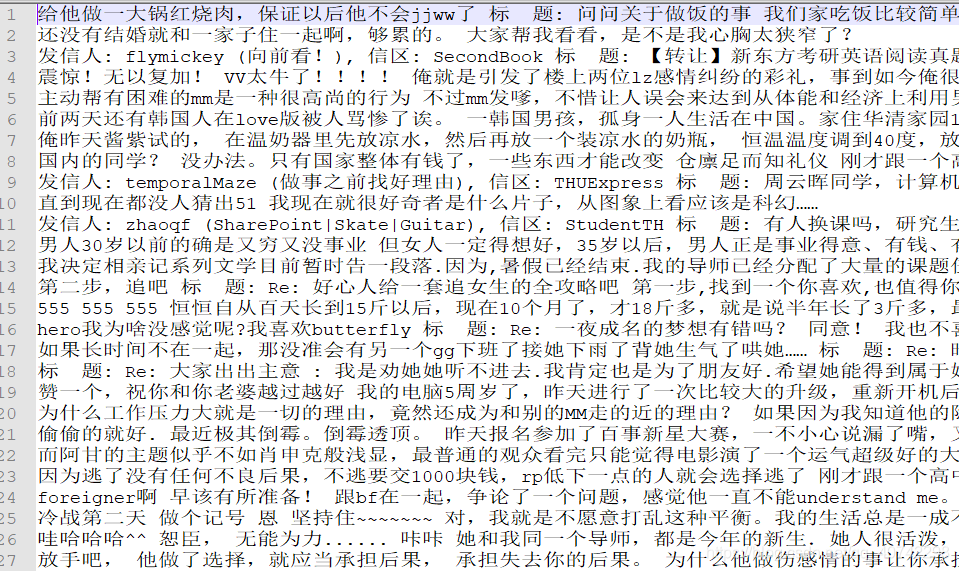

当然还有测试集:

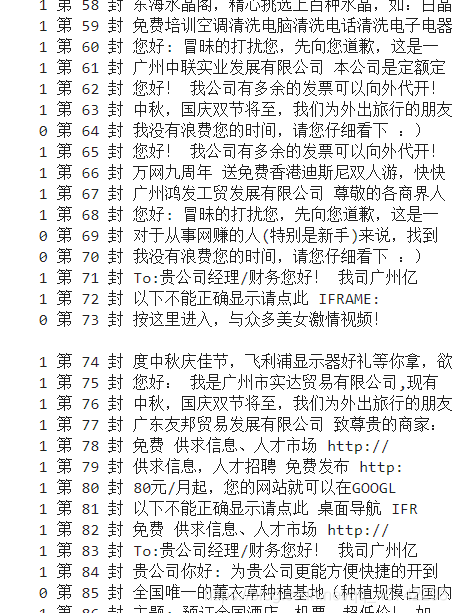

这里我使用的数据集中的部分截图。

代码

导入库函数

import jieba

import numpy as np

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.naive_bayes import MultinomialNB

读取数据

停用词文档。

stopWordFilePath = 'stopword.txt'

stopList = [word.strip() for word in open(stopWordFilePath, encoding='utf-8').readlines()]

stopList[:4]

ham = []

spam = []

with open('ham_100.utf8', 'r', encoding='utf-8') as f:

for line in f.readlines():

ham.append(line)

with open('spam_100.utf8', 'r', encoding='utf-8') as f:

for line in f.readlines():

spam.append(line)

ham[:2],spam[:2]

## 读取测试集

test_data = []

with open('test.utf8', 'r', encoding='utf-8') as f:

for line in f.readlines():

test_data.append(line)

cut_test_data = [jieba.cut(item) for item in test_data]

test_data_fin = [' '.join(word) for word in cut_test_data]

分词并去掉停用词

cut_ham = [jieba.cut(sentence=str0) for str0 in ham]

train_ham = [' '.join(item) for item in cut_ham]

train_ham[:3]

cut_spam = [jieba.cut(str0) for str0 in spam]

train_spam = [' '.join(item) for item in cut_spam]

train_spam[:4]

这里使用词袋处理停用词。

count = CountVectorizer(stop_words = stopList)

train_X_tmp = train_spam + train_ham

count.fit(train_spam + train_ham + test_data_fin)

train_X = count.transform(train_X_tmp).toarray()

train_X.shape

添加标记 1 : 是垃圾邮件 0 : 不是垃圾邮件

train_y_spam = [1 for i in range(len(train_spam))]

train_y_ham = [0 for i in range(len(train_ham))]

合并数据集

train_y_spam = [1 for i in range(len(train_spam))]

train_y_ham = [0 for i in range(len(train_ham))]

模型构建

mnb = MultinomialNB()

mnb.fit(train_X, train_y)

y_pred = mnb.predict(test_x)

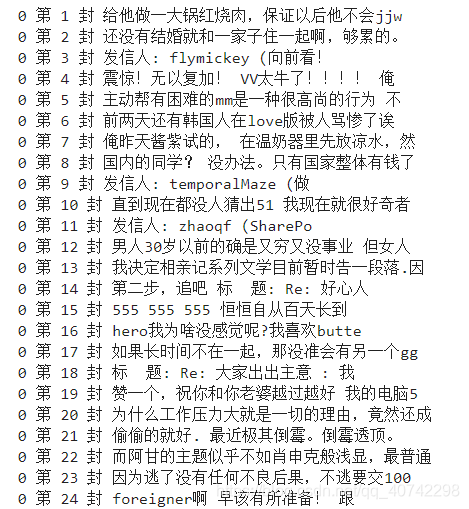

y_pred

for lab, (index, i) in zip(y_pred, enumerate(test_data)):

print(lab, "第", index+1, "封", i[:20])

算是做个笔记,大家共勉~~