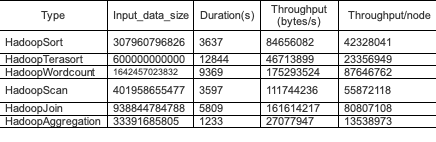

Hadoop 2.7.4集群基准测试

DN:32C 128G * 8,1块HDD 4T

hibench.scale.profile bigdata

hibench.default.map.parallelism 64

hibench.default.shuffle.parallelism 64

遇到的问题:

问题1:

Container launch failed for container_1591910537318_0012_01_002523 : java.io.IOException: Failed on local exception: java.io.IOException: java.net.SocketTimeoutException: 60000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/10.0.0.222:52069 remote=CnBRWfGV-Core5.jcloud.local/10.0.0.220:45454]; Host Details : local host is: "CnBRWfGV-Core8.jcloud.local/10.0.0.222"; destination host is: "CnBRWfGV-Core5.jcloud.local":45454; at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:776) at org.apache.hadoop.ipc.Client.call(Client.java:1480) at org.apache.hadoop.ipc.Client.call(Client.java:1413) at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229) at com.sun.proxy.$Proxy81.startContainers(Unknown Source) at org.apache.hadoop.yarn.api.impl.pb.client.ContainerManagementProtocolPBClientImpl.startContainers(ContainerManagementProtocolPBClientImpl.java:96) at sun.reflect.GeneratedMethodAccessor13.invoke(Unknown Source) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:191) at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102) at com.sun.proxy.$Proxy82.startContainers(Unknown Source) at org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl$Container.launch(ContainerLauncherImpl.java:152) at org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl$EventProcessor.run(ContainerLauncherImpl.java:375) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) at java.lang.Thread.run(Thread.java:745) Caused by: java.io.IOException: java.net.SocketTimeoutException: 60000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/10.0.0.222:52069 remote=CnBRWfGV-Core5.jcloud.local/10.0.0.220:45454] at org.apache.hadoop.ipc.Client$Connection$1.run(Client.java:688) at java.security.AccessController.doPrivileged(Native Method) at javax.security.auth.Subject.doAs(Subject.java:422) at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1746) at org.apache.hadoop.ipc.Client$Connection.handleSaslConnectionFailure(Client.java:651) at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:738) at org.apache.hadoop.ipc.Client$Connection.access$2900(Client.java:376) at org.apache.hadoop.ipc.Client.getConnection(Client.java:1529) at org.apache.hadoop.ipc.Client.call(Client.java:1452) ... 15 more Caused by: java.net.SocketTimeoutException: 60000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/10.0.0.222:52069 remote=CnBRWfGV-Core5.jcloud.local/10.0.0.220:45454] at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:164) at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161) at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131) at java.io.FilterInputStream.read(FilterInputStream.java:133) at java.io.BufferedInputStream.fill(BufferedInputStream.java:246) at java.io.BufferedInputStream.read(BufferedInputStream.java:265) at java.io.DataInputStream.readInt(DataInputStream.java:387) at org.apache.hadoop.security.SaslRpcClient.saslConnect(SaslRpcClient.java:367) at org.apache.hadoop.ipc.Client$Connection.setupSaslConnection(Client.java:561) at org.apache.hadoop.ipc.Client$Connection.access$1900(Client.java:376) at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:730) at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:726) at java.security.AccessController.doPrivileged(Native Method) at javax.security.auth.Subject.doAs(Subject.java:422) at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1746) at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:726) ... 18 more

解决:出现以上错误是因为datanode的服务线程连接数都被占用,导致Yarn等待超时

1. 修改datanode的处理线程数量: hdfs-site.xml的 dfs.datanode.handler.count,默认是10

2.修改客户端的超时时间: hdfs-site.xml的 dfs.client.socket-timeout,默认是60000ms

修改完之后,同步到每个节点:ansible all -m copy -a "src=/usr/local/hadoop-2.7.4/etc/hadoop/hdfs-site.xml dest=/usr/local/hadoop-2.7.4/etc/hadoop/hdfs-site.xml"

刷新配置信息:hdfs dfsadmin -refreshNodes

问题2:

Error: java.io.IOException: All datanodes DatanodeInfoWithStorage[10.0.0.219:50010,DS-538c6742-6e34-453c-b4c9-c89efaf63905,DISK] are bad. Aborting... at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.setupPipelineForAppendOrRecovery(DFSOutputStream.java:1224) at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.processDatanodeError(DFSOutputStream.java:990) at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:507) Container killed by the ApplicationMaster. Container killed on request. Exit code is 143 Container exited with a non-zero exit code 143

解决:可能是datanode的数据盘太少,而container的数量太多,在并发写盘时会出这个问题,后来每台机器的container数量降到了8个,没再出现。

Hibench是如何计算map的container的数量的呢?

我在跑wordcount这个case的时候,设置的是64个并行度,那么wordcount准备数据时,一共创建了64个文件,如下:

23.9 G /HiBench/Wordcount/Input/part-m-00000

23.9 G /HiBench/Wordcount/Input/part-m-00001

23.9 G /HiBench/Wordcount/Input/part-m-00002

23.9 G /HiBench/Wordcount/Input/part-m-00003

23.9 G /HiBench/Wordcount/Input/part-m-00004

.......

在进行wordcount分析时,一共产生了6144个map,这是怎么算的呢?先看两个参数:

<property>

<name>file.blocksize</name>

<value>67108864</value> 67108864 / 1024 /1024 = 64mb

<source>core-default.xml</source>

</property>

<property>

<name>mapreduce.input.fileinputformat.split.minsize</name>

<value>268435456</value> 268435456 / 1024 /1024=256mb

<source>mapred-site.xml</source>

</property>

1.5 T /HiBench/Wordcount/Input 一共输入文件大小是 1.5 * 1024 * 1024 = 1572864 mb,1572864 /6144 = 256,也就说每个map处理256mb的数据,也就是 mapreduce.input.fileinputformat.split.minsize 起了作用

因此 wordcount一共启动了6144个map container

参考文档:https://blog.csdn.net/gangchengzhong/article/details/54861082