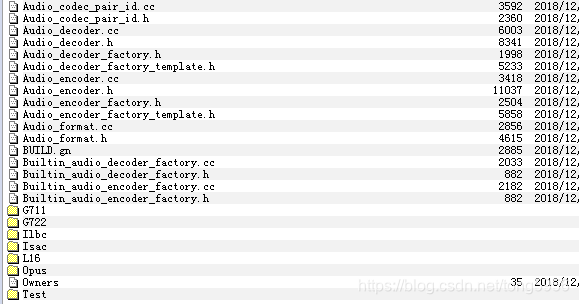

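This post analyzes the `audio_codecs` folder in WebRTC's API module (`api/audio_codecs`). The files covered are `audio_decoder.h`, `audio_encoder.h`, `audio_decoder_factory.h`, `audio_encoder_factory.h`, `builtin_audio_decoder_factory.cc`, `builtin_audio_encoder_factory.cc` and related headers. The post also briefly introduces the encoder interfaces for G711, G722, Opus and other codecs.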

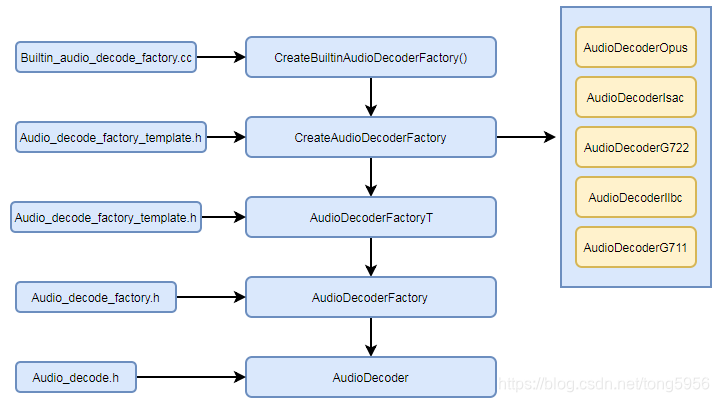

Decoding module flow:

Encoding module flow:

Let's start with decoding.

`audio_decoder.h`:

```cpp
#ifndef API_AUDIO_CODECS_AUDIO_DECODER_H_
#define API_AUDIO_CODECS_AUDIO_DECODER_H_

#include <stddef.h>
#include <stdint.h>

#include <memory>
#include <vector>

#include "absl/types/optional.h"
#include "api/array_view.h"
#include "rtc_base/buffer.h"
#include "rtc_base/constructormagic.h"

namespace webrtc {

class AudioDecoder {
 public:
  enum SpeechType {
    kSpeech = 1,
    kComfortNoise = 2,
  };

  // Used by PacketDuration below. Save the value -1 for errors.
  enum { kNotImplemented = -2 };

  AudioDecoder() = default;
  virtual ~AudioDecoder() = default;

  class EncodedAudioFrame {
   public:
    struct DecodeResult {
      size_t num_decoded_samples;
      SpeechType speech_type;
    };

    virtual ~EncodedAudioFrame() = default;

    // Returns the duration in samples-per-channel of this audio frame.
    // If no duration can be ascertained, returns zero.
    virtual size_t Duration() const = 0;

    // Returns true if this packet contains DTX.
    virtual bool IsDtxPacket() const;

    // Decodes this frame of audio and writes the result in |decoded|.
    // |decoded| must be large enough to store as many samples as indicated by a
    // call to Duration(). On success, returns an absl::optional containing the
    // total number of samples across all channels, as well as whether the
    // decoder produced comfort noise or speech. On failure, returns an empty
    // absl::optional. Decode may be called at most once per frame object.
    virtual absl::optional<DecodeResult> Decode(
        rtc::ArrayView<int16_t> decoded) const = 0;
  };

  struct ParseResult {
    ParseResult();
    ParseResult(uint32_t timestamp,
                int priority,
                std::unique_ptr<EncodedAudioFrame> frame);
    ParseResult(ParseResult&& b);
    ~ParseResult();

    ParseResult& operator=(ParseResult&& b);

    // The timestamp of the frame is in samples per channel.
    uint32_t timestamp;
    // The relative priority of the frame compared to other frames of the same
    // payload and the same timeframe. A higher value means a lower priority.
    // The highest priority is zero - negative values are not allowed.
    int priority;
    std::unique_ptr<EncodedAudioFrame> frame;
  };

  // Let the decoder parse this payload and prepare zero or more decodable
  // frames. Each frame must be between 10 ms and 120 ms long. The caller must
  // ensure that the AudioDecoder object outlives any frame objects returned by
  // this call. The decoder is free to swap or move the data from the |payload|
  // buffer. |timestamp| is the input timestamp, in samples, corresponding to
  // the start of the payload.
  virtual std::vector<ParseResult> ParsePayload(rtc::Buffer&& payload,
                                                uint32_t timestamp);

  // TODO(bugs.webrtc.org/10098): The Decode and DecodeRedundant methods are
  // obsolete; callers should call ParsePayload instead. For now, subclasses
  // must still implement DecodeInternal.

  // Decodes |encoded_len| bytes from |encoded| and writes the result in
  // |decoded|. The maximum bytes allowed to be written into |decoded| is
  // |max_decoded_bytes|. Returns the total number of samples across all
  // channels. If the decoder produced comfort noise, |speech_type|
  // is set to kComfortNoise, otherwise it is kSpeech. The desired output
  // sample rate is provided in |sample_rate_hz|, which must be valid for the
  // codec at hand.
  int Decode(const uint8_t* encoded,
             size_t encoded_len,
             int sample_rate_hz,
             size_t max_decoded_bytes,
             int16_t* decoded,
             SpeechType* speech_type);

  // Same as Decode(), but interfaces to the decoder's redundant decode function.
  // The default implementation simply calls the regular Decode() method.
  int DecodeRedundant(const uint8_t* encoded,
                      size_t encoded_len,
                      int sample_rate_hz,
                      size_t max_decoded_bytes,
                      int16_t* decoded,
                      SpeechType* speech_type);

  // Indicates if the decoder implements the DecodePlc method.
  virtual bool HasDecodePlc() const;

  // Calls the packet-loss concealment of the decoder to update the state after
  // one or several lost packets. The caller has to make sure that the
  // memory allocated in |decoded| should accommodate |num_frames| frames.
  virtual size_t DecodePlc(size_t num_frames, int16_t* decoded);

  // Asks the decoder to generate packet-loss concealment and append it to the
  // end of |concealment_audio|. The concealment audio should be in
  // channel-interleaved format, with as many channels as the last decoded
  // packet produced. The implementation must produce at least
  // requested_samples_per_channel, or nothing at all. This is a signal to the
  // caller to conceal the loss with other means. If the implementation provides
  // concealment samples, it is also responsible for "stitching" it together
  // with the decoded audio on either side of the concealment.
  // Note: The default implementation of GeneratePlc will be deleted soon. All
  // implementations must provide their own, which can be as simple as a no-op.
  // TODO(bugs.webrtc.org/9676): Remove default implementation.
  virtual void GeneratePlc(size_t requested_samples_per_channel,
                           rtc::BufferT<int16_t>* concealment_audio);

  // Resets the decoder state (empty buffers etc.).
  virtual void Reset() = 0;

  // Notifies the decoder of an incoming packet to NetEQ.
  virtual int IncomingPacket(const uint8_t* payload,
                             size_t payload_len,
                             uint16_t rtp_sequence_number,
                             uint32_t rtp_timestamp,
                             uint32_t arrival_timestamp);

  // Returns the last error code from the decoder.
  virtual int ErrorCode();

  // Returns the duration in samples-per-channel of the payload in |encoded|
  // which is |encoded_len| bytes long. Returns kNotImplemented if no duration
  // estimate is available, or -1 in case of an error.
  virtual int PacketDuration(const uint8_t* encoded, size_t encoded_len) const;

  // Returns the duration in samples-per-channel of the redundant payload in
  // |encoded| which is |encoded_len| bytes long. Returns kNotImplemented if no
  // duration estimate is available, or -1 in case of an error.
  virtual int PacketDurationRedundant(const uint8_t* encoded,
                                      size_t encoded_len) const;

  // Detects whether a packet has forward error correction. The packet is
  // comprised of the samples in |encoded| which is |encoded_len| bytes long.
  // Returns true if the packet has FEC and false otherwise.
  virtual bool PacketHasFec(const uint8_t* encoded, size_t encoded_len) const;

  // Returns the actual sample rate of the decoder's output. This value may not
  // change during the lifetime of the decoder.
  virtual int SampleRateHz() const = 0;

  // The number of channels in the decoder's output. This value may not change
  // during the lifetime of the decoder.
  virtual size_t Channels() const = 0;

 protected:
  static SpeechType ConvertSpeechType(int16_t type);

  virtual int DecodeInternal(const uint8_t* encoded,
                             size_t encoded_len,
                             int sample_rate_hz,
                             int16_t* decoded,
                             SpeechType* speech_type) = 0;

  virtual int DecodeRedundantInternal(const uint8_t* encoded,
                                      size_t encoded_len,
                                      int sample_rate_hz,
                                      int16_t* decoded,
                                      SpeechType* speech_type);

 private:
  RTC_DISALLOW_COPY_AND_ASSIGN(AudioDecoder);
};

}  // namespace webrtc

#endif  // API_AUDIO_CODECS_AUDIO_DECODER_H_
```
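The core idea of `AudioDecoder` is the two-stage flow that replaces the obsolete `Decode()`. First, `ParsePayload()` splits an RTP payload into `ParseResult` entries, each holding a timestamp, a priority and an `EncodedAudioFrame`. Each frame is then decoded later through `EncodedAudioFrame::Decode()`. Below is a minimal standalone sketch of that flow, not WebRTC code. `ToyFrame`, `ParseResult` and `ParsePayload` are simplified stand-ins, and the 8-bit linear PCM format at 8 kHz is a hypothetical codec chosen so that the sketch compiles without WebRTC.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <optional>
#include <utility>
#include <vector>

// Hypothetical codec: unsigned 8-bit linear PCM, 8 kHz, mono (1 byte = 1 sample).
constexpr size_t kSamplesPer10Ms = 8000 / 100;

// Simplified stand-in for EncodedAudioFrame::DecodeResult.
struct DecodeResult {
  size_t num_decoded_samples;
  bool comfort_noise;
};

// Simplified stand-in for AudioDecoder::EncodedAudioFrame.
class ToyFrame {
 public:
  explicit ToyFrame(std::vector<uint8_t> bytes) : bytes_(std::move(bytes)) {}

  // Duration in samples per channel.
  size_t Duration() const { return bytes_.size(); }

  // Like EncodedAudioFrame::Decode(): |decoded| must hold Duration() samples,
  // and an empty optional signals failure.
  std::optional<DecodeResult> Decode(std::vector<int16_t>& decoded) const {
    if (decoded.size() < Duration()) return std::nullopt;
    for (size_t i = 0; i < bytes_.size(); ++i) {
      // Map unsigned [0, 255] to signed 16-bit centered on zero.
      decoded[i] = static_cast<int16_t>((bytes_[i] - 128) * 256);
    }
    return DecodeResult{bytes_.size(), false};
  }

 private:
  std::vector<uint8_t> bytes_;
};

// Simplified stand-in for AudioDecoder::ParseResult.
struct ParseResult {
  uint32_t timestamp;  // In samples per channel.
  int priority;        // 0 is the highest priority.
  std::unique_ptr<ToyFrame> frame;
};

// Like AudioDecoder::ParsePayload(): split |payload| into 10 ms frames and
// give each frame the timestamp of its first sample.
std::vector<ParseResult> ParsePayload(const std::vector<uint8_t>& payload,
                                      uint32_t timestamp) {
  // Frames must be at least 10 ms long, so require whole 10 ms blocks.
  assert(payload.size() % kSamplesPer10Ms == 0);
  std::vector<ParseResult> results;
  for (size_t offset = 0; offset < payload.size(); offset += kSamplesPer10Ms) {
    std::vector<uint8_t> chunk(payload.begin() + offset,
                               payload.begin() + offset + kSamplesPer10Ms);
    results.push_back({timestamp + static_cast<uint32_t>(offset), 0,
                       std::make_unique<ToyFrame>(std::move(chunk))});
  }
  return results;
}
```

Each `ParseResult` carries its own timestamp and priority, so in principle a decoder can return several frames for one payload. One example would be a lower-priority redundant frame that covers an earlier timestamp. The sketch only produces primary frames with priority 0.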

`audio_decoder_factory.h`:

```cpp
/*
 * Copyright (c) 2016 The WebRTC project authors. All Rights Reserved.
 *
 * Use of this source code is governed by a BSD-style license
 * that can be found in the LICENSE file in the root of the source
 * tree. An additional intellectual property rights grant can be found
 * in the file PATENTS. All contributing project authors may
 * be found in the AUTHORS file in the root of the source tree.
 */

#ifndef API_AUDIO_CODECS_AUDIO_DECODER_FACTORY_H_
#define API_AUDIO_CODECS_AUDIO_DECODER_FACTORY_H_

#include <memory>
#include <vector>

#include "absl/types/optional.h"
#include "api/audio_codecs/audio_codec_pair_id.h"
#include "api/audio_codecs/audio_decoder.h"
#include "api/audio_codecs/audio_format.h"
#include "rtc_base/refcount.h"

namespace webrtc {

// A factory that creates AudioDecoders.
class AudioDecoderFactory : public rtc::RefCountInterface {
 public:
  virtual std::vector<AudioCodecSpec> GetSupportedDecoders() = 0;

  virtual bool IsSupportedDecoder(const SdpAudioFormat& format) = 0;

  // Create a new decoder instance. The `codec_pair_id` argument is used to link
  // encoders and decoders that talk to the same remote entity: if an
  // AudioEncoderFactory::MakeAudioEncoder() and an
  // AudioDecoderFactory::MakeAudioDecoder() call receive non-null IDs that
  // compare equal, the factory implementations may assume that the encoder and
  // decoder form a pair. (The intended use case for this is to set up
  // communication between the AudioEncoder and AudioDecoder instances, which is
  // needed for some codecs with built-in bandwidth adaptation.)
  //
  // Note: Implementations need to be robust against combinations other than
  // one encoder, one decoder getting the same ID; such decoders must still
  // work.
  virtual std::unique_ptr<AudioDecoder> MakeAudioDecoder(
      const SdpAudioFormat& format,
      absl::optional<AudioCodecPairId> codec_pair_id) = 0;
};

}  // namespace webrtc

#endif  // API_AUDIO_CODECS_AUDIO_DECODER_FACTORY_H_
```
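`AudioDecoderFactory` boils down to mapping an `SdpAudioFormat` to a decoder. Before looking at the template version, here is a standalone sketch of what a hand-written factory for just PCMU and PCMA might look like. `SdpAudioFormat`, `AudioCodecSpec` and `ToyDecoder` below are simplified, hypothetical stand-ins with far fewer fields than the real types. Codec names are compared case-insensitively because SDP codec names are generally treated that way.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <memory>
#include <string>
#include <vector>

// Simplified, hypothetical stand-ins for the WebRTC types.
struct SdpAudioFormat {
  std::string name;
  int clockrate_hz;
  size_t num_channels;
};

struct AudioCodecSpec {
  SdpAudioFormat format;
};

struct ToyDecoder {
  int sample_rate_hz;
  size_t channels;
};

bool EqualsIgnoreCase(const std::string& a, const std::string& b) {
  if (a.size() != b.size()) return false;
  for (size_t i = 0; i < a.size(); ++i) {
    if (std::tolower(static_cast<unsigned char>(a[i])) !=
        std::tolower(static_cast<unsigned char>(b[i])))
      return false;
  }
  return true;
}

// A hand-written factory that only knows G.711 (PCMU/PCMA at 8 kHz).
class ToyDecoderFactory {
 public:
  std::vector<AudioCodecSpec> GetSupportedDecoders() const {
    std::vector<AudioCodecSpec> specs;
    specs.push_back({SdpAudioFormat{"PCMU", 8000, 1}});
    specs.push_back({SdpAudioFormat{"PCMA", 8000, 1}});
    return specs;
  }

  bool IsSupportedDecoder(const SdpAudioFormat& format) const {
    return (EqualsIgnoreCase(format.name, "PCMU") ||
            EqualsIgnoreCase(format.name, "PCMA")) &&
           format.clockrate_hz == 8000 && format.num_channels >= 1;
  }

  // Returns nullptr for formats it cannot handle.
  std::unique_ptr<ToyDecoder> MakeAudioDecoder(
      const SdpAudioFormat& format) const {
    if (!IsSupportedDecoder(format)) return nullptr;
    return std::make_unique<ToyDecoder>(ToyDecoder{8000, format.num_channels});
  }
};
```

Every new codec would add another branch to each of these methods. `audio_decoder_factory_template.h` removes that duplication by generating the branches from a list of codec types.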

`audio_decoder_factory_template.h`:

```cpp
#ifndef API_AUDIO_CODECS_AUDIO_DECODER_FACTORY_TEMPLATE_H_
#define API_AUDIO_CODECS_AUDIO_DECODER_FACTORY_TEMPLATE_H_

#include <memory>
#include <type_traits>
#include <vector>

#include "api/audio_codecs/audio_decoder_factory.h"
#include "rtc_base/refcountedobject.h"
#include "rtc_base/scoped_ref_ptr.h"

namespace webrtc {

namespace audio_decoder_factory_template_impl {

template <typename... Ts>
struct Helper;

// Base case: 0 template parameters.
template <>
struct Helper<> {
  static void AppendSupportedDecoders(std::vector<AudioCodecSpec>* specs) {}
  static bool IsSupportedDecoder(const SdpAudioFormat& format) { return false; }
  static std::unique_ptr<AudioDecoder> MakeAudioDecoder(
      const SdpAudioFormat& format,
      absl::optional<AudioCodecPairId> codec_pair_id) {
    return nullptr;
  }
};

// Inductive case: Called with n + 1 template parameters; calls subroutines
// with n template parameters.
template <typename T, typename... Ts>
struct Helper<T, Ts...> {
  static void AppendSupportedDecoders(std::vector<AudioCodecSpec>* specs) {
    T::AppendSupportedDecoders(specs);
    Helper<Ts...>::AppendSupportedDecoders(specs);
  }
  static bool IsSupportedDecoder(const SdpAudioFormat& format) {
    auto opt_config = T::SdpToConfig(format);
    static_assert(std::is_same<decltype(opt_config),
                               absl::optional<typename T::Config>>::value,
                  "T::SdpToConfig() must return a value of type "
                  "absl::optional<T::Config>");
    return opt_config ? true : Helper<Ts...>::IsSupportedDecoder(format);
  }
  static std::unique_ptr<AudioDecoder> MakeAudioDecoder(
      const SdpAudioFormat& format,
      absl::optional<AudioCodecPairId> codec_pair_id) {
    auto opt_config = T::SdpToConfig(format);
    return opt_config ? T::MakeAudioDecoder(*opt_config, codec_pair_id)
                      : Helper<Ts...>::MakeAudioDecoder(format, codec_pair_id);
  }
};

template <typename... Ts>
class AudioDecoderFactoryT : public AudioDecoderFactory {
 public:
  std::vector<AudioCodecSpec> GetSupportedDecoders() override {
    std::vector<AudioCodecSpec> specs;
    Helper<Ts...>::AppendSupportedDecoders(&specs);
    return specs;
  }

  bool IsSupportedDecoder(const SdpAudioFormat& format) override {
    return Helper<Ts...>::IsSupportedDecoder(format);
  }

  std::unique_ptr<AudioDecoder> MakeAudioDecoder(
      const SdpAudioFormat& format,
      absl::optional<AudioCodecPairId> codec_pair_id) override {
    return Helper<Ts...>::MakeAudioDecoder(format, codec_pair_id);
  }
};

}  // namespace audio_decoder_factory_template_impl

// Make an AudioDecoderFactory that can create instances of the given decoders.
//
// Each decoder type is given as a template argument to the function; it should
// be a struct with the following static member functions:
//
//   // Converts |audio_format| to a ConfigType instance. Returns an empty
//   // optional if |audio_format| doesn't correctly specify a decoder of our
//   // type.
//   absl::optional<ConfigType> SdpToConfig(const SdpAudioFormat& audio_format);
//
//   // Appends zero or more AudioCodecSpecs to the list that will be returned
//   // by AudioDecoderFactory::GetSupportedDecoders().
//   void AppendSupportedDecoders(std::vector<AudioCodecSpec>* specs);
//
//   // Creates an AudioDecoder for the specified format. Used to implement
//   // AudioDecoderFactory::MakeAudioDecoder().
//   std::unique_ptr<AudioDecoder> MakeAudioDecoder(
//       const ConfigType& config,
//       absl::optional<AudioCodecPairId> codec_pair_id);
//
// ConfigType should be a type that encapsulates all the settings needed to
// create an AudioDecoder. T::Config (where T is the decoder struct) should
// either be the config type, or an alias for it.
//
// Whenever it tries to do something, the new factory will try each of the
// decoder types in the order they were specified in the template argument
// list, stopping at the first one that claims to be able to do the job.
//
// TODO(kwiberg): Point at CreateBuiltinAudioDecoderFactory() for an example of
// how it is used.
template <typename... Ts>
rtc::scoped_refptr<AudioDecoderFactory> CreateAudioDecoderFactory() {
  // There's no technical reason we couldn't allow zero template parameters,
  // but such a factory couldn't create any decoders, and callers can do this
  // by mistake by simply forgetting the <> altogether. So we forbid it in
  // order to prevent caller foot-shooting.
  static_assert(sizeof...(Ts) >= 1,
                "Caller must give at least one template parameter");

  return rtc::scoped_refptr<AudioDecoderFactory>(
      new rtc::RefCountedObject<
          audio_decoder_factory_template_impl::AudioDecoderFactoryT<Ts...>>());
}

}  // namespace webrtc

#endif  // API_AUDIO_CODECS_AUDIO_DECODER_FACTORY_TEMPLATE_H_
```
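`Helper<T, Ts...>` is a compile-time recursion over the parameter pack. Each method asks `T::SdpToConfig()` first and falls through to `Helper<Ts...>` on an empty optional. The recursion stops at the `Helper<>` base case, which returns `false` or `nullptr`. The standalone sketch below reproduces that pattern with some simplifications:

- Two hypothetical codec structs, `ToyPcmu` and `ToyOpus`.
- `std::optional` in place of `absl::optional`.
- Plain codec names in place of `AudioCodecSpec`.
- The `codec_pair_id` parameter is left out for brevity.

```cpp
#include <cassert>
#include <memory>
#include <optional>
#include <string>
#include <type_traits>
#include <vector>

// Simplified, hypothetical stand-ins for SdpAudioFormat and AudioDecoder.
struct SdpAudioFormat {
  std::string name;
  int clockrate_hz;
};
struct ToyDecoder {
  std::string codec;
  int sample_rate_hz;
};

// Hypothetical codec structs following the SdpToConfig() /
// AppendSupportedDecoders() / MakeAudioDecoder() convention.
struct ToyPcmu {
  struct Config { int sample_rate_hz; };
  static std::optional<Config> SdpToConfig(const SdpAudioFormat& f) {
    if (f.name == "PCMU" && f.clockrate_hz == 8000) return Config{8000};
    return std::nullopt;
  }
  static void AppendSupportedDecoders(std::vector<std::string>* names) {
    names->push_back("PCMU");
  }
  static std::unique_ptr<ToyDecoder> MakeAudioDecoder(const Config& c) {
    return std::make_unique<ToyDecoder>(ToyDecoder{"PCMU", c.sample_rate_hz});
  }
};

struct ToyOpus {
  struct Config { int sample_rate_hz; };
  static std::optional<Config> SdpToConfig(const SdpAudioFormat& f) {
    if (f.name == "opus" && f.clockrate_hz == 48000) return Config{48000};
    return std::nullopt;
  }
  static void AppendSupportedDecoders(std::vector<std::string>* names) {
    names->push_back("opus");
  }
  static std::unique_ptr<ToyDecoder> MakeAudioDecoder(const Config& c) {
    return std::make_unique<ToyDecoder>(ToyDecoder{"opus", c.sample_rate_hz});
  }
};

template <typename... Ts>
struct Helper;

// Base case: no codec types left, so nothing matches.
template <>
struct Helper<> {
  static void AppendSupportedDecoders(std::vector<std::string>*) {}
  static bool IsSupportedDecoder(const SdpAudioFormat&) { return false; }
  static std::unique_ptr<ToyDecoder> MakeAudioDecoder(const SdpAudioFormat&) {
    return nullptr;
  }
};

// Inductive case: ask T first, otherwise recurse into the remaining types.
template <typename T, typename... Ts>
struct Helper<T, Ts...> {
  static void AppendSupportedDecoders(std::vector<std::string>* names) {
    T::AppendSupportedDecoders(names);
    Helper<Ts...>::AppendSupportedDecoders(names);
  }
  static bool IsSupportedDecoder(const SdpAudioFormat& f) {
    static_assert(std::is_same<decltype(T::SdpToConfig(f)),
                               std::optional<typename T::Config>>::value,
                  "T::SdpToConfig() must return std::optional<T::Config>");
    return T::SdpToConfig(f) ? true : Helper<Ts...>::IsSupportedDecoder(f);
  }
  static std::unique_ptr<ToyDecoder> MakeAudioDecoder(const SdpAudioFormat& f) {
    auto config = T::SdpToConfig(f);
    return config ? T::MakeAudioDecoder(*config)
                  : Helper<Ts...>::MakeAudioDecoder(f);
  }
};
```

With `Helper<ToyOpus, ToyPcmu>`, Opus is listed first, so `AppendSupportedDecoders()` reports the codecs in template-argument order.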

`builtin_audio_decoder_factory.cc`:

```cpp
#include "api/audio_codecs/builtin_audio_decoder_factory.h"

#include <memory>
#include <vector>

#include "api/audio_codecs/L16/audio_decoder_L16.h"
#include "api/audio_codecs/audio_decoder_factory_template.h"
#include "api/audio_codecs/g711/audio_decoder_g711.h"
#include "api/audio_codecs/g722/audio_decoder_g722.h"
#if WEBRTC_USE_BUILTIN_ILBC
#include "api/audio_codecs/ilbc/audio_decoder_ilbc.h"  // nogncheck
#endif
#include "api/audio_codecs/isac/audio_decoder_isac.h"
#if WEBRTC_USE_BUILTIN_OPUS
#include "api/audio_codecs/opus/audio_decoder_opus.h"  // nogncheck
#endif

namespace webrtc {

namespace {

// Modify an audio decoder to not advertise support for anything.
template <typename T>
struct NotAdvertised {
  using Config = typename T::Config;
  static absl::optional<Config> SdpToConfig(
      const SdpAudioFormat& audio_format) {
    return T::SdpToConfig(audio_format);
  }
  static void AppendSupportedDecoders(std::vector<AudioCodecSpec>* specs) {
    // Don't advertise support for anything.
  }
  static std::unique_ptr<AudioDecoder> MakeAudioDecoder(
      const Config& config,
      absl::optional<AudioCodecPairId> codec_pair_id = absl::nullopt) {
    return T::MakeAudioDecoder(config, codec_pair_id);
  }
};

}  // namespace

rtc::scoped_refptr<AudioDecoderFactory> CreateBuiltinAudioDecoderFactory() {
  return CreateAudioDecoderFactory<

#if WEBRTC_USE_BUILTIN_OPUS
      AudioDecoderOpus,
#endif

      AudioDecoderIsac, AudioDecoderG722,

#if WEBRTC_USE_BUILTIN_ILBC
      AudioDecoderIlbc,
#endif

      AudioDecoderG711, NotAdvertised<AudioDecoderL16>>();
}

}  // namespace webrtc
```
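`NotAdvertised<T>` forwards `SdpToConfig()` and `MakeAudioDecoder()` to `T`, but turns `AppendSupportedDecoders()` into a no-op. As a result, the built-in factory can still create an L16 decoder when asked for one, while L16 never shows up in `GetSupportedDecoders()`. The sketch below demonstrates this with hypothetical `ToyG711` and `ToyL16` structs. For brevity it uses C++17 fold expressions in place of the recursive `Helper`. The `||` fold short-circuits left to right, so it keeps the same first-match behavior.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <vector>

// Hypothetical codec structs; "Config" is just a sample rate here.
struct ToyG711 {
  using Config = int;
  static std::optional<Config> SdpToConfig(const std::string& name) {
    if (name == "PCMU" || name == "PCMA") return 8000;
    return std::nullopt;
  }
  static void AppendSupportedDecoders(std::vector<std::string>* names) {
    names->push_back("PCMU");
    names->push_back("PCMA");
  }
};

struct ToyL16 {
  using Config = int;
  static std::optional<Config> SdpToConfig(const std::string& name) {
    if (name == "L16") return 16000;
    return std::nullopt;
  }
  static void AppendSupportedDecoders(std::vector<std::string>* names) {
    names->push_back("L16");
  }
};

// Same idea as NotAdvertised in builtin_audio_decoder_factory.cc: forward the
// format parsing to T, but advertise nothing.
template <typename T>
struct NotAdvertised {
  using Config = typename T::Config;
  static std::optional<Config> SdpToConfig(const std::string& name) {
    return T::SdpToConfig(name);
  }
  static void AppendSupportedDecoders(std::vector<std::string>*) {}
};

// Fold expressions standing in for Helper<Ts...>.
template <typename... Ts>
std::vector<std::string> SupportedDecoders() {
  std::vector<std::string> names;
  (Ts::AppendSupportedDecoders(&names), ...);
  return names;
}

template <typename... Ts>
bool IsSupportedDecoder(const std::string& name) {
  return (Ts::SdpToConfig(name).has_value() || ...);
}
```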

Next comes encoding.

`audio_encoder.h`:

```cpp
#ifndef API_AUDIO_CODECS_AUDIO_ENCODER_H_
#define API_AUDIO_CODECS_AUDIO_ENCODER_H_

#include <memory>
#include <string>
#include <vector>

#include "absl/types/optional.h"
#include "api/array_view.h"
#include "api/call/bitrate_allocation.h"
#include "rtc_base/buffer.h"
#include "rtc_base/deprecation.h"

namespace webrtc {

class RtcEventLog;

// Statistics related to Audio Network Adaptation.
struct ANAStats {
  ANAStats();
  ANAStats(const ANAStats&);
  ~ANAStats();
  // Number of actions taken by the ANA bitrate controller since the start of
  // the call. If this value is not set, it indicates that the bitrate
  // controller is disabled.
  absl::optional<uint32_t> bitrate_action_counter;
  // Number of actions taken by the ANA channel controller since the start of
  // the call. If this value is not set, it indicates that the channel
  // controller is disabled.
  absl::optional<uint32_t> channel_action_counter;
  // Number of actions taken by the ANA DTX controller since the start of the
  // call. If this value is not set, it indicates that the DTX controller is
  // disabled.
  absl::optional<uint32_t> dtx_action_counter;
  // Number of actions taken by the ANA FEC controller since the start of the
  // call. If this value is not set, it indicates that the FEC controller is
  // disabled.
  absl::optional<uint32_t> fec_action_counter;
  // Number of times the ANA frame length controller decided to increase the
  // frame length since the start of the call. If this value is not set, it
  // indicates that the frame length controller is disabled.
  absl::optional<uint32_t> frame_length_increase_counter;
  // Number of times the ANA frame length controller decided to decrease the
  // frame length since the start of the call. If this value is not set, it
  // indicates that the frame length controller is disabled.
  absl::optional<uint32_t> frame_length_decrease_counter;
  // The uplink packet loss fractions as set by the ANA FEC controller. If this
  // value is not set, it indicates that the ANA FEC controller is not active.
  absl::optional<float> uplink_packet_loss_fraction;
};

// This is the interface class for encoders in AudioCoding module. Each codec
// type must have an implementation of this class.
class AudioEncoder {
 public:
  // Used for UMA logging of codec usage. The same codecs, with the
  // same values, must be listed in
  // src/tools/metrics/histograms/histograms.xml in chromium to log
  // correct values.
  enum class CodecType {
    kOther = 0,  // Codec not specified, and/or not listed in this enum
    kOpus = 1,
    kIsac = 2,
    kPcmA = 3,
    kPcmU = 4,
    kG722 = 5,
    kIlbc = 6,

    // Number of histogram bins in the UMA logging of codec types. The
    // total number of different codecs that are logged cannot exceed this
    // number.
    kMaxLoggedAudioCodecTypes
  };

  struct EncodedInfoLeaf {
    size_t encoded_bytes = 0;
    uint32_t encoded_timestamp = 0;
    int payload_type = 0;
    bool send_even_if_empty = false;
    bool speech = true;
    CodecType encoder_type = CodecType::kOther;
  };

  // This is the main struct for auxiliary encoding information. Each encoded
  // packet should be accompanied by one EncodedInfo struct, containing the
  // total number of |encoded_bytes|, the |encoded_timestamp| and the
  // |payload_type|. If the packet contains redundant encodings, the |redundant|
  // vector will be populated with EncodedInfoLeaf structs. Each struct in the
  // vector represents one encoding; the order of structs in the vector is the
  // same as the order in which the actual payloads are written to the byte
  // stream. When EncodedInfoLeaf structs are present in the vector, the main
  // struct's |encoded_bytes| will be the sum of all the |encoded_bytes| in the
  // vector.
  struct EncodedInfo : public EncodedInfoLeaf {
    EncodedInfo();
    EncodedInfo(const EncodedInfo&);
    EncodedInfo(EncodedInfo&&);
    ~EncodedInfo();
    EncodedInfo& operator=(const EncodedInfo&);
    EncodedInfo& operator=(EncodedInfo&&);

    std::vector<EncodedInfoLeaf> redundant;
  };

  virtual ~AudioEncoder() = default;

  // Returns the input sample rate in Hz and the number of input channels.
  // These are constants set at instantiation time.
  virtual int SampleRateHz() const = 0;
  virtual size_t NumChannels() const = 0;

  // Returns the rate at which the RTP timestamps are updated. The default
  // implementation returns SampleRateHz().
  virtual int RtpTimestampRateHz() const;

  // Returns the number of 10 ms frames the encoder will put in the next
  // packet. This value may only change when Encode() outputs a packet; i.e.,
  // the encoder may vary the number of 10 ms frames from packet to packet, but
  // it must decide the length of the next packet no later than when outputting
  // the preceding packet.
  virtual size_t Num10MsFramesInNextPacket() const = 0;

  // Returns the maximum value that can be returned by
  // Num10MsFramesInNextPacket().
  virtual size_t Max10MsFramesInAPacket() const = 0;

  // Returns the current target bitrate in bits/s. The value -1 means that the
  // codec adapts the target automatically, and a current target cannot be
  // provided.
  virtual int GetTargetBitrate() const = 0;

  // Accepts one 10 ms block of input audio (i.e., SampleRateHz() / 100 *
  // NumChannels() samples). Multi-channel audio must be sample-interleaved.
  // The encoder appends zero or more bytes of output to |encoded| and returns
  // additional encoding information. Encode() checks some preconditions, calls
  // EncodeImpl() which does the actual work, and then checks some
  // postconditions.
  EncodedInfo Encode(uint32_t rtp_timestamp,
                     rtc::ArrayView<const int16_t> audio,
                     rtc::Buffer* encoded);

  // Resets the encoder to its starting state, discarding any input that has
  // been fed to the encoder but not yet emitted in a packet.
  virtual void Reset() = 0;

  // Enables or disables codec-internal FEC (forward error correction). Returns
  // true if the codec was able to comply. The default implementation returns
  // true when asked to disable FEC and false when asked to enable it (meaning
  // that FEC isn't supported).
  virtual bool SetFec(bool enable);

  // Enables or disables codec-internal VAD/DTX. Returns true if the codec was
  // able to comply. The default implementation returns true when asked to
  // disable DTX and false when asked to enable it (meaning that DTX isn't
  // supported).
  virtual bool SetDtx(bool enable);

  // Returns the status of codec-internal DTX. The default implementation always
  // returns false.
  virtual bool GetDtx() const;

  // Sets the application mode. Returns true if the codec was able to comply.
  // The default implementation just returns false.
  enum class Application { kSpeech, kAudio };
  virtual bool SetApplication(Application application);

  // Tells the encoder about the highest sample rate the decoder is expected to
  // use when decoding the bitstream. The encoder would typically use this
  // information to adjust the quality of the encoding. The default
  // implementation does nothing.
  virtual void SetMaxPlaybackRate(int frequency_hz);

  // This is to be deprecated. Please use |OnReceivedTargetAudioBitrate|
  // instead.
  // Tells the encoder what average bitrate we'd like it to produce. The
  // encoder is free to adjust or disregard the given bitrate (the default
  // implementation does the latter).
  RTC_DEPRECATED virtual void SetTargetBitrate(int target_bps);

  // Causes this encoder to let go of any other encoders it contains, and
  // returns a pointer to an array where they are stored (which is required to
  // live as long as this encoder). Unless the returned array is empty, you may
  // not call any methods on this encoder afterwards, except for the
  // destructor. The default implementation just returns an empty array.
  // NOTE: This method is subject to change. Do not call or override it.
  virtual rtc::ArrayView<std::unique_ptr<AudioEncoder>>
  ReclaimContainedEncoders();

  // Enables audio network adaptor. Returns true if successful.
  virtual bool EnableAudioNetworkAdaptor(const std::string& config_string,
                                         RtcEventLog* event_log);

  // Disables audio network adaptor.
  virtual void DisableAudioNetworkAdaptor();

  // Provides uplink packet loss fraction to this encoder to allow it to adapt.
  // |uplink_packet_loss_fraction| is in the range [0.0, 1.0].
  virtual void OnReceivedUplinkPacketLossFraction(
      float uplink_packet_loss_fraction);

  // Provides 1st-order-FEC-recoverable uplink packet loss rate to this encoder
  // to allow it to adapt.
  // |uplink_recoverable_packet_loss_fraction| is in the range [0.0, 1.0].
  virtual void OnReceivedUplinkRecoverablePacketLossFraction(
      float uplink_recoverable_packet_loss_fraction);

  // Provides target audio bitrate to this encoder to allow it to adapt.
  virtual void OnReceivedTargetAudioBitrate(int target_bps);

  // Provides target audio bitrate and corresponding probing interval of
  // the bandwidth estimator to this encoder to allow it to adapt.
  virtual void OnReceivedUplinkBandwidth(int target_audio_bitrate_bps,
                                         absl::optional<int64_t> bwe_period_ms);

  // Provides target audio bitrate and corresponding probing interval of
  // the bandwidth estimator to this encoder to allow it to adapt.
  virtual void OnReceivedUplinkAllocation(BitrateAllocationUpdate update);

  // Provides RTT to this encoder to allow it to adapt.
  virtual void OnReceivedRtt(int rtt_ms);

  // Provides overhead to this encoder to adapt. The overhead is the number of
  // bytes that will be added to each packet the encoder generates.
  virtual void OnReceivedOverhead(size_t overhead_bytes_per_packet);

  // To allow encoder to adapt its frame length, it must be provided the frame
  // length range that receivers can accept.
  virtual void SetReceiverFrameLengthRange(int min_frame_length_ms,
                                           int max_frame_length_ms);

  // Get statistics related to audio network adaptation.
  virtual ANAStats GetANAStats() const;

 protected:
  // Subclasses implement this to perform the actual encoding. Called by
  // Encode().
  virtual EncodedInfo EncodeImpl(uint32_t rtp_timestamp,
                                 rtc::ArrayView<const int16_t> audio,
                                 rtc::Buffer* encoded) = 0;
};

}  // namespace webrtc

#endif  // API_AUDIO_CODECS_AUDIO_ENCODER_H_
```
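`Encode()` is a non-virtual wrapper around the codec-specific `EncodeImpl()`, which is the template method pattern. The caller must pass exactly one 10 ms block, i.e. `SampleRateHz() / 100 * NumChannels()` samples. The encoder emits a packet only after it has collected `Num10MsFramesInNextPacket()` blocks. The standalone sketch below illustrates that contract, with these assumptions:

- `ToyEncoder` and the cut-down `EncodedInfo` are hypothetical.
- The "raw PCM" codec is hypothetical and packs two 10 ms blocks (20 ms) per packet.
- The two `assert` checks only loosely mirror the precondition and postcondition checks mentioned in the `Encode()` comment.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Cut-down, hypothetical stand-in for AudioEncoder::EncodedInfo.
struct EncodedInfo {
  size_t encoded_bytes = 0;
  uint32_t encoded_timestamp = 0;
  int payload_type = 0;
};

class ToyEncoder {
 public:
  virtual ~ToyEncoder() = default;
  virtual int SampleRateHz() const = 0;
  virtual size_t NumChannels() const = 0;

  // Non-virtual entry point: check preconditions, delegate, check results.
  EncodedInfo Encode(uint32_t rtp_timestamp, const std::vector<int16_t>& audio,
                     std::vector<uint8_t>* encoded) {
    // Exactly one 10 ms block of interleaved samples per call.
    assert(audio.size() ==
           static_cast<size_t>(SampleRateHz() / 100) * NumChannels());
    const size_t old_size = encoded->size();
    EncodedInfo info = EncodeImpl(rtp_timestamp, audio, encoded);
    // The reported size must match the number of bytes actually appended.
    assert(encoded->size() - old_size == info.encoded_bytes);
    return info;
  }

 protected:
  virtual EncodedInfo EncodeImpl(uint32_t rtp_timestamp,
                                 const std::vector<int16_t>& audio,
                                 std::vector<uint8_t>* encoded) = 0;
};

// Hypothetical codec: copies samples verbatim (host byte order, for
// simplicity) and puts two 10 ms blocks in each packet.
class ToyRawPcmEncoder : public ToyEncoder {
 public:
  ToyRawPcmEncoder(int sample_rate_hz, int payload_type)
      : sample_rate_hz_(sample_rate_hz), payload_type_(payload_type) {}
  int SampleRateHz() const override { return sample_rate_hz_; }
  size_t NumChannels() const override { return 1; }
  size_t Num10MsFramesInNextPacket() const { return 2; }

 protected:
  EncodedInfo EncodeImpl(uint32_t rtp_timestamp,
                         const std::vector<int16_t>& audio,
                         std::vector<uint8_t>* encoded) override {
    if (buffer_.empty()) first_timestamp_ = rtp_timestamp;
    buffer_.insert(buffer_.end(), audio.begin(), audio.end());
    EncodedInfo info;
    if (buffer_.size() < Num10MsFramesInNextPacket() * audio.size())
      return info;  // Still buffering: no packet yet, encoded_bytes == 0.
    const size_t bytes = buffer_.size() * sizeof(int16_t);
    const size_t old_size = encoded->size();
    encoded->resize(old_size + bytes);
    std::memcpy(encoded->data() + old_size, buffer_.data(), bytes);
    buffer_.clear();
    info.encoded_bytes = bytes;
    info.encoded_timestamp = first_timestamp_;  // Timestamp of first block.
    info.payload_type = payload_type_;
    return info;
  }

 private:
  int sample_rate_hz_;
  int payload_type_;
  uint32_t first_timestamp_ = 0;
  std::vector<int16_t> buffer_;
};
```

With 8 kHz mono input, each 10 ms block is 80 samples. The first call therefore only buffers and returns `encoded_bytes == 0`. The second call emits 2 × 80 samples × 2 bytes = 320 bytes, stamped with the timestamp of the first block.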

`audio_encoder_factory.h`:

```cpp
#ifndef API_AUDIO_CODECS_AUDIO_ENCODER_FACTORY_H_
#define API_AUDIO_CODECS_AUDIO_ENCODER_FACTORY_H_

#include <memory>
#include <vector>

#include "absl/types/optional.h"
#include "api/audio_codecs/audio_codec_pair_id.h"
#include "api/audio_codecs/audio_encoder.h"
#include "api/audio_codecs/audio_format.h"
#include "rtc_base/refcount.h"

namespace webrtc {

// A factory that creates AudioEncoders.
class AudioEncoderFactory : public rtc::RefCountInterface {
 public:
  // Returns a prioritized list of audio codecs, to use for signaling etc.
  virtual std::vector<AudioCodecSpec> GetSupportedEncoders() = 0;

  // Returns information about how this format would be encoded, provided it's
  // supported. More format and format variations may be supported than those
  // returned by GetSupportedEncoders().
  virtual absl::optional<AudioCodecInfo> QueryAudioEncoder(
      const SdpAudioFormat& format) = 0;

  // Creates an AudioEncoder for the specified format. The encoder will tag its
  // payloads with the specified payload type. The `codec_pair_id` argument is
  // used to link encoders and decoders that talk to the same remote entity: if
  // an AudioEncoderFactory::MakeAudioEncoder() and an
  // AudioDecoderFactory::MakeAudioDecoder() call receive non-null IDs that
  // compare equal, the factory implementations may assume that the encoder and
  // decoder form a pair. (The intended use case for this is to set up
  // communication between the AudioEncoder and AudioDecoder instances, which is
  // needed for some codecs with built-in bandwidth adaptation.)
  //
  // Note: Implementations need to be robust against combinations other than
  // one encoder, one decoder getting the same ID; such encoders must still
  // work.
  //
  // TODO(ossu): Try to avoid audio encoders having to know their payload type.
  virtual std::unique_ptr<AudioEncoder> MakeAudioEncoder(
      int payload_type,
      const SdpAudioFormat& format,
      absl::optional<AudioCodecPairId> codec_pair_id) = 0;
};

}  // namespace webrtc

#endif  // API_AUDIO_CODECS_AUDIO_ENCODER_FACTORY_H_
```
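Compared with the decoder factory, the encoder factory adds two things. `QueryAudioEncoder()` describes how a format would be encoded without constructing an encoder. `MakeAudioEncoder()` additionally receives the RTP payload type that the encoder will tag its packets with. The sketch below is standalone and hypothetical. `ToyCodecInfo` keeps only three fields of what `AudioCodecInfo` (declared in `audio_format.h`) describes, and the factory supports only PCMU. PCMU's 64 kbit/s per channel follows from 8000 samples/s × 8 bits.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <optional>
#include <string>

// Simplified, hypothetical stand-in for SdpAudioFormat.
struct SdpAudioFormat {
  std::string name;
  int clockrate_hz;
  size_t num_channels;
};

// Cut-down, hypothetical stand-in for AudioCodecInfo.
struct ToyCodecInfo {
  int sample_rate_hz;
  size_t num_channels;
  int default_bitrate_bps;
};

struct ToyEncoder {
  int payload_type;  // Would be stamped onto every packet this encoder emits.
  int sample_rate_hz;
};

class ToyEncoderFactory {
 public:
  // Describes the encoding without constructing an encoder.
  std::optional<ToyCodecInfo> QueryAudioEncoder(const SdpAudioFormat& f) const {
    if (f.name != "PCMU" || f.clockrate_hz != 8000 || f.num_channels == 0)
      return std::nullopt;
    // G.711: 8000 samples/s x 8 bits per sample, per channel.
    return ToyCodecInfo{8000, f.num_channels,
                        64000 * static_cast<int>(f.num_channels)};
  }

  // Returns nullptr for unsupported formats.
  std::unique_ptr<ToyEncoder> MakeAudioEncoder(int payload_type,
                                               const SdpAudioFormat& f) const {
    if (!QueryAudioEncoder(f)) return nullptr;
    return std::make_unique<ToyEncoder>(ToyEncoder{payload_type, 8000});
  }
};
```

PCMU's static RTP payload type is 0 (RFC 3551), so a caller would typically pass 0 for it.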

`audio_encoder_factory_template.h`:

```cpp
#ifndef API_AUDIO_CODECS_AUDIO_ENCODER_FACTORY_TEMPLATE_H_
#define API_AUDIO_CODECS_AUDIO_ENCODER_FACTORY_TEMPLATE_H_

#include <memory>
#include <type_traits>
#include <vector>

#include "api/audio_codecs/audio_encoder_factory.h"
#include "rtc_base/refcountedobject.h"
#include "rtc_base/scoped_ref_ptr.h"

namespace webrtc {

namespace audio_encoder_factory_template_impl {

template <typename... Ts>
struct Helper;

// Base case: 0 template parameters.
template <>
struct Helper<> {
  static void AppendSupportedEncoders(std::vector<AudioCodecSpec>* specs) {}
  static absl::optional<AudioCodecInfo> QueryAudioEncoder(
      const SdpAudioFormat& format) {
    return absl::nullopt;
  }
  static std::unique_ptr<AudioEncoder> MakeAudioEncoder(
      int payload_type,
      const SdpAudioFormat& format,
      absl::optional<AudioCodecPairId> codec_pair_id) {
    return nullptr;
  }
};

// Inductive case: Called with n + 1 template parameters; calls subroutines
// with n template parameters.
template <typename T, typename... Ts>
struct Helper<T, Ts...> {
  static void AppendSupportedEncoders(std::vector<AudioCodecSpec>* specs) {
    T::AppendSupportedEncoders(specs);
    Helper<Ts...>::AppendSupportedEncoders(specs);
  }
  static absl::optional<AudioCodecInfo> QueryAudioEncoder(
      const SdpAudioFormat& format) {
    auto opt_config = T::SdpToConfig(format);
    static_assert(std::is_same<decltype(opt_config),
                               absl::optional<typename T::Config>>::value,
                  "T::SdpToConfig() must return a value of type "
                  "absl::optional<T::Config>");
    return opt_config ? absl::optional<AudioCodecInfo>(
                            T::QueryAudioEncoder(*opt_config))
                      : Helper<Ts...>::QueryAudioEncoder(format);
  }
  static std::unique_ptr<AudioEncoder> MakeAudioEncoder(
      int payload_type,
      const SdpAudioFormat& format,
      absl::optional<AudioCodecPairId> codec_pair_id) {
    auto opt_config = T::SdpToConfig(format);
    if (opt_config) {
      return T::MakeAudioEncoder(*opt_config, payload_type, codec_pair_id);
    } else {
      return Helper<Ts...>::MakeAudioEncoder(payload_type, format,
                                             codec_pair_id);
    }
  }
};

template <typename... Ts>
class AudioEncoderFactoryT : public AudioEncoderFactory {
 public:
  std::vector<AudioCodecSpec> GetSupportedEncoders() override {
    std::vector<AudioCodecSpec> specs;
    Helper<Ts...>::AppendSupportedEncoders(&specs);
    return specs;
  }

  absl::optional<AudioCodecInfo> QueryAudioEncoder(
      const SdpAudioFormat& format) override {
    return Helper<Ts...>::QueryAudioEncoder(format);
  }

  std::unique_ptr<AudioEncoder> MakeAudioEncoder(
      int payload_type,
      const SdpAudioFormat& format,
      absl::optional<AudioCodecPairId> codec_pair_id) override {
    return Helper<Ts...>::MakeAudioEncoder(payload_type, format, codec_pair_id);
  }
};

}  // namespace audio_encoder_factory_template_impl

// Make an AudioEncoderFactory that can create instances of the given encoders.
//
// Each encoder type is given as a template argument to the function; it should
// be a struct with the following static member functions:
//
//   // Converts |audio_format| to a ConfigType instance. Returns an empty
//   // optional if |audio_format| doesn't correctly specify an encoder of our
//   // type.
//   absl::optional<ConfigType> SdpToConfig(const SdpAudioFormat& audio_format);
//
//   // Appends zero or more AudioCodecSpecs to the list that will be returned
//   // by AudioEncoderFactory::GetSupportedEncoders().
//   void AppendSupportedEncoders(std::vector<AudioCodecSpec>* specs);
//
//   // Returns information about how this format would be encoded. Used to
//   // implement AudioEncoderFactory::QueryAudioEncoder().
//   AudioCodecInfo QueryAudioEncoder(const ConfigType& config);
//
//   // Creates an AudioEncoder for the specified format. Used to implement
//   // AudioEncoderFactory::MakeAudioEncoder().
//   std::unique_ptr<AudioEncoder> MakeAudioEncoder(
//       const ConfigType& config,
//       int payload_type,
//       absl::optional<AudioCodecPairId> codec_pair_id);
//
// ConfigType should be a type that encapsulates all the settings needed to
// create an AudioEncoder. T::Config (where T is the encoder struct) should
// either be the config type, or an alias for it.
//
// Whenever it tries to do something, the new factory will try each of the
// encoders in the order they were specified in the template argument list,
// stopping at the first one that claims to be able to do the job.
//
// TODO(kwiberg): Point at CreateBuiltinAudioEncoderFactory() for an example of
// how it is used.
template <typename... Ts>
rtc::scoped_refptr<AudioEncoderFactory> CreateAudioEncoderFactory() {
  // There's no technical reason we couldn't allow zero template parameters,
  // but such a factory couldn't create any encoders, and callers can do this
  // by mistake by simply forgetting the <> altogether. So we forbid it in
  // order to prevent caller foot-shooting.
  static_assert(sizeof...(Ts) >= 1,
                "Caller must give at least one template parameter");

  return rtc::scoped_refptr<AudioEncoderFactory>(
      new rtc::RefCountedObject<
          audio_encoder_factory_template_impl::AudioEncoderFactoryT<Ts...>>());
}

}  // namespace webrtc

#endif  // API_AUDIO_CODECS_AUDIO_ENCODER_FACTORY_TEMPLATE_H_
```
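The encoder-side `Helper` uses the same recursion as the decoder side, with two differences worth noting:

- `T::QueryAudioEncoder()` returns a plain `AudioCodecInfo`, which the helper wraps in an optional.
- `T::MakeAudioEncoder()` takes `(config, payload_type, codec_pair_id)`, whereas the factory method takes `(payload_type, format, codec_pair_id)`.

Because the first match wins, the order of template arguments decides which struct handles a format that several structs accept. The standalone sketch below shows only `QueryAudioEncoder()`. It uses two hypothetical structs that both accept `"opus"` but report different, made-up default bitrates.

```cpp
#include <cassert>
#include <optional>
#include <string>

// Simplified, hypothetical stand-ins.
struct SdpAudioFormat {
  std::string name;
};
struct ToyCodecInfo {
  int default_bitrate_bps;
};

// Two hypothetical encoder structs that both accept "opus".
struct ToyOpusLowRate {
  struct Config { int bitrate_bps; };
  static std::optional<Config> SdpToConfig(const SdpAudioFormat& f) {
    if (f.name == "opus") return Config{16000};
    return std::nullopt;
  }
  // Returns a plain info struct; the Helper wraps it in an optional.
  static ToyCodecInfo QueryAudioEncoder(const Config& c) {
    return {c.bitrate_bps};
  }
};

struct ToyOpusHighRate {
  struct Config { int bitrate_bps; };
  static std::optional<Config> SdpToConfig(const SdpAudioFormat& f) {
    if (f.name == "opus") return Config{64000};
    return std::nullopt;
  }
  static ToyCodecInfo QueryAudioEncoder(const Config& c) {
    return {c.bitrate_bps};
  }
};

template <typename... Ts>
struct Helper;

// Base case: nothing matched.
template <>
struct Helper<> {
  static std::optional<ToyCodecInfo> QueryAudioEncoder(const SdpAudioFormat&) {
    return std::nullopt;
  }
};

// Inductive case: the first struct whose SdpToConfig() succeeds wins.
template <typename T, typename... Ts>
struct Helper<T, Ts...> {
  static std::optional<ToyCodecInfo> QueryAudioEncoder(const SdpAudioFormat& f) {
    auto config = T::SdpToConfig(f);
    return config ? std::optional<ToyCodecInfo>(T::QueryAudioEncoder(*config))
                  : Helper<Ts...>::QueryAudioEncoder(f);
  }
};
```

Swapping the two structs in the template argument list changes which one answers for `"opus"`. A more specific codec struct therefore has to be listed before a more general one that would also accept the same format.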

`builtin_audio_encoder_factory.cc`:

```cpp
#include "api/audio_codecs/builtin_audio_encoder_factory.h"

#include <memory>
#include <vector>

#include "api/audio_codecs/L16/audio_encoder_L16.h"
#include "api/audio_codecs/audio_encoder_factory_template.h"
#include "api/audio_codecs/g711/audio_encoder_g711.h"
#include "api/audio_codecs/g722/audio_encoder_g722.h"
#if WEBRTC_USE_BUILTIN_ILBC
#include "api/audio_codecs/ilbc/audio_encoder_ilbc.h"  // nogncheck
#endif
#include "api/audio_codecs/isac/audio_encoder_isac.h"
#if WEBRTC_USE_BUILTIN_OPUS
#include "api/audio_codecs/opus/audio_encoder_opus.h"  // nogncheck
#endif

namespace webrtc {

namespace {

// Modify an audio encoder to not advertise support for anything.
template <typename T>
struct NotAdvertised {
  using Config = typename T::Config;
  static absl::optional<Config> SdpToConfig(
      const SdpAudioFormat& audio_format) {
    return T::SdpToConfig(audio_format);
  }
  static void AppendSupportedEncoders(std::vector<AudioCodecSpec>* specs) {
    // Don't advertise support for anything.
  }
  static AudioCodecInfo QueryAudioEncoder(const Config& config) {
    return T::QueryAudioEncoder(config);
  }
  static std::unique_ptr<AudioEncoder> MakeAudioEncoder(
      const Config& config,
      int payload_type,
      absl::optional<AudioCodecPairId> codec_pair_id = absl::nullopt) {
    return T::MakeAudioEncoder(config, payload_type, codec_pair_id);
  }
};

}  // namespace

rtc::scoped_refptr<AudioEncoderFactory> CreateBuiltinAudioEncoderFactory() {
  return CreateAudioEncoderFactory<

#if WEBRTC_USE_BUILTIN_OPUS
      AudioEncoderOpus,
#endif

      AudioEncoderIsac, AudioEncoderG722,

#if WEBRTC_USE_BUILTIN_ILBC
      AudioEncoderIlbc,
#endif

      AudioEncoderG711, NotAdvertised<AudioEncoderL16>>();
}

}  // namespace webrtc
```
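In `CreateBuiltinAudioEncoderFactory()`, the preprocessor assembles the template argument list itself. `WEBRTC_USE_BUILTIN_OPUS` and `WEBRTC_USE_BUILTIN_ILBC` decide whether Opus and iLBC are compiled in. `GetSupportedEncoders()` is documented as returning a prioritized list, so the argument order, with Opus first, is also the preference order. Below is a standalone sketch of the same construction, using a hypothetical `TOY_USE_OPUS` flag and name-only codec structs.

```cpp
#include <cassert>
#include <string>
#include <vector>

#ifndef TOY_USE_OPUS
#define TOY_USE_OPUS 1  // Hypothetical flag, analogous to WEBRTC_USE_BUILTIN_OPUS.
#endif

// Hypothetical name-only codec structs.
struct ToyOpus {
  static void Append(std::vector<std::string>* v) { v->push_back("opus"); }
};
struct ToyG722 {
  static void Append(std::vector<std::string>* v) { v->push_back("G722"); }
};
struct ToyG711 {
  static void Append(std::vector<std::string>* v) {
    v->push_back("PCMU");
    v->push_back("PCMA");
  }
};
struct ToyL16 {
  static void Append(std::vector<std::string>* v) { v->push_back("L16"); }
};

// Advertises nothing, like NotAdvertised above.
template <typename T>
struct NotAdvertised {
  static void Append(std::vector<std::string>*) {}
};

template <typename... Ts>
std::vector<std::string> SupportedEncoders() {
  std::vector<std::string> names;
  (Ts::Append(&names), ...);  // Left to right: template order = priority order.
  return names;
}

std::vector<std::string> BuiltinToyEncoders() {
  return SupportedEncoders<
#if TOY_USE_OPUS
      ToyOpus,
#endif
      ToyG722, ToyG711, NotAdvertised<ToyL16>>();
}
```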