Chapter 3.1 Convolutional Neural Networks (CNN) 2.1: Implementing a Simple CNN by Hand

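I. Loading and Preparing the Dataset

This chapter uses the CIFAR-10 dataset. Download it and extract the archive. CIFAR images are 32x32 color images.

1. Loading the data

data is a dictionary; call print(data.keys()) to list its keys.

data has four keys: 'batch_label', 'labels', 'data', and 'filenames'. Call print(data['data']) to inspect the contents stored under a given key.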

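import pickle

def load(filename):
    # The CIFAR-10 Python batches are Python 2 pickles; encoding='latin1' lets Python 3 read them
    with open(filename, 'rb') as fo:
        data = pickle.load(fo, encoding='latin1')
    return data

# Raw string so the backslashes in the Windows path are not treated as escapes
path = r'D:\WorkSpace\PyCharmSpace\cifar-10-batches-py\data_batch_1'
data = load(path)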
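To make the layout of a batch concrete, the sketch below builds a tiny stand-in batch with the same four keys, pickles it to a temporary file, and reads it back with the same load function. The two-image batch, its labels, and its file names are made up for illustration; a real data_batch_1 file holds 10000 rows of 3072 uint8 values.

```python
import os
import pickle
import tempfile

import numpy as np


def load(filename):
    with open(filename, 'rb') as fo:
        return pickle.load(fo, encoding='latin1')


# Hypothetical stand-in for a CIFAR-10 batch: 2 images instead of 10000.
fake_batch = {
    'batch_label': 'demo batch',
    'labels': [6, 9],
    'data': np.zeros((2, 3072), dtype=np.uint8),  # one flattened 3x32x32 image per row
    'filenames': ['demo_0.png', 'demo_1.png'],
}

with tempfile.TemporaryDirectory() as tmp_dir:
    tmp_path = os.path.join(tmp_dir, 'fake_batch')
    with open(tmp_path, 'wb') as fo:
        pickle.dump(fake_batch, fo)
    batch = load(tmp_path)

print(sorted(batch.keys()))
print(batch['data'].shape, batch['data'].dtype)
print(len(batch['labels']))
```

Each row of batch['data'] is one flattened image, and batch['labels'][i] is the class index of row i.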

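2. Converting the data to images

If img = np.reshape(picData[i], (3, 32, 32)); img = img.transpose(1, 2, 0) is replaced with img = np.reshape(picData[i], (32, 32, 3)), the saved picture comes out as a 3×3 grid of nine small grayscale-looking images. This happens because each row of data['data'] stores the 1024 red values first, then the 1024 green values, then the 1024 blue values, so the row must be reshaped channel-first and then transposed to (height, width, channel).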

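import os

import numpy as np
from matplotlib.image import imsave

picData = data['data']
picLabel = data['labels']
fileName = data['filenames']

# Raw string so the backslashes in the Windows path are not treated as escapes
outDir = r'D:\WorkSpace\PyCharmSpace\cifar-10-batches-py\imgs'

for i in range(len(picData)):
    picName = os.path.join(outDir, str(picLabel[i]) + '_' + str(fileName[i]) + '.jpg')
    # Each row is stored channel-first (3, 32, 32); move the channels last for imsave
    img = np.reshape(picData[i], (3, 32, 32))
    img = img.transpose(1, 2, 0)
    imsave(picName, img)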
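The effect of the two reshape variants can be checked without any image files. The following sketch uses a synthetic row (a uniform orange image, chosen only for illustration) laid out the way CIFAR-10 stores a row: all red values, then all green, then all blue.

```python
import numpy as np

# Synthetic CIFAR-style row: 1024 red values, then 1024 green, then 1024 blue.
row = np.concatenate([
    np.full(1024, 255, dtype=np.uint8),  # red plane
    np.full(1024, 128, dtype=np.uint8),  # green plane
    np.zeros(1024, dtype=np.uint8),      # blue plane
])

# Correct: recover (channel, height, width), then move the channels last.
good = row.reshape(3, 32, 32).transpose(1, 2, 0)

# Incorrect: treats every 3 consecutive values as one RGB pixel.
bad = row.reshape(32, 32, 3)

print(good[0, 0])    # one pixel as (R, G, B)
print(bad[0, 0])     # three consecutive red values
print(bad[31, 31])   # three consecutive blue values
```

With the correct reshape every pixel is (255, 128, 0). With the direct reshape, each pixel holds three neighbouring values from a single color plane, so the three channels are nearly equal and the result looks gray.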

3. Converting an image to a three-channel RGB 3-D array

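import numpy as np
from PIL import Image

# Raw string so the backslashes in the Windows path are not treated as escapes
I1 = Image.open(r'D:\WorkSpace\PyCharmSpace\cifar-10-batches-py\img_1\0_29.jpg')
I1_array = np.array(I1)
print(I1_array.shape)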

Output: (32, 32, 3)

4. Representing multiple images as a 4-D array

import numpy as np
from PIL import Image

I1 = Image.open(r'D:\WorkSpace\PyCharmSpace\cifar-10-batches-py\img_1\0_29.jpg')
I2 = Image.open(r'D:\WorkSpace\PyCharmSpace\cifar-10-batches-py\img_1\0_30.jpg')
I1_array = np.array(I1)
I2_array = np.array(I2)

# First concatenate both images into one flat 1-D array
d = np.append(I1_array, I2_array)

# Get the shape of the original image array
dim = I1_array.shape

# Then rebuild the batch using the original shape
data = d.reshape(2, dim[0], dim[1], dim[2])
print(data.shape)

Output: (2, 32, 32, 3)
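As an alternative to flattening and reshaping, np.stack builds the same 4-D batch in one call. This sketch uses random arrays as stand-ins for the two decoded images, so the pixel values are illustrative only.

```python
import numpy as np

# Stand-ins for two decoded 32x32 RGB images (random pixels, illustration only).
rng = np.random.default_rng(0)
img_a = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)
img_b = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)

# Stack along a new leading axis: (32, 32, 3) + (32, 32, 3) -> (2, 32, 32, 3)
batch = np.stack([img_a, img_b], axis=0)
print(batch.shape)

# The append-then-reshape approach yields the same array.
flat = np.append(img_a, img_b).reshape(2, *img_a.shape)
print(np.array_equal(batch, flat))
```

np.stack also raises an error if the images differ in shape, whereas append followed by reshape can fail later or silently mix pixels when the total size happens to match.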

II. Basic Functions

1. The padding function

def zero_pad(X, pad):
    """
    Pad with zeros all images of the dataset X.
    The padding is applied to the height and width of an image.

    Argument:
    X -- python numpy array of shape (m, n_H, n_W, n_C) representing a batch of m images
    pad -- integer, amount of padding around each image on vertical and horizontal dimensions

    Returns:
    X_pad -- padded image of shape (m, n_H + 2*pad, n_W + 2*pad, n_C)
    """
    # With mode 'constant', np.pad also accepts a constant_values argument, which defaults to 0
    X_pad = np.pad(X, ((0, 0), (pad, pad), (pad, pad), (0, 0)), 'constant')
    return X_pad
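A quick check of the padding behaviour, with zero_pad restated so the snippet runs on its own. The batch of all-ones images is an arbitrary choice that makes the zero border easy to see.

```python
import numpy as np


def zero_pad(X, pad):
    # Pad only the height and width axes; batch and channel axes are untouched.
    return np.pad(X, ((0, 0), (pad, pad), (pad, pad), (0, 0)), 'constant')


X = np.ones((4, 3, 3, 2))   # 4 images, 3x3 pixels, 2 channels
X_pad = zero_pad(X, 2)

print(X.shape, '->', X_pad.shape)
print(X_pad[0, :, :, 0])    # first image, first channel: ones framed by zeros
```

Height and width each grow by 2 * pad, while the number of images and channels stays the same.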

2. A single convolution step

def conv_single_step(a_slice_prev, W, b):
    """
    Apply one filter defined by parameters W on a single slice (a_slice_prev) of the output activation
    of the previous layer.

    Arguments:
    a_slice_prev -- slice of input data of shape (f, f, n_C_prev)
    W -- Weight parameters contained in a window - matrix of shape (f, f, n_C_prev)
    b -- Bias parameters contained in a window - matrix of shape (1, 1, 1)

    Returns:
    Z -- a scalar value, the result of convolving the sliding window (W, b) on a slice x of the input data
    """
    # Element-wise product of the slice and the filter, summed over all entries
    Z = np.sum(a_slice_prev * W)
    # b has shape (1, 1, 1); squeeze it to a scalar before adding the bias
    Z = float(Z + np.squeeze(b))
    return Z
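A small worked example of one convolution step, with the function restated compactly so the snippet is self-contained. The slice, filter, and bias values are arbitrary and chosen so the result is easy to verify by hand.

```python
import numpy as np


def conv_single_step(a_slice_prev, W, b):
    # Multiply element-wise, sum everything, then add the scalar bias.
    return float(np.sum(a_slice_prev * W) + np.squeeze(b))


a_slice_prev = np.ones((2, 2, 3))    # 12 entries, all 1
W = np.full((2, 2, 3), 0.5)          # 12 weights, all 0.5
b = np.array([[[1.0]]])              # bias of shape (1, 1, 1)

Z = conv_single_step(a_slice_prev, W, b)
print(Z)   # 12 * 0.5 + 1.0 = 7.0
```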

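III. Convolution and Pooling Layers

The code for these two layers is nearly identical. The main differences are:

- A convolution layer performs a convolution on each window, while a pooling layer simply takes the maximum (or the mean) of each window;

- The output size of a convolution layer depends on the amount of padding, while a pooling layer applies no padding;

- The channel dimension of a convolution layer's output equals the number of filters, while the channel dimension of a pooling layer's output equals that of its input.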

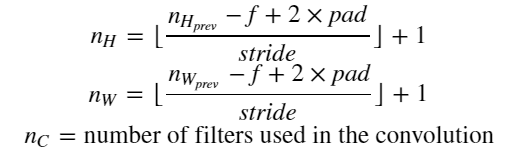

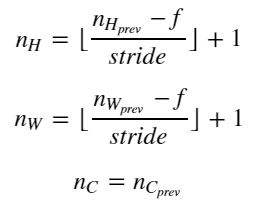

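The left image shows how to compute the output size of a convolution layer; the right image shows how to compute the output size of a pooling layer.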
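The code in this section computes the output height and width as floor((n_prev + 2 * pad - f) / stride) + 1 for convolution and floor((n_prev - f) / stride) + 1 for pooling. The two helper functions below are hypothetical names introduced only to try these formulas on a few sizes.

```python
def conv_output_size(n_prev, f, pad, stride):
    """Output height or width of a convolution layer."""
    return (n_prev + 2 * pad - f) // stride + 1


def pool_output_size(n_prev, f, stride):
    """Output height or width of a pooling layer (no padding)."""
    return (n_prev - f) // stride + 1


# 32x32 CIFAR image, 3x3 filter, pad 1, stride 1: size is preserved.
print(conv_output_size(32, f=3, pad=1, stride=1))   # 32
# 5x5 filter with no padding shrinks the image.
print(conv_output_size(32, f=5, pad=0, stride=1))   # 28
# 2x2 pooling with stride 2 halves the size.
print(pool_output_size(32, f=2, stride=2))          # 16
```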

1. A convolution layer

def conv_forward(A_prev, W, b, hparameters):
    """
    Implements the forward propagation for a convolution function

    Arguments:
    A_prev -- output activations of the previous layer,
              numpy array of shape (m, n_H_prev, n_W_prev, n_C_prev)
    W -- Weights, numpy array of shape (f, f, n_C_prev, n_C)
    b -- Biases, numpy array of shape (1, 1, 1, n_C)
    hparameters -- python dictionary containing "stride" and "pad"

    Returns:
    Z -- conv output, numpy array of shape (m, n_H, n_W, n_C)
    cache -- cache of values needed for the conv_backward() function
    """
    (m, n_H_prev, n_W_prev, n_C_prev) = A_prev.shape
    (f, f, n_C_prev, n_C) = W.shape
    stride = hparameters['stride']
    pad = hparameters['pad']

    # Output size: floor((n_prev + 2 * pad - f) / stride) + 1
    n_H = int((n_H_prev + 2 * pad - f) / stride + 1)
    n_W = int((n_W_prev + 2 * pad - f) / stride + 1)

    Z = np.zeros((m, n_H, n_W, n_C))
    A_prev_pad = zero_pad(A_prev, pad)

    for i in range(m):  # loop over the batch of training examples
        a_prev_pad = A_prev_pad[i, :, :, :]  # Select ith training example's padded activation
        for h in range(n_H):  # loop over vertical axis of the output volume
            vert_start = h * stride
            vert_end = vert_start + f
            for w in range(n_W):  # loop over horizontal axis of the output volume
                horiz_start = w * stride
                horiz_end = horiz_start + f
                for c in range(n_C):  # loop over channels (= #filters) of the output volume
                    a_slice_prev = a_prev_pad[vert_start:vert_end, horiz_start:horiz_end, :]
                    weights = W[:, :, :, c]
                    biases = b[:, :, :, c]
                    Z[i, h, w, c] = conv_single_step(a_slice_prev, weights, biases)

    assert (Z.shape == (m, n_H, n_W, n_C))
    cache = (A_prev, W, b, hparameters)
    return Z, cache
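The four nested loops are easy to follow but slow in pure Python. As a cross-check, the sketch below compares a compact loop version (same structure as conv_forward, without the cache) against a vectorized version built on NumPy's sliding_window_view and einsum. Both function names and the random test shapes are assumptions made for this illustration, and sliding_window_view requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv_forward_loops(A_prev, W, b, stride, pad):
    """Reference version: same loop structure as conv_forward, without the cache."""
    m, n_H_prev, n_W_prev, _ = A_prev.shape
    f, _, _, n_C = W.shape
    n_H = (n_H_prev + 2 * pad - f) // stride + 1
    n_W = (n_W_prev + 2 * pad - f) // stride + 1
    A_pad = np.pad(A_prev, ((0, 0), (pad, pad), (pad, pad), (0, 0)), 'constant')
    Z = np.zeros((m, n_H, n_W, n_C))
    for i in range(m):
        for h in range(n_H):
            for w in range(n_W):
                window = A_pad[i, h * stride:h * stride + f, w * stride:w * stride + f, :]
                for c in range(n_C):
                    Z[i, h, w, c] = np.sum(window * W[:, :, :, c]) + b[0, 0, 0, c]
    return Z


def conv_forward_vectorized(A_prev, W, b, stride, pad):
    """Vectorized sketch: extract all windows at once, then contract with the filters."""
    f = W.shape[0]
    A_pad = np.pad(A_prev, ((0, 0), (pad, pad), (pad, pad), (0, 0)), 'constant')
    # windows has shape (m, H_pad - f + 1, W_pad - f + 1, n_C_prev, f, f)
    windows = sliding_window_view(A_pad, (f, f), axis=(1, 2))
    # Keep only the window positions that the stride visits.
    windows = windows[:, ::stride, ::stride]
    # Sum over window rows (i), window columns (j), and input channels (c).
    return np.einsum('mhwcij,ijcn->mhwn', windows, W) + b


rng = np.random.default_rng(1)
A_prev = rng.standard_normal((2, 5, 7, 3))
W = rng.standard_normal((3, 3, 3, 4))
b = rng.standard_normal((1, 1, 1, 4))

Z_loops = conv_forward_loops(A_prev, W, b, stride=2, pad=1)
Z_vec = conv_forward_vectorized(A_prev, W, b, stride=2, pad=1)
print(Z_loops.shape)
print(np.allclose(Z_loops, Z_vec))
```

The loop version remains the clearer reference; the vectorized one is mainly useful for checking results or speeding up experiments on larger inputs.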

2. A pooling layer

def pool_forward(A_prev, hparameters, mode="max"):
    """
    Implements the forward pass of the pooling layer

    Arguments:
    A_prev -- Input data, numpy array of shape (m, n_H_prev, n_W_prev, n_C_prev)
    hparameters -- python dictionary containing "f" and "stride"
    mode -- the pooling mode you would like to use, defined as a string ("max" or "average")

    Returns:
    A -- output of the pool layer, a numpy array of shape (m, n_H, n_W, n_C)
    cache -- cache used in the backward pass of the pooling layer, contains the input and hparameters
    """
    (m, n_H_prev, n_W_prev, n_C_prev) = A_prev.shape
    f = hparameters["f"]
    stride = hparameters["stride"]

    # Output size: floor((n_prev - f) / stride) + 1; pooling uses no padding
    n_H = int(1 + (n_H_prev - f) / stride)
    n_W = int(1 + (n_W_prev - f) / stride)
    n_C = n_C_prev

    A = np.zeros((m, n_H, n_W, n_C))

    for i in range(m):  # loop over the training examples
        for h in range(n_H):  # loop on the vertical axis of the output volume
            vert_start = h * stride
            vert_end = vert_start + f
            for w in range(n_W):  # loop on the horizontal axis of the output volume
                horiz_start = w * stride
                horiz_end = horiz_start + f
                for c in range(n_C):  # loop over the channels of the output volume
                    a_prev_slice = A_prev[i, vert_start:vert_end, horiz_start:horiz_end, c]
                    if mode == "max":
                        A[i, h, w, c] = np.max(a_prev_slice)
                    elif mode == "average":
                        A[i, h, w, c] = np.mean(a_prev_slice)

    # Store the input and hparameters in "cache" for pool_backward()
    cache = (A_prev, hparameters)
    assert (A.shape == (m, n_H, n_W, n_C))
    return A, cache
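To see what pooling produces on concrete numbers, the sketch below pools a single 4x4 one-channel image with a 2x2 window and stride 2. The helper pool_windows is a hypothetical, vectorized stand-in for pool_forward (it returns no cache) and relies on sliding_window_view from NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def pool_windows(A_prev, f, stride, mode="max"):
    """Pool each f x f window per channel; mirrors pool_forward without the cache."""
    # windows has shape (m, n_H, n_W, n_C, f, f) after applying the stride
    windows = sliding_window_view(A_prev, (f, f), axis=(1, 2))[:, ::stride, ::stride]
    if mode == "max":
        return windows.max(axis=(-2, -1))
    if mode == "average":
        return windows.mean(axis=(-2, -1))
    raise ValueError("mode must be 'max' or 'average'")


# One 4x4 image with a single channel, holding the values 0..15 row by row.
A_prev = np.arange(16, dtype=float).reshape(1, 4, 4, 1)

A_max = pool_windows(A_prev, f=2, stride=2, mode="max")
A_avg = pool_windows(A_prev, f=2, stride=2, mode="average")

print(A_max[0, :, :, 0])   # [[ 5.  7.] [13. 15.]]
print(A_avg[0, :, :, 0])   # [[ 2.5  4.5] [10.5 12.5]]
```

The channel count is unchanged (1 in, 1 out), while height and width shrink from 4 to 2.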

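IV. Backpropagation

In practice you usually only need to implement forward propagation; deep learning frameworks perform backpropagation for you. For the details, see "Convolutional Neural Network (CNN) Backpropagation Algorithm".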