Example: Time Series Data Modeling Workflow

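The COVID-19 epidemic in China has lasted more than three months since it was first discovered, and this disaster, which started with people eating wild animals, has affected our lives in many ways.

For some of us it hit our income, for some our relationships, for some our mental health, and for others our body weight.

So when will the COVID-19 epidemic in China end? When will we get our freedom back?

In this article we use TensorFlow 2.0 to build a time series RNN model and use it to predict when the COVID-19 epidemic in China will end.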

1. Prepare the data

The dataset used here comes from tushare. The method for obtaining it follows this article:

https://zhuanlan.zhihu.com/p/109556102

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow.keras import models,layers,losses,metrics,callbacks

%matplotlib inline
%config InlineBackend.figure_format = 'svg'

df = pd.read_csv("./data/covid-19.csv",sep = "\t")

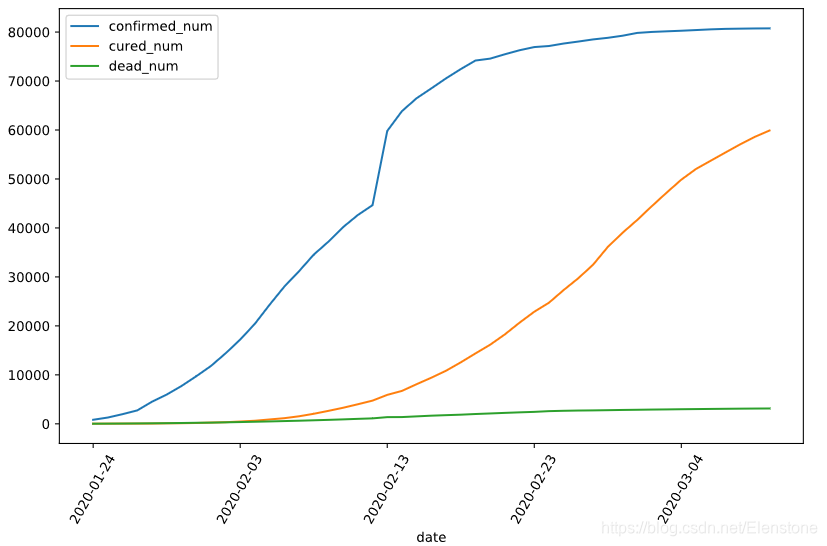

df.plot(x = "date",y = ["confirmed_num","cured_num","dead_num"],figsize=(10,6))
plt.xticks(rotation=60)


dfdata = df.set_index("date")

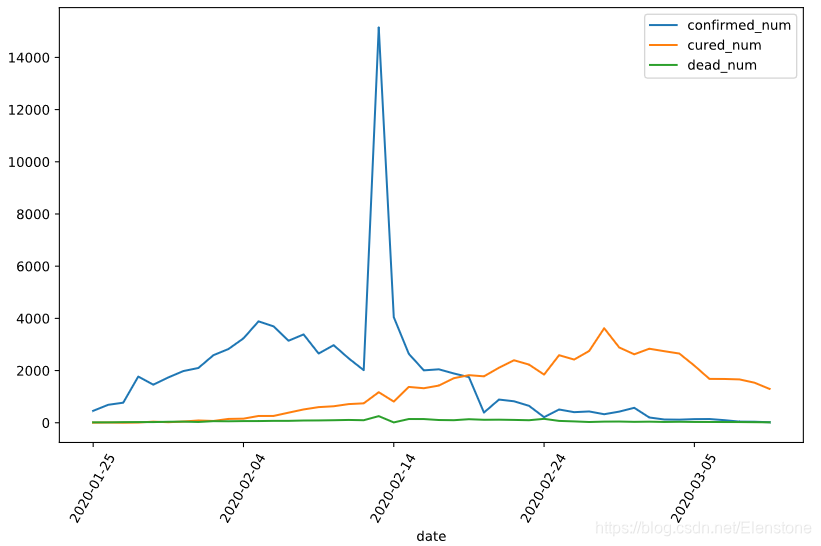

#Convert cumulative counts into daily new counts (the first day has no previous day and is dropped)
dfdiff = dfdata.diff(periods=1).dropna()
dfdiff = dfdiff.reset_index("date")

dfdiff.plot(x = "date",y = ["confirmed_num","cured_num","dead_num"],figsize=(10,6))
plt.xticks(rotation=60)

dfdiff = dfdiff.drop("date",axis = 1).astype("float32")
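Differencing turns the cumulative totals into daily increments: `diff(periods=1)` subtracts the previous row from each row, and `dropna()` removes the first row, which has no predecessor. The minimal NumPy sketch below performs the same computation on made-up cumulative numbers (purely illustrative, and assuming the table has no missing values, in which case `dropna()` only drops that first row):

```python
import numpy as np

# Hypothetical cumulative counts for 4 days; columns: confirmed, cured, dead
cumulative = np.array([
    [100.0,  5.0, 2.0],
    [150.0,  9.0, 3.0],
    [230.0, 15.0, 5.0],
    [300.0, 26.0, 6.0],
], dtype=np.float32)

# Equivalent of dfdata.diff(periods=1).dropna(): row t minus row t-1,
# giving one row fewer because day 0 has no previous day
daily_new = np.diff(cumulative, n=1, axis=0)

print(daily_new.tolist())  # [[50.0, 4.0, 1.0], [80.0, 6.0, 2.0], [70.0, 11.0, 1.0]]
```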

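#Use the data from the 8 days before a given day as input to predict that day's values
WINDOW_SIZE = 8

#Turn each window (a nested dataset) into one tensor of shape (WINDOW_SIZE, 3);
#incomplete windows at the end of the series are dropped
def batch_dataset(dataset):
    dataset_batched = dataset.batch(WINDOW_SIZE,drop_remainder=True)
    return dataset_batched

ds_data = tf.data.Dataset.from_tensor_slices(tf.constant(dfdiff.values,dtype = tf.float32)) \
    .window(WINDOW_SIZE,shift=1).flat_map(batch_dataset)

#The label for window i (days i..i+7) is day i+8
ds_label = tf.data.Dataset.from_tensor_slices(
    tf.constant(dfdiff.values[WINDOW_SIZE:],dtype = tf.float32))

#The data is small, so all training samples fit into a single batch, which improves performance
ds_train = tf.data.Dataset.zip((ds_data,ds_label)).batch(38).cache()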
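The window/flat_map/zip pipeline pairs each 8-day window with the day that immediately follows it. The last full window has no following day, and `Dataset.zip` stops at the shorter of its inputs, so that window is silently dropped. The NumPy sketch below reproduces the same pairing on a hypothetical 12-day, 3-column toy series (each value equals its day index) so the alignment and the sample count can be checked without TensorFlow:

```python
import numpy as np

WINDOW_SIZE = 8

# Toy stand-in for dfdiff.values: 12 days x 3 features, every value = day index
values = np.repeat(np.arange(12, dtype=np.float32)[:, None], 3, axis=1)

# Like .window(WINDOW_SIZE, shift=1) + batch(WINDOW_SIZE, drop_remainder=True):
# every full run of 8 consecutive rows
windows = np.stack([values[i:i + WINDOW_SIZE]
                    for i in range(len(values) - WINDOW_SIZE + 1)])

# Like dfdiff.values[WINDOW_SIZE:]: the day right after each window
labels = values[WINDOW_SIZE:]

# Dataset.zip stops at the shorter input, so the final window gets no label
n_pairs = min(len(windows), len(labels))
features, targets = windows[:n_pairs], labels[:n_pairs]

print(features.shape, targets.shape)  # (4, 8, 3) (4, 3)
```

With N rows in `dfdiff`, this yields N - 8 training pairs, which is why a single `batch(38)` holds the whole training set as long as N - 8 is at most 38.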

2. Define the model

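The Keras API offers three ways to build a model: stacking layers in order with Sequential, building a model of arbitrary structure with the functional API, and subclassing the Model base class to build a custom model.

Here we use the functional API.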

#New confirmed, new cured and new death counts can never be negative, so we design the following structure:
#the network output x is used as a relative change applied to the last day of the input window,
#and negative results are clipped to 0
class Block(layers.Layer):
    def __init__(self, **kwargs):
        super(Block, self).__init__(**kwargs)

    def call(self, x_input,x):
        x_out = tf.maximum((1+x)*x_input[:,-1,:],0.0)
        return x_out

    def get_config(self):
        config = super(Block, self).get_config()
        return config

tf.keras.backend.clear_session()

x_input = layers.Input(shape = (None,3),dtype = tf.float32)
x = layers.LSTM(3,return_sequences = True,input_shape=(None,3))(x_input)
x = layers.LSTM(3,return_sequences = True,input_shape=(None,3))(x)
x = layers.LSTM(3,return_sequences = True,input_shape=(None,3))(x)
x = layers.LSTM(3,input_shape=(None,3))(x)
x = layers.Dense(3)(x)

#New confirmed, new cured and new death counts can never be negative, so we apply the Block layer;
#the line below is the equivalent inline expression
#x = tf.maximum((1+x)*x_input[:,-1,:],0.0)
x = Block()(x_input,x)

model = models.Model(inputs = [x_input],outputs = [x])
model.summary()

Model: "model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) [(None, None, 3)] 0

_________________________________________________________________

lstm (LSTM) (None, None, 3) 84

_________________________________________________________________

lstm_1 (LSTM) (None, None, 3) 84

_________________________________________________________________

lstm_2 (LSTM) (None, None, 3) 84

_________________________________________________________________

lstm_3 (LSTM) (None, 3) 84

_________________________________________________________________

dense (Dense) (None, 3) 12

_________________________________________________________________

block (Block) (None, 3) 0

=================================================================

Total params: 348

Trainable params: 348

Non-trainable params: 0

_________________________________________________________________
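The `Block` layer means the network does not output the next day's counts directly: the Dense output `x` acts as a relative change applied to the last observed day, and `tf.maximum(..., 0.0)` clips negative results to zero. The NumPy sketch below mirrors the expression `tf.maximum((1+x)*x_input[:,-1,:], 0.0)`; the input values are made up, and the toy window has 2 days instead of 8 to keep it short:

```python
import numpy as np

def block_output(x_input, x):
    """NumPy mirror of Block.call: scale the last day of each window by (1 + x), floor at 0."""
    last_day = x_input[:, -1, :]           # shape (batch, 3)
    return np.maximum((1.0 + x) * last_day, 0.0)

# One hypothetical window (batch of 1, 2 days, 3 features)
x_input = np.array([[[90.0, 10.0, 2.0],
                     [100.0, 20.0, 4.0]]])
# Hypothetical Dense outputs: +50% confirmed, -50% cured, -150% dead
x = np.array([[0.5, -0.5, -1.5]])

print(block_output(x_input, x).tolist())  # [[150.0, 10.0, 0.0]]
```

Note that a day with zero new cases always produces a zero prediction, whatever `x` is. The parameter counts in the summary can be checked by hand as well: a Keras LSTM with 3 units and 3 input features has 4 × (3 × (3 + 3) + 3) = 84 weights (four gates, each with an input kernel, a recurrent kernel and a bias), the Dense(3) layer has 3 × 3 + 3 = 12, and 4 × 84 + 12 = 348.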

3. Train the model

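There are usually three ways to train a model: the built-in fit method, the built-in train_on_batch method, and a custom training loop. Here we use the built-in fit method, which is the most common and the simplest.

Note: recurrent neural networks are fairly hard to tune. You may need to try several different learning rates to get good results.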
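The training code that follows starts Adam at a learning rate of 0.01 and relies on ReduceLROnPlateau (halve the rate after 100 epochs without improvement) and EarlyStopping (stop after 200), both monitoring the training loss. The pure-Python sketch below is a simplified model of that patience logic on a hypothetical loss curve; it is not Keras's implementation, which also supports options such as `min_delta`, `cooldown` and `min_lr` and may count epochs slightly differently:

```python
def simulate_plateau_callbacks(losses, lr=0.01, factor=0.5,
                               lr_patience=100, stop_patience=200):
    """Simplified sketch of ReduceLROnPlateau + EarlyStopping over per-epoch losses.

    Returns (learning rate after each epoch, epoch at which training stops or None).
    """
    best = float("inf")
    lr_wait = 0    # epochs without improvement, also reset after each lr reduction
    stop_wait = 0  # epochs without improvement, used for early stopping
    lr_history = []
    for epoch, loss in enumerate(losses, start=1):
        if loss < best:
            best = loss
            lr_wait = 0
            stop_wait = 0
        else:
            lr_wait += 1
            stop_wait += 1
        if lr_wait >= lr_patience:
            lr *= factor  # factor=0.5 halves the learning rate
            lr_wait = 0
        lr_history.append(lr)
        if stop_wait >= stop_patience:
            return lr_history, epoch
    return lr_history, None


# Hypothetical curve: improves for 80 epochs, then stays flat for the rest of 500 epochs
losses = [1.0 / e for e in range(1, 81)] + [1.0 / 80] * 420
lr_history, stop_epoch = simulate_plateau_callbacks(losses)
print(stop_epoch, lr_history[-1])  # 280 0.0025
```

Under these assumptions, a loss that stops improving at epoch 80 has its learning rate halved at epochs 180 and 280, and training stops at epoch 280 instead of running all 500 epochs.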

#Custom loss: the squared error divided by the squared target value (mean squared percentage error)
class MSPE(losses.Loss):
    def call(self,y_true,y_pred):
        #The 1e-7 floor avoids division by zero when the true value is 0
        err_percent = (y_true - y_pred)**2/(tf.maximum(y_true**2,1e-7))
        mean_err_percent = tf.reduce_mean(err_percent)
        return mean_err_percent

    def get_config(self):
        config = super(MSPE, self).get_config()
        return config
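Dividing by the squared target makes the loss scale-free: a 10% miss costs the same whether the true count is 1000 or 10, so the small death counts are not swamped by the much larger confirmed counts. A NumPy transcription of `MSPE.call` with hypothetical values shows this:

```python
import numpy as np

def mspe(y_true, y_pred):
    """NumPy transcription of MSPE.call: mean of (y_true - y_pred)^2 / max(y_true^2, 1e-7)."""
    err_percent = (y_true - y_pred) ** 2 / np.maximum(y_true ** 2, 1e-7)
    return err_percent.mean()

# Hypothetical targets and predictions: every prediction is 10% too low
y_true = np.array([[1000.0, 100.0, 10.0]])
y_pred = np.array([[900.0, 90.0, 9.0]])

# Absolute errors are 100, 10 and 1, yet every term equals 0.1^2 = 0.01
print(mspe(y_true, y_pred))
```

The flip side is the zero-target case: when the true count is 0, the denominator becomes 1e-7, so predicting 1 on such a day contributes about 1 / 1e-7 = 1e7 to that term.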

import datetime
import os

optimizer = tf.keras.optimizers.Adam(learning_rate=0.01)
model.compile(optimizer=optimizer,loss=MSPE(name = "MSPE"))

current_time = datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
logdir = os.path.join(".","data","keras_model",current_time)
tb_callback = tf.keras.callbacks.TensorBoard(logdir, histogram_freq=1)

#Halve the learning rate if the loss has not improved for 100 epochs
lr_callback = tf.keras.callbacks.ReduceLROnPlateau(monitor="loss",factor = 0.5, patience = 100)

#Stop training early if the loss has not improved for 200 epochs
stop_callback = tf.keras.callbacks.EarlyStopping(monitor = "loss", patience= 200)

callbacks_list = [tb_callback,lr_callback,stop_callback]
history = model.fit(ds_train,epochs=500,callbacks = callbacks_list)

Epoch 1/500

1/1 [==============================] - 8s 8s/step - loss: 3.3592

Epoch 2/500

WARNING:tensorflow:Method (on_train_batch_end) is slow compared to the batch update (0.149920). Check your callbacks.

1/1 [==============================] - 0s 245ms/step - loss: 3.2057

Epoch 3/500

1/1 [==============================] - 0s 103ms/step - loss: 3.0463

Epoch 4/500

1/1 [==============================] - 0s 100ms/step - loss: 2.8786

Epoch 5/500

1/1 [==============================] - 0s 95ms/step - loss: 2.7016

Epoch 6/500

1/1 [==============================] - 0s 100ms/step - loss: 2.5145

Epoch 7/500

1/1 [==============================] - 0s 113ms/step - loss: 2.3169

Epoch 8/500

1/1 [==============================] - 0s 98ms/step - loss: 2.1090

Epoch 9/500

1/1 [==============================] - 0s 99ms/step - loss: 1.8919

Epoch 10/500

1/1 [==============================] - 0s 194ms/step - loss: 1.6681

Epoch 11/500

1/1 [==============================] - 0s 94ms/step - loss: 1.4420

Epoch 12/500

1/1 [==============================] - 0s 99ms/step - loss: 1.2206

Epoch 13/500

1/1 [==============================] - 0s 114ms/step - loss: 1.0131

Epoch 14/500

1/1 [==============================] - 0s 185ms/step - loss: 0.8308

Epoch 15/500

1/1 [==============================] - 0s 100ms/step - loss: 0.6851

Epoch 16/500

1/1 [==============================] - 0s 113ms/step - loss: 0.5832

Epoch 17/500

1/1 [==============================] - 0s 123ms/step - loss: 0.5255

Epoch 18/500

1/1 [==============================] - 0s 121ms/step - loss: 0.5047

Epoch 19/500

1/1 [==============================] - 0s 194ms/step - loss: 0.5093

Epoch 20/500

1/1 [==============================] - 0s 98ms/step - loss: 0.5254

Epoch 21/500

1/1 [==============================] - 0s 98ms/step - loss: 0.5403

Epoch 22/500

1/1 [==============================] - 0s 131ms/step - loss: 0.5457

Epoch 23/500

1/1 [==============================] - 0s 115ms/step - loss: 0.5394

Epoch 24/500

1/1 [==============================] - 0s 106ms/step - loss: 0.5227

Epoch 25/500

1/1 [==============================] - 0s 191ms/step - loss: 0.4988

Epoch 26/500

1/1 [==============================] - 0s 122ms/step - loss: 0.4715

Epoch 27/500

1/1 [==============================] - 0s 105ms/step - loss: 0.4446

Epoch 28/500

1/1 [==============================] - 0s 214ms/step - loss: 0.4209

Epoch 29/500

1/1 [==============================] - 0s 123ms/step - loss: 0.4024

Epoch 30/500

1/1 [==============================] - 0s 103ms/step - loss: 0.3897

Epoch 31/500

1/1 [==============================] - 0s 128ms/step - loss: 0.3830

Epoch 32/500

1/1 [==============================] - 0s 135ms/step - loss: 0.3814

Epoch 33/500

1/1 [==============================] - 0s 98ms/step - loss: 0.3835

Epoch 34/500

1/1 [==============================] - 0s 120ms/step - loss: 0.3881

Epoch 35/500

1/1 [==============================] - 0s 107ms/step - loss: 0.3937

Epoch 36/500

1/1 [==============================] - 0s 86ms/step - loss: 0.3993

Epoch 37/500

1/1 [==============================] - 0s 100ms/step - loss: 0.4040

Epoch 38/500

1/1 [==============================] - 0s 247ms/step - loss: 0.4073

Epoch 39/500

1/1 [==============================] - 0s 147ms/step - loss: 0.4089

Epoch 40/500

1/1 [==============================] - 0s 138ms/step - loss: 0.4089

Epoch 41/500

1/1 [==============================] - 0s 120ms/step - loss: 0.4075

Epoch 42/500

1/1 [==============================] - 0s 116ms/step - loss: 0.4048

Epoch 43/500

1/1 [==============================] - 0s 246ms/step - loss: 0.4014

Epoch 44/500

1/1 [==============================] - 0s 112ms/step - loss: 0.3975

Epoch 45/500

1/1 [==============================] - 0s 98ms/step - loss: 0.3936

Epoch 46/500

1/1 [==============================] - 0s 168ms/step - loss: 0.3899

Epoch 47/500

1/1 [==============================] - 0s 118ms/step - loss: 0.3867

Epoch 48/500

1/1 [==============================] - 0s 124ms/step - loss: 0.3842

Epoch 49/500

1/1 [==============================] - 0s 118ms/step - loss: 0.3824

Epoch 50/500

1/1 [==============================] - 0s 103ms/step - loss: 0.3812

Epoch 51/500

1/1 [==============================] - 0s 140ms/step - loss: 0.3807

Epoch 52/500

1/1 [==============================] - 0s 142ms/step - loss: 0.3806

Epoch 53/500

1/1 [==============================] - 0s 135ms/step - loss: 0.3808

Epoch 54/500

1/1 [==============================] - 0s 102ms/step - loss: 0.3812

Epoch 55/500

1/1 [==============================] - 0s 116ms/step - loss: 0.3815

Epoch 56/500

1/1 [==============================] - 0s 114ms/step - loss: 0.3818

Epoch 57/500

1/1 [==============================] - 0s 115ms/step - loss: 0.3818

Epoch 58/500

1/1 [==============================] - 0s 102ms/step - loss: 0.3817

Epoch 59/500

1/1 [==============================] - 0s 115ms/step - loss: 0.3815

Epoch 60/500

1/1 [==============================] - 0s 92ms/step - loss: 0.3811

Epoch 61/500

1/1 [==============================] - 0s 116ms/step - loss: 0.3807

Epoch 62/500

1/1 [==============================] - 0s 116ms/step - loss: 0.3803

Epoch 63/500

1/1 [==============================] - 0s 96ms/step - loss: 0.3800

Epoch 64/500

1/1 [==============================] - 0s 121ms/step - loss: 0.3797

Epoch 65/500

1/1 [==============================] - 0s 124ms/step - loss: 0.3796

Epoch 66/500

1/1 [==============================] - 0s 86ms/step - loss: 0.3795

Epoch 67/500

1/1 [==============================] - 0s 100ms/step - loss: 0.3795

Epoch 68/500

1/1 [==============================] - 0s 111ms/step - loss: 0.3796

Epoch 69/500

1/1 [==============================] - 0s 108ms/step - loss: 0.3797

Epoch 70/500

1/1 [==============================] - 0s 117ms/step - loss: 0.3798

Epoch 71/500

1/1 [==============================] - 0s 141ms/step - loss: 0.3798

Epoch 72/500

1/1 [==============================] - 0s 108ms/step - loss: 0.3798

Epoch 73/500

1/1 [==============================] - 0s 130ms/step - loss: 0.3798

Epoch 74/500

1/1 [==============================] - 0s 117ms/step - loss: 0.3797

Epoch 75/500

1/1 [==============================] - 0s 112ms/step - loss: 0.3795

Epoch 76/500

1/1 [==============================] - 0s 119ms/step - loss: 0.3794

Epoch 77/500

1/1 [==============================] - 0s 105ms/step - loss: 0.3792

Epoch 78/500

1/1 [==============================] - 0s 101ms/step - loss: 0.3791

Epoch 79/500

1/1 [==============================] - 0s 116ms/step - loss: 0.3789

Epoch 80/500

1/1 [==============================] - 0s 104ms/step - loss: 0.3789

Epoch 81/500

1/1 [==============================] - 0s 105ms/step - loss: 0.3788

Epoch 82/500

1/1 [==============================] - 0s 109ms/step - loss: 0.3788

Epoch 83/500

1/1 [==============================] - 0s 113ms/step - loss: 0.3788

Epoch 84/500

1/1 [==============================] - 0s 99ms/step - loss: 0.3788

Epoch 85/500

1/1 [==============================] - 0s 101ms/step - loss: 0.3789

Epoch 86/500

1/1 [==============================] - 0s 106ms/step - loss: 0.3789

Epoch 87/500

1/1 [==============================] - 0s 104ms/step - loss: 0.3789

Epoch 88/500

1/1 [==============================] - 0s 100ms/step - loss: 0.3790

Epoch 89/500

1/1 [==============================] - 0s 94ms/step - loss: 0.3790

Epoch 90/500

1/1 [==============================] - 0s 116ms/step - loss: 0.3790

Epoch 91/500

1/1 [==============================] - 0s 95ms/step - loss: 0.3789

Epoch 92/500

1/1 [==============================] - 0s 114ms/step - loss: 0.3789

Epoch 93/500

1/1 [==============================] - 0s 116ms/step - loss: 0.3789

Epoch 94/500

1/1 [==============================] - 0s 101ms/step - loss: 0.3789

Epoch 95/500

1/1 [==============================] - 0s 125ms/step - loss: 0.3788

Epoch 96/500

1/1 [==============================] - 0s 118ms/step - loss: 0.3788

Epoch 97/500

1/1 [==============================] - 0s 217ms/step - loss: 0.3788

Epoch 98/500

1/1 [==============================] - 0s 108ms/step - loss: 0.3788

Epoch 99/500

1/1 [==============================] - 0s 119ms/step - loss: 0.3788

Epoch 100/500

1/1 [==============================] - 0s 122ms/step - loss: 0.3788

Epoch 101/500

1/1 [==============================] - 0s 123ms/step - loss: 0.3788

Epoch 102/500

1/1 [==============================] - 0s 128ms/step - loss: 0.3788

Epoch 103/500

1/1 [==============================] - 0s 107ms/step - loss: 0.3788

Epoch 104/500

1/1 [==============================] - 0s 143ms/step - loss: 0.3788

Epoch 105/500

1/1 [==============================] - 0s 123ms/step - loss: 0.3788

Epoch 106/500

1/1 [==============================] - 0s 111ms/step - loss: 0.3788

Epoch 107/500

1/1 [==============================] - 0s 104ms/step - loss: 0.3788

Epoch 108/500

1/1 [==============================] - 0s 138ms/step - loss: 0.3788

Epoch 109/500

1/1 [==============================] - 0s 254ms/step - loss: 0.3788

Epoch 110/500

1/1 [==============================] - 0s 134ms/step - loss: 0.3788

Epoch 111/500

1/1 [==============================] - 0s 119ms/step - loss: 0.3788

Epoch 112/500

1/1 [==============================] - 0s 118ms/step - loss: 0.3788

Epoch 113/500

1/1 [==============================] - 0s 114ms/step - loss: 0.3788

Epoch 114/500

1/1 [==============================] - 0s 87ms/step - loss: 0.3788

Epoch 115/500

1/1 [==============================] - 0s 98ms/step - loss: 0.3788

Epoch 116/500

1/1 [==============================] - 0s 83ms/step - loss: 0.3788

Epoch 117/500

1/1 [==============================] - 0s 112ms/step - loss: 0.3788

Epoch 118/500

1/1 [==============================] - 0s 115ms/step - loss: 0.3788

Epoch 119/500

1/1 [==============================] - 0s 114ms/step - loss: 0.3788

Epoch 120/500

1/1 [==============================] - 0s 98ms/step - loss: 0.3788

Epoch 121/500

1/1 [==============================] - 0s 119ms/step - loss: 0.3788

Epoch 122/500

1/1 [==============================] - 0s 223ms/step - loss: 0.3788

Epoch 123/500

1/1 [==============================] - 0s 99ms/step - loss: 0.3788

Epoch 124/500

1/1 [==============================] - 0s 115ms/step - loss: 0.3788

Epoch 125/500

1/1 [==============================] - 0s 98ms/step - loss: 0.3788

Epoch 126/500

1/1 [==============================] - 0s 114ms/step - loss: 0.3788

Epoch 127/500

1/1 [==============================] - 0s 129ms/step - loss: 0.3788

Epoch 128/500

1/1 [==============================] - 0s 124ms/step - loss: 0.3788

Epoch 129/500

1/1 [==============================] - 0s 147ms/step - loss: 0.3788

Epoch 130/500

1/1 [==============================] - 0s 118ms/step - loss: 0.3788

Epoch 131/500

1/1 [==============================] - 0s 131ms/step - loss: 0.3788

Epoch 132/500

1/1 [==============================] - 0s 115ms/step - loss: 0.3788

Epoch 133/500

1/1 [==============================] - 0s 165ms/step - loss: 0.3788

Epoch 134/500

1/1 [==============================] - 0s 147ms/step - loss: 0.3788

Epoch 135/500

1/1 [==============================] - 0s 139ms/step - loss: 0.3788

Epoch 136/500

1/1 [==============================] - 0s 115ms/step - loss: 0.3788

Epoch 137/500

1/1 [==============================] - 0s 96ms/step - loss: 0.3788

Epoch 138/500

1/1 [==============================] - 0s 117ms/step - loss: 0.3788

Epoch 139/500

1/1 [==============================] - 0s 110ms/step - loss: 0.3788

Epoch 140/500

1/1 [==============================] - 0s 123ms/step - loss: 0.3788

Epoch 141/500

1/1 [==============================] - 0s 129ms/step - loss: 0.3788

Epoch 142/500

1/1 [==============================] - 0s 116ms/step - loss: 0.3788

Epoch 143/500

1/1 [==============================] - 0s 147ms/step - loss: 0.3788

Epoch 144/500

1/1 [==============================] - 0s 110ms/step - loss: 0.3788

Epoch 145/500

1/1 [==============================] - 0s 86ms/step - loss: 0.3788

Epoch 146/500

1/1 [==============================] - 0s 118ms/step - loss: 0.3788

Epoch 147/500

1/1 [==============================] - 0s 143ms/step - loss: 0.3788

Epoch 148/500

1/1 [==============================] - 0s 121ms/step - loss: 0.3788

Epoch 149/500

1/1 [==============================] - 0s 90ms/step - loss: 0.3788

Epoch 150/500

1/1 [==============================] - 0s 94ms/step - loss: 0.3788

Epoch 151/500

1/1 [==============================] - 0s 126ms/step - loss: 0.3788

Epoch 152/500

1/1 [==============================] - 0s 110ms/step - loss: 0.3788

Epoch 153/500

1/1 [==============================] - 0s 191ms/step - loss: 0.3788

Epoch 154/500

1/1 [==============================] - 0s 103ms/step - loss: 0.3788

Epoch 155/500

1/1 [==============================] - 0s 108ms/step - loss: 0.3788

Epoch 156/500

1/1 [==============================] - 0s 101ms/step - loss: 0.3788

Epoch 157/500

1/1 [==============================] - 0s 112ms/step - loss: 0.3788

Epoch 158/500

1/1 [==============================] - 0s 119ms/step - loss: 0.3788

Epoch 159/500

1/1 [==============================] - 0s 108ms/step - loss: 0.3788

Epoch 160/500

1/1 [==============================] - 0s 123ms/step - loss: 0.3788

Epoch 161/500

1/1 [==============================] - 0s 107ms/step - loss: 0.3788

Epoch 162/500

1/1 [==============================] - 0s 126ms/step - loss: 0.3788

Epoch 163/500

1/1 [==============================] - 0s 94ms/step - loss: 0.3788

Epoch 164/500

1/1 [==============================] - 0s 118ms/step - loss: 0.3788

Epoch 165/500

1/1 [==============================] - 0s 194ms/step - loss: 0.3788

Epoch 166/500

1/1 [==============================] - 0s 140ms/step - loss: 0.3788

Epoch 167/500

1/1 [==============================] - 0s 189ms/step - loss: 0.3788

Epoch 168/500

1/1 [==============================] - 0s 109ms/step - loss: 0.3788

Epoch 169/500

1/1 [==============================] - 0s 87ms/step - loss: 0.3788

Epoch 170/500

1/1 [==============================] - 0s 101ms/step - loss: 0.3788

Epoch 171/500

1/1 [==============================] - 0s 162ms/step - loss: 0.3788

Epoch 172/500

1/1 [==============================] - 0s 129ms/step - loss: 0.3788

Epoch 173/500

1/1 [==============================] - 0s 116ms/step - loss: 0.3788

Epoch 174/500

1/1 [==============================] - 0s 111ms/step - loss: 0.3788

Epoch 175/500

1/1 [==============================] - 0s 117ms/step - loss: 0.3788

Epoch 176/500

1/1 [==============================] - 0s 111ms/step - loss: 0.3788

Epoch 177/500

1/1 [==============================] - 0s 100ms/step - loss: 0.3788

Epoch 178/500

1/1 [==============================] - 0s 95ms/step - loss: 0.3788

Epoch 179/500

1/1 [==============================] - 0s 115ms/step - loss: 0.3788

Epoch 180/500

1/1 [==============================] - 0s 112ms/step - loss: 0.3788

Epoch 181/500

1/1 [==============================] - 0s 101ms/step - loss: 0.3788

Epoch 182/500

1/1 [==============================] - 0s 96ms/step - loss: 0.3788

Epoch 183/500

1/1 [==============================] - 0s 129ms/step - loss: 0.3788

Epoch 184/500

1/1 [==============================] - 0s 153ms/step - loss: 0.3788

Epoch 185/500

1/1 [==============================] - 0s 132ms/step - loss: 0.3788

Epoch 186/500

1/1 [==============================] - 0s 114ms/step - loss: 0.3788

Epoch 187/500

1/1 [==============================] - 0s 112ms/step - loss: 0.3788

Epoch 188/500

1/1 [==============================] - 0s 117ms/step - loss: 0.3788

Epoch 189/500

1/1 [==============================] - 0s 146ms/step - loss: 0.3788

Epoch 190/500

1/1 [==============================] - 0s 115ms/step - loss: 0.3788

Epoch 191/500

1/1 [==============================] - 0s 119ms/step - loss: 0.3788

Epoch 192/500

1/1 [==============================] - 0s 248ms/step - loss: 0.3788

Epoch 193/500

1/1 [==============================] - 0s 134ms/step - loss: 0.3788

Epoch 194/500

1/1 [==============================] - 0s 156ms/step - loss: 0.3788

Epoch 195/500

1/1 [==============================] - 0s 108ms/step - loss: 0.3788

Epoch 196/500

1/1 [==============================] - 0s 93ms/step - loss: 0.3788

Epoch 197/500

1/1 [==============================] - 0s 95ms/step - loss: 0.3788

Epoch 198/500

1/1 [==============================] - 0s 100ms/step - loss: 0.3788

Epoch 199/500

1/1 [==============================] - 0s 98ms/step - loss: 0.3788

Epoch 200/500

1/1 [==============================] - 0s 150ms/step - loss: 0.3788

Epoch 201/500

1/1 [==============================] - 0s 144ms/step - loss: 0.3788

Epoch 202/500

1/1 [==============================] - 0s 125ms/step - loss: 0.3788

Epoch 203/500

1/1 [==============================] - 0s 104ms/step - loss: 0.3788

Epoch 204/500

1/1 [==============================] - 0s 115ms/step - loss: 0.3788

Epoch 205/500

1/1 [==============================] - 0s 113ms/step - loss: 0.3788

Epoch 206/500

1/1 [==============================] - 0s 115ms/step - loss: 0.3788

Epoch 207/500

1/1 [==============================] - 0s 114ms/step - loss: 0.3788

Epoch 208/500

1/1 [==============================] - 0s 82ms/step - loss: 0.3788

Epoch 209/500

1/1 [==============================] - 0s 131ms/step - loss: 0.3788

Epoch 210/500

1/1 [==============================] - 0s 247ms/step - loss: 0.3788

Epoch 211/500

1/1 [==============================] - 0s 98ms/step - loss: 0.3788

Epoch 212/500

1/1 [==============================] - 0s 107ms/step - loss: 0.3788

Epoch 213/500

1/1 [==============================] - 0s 105ms/step - loss: 0.3788

Epoch 214/500

1/1 [==============================] - 0s 97ms/step - loss: 0.3788

Epoch 215/500

1/1 [==============================] - 0s 97ms/step - loss: 0.3788

Epoch 216/500

1/1 [==============================] - 0s 105ms/step - loss: 0.3788

Epoch 217/500

1/1 [==============================] - 0s 99ms/step - loss: 0.3788

Epoch 218/500

1/1 [==============================] - 0s 87ms/step - loss: 0.3788

Epoch 219/500

1/1 [==============================] - 0s 98ms/step - loss: 0.3788

Epoch 220/500

1/1 [==============================] - 0s 99ms/step - loss: 0.3788

Epoch 221/500

1/1 [==============================] - 0s 97ms/step - loss: 0.3788

Epoch 222/500

1/1 [==============================] - 0s 120ms/step - loss: 0.3788

Epoch 223/500

1/1 [==============================] - 0s 106ms/step - loss: 0.3788

Epoch 224/500

1/1 [==============================] - 0s 88ms/step - loss: 0.3788

Epoch 225/500

1/1 [==============================] - 0s 99ms/step - loss: 0.3788

Epoch 226/500

1/1 [==============================] - 0s 98ms/step - loss: 0.3788

Epoch 227/500

1/1 [==============================] - 0s 100ms/step - loss: 0.3788

Epoch 228/500

1/1 [==============================] - 0s 95ms/step - loss: 0.3788

Epoch 229/500

1/1 [==============================] - 0s 115ms/step - loss: 0.3788

Epoch 230/500

1/1 [==============================] - 0s 200ms/step - loss: 0.3788

Epoch 231/500

1/1 [==============================] - 0s 178ms/step - loss: 0.3788

Epoch 232/500

1/1 [==============================] - 0s 98ms/step - loss: 0.3788

Epoch 233/500

1/1 [==============================] - 0s 163ms/step - loss: 0.3788

Epoch 234/500

1/1 [==============================] - 0s 222ms/step - loss: 0.3788

Epoch 235/500

1/1 [==============================] - 0s 122ms/step - loss: 0.3788

Epoch 236/500

1/1 [==============================] - 0s 96ms/step - loss: 0.3788

Epoch 237/500

1/1 [==============================] - 0s 88ms/step - loss: 0.3788

Epoch 238/500

1/1 [==============================] - 0s 97ms/step - loss: 0.3788

Epoch 239/500

1/1 [==============================] - 0s 98ms/step - loss: 0.3788

Epoch 240/500

1/1 [==============================] - 0s 113ms/step - loss: 0.3788

Epoch 241/500

1/1 [==============================] - 0s 98ms/step - loss: 0.3788

Epoch 242/500

1/1 [==============================] - 0s 109ms/step - loss: 0.3788

Epoch 243/500

1/1 [==============================] - 0s 111ms/step - loss: 0.3788

Epoch 244/500

1/1 [==============================] - 0s 117ms/step - loss: 0.3788

Epoch 245/500

1/1 [==============================] - 0s 111ms/step - loss: 0.3788

Epoch 246/500

1/1 [==============================] - 0s 116ms/step - loss: 0.3788

Epoch 247/500

1/1 [==============================] - 0s 132ms/step - loss: 0.3788

Epoch 248/500

1/1 [==============================] - 0s 126ms/step - loss: 0.3788

Epoch 249/500

1/1 [==============================] - 0s 118ms/step - loss: 0.3788

Epoch 250/500

1/1 [==============================] - 0s 103ms/step - loss: 0.3788

Epoch 251/500

1/1 [==============================] - 0s 107ms/step - loss: 0.3788

Epoch 252/500

1/1 [==============================] - 0s 112ms/step - loss: 0.3788

Epoch 253/500

1/1 [==============================] - 0s 118ms/step - loss: 0.3788

Epoch 254/500

1/1 [==============================] - 0s 118ms/step - loss: 0.3788

Epoch 255/500

1/1 [==============================] - 0s 91ms/step - loss: 0.3788

Epoch 256/500

1/1 [==============================] - 0s 103ms/step - loss: 0.3788

Epoch 257/500

1/1 [==============================] - 0s 91ms/step - loss: 0.3788

Epoch 258/500

1/1 [==============================] - 0s 94ms/step - loss: 0.3788

Epoch 259/500

1/1 [==============================] - 0s 100ms/step - loss: 0.3788

Epoch 260/500

1/1 [==============================] - 0s 110ms/step - loss: 0.3788

Epoch 261/500

1/1 [==============================] - 0s 101ms/step - loss: 0.3788

Epoch 262/500

1/1 [==============================] - 0s 127ms/step - loss: 0.3788

Epoch 263/500

1/1 [==============================] - 0s 109ms/step - loss: 0.3788

Epoch 264/500

1/1 [==============================] - 0s 102ms/step - loss: 0.3788

Epoch 265/500

1/1 [==============================] - 0s 115ms/step - loss: 0.3788

Epoch 266/500

1/1 [==============================] - 0s 111ms/step - loss: 0.3788

Epoch 267/500

1/1 [==============================] - 0s 100ms/step - loss: 0.3788

Epoch 268/500

1/1 [==============================] - 0s 102ms/step - loss: 0.3788

Epoch 269/500

1/1 [==============================] - 0s 217ms/step - loss: 0.3788

Epoch 270/500

1/1 [==============================] - 0s 112ms/step - loss: 0.3788

Epoch 271/500

1/1 [==============================] - 0s 116ms/step - loss: 0.3788

Epoch 272/500

1/1 [==============================] - 0s 108ms/step - loss: 0.3788

Epoch 273/500

1/1 [==============================] - 0s 88ms/step - loss: 0.3788

Epoch 274/500

1/1 [==============================] - 0s 133ms/step - loss: 0.3788

Epoch 275/500

1/1 [==============================] - 0s 164ms/step - loss: 0.3788

Epoch 276/500

1/1 [==============================] - 0s 259ms/step - loss: 0.3788

Epoch 277/500

1/1 [==============================] - 0s 181ms/step - loss: 0.3788

Epoch 278/500

1/1 [==============================] - 0s 103ms/step - loss: 0.3788

Epoch 279/500

1/1 [==============================] - 0s 122ms/step - loss: 0.3788

Epoch 280/500

1/1 [==============================] - 0s 90ms/step - loss: 0.3788

Epoch 281/500

1/1 [==============================] - 0s 103ms/step - loss: 0.3788

Epoch 282/500

1/1 [==============================] - 0s 106ms/step - loss: 0.3788

Epoch 283/500

1/1 [==============================] - 0s 104ms/step - loss: 0.3788

Epoch 284/500

1/1 [==============================] - 0s 99ms/step - loss: 0.3788

Epoch 285/500

1/1 [==============================] - 0s 109ms/step - loss: 0.3788

Epoch 286/500

1/1 [==============================] - 0s 99ms/step - loss: 0.3788

Epoch 287/500

1/1 [==============================] - 0s 115ms/step - loss: 0.3788

Epoch 288/500

1/1 [==============================] - 0s 102ms/step - loss: 0.3788

Epoch 289/500

1/1 [==============================] - 0s 93ms/step - loss: 0.3788

Epoch 290/500

1/1 [==============================] - 0s 94ms/step - loss: 0.3788

Epoch 291/500

1/1 [==============================] - 0s 115ms/step - loss: 0.3788

Epoch 292/500

1/1 [==============================] - 0s 85ms/step - loss: 0.3788

Epoch 293/500

1/1 [==============================] - 0s 109ms/step - loss: 0.3788

Epoch 294/500

1/1 [==============================] - 0s 95ms/step - loss: 0.3788

Epoch 295/500

1/1 [==============================] - 0s 116ms/step - loss: 0.3788

Epoch 296/500

1/1 [==============================] - 0s 91ms/step - loss: 0.3788

Epoch 297/500

1/1 [==============================] - 0s 108ms/step - loss: 0.3788

Epoch 298/500

1/1 [==============================] - 0s 103ms/step - loss: 0.3788

Epoch 299/500

1/1 [==============================] - 0s 99ms/step - loss: 0.3788

Epoch 300/500

1/1 [==============================] - 0s 124ms/step - loss: 0.3788

Epoch 301/500

1/1 [==============================] - 0s 110ms/step - loss: 0.3788

Epoch 302/500

1/1 [==============================] - 0s 248ms/step - loss: 0.3788

Epoch 303/500

1/1 [==============================] - 0s 108ms/step - loss: 0.3788

...... (epochs 304-389 omitted; every one of them reports loss: 0.3788) ......

Epoch 390/500

1/1 [==============================] - 0s 107ms/step - loss: 0.3788

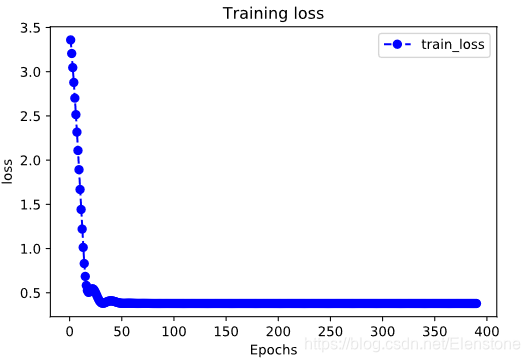

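4. Evaluate the Model

Evaluating a model normally requires a validation set or a test set. This example has very little data, so we only visualize how the loss evolves on the training set over the epochs.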

%matplotlib inline
%config InlineBackend.figure_format = 'svg'
import matplotlib.pyplot as plt

def plot_metric(history, metric):
    # Plot the per-epoch values of `metric` recorded in the Keras History object
    train_metrics = history.history[metric]
    epochs = range(1, len(train_metrics) + 1)
    plt.plot(epochs, train_metrics, 'bo--')
    plt.title('Training ' + metric)
    plt.xlabel("Epochs")
    plt.ylabel(metric)
    plt.legend(["train_" + metric])
    plt.show()

plot_metric(history, "loss")
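Every sample here is a window cut from one continuous series. For that reason, a validation set for this kind of data would normally come from the end of the series rather than from random sampling. Otherwise the model is trained on days that come after the ones it is validated on. The following is a minimal sketch of such a chronological split in plain Python. The function name `chronological_split` and the 20% default are illustrative assumptions and are not part of the original workflow.

```python
def chronological_split(samples, labels, val_fraction=0.2):
    """Split paired samples/labels in time order without shuffling.

    The earliest items go to the training set and the most recent items
    to the validation set. (Hypothetical helper for illustration.)
    """
    if len(samples) != len(labels):
        raise ValueError("samples and labels must have the same length")
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be strictly between 0 and 1")
    # Keep at least one item for validation
    n_val = max(1, round(len(samples) * val_fraction))
    cut = len(samples) - n_val
    return (samples[:cut], labels[:cut]), (samples[cut:], labels[cut:])


# Usage: 38 windows, the same number of training samples as in this example
windows = list(range(38))   # stand-ins for the 8-day input windows
targets = list(range(38))   # stand-ins for the day following each window
(train_x, train_y), (val_x, val_y) = chronological_split(windows, targets)
print(len(train_x), len(val_x))  # 30 8
```

With `tf.data`, the same idea would amount to applying `Dataset.take(cut)` and `Dataset.skip(cut)` to the zipped (window, label) dataset before batching it.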

5. Use the Model

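Here we use the model to predict when the epidemic will end. The end is defined as the date on which the number of new confirmed cases drops to 0.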
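The end date is read off the forecast in two steps. First, find the first row whose new-confirmed count is 0. Then convert that row index into a calendar date by counting days from a row whose date is known. Below is a sketch of that arithmetic. It assumes, as the comments further down state, that row 45 corresponds to March 10, and that the year is 2020. `first_zero_index` and `index_to_date` are hypothetical helpers.

```python
from datetime import date, timedelta


def first_zero_index(values, start=0):
    """Return the index of the first value equal to 0 at or after `start`, or None."""
    for i in range(start, len(values)):
        if values[i] == 0:
            return i
    return None


def index_to_date(index, ref_index, ref_date):
    """Map a row index to a date, given that `ref_index` falls on `ref_date`
    and that consecutive rows are consecutive days."""
    return ref_date + timedelta(days=index - ref_index)


# Assumption: row 45 (the last observed day) is 2020-03-10
REF_INDEX, REF_DATE = 45, date(2020, 3, 10)

print(index_to_date(55, REF_INDEX, REF_DATE))   # 2020-03-20
print(index_to_date(164, REF_INDEX, REF_DATE))  # 2020-07-07
print(first_zero_index([143.0, 99.0, 44.0, 0.0, 0.0]))  # 3
```

Under these assumptions, day 55 falls on March 20 and day 164 falls on July 7. Both agree with the estimates given in the comments on the query results.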

# dfresult holds the observed data plus the predicted data appended later

dfresult = dfdiff[["confirmed_num","cured_num","dead_num"]].copy()

dfresult.tail()

| | confirmed_num | cured_num | dead_num |
|---|---|---|---|
| 41 | 143.0 | 1681.0 | 30.0 |
| 42 | 99.0 | 1678.0 | 28.0 |
| 43 | 44.0 | 1661.0 | 27.0 |
| 44 | 40.0 | 1535.0 | 22.0 |
| 45 | 19.0 | 1297.0 | 17.0 |

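# Predict the daily new counts for the next 100 days and append them to dfresult
for i in range(100):
    # Use the latest 38 rows as a single input sequence (batch size 1)
    arr_predict = model.predict(tf.expand_dims(dfresult.values[-38:, :], axis=0))
    # Round the predicted counts down to whole numbers
    dfpredict = pd.DataFrame(np.floor(arr_predict), columns=dfresult.columns)
    # DataFrame.append was removed in pandas 2.0; pd.concat does the same job
    dfresult = pd.concat([dfresult, dfpredict], ignore_index=True)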
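The loop above is a recursive (autoregressive) multi-step forecast. Each one-day prediction is floored, appended to the history, and then becomes part of the input for the next step, so errors can compound over the 100 days. The framework-free sketch below isolates that pattern. `recursive_forecast` is a hypothetical name, `predict_next` stands in for `model.predict`, and the "halve yesterday's counts" rule used in the example is a toy model, not the trained network.

```python
import math


def recursive_forecast(history, predict_next, steps, lookback):
    """Extend `history` (a list of rows) by `steps` predicted rows.

    Each step feeds the last `lookback` rows to `predict_next`, floors the
    returned row to whole numbers, and appends it so that the next step
    sees it as input.
    """
    rows = [list(r) for r in history]
    for _ in range(steps):
        window = rows[-lookback:]
        next_row = [float(math.floor(v)) for v in predict_next(window)]
        rows.append(next_row)
    return rows


# Hypothetical stand-in for the model: every count halves from the last day
def toy_model(window):
    return [0.5 * v for v in window[-1]]


result = recursive_forecast([[19.0, 1297.0, 17.0]], toy_model, steps=3, lookback=38)
print(result[-1])  # [2.0, 162.0, 2.0]
```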

dfresult.query("confirmed_num==0").head()

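# New confirmed cases drop to 0 from day 55 onward. Day 45 corresponds to March 10, so this is 10 days later: new confirmed cases are expected to reach 0 around March 20.

# Note: this prediction is on the optimistic side.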

| | confirmed_num | cured_num | dead_num |
|---|---|---|---|
| 49 | 0.0 | 1199.0 | 0.0 |
| 50 | 0.0 | 1178.0 | 0.0 |
| 51 | 0.0 | 1158.0 | 0.0 |
| 52 | 0.0 | 1139.0 | 0.0 |
| 53 | 0.0 | 1121.0 | 0.0 |

dfresult.query("cured_num==0").head()

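# New recoveries drop to 0 from day 164 onward. Day 45 corresponds to March 10, so this is roughly 4 months later: all patients are expected to have recovered around July 10.

# Note: this prediction is on the pessimistic side, and it is also flawed. Summing the daily new recoveries gives a total that exceeds the cumulative number of confirmed cases.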
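The flaw raised in this note can be detected mechanically. For the cumulative counts to be consistent, the running total of recoveries plus deaths must never exceed the running total of confirmed cases. A minimal sketch follows. `first_inconsistent_day` and its `base_confirmed` / `base_closed` arguments (the cumulative counts before the first daily difference) are hypothetical names, and the inputs in the example are toy numbers, not the article's data.

```python
def first_inconsistent_day(new_confirmed, new_cured, new_dead,
                           base_confirmed=0.0, base_closed=0.0):
    """Return the first index at which cumulative (cured + dead) exceeds
    cumulative confirmed, or None if the series stays consistent."""
    total_confirmed = base_confirmed
    total_closed = base_closed  # closed cases = recovered + deceased
    for i, (c, r, d) in enumerate(zip(new_confirmed, new_cured, new_dead)):
        total_confirmed += c
        total_closed += r + d
        if total_closed > total_confirmed:
            return i
    return None


print(first_inconsistent_day([10.0, 0.0, 0.0], [2.0, 5.0, 5.0], [0.0, 0.0, 1.0]))  # 2
```

For the forecast in this article, the three columns of `dfresult` could be passed in directly, since pandas Series iterate like lists. The baseline would be the cumulative counts in the first row of `dfdata`, because `dfdiff` starts from the second date.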

dfresult.query("dead_num==0").head()

6. Save the Model

We recommend saving the model in TensorFlow's native format (SavedModel).

model.save('./data/tf_model_savedmodel', save_format="tf")

print('export saved model.')

WARNING:tensorflow:From D:\anaconda3\lib\site-packages\tensorflow_core\python\ops\resource_variable_ops.py:1786: calling BaseResourceVariable.__init__ (from tensorflow.python.ops.resource_variable_ops) with constraint is deprecated and will be removed in a future version.

Instructions for updating:

If using Keras pass *_constraint arguments to layers.

INFO:tensorflow:Assets written to: ./data/tf_model_savedmodel\assets

export saved model.

model_loaded = tf.keras.models.load_model('./data/tf_model_savedmodel',compile=False)

optimizer = tf.keras.optimizers.Adam(learning_rate=0.001)

model_loaded.compile(optimizer=optimizer,loss=MSPE(name = "MSPE"))

model_loaded.predict(ds_train)

array([[1.10418066e+03, 8.77097855e+01, 4.04874420e+00],
       [1.36194556e+03, 6.87724457e+01, 8.23244667e+00],
       [1.48609351e+03, 1.46515198e+02, 7.69261456e+00],
       [1.70072229e+03, 1.56482224e+02, 8.63732147e+00],
       [2.04423340e+03, 2.59142548e+02, 8.77227974e+00],
       [1.94323169e+03, 2.60139252e+02, 9.85194492e+00],
       [1.65337769e+03, 3.85723694e+02, 9.85194492e+00],
       [1.78068201e+03, 5.08318054e+02, 1.16064005e+01],
       [1.39508679e+03, 5.97024536e+02, 1.20112753e+01],
       [1.56394910e+03, 6.29915710e+02, 1.30909405e+01],
       [1.29776733e+03, 7.12641968e+02, 1.45754795e+01],
       [1.05999243e+03, 7.41546326e+02, 1.30909405e+01],
       [7.97019580e+03, 1.16713818e+03, 3.42793694e+01],
       [2.12892749e+03, 8.09322083e+02, 1.75445592e+00],
       [1.38930017e+03, 1.36847192e+03, 1.92990150e+01],
       [1.05631006e+03, 1.31863684e+03, 1.91640568e+01],
       [1.07735205e+03, 1.42030042e+03, 1.41706057e+01],
       [9.93183899e+02, 1.70236719e+03, 1.32258987e+01],
       [9.20062866e+02, 1.81798462e+03, 1.83543091e+01],
       [2.05685867e+02, 1.77313293e+03, 1.53852291e+01],
       [4.67659180e+02, 2.10204468e+03, 1.59250612e+01],
       [4.32939819e+02, 2.38710156e+03, 1.47104378e+01],
       [3.40880920e+02, 2.22164893e+03, 1.30909405e+01],
       [1.12574875e+02, 1.83991199e+03, 2.02437229e+01],
       [2.67233795e+02, 2.58046167e+03, 9.58202839e+00],
       [2.13576630e+02, 2.41401245e+03, 7.01782370e+00],
       [2.27779999e+02, 2.74093066e+03, 3.91378641e+00],
       [1.72018616e+02, 3.61005493e+03, 5.93815851e+00],
       [2.24623688e+02, 2.87548560e+03, 6.34303284e+00],
       [3.01427124e+02, 2.61434961e+03, 4.72353506e+00],
       [1.06262260e+02, 2.82764380e+03, 5.66824245e+00],
       [6.57563477e+01, 2.73295703e+03, 4.18370247e+00],
       [6.26000443e+01, 2.64325391e+03, 5.12840939e+00],
       [7.31210632e+01, 2.18178076e+03, 4.18370247e+00],
       [7.52252655e+01, 1.67545618e+03, 4.04874420e+00],
       [5.20790291e+01, 1.67246606e+03, 3.77882814e+00],
       [2.31462364e+01, 1.65552209e+03, 3.64386988e+00],
       [2.10420322e+01, 1.52993774e+03, 2.96907926e+00]], dtype=float32)