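0 The TensorFlow modeling workflow

TensorFlow is flexible enough by design to carry out all kinds of complex numerical computation, but in practice it is mostly used to implement machine learning models, and neural network models in particular.

In principle, you can define a neural network by building a computational graph out of tensors and train it with the automatic differentiation mechanism.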
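To make the low-level route concrete, here is a minimal sketch that fits a one-variable linear model with `tf.Variable` and `tf.GradientTape`. The synthetic data, the ground-truth values 3.0 and 2.0, the learning rate, and all variable names are assumptions chosen for illustration; none of them come from the Titanic example.

```python
import tensorflow as tf

tf.random.set_seed(0)

# Hypothetical data: y = 3x + 2, with no noise.
x = tf.random.normal([200, 1])
y = 3.0 * x + 2.0

# Trainable parameters held as plain tensors (variables).
w = tf.Variable(tf.zeros([1, 1]))
b = tf.Variable(tf.zeros([1]))

def loss_fn():
    """Mean squared error of the linear model on the whole data set."""
    y_pred = tf.matmul(x, w) + b
    return tf.reduce_mean(tf.square(y_pred - y))

initial_loss = float(loss_fn())
learning_rate = 0.1
for step in range(200):
    # Record the forward pass so gradients can be computed automatically.
    with tf.GradientTape() as tape:
        loss = loss_fn()
    grad_w, grad_b = tape.gradient(loss, [w, b])
    # Plain gradient descent update.
    w.assign_sub(learning_rate * grad_w)
    b.assign_sub(learning_rate * grad_b)

final_loss = float(loss_fn())
print(initial_loss, final_loss, float(w[0, 0]), float(b[0]))
```

Every step here (forward pass, gradient, update) is written by hand, which is the bookkeeping the Keras API takes over.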

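For brevity, however, the high-level Keras API of TensorFlow is generally recommended for implementing neural network models.

The general workflow for implementing a neural network model with TensorFlow is:

1. Prepare the data

2. Define the model

3. Train the model

4. Evaluate the model

5. Use the model

6. Save the model

For beginners, the hardest part is actually preparing the data.

The data types most often encountered in practice are structured data, image data, text data, and time series data.

We use four examples (Titanic survival prediction, cifar2 image classification, IMDB movie review classification, and predicting when the COVID-19 epidemic in China would end) to demonstrate how to model each of these four data types with TensorFlow.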

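1 Prepare the data

The goal of the Titanic dataset is to predict, from passenger information, whether a passenger survived after the Titanic struck an iceberg and sank.

Structured data is usually preprocessed with a Pandas DataFrame.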

import tensorflow as tf

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from tensorflow.keras import models, layers

dftrain_raw = pd.read_csv('./data/titanic/train.csv')

dftest_raw = pd.read_csv('./data/titanic/test.csv')

dftrain_raw.head(10)

| | PassengerId | Survived | Pclass | Name | Sex | Age | SibSp | Parch | Ticket | Fare | Cabin | Embarked |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 493 | 0 | 1 | Molson, Mr. Harry Markland | male | 55.0 | 0 | 0 | 113787 | 30.5000 | C30 | S |
| 1 | 53 | 1 | 1 | Harper, Mrs. Henry Sleeper (Myna Haxtun) | female | 49.0 | 1 | 0 | PC 17572 | 76.7292 | D33 | C |
| 2 | 388 | 1 | 2 | Buss, Miss. Kate | female | 36.0 | 0 | 0 | 27849 | 13.0000 | NaN | S |
| 3 | 192 | 0 | 2 | Carbines, Mr. William | male | 19.0 | 0 | 0 | 28424 | 13.0000 | NaN | S |
| 4 | 687 | 0 | 3 | Panula, Mr. Jaako Arnold | male | 14.0 | 4 | 1 | 3101295 | 39.6875 | NaN | S |
| 5 | 16 | 1 | 2 | Hewlett, Mrs. (Mary D Kingcome) | female | 55.0 | 0 | 0 | 248706 | 16.0000 | NaN | S |
| 6 | 228 | 0 | 3 | Lovell, Mr. John Hall ("Henry") | male | 20.5 | 0 | 0 | A/5 21173 | 7.2500 | NaN | S |
| 7 | 884 | 0 | 2 | Banfield, Mr. Frederick James | male | 28.0 | 0 | 0 | C.A./SOTON 34068 | 10.5000 | NaN | S |
| 8 | 168 | 0 | 3 | Skoog, Mrs. William (Anna Bernhardina Karlsson) | female | 45.0 | 1 | 4 | 347088 | 27.9000 | NaN | S |
| 9 | 752 | 1 | 3 | Moor, Master. Meier | male | 6.0 | 0 | 1 | 392096 | 12.4750 | E121 | S |

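Field descriptions:

- Survived: 0 = died, 1 = survived [the label y]
- Pclass: ticket class, one of three values (1, 2, 3) [one-hot encoded]
- Name: passenger name [dropped]
- Sex: passenger sex [converted to boolean features (female, male)]
- Age: passenger age (has missing values) [numeric feature, plus an "age is missing" indicator as an auxiliary feature]
- SibSp: number of siblings/spouses aboard (integer) [numeric feature]
- Parch: number of parents/children aboard (integer) [numeric feature]
- Ticket: ticket number (string) [dropped]
- Fare: ticket price (float, roughly 0 to 500) [numeric feature]
- Cabin: passenger cabin (has missing values) [a "cabin is missing" indicator is added as an auxiliary feature]
- Embarked: port of embarkation, one of S, C, Q (has missing values) [one-hot encoded in four dimensions: S, C, Q, nan]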
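The two less obvious treatments in this list, the missing-value indicator and the one-hot encoding that keeps NaN as its own category, can be tried out on a tiny frame. The four-row `toy` DataFrame below is made up for illustration and is not part of the Titanic data.

```python
import numpy as np
import pandas as pd

# Hypothetical miniature data set with one missing Age and one missing Embarked.
toy = pd.DataFrame({
    "Age": [22.0, np.nan, 35.0, 4.0],
    "Embarked": ["S", "C", np.nan, "Q"],
})

# Numeric feature: fill the gap with 0 and remember where the gap was.
features = pd.DataFrame({
    "Age": toy["Age"].fillna(0),
    "Age_null": toy["Age"].isna().astype("int32"),
})

# Categorical feature: dummy_na=True adds a separate column for NaN.
embarked = pd.get_dummies(toy["Embarked"], dummy_na=True)
embarked.columns = ["Embarked_" + str(c) for c in embarked.columns]

features = pd.concat([features, embarked], axis=1)
print(features.columns.tolist())
```

The indicator column lets the model tell a filled-in 0 apart from a real age, which a plain `fillna(0)` would hide.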

Using the plotting functionality built into Pandas, we can easily carry out some exploratory data analysis (EDA).

Distribution of the label (Survived):

%matplotlib inline

%config InlineBackend.figure_format = 'png'

ax = dftrain_raw['Survived'].value_counts().plot(kind = 'bar',

figsize = (12,8),fontsize=15,rot = 0)

ax.set_ylabel('Counts',fontsize = 15)

ax.set_xlabel('Survived',fontsize = 15)

plt.show()

Age distribution:

%matplotlib inline

%config InlineBackend.figure_format = 'png'

ax = dftrain_raw['Age'].plot(kind = 'hist',bins = 20,color= 'purple',

figsize = (12,8),fontsize=15)

ax.set_ylabel('Frequency',fontsize = 15)

ax.set_xlabel('Age',fontsize = 15)

plt.show()

Relationship between age and the label:

%matplotlib inline

%config InlineBackend.figure_format = 'png'

ax = dftrain_raw.query('Survived == 0')['Age'].plot(kind = 'density',

figsize = (12,8),fontsize=15)

dftrain_raw.query('Survived == 1')['Age'].plot(kind = 'density',

figsize = (12,8),fontsize=15)

ax.legend(['Survived==0','Survived==1'],fontsize = 12)

ax.set_ylabel('Density',fontsize = 15)

ax.set_xlabel('Age',fontsize = 15)

plt.show()

Next comes the actual data preprocessing:

def preprocessing(dfdata):

    dfresult = pd.DataFrame()

    # Pclass: one-hot encode the ticket class

    dfPclass = pd.get_dummies(dfdata['Pclass'])

    dfPclass.columns = ['Pclass_' + str(x) for x in dfPclass.columns]

    dfresult = pd.concat([dfresult, dfPclass], axis=1)

    # Sex: one-hot encode into female/male columns

    dfSex = pd.get_dummies(dfdata['Sex'])

    dfresult = pd.concat([dfresult, dfSex], axis=1)

    # Age: fill missing values with 0 and add a missing-value indicator

    dfresult['Age'] = dfdata['Age'].fillna(0)

    dfresult['Age_null'] = pd.isna(dfdata['Age']).astype('int32')

    # SibSp, Parch, Fare: numeric features, used as-is

    dfresult['SibSp'] = dfdata['SibSp']

    dfresult['Parch'] = dfdata['Parch']

    dfresult['Fare'] = dfdata['Fare']

    # Cabin: 1 if missing, 0 otherwise

    dfresult['Cabin_null'] = pd.isna(dfdata['Cabin']).astype('int32')

    # Embarked: one-hot encode, with NaN treated as its own category

    dfEmbarked = pd.get_dummies(dfdata['Embarked'], dummy_na=True)

    dfEmbarked.columns = ['Embarked_' + str(x) for x in dfEmbarked.columns]

    dfresult = pd.concat([dfresult, dfEmbarked], axis=1)

    return dfresult

x_train = preprocessing(dftrain_raw)

y_train = dftrain_raw['Survived'].values

x_test = preprocessing(dftest_raw)

y_test = dftest_raw['Survived'].values

print("x_train.shape =", x_train.shape )

print("x_test.shape =", x_test.shape )

x_train.shape = (712, 15)

x_test.shape = (179, 15)
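Both frames end up with 15 columns here because every category occurs in both files. Since `pd.get_dummies` is applied to each frame separately, that is not guaranteed in general. One possible safeguard, shown as a sketch on made-up data with a hypothetical `encode` helper, is to reindex the test columns to match the training columns:

```python
import pandas as pd

# Hypothetical frames: ticket class 2 never occurs in the test split.
train = pd.DataFrame({"Pclass": [1, 2, 3, 3]})
test = pd.DataFrame({"Pclass": [1, 3]})

def encode(df):
    """One-hot encode Pclass the same way the preprocessing function does."""
    dummies = pd.get_dummies(df["Pclass"])
    dummies.columns = ["Pclass_" + str(c) for c in dummies.columns]
    return dummies

x_train_toy = encode(train)
raw_test_toy = encode(test)  # only Pclass_1 and Pclass_3

# Align to the training columns; categories unseen in test become all-zero columns.
x_test_toy = raw_test_toy.reindex(columns=x_train_toy.columns, fill_value=0)
print(raw_test_toy.shape, x_test_toy.shape)
```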

x_train.head(10)

| | Pclass_1 | Pclass_2 | Pclass_3 | female | male | Age | Age_null | SibSp | Parch | Fare | Cabin_null | Embarked_C | Embarked_Q | Embarked_S | Embarked_nan |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 0 | 0 | 0 | 1 | 55.0 | 0 | 0 | 0 | 30.5000 | 0 | 0 | 0 | 1 | 0 |
| 1 | 1 | 0 | 0 | 1 | 0 | 49.0 | 0 | 1 | 0 | 76.7292 | 0 | 1 | 0 | 0 | 0 |
| 2 | 0 | 1 | 0 | 1 | 0 | 36.0 | 0 | 0 | 0 | 13.0000 | 1 | 0 | 0 | 1 | 0 |
| 3 | 0 | 1 | 0 | 0 | 1 | 19.0 | 0 | 0 | 0 | 13.0000 | 1 | 0 | 0 | 1 | 0 |
| 4 | 0 | 0 | 1 | 0 | 1 | 14.0 | 0 | 4 | 1 | 39.6875 | 1 | 0 | 0 | 1 | 0 |
| 5 | 0 | 1 | 0 | 1 | 0 | 55.0 | 0 | 0 | 0 | 16.0000 | 1 | 0 | 0 | 1 | 0 |
| 6 | 0 | 0 | 1 | 0 | 1 | 20.5 | 0 | 0 | 0 | 7.2500 | 1 | 0 | 0 | 1 | 0 |
| 7 | 0 | 1 | 0 | 0 | 1 | 28.0 | 0 | 0 | 0 | 10.5000 | 1 | 0 | 0 | 1 | 0 |
| 8 | 0 | 0 | 1 | 1 | 0 | 45.0 | 0 | 1 | 4 | 27.9000 | 1 | 0 | 0 | 1 | 0 |
| 9 | 0 | 0 | 1 | 0 | 1 | 6.0 | 0 | 0 | 1 | 12.4750 | 0 | 0 | 0 | 1 | 0 |

x_train[x_train['Embarked_nan']==1]

| | Pclass_1 | Pclass_2 | Pclass_3 | female | male | Age | Age_null | SibSp | Parch | Fare | Cabin_null | Embarked_C | Embarked_Q | Embarked_S | Embarked_nan |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 232 | 1 | 0 | 0 | 1 | 0 | 38.0 | 0 | 0 | 0 | 80.0 | 0 | 0 | 0 | 0 | 1 |
| 292 | 1 | 0 | 0 | 1 | 0 | 62.0 | 0 | 0 | 0 | 80.0 | 0 | 0 | 0 | 0 | 1 |

Only two rows have a missing Embarked value.

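2 Define the model

Keras offers three ways to build a model: stacking layers in order with Sequential, building models of arbitrary structure with the functional API, or subclassing the Model base class to write a custom model.

Here we use the simplest option, a Sequential model built layer by layer.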
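For comparison, the same three-layer network could be written with the functional API roughly as follows. This is a sketch of the alternative, not the approach this section uses; the variable names are arbitrary.

```python
import tensorflow as tf

# 15 input features, matching the width of the preprocessed Titanic frame.
inputs = tf.keras.Input(shape=(15,))
hidden = tf.keras.layers.Dense(20, activation="relu")(inputs)
hidden = tf.keras.layers.Dense(10, activation="relu")(hidden)
outputs = tf.keras.layers.Dense(1, activation="sigmoid")(hidden)

# The model is defined by its input and output tensors rather than a layer list.
functional_model = tf.keras.Model(inputs=inputs, outputs=outputs)
print(functional_model.count_params())
```

For a plain stack of layers the two styles are equivalent; the functional API only pays off once the network has branches, multiple inputs, or multiple outputs.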

tf.keras.backend.clear_session()

model = models.Sequential()

model.add(layers.Dense(20,activation = 'relu',input_shape=(15,)))

model.add(layers.Dense(10,activation = 'relu' ))

model.add(layers.Dense(1,activation = 'sigmoid' ))

model.summary()

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense (Dense) (None, 20) 320

_________________________________________________________________

dense_1 (Dense) (None, 10) 210

_________________________________________________________________

dense_2 (Dense) (None, 1) 11

=================================================================

Total params: 541

Trainable params: 541

Non-trainable params: 0

_________________________________________________________________
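The parameter counts in the summary can be checked by hand: a Dense layer with n_in inputs and n_out units has n_in × n_out weights plus n_out biases. A small sketch of the arithmetic (the helper name `dense_params` is made up for illustration):

```python
def dense_params(n_in, n_out):
    """Number of weights (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out

# Input width followed by the sizes of the three Dense layers.
layer_sizes = [15, 20, 10, 1]
counts = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
print(counts, sum(counts))  # [320, 210, 11] 541
```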

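3 Train the model

There are generally three ways to train a model: the built-in fit method, the built-in train_on_batch method, and a custom training loop. Here we use the built-in fit method, which is both the most common and the simplest.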
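To give a feel for the second option, the sketch below drives training batch by batch with `train_on_batch`. It runs on random stand-in data rather than the Titanic features, uses a smaller network, and the names `model_sketch`, `batch_size`, and the epoch count are assumptions for illustration.

```python
import numpy as np
import tensorflow as tf

# Synthetic stand-in for a preprocessed feature matrix (assumption).
rng = np.random.default_rng(0)
x = rng.normal(size=(128, 15)).astype("float32")
y = (x[:, :1] > 0).astype("float32")  # labels with shape (128, 1)

model_sketch = tf.keras.Sequential([
    tf.keras.Input(shape=(15,)),
    tf.keras.layers.Dense(20, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model_sketch.compile(optimizer="adam", loss="binary_crossentropy")

batch_size = 32
for epoch in range(3):
    # Slice the batches by hand; fit would normally do this internally.
    for start in range(0, len(x), batch_size):
        logs = model_sketch.train_on_batch(
            x[start:start + batch_size],
            y[start:start + batch_size],
            return_dict=True,
        )
    print(f"epoch {epoch + 1}: last batch loss = {float(logs['loss']):.4f}")
```

Compared with fit, this gives control over what happens between batches at the cost of writing the batching, shuffling, and logging yourself.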

# Binary classification, so use the binary cross-entropy loss

model.compile(optimizer='adam',

loss='binary_crossentropy',

metrics=['AUC'])

history = model.fit(x_train,y_train,

batch_size= 64,

epochs= 30,

validation_split=0.2 # hold out part of the training data for validation

)

Train on 569 samples, validate on 143 samples

Epoch 1/30

569/569 [==============================] - 1s 2ms/sample - loss: 1.1756 - AUC: 0.6290 - val_loss: 0.7064 - val_AUC: 0.6590

Epoch 2/30

569/569 [==============================] - 0s 65us/sample - loss: 0.7333 - AUC: 0.4361 - val_loss: 0.7154 - val_AUC: 0.4920

Epoch 3/30

569/569 [==============================] - 0s 78us/sample - loss: 0.6462 - AUC: 0.6062 - val_loss: 0.6499 - val_AUC: 0.6625

Epoch 4/30

569/569 [==============================] - 0s 65us/sample - loss: 0.6439 - AUC: 0.7018 - val_loss: 0.6540 - val_AUC: 0.6553

Epoch 5/30

569/569 [==============================] - 0s 76us/sample - loss: 0.6200 - AUC: 0.6988 - val_loss: 0.6369 - val_AUC: 0.6577

Epoch 6/30

569/569 [==============================] - 0s 63us/sample - loss: 0.6194 - AUC: 0.6903 - val_loss: 0.6283 - val_AUC: 0.6667

Epoch 7/30

569/569 [==============================] - 0s 64us/sample - loss: 0.6070 - AUC: 0.7183 - val_loss: 0.6237 - val_AUC: 0.6891

Epoch 8/30

569/569 [==============================] - 0s 65us/sample - loss: 0.6075 - AUC: 0.7341 - val_loss: 0.6139 - val_AUC: 0.6973

Epoch 9/30

569/569 [==============================] - 0s 67us/sample - loss: 0.6012 - AUC: 0.7386 - val_loss: 0.6119 - val_AUC: 0.7012

Epoch 10/30

569/569 [==============================] - 0s 66us/sample - loss: 0.5961 - AUC: 0.7458 - val_loss: 0.6119 - val_AUC: 0.7061

Epoch 11/30

569/569 [==============================] - 0s 64us/sample - loss: 0.5908 - AUC: 0.7472 - val_loss: 0.6091 - val_AUC: 0.7108

Epoch 12/30

569/569 [==============================] - 0s 67us/sample - loss: 0.5870 - AUC: 0.7567 - val_loss: 0.6084 - val_AUC: 0.7181

Epoch 13/30

569/569 [==============================] - 0s 65us/sample - loss: 0.5792 - AUC: 0.7672 - val_loss: 0.6095 - val_AUC: 0.7161

Epoch 14/30

569/569 [==============================] - 0s 63us/sample - loss: 0.5778 - AUC: 0.7697 - val_loss: 0.6105 - val_AUC: 0.7225

Epoch 15/30

569/569 [==============================] - 0s 70us/sample - loss: 0.5742 - AUC: 0.7784 - val_loss: 0.6144 - val_AUC: 0.7258

Epoch 16/30

569/569 [==============================] - 0s 67us/sample - loss: 0.5728 - AUC: 0.7739 - val_loss: 0.6171 - val_AUC: 0.7169

Epoch 17/30

569/569 [==============================] - 0s 65us/sample - loss: 0.5826 - AUC: 0.7698 - val_loss: 0.6181 - val_AUC: 0.7460

Epoch 18/30

569/569 [==============================] - 0s 68us/sample - loss: 0.5555 - AUC: 0.7895 - val_loss: 0.6053 - val_AUC: 0.7272

Epoch 19/30

569/569 [==============================] - 0s 62us/sample - loss: 0.5619 - AUC: 0.7890 - val_loss: 0.6040 - val_AUC: 0.7399

Epoch 20/30

569/569 [==============================] - 0s 66us/sample - loss: 0.5530 - AUC: 0.7972 - val_loss: 0.5990 - val_AUC: 0.7405

Epoch 21/30

569/569 [==============================] - 0s 75us/sample - loss: 0.5513 - AUC: 0.7976 - val_loss: 0.5981 - val_AUC: 0.7434

Epoch 22/30

569/569 [==============================] - 0s 76us/sample - loss: 0.5456 - AUC: 0.8040 - val_loss: 0.5955 - val_AUC: 0.7538

Epoch 23/30

569/569 [==============================] - 0s 79us/sample - loss: 0.5406 - AUC: 0.8061 - val_loss: 0.5908 - val_AUC: 0.7469

Epoch 24/30

569/569 [==============================] - 0s 77us/sample - loss: 0.5395 - AUC: 0.8105 - val_loss: 0.5900 - val_AUC: 0.7528

Epoch 25/30

569/569 [==============================] - 0s 93us/sample - loss: 0.5315 - AUC: 0.8118 - val_loss: 0.5856 - val_AUC: 0.7490

Epoch 26/30

569/569 [==============================] - 0s 70us/sample - loss: 0.5311 - AUC: 0.8107 - val_loss: 0.5891 - val_AUC: 0.7562

Epoch 27/30

569/569 [==============================] - 0s 74us/sample - loss: 0.5247 - AUC: 0.8214 - val_loss: 0.5812 - val_AUC: 0.7598

Epoch 28/30

569/569 [==============================] - 0s 65us/sample - loss: 0.5203 - AUC: 0.8243 - val_loss: 0.5787 - val_AUC: 0.7609

Epoch 29/30

569/569 [==============================] - 0s 69us/sample - loss: 0.5176 - AUC: 0.8244 - val_loss: 0.5759 - val_AUC: 0.7610

Epoch 30/30

569/569 [==============================] - 0s 59us/sample - loss: 0.5142 - AUC: 0.8317 - val_loss: 0.5836 - val_AUC: 0.7702
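The 569/143 split reported at the top of the log follows from `validation_split=0.2` applied to the 712 training rows. Keras takes the validation samples from the end of the data, before any shuffling. The sketch below reproduces the numbers under the assumption that the split index is the training fraction times the sample count, rounded down, which is consistent with the log:

```python
n_samples = 712          # rows in x_train
validation_split = 0.2

# Rows before split_at are used for training, the rest for validation.
split_at = int(n_samples * (1 - validation_split))
n_train, n_val = split_at, n_samples - split_at
print(n_train, n_val)  # 569 143
```

Because the held-out rows are simply the last ones, data that is sorted in some meaningful way should be shuffled before calling fit with `validation_split`.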

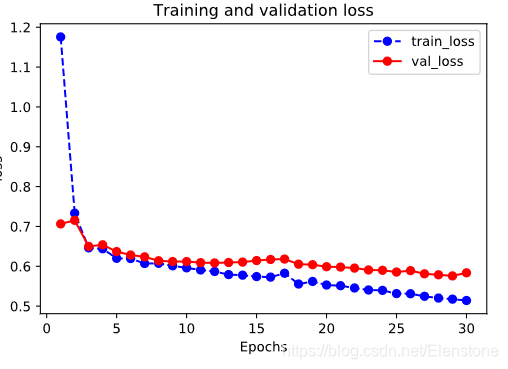

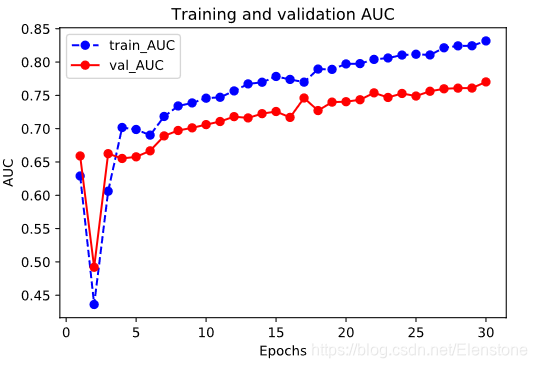

4 评估模型

我们首先评估一下模型在训练集和验证集上的效果。

%matplotlib inline

%config InlineBackend.figure_format = 'svg'

import matplotlib.pyplot as plt

def plot_metric(history, metric):

train_metrics = history.history[metric]

val_metrics = history.history['val_'+metric]

epochs = range(1, len(train_metrics) + 1)

plt.plot(epochs, train_metrics, 'bo--')

plt.plot(epochs, val_metrics, 'ro-')

plt.title('Training and validation '+ metric)

plt.xlabel("Epochs")

plt.ylabel(metric)

plt.legend(["train_"+metric, 'val_'+metric])

plt.show()

plot_metric(history,"loss")

plot_metric(history,"AUC")

Next, we look at how the model performs on the test set.

model.evaluate(x = x_test,y = y_test)

179/179 [==============================] - 0s 67us/sample - loss: 0.5062 - AUC: 0.8309

[0.5062098619658187, 0.8309387]
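The second number returned by evaluate is the AUC. One way to read it: the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one. The sketch below computes that pairwise definition exactly with NumPy on six made-up labels and scores. The Keras metric approximates the same quantity using a fixed set of thresholds, so its value can differ slightly.

```python
import numpy as np

# Hypothetical labels and predicted probabilities.
y_true = np.array([0, 0, 1, 1, 0, 1])
scores = np.array([0.1, 0.4, 0.35, 0.8, 0.2, 0.7])

pos = scores[y_true == 1]
neg = scores[y_true == 0]

# Compare every positive score with every negative score; ties count as half.
diff = pos[:, None] - neg[None, :]
auc = ((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size
print(auc)
```

Here 8 of the 9 positive/negative pairs are ordered correctly, so the AUC is 8/9.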

5 Use the model

# Predict probabilities

model.predict(x_test[0:10])

#model(tf.constant(x_test[0:10].values,dtype = tf.float32)) # equivalent form

array([[0.15356979],

[0.36305907],

[0.3694313 ],

[0.6562717 ],

[0.41980737],

[0.502912 ],

[0.1906718 ],

[0.5757565 ],

[0.43784416],

[0.15257531]], dtype=float32)

# Predict classes

model.predict_classes(x_test[0:10])

array([[0],

[0],

[0],

[1],

[0],

[1],

[0],

[1],

[0],

[0]])
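`predict_classes` was removed from tf.keras in later TensorFlow 2 releases. For a single sigmoid output it amounts to thresholding the predicted probability at 0.5, which can be sketched with NumPy on the probabilities printed above (in practice `probs` would be the result of `model.predict(x_test[0:10])`):

```python
import numpy as np

# Probabilities copied from the model.predict output shown earlier.
probs = np.array([[0.15356979], [0.36305907], [0.3694313], [0.6562717],
                  [0.41980737], [0.502912], [0.1906718], [0.5757565],
                  [0.43784416], [0.15257531]], dtype="float32")

# Class 1 when the predicted survival probability exceeds 0.5.
classes = (probs > 0.5).astype("int32")
print(classes.ravel())  # [0 0 0 1 0 1 0 1 0 0]
```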

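6 Save the model

A model can be saved either in the Keras format or in TensorFlow's native format. The former is only suitable for restoring the model in a Python environment, whereas the latter supports cross-platform deployment.

The latter is the recommended way to save a model.

1. Saving in the Keras format

# Save the model architecture and weights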

model.save('./data/keras_model.h5')

del model # delete the existing model

# identical to the previous one

model = models.load_model('./data/keras_model.h5')

model.evaluate(x_test,y_test)

179/179 [==============================] - 0s 920us/sample - loss: 0.5062 - AUC: 0.8309

[0.5062098619658187, 0.8309387]

# Save the model architecture

json_str = model.to_json()

# Save the model weights

model.save_weights('./data/keras_model_weight.h5')

# Restore the model architecture

model_json = models.model_from_json(json_str)

model_json.compile(

    optimizer='adam',

    loss='binary_crossentropy',

    metrics=['AUC']

)

# Load the weights

model_json.load_weights('./data/keras_model_weight.h5')

model_json.evaluate(x_test,y_test)

179/179 [==============================] - 0s 920us/sample - loss: 0.5062 - AUC: 0.8309

[0.5062098619658187, 0.8309387]

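2. Saving in TensorFlow's native format

# Save the weights; this only saves the weight tensors

model.save_weights('./data/tf_model_weights.ckpt',save_format = "tf")

# Save the model architecture and parameters to disk; a model saved this way is cross-platform and easy to deploy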

model.save('./data/tf_model_savedmodel', save_format="tf")

print('export saved model.')

model_loaded = tf.keras.models.load_model('./data/tf_model_savedmodel')

model_loaded.evaluate(x_test,y_test)

WARNING:tensorflow:From D:\anaconda3\lib\site-packages\tensorflow_core\python\ops\resource_variable_ops.py:1786: calling BaseResourceVariable.__init__ (from tensorflow.python.ops.resource_variable_ops) with constraint is deprecated and will be removed in a future version.

Instructions for updating:

If using Keras pass *_constraint arguments to layers.

INFO:tensorflow:Assets written to: ./data/tf_model_savedmodel\assets

export saved model.

179/179 [==============================] - 0s 1ms/sample - loss: 0.5062 - AUC: 0.8309

[0.5062096395306082, 0.8309387]

See my GitHub for the full project.