Simulating browser HTTP requests with Python

1. Using urllib2

#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Python 2 only: urllib2 became urllib.request in Python 3.
import urllib2

url = "https://www.baidu.com"
req_header = {
    "User-Agent": "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11",
    "Accept": "text/html;q=0.9,*/*;q=0.8",
    "Accept-Charset": "ISO-8859-1,utf-8;q=0.7,*;q=0.3",
    # urllib2 does not decompress gzip responses, so ask for an uncompressed body.
    "Accept-Encoding": "identity",
    "Connection": "close",
    # Note: if the page still cannot be fetched, add a "Referer" header set to
    # the target site's address. Do not pass None as a header value.
}
req_timeout = 5
req = urllib2.Request(url, None, req_header)
resp = urllib2.urlopen(req, None, req_timeout)
html = resp.read()
print(html)
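
The script above runs only on Python 2, where urllib2 lives. As a rough Python 3 sketch of the same request, the standard-library urllib.request module can be used. The helper names build_request, decode_body, and fetch are made up for this illustration, and the gzip handling is an assumption about how one might deal with a server that honours "Accept-Encoding: gzip".

```python
import gzip
import urllib.request

BROWSER_HEADERS = {
    "User-Agent": "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.11 "
                  "(KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11",
    "Accept": "text/html;q=0.9,*/*;q=0.8",
    "Accept-Encoding": "gzip",
    "Connection": "close",
}


def build_request(url, referer=None):
    """Build a Request carrying browser-like headers (hypothetical helper)."""
    headers = dict(BROWSER_HEADERS)
    if referer is not None:
        # Only send Referer when there is a real value to send.
        headers["Referer"] = referer
    return urllib.request.Request(url, data=None, headers=headers)


def decode_body(raw, content_encoding):
    """Undo gzip compression when the server reports that it applied it."""
    if (content_encoding or "").lower() == "gzip":
        return gzip.decompress(raw)
    return raw


def fetch(url, timeout=5):
    """Send the request and return the decompressed body as bytes."""
    req = build_request(url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return decode_body(resp.read(), resp.headers.get("Content-Encoding"))
```

Calling fetch("https://www.baidu.com") would then return the page as bytes; turning those bytes into text depends on the charset the server declares.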

2. Using the requests module

(1) GET request

# -*- coding: utf-8 -*-
import requests

url = "https://www.baidu.com"
payload = {"key1": "value1", "key2": "value2"}
# params is URL-encoded and appended to the URL as a query string
r = requests.get(url, params=payload)
print(r.text)
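
To see what the params argument does without touching the network, one can prepare the request and look at the resulting URL. This is only a small sketch; the dictionary is the same placeholder payload as above.

```python
import requests

url = "https://www.baidu.com"
payload = {"key1": "value1", "key2": "value2"}

# Preparing a request builds the final URL and headers but sends nothing.
prepared = requests.Request("GET", url, params=payload).prepare()
final_url = prepared.url

# The payload has been URL-encoded and appended as a query string.
print(final_url)
```

After a real requests.get call, the same value is available as r.url.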

(2) POST request

#-*- coding:utf-8 -*-

import requests

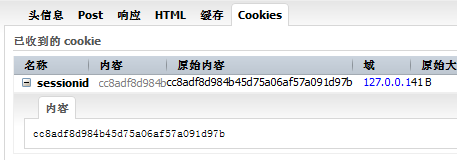

url1 = "http://www.exanple.com/login"#登陆地址 url2 = "http://www.example.com/main"#需要登陆才能访问的地址 data={"user":"user","password":"pass"} headers = { "Accept":"text/html,application/xhtml+xml,application/xml;", "Accept-Encoding":"gzip", "Accept-Language":"zh-CN,zh;q=0.8", "Referer":"http://www.example.com/", "User-Agent":"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/42.0.2311.90 Safari/537.36" } res1 = requests.post(url1, data=data, headers=headers) res2 = requests.get(url2, cookies=res1.cookies, headers=headers) print res2.content#获得二进制响应内容 print res2.raw#获得原始响应内容,需要stream=True print res2.raw.read(50) print type(res2.text)#返回解码成unicode的内容 print res2.url print res2.history#追踪重定向 print res2.cookies print res2.cookies["example_cookie_name"] print res2.headers print res2.headers["Content-Type"] print res2.headers.get("content-type") print res2.json#讲返回内容编码为json print res2.encoding#返回内容编码 print res2.status_code#返回http状态码 print res2.raise_for_status()#返回错误状态码
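
Two of the calls above are easy to misuse: json is a method rather than an attribute, and raise_for_status() raises an exception rather than returning a code. One possible way to wrap them is sketched below; summarize and json_or_none are hypothetical helper names, not part of requests.

```python
import requests


def summarize(resp):
    """Collect the fields most often inspected on a requests.Response."""
    return {
        "status": resp.status_code,
        # Header lookup is case-insensitive.
        "content_type": resp.headers.get("content-type"),
        "redirects": [r.status_code for r in resp.history],
        "encoding": resp.encoding,
    }


def json_or_none(resp):
    """Return the decoded JSON body, or None on an HTTP error or a non-JSON body."""
    try:
        resp.raise_for_status()  # raises requests.HTTPError for 4xx/5xx
        return resp.json()       # raises a ValueError subclass if the body is not JSON
    except (requests.HTTPError, ValueError):
        return None
```

With a response such as res2 from the example above, summarize(res2) gives a quick overview, and json_or_none(res2) avoids an unhandled exception when the protected page returns HTML instead of JSON.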

(3) Using a Session object

# -*- coding: utf-8 -*-
import requests

s = requests.Session()
url1 = "http://www.example.com/login"  # login URL
url2 = "http://www.example.com/main"   # URL that can only be accessed after login
data = {"user": "user", "password": "pass"}
headers = {
    "Accept": "text/html,application/xhtml+xml,application/xml",
    "Accept-Encoding": "gzip",
    "Accept-Language": "zh-CN,zh;q=0.8",
    "Referer": "http://www.example.com/",
    "User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/42.0.2311.90 Safari/537.36",
}
# s.prepare_request() applies session state such as cookies.
# requests.Request(...).prepare() also returns a PreparedRequest, but without
# that state, so the login cookie would not be sent with the second request.
req1 = requests.Request("POST", url1, data=data, headers=headers)
prepped1 = s.prepare_request(req1)
# do something with prepped1.body
# do something with prepped1.headers
s.send(prepped1)  # cookies set by the login response are stored in the session
req2 = requests.Request("GET", url2, headers=headers)
prepped2 = s.prepare_request(req2)
res2 = s.send(prepped2)
print(res2.content)

Another way to write it:

# -*- coding: utf-8 -*-
import requests

s = requests.Session()
url1 = "http://www.example.com/login"  # login URL
url2 = "http://www.example.com/main"   # page that can only be accessed after login
data = {"user": "user", "password": "pass"}
headers = {
    "Accept": "text/html,application/xhtml+xml,application/xml",
    "Accept-Encoding": "gzip",
    "Accept-Language": "zh-CN,zh;q=0.8",
    "Referer": "http://www.example.com/",
    "User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/42.0.2311.90 Safari/537.36",
}
s.headers.update(headers)  # send these headers with every request made through the session
res1 = s.post(url1, data=data)
res2 = s.get(url2)
print(res2.content)
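
The reason to prefer a Session is that headers set on it and cookies stored in it are applied to every later request. The sketch below illustrates this without sending anything: it places a cookie in the session by hand to stand in for the one a login response would set (the cookie name sessionid and its value are invented for the example), then prepares a request through the session.

```python
import requests

s = requests.Session()

# Headers set on the session are merged into every request it prepares.
s.headers.update({"Accept-Language": "zh-CN,zh;q=0.8"})

# Stand-in for the cookie a successful login would store in the session.
s.cookies.set("sessionid", "abc123", domain="www.example.com", path="/")

# prepare_request() applies session state; nothing is sent over the network.
req = requests.Request("GET", "http://www.example.com/main")
prepared = s.prepare_request(req)

print(prepared.headers["Accept-Language"])
print(prepared.headers["Cookie"])

# A bare Request.prepare() knows nothing about the session,
# so no Cookie header is attached.
bare = req.prepare()
print("Cookie" in bare.headers)
```

This is why mixing requests.Request(...).prepare() with s.send() can appear to "lose" a login: the cookie is stored in the session but never attached to the next request.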

3. Other request methods

>>> r = requests.put("http://httpbin.org/put")
>>> r = requests.delete("http://httpbin.org/delete")
>>> r = requests.head("http://httpbin.org/get")
>>> r = requests.options("http://httpbin.org/get")
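
These helpers differ only in the HTTP method they send. As a sketch of what each call would put on the wire, the loop below prepares (but does not send) one request per method against the same httpbin.org URLs. The JSON body on the PUT is an invented example.

```python
import requests

calls = [
    ("PUT", "http://httpbin.org/put", {"json": {"name": "demo"}}),
    ("DELETE", "http://httpbin.org/delete", {}),
    ("HEAD", "http://httpbin.org/get", {}),
    ("OPTIONS", "http://httpbin.org/get", {}),
]

prepared = {}
for method, url, kwargs in calls:
    # Request(...).prepare() builds the method, URL, headers and body locally.
    prepared[method] = requests.Request(method, url, **kwargs).prepare()

for method, p in prepared.items():
    print(method, p.url, p.headers.get("Content-Type"), p.body)
```

Only the PUT carries a body and a Content-Type header here; the other three are sent without one.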
