Partitioning Phone Numbers by Carrier

—————— —————— —————— —————— —————— —————— —————— —————— ——————

1. Requirement:

Write the results to separate output files according to each phone number's carrier (i.e. partition the output).

2. Data preparation:

Save the following data as a new file named phone.txt for use in the experiment.

```text
13596384305 18120518079 17560937269 15984545149 15371504522 13216078541 15039218857 14966563207 15627931768
15794900024 17302818676 18588110678 13522606930 13331962187 13266141372 13957395113 17337027119 13277392000
19803739787 17769861692 18646423152 15274577077 18112778710 18656385914 15276175595 14977991593 15699540800
18700387119 18012222346 15657741120 18785486871 18957727664 16632366931 13906414105 18004020243 17177353555
18895204970 19955274225 17169892365 18289084399 18002309511 15542059692 15210544320 17316780203 13127652125
15762798113 17347608390 18699077463 18789877280 19918341816 18688672020 15029599206 18136480764 13109117921
17842227875 14934645545 17130441359 18307730687 18133817256 13158020448 15887143679 13368527063 17558322272
19828498697 18101069025 18669756355 17250447425 18915634171 15561315675 13558592392 19938147907 16698748564
18493864567 18021715196 15698519484 15821283278 15333870685 18602348046 13470675456 13345176062 18504593663
15931069035 18153883784 18533464338 15708819040 18087615934 13267832146 17246214023 19928282730 13039769031
19832925459 18069458272 18675345626 18309262835 17394140304 15697350636 15981577508 14918516144 18509593532
14723178400 15338618250 14506355448 18378849132 14978570389 13171827211 15190759180 14939378893 17678611363
18276464861 17316924210 17619746208 18240392162 17735926481 13089053525 15051685601 14983278383 16694112385
13990785243 14967730107 17500967117 14784758100 14957120595 14586828314 18719933674 18965210364 15614411904
18899992193 19999689217 17587716111 18323178657 13359025688 16693882775 18486004670 18907800792 15519643539
18231221899 17770601274 16684947697 19895588843 19968499294 17175302648 13411916606 18941294548 18598801126
15240612069 17761783260 18528800901 18749836044 13394523364 15584060794 13489642440 19958713538 18542519760
17215272969 14954868327 17544460346 15054994907 15379003974 13201162619 15761207384 18006875786 14580503458
18796594254 19978267009 13060049409 15928835339 17793030812 17518533606 13914328389 18166327725 17657998156
18468089905 15362218447 13074674349 18781906774 13323110966 18696735583 15881635791 18002710993 16678146395
18798622520 13342462903 17624051009 13619974356 13345374749 16609897105 13534435118 17310517467 18684928878
18816788389 18150702483 15589059984 18268183143 17339431604 17684578452 15213024210 15320908042 18504243191
18276232474 19924361496 16667941657 17830537213 19972416264 13133289015 18781478018 17303851613 18610761576
13601236152 13306041324 15585810920 15728289812 14976393553 13133930748 17260889588 19928454826 16698461428
18378640009 15310568124 17613020765 15856030053 13303211371 18514500039 15239098767 14913286017 15549864250
13661804373 13362483514 13144172580 19839437317 18939024838 13267863655 13988340281 17779984815 14501820426
13955709319 18109650540 16609379924 13950592686 18096692723 13048403197 17883089865 19900010711 18682813221
13707834672 13376938885 17696205075 18405508115 15355176387 16685364017 13485027433 19989019319 18521274563
14755603203 15354971321 18698317292 18861952012 14922627399 14560984629 18738805940 17379308868 15524566417
19893571875 17736884909 18517485309 18432197994 14953924544 14546638173 18782001497 18914942280 13120736146
17817113487 14968830686 17124902753 18215300962 18080192994 18629313731 15275178182 14989482692 17597151032
18458913654 15345590005 18527480894 13687401805 18044910572 14540746538 18758578786 15349587757 17688166906
13403952189 18905774163 15517295783 13588213252 18925096782 16690464612 15852991384 18920506941 18653866356
19863534636 18063057464 17633620912 13983472479 14943136701 16645957840 15100868980 17348103678 18550141690
15856263960 18914763877 13122433047 18318765493 17744555261 17563106187 18761984703 15324055076 13067936551
15812491707 15320360120 13168487176 18413155528 14981830768 16609758334 15247942502 18168247917 17195770459
```

3. Analysis:

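(1) MapReduce sends every key-value pair emitted by the map phase to a reduce task; all pairs with the same key go to the same reduce task, where they are grouped together. The default distribution rule is: the key's hash code modulo the number of reduce tasks.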
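A minimal plain-Java model of that default rule, with no Hadoop dependency. Hadoop's `HashPartitioner` computes `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks`; the mask clears the sign bit so a negative hash code cannot produce a negative partition number. The sketch substitutes `String.hashCode()` for `Text.hashCode()` (which hashes the UTF-8 bytes and yields different values), so it shows the shape of the rule rather than the exact partition Hadoop would choose. The class `HashPartitionSketch` is hypothetical.

```java
// Hypothetical plain-Java model of the default hash partitioning rule (no Hadoop dependency).
// String.hashCode() stands in for Text.hashCode(), so the concrete numbers differ from Hadoop's.
class HashPartitionSketch {

    static int partitionFor(String key, int numReduceTasks) {
        // Clear the sign bit so a negative hash code still maps into [0, numReduceTasks)
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        String[] keys = {"13596384305", "18120518079", "17560937269", "15794900024"};
        for (String k : keys) {
            System.out.println(k + " -> reduce task " + partitionFor(k, 3));
        }
    }
}
```

Because the result depends only on the hash, numbers from the same carrier are scattered across reduce tasks, which is why a custom partitioner is needed for this requirement.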

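(2) To distribute the data by our own rule instead, we replace this distribution (partitioning) component, the Partitioner: define a custom class, here called CustomPartitioner, that extends the abstract class Partitioner and overrides getPartition.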

(3) In the job driver, register the custom partitioner: job.setPartitionerClass(CustomPartitioner.class)
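To make steps (1) to (3) concrete without a cluster, here is a rough local model of the data flow in plain Java, under clearly stated assumptions: "map" splits each line into phone numbers, a carrier function picks the partition from the first three digits, and a sorted set per partition stands in for the shuffle sort plus a reducer that writes each key once. The class `LocalPartitionModel` and its methods are hypothetical, the prefix lists are copied from the PhionPartition class in section 4, and nothing here uses the Hadoop API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical local model of map -> custom partition -> sort/group -> reduce.
// Plain Java only; it imitates the data flow, not the Hadoop API.
class LocalPartitionModel {

    // China Mobile (YD) and China Unicom (LT) prefixes, as listed in PhionPartition
    static final Set<String> YD = new HashSet<>(Arrays.asList(
            "134", "135", "136", "137", "138", "139", "150", "151", "152", "157", "158",
            "159", "188", "187", "182", "183", "184", "178", "147", "172", "198"));
    static final Set<String> LT = new HashSet<>(Arrays.asList(
            "130", "131", "132", "145", "155", "156", "166", "171", "175", "176", "185", "186"));

    // Same decision order as PhionPartition: mobile -> 0, unicom -> 1, everything else -> 2
    static int carrierPartition(String phone) {
        String prefix = phone.length() >= 3 ? phone.substring(0, 3) : phone;
        if (YD.contains(prefix)) {
            return 0;
        }
        if (LT.contains(prefix)) {
            return 1;
        }
        return 2;
    }

    // Returns one sorted, de-duplicated "output file" per partition
    static List<TreeSet<String>> run(List<String> lines) {
        List<TreeSet<String>> outputs = new ArrayList<>();
        for (int i = 0; i < 3; i++) {
            // A TreeSet stands in for the shuffle sort plus a reducer that writes each key once
            outputs.add(new TreeSet<>());
        }
        for (String line : lines) {
            // "map": split the line into phone numbers
            for (String phone : line.trim().split("\\s+")) {
                if (phone.isEmpty()) {
                    continue;
                }
                // "partition": route the number to its carrier's output
                outputs.get(carrierPartition(phone)).add(phone);
            }
        }
        return outputs;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "13596384305 18120518079 17560937269",
                "",
                "15794900024\t17302818676\t18588110678 13596384305");
        List<TreeSet<String>> out = run(lines);
        for (int i = 0; i < out.size(); i++) {
            System.out.println("part-r-0000" + i + ": " + out.get(i));
        }
    }
}
```

For the sample lines, this prints `[13596384305, 15794900024]` for partition 0, `[17560937269, 18588110678]` for partition 1 and `[17302818676, 18120518079]` for partition 2; the repeated 13596384305 appears only once.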

4. Implementation:

Create four classes:

PartitionMapper.java

PhionPartition.java

PartitionReduce.java

PartitionDriver.java

(1) PartitionMapper class:

```java
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/**
 * Author : 若清 and wgh
 * Version : 2020/4/13 & 1.0
 */
public class PartitionMapper extends Mapper<LongWritable, Text, Text, NullWritable> {

    // Reused output key to avoid allocating a new Text per phone number
    private final Text outKey = new Text();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // 1. Get one line of input
        String line = value.toString().trim();

        // 2. Split on any run of whitespace (tabs or spaces) and emit each phone number as a key
        for (String phone : line.split("\\s+")) {
            if (phone.isEmpty()) {
                continue; // a blank line yields a single empty token
            }
            outKey.set(phone);
            context.write(outKey, NullWritable.get());
        }
    }
}
```
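The listing in section 2 separates numbers with spaces, a file prepared elsewhere may use tabs, and blank lines are easy to copy in by accident. A minimal standalone sketch of a whitespace-tolerant split: `trim()` followed by `split("\\s+")`, skipping the empty token a blank line produces. The class `TokenizeSketch` and method `tokens` are hypothetical and use plain Java only.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper mirroring the tokenizing step of a mapper; plain Java, no Hadoop.
class TokenizeSketch {

    static List<String> tokens(String line) {
        List<String> result = new ArrayList<>();
        // "\\s+" matches runs of spaces and tabs; trim() drops leading/trailing whitespace
        for (String t : line.trim().split("\\s+")) {
            if (!t.isEmpty()) { // a blank line splits into a single empty string
                result.add(t);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        String spaced = "13596384305 18120518079  17560937269";
        System.out.println(tokens(spaced));            // [13596384305, 18120518079, 17560937269]
        System.out.println(spaced.split("\t").length); // 1: the whole line stays one token
        System.out.println(tokens(""));                // []
    }
}
```

A tab-only split applied to space-separated data hands an entire row to the partitioner as one key, so the row would be routed by the first three digits of its first number only.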

(2) PhionPartition class:

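```java
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Partitioner;

import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

/**
 * Author : 若清 and wgh
 * Version : 2020/4/13 & 1.0
 */
public class PhionPartition extends Partitioner<Text, NullWritable> {

    // 1. China Mobile (YD) prefixes (first three digits)
    public static final Set<String> YD = new HashSet<>(Arrays.asList(
            "134", "135", "136", "137", "138", "139",
            "150", "151", "152", "157", "158", "159",
            "188", "187", "182", "183", "184", "178",
            "147", "172", "198"));

    // 2. China Telecom (DX) prefixes
    public static final Set<String> DX = new HashSet<>(Arrays.asList(
            "133", "149", "153", "173", "177", "180",
            "181", "189", "199"));

    // 3. China Unicom (LT) prefixes (each listed once)
    public static final Set<String> LT = new HashSet<>(Arrays.asList(
            "130", "131", "132", "145", "155", "156",
            "166", "171", "175", "176", "185", "186"));

    @Override
    public int getPartition(Text text, NullWritable nullWritable, int numPartitions) {
        String phone = text.toString();
        // Guard against keys shorter than three characters before taking the prefix
        String prefix = phone.length() >= 3 ? phone.substring(0, 3) : phone;

        // 4. Decide which carrier the first three digits belong to
        if (YD.contains(prefix)) {
            return 0; // China Mobile -> part-r-00000
        } else if (LT.contains(prefix)) {
            return 1; // China Unicom -> part-r-00001
        } else {
            return 2; // DX prefixes, plus any prefix not in the three lists -> part-r-00002
        }
    }
}
```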
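Since the carrier is decided purely by prefix lookups, it may be worth checking the tables offline before running the job: a prefix listed twice in one table (for example a repeated `"166"`) is harmless but hints at a copy-paste slip, while a prefix listed under two carriers would silently go to whichever check runs first in getPartition. A minimal sketch of such a check, assuming the three prefix lists shown above; the class `PrefixTableCheck` and method `problems` are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical offline sanity check for the carrier prefix tables; plain Java, no Hadoop.
class PrefixTableCheck {

    static final String[] YD = {"134", "135", "136", "137", "138", "139", "150", "151", "152",
            "157", "158", "159", "188", "187", "182", "183", "184", "178", "147", "172", "198"};
    static final String[] DX = {"133", "149", "153", "173", "177", "180", "181", "189", "199"};
    static final String[] LT = {"130", "131", "132", "145", "155", "156", "166", "171", "175",
            "176", "185", "186"};

    // Reports every prefix that appears more than once, within one table or across tables
    static List<String> problems(Map<String, String[]> tables) {
        Map<String, String> owner = new HashMap<>();
        List<String> found = new ArrayList<>();
        for (Map.Entry<String, String[]> table : tables.entrySet()) {
            for (String prefix : table.getValue()) {
                String previous = owner.putIfAbsent(prefix, table.getKey());
                if (previous != null) {
                    found.add(prefix + " listed under " + previous + " and " + table.getKey());
                }
            }
        }
        return found;
    }

    public static void main(String[] args) {
        Map<String, String[]> tables = new LinkedHashMap<>();
        tables.put("YD", YD);
        tables.put("DX", DX);
        tables.put("LT", LT);
        System.out.println(problems(tables)); // prints [] when no prefix is repeated
    }
}
```

Adding a second `"166"` to the LT table, for instance, makes `problems` report `166 listed under LT and LT`.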

(3) PartitionReduce class:

```java
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**
 * Author : 若清 and wgh
 * Version : 2020/4/13 & 1.0
 */
public class PartitionReduce extends Reducer<Text, NullWritable, Text, NullWritable> {

    @Override
    protected void reduce(Text key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {
        // reduce() is called once per distinct phone number, so writing the key once removes duplicates
        context.write(key, NullWritable.get());
    }
}
```

(4) PartitionDriver class:

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**

* Author : 若清 and wgh

* Version : 2020/4/13 & 1.0

*/

public class PartitionDriver {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

args = new String[]{"D:\\input\\plus\\input\\phone.txt","D:\\input\\plus\\output\\0818"};

//实例化配置文件

Configuration configuration = new Configuration();

//定义一个job任务

Job job = Job.getInstance(new Configuration());

//配置job的信息

job.setJarByClass(PartitionDriver.class);

//配置mapper的类及输出数据的类型

job.setMapperClass(PartitionMapper.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(NullWritable.class);

//设置map预合并

job.setCombinerClass(PartitionReduce.class);

//自定义分区,判断手机号的前三位属于哪个运营商来分区

job.setPartitionerClass(PhionPartition.class);

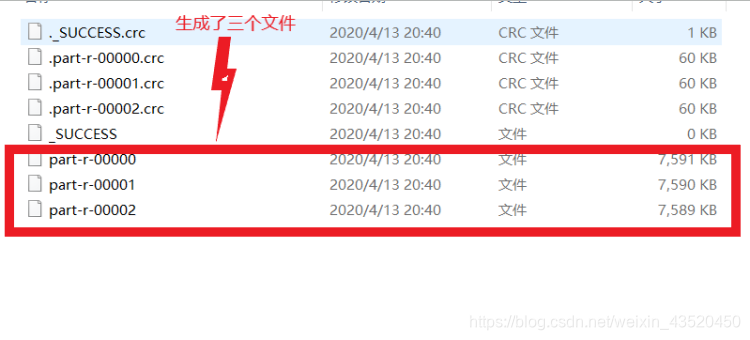

job.setNumReduceTasks(3); //**这里设置了三个ReduceTask,分别代表三个分区**

//输入数据的路径

FileInputFormat.setInputPaths(job,new Path(args[0]));

//输出数据的路径

FileOutputFormat.setOutputPath(job,new Path(args[1]));

//提交任务

job.waitForCompletion(true);

}

}5.运行结果:

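Notes: 1. If the number of reduce tasks is greater than the number of distinct values getPartition returns, the extra reduce tasks still run and produce empty output files (part-r-000xx).

2. If 1 < number of reduce tasks < number of distinct values getPartition returns, some records are assigned a partition that has no reduce task, and the job fails with an exception (java.io.IOException: Illegal partition for ...).

3. If the number of reduce tasks is 1, the custom partitioner is not used: all map output goes to that single reduce task, and only one output file, part-r-00000, is produced.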
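The three notes can be summarized as a small decision function. This is a hypothetical plain-Java model, not Hadoop code: `partitionsUsed` is assumed to be the number of distinct values getPartition actually returns (3 for the carrier partitioner), and every one of those partitions is assumed to receive at least one record. The class `ReduceTaskCountRules` is illustrative only, and jobs with zero reduce tasks are out of scope.

```java
// Hypothetical model of notes 1-3; plain Java, not part of the Hadoop API.
// Assumes every partition number in [0, partitionsUsed) is actually produced by getPartition.
class ReduceTaskCountRules {

    static String outcome(int partitionsUsed, int numReduceTasks) {
        if (numReduceTasks < 1) {
            throw new IllegalArgumentException("this model only covers jobs with at least one reduce task");
        }
        if (numReduceTasks == 1) {
            // Note 3: the custom partitioner is bypassed; everything goes to the single reducer
            return "one output file: part-r-00000";
        }
        if (numReduceTasks < partitionsUsed) {
            // Note 2: some records get a partition number with no reduce task behind it
            return "job fails: illegal partition";
        }
        // Note 1: reduce tasks beyond the partitions in use write empty files
        int empty = numReduceTasks - partitionsUsed;
        return numReduceTasks + " output files, " + empty + " of them empty";
    }

    public static void main(String[] args) {
        for (int n = 1; n <= 5; n++) {
            System.out.println(n + " reduce task(s): " + outcome(3, n));
        }
    }
}
```

With the carrier partitioner, 3 is the only multi-task setting that yields exactly one non-empty file per carrier and no empty files.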