I. Requirements

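We have a large number of texts (documents, web pages) and need to build a search index over them. An inverted index maps each word to the files that contain it; here it also records how many times the word occurs in each file.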

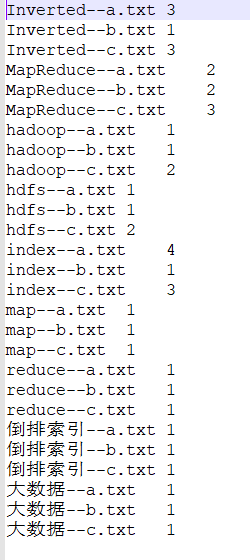
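The structure that the MapReduce jobs below build can be sketched on a single machine as a map from word to (file name → count). This is a minimal illustration only, not the author's code; the class `InMemoryInvertedIndex` and its methods are hypothetical names.

```java
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical in-memory sketch of an inverted index: word -> (file name -> occurrence count). */
public class InMemoryInvertedIndex {

    // TreeMap keeps words and file names sorted, similar to the sorted keys a MapReduce shuffle produces.
    private final Map<String, Map<String, Integer>> index = new TreeMap<>();

    /** Splits one document on whitespace and records every word occurrence for that file. */
    public void addDocument(String fileName, String text) {
        for (String word : text.split("\\s+")) {
            if (word.isEmpty()) {
                continue; // leading whitespace yields an empty first token
            }
            index.computeIfAbsent(word, w -> new TreeMap<>())
                 .merge(fileName, 1, Integer::sum);
        }
    }

    /** Returns file -> count for a word, or an empty map if the word never occurs. */
    public Map<String, Integer> lookup(String word) {
        return index.getOrDefault(word, new TreeMap<>());
    }

    public static void main(String[] args) {
        InMemoryInvertedIndex idx = new InMemoryInvertedIndex();
        idx.addDocument("a.txt", "map reduce MapReduce\nInverted index");
        idx.addDocument("b.txt", "hadoop MapReduce\nInverted index index");
        System.out.println(idx.lookup("index"));  // prints {a.txt=1, b.txt=2}
        System.out.println(idx.lookup("hadoop")); // prints {b.txt=1}
    }
}
```

On a single machine one map is enough; the MapReduce version splits the same work into two jobs so that it scales across many files and nodes.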

(1)第一次预期输出结果:

itstar--a.txt 3

itstar--b.txt 2

itstar--c.txt 2

pingping--a.txt 1

pingping--b.txt 3

pingping--c.txt 1

ss--a.txt 2

ss--b.txt 1

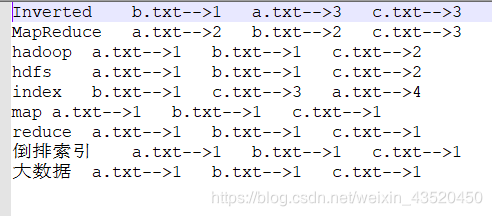

ss--c.txt 1(2)第二次预期输出结果:

itstar c.txt-->2 b.txt-->2 a.txt-->3

pingping c.txt-->1 b.txt-->3 a.txt-->1

ss c.txt-->1 b.txt-->1 a.txt-->2 (3)第三次预期输出结果:

使用多job串联的方法,达到在一次处理中得到两个文件

II. Data Import

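Simply create three .txt files in the job's input directory (`D:\input\plus\input\index` in the drivers below). The Chinese tokens 倒排索引 ("inverted index") and 大数据 ("big data") are part of the sample data and are kept as-is.

a.txt

```
map
reduce
MapReduce
index Inverted index
Inverted index
倒排索引
大数据
hadoop MapReduce hdfs
Inverted index
```

b.txt

```
hadoop
MapReduce
hdfs Inverted
index 倒排索引
大数据 map
reduce MapReduce
```

c.txt

```
Inverted index
倒排索引
大数据
hadoop MapReduce hdfs
Inverted index
hadoop MapReduce hdfs
Inverted index
map
reduce
MapReduce
```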

III. Code Implementation

(1) First pass: write an IndexDeriveOne class:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class IndexDeriveOne {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        // Hard-coded local paths for testing; remove this line to take the paths from the command line
        args = new String[]{"D:\\input\\plus\\input\\index", "D:\\input\\plus\\output\\indexone"};

        Configuration conf = new Configuration();
        Job job1 = Job.getInstance(conf);
        job1.setJarByClass(IndexDeriveOne.class);
        job1.setMapperClass(IndexMapperOne.class);
        job1.setReducerClass(IndexReduceOne.class);
        job1.setMapOutputKeyClass(Text.class);
        job1.setMapOutputValueClass(IntWritable.class);
        job1.setOutputKeyClass(Text.class);
        job1.setOutputValueClass(IntWritable.class);

        // Delete the output directory if it already exists; otherwise the job refuses to start
        Path path = new Path(args[1]);
        FileSystem fs = FileSystem.get(conf);
        if (fs.exists(path)) {
            fs.delete(path, true);
        }

        FileInputFormat.setInputPaths(job1, new Path(args[0]));
        FileOutputFormat.setOutputPath(job1, path);
        System.exit(job1.waitForCompletion(true) ? 0 : 1);
    }
}

/**
 * Mapper:
 * 1. gets the name of the file the current split belongs to;
 * 2. splits every line into words;
 * 3. emits ("word--fileName", 1) so the reducer can count each word per file.
 */
class IndexMapperOne extends Mapper<LongWritable, Text, Text, IntWritable> {

    private String name;
    private final Text k = new Text();
    private final IntWritable v = new IntWritable(1);

    @Override
    protected void setup(Context context) throws IOException, InterruptedException {
        // Get the input split; with FileInputFormat it is a FileSplit
        FileSplit fileSplit = (FileSplit) context.getInputSplit();
        // Get the file name
        name = fileSplit.getPath().getName();
    }

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Split on runs of whitespace; split(" ") would produce empty tokens for repeated spaces
        String[] words = value.toString().trim().split("\\s+");
        for (String s : words) {
            if (s.isEmpty()) {
                continue; // an empty line produces a single empty token
            }
            // Join the word and the file name as the output key, e.g. MapReduce--a.txt
            k.set(s + "--" + name);
            context.write(k, v);
        }
    }
}

/**
 * Reducer: sums the values for each "word--fileName" key, i.e. counts each word per file.
 */
class IndexReduceOne extends Reducer<Text, IntWritable, Text, IntWritable> {

    private final IntWritable result = new IntWritable();

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        int count = 0;
        for (IntWritable i : values) {
            // Add the actual value, not a constant 1, so the result stays correct if a combiner is added
            count += i.get();
        }
        result.set(count);
        context.write(key, result);
    }
}
```

Run result:
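The logic of the first job can be checked without a cluster. Below is a hypothetical plain-Java simulation (the class `JobOneSimulation` and its methods are made up for illustration): `map` mirrors `IndexMapperOne`, and a `TreeMap` with `merge()` stands in for the shuffle's sort-and-group step followed by the summing reducer.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/**
 * Hypothetical plain-Java simulation of the first job (no Hadoop required):
 * map emits ("word--file", 1); a TreeMap sorts and groups the keys; merge() sums the 1s like the reducer.
 */
public class JobOneSimulation {

    /** Map phase for one line, mirroring IndexMapperOne: one (word--file, 1) pair per token. */
    public static List<Map.Entry<String, Integer>> map(String fileName, String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) { // an empty line yields one empty token
                out.add(new AbstractMap.SimpleEntry<>(word + "--" + fileName, 1));
            }
        }
        return out;
    }

    /** Shuffle + reduce: equal keys land on the same TreeMap entry, and merge() adds their values. */
    public static Map<String, Integer> run(Map<String, List<String>> files) {
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<String>> file : files.entrySet()) {
            for (String line : file.getValue()) {
                for (Map.Entry<String, Integer> kv : map(file.getKey(), line)) {
                    counts.merge(kv.getKey(), kv.getValue(), Integer::sum);
                }
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        Map<String, List<String>> files = new TreeMap<>();
        files.put("a.txt", Arrays.asList("map", "reduce", "index Inverted index", "Inverted index"));
        files.put("b.txt", Arrays.asList("hdfs Inverted", "index map"));
        // Same "key<TAB>count" layout that the job's default text output uses
        run(files).forEach((k, v) -> System.out.println(k + "\t" + v));
    }
}
```

For the sample above this reports `index--a.txt` with 3 and `Inverted--a.txt` with 2, which is the kind of line the first pass writes.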

(2) Second pass: write an IndexDriveTow class that reads the output of the first pass:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class IndexDriveTow {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        // Input = output directory of the first job; paths hard-coded for local testing
        args = new String[]{"D:\\input\\plus\\output\\indexone", "D:\\input\\plus\\output\\indexTow"};

        Configuration conf = new Configuration();
        Job job2 = Job.getInstance(conf);
        job2.setJarByClass(IndexDriveTow.class);
        job2.setMapperClass(IndexMapperTow.class);
        job2.setReducerClass(IndexReduceTow.class);
        job2.setMapOutputKeyClass(Text.class);
        job2.setMapOutputValueClass(Text.class);
        job2.setOutputKeyClass(Text.class);
        job2.setOutputValueClass(Text.class);

        // Delete the output directory if it already exists
        FileSystem fs = FileSystem.get(conf);
        Path path1 = new Path(args[1]);
        if (fs.exists(path1)) {
            fs.delete(path1, true);
        }

        FileInputFormat.setInputPaths(job2, new Path(args[0]));
        FileOutputFormat.setOutputPath(job2, path1);
        System.exit(job2.waitForCompletion(true) ? 0 : 1);
    }
}

/**
 * Mapper: splits "word--fileName<TAB>count" into the word (key) and "fileName<TAB>count" (value),
 * so MapReduce grouping delivers all files of one word to a single reduce call.
 */
class IndexMapperTow extends Mapper<LongWritable, Text, Text, Text> {

    private final Text k = new Text();
    private final Text v = new Text();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        String line = value.toString();
        // Split at the last "--" so a word that itself contains "--" stays intact
        // (assumes file names do not contain "--")
        int sep = line.lastIndexOf("--");
        if (sep < 0) {
            return; // skip malformed lines
        }
        k.set(line.substring(0, sep));
        v.set(line.substring(sep + 2));
        context.write(k, v);
    }
}

/**
 * Reducer: joins all "fileName<TAB>count" values of one word into a single line of "fileName-->count" entries.
 */
class IndexReduceTow extends Reducer<Text, Text, Text, Text> {

    @Override
    protected void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
        StringBuilder stringBuilder = new StringBuilder();
        for (Text text : values) {
            // "a.txt<TAB>2" -> "a.txt-->2"
            String[] split = text.toString().split("\t");
            if (stringBuilder.length() > 0) {
                stringBuilder.append("\t"); // separator only between entries, no trailing tab
            }
            stringBuilder.append(split[0]).append("-->").append(split[1]);
        }
        context.write(key, new Text(stringBuilder.toString()));
    }
}
```

Run result:
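The second job only reshapes text, so it too can be checked locally. The following is a hypothetical plain-Java simulation (the class `JobTwoSimulation` is an illustrative name, not part of the original code) that parses first-pass lines, groups them by word, and joins the entries as `file-->count`, like `IndexMapperTow` and `IndexReduceTow`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/**
 * Hypothetical plain-Java simulation of the second job: parses first-pass lines
 * ("word--file<TAB>count"), groups them by word and joins the entries as "file-->count".
 */
public class JobTwoSimulation {

    public static Map<String, String> run(List<String> jobOneOutput) {
        // Map + shuffle: key = word, value = "file<TAB>count"; TreeMap keeps words sorted.
        Map<String, List<String>> grouped = new TreeMap<>();
        for (String line : jobOneOutput) {
            // Split at the LAST "--" (assumes file names do not contain "--").
            int sep = line.lastIndexOf("--");
            if (sep < 0) {
                continue; // skip malformed lines
            }
            grouped.computeIfAbsent(line.substring(0, sep), w -> new ArrayList<>())
                   .add(line.substring(sep + 2));
        }
        // Reduce: "a.txt<TAB>3" becomes "a.txt-->3"; entries are tab-separated, no trailing tab.
        Map<String, String> index = new TreeMap<>();
        for (Map.Entry<String, List<String>> e : grouped.entrySet()) {
            StringBuilder sb = new StringBuilder();
            for (String value : e.getValue()) {
                String[] parts = value.split("\t");
                if (sb.length() > 0) {
                    sb.append('\t');
                }
                sb.append(parts[0]).append("-->").append(parts[1]);
            }
            index.put(e.getKey(), sb.toString());
        }
        return index;
    }

    public static void main(String[] args) {
        List<String> jobOneOutput = Arrays.asList(
                "itstar--a.txt\t3", "itstar--b.txt\t2", "itstar--c.txt\t2",
                "ss--a.txt\t2", "ss--b.txt\t1", "ss--c.txt\t1");
        run(jobOneOutput).forEach((word, postings) -> System.out.println(word + "\t" + postings));
    }
}
```

The simulation keeps values in input order, so it lists `a.txt` first. In the real job Hadoop does not guarantee the order in which values reach the reducer, so the expected output may list `c.txt` before `a.txt`.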

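(3) Third pass: write an IndexDerive class that chains both jobs with JobControl. It reuses the mapper and reducer classes from (1) and (2), so all three files must be in the same package: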

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.jobcontrol.ControlledJob;
import org.apache.hadoop.mapreduce.lib.jobcontrol.JobControl;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class IndexDerive {

    public static void main(String[] args) throws IOException, InterruptedException {
        // Input dir, output of job 1 (= input of job 2), output of job 2; hard-coded for local testing
        args = new String[]{"D:\\input\\plus\\input\\index", "D:\\input\\plus\\output\\index1", "D:\\input\\plus\\output\\index2"};

        // Load the configuration
        Configuration conf = new Configuration();
        FileSystem fs = FileSystem.get(conf);

        // Job 1: count each word per file
        Job job1 = Job.getInstance(conf);
        job1.setJarByClass(IndexDerive.class);
        job1.setMapperClass(IndexMapperOne.class);
        job1.setReducerClass(IndexReduceOne.class);
        // Map output types and final output types
        job1.setMapOutputKeyClass(Text.class);
        job1.setMapOutputValueClass(IntWritable.class);
        job1.setOutputKeyClass(Text.class);
        job1.setOutputValueClass(IntWritable.class);
        // Delete job 1's output directory if it exists so the job can run
        Path path = new Path(args[1]);
        if (fs.exists(path)) {
            fs.delete(path, true);
        }
        FileInputFormat.setInputPaths(job1, new Path(args[0]));
        FileOutputFormat.setOutputPath(job1, path);

        // Job 2: build the inverted index from job 1's output
        Job job2 = Job.getInstance(conf);
        job2.setJarByClass(IndexDerive.class);
        job2.setMapperClass(IndexMapperTow.class);
        job2.setReducerClass(IndexReduceTow.class);
        job2.setMapOutputKeyClass(Text.class);
        job2.setMapOutputValueClass(Text.class);
        job2.setOutputKeyClass(Text.class);
        job2.setOutputValueClass(Text.class);
        // Delete job 2's output directory if it exists
        Path path1 = new Path(args[2]);
        if (fs.exists(path1)) {
            fs.delete(path1, true);
        }
        FileInputFormat.setInputPaths(job2, new Path(args[1]));
        FileOutputFormat.setOutputPath(job2, path1);

        // Wrap both jobs so JobControl can manage them
        ControlledJob aJob = new ControlledJob(job1.getConfiguration());
        ControlledJob bJob = new ControlledJob(job2.getConfiguration());
        // Dependency: job 2 starts only after job 1 has succeeded
        bJob.addDependingJob(aJob);

        // Create the control group that manages the jobs, then add them
        JobControl wgh = new JobControl("wgh");
        wgh.addJob(aJob);
        wgh.addJob(bJob);

        // Submit: JobControl is a Runnable that submits each job once its dependencies are done
        Thread thread = new Thread(wgh);
        thread.start();

        // Wait until every job has finished (successfully or not), then stop the control thread
        while (!wgh.allFinished()) {
            Thread.sleep(1000);
        }
        wgh.stop();
        System.exit(wgh.getFailedJobList().isEmpty() ? 0 : 1);
    }
}
```

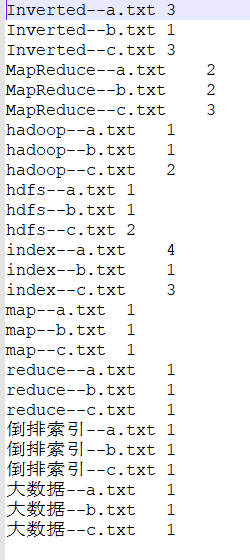

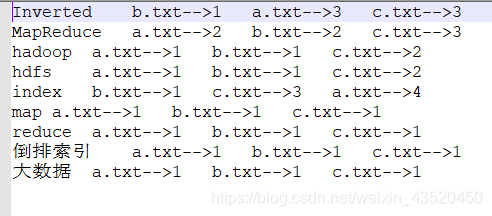

Run result:

The results in the two output directories are:
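The dependency rule that `bJob.addDependingJob(aJob)` sets up can be illustrated with a small model. This is a simplified, hypothetical sketch (the class `MiniJobControl` and its methods are invented for illustration and are not Hadoop's implementation): a job runs only after every job it depends on has succeeded, and a job whose dependency failed is marked `DEPENDENT_FAILED` without being started, loosely mirroring the states a `ControlledJob` goes through.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BooleanSupplier;

/**
 * Simplified, hypothetical model of JobControl's dependency handling (not Hadoop's code):
 * a job runs only after all of its dependencies succeeded; if one failed, it is never started.
 */
public class MiniJobControl {

    public enum State { WAITING, SUCCESS, FAILED, DEPENDENT_FAILED }

    private final Map<String, BooleanSupplier> bodies = new LinkedHashMap<>();
    private final Map<String, List<String>> deps = new LinkedHashMap<>();
    private final Map<String, State> states = new LinkedHashMap<>();

    /** Registers a job whose body returns true on success; plays the role of addJob + addDependingJob. */
    public void addJob(String name, BooleanSupplier body, String... dependsOn) {
        bodies.put(name, body);
        deps.put(name, Arrays.asList(dependsOn));
        states.put(name, State.WAITING);
    }

    /** Scans the waiting jobs repeatedly until a full pass changes nothing; returns the final states. */
    public Map<String, State> runAll() {
        boolean progress = true;
        while (progress) {
            progress = false;
            for (String name : bodies.keySet()) {
                if (states.get(name) != State.WAITING) {
                    continue;
                }
                boolean ready = true;
                for (String dep : deps.get(name)) {
                    State s = states.get(dep);
                    if (s == State.FAILED || s == State.DEPENDENT_FAILED) {
                        states.put(name, State.DEPENDENT_FAILED); // never started
                        ready = false;
                        progress = true;
                        break;
                    }
                    if (s != State.SUCCESS) {
                        ready = false; // dependency has not finished yet (or is unknown)
                    }
                }
                if (ready) {
                    states.put(name, bodies.get(name).getAsBoolean() ? State.SUCCESS : State.FAILED);
                    progress = true;
                }
            }
        }
        return states;
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        MiniJobControl control = new MiniJobControl();
        // job2 is registered first on purpose: dependencies, not registration order, decide the run order
        control.addJob("job2", () -> { log.add("job2"); return true; }, "job1");
        control.addJob("job1", () -> { log.add("job1"); return true; });
        System.out.println(control.runAll() + " " + log);
        // prints {job2=SUCCESS, job1=SUCCESS} [job1, job2]
    }
}
```

This model runs jobs one at a time. The real JobControl submits every job whose dependencies are satisfied and then polls their status, so independent jobs can run on the cluster in parallel.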