1. Java

Download the 64-bit version of Java 1.8 (Java 8) from https://java.com/en/download/. It must be the 64-bit build: with a 32-bit Java, the YARN ResourceManager and NodeManager fail with errors when they start.

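Install Java into a directory you choose yourself, for example C:\java64. This step is important: Hadoop's Windows scripts cannot handle a JAVA_HOME path that contains spaces, such as the default C:\Program Files\Java.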

Once Java is installed, confirm from the command line that you are running the correct version with the `java -version` command. The output should look like this:

    C:\Users>java -version
    java version "1.8.0_251"
    Java(TM) SE Runtime Environment (build 1.8.0_251-b08)
    Java HotSpot(TM) 64-Bit Server VM (build 25.251-b08, mixed mode)
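If you want to script this check, here is a minimal sketch in Python (it assumes Python 3.7 or later is installed, which this guide does not otherwise require). It runs `java -version`, which prints to stderr, and checks for a 1.8 version string and the `64-Bit` marker seen in the output above. The function names `parse_java_version` and `check_java` are hypothetical.

```python
import re
import shutil
import subprocess


def parse_java_version(output: str):
    """Return (version, is_64_bit) parsed from the text printed by `java -version`."""
    match = re.search(r'version "([^"]+)"', output)
    version = match.group(1) if match else None
    # 64-bit HotSpot JVMs report "64-Bit Server VM"; 32-bit ones do not.
    return version, "64-Bit" in output


def check_java() -> bool:
    """Run `java -version` and report whether it is a 64-bit Java 1.8."""
    java = shutil.which("java")
    if java is None:
        print("java was not found on PATH")
        return False
    # `java -version` writes its report to stderr, not stdout.
    result = subprocess.run([java, "-version"], capture_output=True, text=True)
    version, is_64_bit = parse_java_version(result.stderr)
    ok = version is not None and version.startswith("1.8") and is_64_bit
    print(f"version={version}, 64-bit={is_64_bit}, ok={ok}")
    return ok


if __name__ == "__main__":
    check_java()
```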

2. WinRAR/7-ZIP

Download and install the 64-bit release of WinRAR from http://www.rarlab.com/download.htm; it is used later to decompress Linux-style .tar.gz packages on Windows. Alternatively, use 7-Zip: https://www.7-zip.org/download.html

3. Hadoop

Next, install a Hadoop distribution. Download the binary release:

https://archive.apache.org/dist/hadoop/common/hadoop-2.9.1/hadoop-2.9.1.tar.gz

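Extract the archive, rename the extracted folder to hadoop, and put it under C:\Learning, so that Hadoop lives in C:\Learning\hadoop. If you use a directory of your own, make sure its path contains no spaces: Hadoop was originally developed for Linux, and its scripts do not handle paths with spaces. In that case, also change every directory path in the configuration files below to match.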
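The extract-and-rename step can also be done without WinRAR or 7-Zip. The following is a sketch using Python's standard `tarfile` module, assuming Python 3 and an archive with a single top-level folder (hadoop-2.9.1). `extract_hadoop` is a hypothetical helper; it rejects target paths containing spaces for the reason given above.

```python
import sys
import tarfile
from pathlib import Path


def extract_hadoop(archive: str, parent_dir: str, final_name: str = "hadoop") -> Path:
    """Extract a Hadoop .tar.gz into parent_dir and rename its top-level folder."""
    parent = Path(parent_dir).resolve()
    # Hadoop's scripts do not cope with spaces in paths, so refuse them up front.
    if " " in str(parent):
        raise ValueError(f"install path must not contain spaces: {parent}")
    target = parent / final_name
    if target.exists():
        raise FileExistsError(f"{target} already exists")
    parent.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:gz") as tar:
        # Assumes the archive holds a single top-level folder such as hadoop-2.9.1.
        tops = {name.split("/", 1)[0] for name in tar.getnames()}
        if len(tops) != 1:
            raise ValueError(f"expected one top-level folder, found {sorted(tops)}")
        top = tops.pop()
        if hasattr(tarfile, "data_filter"):
            # Newer Pythons: the "data" filter blocks members that escape the target dir.
            tar.extractall(parent, filter="data")
        else:
            tar.extractall(parent)
    (parent / top).rename(target)
    return target


if __name__ == "__main__" and len(sys.argv) == 3:
    # Example: python extract_hadoop.py C:\Downloads\hadoop-2.9.1.tar.gz C:\Learning
    print("Hadoop extracted to", extract_hadoop(sys.argv[1], sys.argv[2]))
```

Run as in the comment, this would produce C:\Learning\hadoop; the download location C:\Downloads is only an assumed example.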

Next, set up Hadoop to run as a single-node cluster.

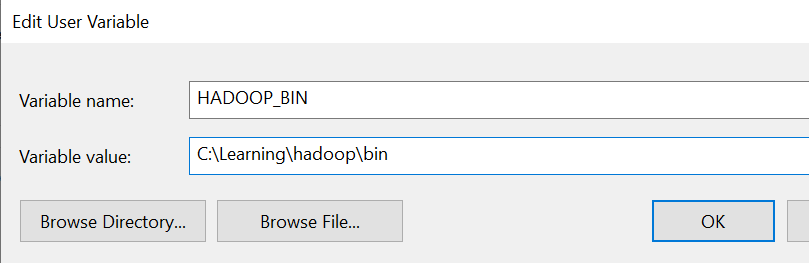

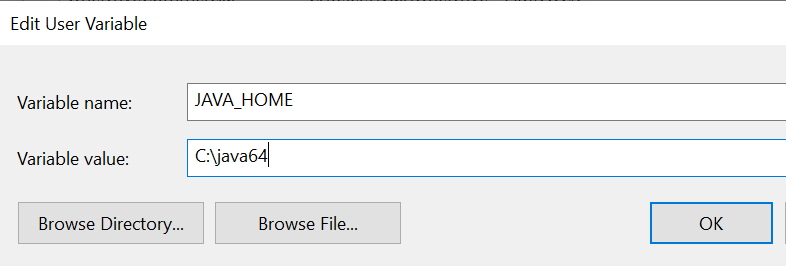

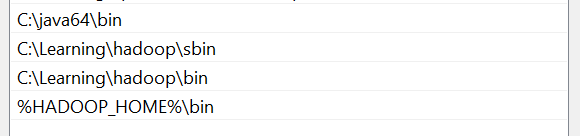

3.1 Set Up Environment Variables

Create the environment variables listed below:

Add the following directories to Path:
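The exact variable list is not reproduced here. As a sketch, assuming the common setup for this layout (JAVA_HOME=C:\java64, HADOOP_HOME=C:\Learning\hadoop, and their bin folders plus Hadoop's sbin folder on Path), the following Python 3 script checks the current environment for those entries. Both the variable list and `check_env` are assumptions, not the author's exact settings.

```python
import os

# Assumed layout: Java in C:\java64, Hadoop in C:\Learning\hadoop.
REQUIRED_VARS = ("JAVA_HOME", "HADOOP_HOME")
# Assumed Path entries: (variable, subfolder) pairs expected on Path.
PATH_ENTRIES = (("JAVA_HOME", "bin"), ("HADOOP_HOME", "bin"), ("HADOOP_HOME", "sbin"))


def check_env(environ) -> list:
    """Return a list of problems found in the given environment mapping."""
    problems = []
    for name in REQUIRED_VARS:
        value = environ.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif " " in value:
            problems.append(f"{name} contains a space: {value}")
    # Windows separates Path entries with ';' and compares paths case-insensitively.
    on_path = {p.rstrip("\\/").lower() for p in environ.get("PATH", "").split(";") if p}
    for var, sub in PATH_ENTRIES:
        base = environ.get(var)
        if base:
            wanted = base.rstrip("\\/") + "\\" + sub
            if wanted.lower() not in on_path:
                problems.append(f"Path is missing {wanted}")
    return problems


if __name__ == "__main__":
    found = check_env(os.environ)
    print("\n".join(found) if found else "Environment looks OK")
```

Run it from a newly opened command prompt, since prompts that were already open do not pick up changed environment variables.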

3.2 Edit Hadoop Configuration

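Note: If you would rather not do the configuration in 3.2 yourself, you can skip every step in it except 3.2.3. Instead, download the bin, data, and etc folders from https://github.com/yjy24/bigdata_learning, copy them into your hadoop directory to overwrite the existing ones, and then continue from 3.2.3.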

3.2.1 Create the data directory and its subdirectories

c:/Learning/hadoop/data

c:/Learning/hadoop/data/namenode

c:/Learning/hadoop/data/datanode
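The same directories can be created with a short Python 3 script. This is a sketch; `create_data_dirs` is a hypothetical name, and you pass your Hadoop directory (C:\Learning\hadoop in this guide) as the only argument.

```python
import sys
from pathlib import Path


def create_data_dirs(hadoop_home: str) -> list:
    """Create data, data/namenode and data/datanode under hadoop_home."""
    data = Path(hadoop_home) / "data"
    dirs = [data, data / "namenode", data / "datanode"]
    for directory in dirs:
        # exist_ok makes the script safe to run more than once.
        directory.mkdir(parents=True, exist_ok=True)
    return dirs


if __name__ == "__main__" and len(sys.argv) == 2:
    # Example: python create_data_dirs.py C:\Learning\hadoop
    for d in create_data_dirs(sys.argv[1]):
        print("ready:", d)
```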

3.2.2 Configure Hadoop

a) C:\Learning\hadoop\etc\hadoop\core-site.xml

b) C:\Learning\hadoop\etc\hadoop\mapred-site.xml

c) C:\Learning\hadoop\etc\hadoop\hdfs-site.xml
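The contents of these three files are not shown here. The sketch below writes a typical single-node configuration with Python's `xml.etree.ElementTree`. Every property value in `CONFIGS` is an assumption based on common pseudo-distributed setups, not necessarily the author's exact files, so compare it with the files in the GitHub repository from the note above before overwriting anything, and adjust the data paths if you did not use C:\Learning\hadoop.

```python
import sys
import xml.etree.ElementTree as ET
from pathlib import Path

# Assumed single-node values; not necessarily the guide's exact file contents.
CONFIGS = {
    "core-site.xml": {"fs.defaultFS": "hdfs://localhost:9000"},
    "mapred-site.xml": {"mapreduce.framework.name": "yarn"},
    "hdfs-site.xml": {
        "dfs.replication": "1",  # only one DataNode on a single machine
        # Assumed drive-relative paths, resolved on the drive Hadoop runs from.
        "dfs.namenode.name.dir": "/Learning/hadoop/data/namenode",
        "dfs.datanode.data.dir": "/Learning/hadoop/data/datanode",
    },
}


def build_config(props: dict) -> ET.ElementTree:
    """Build a Hadoop <configuration> tree with one <property> per entry."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    tree = ET.ElementTree(root)
    if hasattr(ET, "indent"):  # Python 3.9+: pretty-print for easier hand editing
        ET.indent(tree)
    return tree


def write_configs(conf_dir: str) -> None:
    """Overwrite the three config files in conf_dir, e.g. C:/Learning/hadoop/etc/hadoop."""
    for filename, props in CONFIGS.items():
        build_config(props).write(Path(conf_dir) / filename, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__" and len(sys.argv) == 2:
    # Example: python write_configs.py C:\Learning\hadoop\etc\hadoop
    write_configs(sys.argv[1])
```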

3.2.3 Libraries required on Windows

1. Install the Microsoft Visual C++ 2010 Redistributable Package (x64): https://www.microsoft.com/en-us/download/details.aspx?id=14632
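To confirm the runtime is present, the sketch below looks for msvcr100.dll, the Visual C++ 2010 C runtime DLL, in System32. It assumes 64-bit Windows and a 64-bit Python 3 (a 32-bit Python would be redirected to SysWOW64); `has_vc2010_runtime` is a hypothetical helper.

```python
import os
from pathlib import Path


def has_vc2010_runtime(system_dir: str) -> bool:
    """Check for msvcr100.dll, the C runtime DLL shipped with Visual C++ 2010."""
    return (Path(system_dir) / "msvcr100.dll").is_file()


if __name__ == "__main__" and os.name == "nt":
    # On 64-bit Windows the x64 redistributable installs its DLLs into System32.
    system32 = Path(os.environ.get("SystemRoot", r"C:\Windows")) / "System32"
    print("VC++ 2010 x64 runtime found:", has_vc2010_runtime(str(system32)))
```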

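3.3 Start All Hadoop Services

3.3.1 Format the Hadoop NameNode by running `hdfs namenode -format` from a command prompt. The end of the output should look like this: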

    20/04/19 10:40:13 INFO common.Storage: Storage directory \Learning\hadoop\data\namenode has been successfully formatted.
    20/04/19 10:40:13 INFO namenode.FSImageFormatProtobuf: Saving image file \Learning\hadoop\data\namenode\current\fsimage.ckpt_0000000000000000000 using no compression
    20/04/19 10:40:13 INFO namenode.FSImageFormatProtobuf: Image file \Learning\hadoop\data\namenode\current\fsimage.ckpt_0000000000000000000 of size 320 bytes saved in 0 seconds .
    20/04/19 10:40:13 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
    20/04/19 10:40:13 INFO namenode.NameNode: SHUTDOWN_MSG:
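Besides reading the log, you can check the result from a script. This Python 3 sketch assumes that a successful format leaves a current\VERSION file in the NameNode storage directory and that the log contains the phrase "has been successfully formatted", as in the output above. Both helper names are hypothetical.

```python
import sys
from pathlib import Path


def namenode_formatted(namenode_dir: str) -> bool:
    """True if the storage dir holds current/VERSION (assumed to be written by a format)."""
    return (Path(namenode_dir) / "current" / "VERSION").is_file()


def format_succeeded(log_text: str) -> bool:
    """True if the format output contains the success message shown in the log."""
    return "has been successfully formatted" in log_text


if __name__ == "__main__" and len(sys.argv) == 2:
    # Example: python check_format.py C:\Learning\hadoop\data\namenode
    print("formatted:", namenode_formatted(sys.argv[1]))
```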

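3.3.2 Start all Hadoop services:

Run C:\Learning\hadoop\sbin\start-all.cmd from a command prompt opened as Administrator.

Four service windows will open (NameNode, DataNode, ResourceManager and NodeManager). None of them should show any errors.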
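To double-check that the four services are up without reading each window, the sketch below tries TCP connections to their web UI ports. Port 8088 comes from this guide; 50070, 50075 and 8042 are assumed to be the Hadoop 2.x defaults for the NameNode, DataNode and NodeManager web UIs, so adjust them if your configuration differs.

```python
import socket

# Assumed Hadoop 2.x default web UI ports; 8088 is the one used in this guide.
SERVICES = {
    "NameNode web UI": 50070,
    "DataNode web UI": 50075,
    "ResourceManager web UI": 8088,
    "NodeManager web UI": 8042,
}


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    for name, port in SERVICES.items():
        state = "up" if port_open("localhost", port) else "NOT reachable"
        print(f"{name} (port {port}): {state}")
```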

Congratulations, you can now start running your Hadoop programs!

3.4 Open Hadoop GUI

Once all the steps above are complete, open a browser and go to http://localhost:8088/cluster

This page shows the status and logs of your jobs.
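The same job information is also exposed by the ResourceManager REST API. This Python 3 sketch assumes the Hadoop 2.x endpoint http://localhost:8088/ws/v1/cluster/apps and its JSON layout (an `apps` object holding an `app` list whose entries have `id`, `name`, `state` and `finalStatus`); `summarize_apps` is a hypothetical helper.

```python
import json
import urllib.request

# Assumed ResourceManager REST endpoint that lists applications.
APPS_URL = "http://localhost:8088/ws/v1/cluster/apps"


def summarize_apps(payload: dict) -> list:
    """Return (id, name, state, finalStatus) tuples from a /ws/v1/cluster/apps response."""
    apps = payload.get("apps") or {}  # {"apps": null} is expected when no jobs exist
    return [
        (a.get("id"), a.get("name"), a.get("state"), a.get("finalStatus"))
        for a in apps.get("app", [])
    ]


if __name__ == "__main__":
    request = urllib.request.Request(APPS_URL, headers={"Accept": "application/json"})
    try:
        with urllib.request.urlopen(request, timeout=5) as resp:
            for row in summarize_apps(json.load(resp)):
                print(*row)
    except OSError as exc:  # URLError and socket timeouts are both OSError subclasses
        print("ResourceManager not reachable:", exc)
```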

--------------------------------------------------End---------------------------------------------------