Linux Kernel Study Notes: Chapter 2, Memory Management

2.1 Physical Memory Initialization

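From a hardware point of view, random access memory (RAM) is the internal storage that exchanges data directly with the CPU.

Most computers today use DDR (Double Data Rate SDRAM) memory devices, including DDR3L, DDR4L, LPDDR3/4, and so on.

DDR is normally initialized in the BIOS or boot loader, which then passes the size of the DDR to the Linux kernel. From the kernel's point of view, DDR is therefore simply a range of physical memory.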

2.1.1 Memory Management Overview

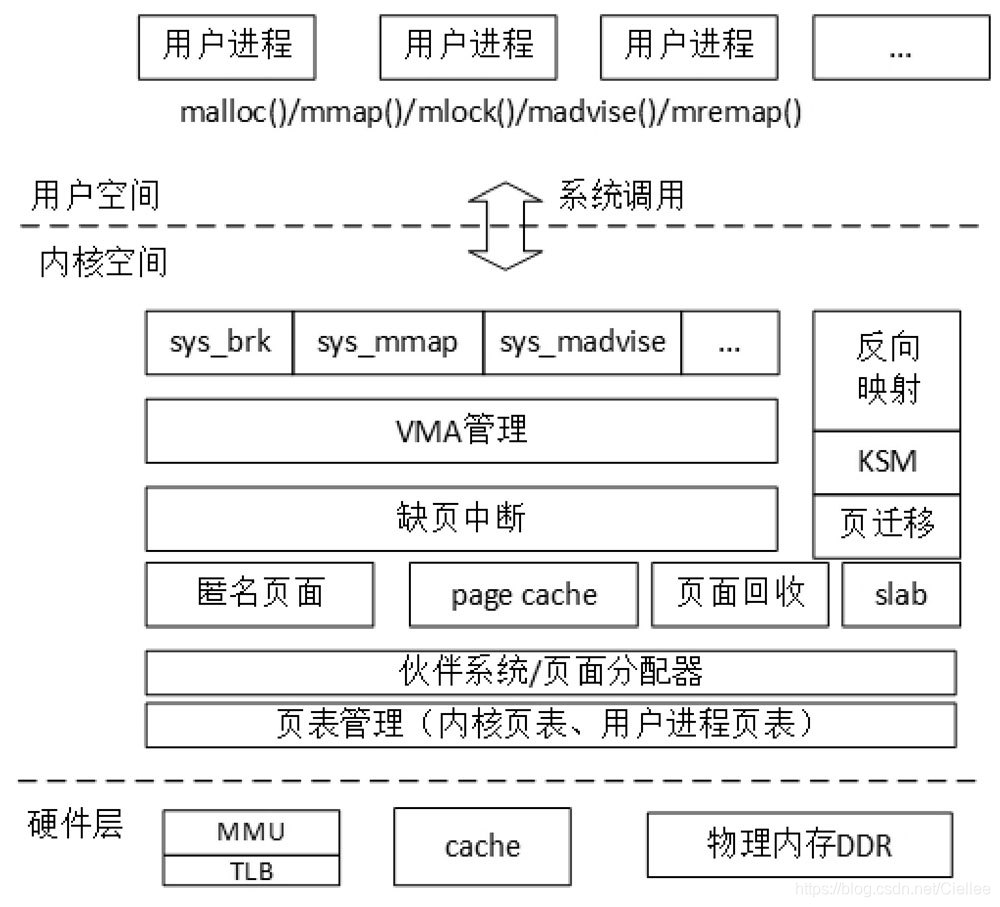

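Memory management is a complex system. It can be divided into three layers: the user-space layer, the kernel-space layer, and the hardware layer.

- User-space layer: this can be understood as the system call interface that kernel memory management exposes to user space, for example the brk and mmap system calls. The libc library usually wraps these in familiar C functions such as malloc() and mmap().

- Kernel-space layer: this layer contains a rich set of modules. System calls are the interface between user space and kernel space, so the layer first has to handle the memory-management system calls, for example sys_brk, sys_mmap, and sys_madvise. Beyond that it includes VMA management, page fault handling, anonymous pages, the page cache, page reclaim, reverse mapping, the slab allocator, page table management, and other modules.

- Hardware layer: this includes the processor's MMU, TLB, and cache, as well as the on-board physical memory, such as LPDDR or DDR.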

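2.1.2 Memory Size

In ARM Linux, the properties of the various devices are described using DTS (device tree source) files.

On the ARM Vexpress platform, memory is defined in the vexpress-v2p-ca9.dts file.

This DTS file defines memory that starts at address 0x60000000 and has a size of 0x40000000, that is, 1 GB of memory.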

// arch/arm/boot/dts/vexpress-v2p-ca9.dts

memory@60000000 {

device_type = "memory";

reg = <0x60000000 0x40000000>;

};

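During boot, the kernel has to parse the device tree blob compiled from these DTS files. The code that handles the memory node is in early_init_dt_scan_memory().

The call chain is: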

start_kernel() -> setup_arch() -> setup_machine_fdt() -> early_init_dt_scan_nodes() -> early_init_dt_scan_memory()

// \kernel\msm-3.18\drivers\of\fdt.c

/** early_init_dt_scan_memory - Look for an parse memory nodes */

int __init early_init_dt_scan_memory(unsigned long node, const char *uname, int depth, void *data)

{

const char *type = of_get_flat_dt_prop(node, "device_type", NULL);

const __be32 *reg, *endp;

int l;

if (type == NULL || strcmp(type, "memory") != 0) /* only handle nodes with device_type = "memory" */

return 0;

reg = of_get_flat_dt_prop(node, "linux,usable-memory", &l);

if (reg == NULL)

reg = of_get_flat_dt_prop(node, "reg", &l); /* fetch reg = <0x60000000 0x40000000> */

endp = reg + (l / sizeof(__be32));

pr_debug("memory scan node %s, reg size %d, data: %x %x %x %x,\n",

uname, l, reg[0], reg[1], reg[2], reg[3]);

while ((endp - reg) >= (dt_root_addr_cells + dt_root_size_cells)) {

u64 base, size;

base = dt_mem_next_cell(dt_root_addr_cells, &reg);

size = dt_mem_next_cell(dt_root_size_cells, &reg);

if (size == 0)

continue;

pr_debug(" - %llx , %llx\n", (unsigned long long)base, (unsigned long long)size);

early_init_dt_add_memory_arch(base, size);

}

return 0;

}

Parsing the "memory" node yields the base address and size of the memory.

Finally, the memory block information is added to the memblock subsystem through early_init_dt_add_memory_arch() -> memblock_add().
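The cell decoding done in the loop above can be sketched in standalone C. This is a simplified illustration, not the kernel's code: `struct mem_range`, `next_cells()`, `decode_reg()`, and `vexpress_reg` are hypothetical names, and the byte array simply encodes the `reg = <0x60000000 0x40000000>` property as two big-endian 32-bit cells, assuming one address cell and one size cell.

```c
#include <stdint.h>

/* Hypothetical container for one decoded (base, size) pair. */
struct mem_range {
    uint64_t base;
    uint64_t size;
};

/* reg = <0x60000000 0x40000000> as stored in a flattened device tree:
 * two big-endian 32-bit cells. */
static const uint8_t vexpress_reg[8] = {
    0x60, 0x00, 0x00, 0x00,
    0x40, 0x00, 0x00, 0x00,
};

/* Read `cells` (1 or 2) consecutive big-endian cells as one value and
 * advance the cursor past them. */
static uint64_t next_cells(int cells, const uint8_t **cursor)
{
    uint64_t value = 0;

    for (int i = 0; i < cells; i++) {
        const uint8_t *p = *cursor;
        uint32_t cell = ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
                        ((uint32_t)p[2] << 8) | (uint32_t)p[3];

        value = (value << 32) | cell;
        *cursor += 4;
    }
    return value;
}

/* Decode the first (base, size) pair of a reg property. */
struct mem_range decode_reg(const uint8_t *prop, int addr_cells, int size_cells)
{
    struct mem_range r;
    const uint8_t *cursor = prop;

    r.base = next_cells(addr_cells, &cursor);
    r.size = next_cells(size_cells, &cursor);
    return r;
}
```

Calling `decode_reg(vexpress_reg, 1, 1)` gives base 0x60000000 and size 0x40000000, the pair that the kernel would hand to early_init_dt_add_memory_arch().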

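2.1.3 Physical Memory Mapping

Before the kernel can use memory, it has to initialize its page tables. This is done mainly in map_lowmem().

Before the mappings are created, the existing page table entries must be cleared, which is done in prepare_page_table().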

start_kernel() --> setup_arch() --> paging_init()

static inline void prepare_page_table(void)

{

unsigned long addr;

phys_addr_t end;

for( addr = 0; addr < MODULES_VADDR; addr += PMD_SIZE )

pmd_clear( pmd_off_k(addr) );

for( ; addr < PAGE_OFFSET; addr += PMD_SIZE )

pmd_clear( pmd_off_k(addr) );

end = memblock.memory.regions[0].base + memblock.memory.regions[0].size;

for( addr = __phys_to_virt(end); addr < VMALLOC_START; addr += PMD_SIZE )

pmd_clear( pmd_off_k(addr) );

}

Here pmd_clear() is called on the following three address ranges to clear their first-level page table entries.

❑ 0x0 ~ MODULES_VADDR

❑ MODULES_VADDR ~ PAGE_OFFSET

❑ arm_lowmem_limit ~ VMALLOC_START

start_kernel() --> setup_arch() --> paging_init() --> map_lowmem()

static void __init map_lowmem(void)

{

struct memblock_region * reg;

phys_addr_t kernel_x_start = round_down( __pa(_stext), SECTION_SIZE );

phys_addr_t kernel_x_end = round_up( __pa(__init_end), SECTION_SIZE );

// map all the lowmem memory banks

for_each_memblock( memory, reg ){

phys_addr_t start = reg->base;

phys_addr_t end = start + reg->size;

struct map_desc map;

if( end > arm_lowmem_limit )

end = arm_lowmem_limit;

// map the kernel image region

map.pfn = phys_to_pfn(kernel_x_start);

map.virtual = phys_to_virt(kernel_x_start);

map.length = kernel_x_end - kernel_x_start;

map.type = MT_MEMORY_RWX;

create_mapping(&map);

// map the rest of low memory

if( kernel_x_end < end ){

map.pfn = phys_to_pfn(kernel_x_end);

map.virtual = phys_to_virt(kernel_x_end);

map.length = end - kernel_x_end;

map.type = MT_MEMORY_RW;

create_mapping(&map);

}

}

}

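The page tables are actually created in map_lowmem(), which maps from the start of memory up to arm_lowmem_limit.

The kernel's code segment needs special handling here: it starts at _stext and ends at __init_end.

Taking the ARM Vexpress platform as an example:

❑ Start of memory: 0x60000000

❑ _stext: 0x60000000

❑ __init_end: 0x60800000

❑ arm_lowmem_limit: 0x8f800000

arm_lowmem_limit has to take high memory into account; it is computed in sanity_check_meminfo().

On the ARM Vexpress platform, arm_lowmem_limit equals the physical address that corresponds to vmalloc_min (vmalloc_limit below), which is defined as follows: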

static void * __initdata vmalloc_min = (void *)(VMALLOC_END - (240 << 20) - VMALLOC_OFFSET);

phys_addr_t vmalloc_limit = __pa(vmalloc_min - 1) + 1;
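The arithmetic behind these two definitions can be checked with a small sketch. The values of VMALLOC_END (0xff000000) and VMALLOC_OFFSET (8 MB) are assumptions based on 32-bit ARM kernels of this era, PAGE_OFFSET and PHYS_OFFSET are the Vexpress values used in these notes, and the function names are hypothetical.

```c
#include <stdint.h>

/* Assumed values for a 32-bit ARM kernel of this era. */
#define VMALLOC_END    0xff000000u
#define VMALLOC_OFFSET (8u * 1024 * 1024)

/* Vexpress layout used in these notes. */
#define PAGE_OFFSET 0xc0000000u
#define PHYS_OFFSET 0x60000000u

/* Lowest virtual address kept for vmalloc: 240 MB plus the 8 MB
 * guard gap below VMALLOC_END. */
uint32_t vmalloc_min_vaddr(void)
{
    return VMALLOC_END - (240u << 20) - VMALLOC_OFFSET;
}

/* Mirrors __pa(vmalloc_min - 1) + 1: the physical address one past
 * the last byte that can be linearly mapped. */
uint32_t lowmem_limit_paddr(void)
{
    uint32_t last_lowmem_vaddr = vmalloc_min_vaddr() - 1;

    return (last_lowmem_vaddr - PAGE_OFFSET + PHYS_OFFSET) + 1;
}
```

Under these assumptions vmalloc_min is 0xef800000 and the limit is 0x8f800000, which matches the arm_lowmem_limit value listed for Vexpress.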

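map_lowmem() creates mappings for two memory ranges.

(1) Range 1

❑ Physical addresses: 0x60000000 ~ 0x60800000

❑ Virtual addresses: 0xc0000000 ~ 0xc0800000

❑ Attributes: readable, writable, and executable (MT_MEMORY_RWX)

(2) Range 2

❑ Physical addresses: 0x60800000 ~ 0x8f800000

❑ Virtual addresses: 0xc0800000 ~ 0xef800000

❑ Attributes: readable and writable (MT_MEMORY_RW)

The difference between MT_MEMORY_RWX and MT_MEMORY_RW is that RW page table entries have the XN (execute never) bit set to 1, which means code in that memory region may not be executed.

The mapping function is create_mapping(). The mapping created here is the direct mapping of physical memory, also called the linear mapping; the function is covered in detail in section 2.2.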
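The two ranges can be reproduced with a short sketch that follows the simplified map_lowmem() listing in these notes. `struct lowmem_map` and the function names are hypothetical; a 1 MB SECTION_SIZE and 4 KB pages are assumed, and PAGE_OFFSET and PHYS_OFFSET are the Vexpress values.

```c
#include <stdint.h>

#define SECTION_SIZE 0x00100000u /* 1 MB section, assumed */
#define PAGE_SHIFT   12          /* 4 KB pages, assumed */
#define PAGE_OFFSET  0xc0000000u
#define PHYS_OFFSET  0x60000000u

/* Hypothetical stand-in for the fields of struct map_desc used here. */
struct lowmem_map {
    uint32_t pfn;
    uint32_t virt;
    uint32_t length;
    int executable;
};

static uint32_t round_down_section(uint32_t x)
{
    return x & ~(SECTION_SIZE - 1);
}

static uint32_t round_up_section(uint32_t x)
{
    return (x + SECTION_SIZE - 1) & ~(SECTION_SIZE - 1);
}

static struct lowmem_map make_map(uint32_t phys_start, uint32_t phys_end,
                                  int executable)
{
    struct lowmem_map m;

    m.pfn = phys_start >> PAGE_SHIFT;
    m.virt = phys_start - PHYS_OFFSET + PAGE_OFFSET; /* linear mapping */
    m.length = phys_end - phys_start;
    m.executable = executable;
    return m;
}

/* Range 1: the kernel image, section-aligned, mapped RWX. */
struct lowmem_map kernel_image_map(uint32_t stext_pa, uint32_t init_end_pa)
{
    return make_map(round_down_section(stext_pa),
                    round_up_section(init_end_pa), 1);
}

/* Range 2: the rest of low memory up to the lowmem limit, mapped RW. */
struct lowmem_map remaining_lowmem_map(uint32_t init_end_pa,
                                       uint32_t lowmem_limit_pa)
{
    return make_map(round_up_section(init_end_pa), lowmem_limit_pa, 0);
}
```

With the Vexpress values, `kernel_image_map(0x60000000, 0x60800000)` describes 8 MB at virtual 0xc0000000, and `remaining_lowmem_map(0x60800000, 0x8f800000)` describes 0x2f000000 bytes starting at virtual 0xc0800000.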

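2.1.4 Zone Initialization

Once the page tables have been initialized, the kernel can start managing memory.

It does not treat all pages uniformly, however; it manages them in blocks called zones.

The main members of struct zone are as follows: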

// \kernel\msm-3.18\include\linux\mmzone.h

struct zone {

/* Read-mostly fields */

/* zone watermarks, access with *_wmark_pages(zone) macros */

unsigned long watermark[NR_WMARK];

/*

* We don't know if the memory that we're going to allocate will be freeable

* or/and it will be released eventually, so to avoid totally wasting several

* GB of ram we must reserve some of the lower zone memory (otherwise we risk

* to run OOM on the lower zones despite there's tons of freeable ram

* on the higher zones). This array is recalculated at runtime if the

* sysctl_lowmem_reserve_ratio sysctl changes.

*/

long lowmem_reserve[MAX_NR_ZONES];

#ifdef CONFIG_NUMA

int node;

#endif

/*

* The target ratio of ACTIVE_ANON to INACTIVE_ANON pages on

* this zone's LRU. Maintained by the pageout code.

*/

unsigned int inactive_ratio;

struct pglist_data *zone_pgdat;

struct per_cpu_pageset __percpu *pageset;

/*

* This is a per-zone reserve of pages that should not be

* considered dirtyable memory.

*/

unsigned long dirty_balance_reserve;

#ifdef CONFIG_CMA

bool cma_alloc;

#endif

#ifndef CONFIG_SPARSEMEM

/*

* Flags for a pageblock_nr_pages block. See pageblock-flags.h.

* In SPARSEMEM, this map is stored in struct mem_section

*/

unsigned long *pageblock_flags;

#endif /* CONFIG_SPARSEMEM */

#ifdef CONFIG_NUMA

/*

* zone reclaim becomes active if more unmapped pages exist.

*/

unsigned long min_unmapped_pages;

unsigned long min_slab_pages;

#endif /* CONFIG_NUMA */

/* zone_start_pfn == zone_start_paddr >> PAGE_SHIFT */

unsigned long zone_start_pfn;

/*

* spanned_pages is the total pages spanned by the zone, including

* holes, which is calculated as:

* spanned_pages = zone_end_pfn - zone_start_pfn;

*

* present_pages is physical pages existing within the zone, which

* is calculated as:

* present_pages = spanned_pages - absent_pages(pages in holes);

*

* managed_pages is present pages managed by the buddy system, which

* is calculated as (reserved_pages includes pages allocated by the

* bootmem allocator):

* managed_pages = present_pages - reserved_pages;

*

* So present_pages may be used by memory hotplug or memory power

* management logic to figure out unmanaged pages by checking

* (present_pages - managed_pages). And managed_pages should be used

* by page allocator and vm scanner to calculate all kinds of watermarks

* and thresholds.

*

* Locking rules:

*

* zone_start_pfn and spanned_pages are protected by span_seqlock.

* It is a seqlock because it has to be read outside of zone->lock,

* and it is done in the main allocator path. But, it is written

* quite infrequently.

*

* The span_seq lock is declared along with zone->lock because it is

* frequently read in proximity to zone->lock. It's good to

* give them a chance of being in the same cacheline.

*

* Write access to present_pages at runtime should be protected by

* mem_hotplug_begin/end(). Any reader who can't tolerant drift of

* present_pages should get_online_mems() to get a stable value.

*

* Read access to managed_pages should be safe because it's unsigned

* long. Write access to zone->managed_pages and totalram_pages are

* protected by managed_page_count_lock at runtime. Idealy only

* adjust_managed_page_count() should be used instead of directly

* touching zone->managed_pages and totalram_pages.

*/

unsigned long managed_pages;

unsigned long spanned_pages;

unsigned long present_pages;

const char *name;

/*

* Number of MIGRATE_RESEVE page block. To maintain for just

* optimization. Protected by zone->lock.

*/

int nr_migrate_reserve_block;

#ifdef CONFIG_MEMORY_ISOLATION

/*

* Number of isolated pageblock. It is used to solve incorrect

* freepage counting problem due to racy retrieving migratetype

* of pageblock. Protected by zone->lock.

*/

unsigned long nr_isolate_pageblock;

#endif

#ifdef CONFIG_MEMORY_HOTPLUG

/* see spanned/present_pages for more description */

seqlock_t span_seqlock;

#endif

/*

* wait_table -- the array holding the hash table

* wait_table_hash_nr_entries -- the size of the hash table array

* wait_table_bits -- wait_table_size == (1 << wait_table_bits)

*

* The purpose of all these is to keep track of the people

* waiting for a page to become available and make them

* runnable again when possible. The trouble is that this

* consumes a lot of space, especially when so few things

* wait on pages at a given time. So instead of using

* per-page waitqueues, we use a waitqueue hash table.

*

* The bucket discipline is to sleep on the same queue when

* colliding and wake all in that wait queue when removing.

* When something wakes, it must check to be sure its page is

* truly available, a la thundering herd. The cost of a

* collision is great, but given the expected load of the

* table, they should be so rare as to be outweighed by the

* benefits from the saved space.

*

* __wait_on_page_locked() and unlock_page() in mm/filemap.c, are the

* primary users of these fields, and in mm/page_alloc.c

* free_area_init_core() performs the initialization of them.

*/

wait_queue_head_t *wait_table;

unsigned long wait_table_hash_nr_entries;

unsigned long wait_table_bits;

ZONE_PADDING(_pad1_)

/* Write-intensive fields used from the page allocator */

spinlock_t lock;

/* free areas of different sizes */

struct free_area free_area[MAX_ORDER];

/* zone flags, see below */

unsigned long flags;

ZONE_PADDING(_pad2_)

/* Write-intensive fields used by page reclaim */

/* Fields commonly accessed by the page reclaim scanner */

spinlock_t lru_lock;

struct lruvec lruvec;

/* Evictions & activations on the inactive file list */

atomic_long_t inactive_age;

/*

* When free pages are below this point, additional steps are taken

* when reading the number of free pages to avoid per-cpu counter

* drift allowing watermarks to be breached

*/

unsigned long percpu_drift_mark;

#if defined CONFIG_COMPACTION || defined CONFIG_CMA

/* pfn where compaction free scanner should start */

unsigned long compact_cached_free_pfn;

/* pfn where async and sync compaction migration scanner should start */

unsigned long compact_cached_migrate_pfn[2];

#endif

#ifdef CONFIG_COMPACTION

/*

* On compaction failure, 1<<compact_defer_shift compactions

* are skipped before trying again. The number attempted since

* last failure is tracked with compact_considered.

*/

unsigned int compact_considered;

unsigned int compact_defer_shift;

int compact_order_failed;

#endif

#if defined CONFIG_COMPACTION || defined CONFIG_CMA

/* Set to true when the PG_migrate_skip bits should be cleared */

bool compact_blockskip_flush;

#endif

ZONE_PADDING(_pad3_)

/* Zone statistics */

atomic_long_t vm_stat[NR_VM_ZONE_STAT_ITEMS];

} ____cacheline_internodealigned_in_smp;

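struct zone is accessed very frequently, so the structure is required to be aligned to the L1 cache line.

In addition, ZONE_PADDING() places the two hot locks zone->lock and zone->lru_lock in different cache lines.

A memory node has only a handful of zones, so unlike struct page the size of struct zone is not a concern,

and ZONE_PADDING() can trade space for performance.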
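The effect of the padding can be illustrated with a toy structure. This sketch uses C11 `_Alignas` as a stand-in for the kernel's ZONE_PADDING() macro rather than the macro itself; the 64-byte cache line, `struct demo_zone`, and the helper functions are all assumptions made for illustration.

```c
#include <stddef.h>

#define CACHE_LINE 64 /* assumed cache-line size in bytes */

/* Toy zone: each hot lock is forced onto the start of its own cache
 * line, so CPUs contending on one lock do not bounce the line that
 * holds the other. */
struct demo_zone {
    unsigned long watermark[3];        /* read-mostly fields */

    _Alignas(CACHE_LINE) int lock;     /* hot: page allocator */
    unsigned long free_pages;

    _Alignas(CACHE_LINE) int lru_lock; /* hot: page reclaim */
    unsigned long nr_lru_pages;
};

/* Index of the cache line (relative to the struct start) holding lock. */
size_t demo_lock_line(void)
{
    return offsetof(struct demo_zone, lock) / CACHE_LINE;
}

/* Index of the cache line holding lru_lock. */
size_t demo_lru_lock_line(void)
{
    return offsetof(struct demo_zone, lru_lock) / CACHE_LINE;
}
```

The cost is visible in `sizeof(struct demo_zone)`, which grows to a multiple of the cache-line size; that is acceptable for a structure with only a few instances per node.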

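❑ watermark: at boot, three watermarks are computed for each zone: WMARK_MIN, WMARK_LOW, and WMARK_HIGH. They are used by the page allocator and by kswapd page reclaim.

❑ lowmem_reserve: memory reserved in the zone.

❑ zone_pgdat: points to the memory node.

❑ pageset: maintains a set of per-CPU pages to reduce spinlock contention.

❑ zone_start_pfn: page frame number of the first page in the zone.

❑ managed_pages: number of pages in the zone managed by the buddy system.

❑ spanned_pages: number of pages spanned by the zone, including holes.

❑ present_pages: number of pages physically present in the zone. On some architectures it equals spanned_pages.

❑ free_area: array that manages free areas, including the free lists.

❑ lock: spinlock that protects the zone against concurrent access.

❑ lru_lock: spinlock that protects the zone's LRU lists against concurrent access.

❑ lruvec: the set of LRU lists.

❑ vm_stat: zone statistics counters.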

Typically the kernel's zones are ZONE_DMA, ZONE_DMA32, ZONE_NORMAL, and ZONE_HIGHMEM.

The zone types are defined in include/linux/mmzone.h.

enum zone_type {

#ifdef CONFIG_ZONE_DMA

/*

* ZONE_DMA is used when there are devices that are not able

* to do DMA to all of addressable memory (ZONE_NORMAL). Then we

* carve out the portion of memory that is needed for these devices.

* The range is arch specific.

*

* Some examples

*

* Architecture Limit

* ---------------------------

* parisc, ia64, sparc <4G

* s390 <2G

* arm Various

* alpha Unlimited or 0-16MB.

*

* i386, x86_64 and multiple other arches

* <16M.

*/

ZONE_DMA,

#endif

#ifdef CONFIG_ZONE_DMA32

/*

* x86_64 needs two ZONE_DMAs because it supports devices that are

* only able to do DMA to the lower 16M but also 32 bit devices that

* can only do DMA areas below 4G.

*/

ZONE_DMA32,

#endif

/*

* Normal addressable memory is in ZONE_NORMAL. DMA operations can be

* performed on pages in ZONE_NORMAL if the DMA devices support

* transfers to all addressable memory.

*/

ZONE_NORMAL,

#ifdef CONFIG_HIGHMEM

/*

* A memory area that is only addressable by the kernel through

* mapping portions into its own address space. This is for example

* used by i386 to allow the kernel to address the memory beyond

* 900MB. The kernel will set up special mappings (page

* table entries on i386) for each page that the kernel needs to

* access.

*/

ZONE_HIGHMEM,

#endif

ZONE_MOVABLE,

__MAX_NR_ZONES

};

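Zone initialization is driven from bootmem_init(), which first has to determine the range of each zone.

find_limits() computes three values: min_low_pfn, max_low_pfn, and max_pfn.

min_low_pfn (0x60000) is the page frame number of the start of the memory block. max_low_pfn (0x8f800) is the page frame number at which the normal zone ends,

and it is derived from arm_lowmem_limit.

max_pfn (0xa0000) is the page frame number of the end of the memory block.

Linux memory zone layout

The Linux memory layout is as follows: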

|=======================================|

| Kernel space : 3G - 4G |

| |

| |-------------------------------| |

| | ZONE_HIGHMEM | |

| |-------------------------------| |

| | ZONE_NORMAL | |

| |-------------------------------| |

| | ZONE_DMA32 | |

| |-------------------------------| |

| | ZONE_DMA | |

| |-------------------------------| |

|=======================================|

| user space : 0G - 3G |

| |

| |-------------------------------| |

| | stack | |

| |-------------------------------| |

| | heap (mmap) | |

| |-------------------------------| |

| | data segment (uninitialized) | |

| |-------------------------------| |

| | data segment (initialized) | |

| |-------------------------------| |

| | code segment | |

| |-------------------------------| |

|=======================================|

If ZONE_NORMAL runs from 0xc0000000 to 0xef800000, how many pages does this address range contain?

(0xef800000 - 0xc0000000) / 4096 = 194560

So ZONE_NORMAL has 194560 pages.
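The page frame numbers and the page count above follow directly from shifting by the page size. A minimal sketch, assuming 4 KB pages; `phys_to_pfn()` and `pages_between()` are hypothetical helper names, not kernel functions:

```c
#include <stdint.h>

#define PAGE_SHIFT 12 /* 4 KB pages, assumed */

/* Page frame number of a physical address. */
uint32_t phys_to_pfn(uint32_t paddr)
{
    return paddr >> PAGE_SHIFT;
}

/* Number of pages in the half-open physical range [start, end). */
uint32_t pages_between(uint32_t start, uint32_t end)
{
    return phys_to_pfn(end) - phys_to_pfn(start);
}
```

For the Vexpress numbers, `phys_to_pfn(0x60000000)` is 0x60000 and `pages_between(0x60000000, 0x8f800000)` is 194560, the same count obtained from the virtual addresses. The range from 0x8f800000 up to the end of memory at 0xa0000000 holds another 67584 pages.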

Zones are initialized in free_area_init_core():

start_kernel() --> setup_arch() --> paging_init() --> bootmem_init()

--> zone_sizes_init() --> free_area_init_node() --> free_area_init_core()

static void __paginginit free_area_init_core(struct pglist_data *pgdat, unsigned long node_start_pfn, unsigned long node_end_pfn, unsigned long *zones_size, unsigned long *zholes_size)

{

enum zone_type j;

int nid = pgdat->node_id;

unsigned long zone_start_pfn = pgdat->node_start_pfn;

int ret;

pgdat_resize_init(pgdat);

init_waitqueue_head(&pgdat->kswapd_wait);

init_waitqueue_head(&pgdat->pfmemalloc_wait);

pgdat_page_ext_init(pgdat);

for( j = 0; j<MAX_NR_ZONES; j++)

{

struct zone *zone = pgdat->node_zones + j;

unsigned long size, realsize, freesize, memmap_pages;

size = zone_spanned_pages_in_node(nid, j, node_start_pfn, node_end_pfn, zones_size);

realsize = freesize = size - zone_absent_pages_in_node(nid, j, node_start_pfn, node_end_pfn, zholes_size);

memmap_pages = calc_memmap_size(size, realsize);

if(!is_highmem_idx(j)){

if(freesize >= memmap_pages)

freesize -= memmap_pages;

}

// account for reserved pages

if( j == 0 && freesize > dma_reserve ){

freesize -= dma_reserve;

}

if( !is_highmem_idx(j) )

nr_kernel_pages += freesize;

// charge for highmem memmap if there are enough kernel pages

else if(nr_kernel_pages > memmap_pages * 2)

nr_kernel_pages -= memmap_pages;

nr_all_pages += freesize;

zone->spanned_pages = size;

zone->present_pages = realsize;

zone->managed_pages = is_highmem_idx(j) ? realsize : freesize;

zone->name = zone_names[j];

spin_lock_init(&zone->lock);

spin_lock_init(&zone->lru_lock);

zone_seqlock_init(zone);

zone->zone_pgdat = pgdat;

zone_pcp_init(zone);

// for bootup initialized

mod_zone_page_state(zone, NR_ALLOC_BATCH,zone->managed_pages);

lruvec_init(&zone->lruvec);

set_pageblock_order();

setup_usemap(pgdat, zone, zone_start_pfn, size);

ret = init_currently_empty_zone(zone, zone_start_pfn, size, MEMMAP_EARLY);

BUG_ON(ret);

memmap_init(size, nid, j, zone_start_pfn);

zone_start_pfn += size;

}

}

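The system also has a zonelist data structure; the buddy allocator starts allocating memory from a zonelist.

A zonelist contains an array of zoneref entries, each of which has a member that points to a struct zone.

The zone referenced by the first zoneref is the page allocator's first candidate. The remaining entries are considered only after allocation from the first candidate fails, in decreasing order of priority.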
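The fallback order can be sketched as a simple walk over the array. The types and sample data below are hypothetical, and the check is deliberately reduced to a free-page count; the real allocator's per-zone checks are more involved.

```c
#include <stddef.h>

/* Hypothetical, heavily reduced stand-ins for struct zone and
 * struct zoneref. */
struct demo_zone {
    const char *name;
    unsigned long free_pages;
};

struct demo_zoneref {
    struct demo_zone *zone; /* each entry points to a zone */
};

/* Sample zonelist: highest-priority candidate first. */
static struct demo_zone sample_highmem = { "HighMem", 2 };
static struct demo_zone sample_normal = { "Normal", 100 };
static struct demo_zoneref sample_zonelist[] = {
    { &sample_highmem },
    { &sample_normal },
};

/* Walk the entries in priority order and return the first zone that
 * can satisfy the request, or NULL if none can. */
struct demo_zone *first_zone_with_room(struct demo_zoneref *refs,
                                       size_t nr_refs,
                                       unsigned long nr_pages)
{
    for (size_t i = 0; i < nr_refs; i++) {
        if (refs[i].zone != NULL && refs[i].zone->free_pages >= nr_pages)
            return refs[i].zone;
    }
    return NULL;
}
```

With the sample data, a 1-page request is served from the first entry, an 8-page request falls back to the second entry, and a 1000-page request fails.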

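There is also a very important global variable, mem_map. It is an array of struct page that makes it fast to translate between virtual and physical addresses (and their struct page) within the kernel's linear mapping. It is initialized in free_area_init_node() -> alloc_node_mem_map().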

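2.1.5 Address Space Split

On 32-bit Linux, the total usable virtual address space is 4 GB. It is usually split 3:1 between user space and kernel space, but a 2:2 split is also possible.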

// Kernel/arch/arm/Kconfig

choice

prompt "Memory split"

depends on MMU

default VMSPLIT_3G

help

Select the desired split between kernel and user memory.

If you are not absolutely sure what you are doing, leave this option alone!

config VMSPLIT_3G

bool "3G/1G user/kernel split"

config VMSPLIT_2G

bool "2G/2G user/kernel split"

config VMSPLIT_1G

bool "1G/3G user/kernel split"

endchoice

config PAGE_OFFSET

hex

default PHYS_OFFSET if !MMU

default 0x40000000 if VMSPLIT_1G

default 0x80000000 if VMSPLIT_2G

default 0xC0000000

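ARM Linux has a "Memory split" configuration option that adjusts how the address space is divided between kernel space and user space.

The usual choice is VMSPLIT_3G: user space is 3 GB and kernel space is 1 GB, so PAGE_OFFSET, the offset at which kernel space begins, equals 0xC000_0000.

With VMSPLIT_2G, kernel space and user space are 2 GB each and PAGE_OFFSET equals 0x8000_0000.

The kernel commonly uses the PAGE_OFFSET macro to convert between virtual and physical addresses in the linear mapping.

For a linearly mapped address, the physical address equals the virtual address vaddr minus PAGE_OFFSET (0xC000_0000) plus PHYS_OFFSET (which is 0 on some ARM systems).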

// \kernel\msm-3.18\arch\arm\include\asm\memory.h

static inline phys_addr_t __virt_to_phys(unsigned long x)

{

return (phys_addr_t) x - PAGE_OFFSET + PHYS_OFFSET;

}

static inline unsigned long __phys_to_virt(phys_addr_t x)

{

return x - PHYS_OFFSET + PAGE_OFFSET;

}
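A worked example of the two conversions, written as a standalone sketch. The function names are hypothetical, PAGE_OFFSET is the VMSPLIT_3G value, and PHYS_OFFSET is assumed to be the Vexpress RAM base 0x60000000 from section 2.1.2.

```c
#include <stdint.h>

#define PAGE_OFFSET 0xc0000000u /* VMSPLIT_3G */
#define PHYS_OFFSET 0x60000000u /* assumed: Vexpress RAM base */

/* Linear-map virtual address to physical address. */
uint32_t linear_virt_to_phys(uint32_t vaddr)
{
    return vaddr - PAGE_OFFSET + PHYS_OFFSET;
}

/* Physical address to linear-map virtual address. */
uint32_t linear_phys_to_virt(uint32_t paddr)
{
    return paddr - PHYS_OFFSET + PAGE_OFFSET;
}
```

For example, `linear_virt_to_phys(0xc0800000)` returns 0x60800000 and `linear_phys_to_virt(0x8f800000)` returns 0xef800000, the boundaries of the second range mapped by map_lowmem().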

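2.1.6 Physical Memory Initialization

At boot, once the kernel knows the size of physical memory (DDR) and has worked out the start of high memory and the kernel-space memory layout,

the physical pages have to be added to the buddy system. How does that happen?

The buddy system is one of the most commonly used dynamic memory management methods in operating systems: when a user requests memory it hands out a block of suitable size, and when the user frees the block it takes it back.

In the buddy system, a memory block consists of 2^order pages. The maximum order in the Linux kernel is given by MAX_ORDER, which is usually 11, so all free pages are grouped into 11 block lists whose blocks contain 1, 2, 4, 8, 16, 32, ..., 1024 contiguous pages respectively. 1024 pages correspond to 4 MB of contiguous physical memory.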
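The relation between order, pages, and bytes can be written down as a small sketch. MAX_ORDER is 11 as stated above and 4 KB pages are assumed; the helper names are hypothetical and are not kernel functions.

```c
#define MAX_ORDER 11
#define PAGE_SIZE 4096ul /* 4 KB pages, assumed */

/* Number of contiguous pages in a block of the given order. */
unsigned long order_to_pages(unsigned int order)
{
    return 1ul << order;
}

/* Size in bytes of a block of the given order. */
unsigned long order_to_bytes(unsigned int order)
{
    return order_to_pages(order) * PAGE_SIZE;
}

/* Smallest order whose block holds at least nr_pages pages, or -1 if
 * the request is larger than the biggest block (order MAX_ORDER - 1). */
int pages_to_order(unsigned long nr_pages)
{
    for (int order = 0; order < MAX_ORDER; order++) {
        if (order_to_pages((unsigned int)order) >= nr_pages)
            return order;
    }
    return -1;
}
```

`order_to_bytes(10)` is 4 MB, the largest block size mentioned above, and a 3-page request rounds up to order 2 (4 pages), which shows the internal fragmentation that power-of-two blocks can cause.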

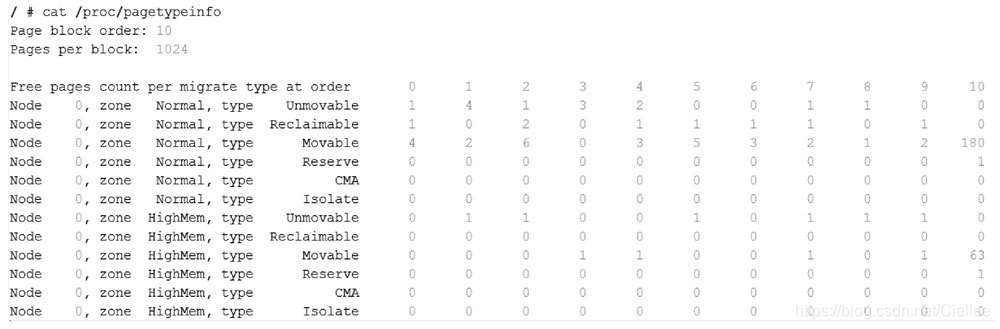

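The migrate types (MIGRATE_TYPES) include MIGRATE_UNMOVABLE, MIGRATE_RECLAIMABLE, MIGRATE_MOVABLE, MIGRATE_RESERVE, and a few others.

The current page allocation state can be read from /proc/pagetypeinfo.

❑ Most physical pages are on the MIGRATE_MOVABLE lists.

❑ At initialization, most physical pages are placed on the order-10 (2^10 pages) lists.

Each pageblock has a corresponding migrate type.

struct zone has a pointer member, pageblock_flags, which points to the memory that stores the migrate type of every pageblock.

The size of that memory is computed by usemap_size(); each pageblock uses 4 bits to store its migrate type.

The zone initialization function free_area_init_core() calls setup_usemap() to compute the size needed for pageblock_flags and to allocate the memory.

usemap_size() first works out how many pageblocks the zone has. Since each pageblock needs 4 bits for its migrate type, it can then compute the number of bytes required. The memory is then allocated with memblock_virt_alloc_try_nid_nopanic(), and zone->pageblock_flags is set to point to it.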
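The size calculation described above can be sketched as follows. This is an illustration modeled on the description, not the kernel function itself: the pageblock order of 10 is an assumption (MAX_ORDER - 1), the word size is passed in as a parameter so the result does not depend on the host, and `usemap_bytes()` and `round_up_to()` are hypothetical names.

```c
#include <assert.h>

#define PAGEBLOCK_ORDER    10 /* assumed: MAX_ORDER - 1 */
#define PAGEBLOCK_NR_PAGES (1ul << PAGEBLOCK_ORDER)
#define NR_PAGEBLOCK_BITS  4  /* bits per pageblock, as stated above */

static unsigned long round_up_to(unsigned long x, unsigned long multiple)
{
    return ((x + multiple - 1) / multiple) * multiple;
}

/* Bytes needed to store the migrate type of every pageblock in a zone. */
unsigned long usemap_bytes(unsigned long zone_start_pfn,
                           unsigned long zone_pages,
                           unsigned long bits_per_long)
{
    unsigned long bits;

    assert(bits_per_long != 0);

    /* Account for a zone that does not start on a pageblock boundary. */
    zone_pages += zone_start_pfn & (PAGEBLOCK_NR_PAGES - 1);

    /* Number of pageblocks, then 4 bits for each of them. */
    bits = round_up_to(zone_pages, PAGEBLOCK_NR_PAGES) >> PAGEBLOCK_ORDER;
    bits *= NR_PAGEBLOCK_BITS;

    /* Round up to whole machine words before converting to bytes. */
    bits = round_up_to(bits, bits_per_long);
    return bits / 8;
}
```

For the 194560-page normal zone starting at page frame 0x60000, there are 190 pageblocks, which need 760 bits; rounded up to whole 32-bit words this gives 768 bits, so `usemap_bytes(0x60000, 194560, 32)` returns 96.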