Background:

Three CentOS virtual machines run Hadoop. VM 1 is the NameNode host, and VMs 2 and 3 are DataNodes.

Symptom:

After running start-dfs.sh, jps shows NameNode, SecondaryNameNode and DataNode running on VM 1, and DataNode running on VMs 2 and 3.

From the Windows host, http://spark1:50070 cannot be opened.

Troubleshooting steps:

- On VM 1, check port 50070 with netstat -ano | grep 50070. The port is in the LISTEN state on 0.0.0.0 (all interfaces), so the web server started normally:

tcp 0 0 0.0.0.0:50070 0.0.0.0:* LISTEN off (0.00/0/0)
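The local address column is the part that matters: 0.0.0.0:50070 means the web server is bound to every interface, whereas 127.0.0.1:50070 would accept only local clients, and no firewall change would make it reachable from Windows. A minimal Python sketch of that check follows; it assumes the whitespace-separated netstat layout shown above, and parse_listen_line and exposed_externally are hypothetical helper names.

```python
from typing import Optional, Tuple

# Addresses that only accept connections from the same machine
LOOPBACK_ADDRESSES = {"127.0.0.1", "::1"}


def parse_listen_line(line: str) -> Optional[Tuple[str, int]]:
    """Return (bind_address, port) for a TCP LISTEN line of `netstat -ano`, else None."""
    fields = line.split()
    # Assumed layout: proto, recv-q, send-q, local address, foreign address, state, ...
    if len(fields) < 6 or not fields[0].startswith("tcp") or fields[5] != "LISTEN":
        return None
    # rpartition splits on the last colon, so IPv6 forms like ":::50070" give ("::", "50070")
    address, _, port = fields[3].rpartition(":")
    return address, int(port)


def exposed_externally(bind_address: str) -> bool:
    """Loopback-bound sockets serve only local clients; any other address (including 0.0.0.0) can serve remote ones."""
    return bind_address not in LOOPBACK_ADDRESSES and not bind_address.startswith("127.")
```

Applied to the netstat line above, parse_listen_line returns ("0.0.0.0", 50070), and exposed_externally("0.0.0.0") is True, which is consistent with the conclusion that the listener itself was not the problem.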

- On VM 1, fetching the page with curl returns a normal response:

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Strict//EN"
"http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd">
<html xmlns="http://www.w3.org/1999/xhtml">
<head>
<meta http-equiv="REFRESH" content="0;url=dfshealth.html" />
<title>Hadoop Administration</title>
</head>
</html>
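The response is only a stub page whose meta refresh points the browser to dfshealth.html, but receiving it at all proves the HTTP server answers locally. The same local check can be sketched in Python with urllib.request. fetch and refresh_target are hypothetical helper names, and the URL you pass (for example http://localhost:50070/ on VM 1) is an assumption.

```python
import re
import urllib.request
from typing import Optional

# Matches e.g. <meta http-equiv="REFRESH" content="0;url=dfshealth.html" />
REFRESH_RE = re.compile(
    r'<meta\s+http-equiv="refresh"\s+content="\d+;\s*url=([^"]+)"', re.IGNORECASE
)


def fetch(url: str, timeout: float = 5.0) -> str:
    """Fetch a URL (roughly what a plain `curl URL` does) and return the body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def refresh_target(html: str) -> Optional[str]:
    """Return the url= target of a <meta http-equiv="refresh"> tag, or None if absent."""
    match = REFRESH_RE.search(html)
    return match.group(1) if match else None
```

Given the response shown above, refresh_target(fetch("http://localhost:50070/")) on VM 1 would be expected to return "dfshealth.html". Note that urlopen follows HTTP 3xx redirects but, like curl, does not follow meta refresh tags.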

- Stop the firewall (the iptables service) and disable it at boot:

service iptables stop

chkconfig iptables off

- Access from Windows still fails, although the VM's IP answers ping.

- curl from the DataNode VMs 2 and 3 also fails, which suggests the firewall was not actually stopped.

- CentOS 7 uses firewalld rather than the iptables service, so check whether firewalld is running:

[root@spark1 local]# firewall-cmd --state

running

So the firewall was indeed the cause. Stop firewalld and prevent it from starting at boot:

systemctl stop firewalld.service

systemctl disable firewalld.service

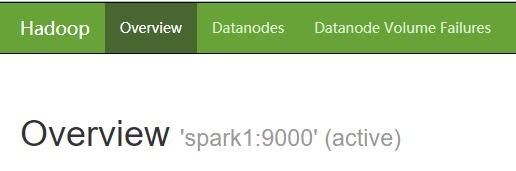
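To confirm that the two systemctl commands took effect, the firewall-cmd --state check can be re-run; after the stop it should no longer report "running". A small Python wrapper is sketched below. It assumes firewall-cmd is on PATH and returns None when it is not installed; firewalld_state is a hypothetical helper name. Disabling firewalld entirely is what fixed the problem here; a narrower alternative is to keep firewalld running and open only the web UI port with firewall-cmd --permanent --add-port=50070/tcp followed by firewall-cmd --reload.

```python
import subprocess
from typing import Optional


def firewalld_state(cmd: str = "firewall-cmd") -> Optional[str]:
    """Run `<cmd> --state` and return its message, e.g. "running" or "not running".

    Returns None if the command cannot be found. The `cmd` parameter exists only
    so a different binary path can be passed (an illustrative assumption).
    """
    try:
        result = subprocess.run(
            [cmd, "--state"], capture_output=True, text=True, check=False
        )
    except FileNotFoundError:
        return None  # firewall-cmd is not on PATH (e.g. firewalld not installed)
    # When firewalld is stopped the message may be written to stderr with a
    # non-zero exit code, so fall back to stderr if stdout is empty.
    return (result.stdout or result.stderr).strip()
```

On VM 1, firewalld_state() returned "running" before the fix according to the output above; after systemctl stop it would be expected to return "not running".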

- Access from Windows now works: the browser shows the Hadoop web UI.
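The fix can be confirmed, and the earlier failure reproduced, with a plain TCP connection test from any client (the Windows host, or VMs 2 and 3). Ping only exercises ICMP, which is why it succeeded even while TCP port 50070 was blocked. A minimal Python sketch follows; port_reachable is a hypothetical helper, and it assumes the name spark1 resolves on the client.

```python
import socket


def port_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened within `timeout` seconds."""
    try:
        # create_connection resolves the host name and tries each returned address
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, "No route to host" (e.g. when a firewall rejects
        # the packet), DNS failures and timeouts all end up here.
        return False
```

For example, port_reachable("spark1", 50070) run from VM 2 would be expected to return False while firewalld was running on VM 1 and True after it was stopped.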