本节基础知识Soft Value function基础和Soft Q Learning中Policy Improvement 证明

首先回顾一下Soft value function的定义:

Vsoffπ(s)≜log∫exp(Qsoftπ(s,a))da

假定

π(a∣s)=exp(Qsoftπ(s,a)−Vsoftπ(s)),我们有:

Qsoftπ(s,a)=r(s,a)+γEs′∼ps[H(π(⋅∣s′))+Ea′∼π(⋅∣s′)[Qsoftπ(s′,a′)]]=r(s,a)+γEs′∼ps[Vsoftπ(s′)]

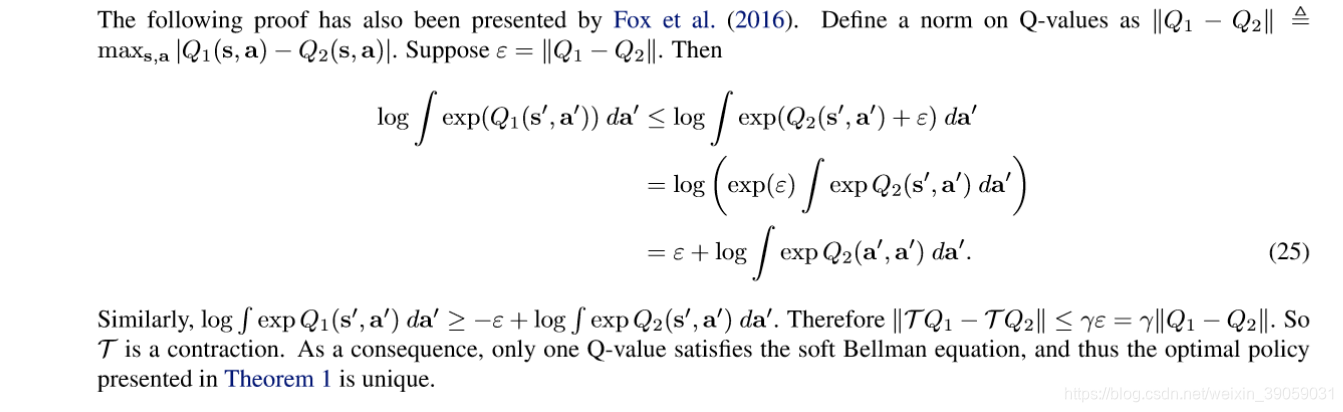

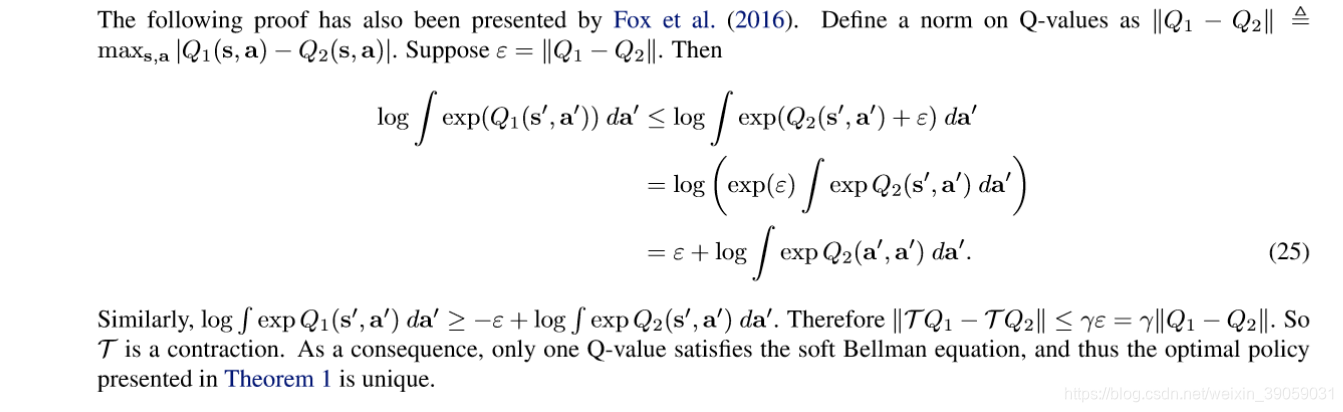

最后。我们定义soft value iteration operator

T :

TQ(s,a)≜r(s,a)+γEs′∼ps[log∫expQ(s′,a′)da′]

它是一个压缩映射里面的一种映射(contraction),我们就得证了。

具体参考论文:Reinforcement Learning with Deep Energy-Based Policies