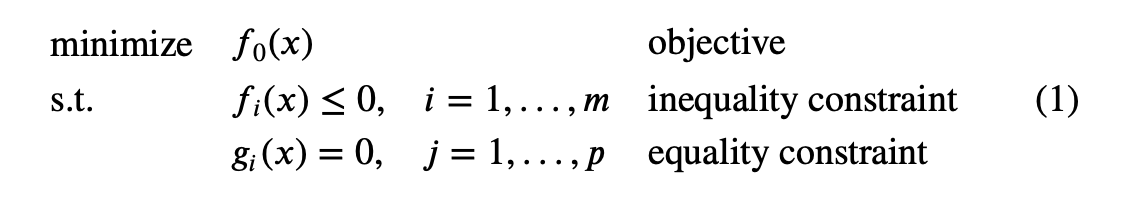

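Consider an optimization problem in standard form (we call this the primal problem):

$$
\begin{array}{ll}
\text{minimize} & f_0(x) \\
\text{subject to} & f_i(x) \le 0, \quad i = 1, \dots, m \\
& h_i(x) = 0, \quad i = 1, \dots, p
\end{array}
\tag{1}
$$

Here $x \in \mathbf{R}^n$ is the variable and $\mathcal{D}$ denotes the domain of the problem. We denote the optimal value of this problem by $p^\star$. We don’t assume the problem is convex.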

The Lagrange dual function

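We define the Lagrangian $L : \mathbf{R}^n \times \mathbf{R}^m \times \mathbf{R}^p \to \mathbf{R}$ associated with the problem (1) as

$$
L(x, \lambda, \nu) = f_0(x) + \sum_{i=1}^m \lambda_i f_i(x) + \sum_{i=1}^p \nu_i h_i(x).
$$

We call the vectors $\lambda \in \mathbf{R}^m$ and $\nu \in \mathbf{R}^p$ the dual variables or Lagrange multiplier vectors associated with the problem (1).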

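We define the Lagrange dual function (or just dual function) $g : \mathbf{R}^m \times \mathbf{R}^p \to \mathbf{R}$ as the minimum value of the Lagrangian over $x$: for $\lambda \in \mathbf{R}^m$, $\nu \in \mathbf{R}^p$,

$$
g(\lambda, \nu) = \inf_{x \in \mathcal{D}} L(x, \lambda, \nu) = \inf_{x \in \mathcal{D}} \left( f_0(x) + \sum_{i=1}^m \lambda_i f_i(x) + \sum_{i=1}^p \nu_i h_i(x) \right).
$$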

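Note that once we fix an $x$, the values $f_i(x)$ and $h_i(x)$ are constants, so the Lagrangian is an affine function of $(\lambda, \nu)$. The dual function is therefore the pointwise infimum of a family of affine functions of $(\lambda, \nu)$, which is concave even when the problem (1) is not convex.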
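The concavity claim can be checked numerically. The sketch below is only an illustration under stated assumptions: the nonconvex objective, the single constraint, and the restriction of the domain to a finite grid (`GRID`) are all hypothetical choices, not part of the notes. With a finite domain, the dual function is literally a minimum of finitely many affine functions of $\lambda$.

```python
# Hypothetical nonconvex problem, with the domain restricted to a finite grid:
#   minimize  x^4 - 3x^2   subject to  x - 0.5 <= 0
GRID = [i / 100.0 for i in range(-300, 301)]  # assumed domain D


def f0(x):
    return x**4 - 3.0 * x**2  # nonconvex objective


def f1(x):
    return x - 0.5  # inequality constraint f1(x) <= 0


def dual(lam):
    """g(lam) = min over x in D of f0(x) + lam * f1(x).

    For each fixed x the expression is affine in lam, so g is a
    pointwise minimum of affine functions.
    """
    return min(f0(x) + lam * f1(x) for x in GRID)


def is_midpoint_concave(g, points, tol=1e-9):
    """Check g((a + b) / 2) >= (g(a) + g(b)) / 2 for all pairs of points."""
    return all(
        g(0.5 * (a + b)) >= 0.5 * (g(a) + g(b)) - tol
        for a in points
        for b in points
    )


LAMBDAS = [0.5 * k for k in range(0, 21)]  # lam in [0, 10]
print(is_midpoint_concave(dual, LAMBDAS))
```

The midpoint test is a necessary condition for concavity sampled at a few points, not a proof; the proof is the pointwise-infimum argument above.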

Lower bound property

The dual function yields lower bounds on the optimal value $p^\star$ of the problem (1): for any $\lambda \succeq 0$ and any $\nu$ we have

$$
g(\lambda, \nu) \le p^\star.
$$

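Suppose $\tilde{x}$ is a feasible point for the problem (1), i.e., $f_i(\tilde{x}) \le 0$ and $h_i(\tilde{x}) = 0$, and $\lambda \succeq 0$. Then we have

$$
\sum_{i=1}^m \lambda_i f_i(\tilde{x}) + \sum_{i=1}^p \nu_i h_i(\tilde{x}) \le 0,
$$

since each term in the first sum is nonpositive and each term in the second sum is zero. Hence

$$
g(\lambda, \nu) = \inf_{x \in \mathcal{D}} L(x, \lambda, \nu) \le L(\tilde{x}, \lambda, \nu) = f_0(\tilde{x}) + \sum_{i=1}^m \lambda_i f_i(\tilde{x}) + \sum_{i=1}^p \nu_i h_i(\tilde{x}) \le f_0(\tilde{x}).
$$

Since $g(\lambda, \nu) \le f_0(\tilde{x})$ holds for every feasible point $\tilde{x}$, the inequality $g(\lambda, \nu) \le p^\star$ follows. The inequality holds, but is vacuous, when $g(\lambda, \nu) = -\infty$. The dual function gives a nontrivial lower bound on $p^\star$ only when $\lambda \succeq 0$ and $(\lambda, \nu) \in \operatorname{dom} g$, i.e., $g(\lambda, \nu) > -\infty$. We refer to a pair $(\lambda, \nu)$ with $\lambda \succeq 0$ and $(\lambda, \nu) \in \operatorname{dom} g$ as dual feasible.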
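To make the lower bound concrete, here is a minimal sketch on a one-dimensional example. The problem (minimize $x^2 + 1$ subject to $(x-2)(x-4) \le 0$) is an assumption chosen for illustration; its feasible set is $[2, 4]$, so $p^\star = 5$. The closed-form minimizer of the Lagrangian used in `dual` follows from setting its derivative in $x$ to zero.

```python
# Example problem (an assumption for illustration, not from the notes):
#   minimize  x^2 + 1   subject to  (x - 2)(x - 4) <= 0
# The feasible set is [2, 4], so p* = 5, attained at x = 2.
P_STAR = 5.0


def lagrangian(x, lam):
    return x**2 + 1.0 + lam * (x - 2.0) * (x - 4.0)


def dual(lam):
    """g(lam) = inf_x L(x, lam) for lam >= 0.

    L is a convex quadratic in x with minimizer x = 3*lam / (1 + lam).
    """
    x_min = 3.0 * lam / (1.0 + lam)
    return lagrangian(x_min, lam)


# Every dual feasible lam gives a lower bound on p*.
for lam in [0.0, 0.5, 1.0, 2.0, 5.0]:
    print(lam, dual(lam), dual(lam) <= P_STAR)
```

In this example the bound is tight at $\lambda = 2$, where $g(2) = 5 = p^\star$; for other values of $\lambda$ it is strictly below $p^\star$.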

Derive an analytical expression for the Lagrange dual function

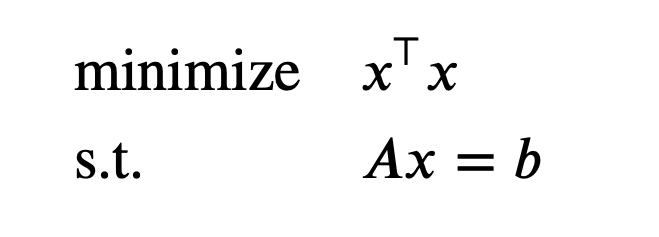

Practice problem 1: Least-squares solution of linear equations

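For the problem of minimizing $x^T x$ subject to $Ax = b$, the Lagrangian is $L(x, \nu) = x^T x + \nu^T (Ax - b)$. The dual function is given by $g(\nu) = \inf_x L(x, \nu)$. Since $L(x, \nu)$ is a convex quadratic function of $x$, we can find the minimizing $x$ from the optimality condition

$$
\nabla_x L(x, \nu) = 2x + A^T \nu = 0,
$$

which yields $x = -(1/2) A^T \nu$. Therefore the dual function is

$$
g(\nu) = L\left(-(1/2) A^T \nu, \nu\right) = -(1/4)\, \nu^T A A^T \nu - b^T \nu.
$$

Therefore, $p^\star \ge -(1/4)\, \nu^T A A^T \nu - b^T \nu$ for every $\nu$. The next step is to maximize $g(\nu)$ over $\nu$.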
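The maximization step can be sketched numerically. Setting the gradient of the dual function to zero gives $A A^T \nu = -2b$, which has a unique solution when $A$ has full row rank (an assumption made here). The data `A` and `b` below are hypothetical, and the tiny helper functions stand in for a linear algebra library.

```python
# Hypothetical data for: minimize x^T x subject to A x = b.
# A is assumed to have full row rank, so A A^T is invertible.
A = [[1.0, 0.0, 1.0],
     [0.0, 2.0, 1.0]]
b = [2.0, 3.0]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(M, v):
    return [dot(row, v) for row in M]


def transpose(M):
    return [list(col) for col in zip(*M)]


def dual(nu):
    """g(nu) = -(1/4) nu^T A A^T nu - b^T nu."""
    At_nu = matvec(transpose(A), nu)
    return -0.25 * dot(At_nu, At_nu) - dot(b, nu)


# Maximize g: the gradient -(1/2) A A^T nu - b vanishes when A A^T nu = -2 b.
# Solve this 2x2 system with Cramer's rule.
G = [[dot(A[i], A[j]) for j in range(2)] for i in range(2)]
rhs = [-2.0 * b[0], -2.0 * b[1]]
det = G[0][0] * G[1][1] - G[0][1] * G[1][0]
nu_star = [
    (rhs[0] * G[1][1] - G[0][1] * rhs[1]) / det,
    (G[0][0] * rhs[1] - G[1][0] * rhs[0]) / det,
]

# Recover the primal point from the minimizer of the Lagrangian.
x_star = [-0.5 * v for v in matvec(transpose(A), nu_star)]

p_star = dot(x_star, x_star)  # primal objective at x_star
d_star = dual(nu_star)        # best lower bound from the dual
print(p_star, d_star, matvec(A, x_star))
```

For this data the recovered point satisfies $Ax = b$ and the two values agree up to rounding, so the best lower bound is tight here.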

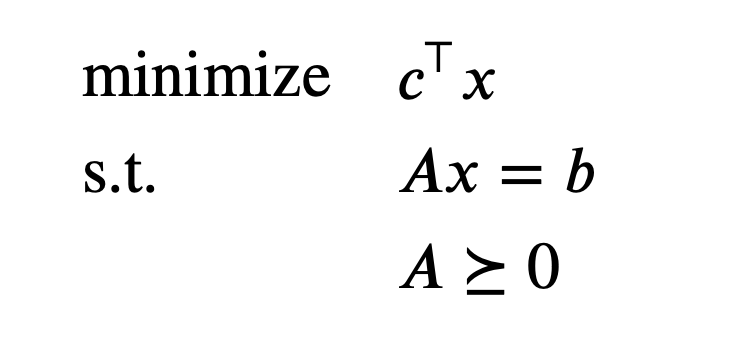

Practice problem 2: Standard form Linear Programming

The Lagrangian is

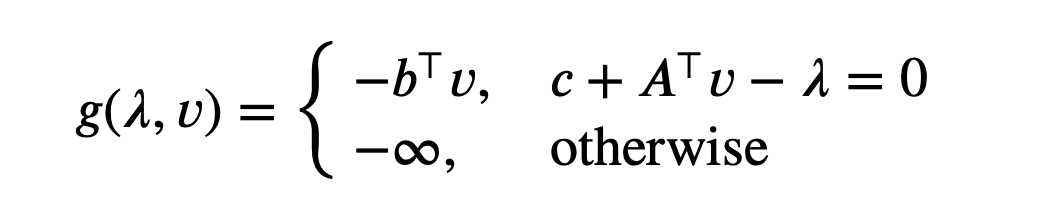

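The dual function is

$$
g(\lambda, \nu) = \inf_x L(x, \lambda, \nu) = -b^T \nu + \inf_x \, (c + A^T \nu - \lambda)^T x.
$$

We see that $L(x, \lambda, \nu)$ is an affine function of $x$, and a linear function is bounded below only when it is identically zero. Thus $g(\lambda, \nu) = -\infty$ except when $c + A^T \nu - \lambda = 0$. Therefore,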

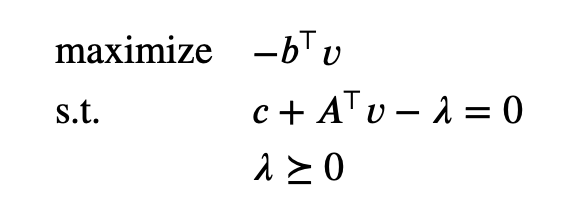

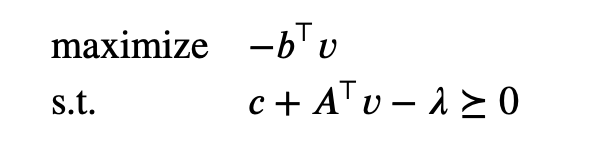

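The lower bound property is nontrivial only when $\lambda$ and $\nu$ satisfy $\lambda \succeq 0$ and $A^T \nu - \lambda + c = 0$. When this occurs, $-b^T \nu$ is a lower bound on the optimal value of the LP. We can form an equivalent dual problem by making these equality constraints explicit:

$$
\begin{array}{ll}
\text{maximize} & -b^T \nu \\
\text{subject to} & A^T \nu - \lambda + c = 0 \\
& \lambda \succeq 0.
\end{array}
$$

This problem, in turn, can be expressed as

$$
\begin{array}{ll}
\text{maximize} & -b^T \nu \\
\text{subject to} & A^T \nu + c \succeq 0.
\end{array}
$$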
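A small numerical sketch of the LP dual function follows. The LP data (`A`, `b`, `c`) and the helper `multipliers_for` are hypothetical and chosen only so that the answer can be checked by hand: the primal optimum is $x = (1, 0)$ with value 1.

```python
# Hypothetical standard-form LP: minimize c^T x subject to A x = b, x >= 0.
#   minimize x1 + 2*x2  subject to  x1 + x2 = 1,  x >= 0   (optimal value 1)
A = [[1.0, 1.0]]
b = [1.0]
c = [1.0, 2.0]


def multipliers_for(nu):
    """Return lam = A^T nu + c, the only lam for which g(lam, nu) is finite."""
    return [
        sum(A[i][j] * nu[i] for i in range(len(b))) + c[j]
        for j in range(len(c))
    ]


def dual(lam, nu, tol=1e-12):
    """g(lam, nu) = -b^T nu if A^T nu - lam + c = 0, otherwise -infinity."""
    residual = [m - l for m, l in zip(multipliers_for(nu), lam)]
    if all(abs(r) <= tol for r in residual):
        return -sum(bi * ni for bi, ni in zip(b, nu))
    return float("-inf")


x_feasible = [1.0, 0.0]
primal_value = sum(ci * xi for ci, xi in zip(c, x_feasible))

for nu in ([-0.5], [-1.0]):
    lam = multipliers_for(nu)
    is_dual_feasible = all(l >= 0.0 for l in lam)
    print(nu, lam, is_dual_feasible, dual(lam, nu), primal_value)

# A pair that violates the equality constraint gives the vacuous bound.
print(dual([0.0, 0.0], [-1.0]))
```

Both dual feasible pairs give values no larger than the primal value, and $\nu = -1$ attains it, which matches the dual LP with the single constraint $A^T \nu + c \succeq 0$.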

The Lagrange dual problem

For each pair $(\lambda, \nu)$ with $\lambda \succeq 0$, the Lagrange dual function gives us a lower bound on the optimal value $p^\star$ of the optimization problem (1). Thus we have a lower bound that depends on some parameters $\lambda, \nu$.

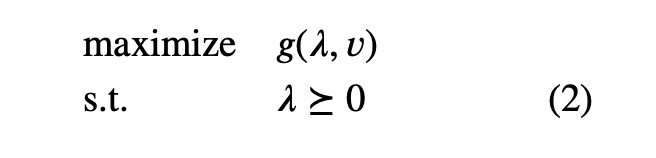

This leads to the optimization problem

$$
\begin{array}{ll}
\text{maximize} & g(\lambda, \nu) \\
\text{subject to} & \lambda \succeq 0.
\end{array}
\tag{2}
$$

This problem is called the Lagrange dual problem associated with the problem (1). In this context the original problem (1) is sometimes called the primal problem. We refer to $(\lambda^\star, \nu^\star)$ as dual optimal or optimal Lagrange multipliers if they are optimal for the problem (2). The Lagrange dual problem (2) is a convex optimization problem, since the objective to be maximized is concave and the constraint is convex. This is the case whether or not the primal problem (1) is convex.

Note: the dual problem is always convex.

Weak/Strong duality

The optimal value of the Lagrange dual problem, which we denote $d^\star$, is, by definition, the best lower bound on $p^\star$ that can be obtained from the Lagrange dual function. The inequality

$$
d^\star \le p^\star
$$

holds even if the original problem is not convex. This property is called weak duality.

We refer to the difference $p^\star - d^\star$ as the optimal duality gap of the original problem. Note that the optimal duality gap is always nonnegative.

We say that strong duality holds if

$$
d^\star = p^\star.
$$

Note that strong duality does not hold in general. But if the primal problem (1) is convex, i.e., $f_0, \dots, f_m$ are convex and the equality constraints are affine ($Ax = b$), we usually (but not always) have strong duality.

Slater’s condition

Slater’s condition: There exists an $x \in \operatorname{relint} \mathcal{D}$ such that

$$
f_i(x) < 0, \quad i = 1, \dots, m, \qquad Ax = b.
$$

Such a point is called strictly feasible.

Slater’s theorem: If Slater’s condition holds for a convex problem, then strong duality holds.

Complementary slackness

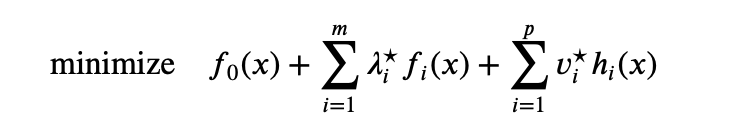

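Suppose strong duality holds. Let $x^\star$ be a primal optimal point and $(\lambda^\star, \nu^\star)$ be a dual optimal point. This means that

$$
\begin{aligned}
f_0(x^\star) &= g(\lambda^\star, \nu^\star) \\
&= \inf_x \left( f_0(x) + \sum_{i=1}^m \lambda_i^\star f_i(x) + \sum_{i=1}^p \nu_i^\star h_i(x) \right) \\
&\le f_0(x^\star) + \sum_{i=1}^m \lambda_i^\star f_i(x^\star) + \sum_{i=1}^p \nu_i^\star h_i(x^\star) \\
&\le f_0(x^\star).
\end{aligned}
$$

We conclude that the two inequalities in this chain hold with equality. Since the inequality in the third line is an equality, we conclude that $x^\star$ minimizes $L(x, \lambda^\star, \nu^\star)$ over $x$.

Another important conclusion is

$$
\sum_{i=1}^m \lambda_i^\star f_i(x^\star) = 0.
$$

Since each term in this sum is nonpositive, we conclude that $\lambda_i^\star f_i(x^\star) = 0$ for $i = 1, \dots, m$. This condition is known as complementary slackness.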
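As a worked illustration of complementary slackness (a toy problem chosen here, not taken from the notes), consider minimizing $(x - 2)^2$ subject to a single constraint $f_1(x) = x - a \le 0$, for two hypothetical values of $a$. The multiplier in each case comes from requiring the derivative of the Lagrangian, $2(x - 2) + \lambda$, to vanish at the optimum.

$$
\begin{array}{llll}
a = 1: & x^\star = 1, & f_1(x^\star) = 0 \ \text{(active)}, & \lambda^\star = 2, \\
a = 3: & x^\star = 2, & f_1(x^\star) = -1 \ \text{(inactive)}, & \lambda^\star = 0.
\end{array}
$$

In both cases $\lambda^\star f_1(x^\star) = 0$: the multiplier is positive only when the constraint is active, and an inactive constraint forces the multiplier to zero.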

KKT optimality conditions

We now assume that the functions $f_0, \dots, f_m, h_1, \dots, h_p$ are differentiable, but we make no assumptions yet about convexity.

KKT conditions for nonconvex problems:

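Suppose strong duality holds. Let $x^\star$ be a primal optimal point and $(\lambda^\star, \nu^\star)$ be a dual optimal point. Since $x^\star$ minimizes $L(x, \lambda^\star, \nu^\star)$ over $x$, it follows that its gradient must vanish at $x^\star$, i.e.,

$$
\nabla f_0(x^\star) + \sum_{i=1}^m \lambda_i^\star \nabla f_i(x^\star) + \sum_{i=1}^p \nu_i^\star \nabla h_i(x^\star) = 0.
$$

The KKT conditions are the following:

$$
\begin{aligned}
f_i(x^\star) &\le 0, \quad i = 1, \dots, m \\
h_i(x^\star) &= 0, \quad i = 1, \dots, p \\
\lambda_i^\star &\ge 0, \quad i = 1, \dots, m \\
\lambda_i^\star f_i(x^\star) &= 0, \quad i = 1, \dots, m \\
\nabla f_0(x^\star) + \sum_{i=1}^m \lambda_i^\star \nabla f_i(x^\star) + \sum_{i=1}^p \nu_i^\star \nabla h_i(x^\star) &= 0.
\end{aligned}
$$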

For any optimization problem with differentiable objective and constraint functions for which strong duality obtains, any pair of primal and dual optimal points must satisfy the KKT conditions.

KKT conditions for convex problems:

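When the primal problem is convex, the KKT conditions are also sufficient for the points to be primal and dual optimal. That is, if $f_i$ are convex and $h_i$ are affine, and $\tilde{x}, \tilde{\lambda}, \tilde{\nu}$ are any points that satisfy the KKT conditions

$$
\begin{aligned}
f_i(\tilde{x}) &\le 0, \quad i = 1, \dots, m \\
h_i(\tilde{x}) &= 0, \quad i = 1, \dots, p \\
\tilde{\lambda}_i &\ge 0, \quad i = 1, \dots, m \\
\tilde{\lambda}_i f_i(\tilde{x}) &= 0, \quad i = 1, \dots, m \\
\nabla f_0(\tilde{x}) + \sum_{i=1}^m \tilde{\lambda}_i \nabla f_i(\tilde{x}) + \sum_{i=1}^p \tilde{\nu}_i \nabla h_i(\tilde{x}) &= 0,
\end{aligned}
$$

then $\tilde{x}$ and $(\tilde{\lambda}, \tilde{\nu})$ are primal and dual optimal, with zero duality gap. To see this, note that the first two conditions state that $\tilde{x}$ is primal feasible. Since $\tilde{\lambda}_i \ge 0$, $L(x, \tilde{\lambda}, \tilde{\nu})$ is convex in $x$; the last KKT condition states that its gradient with respect to $x$ vanishes at $x = \tilde{x}$, so it follows that $\tilde{x}$ minimizes $L(x, \tilde{\lambda}, \tilde{\nu})$ over $x$. From this we conclude that

$$
\begin{aligned}
g(\tilde{\lambda}, \tilde{\nu}) &= L(\tilde{x}, \tilde{\lambda}, \tilde{\nu}) \\
&= f_0(\tilde{x}) + \sum_{i=1}^m \tilde{\lambda}_i f_i(\tilde{x}) + \sum_{i=1}^p \tilde{\nu}_i h_i(\tilde{x}) \\
&= f_0(\tilde{x}),
\end{aligned}
$$

where the last line uses $h_i(\tilde{x}) = 0$ and $\tilde{\lambda}_i f_i(\tilde{x}) = 0$. This shows that $\tilde{x}$ and $(\tilde{\lambda}, \tilde{\nu})$ have zero duality gap, and therefore are primal and dual optimal.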

We conclude the following:

- For any convex optimization problem with differentiable objective and constraint functions, any points that satisfy the KKT conditions are primal and dual optimal, and have zero duality gap.

- If a convex optimization problem with differentiable objective and constraint functions satisfies Slater’s condition, then the KKT conditions provide necessary and sufficient conditions for optimality: Slater’s condition implies that the optimal duality gap is zero and the dual optimum is attained, so $x$ is optimal if and only if there are $(\lambda, \nu)$ that, together with $x$, satisfy the KKT conditions.
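The conclusions above suggest a simple numerical check: given a candidate pair, evaluate how far it is from satisfying each KKT condition. The sketch below does this for a hypothetical convex problem (minimize $x^2 + 1$ subject to $(x-2)(x-4) \le 0$), which satisfies Slater’s condition because $x = 3$ is strictly feasible. The function names and the tolerance are illustrative choices.

```python
# Hypothetical convex problem with one inequality constraint:
#   minimize  x^2 + 1   subject to  f1(x) = (x - 2)(x - 4) <= 0


def kkt_residuals(x, lam):
    """Return how far (x, lam) is from satisfying each KKT condition."""
    f1 = (x - 2.0) * (x - 4.0)
    # Gradient of the Lagrangian: d/dx [x^2 + 1 + lam * (x^2 - 6x + 8)].
    grad_L = 2.0 * x + lam * (2.0 * x - 6.0)
    return {
        "primal_feasibility": max(f1, 0.0),
        "dual_feasibility": max(-lam, 0.0),
        "complementary_slackness": abs(lam * f1),
        "stationarity": abs(grad_L),
    }


def satisfies_kkt(x, lam, tol=1e-9):
    return all(r <= tol for r in kkt_residuals(x, lam).values())


print(satisfies_kkt(2.0, 2.0))  # candidate primal-dual pair
print(satisfies_kkt(3.0, 0.0))  # strictly feasible, but not stationary
print(kkt_residuals(3.0, 0.0))
```

Because the problem is convex, a pair that passes all four checks is primal and dual optimal; a feasible point that fails stationarity for every admissible multiplier cannot be optimal.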

Solving the primal problem via the dual

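Note that if strong duality holds and a dual optimal solution $(\lambda^\star, \nu^\star)$ exists, then any primal optimal point is also a minimizer of $L(x, \lambda^\star, \nu^\star)$. This fact sometimes allows us to compute a primal optimal solution from a dual optimal solution.

More precisely, suppose we have strong duality and an optimal $(\lambda^\star, \nu^\star)$ is known. Suppose that the minimizer of $L(x, \lambda^\star, \nu^\star)$, i.e., the solution of

$$
\text{minimize} \quad f_0(x) + \sum_{i=1}^m \lambda_i^\star f_i(x) + \sum_{i=1}^p \nu_i^\star h_i(x),
$$

is unique. (For a convex problem this occurs, for example, if $L(x, \lambda^\star, \nu^\star)$ is a strictly convex function of $x$.) Then if the solution is primal feasible, it must be primal optimal; if it is not primal feasible, then no primal optimal point can exist, i.e., we can conclude that the primal optimum is not attained.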
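The procedure can be sketched end to end on a small example. Everything specific below is an assumption for illustration: the problem (minimize $x^2 + 1$ subject to $(x-2)(x-4) \le 0$), the search bracket $[0, 10]$ for $\lambda$, and the use of a ternary search, which works here because the dual function is concave in one variable.

```python
# Hypothetical problem: minimize x^2 + 1 subject to f1(x) = (x - 2)(x - 4) <= 0.


def f0(x):
    return x**2 + 1.0


def f1(x):
    return (x - 2.0) * (x - 4.0)


def argmin_lagrangian(lam):
    """Unique minimizer of L(x, lam) = f0(x) + lam * f1(x) for lam >= 0."""
    return 3.0 * lam / (1.0 + lam)


def dual(lam):
    x = argmin_lagrangian(lam)
    return f0(x) + lam * f1(x)


def maximize_concave(g, lo, hi, iters=200):
    """Ternary search for the maximizer of a concave function on [lo, hi]."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if g(m1) < g(m2):
            lo = m1
        else:
            hi = m2
    return 0.5 * (lo + hi)


# Step 1: solve the dual problem (the bracket [0, 10] is an assumption).
lam_star = maximize_concave(dual, 0.0, 10.0)

# Step 2: minimize the Lagrangian at lam_star to get a candidate primal point.
x_star = argmin_lagrangian(lam_star)

# Step 3: check primal feasibility, up to a small numerical tolerance.
is_feasible = f1(x_star) <= 1e-6

print(lam_star, x_star, is_feasible, f0(x_star), dual(lam_star))
```

The Lagrangian is strictly convex in $x$ for $\lambda \ge 0$, so its minimizer is unique, and the recovered point is feasible up to the tolerance. The search returns $\lambda^\star \approx 2$ and $x^\star \approx 2$, with primal and dual values both close to 5.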

Reference: Convex Optimization by Stephen Boyd and Lieven Vandenberghe.