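Contents

- I. Overview of the Pod manifest

- II. Checking the lab environment

- III. Creating a Pod manifest

- IV. Running multiple containers in a Pod

- V. Some images need a command in the manifest

- VI. Working with labels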

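I. Overview of the Pod manifest

A Pod manifest is a file that gathers everything a Pod should do, including the commands it runs. Applying the file carries out the corresponding deployment.

A Pod template is a Pod specification embedded in another object, such as a Replication Controller, Job, or DaemonSet. Controllers use Pod templates to create the actual Pods. The example below is a simple Pod manifest containing one container that prints a message.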

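apiVersion: v1

kind: Pod

metadata:

  name: myapp-pod

  labels:

    app: myapp

spec:

  containers:

  - name: myapp-container

    image: busybox

    command: ['sh', '-c', 'echo Hello Kubernetes! && sleep 3600']

A Pod template works like a cookie cutter; it does not specify the current desired state of all replicas. Once a cookie has been cut, it has no further relationship to the cutter. There is no "quantum entanglement". Later changes to the template, or even a switch to a new template, have no direct effect on Pods that were already created. Similarly, Pods created by a replication controller can afterwards be updated directly. This is a deliberate contrast with the Pod itself, which does specify the current desired state of all containers belonging to it. The approach greatly simplifies system semantics and makes the primitive more flexible.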
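To make the cookie-cutter idea concrete, the following is a minimal sketch of a Pod template embedded in a controller. It assumes a Deployment as the controller; the name myapp-deploy, the replica count, and the scratch file path are illustrative choices that do not come from the original lab. The container is the busybox one from the manifest above.

```shell
#!/usr/bin/env bash
# Sketch: a Deployment whose spec.template is a Pod template.
# The Deployment name, replica count and scratch path are assumptions.
manifest="${TMPDIR:-/tmp}/myapp-deploy.yaml"

cat > "$manifest" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deploy
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myapp
  template:                # the Pod template starts here
    metadata:
      labels:
        app: myapp         # must match spec.selector.matchLabels
    spec:
      containers:
      - name: myapp-container
        image: busybox
        command: ['sh', '-c', 'echo Hello Kubernetes! && sleep 3600']
EOF

echo "wrote $manifest"

# Only talk to a cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f "$manifest" || echo "apply failed (is the cluster reachable?)" >&2
fi
```

Everything under template is an ordinary Pod specification without a name of its own; the controller stamps out Pods from it and generates their names.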

The lab steps follow.

II. Checking the lab environment

- 1. Check the cluster status

- 2. Clean up the lab environment

1. Check the cluster status

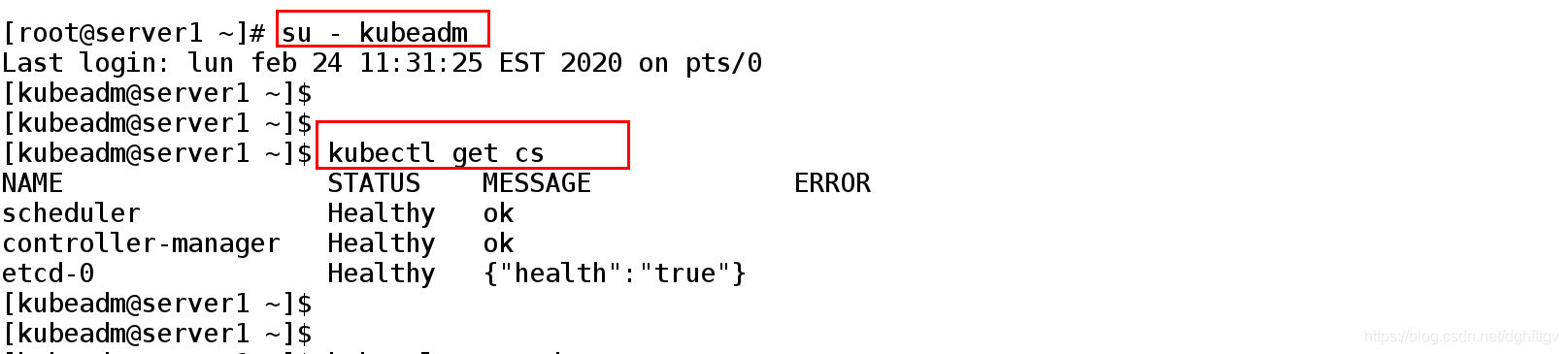

1.1. Run the lab as a regular user

The cluster has to be up before the lab starts, so run the following checks first.

[root@server1 ~]# su - kubeadm ## switch to the regular user

Last login: lun feb 24 11:31:25 EST 2020 on pts/0

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$ kubectl get cs ## check that the component statuses (cs) are healthy

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$

1.2. Check that the cluster is working properly

[kubeadm@server1 ~]$ kubectl get nodes ## check that every node is Ready

NAME STATUS ROLES AGE VERSION

server1 Ready master 45h v1.17.3

server3 Ready <none> 45h v1.17.3

server4 Ready <none> 45h v1.17.3

[kubeadm@server1 ~]$ kubectl get pod -n kube-system ## check that the system services are running

NAME READY STATUS RESTARTS AGE

coredns-9d85f5447-9p6b8 0/1 Running 2 5h57m

coredns-9d85f5447-nxwch 1/1 Running 2 76m

etcd-server1 1/1 Running 10 45h

kube-apiserver-server1 1/1 Running 17 45h

kube-controller-manager-server1 1/1 Running 25 45h

kube-flannel-ds-amd64-h6mpc 1/1 Running 4 40h

kube-flannel-ds-amd64-h8k92 1/1 Running 5 40h

kube-flannel-ds-amd64-w4ws4 1/1 Running 3 40h

kube-proxy-8hc7t 1/1 Running 3 45h

kube-proxy-ktxlp 1/1 Running 3 45h

kube-proxy-w9jxm 1/1 Running 2 45h

kube-scheduler-server1 1/1 Running 24 45h

1.3. The nginx Pods are managed by a controller (a Deployment), so a deleted replica is recreated

[kubeadm@server1 ~]$ kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-6db489d4b7-5pph4 1/1 Running 2 14h

nginx-6db489d4b7-gzr7z 1/1 Running 2 78m

nginx-6db489d4b7-jvjnf 1/1 Running 2 78m

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$ kubectl delete pod nginx-6db489d4b7-5pph4

pod "nginx-6db489d4b7-5pph4" deleted

[kubeadm@server1 ~]$ ## after the deletion a replacement Pod is created

[kubeadm@server1 ~]$ kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-6db489d4b7-5pph4 1/1 Running 2 14h

nginx-6db489d4b7-gzr7z 1/1 Running 2 78m

nginx-6db489d4b7-jvjnf 1/1 Running 2 78m

[kubeadm@server1 ~]$

1.4. View the resource manifest

[kubeadm@server1 ~]$ kubectl get pod -o yaml ## print the manifests of the running Pods as YAML

[kubeadm@server1 ~]$

2. Clean up the lab environment

[kubeadm@server1 ~]$ kubectl get deployments.app ## list the Deployments left over from the earlier lab

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 3/3 3 3 20h

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$ kubectl delete deployments.apps nginx

deployment.apps "nginx" deleted

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$ kubectl get deployments.app ## the earlier Deployment has been deleted

No resources found in default namespace.

2.2. Delete the Service exposed in the earlier lab

[kubeadm@server1 ~]$ kubectl get svc ## list the Services and the ports they expose

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 45h

nginx NodePort 10.96.9.159 <none> 80:31641/TCP 17h

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$ kubectl delete svc ## fails because no Service name was given

error: resource(s) were provided, but no name, label selector, or --all flag specified

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$ kubectl delete svc nginx ## delete the nginx Service by name

service "nginx" deleted

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 45h

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$
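The two cleanup steps above can also be done in a single call. This is a sketch under the assumption that the Deployment and the Service are both named nginx, as in the output above; cleanup_lab is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: remove the Deployment and Service from the earlier lab in one go.
# cleanup_lab is a hypothetical helper; "nginx" is the object name shown above.
cleanup_lab() {
  # One delete call can cover several resource types that share a name.
  # --ignore-not-found keeps the call from failing if an object is already gone.
  kubectl delete deployment,svc nginx --ignore-not-found
  # Confirm what is left in the default namespace.
  kubectl get deployments,svc
}

if command -v kubectl >/dev/null 2>&1; then
  cleanup_lab || echo "cleanup failed (is the cluster reachable?)" >&2
else
  echo "kubectl not found; nothing to clean up" >&2
fi
```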

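III. Creating a Pod manifest

- 1. Look up the settings needed for the manifest

- 2. Create the Pod manifest

- 3. Delete the Pod

1. Look up the settings needed for the manifest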

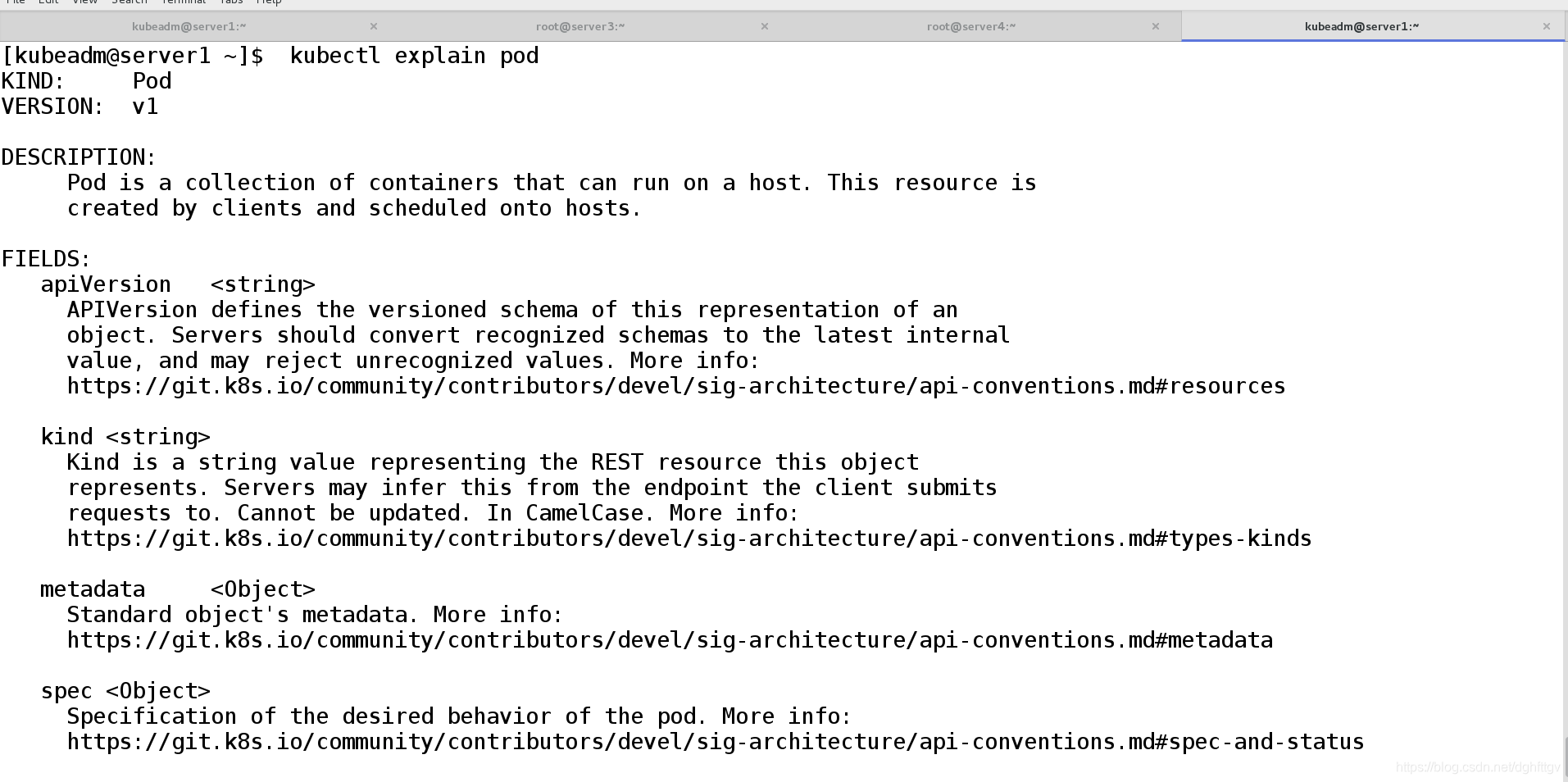

1.1. Check the API versions

[kubeadm@server1 ~]$ kubectl api-versions ## list the API versions the cluster supports

1.2. Look up how to write the manifest

[kubeadm@server1 ~]$ kubectl explain pod ## built-in documentation for the Pod fields

KIND: Pod

VERSION: v1

DESCRIPTION:

Pod is a collection of containers that can run on a host. This resource is

created by clients and scheduled onto hosts.

FIELDS:

apiVersion <string>

APIVersion defines the versioned schema of this representation of an

object. Servers should convert recognized schemas to the latest internal

value, and may reject unrecognized values. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

kind <string>

Kind is a string value representing the REST resource this object

represents. Servers may infer this from the endpoint the client submits

requests to. Cannot be updated. In CamelCase. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

metadata <Object>

Standard object's metadata. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

spec <Object>

Specification of the desired behavior of the pod. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

status <Object>

Most recently observed status of the pod. This data may not be up to date.

Populated by the system. Read-only. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

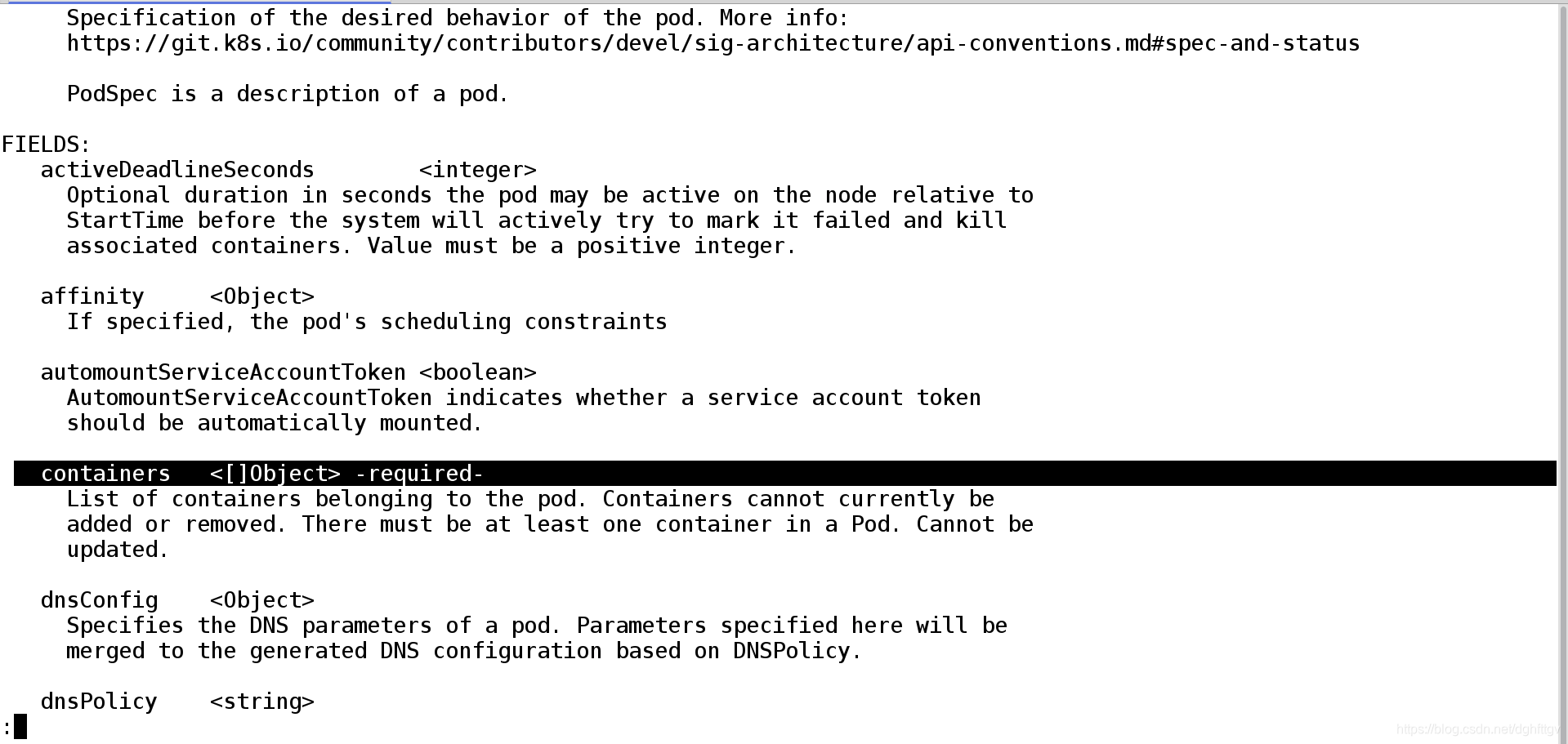

1.3. A field marked -required- must always be set

[kubeadm@server1 ~]$ kubectl explain pod.spec | less

containers <[]Object> -required- ## -required- means the field is mandatory

List of containers belonging to the pod. Containers cannot currently be

added or removed. There must be at least one container in a Pod. Cannot be

updated.

[kubeadm@server1 ~]$ kubectl explain pod.spec.containers

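2. Create the Pod manifest

2.1. Create a simple YAML file

[kubeadm@server1 ~]$ cat pod.yaml

apiVersion: v1 ## API version v1

kind: Pod ## resource type is Pod

metadata: ## object metadata

  name: demo ## name of the Pod

  labels:

    app: demo ## label key and value

spec:

  containers:

  - name: demo ## name of the container

    image: nginx ## image to run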
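Indentation is significant in YAML, so a sketch that writes the file with its nesting intact may help. It assumes a scratch path under the temporary directory (not the ~/pod.yaml used in the lab) and adds a kubectl wait call, which is not part of the original walkthrough, to block until the Pod reports Ready.

```shell
#!/usr/bin/env bash
# Sketch: write the single-container Pod manifest and create the Pod.
# The scratch path and the 120 s timeout are assumptions for this example.
manifest="${TMPDIR:-/tmp}/pod-demo.yaml"

cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: demo
  labels:
    app: demo
spec:
  containers:
  - name: demo
    image: nginx
EOF

echo "wrote $manifest"

# Only talk to a cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  if kubectl create -f "$manifest"; then
    # Block until the Pod is Ready or the timeout expires.
    kubectl wait --for=condition=Ready pod/demo --timeout=120s ||
      echo "Pod demo did not become Ready in time" >&2
  else
    echo "create failed (is the cluster reachable?)" >&2
  fi
fi
```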

2.2. Test:

[kubeadm@server1 ~]$ kubectl create -f pod.yaml ## create the Pod from the manifest

pod/demo created

[kubeadm@server1 ~]$

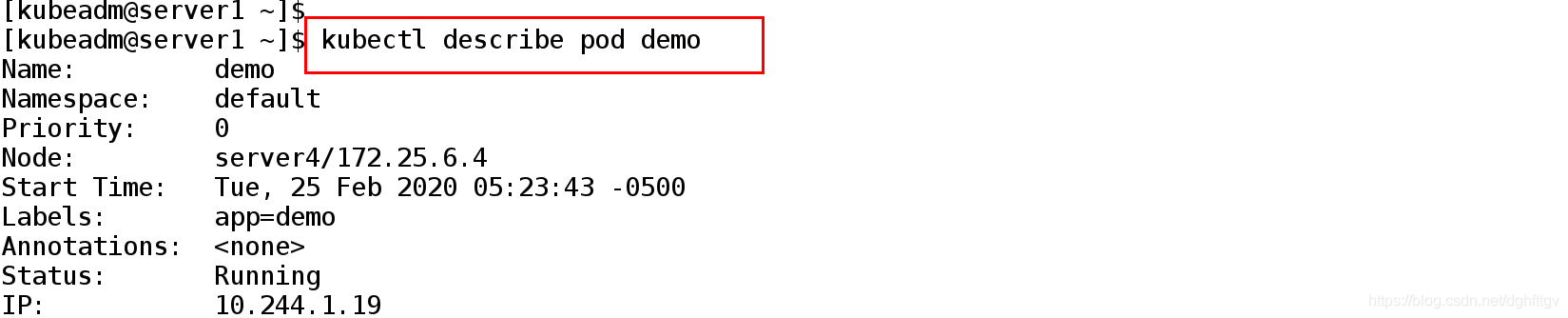

2.3. Check the Pod's status and events

[kubeadm@server1 ~]$ kubectl get pod ## check the Pod's status

NAME READY STATUS RESTARTS AGE

demo 1/1 Running 0 99s

[kubeadm@server1 ~]$

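[kubeadm@server1 ~]$ kubectl describe pod demo ## show the Pod's details and events

3. Delete the Pod

Earlier, with a controller in place, a deleted replica was recreated automatically. This Pod was created directly from a hand-written YAML file with no controller behind it, so it is not recreated after deletion.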

[kubeadm@server1 ~]$ kubectl delete pod demo

pod "demo" deleted

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$ kubectl get pod

No resources found in default namespace.

[kubeadm@server1 ~]$

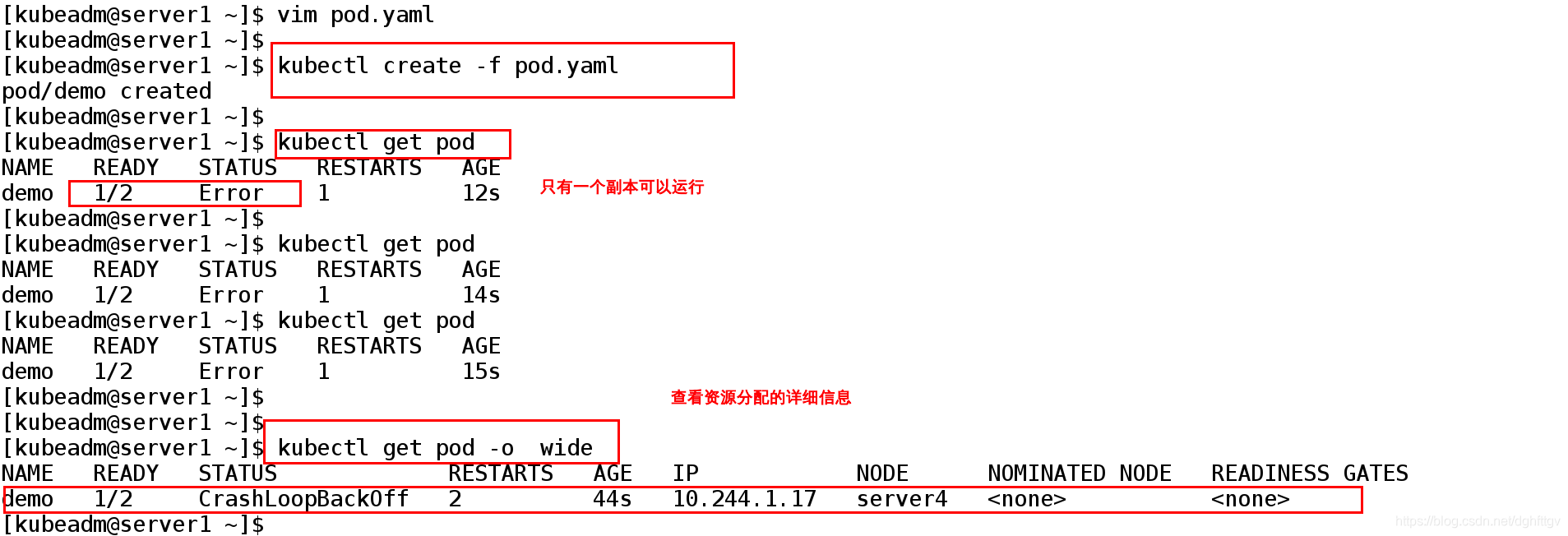

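IV. Running multiple containers in a Pod

- 1. Resource contention (the containers listen on the same port)

- 2. Creating a Pod from different images

1. Resource contention

1.1. Containers that listen on the same port conflict with each other

A Pod can run several containers, but they all share the Pod's network namespace. If more than one container tries to listen on the same port, they conflict.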

apiVersion: v1 ## API version

kind: Pod ## resource type

metadata: ## object metadata

  name: demo ## name of the Pod

  labels: ## labels

    app: demo

spec:

  containers: ## list of containers

  - name: vm1

    image: nginx

  - name: vm2

    image: nginx ## vm1 and vm2 use the same image to reproduce the port conflict

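1.2. Test:

[kubeadm@server1 ~]$ kubectl get pod ## check the Pod's status

NAME READY STATUS RESTARTS AGE

demo 1/2 Error 1 12s ## error: only one of the two containers starts

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$ kubectl get pod -o wide ## show extra details such as the Pod IP and node

1.3. View the logs

(The containers conflicted over the port.)

1.4. View the vm2 logs

[kubeadm@server1 ~]$ kubectl logs demo -c vm2 ## view the logs of container vm2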
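When a Pod shows 1/2 in the READY column, it helps to see which container is the unhealthy one. The sketch below prints one line per container using a jsonpath template; container_states is a hypothetical helper name, and the Pod and container names are the ones from the manifest above. For two nginx containers the vm2 log usually contains a bind error along the lines of "Address already in use", though the exact wording depends on the image version.

```shell
#!/usr/bin/env bash
# Sketch: list each container of a Pod with its ready flag and restart count.
# container_states is a hypothetical helper; "demo" and "vm2" come from the manifest above.
container_states() {
  # $1 is the Pod name. Output columns: container name, ready (true/false), restarts.
  kubectl get pod "$1" \
    -o jsonpath='{range .status.containerStatuses[*]}{.name}{"\t"}{.ready}{"\t"}{.restartCount}{"\n"}{end}'
}

if command -v kubectl >/dev/null 2>&1; then
  container_states demo || echo "could not read Pod demo" >&2
  # --previous shows the log of the last terminated instance of the container.
  kubectl logs demo -c vm2 --previous || echo "no previous log for vm2" >&2
else
  echo "kubectl not found; nothing to inspect" >&2
fi
```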

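2. Two different images

2.1. Edit the YAML file

apiVersion: v1 ## API version

kind: Pod ## resource type

metadata: ## object metadata

  name: demo ## name of the Pod

  labels:

    app: demo

spec:

  containers:

  - name: vm1

    image: nginx

  - name: vm2

    image: redis ## one Pod can run several containers

2.2. View the logs:

2.3. View the detailed scheduling information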

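V. Some images need a command in the manifest

Some images do not keep running on their own. busybox, for example, starts an interactive shell by default, so without a terminal its container exits right away. The manifest has to give it a command to run.

- 1. Without a command

- 2. With a command

1. Without a command in the file

1.2. Test:

2. Adding a command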

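apiVersion: v1 ## API version

kind: Pod ## resource type

metadata: ## object metadata

  name: demo ## name of the Pod

  labels:

    app: demo

spec:

  containers:

  - name: vm1

    image: nginx

  - name: vm2

    image: redis ## one Pod can run several containers

  - name: vm3

    image: busybox

    command: ## command to run in the container

    - /bin/sh ## run it through the shell

    - -c

    - sleep 300 ## sleep for 300 s, then exit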

2.1. Test:

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$ kubectl create -f pod.yaml

pod/demo created

[kubeadm@server1 ~]$ kubectl get pod ## check the Pod's status

NAME READY STATUS RESTARTS AGE

demo 3/3 Running 0 29s

2.2. View the events:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled <unknown> default-scheduler Successfully assigned default/demo to server4

Normal Pulling 52s kubelet, server4 Pulling image "nginx"

Normal Pulled 51s kubelet, server4 Successfully pulled image "nginx"

Normal Created 50s kubelet, server4 Created container vm1

Normal Started 49s kubelet, server4 Started container vm1

Normal Pulling 49s kubelet, server4 Pulling image "redis"

Normal Pulled 49s kubelet, server4 Successfully pulled image "redis"

Normal Created 46s kubelet, server4 Created container vm2

Normal Started 45s kubelet, server4 Started container vm2

Normal Pulling 45s kubelet, server4 Pulling image "busybox"

Normal Pulled 45s kubelet, server4 Successfully pulled image "busybox"

Normal Created 44s kubelet, server4 Created container vm3

Normal Started 43s kubelet, server4 Started container vm3

[kubeadm@server1 ~]$

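VI. Working with labels

Labels are one of the main ways a Kubernetes cluster selects the objects to operate on.

- 1. Viewing labels

- 2. Adding labels

- 3. Changing labels

- 4. Labeling a specific node

- 5. Adding a node selector to the manifest

1. Commands for viewing labels

[kubeadm@server1 ~]$ kubectl get pod --show-labels ## show the labels of every Pod

[kubeadm@server1 ~]$ kubectl get pod -l app ## list only the Pods that carry the app label

[kubeadm@server1 ~]$ kubectl get pod -L app ## show the value of the app label as an extra column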

2. Adding labels

[kubeadm@server1 ~]$ kubectl label pod demo version=v1 ## add a label to the Pod

[kubeadm@server1 ~]$ kubectl get pod -L version ## show the version label as a column

NAME READY STATUS RESTARTS AGE VERSION

demo 3/3 Running 0 4m59s v1

[kubeadm@server1 ~]$ kubectl get pod -n kube-system --show-labels ## show the labels of the Pods in kube-system

(In app=demo, app is the key and demo is the value.)

3. Changing labels

[kubeadm@server1 ~]$ kubectl label pod demo app=nginx --overwrite ## change the value of an existing label

pod/demo labeled

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$ kubectl get pod --show-labels ## check the label values after the change

NAME READY STATUS RESTARTS AGE LABELS

demo 2/3 Running 11 74m app=nginx,version=v1

[kubeadm@server1 ~]$
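The add, change and query steps above can be collected into one small routine. This is a sketch only: label_roundtrip is a hypothetical helper name and the value v2 is made up for illustration. It also shows two things the walkthrough does not cover, a set-based selector and label removal with a trailing dash.

```shell
#!/usr/bin/env bash
# Sketch: add, change, query and remove a label on one Pod.
# label_roundtrip is a hypothetical helper; the value v2 is illustrative.
label_roundtrip() {
  # $1 is the Pod name.
  # --overwrite lets the call succeed even if the key already has a different value.
  kubectl label pod "$1" version=v1 --overwrite &&
  kubectl label pod "$1" version=v2 --overwrite &&
  # Pods that have an app label AND whose version is v1 or v2; show both as columns.
  kubectl get pod -l 'app,version in (v1,v2)' -L app,version &&
  # A trailing dash after the key removes the label.
  kubectl label pod "$1" version-
}

if command -v kubectl >/dev/null 2>&1; then
  label_roundtrip demo || echo "label round trip failed for Pod demo" >&2
else
  echo "kubectl not found; nothing to label" >&2
fi
```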

3.2. Test

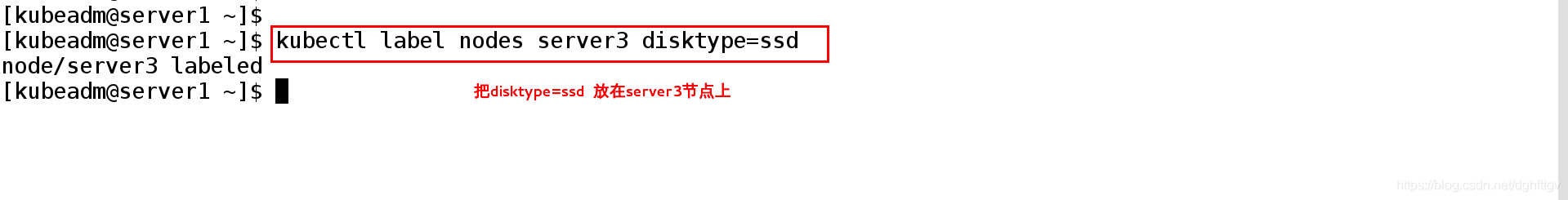

4. Labeling a specific node

[kubeadm@server1 ~]$ kubectl label nodes server3 disktype=ssd ## add the label to node server3

node/server3 labeled

[kubeadm@server1 ~]$ kubectl get node --show-labels ## show the labels of every node

NAME STATUS ROLES AGE VERSION LABELS

server1 Ready master 2d1h v1.17.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=server1,kubernetes.io/os=linux,node-role.kubernetes.io/master=

server3 Ready <none> 2d1h v1.17.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=server3,kubernetes.io/os=linux

server4 Ready <none> 2d1h v1.17.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=server4,kubernetes.io/os=linux

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$ kubectl get node -l disktype ## list the nodes that carry the disktype label

NAME STATUS ROLES AGE VERSION

server3 Ready <none> 2d1h v1.17.3

[kubeadm@server1 ~]$

[kubeadm@server1 ~]$

4.1. Test:

4.2. The containers are created on server3

[root@server3 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

152d0d71eff4 ff281650a721 "/opt/bin/flanneld -…" Less than a second ago Up Less than a second k8s_kube-flannel_kube-flannel-ds-amd64-w4ws4_kube-system_8c0ee785-9440-4a69-9132-2d56130a5f5e_3

0678447c8d4d ae853e93800d "/usr/local/bin/kube…" Less than a second ago Up Less than a second k8s_kube-proxy_kube-proxy-w9jxm_kube-system_b2e2556d-2cc3-4194-8a72-61cc8c89bd00_2

73afa35e4faa registry.aliyuncs.com/google_containers/pause:3.1 "/pause" Less than a second ago Up Less than a second k8s_POD_kube-flannel-ds-amd64-w4ws4_kube-system_8c0ee785-9440-4a69-9132-2d56130a5f5e_2

75e05fef822d registry.aliyuncs.com/google_containers/pause:3.1 "/pause" Less than a second ago Up Less than a second k8s_POD_kube-proxy-w9jxm_kube-system_b2e2556d-2cc3-4194-8a72-61cc8c89bd00_2

[root@server3 ~]# ## the containers were created on server3

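5. Adding a node selector to the YAML file

apiVersion: v1 ## API version

kind: Pod ## resource type

metadata: ## object metadata

  name: demo ## name of the Pod

  labels:

    app: demo

spec:

  containers:

  - name: vm1

    image: nginx

  - name: vm2

    image: redis ## one Pod can run several containers

  - name: vm3

    image: busybox

    command: ## command to run in the container

    - /bin/sh ## run it through the shell

    - -c

    - sleep 300 ## sleep for 300 s

  nodeSelector: ## node selector

    disktype: ssd ## disk type; the node is chosen by this label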
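The placement of nodeSelector matters: it belongs directly under spec, next to containers. The sketch below shows that nesting with a single container to keep it short. The Pod name demo-ssd and the scratch path are hypothetical, chosen so the example does not clash with the demo Pod from the lab.

```shell
#!/usr/bin/env bash
# Sketch: pin a Pod to nodes labeled disktype=ssd.
# "demo-ssd" and the scratch path are hypothetical names for this example.
manifest="${TMPDIR:-/tmp}/pod-ssd.yaml"

cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: demo-ssd
  labels:
    app: demo
spec:
  containers:
  - name: vm1
    image: nginx
  nodeSelector:            # sibling of containers, not nested under it
    disktype: ssd
EOF

echo "wrote $manifest"

# Only talk to a cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  # Nodes that are eligible to run the Pod.
  kubectl get nodes -l disktype=ssd || echo "could not list nodes" >&2
  if kubectl create -f "$manifest"; then
    # The NODE column should name one of the labeled nodes.
    kubectl get pod demo-ssd -o wide
  else
    echo "create failed (is the cluster reachable?)" >&2
  fi
fi
```

If no node carries the label, the Pod stays in Pending until one does.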

5.2. Test:

Because server3 was labeled disktype=ssd earlier, the Pod created from this manifest is scheduled onto server3.


Official documentation: https://kubernetes.io/docs/concepts/workloads/controllers/jobs-run-to-completion/