Introduction to Kubernetes

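Our company needs to move its upcoming services, together with TensorFlow model deployment and training, onto k8s, so I am writing down the steps of this simple deployment.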

Installing and deploying k8s

First, as before deploying any large component, we have to disable the firewall and set up host names:

vi /etc/hosts

xxx.xxx.xxx.xx k8s001

xxx.xxx.xxx.xx k8s002

...
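If you would rather not edit /etc/hosts by hand on every machine, a small helper can append the entries in the correct order (IP address first, then hostname). This is a minimal sketch: `add_host_entry` is a hypothetical helper name, and the hosts file path is a parameter so the helper can be tried on a copy first.

```shell
# Source this file, then call add_host_entry IP HOSTNAME [FILE].
# add_host_entry is a hypothetical helper, not part of any tool.
add_host_entry() {
    ip=$1
    name=$2
    file=${3:-/etc/hosts}
    # Skip if an existing line already lists this hostname after its IP (keeps it idempotent)
    if [ -f "$file" ] && awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
        return 0
    fi
    # /etc/hosts format: IP address first, then the hostname
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Usage, with your real addresses in place of the placeholders: `add_host_entry <IP of k8s001> k8s001`, repeated for each node.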

systemctl stop firewalld.service

systemctl stop iptables.service

systemctl disable firewalld.service

systemctl disable iptables.service

Disable SELinux

setenforce 0

vi /etc/selinux/config

# In this file, set SELINUX=disabled

Temporarily disable swap (until the next reboot)

[root@k8s001 ~]$ swapoff -a
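Both `setenforce 0` and `swapoff -a` only last until the next reboot. The sketch below, assuming the default CentOS 7 file locations, makes the two changes persistent; the function names are hypothetical and the file paths are parameters so you can test on copies first.

```shell
# Source this file, then run disable_selinux_config and comment_out_swap as root.

# Set SELINUX=disabled (note: "disabled", not "disable"); SELINUXTYPE= is left alone.
disable_selinux_config() {
    file=${1:-/etc/selinux/config}
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

# Comment out every active swap entry so swap stays off after a reboot.
# Lines that are already commented (start with #) are skipped, so rerunning is safe.
comment_out_swap() {
    file=${1:-/etc/fstab}
    sed -i '/^[^#].*[[:space:]]swap[[:space:]]/s/^/#/' "$file"
}
```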

Install Docker

Run the following on every machine.

We use docker-ce-18.06.1.ce here.

cd /etc/yum.repos.d

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum -y install docker-ce-18.06.1.ce

systemctl enable docker && systemctl start docker

Install the Kubernetes components

As above, run the following on every machine.

# Configure the kubernetes.repo source. The official repo is not reachable from mainland China, so we use the Aliyun yum mirror

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Then install the components we need to run k8s:

yum -y install kubernetes-cni-0.6.0-0 kubelet-1.13.2 kubeadm-1.13.2 kubectl-1.13.2

systemctl enable kubelet

The images (components) each node needs:

| Hostname | Role | OS | Components |
|---|---|---|---|
| k8s001 | master | CentOS 7.2 | kube-apiserver kube-controller-manager kube-scheduler kube-proxy etcd coredns kube-flannel |
| k8s002 | slave | CentOS 7.2 | kube-proxy kube-flannel |
| k8s003 | slave | CentOS 7.2 | kube-proxy kube-flannel |

From here on we work on the master machine. Machines in mainland China cannot reach Google's servers, so we pull the images from Aliyun's mirror instead and retag them:

[root@k8s001 ~]$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver-amd64:v1.13.2

[root@k8s001 ~]$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager-amd64:v1.13.2

[root@k8s001 ~]$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler-amd64:v1.13.2

[root@k8s001 ~]$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.13.2

[root@k8s001 ~]$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd-amd64:3.2.24

[root@k8s001 ~]$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

[root@k8s001 ~]$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.2.6

[root@k8s001 ~]$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1

[root@k8s001 ~]$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.2.6 k8s.gcr.io/coredns:1.2.6

[root@k8s001 ~]$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd-amd64:3.2.24 k8s.gcr.io/etcd:3.2.24

[root@k8s001 ~]$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler-amd64:v1.13.2 k8s.gcr.io/kube-scheduler:v1.13.2

[root@k8s001 ~]$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager-amd64:v1.13.2 k8s.gcr.io/kube-controller-manager:v1.13.2

[root@k8s001 ~]$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver-amd64:v1.13.2 k8s.gcr.io/kube-apiserver:v1.13.2

[root@k8s001 ~]$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.13.2 k8s.gcr.io/kube-proxy:v1.13.2

[root@k8s001 ~]$ docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver-amd64:v1.13.2

[root@k8s001 ~]$ docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager-amd64:v1.13.2

[root@k8s001 ~]$ docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler-amd64:v1.13.2

[root@k8s001 ~]$ docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.13.2

[root@k8s001 ~]$ docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/etcd-amd64:3.2.24

[root@k8s001 ~]$ docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

[root@k8s001 ~]$ docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.2.6

Of course, you can also write a script that does all of the above in a loop.
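One way to write that loop, as a sketch: the mirror prefix, image names and versions are copied from the commands above, while the function name, the `mirror_name=target_name` pair format and the variable names are my own assumptions.

```shell
# Source this file, then call pull_and_retag with a list of image pairs.
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers

# Each argument is "mirror_name:tag=target_name:tag" because some names differ
# (e.g. kube-apiserver-amd64 on the mirror becomes k8s.gcr.io/kube-apiserver).
pull_and_retag() {
    for pair in "$@"; do
        src=${pair%%=*}   # part before the first "="
        dst=${pair#*=}    # part after the first "="
        docker pull "$MIRROR/$src" || return 1
        docker tag "$MIRROR/$src" "k8s.gcr.io/$dst" || return 1
        docker rmi "$MIRROR/$src" || return 1   # drop the mirror tag, keep the k8s.gcr.io one
    done
}

# Images needed on the master (same versions as the commands above).
MASTER_IMAGES="kube-apiserver-amd64:v1.13.2=kube-apiserver:v1.13.2
kube-controller-manager-amd64:v1.13.2=kube-controller-manager:v1.13.2
kube-scheduler-amd64:v1.13.2=kube-scheduler:v1.13.2
kube-proxy-amd64:v1.13.2=kube-proxy:v1.13.2
etcd-amd64:3.2.24=etcd:3.2.24
pause:3.1=pause:3.1
coredns:1.2.6=coredns:1.2.6"

# Images needed on each slave node.
SLAVE_IMAGES="pause:3.1=pause:3.1
kube-proxy-amd64:v1.13.2=kube-proxy:v1.13.2"
```

On the master run `pull_and_retag $MASTER_IMAGES`, and on a slave `pull_and_retag $SLAVE_IMAGES`; the variables are deliberately unquoted so each line becomes one argument.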

We also need to make sure both of the following files contain 1:

cat /proc/sys/net/bridge/bridge-nf-call-ip6tables

# The output must be 1

cat /proc/sys/net/bridge/bridge-nf-call-iptables

# This output must also be 1; otherwise set it to 1 yourself
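Writing 1 into these /proc files by hand is lost on reboot; a sysctl drop-in keeps it. A sketch, assuming the br_netfilter module is loaded (`modprobe br_netfilter`) so that /proc/sys/net/bridge exists; the file name k8s.conf and the function names are hypothetical.

```shell
# Source this file, then: write_bridge_sysctl && sysctl --system && bridge_nf_ok && echo ok

# Write a sysctl drop-in that sets both bridge-nf-call values to 1.
write_bridge_sysctl() {
    file=${1:-/etc/sysctl.d/k8s.conf}
    cat > "$file" <<'CONF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
CONF
}

# Return 0 only if both bridge-nf-call files under DIR currently contain 1.
bridge_nf_ok() {
    dir=${1:-/proc/sys/net/bridge}
    for f in bridge-nf-call-ip6tables bridge-nf-call-iptables; do
        [ "$(cat "$dir/$f" 2>/dev/null)" = "1" ] || return 1
    done
}
```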

Then, again on the master, run:

[root@k8s001 ~]$ kubeadm init \

--kubernetes-version=v1.13.2 \

--pod-network-cidr=10.244.0.0/16 \

--service-cidr=10.96.0.0/12 \

--ignore-preflight-errors=Swap

# It may stall for a while at the step that says it can take up to 4m0s
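The same options can also be kept in a kubeadm config file, which is easier to reuse. This is a sketch assuming kubeadm 1.13, whose config API is kubeadm.k8s.io/v1beta1; the file name and function name are hypothetical, and the pod subnet uses flannel's default 10.244.0.0/16 so that it matches kube-flannel.yml.

```shell
# Source this file, then:
#   write_kubeadm_config && kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=Swap
write_kubeadm_config() {
    file=${1:-kubeadm-config.yaml}
    cat > "$file" <<'YAML'
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.13.2
networking:
  # Must match the "Network" value in kube-flannel.yml (10.244.0.0/16 by default)
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
YAML
}
```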

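When it finishes, kubeadm prints a token and a discovery-token-ca-cert-hash. Save them: they are the credentials slave nodes use to join the cluster. Just copy the command below and run it on each slave machine.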

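[root@k8s002 ~]$ kubeadm join 172.24.182.138:6443 --token xog1zi.aktwl53zv1t2mkxj --discovery-token-ca-cert-hash sha256:e75b7e612b0206c00b620588c29bfc3e7cc6f8215c9f6909034308c302b15760

# Run this on each slave node. If the token is lost or has expired, print a new join command on the master with: kubeadm token create --print-join-command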

To use kubectl as a regular user, you may also need to run the following commands:

[root@k8s001 ~]$ mkdir -p $HOME/.kube

[root@k8s001 ~]$ cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s001 ~]$ chown $(id -u):$(id -g) $HOME/.kube/config

Check the cluster status

[root@k8s001 ~]$ kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health": "true"}

Check the node status

[root@k8s001 ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s001 NotReady master 36m v1.13.2

As you can see, at this point even the master is NotReady: we still need the flannel component.

[root@k8s001 ~]$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# The output usually looks like this:

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created

Next, the flannel image is pulled, which takes a while. Once docker images shows exactly one new image,

quay.io/coreos/flannel v0.11.0-amd64 ff281650a721 7 months ago 52.6MB

everything is as expected.

You can follow the progress with kubectl get node (done once the node status is Ready) and kubectl get pods -n kube-system (done once no pod shows 0/1 in the READY column).

Finally, run kubectl get ns; if every namespace is Active, the master node is ready.
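The node and pod checks above can be scripted by parsing kubectl output. A sketch, assuming the input comes from `kubectl get nodes --no-headers` and `kubectl get pods -n kube-system --no-headers`, where STATUS and READY are the second column respectively; the function names are hypothetical.

```shell
# Source this file, then for example:
#   kubectl get nodes --no-headers | nodes_not_ready && echo "all nodes Ready"
#   kubectl get pods -n kube-system --no-headers | pods_not_ready && echo "all pods ready"

# Print nodes whose STATUS column is not exactly "Ready"; exit 1 if any were found.
nodes_not_ready() {
    awk 'BEGIN { bad = 0 } NF > 0 && $2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}

# Print pods whose READY column "x/y" has x != y (e.g. 0/1); exit 1 if any were found.
pods_not_ready() {
    awk 'BEGIN { bad = 0 } NF > 0 { split($2, r, "/"); if (r[1] != r[2]) { print $1; bad = 1 } } END { exit bad }'
}
```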

Next, deploy the slave nodes

After running kubeadm join 172.24.182.138:6443 --token xog1zi.aktwl53zv1t2mkxj --discovery-token-ca-cert-hash sha256:e75b7e612b0206c00b620588c29bfc3e7cc6f8215c9f6909034308c302b15760 on a slave node, kubectl get nodes on the master shows that the slave has joined the cluster, but it will most likely be NotReady. Running kubectl get pod --all-namespaces also shows some pods stuck at 0/1, because the slave still lacks the images they depend on.

Switch to the slave machine:

[root@k8s002 ~]$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

[root@k8s002 ~]$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1

[root@k8s002 ~]$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.13.2

[root@k8s002 ~]$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64:v1.13.2 k8s.gcr.io/kube-proxy:v1.13.2

# These versions must match the ones on the master

At this point, both kubectl get pods --all-namespaces and kubectl get nodes should look as expected; finish with a final check of kubectl get cs.

Install the dashboard

Run:

wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

This creates a kubernetes-dashboard.yaml file in the current directory. Edit it to point at the mirrored image:

[root@k8s001 ~]$ vim kubernetes-dashboard.yaml

# ------------------- Dashboard Deployment ------------------- #
......
      containers:
      - name: kubernetes-dashboard
        #image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1  (original)
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1
        ports:
......
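The same image change can be made non-interactively with sed, which is handy when scripting the install. A sketch; the function name is hypothetical and the default file name is the one downloaded above.

```shell
# Source this file, then run use_mirror_dashboard_image in the directory holding the YAML.
# Replaces the k8s.gcr.io dashboard image with the Aliyun mirror in place; indentation is preserved.
use_mirror_dashboard_image() {
    file=${1:-kubernetes-dashboard.yaml}
    sed -i 's#image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1#image: registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1#' "$file"
}
```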

Then run:

[root@k8s001 ~]$ kubectl create -f kubernetes-dashboard.yaml

# The output:

secret/kubernetes-dashboard-certs created

serviceaccount/kubernetes-dashboard created

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

deployment.apps/kubernetes-dashboard created

service/kubernetes-dashboard created

Check the installation

[root@k8s001 ~]$ kubectl get pod --namespace=kube-system -o wide | grep dashboard

kubernetes-dashboard-847f8cb7b8-wrm4l 1/1 Running 0 19m 10.244.2.5 k8s-node2 <none> <none>

The dashboard creates its own Deployment and Service in the kube-system namespace:

[root@k8s001 ~]$ kubectl get deployment kubernetes-dashboard --namespace=kube-system

NAME READY UP-TO-DATE AVAILABLE AGE

kubernetes-dashboard 1/1 1 1 21m

[root@k8s001 ~]$

[root@k8s001 ~]$ kubectl get service kubernetes-dashboard --namespace=kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard ClusterIP 10.104.254.251 <none> 443/TCP 21m

Ways to access the dashboard

The dashboard can be accessed in several ways:

- NodePort: change the Service type to NodePort

- LoadBalancer: change the Service type to LoadBalancer

- Ingress

- Through the API server

- Through kubectl proxy

Here I simply use NodePort.

NodePort access

[root@k8s001 ~]$ vim kubernetes-dashboard.yaml

......
---
# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort # added type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 31620 # added nodePort: 31620
  selector:
    k8s-app: kubernetes-dashboard
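If the dashboard is already running, the Service can also be switched to NodePort with kubectl patch instead of editing the YAML. A sketch that builds the patch body; the helper name is hypothetical, and the 30000-32767 check assumes the API server's default --service-node-port-range.

```shell
# Source this file, then:
#   kubectl -n kube-system patch service kubernetes-dashboard -p "$(dashboard_nodeport_patch 31620)"
dashboard_nodeport_patch() {
    port=${1:-31620}
    # Reject non-numeric input before doing numeric comparisons
    case $port in
        ''|*[!0-9]*) echo "nodePort must be a number" >&2; return 1 ;;
    esac
    if [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
        echo "nodePort $port is outside the default range 30000-32767" >&2
        return 1
    fi
    # Same fields as the YAML edit: type NodePort, port 443 -> targetPort 8443
    printf '{"spec":{"type":"NodePort","ports":[{"port":443,"targetPort":8443,"nodePort":%s}]}}\n' "$port"
}
```

kubectl patch defaults to a strategic merge patch, which merges Service ports by their `port` field, so the existing 443 entry is updated rather than duplicated.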

Re-apply the YAML file

[root@k8s001 ~]$ kubectl apply -f kubernetes-dashboard.yaml

Check the Service: its TYPE is now NodePort and the node port is 31620

[root@k8s001 ~]$ kubectl get service -n kube-system | grep dashboard

kubernetes-dashboard NodePort 10.107.160.197 <none> 443:31620/TCP 32m

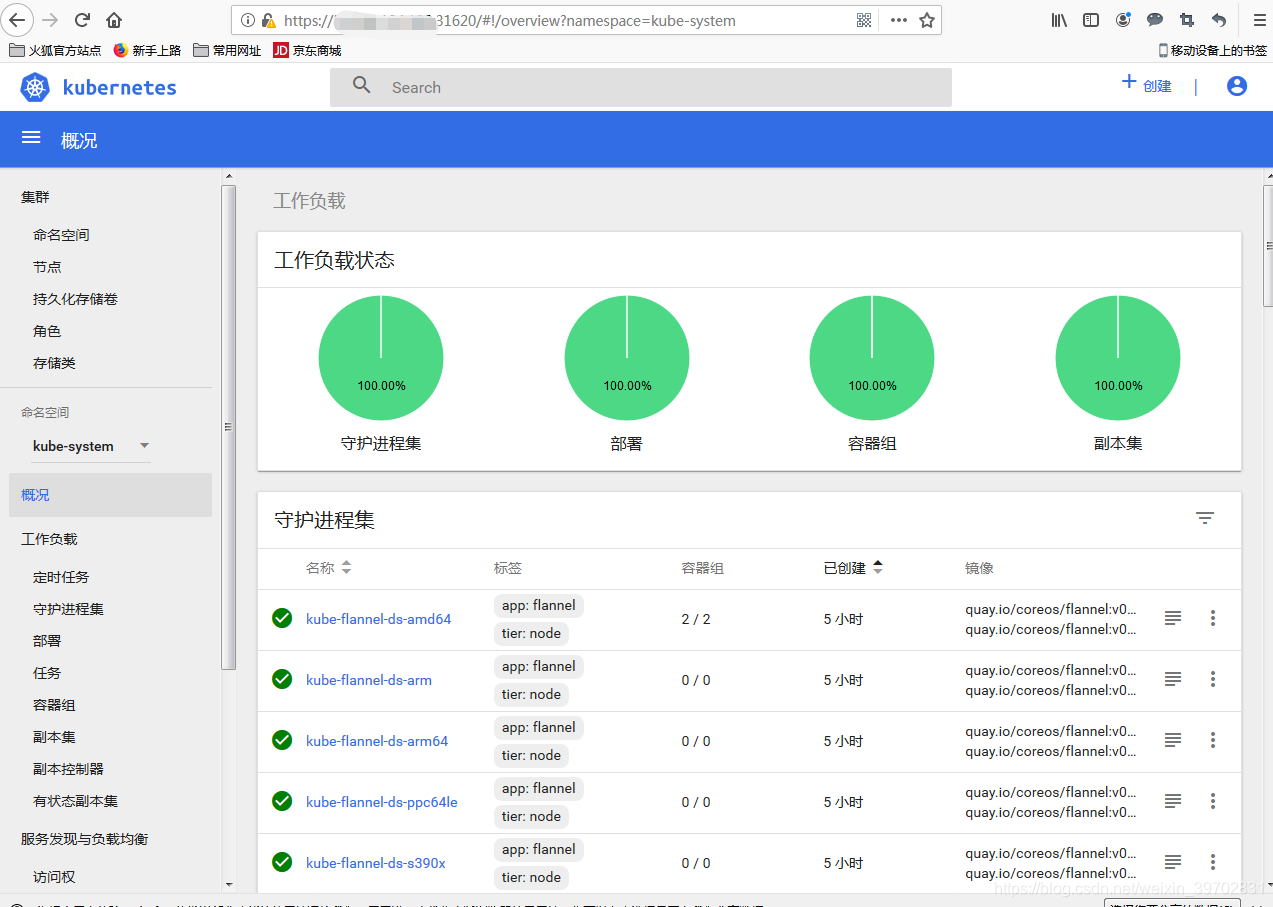

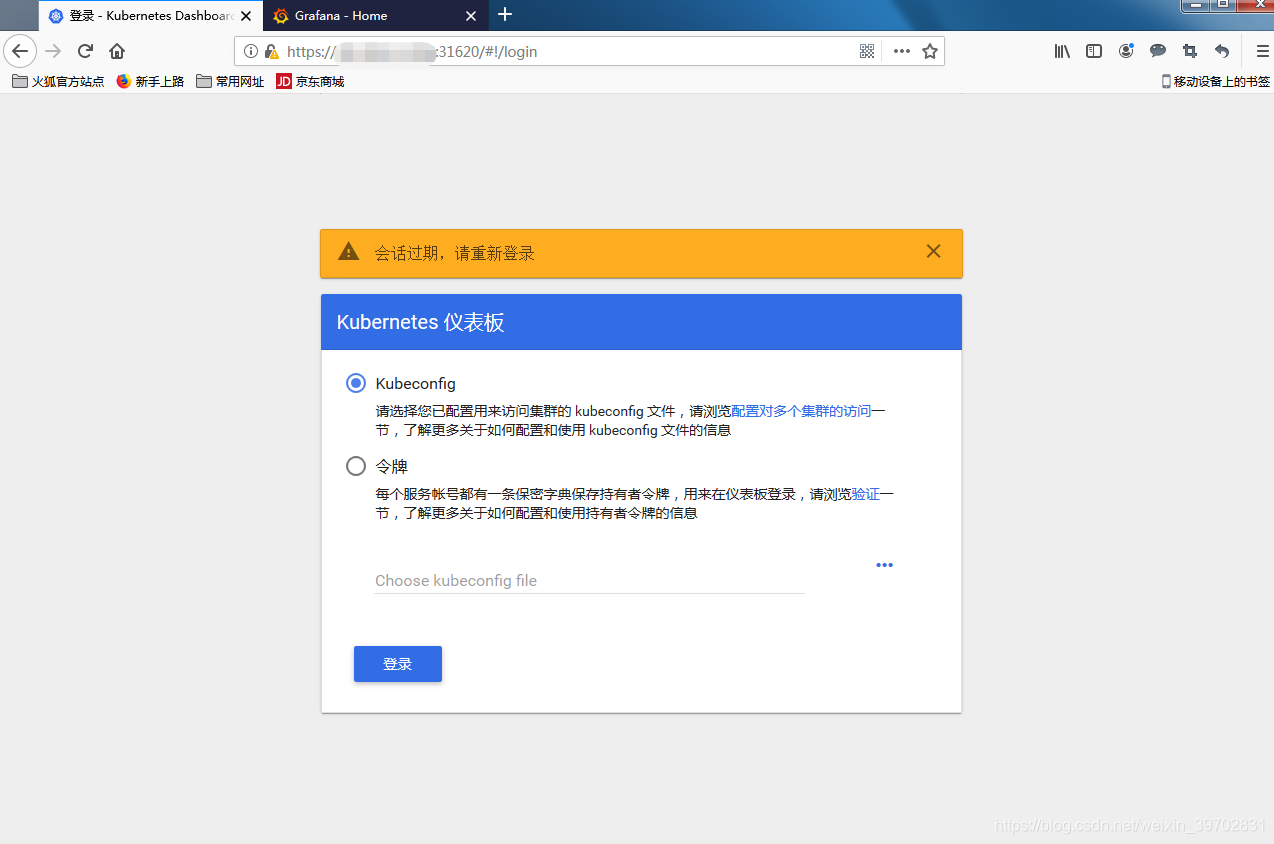

Open https://xxx.xxx.xxx.xx:31620/ in a browser to reach the login page.

We log in with a token:

[root@k8s001 ~]$ vim dashboard-adminuser.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system

Then run:

[root@k8s001 ~]$ kubectl create -f dashboard-adminuser.yaml

This creates a ServiceAccount named admin-user in the kube-system namespace and binds the cluster-admin ClusterRole to it, so the admin-user account has administrator privileges. Now print its token:

[root@k8s001 ~]$ kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-jtlbp

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: a345b4d5-1006-11e9-b90d-000c291c25f3

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

Paste the token into the Token field on the login page and you are in.
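To print only the token instead of the whole describe output, the Secret can be read with a jsonpath query and base64-decoded. A sketch, assuming (as on this 1.13 cluster) that a token Secret named admin-user-token-* was created automatically for the ServiceAccount; newer Kubernetes releases no longer create these automatically. The function name is hypothetical.

```shell
# Source this file, then run admin_user_token on the master.
admin_user_token() {
    # "kubectl get secret -o name" prints entries such as secret/admin-user-token-jtlbp
    secret=$(kubectl -n kube-system get secret -o name | grep admin-user-token | head -n 1)
    [ -n "$secret" ] || return 1
    # Secret data is stored base64-encoded, so decode it before pasting into the login page
    kubectl -n kube-system get "$secret" -o jsonpath='{.data.token}' | base64 -d
    echo
}
```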